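Preface: this deployment tutorial is not original work. I pieced it together from the official documentation and several online tutorials, and many of the figures are screenshots taken from the web, so thanks go to the original authors. The article also covers some pitfalls that the official site does not mention, so that you can avoid them and quickly deploy a private cloud platform of your own.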

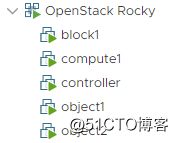

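I. Environment overview and base environment setup:

The setup follows the hardware requirements on the official site:

1. Hardware settings for each node:

This deployment runs on a vSphere 7.0 virtualization platform, and every node is a virtual machine. The VMs were installed with CentOS 7.5 and become 7.8 after the packages are updated; you can also start directly from 7.8.

Note: disable the firewalld firewall and SELinux beforehand.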

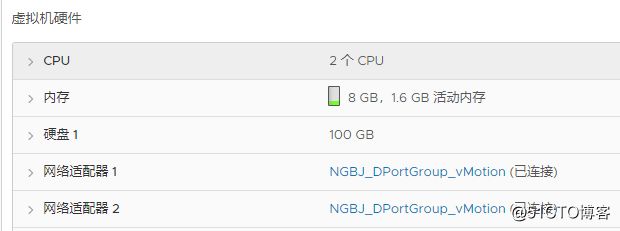

⑴ controller控制节点:

⑵ compute1计算节点:

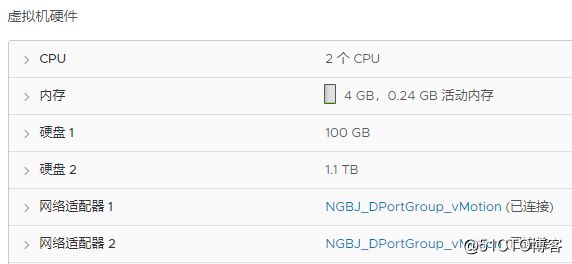

⑶ block1块存储节点:

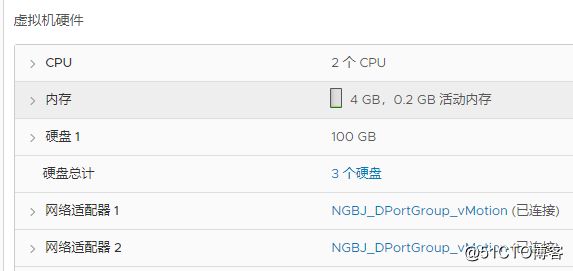

⑷ object1对象存储节点:

⑸ object2对象存储节点:

2. Network:

⑴ Add the following hosts entries on every node:

#vim /etc/hosts

# controller

10.0.0.11 controller

# compute1

10.0.0.31 compute1

# block1

10.0.0.41 block1

# object1

10.0.0.51 object1

# object2

10.0.0.52 object2

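⑵ Configure the network interfaces on each node:

controller: 10.0.0.11 (internal management IP), 10.1.0.100 (external access IP)

compute1: 10.0.0.31 (internal management IP), 10.1.0.101 (external access IP)

block1: 10.0.0.41 (internal management IP), 10.1.0.102 (external access IP)

object1: 10.0.0.51 (internal management IP), 10.1.0.103 (external access IP)

object2: 10.0.0.52 (internal management IP), 10.1.0.104 (external access IP)

Note 1: set the external access IPs to suit your own network. The block storage and object storage nodes do not strictly need an external IP, but I assigned one to each for remote management and YUM installs.

Note 2: the internal management IPs can just as well be addresses from your own internal network. I follow the official guide's addressing here so that the two networks are easy to tell apart.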
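Every node needs a unique management address, so a quick duplicate check over the address plan can catch copy-and-paste slips before the interfaces are configured. The sketch below is only an illustration: the function name `find_duplicate_ips` is hypothetical, and the sample plan is copied from the hosts entries above.

```shell
# Print every IP address that appears more than once in a hosts-style list
# read from standard input (format: "<ip> <hostname>"; blank lines and
# lines starting with '#' are ignored).
find_duplicate_ips() {
  awk '!/^[[:space:]]*(#|$)/ { print $1 }' | sort | uniq -d
}

# Sample plan taken from the hosts entries above.
plan='10.0.0.11 controller
10.0.0.31 compute1
10.0.0.41 block1
10.0.0.51 object1
10.0.0.52 object2'

dups=$(printf '%s\n' "$plan" | find_duplicate_ips)
if [ -z "$dups" ]; then
  echo "no duplicate management IPs"
else
  echo "duplicate IPs: $dups"
fi
```

On a real node the same function can read the file directly, for example `find_duplicate_ips < /etc/hosts`.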

① External NIC configuration (using the controller node as the example):

#vim /etc/sysconfig/network-scripts/ifcfg-ens192

TYPE="Ethernet"

PROXY_METHOD="none"

BROWSER_ONLY="no"

BOOTPROTO="none"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="no"

IPV6INIT="yes"

IPV6_AUTOCONF="yes"

IPV6_DEFROUTE="yes"

IPV6_FAILURE_FATAL="no"

IPV6_ADDR_GEN_MODE="stable-privacy"

NAME="ens192"

UUID="9d919b09-31cc-4d35-b7b4-6fbd78820468"

DEVICE="ens192"

ONBOOT="yes"

IPADDR="10.1.0.100"

PREFIX="24"

GATEWAY="10.1.0.254"

DNS1="10.1.0.1"

DNS2="10.1.0.5"

DOMAIN="10.1.0.1"

IPV6_PRIVACY="no"

② Internal NIC configuration (using the controller node as the example):

#vim /etc/sysconfig/network-scripts/ifcfg-ens224

TYPE="Ethernet"

PROXY_METHOD="none"

BROWSER_ONLY="no"

BOOTPROTO="none"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="no"

IPV6INIT="yes"

IPV6_AUTOCONF="yes"

IPV6_DEFROUTE="yes"

IPV6_FAILURE_FATAL="no"

IPV6_ADDR_GEN_MODE="stable-privacy"

NAME="ens224"

UUID="49600b26-df10-364a-aa4d-2a6114ebbf43"

DEVICE="ens224"

ONBOOT="yes"

IPADDR="10.0.0.11"

PREFIX="24"

GATEWAY="10.0.0.1"

IPV6_PRIVACY="no"

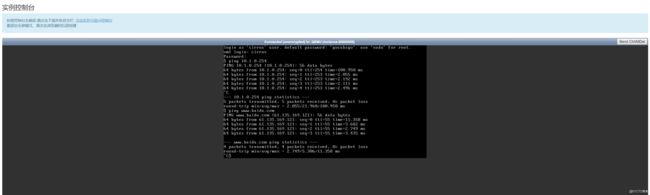

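③ Restart networking on each node and test with ping:

#systemctl restart network

#ping -c 4 www.baidu.com

#ping -c 4 controller //from each of the other nodes, ping every node by hostname

3. Install the NTP service on every node:

⑴ On the controller node (acts as the NTP server):

#yum install -y chrony

#vim /etc/chrony.conf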

server ntp1.aliyun.com iburst

server ntp2.aliyun.com iburst

allow 10.0.0.0/24

allow 10.1.0.0/24

#systemctl enable chronyd ; systemctl restart chronyd

⑵ On the other nodes (NTP clients):

#yum install -y chrony

#vim /etc/chrony.conf

server controller iburst

#systemctl enable chronyd ; systemctl restart chronyd

⑶ Verify:

Run the following command on the controller node and on every other node:

#chronyc sources
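In the `chronyc sources` output, the source that the clock is currently synchronised to is marked with `^*` at the start of its line. A minimal sketch of an automated check follows; the helper name `has_synced_source` is hypothetical, and it only inspects text on standard input rather than calling chronyc itself.

```shell
# Succeed (exit status 0) when the chronyc output on standard input
# contains a line starting with "^*", i.e. a currently selected source.
has_synced_source() {
  grep -q '^\^\*'
}

# Intended use on a node (not executed here):
#   chronyc sources | has_synced_source && echo "time is synchronised"
```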

4. YUM repository setup on every node:

⑴ Configure the Aliyun YUM repositories on all nodes:

① Aliyun CentOS YUM repository:

#mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup

#wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

② Aliyun EPEL repository:

#mv /etc/yum.repos.d/epel.repo /etc/yum.repos.d/epel.repo.backup

#wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

③ Aliyun OpenStack YUM repository:

#vim /etc/yum.repos.d/openstack.repo

[openstack-rocky]

name=OpenStack Rocky Repository

baseurl=https://mirrors.aliyun.com/centos/7/cloud/x86_64/openstack-rocky/

enabled=1

skip_if_unavailable=0

gpgcheck=0

priority=98

④ Aliyun QEMU YUM repository (mind your own release; I use the 7.5 repository):

First clear the contents of CentOS-QEMU-EV.repo, then add the following:

#vim /etc/yum.repos.d/CentOS-QEMU-EV.repo

[centos-qemu-ev]

name=CentOS-$releasever - QEMU EV

baseurl=https://mirrors.aliyun.com/centos/7.5.1804/virt/x86_64/kvm-common/

gpgcheck=0

enabled=1

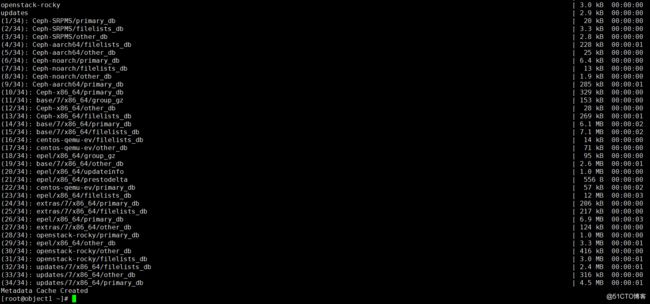

⑤ Rebuild the YUM cache:

#yum clean all

#yum makecache

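5. Update the system packages and install components:

#yum upgrade //reboot the system once the update finishes

#init 6

After the reboot, install the components:

#cat /etc/redhat-release //the system has gone from 7.5 to 7.8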

#yum install -y python-openstackclient openstack-selinux

II. Base services on the controller node:

After completing the steps above, the controller node also needs the following services:

1. Install the SQL database:

#yum install -y mariadb mariadb-server python2-PyMySQL

Create a new openstack.cnf file:

#vim /etc/my.cnf.d/openstack.cnf

[mysqld]

bind-address = 10.0.0.11

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

#systemctl enable mariadb ; systemctl restart mariadb

#mysql_secure_installation //secure the initial database installation

2. Install the message queue service:

#yum install -y rabbitmq-server

#systemctl enable rabbitmq-server ; systemctl restart rabbitmq-server

#rabbitmqctl add_user openstack RABBIT_PASS //add the openstack user; replace RABBIT_PASS with your own password. The password must never contain "@" (the reason is explained later)

#rabbitmqctl set_permissions openstack ".*" ".*" ".*" //grant permissions
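The password ends up inside URLs such as `rabbit://openstack:RABBIT_PASS@controller`, so characters like `@`, `:` or `/` can be misread as URL delimiters. One cautious approach, sketched below, is to accept only letters, digits, `_`, `.` and `-`. The function name `is_url_safe_password` and the allowed character set are assumptions chosen for illustration, not a rule from the official guide.

```shell
# Return 0 if the password is non-empty and made only of
# letters, digits, '_', '.' and '-'; return 1 otherwise.
is_url_safe_password() {
  case "$1" in
    '' | *[!A-Za-z0-9_.-]*) return 1 ;;
    *) return 0 ;;
  esac
}

# Try the check on two illustrative candidates.
for candidate in 'Rabbit_Pass-1' 'p@ssword'; do
  if is_url_safe_password "$candidate"; then
    echo "$candidate: ok"
  else
    echo "$candidate: contains characters that may break the URL"
  fi
done
```

A hex-only password, for example one produced by `openssl rand -hex 16`, always passes this check.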

3. Install the Memcached cache service:

#yum install -y memcached python-memcached

#vim /etc/sysconfig/memcached

OPTIONS="-l 127.0.0.1,::1,controller"

#systemctl enable memcached ; systemctl restart memcached

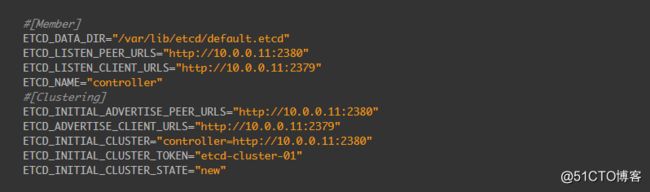

4. Install the etcd service:

#yum install -y etcd

#vim /etc/etcd/etcd.conf //change the following parameters

#[Member]

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="http://10.0.0.11:2380"

ETCD_LISTEN_CLIENT_URLS="http://10.0.0.11:2379"

ETCD_NAME="controller"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.0.0.11:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.11:2379"

ETCD_INITIAL_CLUSTER="controller=http://10.0.0.11:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER_STATE="new"

#systemctl enable etcd ; systemctl restart etcd

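At this point the base environment is complete, and the OpenStack service components can be installed.

III. Installing the OpenStack service components:

1. Deploy the Keystone service on the controller node:

⑴ Configure the database:

#mysql -u root -p //log in to MySQL

① Create the keystone database: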

MariaDB [(none)]> CREATE DATABASE keystone;

② Grant access to the keystone database:

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' \

IDENTIFIED BY 'KEYSTONE_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' \

IDENTIFIED BY 'KEYSTONE_DBPASS';

MariaDB [(none)]> flush privileges;

替换KEYSTONE_DBPASS为访问密码

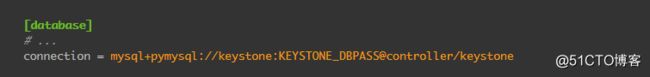

⑵ 安装配置组件:

#yum install –y openstack-keystone httpd mod_wsgi

#vim /etc/keystone/keystone.conf

[database]

# ...

connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

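Replace KEYSTONE_DBPASS with your password. As mentioned earlier, the password must not contain "@": in a connection URL, whatever follows an "@" is taken to be the hostname. If the password contains one, deploying the Nova node later fails with an error saying the host cannot be found, and the service does not start.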

[token]

# ...

provider = fernet

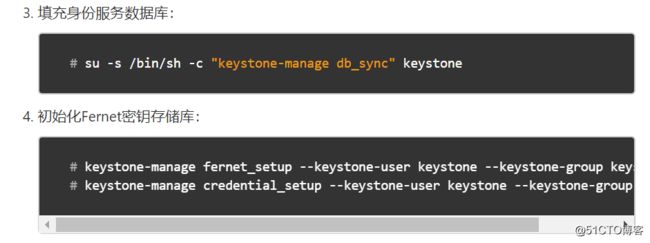

#su -s /bin/sh -c "keystone-manage db_sync" keystone //populate the Identity service database

#keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone //initialize the Fernet key repositories

#keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

⑶ Bootstrap the Identity service:

keystone-manage bootstrap --bootstrap-password ADMIN_PASS \

--bootstrap-admin-url http://controller:5000/v3/ \

--bootstrap-internal-url http://controller:5000/v3/ \

--bootstrap-public-url http://controller:5000/v3/ \

--bootstrap-region-id RegionOne

//ADMIN_PASS为设置的密码

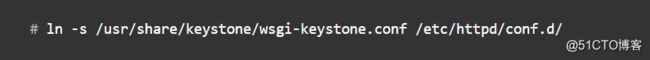

⑷配置Apache HTTP服务:

#vim /etc/httpd/conf/httpd.conf

ServerName controller

创建软链接:

#ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

启动服务:

#systemctl enable httpd ; systemctl restart httpd

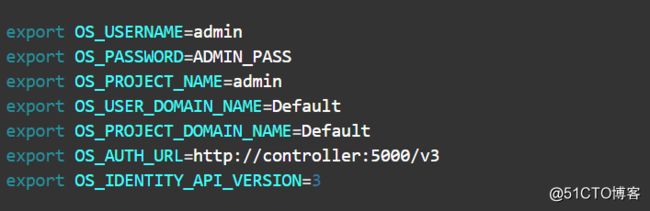

⑸ 创建临时变量文件:

#vim admin-openrc

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

替换ADMIN_PASS为设置的密码

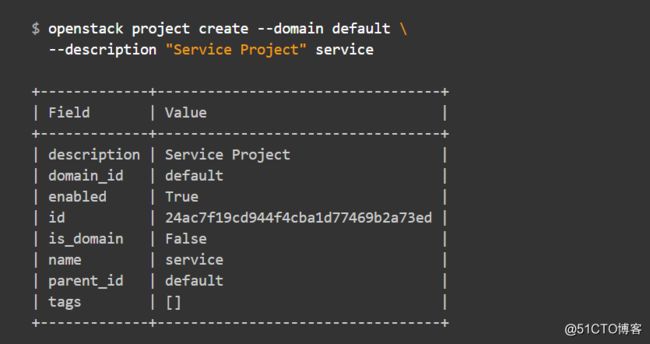

⑹创建域、项目,用户和角色:

① 创建service项目,其中包含添加到环境中的每个服务的唯一用户:

#openstack project create --domain default \

--description "Service Project" service

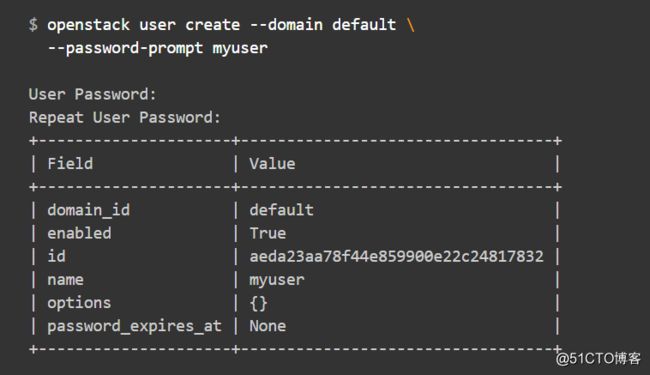

② Create the myproject project; regular (non-admin) tasks should use an unprivileged project and user:

#openstack project create --domain default \

--description "Demo Project" myproject

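Create the myuser user (you are prompted to set a password):

#openstack user create --domain default --password-prompt myuser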

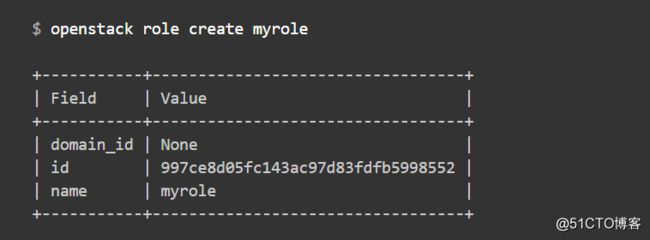

Create the myrole role:

#openstack role create myrole

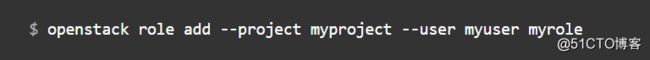

Add the myrole role to the myproject project and the myuser user:

#openstack role add --project myproject --user myuser myrole

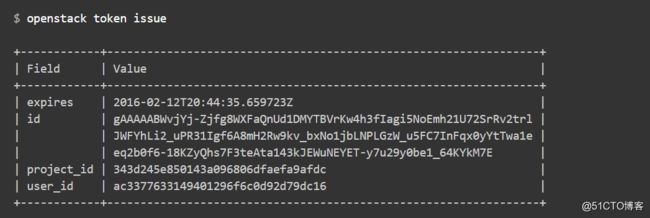

⑺ Verify:

① Unset the temporary variables:

#unset OS_AUTH_URL OS_PASSWORD

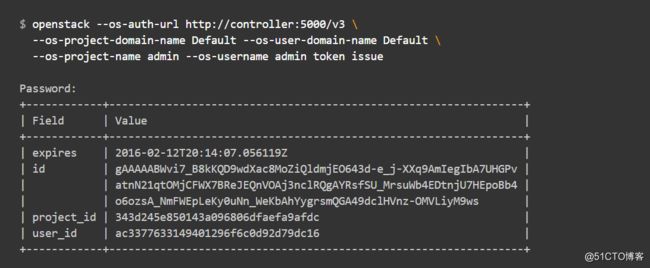

② Request an authentication token as the admin user (enter the admin password when prompted):

#openstack --os-auth-url http://controller:5000/v3 \

--os-project-domain-name Default --os-user-domain-name Default \

--os-project-name admin --os-username admin token issue

③ Request an authentication token as the myuser user (enter the myuser password when prompted):

#openstack --os-auth-url http://controller:5000/v3 \

--os-project-domain-name Default --os-user-domain-name Default \

--os-project-name myproject --os-username myuser token issue

⑻ Create the environment scripts:

First delete the earlier temporary script:

① Create the environment script for the admin user:

#vim admin-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

Replace ADMIN_PASS with the password.

② Create the environment script for the demo user:

#vim demo-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=myproject

export OS_USERNAME=myuser

export OS_PASSWORD=MYUSER_PASS

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

Replace MYUSER_PASS with the password.
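The admin-openrc and demo-openrc files differ only in project, user name and password, so both can be produced from one template. The following is a sketch under that assumption; `make_openrc` is a hypothetical helper, and the password shown is the same placeholder used above.

```shell
# Print the contents of an openrc file.
# $1 project name, $2 user name, $3 password
make_openrc() {
  cat <<EOF
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=$1
export OS_USERNAME=$2
export OS_PASSWORD=$3
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
}

# Preview the demo user's file on standard output.
make_openrc myproject myuser MYUSER_PASS

# To write the real files instead (not executed here):
#   make_openrc admin admin ADMIN_PASS > admin-openrc
#   make_openrc myproject myuser MYUSER_PASS > demo-openrc
```

Because the password is written in clear text, it may be worth restricting the file permissions, for example with `chmod 600 admin-openrc demo-openrc`.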

③ Verify the script:

#. admin-openrc

#openstack token issue

2. Deploy the Glance service on the controller node:

⑴ Configure the database:

#mysql -u root -p

MariaDB [(none)]> CREATE DATABASE glance;

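MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' \

IDENTIFIED BY 'GLANCE_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' \

IDENTIFIED BY 'GLANCE_DBPASS';

MariaDB [(none)]> flush privileges;

Replace GLANCE_DBPASS with a suitable password.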

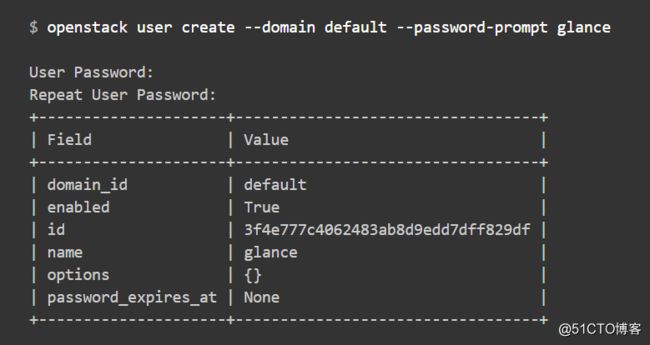

⑵ Create the credentials and API endpoints:

#. admin-openrc

Create the glance user:

#openstack user create --domain default --password-prompt glance

Add the admin role:

#openstack role add --project service --user glance admin

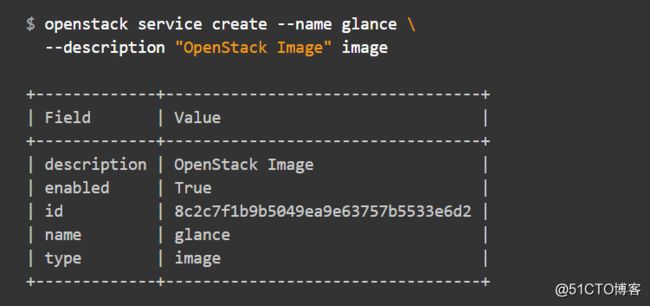

Create the glance service:

#openstack service create --name glance \

--description "OpenStack Image" image

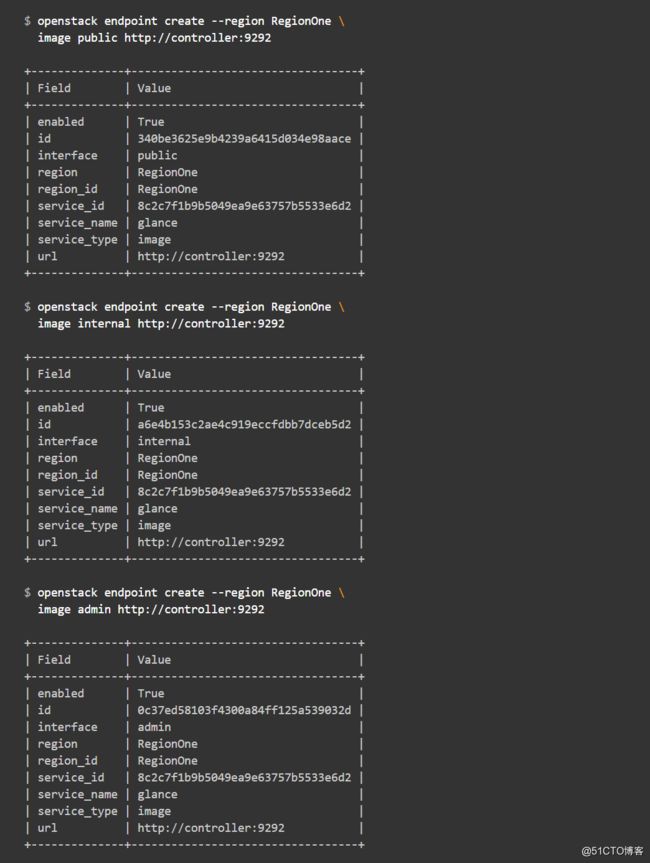

Create the Image service API endpoints:

#openstack endpoint create --region RegionOne \

image public http://controller:9292

#openstack endpoint create --region RegionOne \

image internal http://controller:9292

#openstack endpoint create --region RegionOne \

image admin http://controller:9292
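Each service registers the same URL three times, once per interface (public, internal, admin). A small loop can print the three commands for review before they are run. This is a sketch only: `endpoint_cmds` is a hypothetical helper that prints commands and does not call the openstack client itself.

```shell
# Print the three "endpoint create" commands for one service.
# $1 service type, $2 endpoint URL, $3 region (optional, default RegionOne)
endpoint_cmds() {
  for iface in public internal admin; do
    printf 'openstack endpoint create --region %s %s %s %s\n' \
      "${3:-RegionOne}" "$1" "$iface" "$2"
  done
}

# Dry run for the Image service endpoints shown above.
endpoint_cmds image http://controller:9292
```

After reviewing the output, it could be piped to `sh`. URLs containing `%(project_id)s`, as used later for Cinder and Swift, would need shell quoting first, which this sketch does not handle.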

⑶ Install and configure the Image components:

#yum install -y openstack-glance

Edit /etc/glance/glance-api.conf:

#vim /etc/glance/glance-api.conf

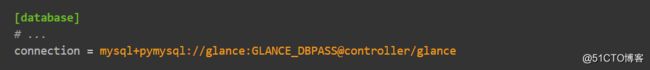

[database]

# ...

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

Replace GLANCE_DBPASS with the password.

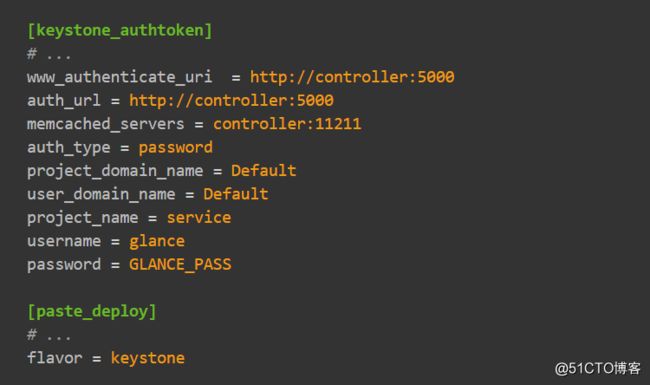

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = GLANCE_PASS

[paste_deploy]

# ...

flavor = keystone

Replace GLANCE_PASS with the password.

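Populate the Image service database, then start the service:

#su -s /bin/sh -c "glance-manage db_sync" glance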

#systemctl enable openstack-glance-api ; systemctl restart openstack-glance-api

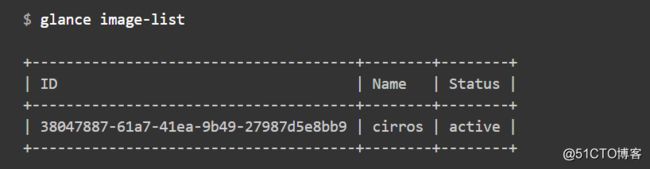

⑷ Verify the service:

#. admin-openrc

Download the CirrOS image in qcow2 format, a tiny Linux-like test image of roughly 12 MB:

#wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

#glance image-create --name "cirros" \

--file cirros-0.4.0-x86_64-disk.img \

--disk-format qcow2 --container-format bare \

--visibility public

验证:

#glance image-list

3. 部署Nova服务组件controller节点:

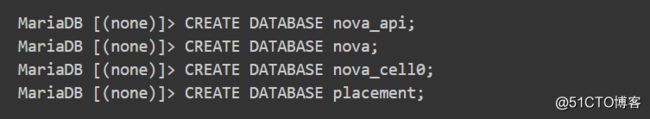

⑴ 配置数据库:

#mysql –u root –p

创建数据库:

MariaDB [(none)]> CREATE DATABASE nova_api;

MariaDB [(none)]> CREATE DATABASE nova;

MariaDB [(none)]> CREATE DATABASE nova_cell0;

MariaDB [(none)]> CREATE DATABASE placement;

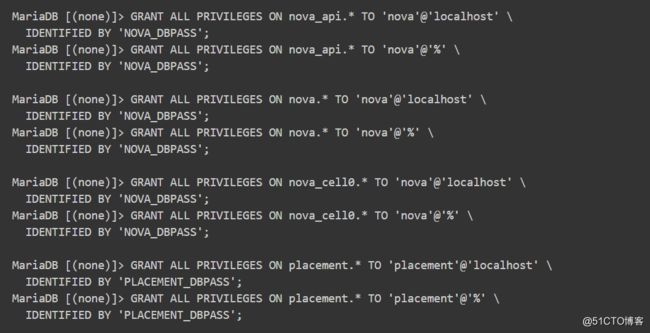

Grant access to the databases:

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' \

IDENTIFIED BY 'PLACEMENT_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' \

IDENTIFIED BY 'PLACEMENT_DBPASS';

Replace NOVA_DBPASS and PLACEMENT_DBPASS with the passwords.
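The grant statements above follow one pattern: each database gets the same two grants, one for 'localhost' and one for '%'. A sketch that prints them from a template is shown below. `grant_sql` is a hypothetical helper, it only prints SQL, and it assumes the password contains no single quote.

```shell
# Print the two GRANT statements for one database.
# $1 database name, $2 database user, $3 password
grant_sql() {
  for host in localhost '%'; do
    printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
      "$1" "$2" "$host" "$3"
  done
}

# Statements for the three nova databases and the placement database.
for db in nova_api nova nova_cell0; do
  grant_sql "$db" nova NOVA_DBPASS
done
grant_sql placement placement PLACEMENT_DBPASS
```

The printed statements can be reviewed and then pasted into the MariaDB prompt, or piped into `mysql -u root -p`.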

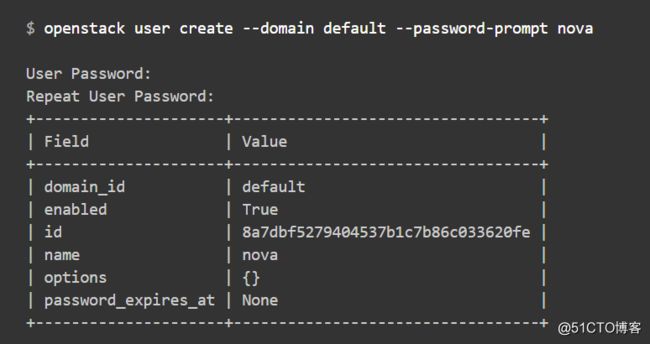

⑵ Create the service credentials and endpoints:

Create the nova user:

#openstack user create --domain default --password-prompt nova

Add the admin role:

#openstack role add --project service --user nova admin

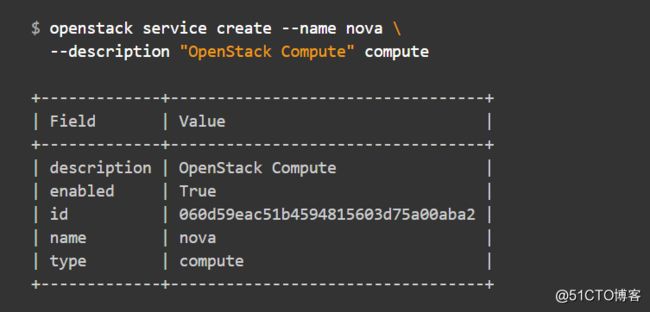

Create the nova service:

#openstack service create --name nova \

--description "OpenStack Compute" compute

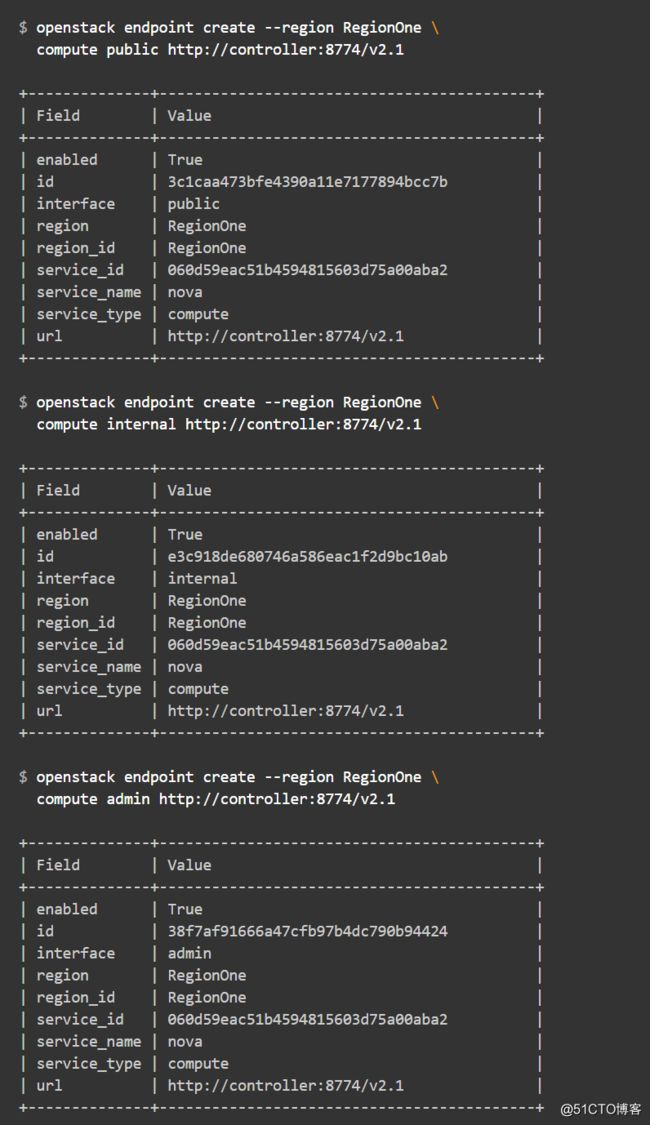

Create the Compute API service endpoints:

#openstack endpoint create --region RegionOne \

compute public http://controller:8774/v2.1

#openstack endpoint create --region RegionOne \

compute internal http://controller:8774/v2.1

#openstack endpoint create --region RegionOne \

compute admin http://controller:8774/v2.1

Create the placement service user and set its password:

#openstack user create --domain default --password-prompt placement

Add the admin role:

#openstack role add --project service --user placement admin

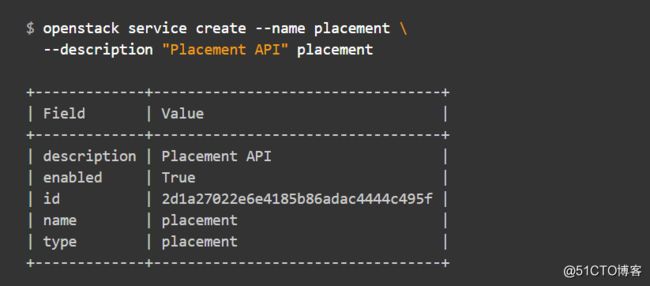

Create the Placement API service:

#openstack service create --name placement \

--description "Placement API" placement

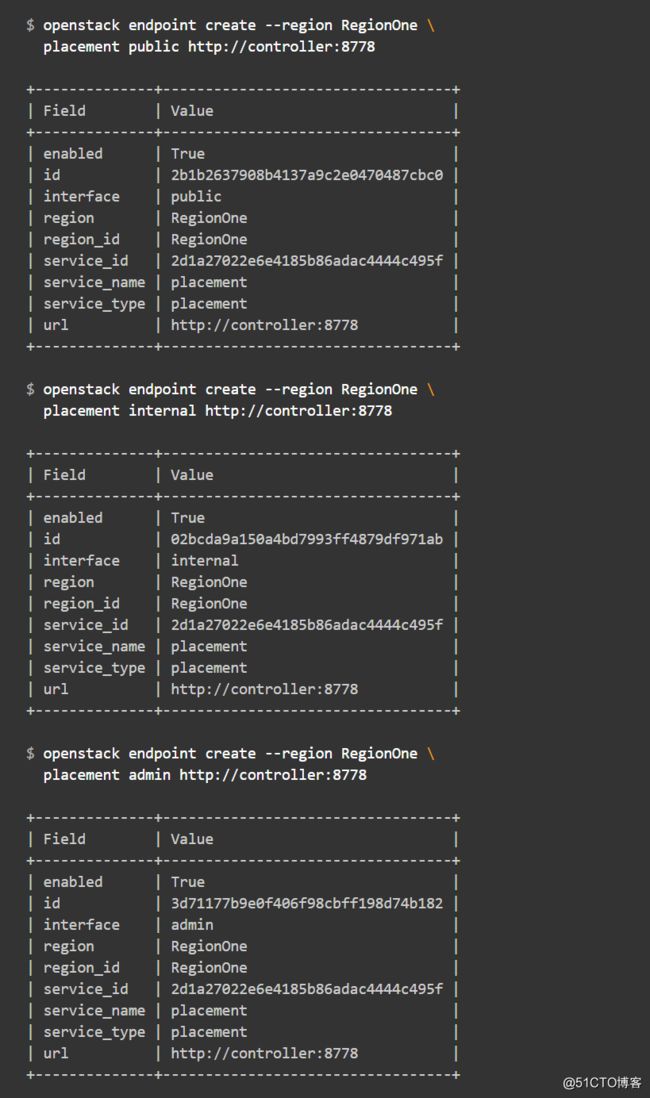

Create the Placement API service endpoints:

#openstack endpoint create --region RegionOne \

placement public http://controller:8778

#openstack endpoint create --region RegionOne \

placement internal http://controller:8778

#openstack endpoint create --region RegionOne \

placement admin http://controller:8778

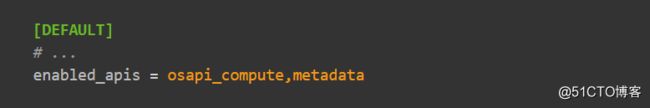

⑶ Install and configure the components:

① Install the components:

#yum install -y openstack-nova-api openstack-nova-conductor \

openstack-nova-console openstack-nova-novncproxy \

openstack-nova-scheduler openstack-nova-placement-api

② Configure the components:

Edit /etc/nova/nova.conf:

#vim /etc/nova/nova.conf

[DEFAULT]

# ...

enabled_apis = osapi_compute,metadata

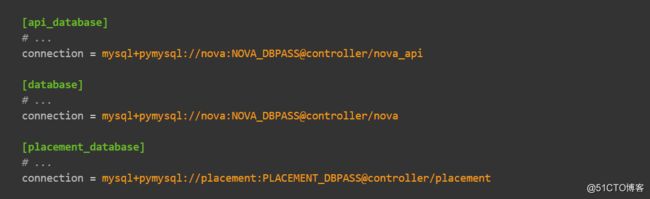

[api_database]

# ...

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

[database]

# ...

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

[placement_database]

# ...

connection = mysql+pymysql://placement:PLACEMENT_DBPASS@controller/placement

Replace NOVA_DBPASS and PLACEMENT_DBPASS with the passwords.

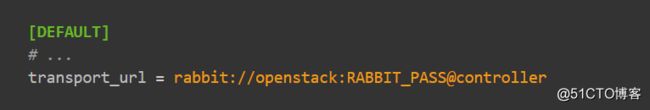

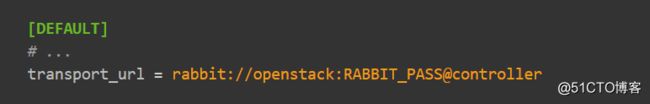

[DEFAULT]

# ...

transport_url = rabbit://openstack:RABBIT_PASS@controller

Replace RABBIT_PASS with the password.

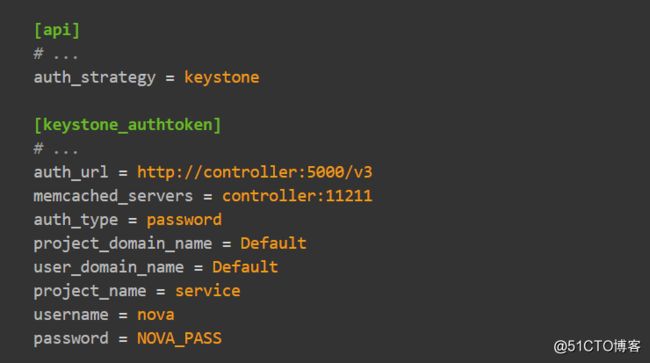

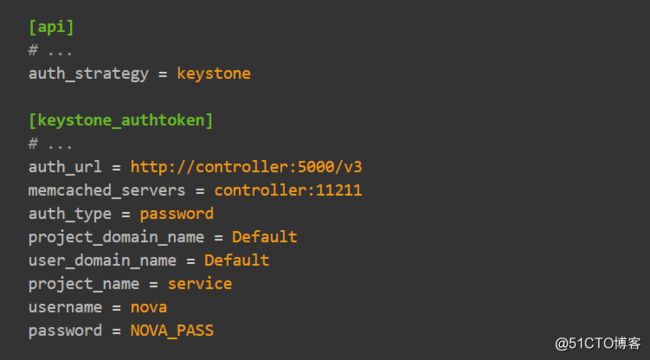

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

Replace NOVA_PASS with the password.

In the [DEFAULT] section, set the my_ip option to the management interface IP address of the controller node:

[DEFAULT]

# ...

my_ip = 10.0.0.11

[DEFAULT]

# ...

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

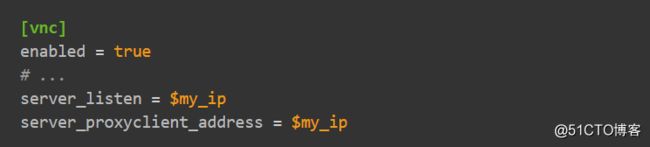

[vnc]

enabled = true

# ...

server_listen = $my_ip

server_proxyclient_address = $my_ip

[glance]

# ...

api_servers = http://controller:9292

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

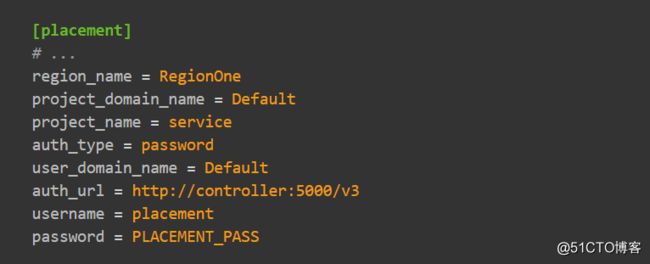

[placement]

# ...

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = PLACEMENT_PASS

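Replace PLACEMENT_PASS with the password.

Edit /etc/httpd/conf.d/00-nova-placement-api.conf and add the following:

#vim /etc/httpd/conf.d/00-nova-placement-api.conf

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

Restart httpd:

#systemctl restart httpd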

③ Populate the nova-api and placement databases:

#su -s /bin/sh -c "nova-manage api_db sync" nova

④ Register the cell0 database:

#su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

⑤ Create the cell1 cell:

#su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

⑥ Populate the nova database:

#su -s /bin/sh -c "nova-manage db sync" nova

⑦ Verify that nova cell0 and cell1 are registered correctly:

#su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

⑷ Start the services:

#systemctl enable openstack-nova-api.service \

openstack-nova-consoleauth openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

#systemctl restart openstack-nova-api.service \

openstack-nova-consoleauth openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

4. Deploy the Nova service on the compute1 node:

⑴ Install and configure the components:

① Install the package:

#yum install -y openstack-nova-compute

⑵ Configure the components:

#vim /etc/nova/nova.conf

[DEFAULT]

# ...

enabled_apis = osapi_compute,metadata

[DEFAULT]

# ...

transport_url = rabbit://openstack:RABBIT_PASS@controller

Replace RABBIT_PASS with the password.

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

Replace NOVA_PASS with the password.

[DEFAULT]

# ...

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

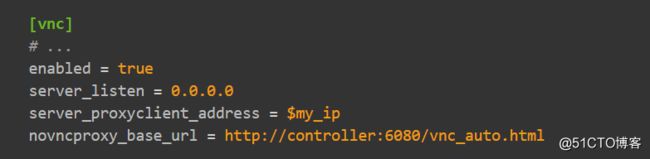

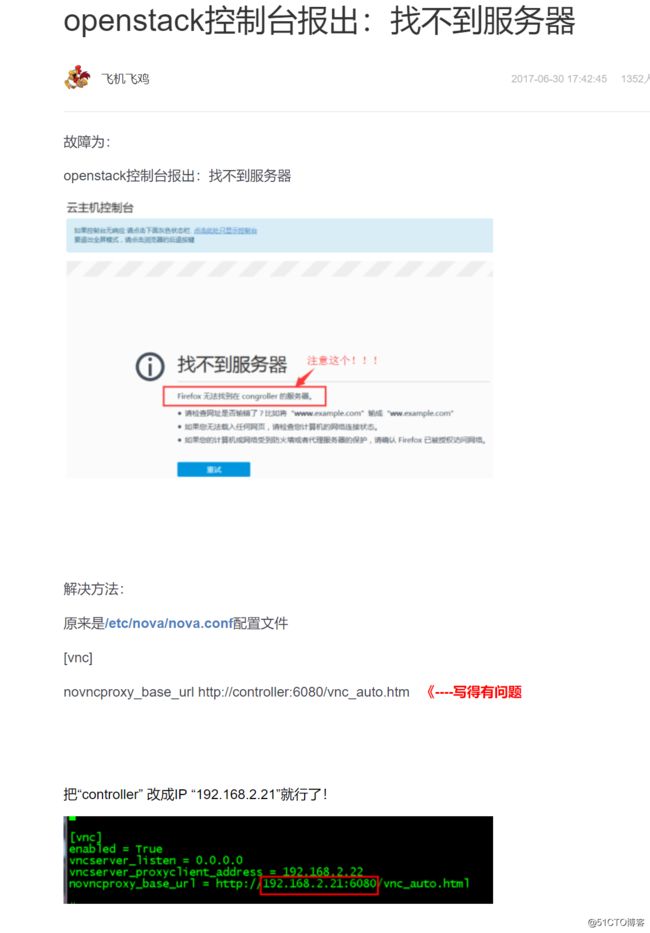

[vnc]

# ...

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://10.1.0.100:6080/vnc_auto.html

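Note: in this URL, replace controller with an IP address of the controller node that your browser can actually reach; otherwise the console reports "server not found".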

[glance]

# ...

api_servers = http://controller:9292

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

[placement]

# ...

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = PLACEMENT_PASS

替换PLACEMENT_PASS

⑶ 启动服务:

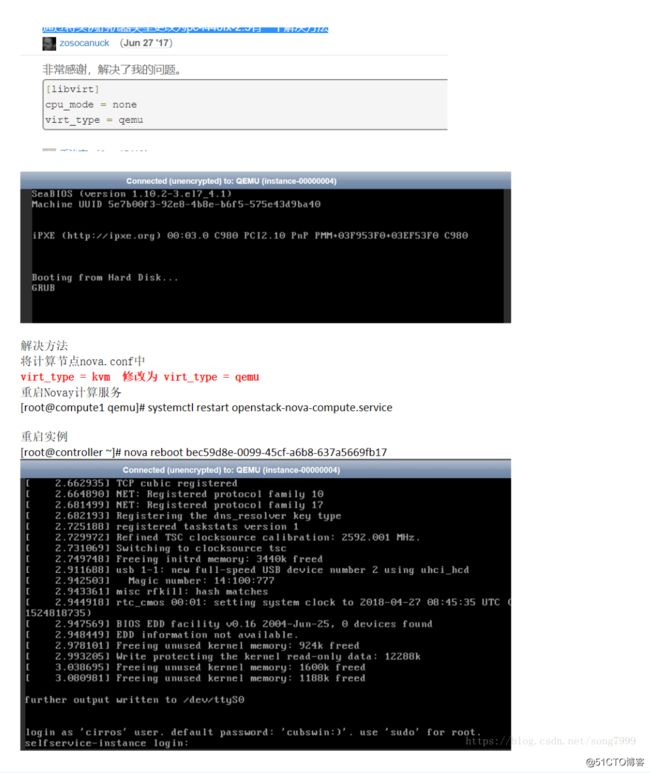

① 查看计算节点是否开启虚拟化支持:

#egrep –c '(vmx|svm)' /proc/cpuinfo

如果此命令返回值为0,那就是你的硬件或虚机未开启虚拟化,需要开启虚拟化。

在作者环境中,实例在第一次运行时,提示“Booting from Hard Disk... GRUB”,后查明原因需要更改libvirt配置:

注:读者可根据自己环境适当修改。

#vim /etc/nova/nova.conf

[libvirt]

# ...

virt_type = qemu

Source: https://blog.csdn.net/song7999/article/details/80119010
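The check above reduces to a simple rule, which the official installation guide also follows: if neither the `vmx` nor the `svm` CPU flag is present, libvirt has to fall back to `qemu`; otherwise `kvm` can be used. A sketch of that rule follows. `pick_virt_type` is a hypothetical helper name, and the optional file argument exists only so the function is easy to try out on sample data.

```shell
# Print "kvm" when the CPU flags include vmx or svm, otherwise "qemu".
# $1: path to a cpuinfo-style file (optional, default /proc/cpuinfo)
pick_virt_type() {
  if grep -Eq '(vmx|svm)' "${1:-/proc/cpuinfo}"; then
    echo kvm
  else
    echo qemu
  fi
}

# Intended use on the compute node (not executed here):
#   echo "virt_type = $(pick_virt_type)"
```

This only reports what the CPU flags suggest. As described above, the author's virtualized environment still needed `virt_type = qemu`, so treat the result as a starting point.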

#systemctl enable libvirtd.service openstack-nova-compute.service ; systemctl restart libvirtd.service openstack-nova-compute.service

⑷ Add the compute node to the cell database (run on the controller node):

#. admin-openrc

#openstack compute service list --service nova-compute

#su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

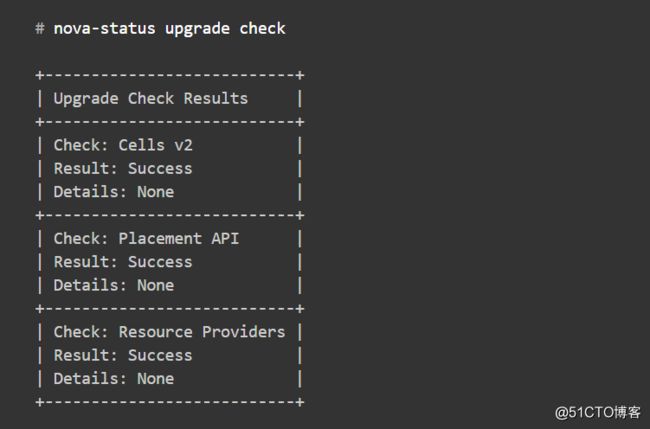

⑸ Verify:

On the controller node:

#. admin-openrc

#openstack compute service list

#openstack catalog list

#openstack image list

#nova-status upgrade check

⑹ 部署完NOVA服务后需要解决的一些报错:

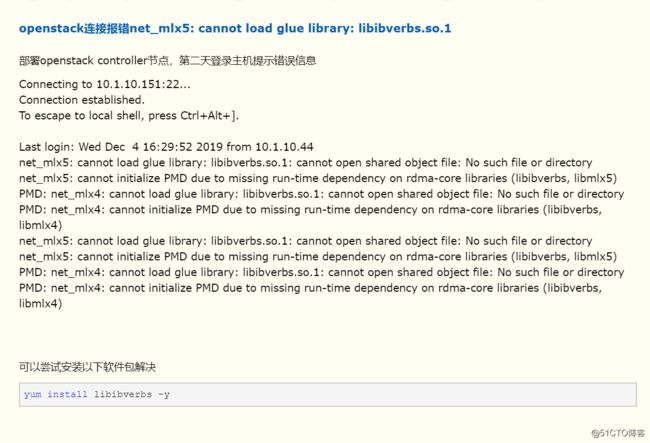

① openstack连接报错net_mlx5: cannot load glue library: libibverbs.so.1

原文地址:https://www.cnblogs.com/omgasw/p/11987504.html

② did not finish being created even after we waited 189 seconds or 61 attempts. And its status is downloading

解决办法

在nova.conf中有一个控制卷设备重试的参数:block_device_allocate_retries,可以通过修改此参数延长等待时间。

该参数默认值为60,这个对应了之前实例创建失败消息里的61 attempts。我们可以将此参数设置的大一点,例如:180。这样Nova组件就不会等待卷创建超时,也即解决了此问题。

原文地址:https://www.cnblogs.com/mrwuzs/p/10282436.html
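The total time Nova waits is roughly the retry count multiplied by the interval between retries. Assuming the interval option `block_device_allocate_retries_interval` is at its default of 3 seconds (an assumption worth checking in your own nova.conf, since the text above does not state it), the effect of raising the retry count can be estimated as follows. The helper `max_wait_seconds` is hypothetical.

```shell
# Estimate the maximum wait in seconds as retries * interval.
# $1 retry count, $2 interval in seconds (optional, assumed default 3)
max_wait_seconds() {
  echo $(( $1 * ${2:-3} ))
}

echo "retries=60:  $(max_wait_seconds 60) s"
echo "retries=180: $(max_wait_seconds 180) s"
```

The estimate ignores the time each status poll itself takes, so the figure Nova reports in its error message can be somewhat higher.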

5. Deploy the Neutron service on the controller node:

⑴ Configure the database:

#mysql -u root -p

MariaDB [(none)]> CREATE DATABASE neutron;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' \

IDENTIFIED BY 'NEUTRON_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' \

IDENTIFIED BY 'NEUTRON_DBPASS';

替换NEUTRON_DBPASS

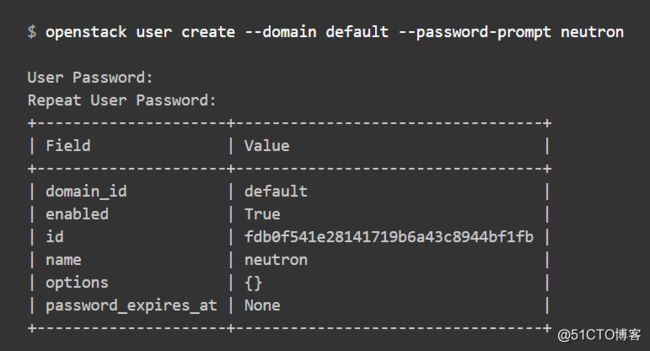

⑵ 创建凭证、API端点:

#. admin-openrc

#openstack user create --domain default --password-prompt neutron

#openstack role add --project service --user neutron admin

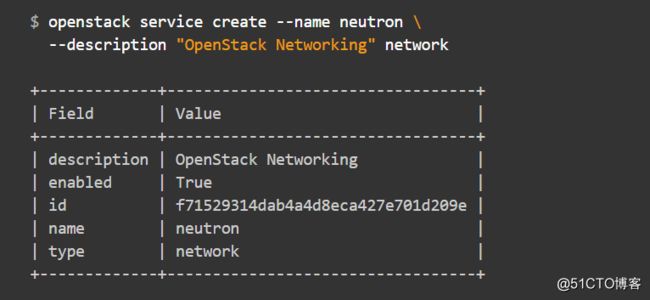

#openstack service create --name neutron \

--description "OpenStack Networking" network

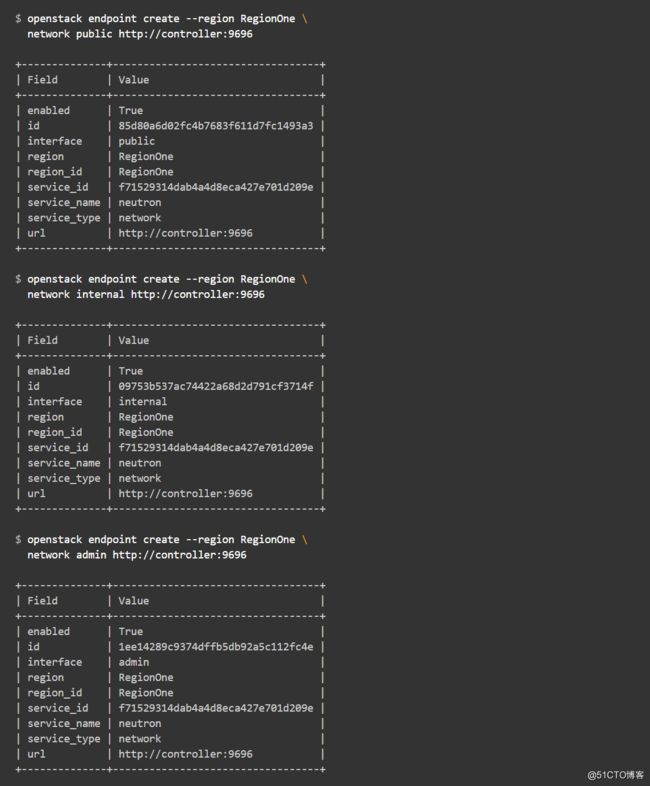

Create the Networking service API endpoints:

#openstack endpoint create --region RegionOne \

network public http://controller:9696

#openstack endpoint create --region RegionOne \

network internal http://controller:9696

#openstack endpoint create --region RegionOne \

network admin http://controller:9696

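⑶ Configure the networking option (self-service networks):

There are two options to choose from before configuring networking:

Networking option 1: provider networks. Instances receive IP addresses directly from the physical network; there is no concept of private networks or floating IPs.

Networking option 2: self-service networks. These let you build private networks and use floating IPs; this is the recommended option.

Setting up self-service networks:

① Install the components:

#yum install -y openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables

② Configure the server component:

#vim /etc/neutron/neutron.conf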

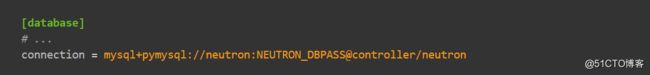

[database]

# ...

connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

Replace NEUTRON_DBPASS with the password.

[DEFAULT]

# ...

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

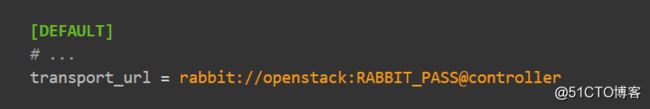

[DEFAULT]

# ...

transport_url = rabbit://openstack:RABBIT_PASS@controller

Replace RABBIT_PASS with the password.

[DEFAULT]

# ...

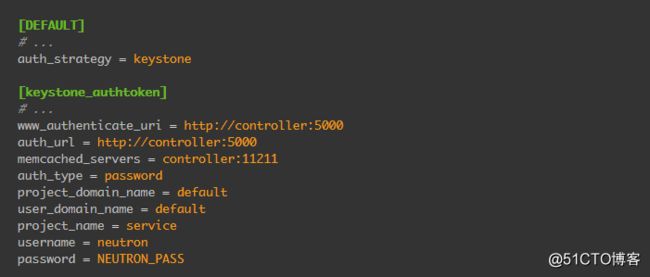

auth_strategy = keystone

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASS

Replace NEUTRON_PASS with the password.

[oslo_concurrency]

# ...

lock_path = /var/lib/neutron/tmp

③ Configure the Modular Layer 2 (ML2) plug-in:

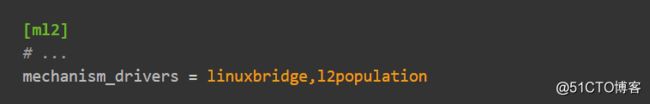

#vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

# ...

type_drivers = flat,vlan,vxlan

[ml2]

# ...

tenant_network_types = vxlan

[ml2]

# ...

mechanism_drivers = linuxbridge,l2population

[ml2]

# ...

extension_drivers = port_security

[ml2_type_flat]

# ...

flat_networks = provider

[ml2_type_vxlan]

# ...

vni_ranges = 1:1000

[securitygroup]

# ...

enable_ipset = true

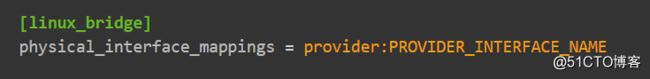

④ Configure the Linux bridge agent:

#vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:ens192

Note: the official guide's placeholder PROVIDER_INTERFACE_NAME stands for the name of your external NIC, which gives, for example, provider:ens192.

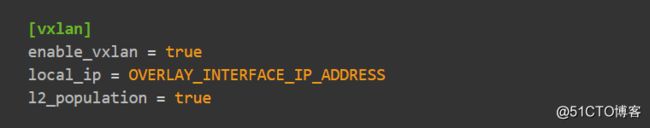

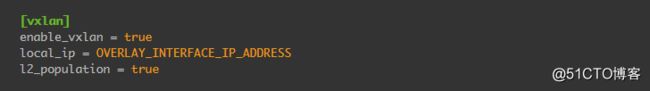

[vxlan]

enable_vxlan = true

local_ip = 10.0.0.11

l2_population = true

Note: the official guide's placeholder OVERLAY_INTERFACE_IP_ADDRESS stands for the management IP address of the controller node, 10.0.0.11.

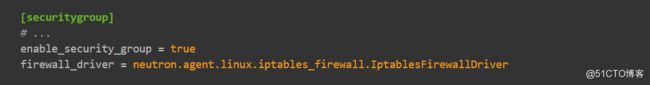

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

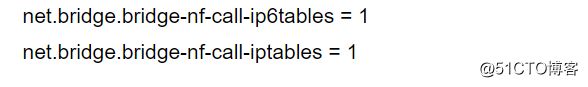

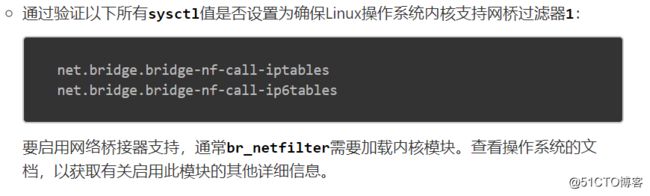

Enable bridge-nf-call-ip6tables (the official guide does not describe how to configure this):

#vim /etc/sysctl.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

#modprobe br_netfilter

#sysctl -p
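`modprobe br_netfilter` loads the module only until the next reboot, and the two sysctl keys exist only while the module is loaded. On systemd-based systems such as CentOS 7, listing the module in a file under /etc/modules-load.d makes it load at boot. A sketch follows; `persist_module` is a hypothetical helper, and its optional directory argument is there so it can be tried outside /etc.

```shell
# Write a modules-load.d entry so the module is loaded at boot.
# $1 module name, $2 target directory (optional, default /etc/modules-load.d)
persist_module() {
  target_dir=${2:-/etc/modules-load.d}
  printf '%s\n' "$1" > "$target_dir/$1.conf"
}

# Intended use on each node, as root (not executed here):
#   persist_module br_netfilter
```

With the module loaded at boot, the settings in /etc/sysctl.conf should apply again after a restart without running modprobe by hand.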

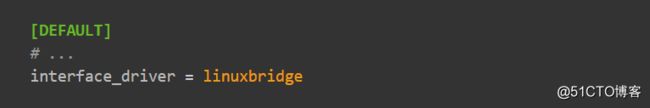

⑤ Configure the layer-3 agent:

#vim /etc/neutron/l3_agent.ini

[DEFAULT]

# ...

interface_driver = linuxbridge

⑥ Configure the DHCP agent:

#vim /etc/neutron/dhcp_agent.ini

[DEFAULT]

# ...

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

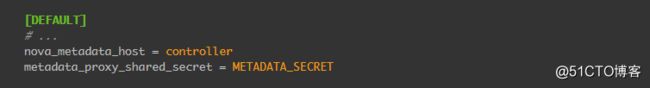

⑷ Configure the metadata agent:

#vim /etc/neutron/metadata_agent.ini

[DEFAULT]

# ...

nova_metadata_host = controller

metadata_proxy_shared_secret = METADATA_SECRET

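Note: METADATA_SECRET is the shared secret for the metadata proxy; think of it as a password. In a test environment you can leave it as it is, but in production you must change it. I leave it unchanged here.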

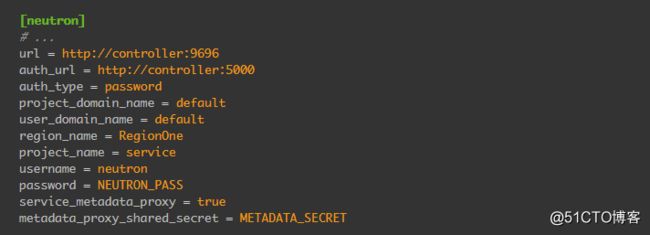

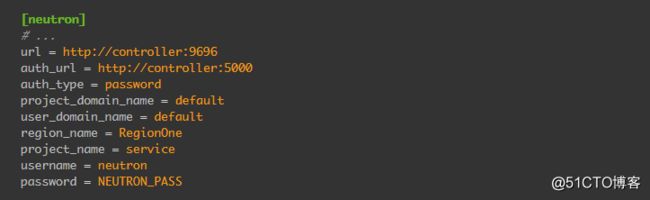

⑸ Configure the Compute service to use the Networking service:

#vim /etc/nova/nova.conf

[neutron]

# ...

url = http://controller:9696

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

service_metadata_proxy = true

metadata_proxy_shared_secret = METADATA_SECRET

替换NEUTRON_PASS为密码

替换METADATA_SECRET为适当机密,我这里保持不修改

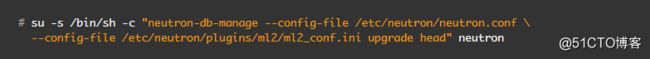

⑹ 启动服务:

创建软链接:

#ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

填充数据库:

#su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \

--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

#systemctl restart openstack-nova-api.service

Start the Networking services:

#systemctl enable neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service

#systemctl restart neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service

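For networking option 2 (self-service networks), also start the layer-3 service:

#systemctl enable neutron-l3-agent.service ; systemctl restart neutron-l3-agent.service

6. Deploy the Neutron service on the compute1 node:

⑴ Install the components:

#yum install -y openstack-neutron-linuxbridge ebtables ipset

⑵ Configure the components: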

#vim /etc/neutron/neutron.conf

[DEFAULT]

# ...

transport_url = rabbit://openstack:RABBIT_PASS@controller

Replace RABBIT_PASS with the password.

[DEFAULT]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASS

Replace NEUTRON_PASS with the password.

[oslo_concurrency]

# ...

lock_path = /var/lib/neutron/tmp

⑶ Networking option 2: self-service networks

① Configure the Linux bridge agent:

#vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:ens192

The official guide's placeholder PROVIDER_INTERFACE_NAME is replaced with the name of the external NIC (ens192 here).

[vxlan]

enable_vxlan = true

local_ip = 10.0.0.31

l2_population = true

The official guide's placeholder OVERLAY_INTERFACE_IP_ADDRESS is replaced with the IP address of the management (internal) NIC on compute1.

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Enable bridge-nf-call-ip6tables (the official guide does not describe how to configure this):

#vim /etc/sysctl.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

#modprobe br_netfilter

#sysctl -p

⑷ Configure the Compute service to use the Networking service:

#vim /etc/nova/nova.conf

[neutron]

# ...

url = http://controller:9696

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

Replace NEUTRON_PASS with the password.

⑸ Start the services:

# systemctl restart openstack-nova-compute.service

# systemctl enable neutron-linuxbridge-agent.service ; systemctl restart neutron-linuxbridge-agent.service

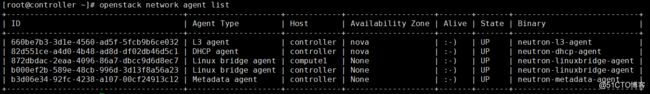

⑹ Verify:

On the controller node:

#. admin-openrc

#openstack extension list --network

#openstack network agent list

7. Deploy the Horizon service on the controller node:

⑴ Install:

#yum install -y openstack-dashboard

⑵ Configure:

#vim /etc/openstack-dashboard/local_settings

OPENSTACK_HOST = "controller"

Allow hosts to access the dashboard:

ALLOWED_HOSTS = ['*']

Note: ['*'] accepts all hosts and is not recommended in production.

Configure the memcached session storage service:

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller:11211',

}

}

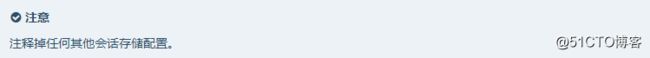

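Note: add any of the settings above that are missing, and comment out any other session storage configuration.

Enable the Identity API version 3:

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

Enable support for domains:

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

Configure the API versions: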

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 2,

}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

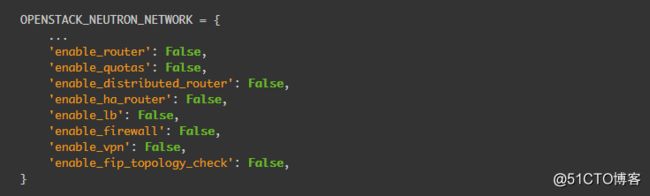

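If you chose networking option 1, disable support for layer-3 networking services. With self-service networks, layer 3 stays enabled; turn the options below on or off as you need: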

OPENSTACK_NEUTRON_NETWORK = {

...

'enable_router': True,

'enable_quotas': False,

'enable_distributed_router': True,

'enable_ha_router': True,

'enable_lb': False,

'enable_firewall': True,

'enable_vpn': False,

'enable_fip_topology_check': False,

}

Time zone:

TIME_ZONE = "Asia/Shanghai"

Edit openstack-dashboard.conf and add the following line if it is missing:

#vim /etc/httpd/conf.d/openstack-dashboard.conf

WSGIApplicationGroup %{GLOBAL}

⑶ Restart the services:

#systemctl restart httpd.service memcached.service

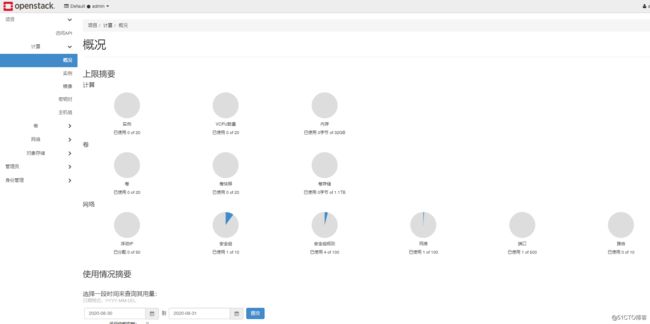

⑷验证:

使用浏览器访问:http://10.0.0.100/dashboard

注:访问IP根据自己实际IP输入

使用default域为默认域,admin或demo为登录用户,密码是自己修改的密码。

8. 部署Cinder服务组件controller节点:

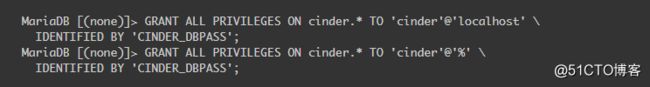

⑴ 创建数据库:

#mysql –u root –p

MariaDB [(none)]> CREATE DATABASE cinder;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' \

IDENTIFIED BY 'CINDER_DBPASS';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' \

IDENTIFIED BY 'CINDER_DBPASS';

替换CINDER_DBPASS

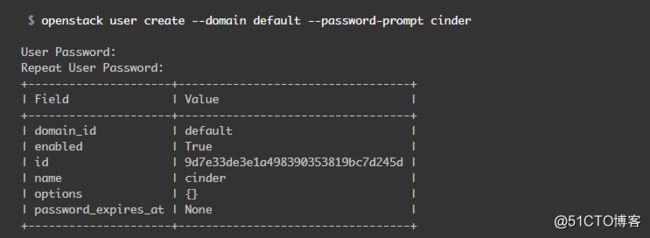

⑵ 创建服务凭证和API端点:

#. admin-openrc

#openstack user create --domain default --password-prompt cinder

#openstack role add --project service --user cinder admin

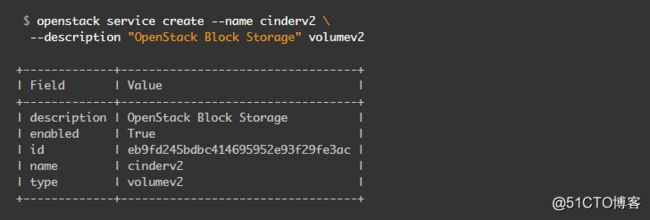

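#openstack service create --name cinderv2 \

--description "OpenStack Block Storage" volumev2

#openstack service create --name cinderv3 \

--description "OpenStack Block Storage" volumev3

Create the Block Storage service API v2 and v3 endpoints: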

#openstack endpoint create --region RegionOne \

volumev2 public http://controller:8776/v2/%\(project_id\)s

#openstack endpoint create --region RegionOne \

volumev2 internal http://controller:8776/v2/%\(project_id\)s

#openstack endpoint create --region RegionOne \

volumev2 admin http://controller:8776/v2/%\(project_id\)s

#openstack endpoint create --region RegionOne \

volumev3 public http://controller:8776/v3/%\(project_id\)s

#openstack endpoint create --region RegionOne \

volumev3 internal http://controller:8776/v3/%\(project_id\)s

#openstack endpoint create --region RegionOne \

volumev3 admin http://controller:8776/v3/%\(project_id\)s

⑶ Install and configure the components:

#yum install -y openstack-cinder

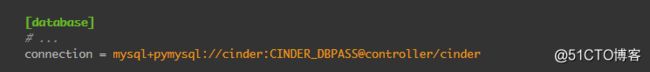

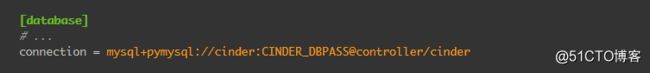

#vim /etc/cinder/cinder.conf

[database]

# ...

connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

Replace CINDER_DBPASS with the password.

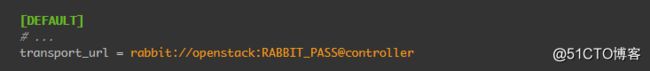

[DEFAULT]

# ...

transport_url = rabbit://openstack:RABBIT_PASS@controller

Replace RABBIT_PASS with the password.

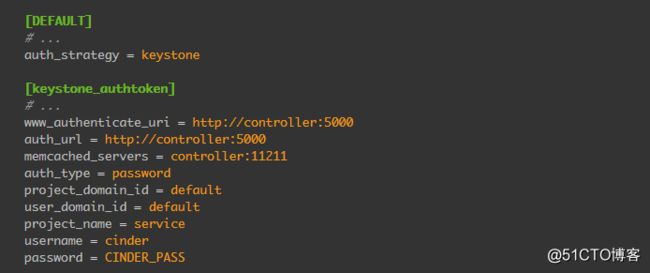

[DEFAULT]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_id = default

user_domain_id = default

project_name = service

username = cinder

password = CINDER_PASS

Replace CINDER_PASS with the password.

[DEFAULT]

# ...

my_ip = 10.0.0.11

[oslo_concurrency]

# ...

lock_path = /var/lib/cinder/tmp

#su -s /bin/sh -c "cinder-manage db sync" cinder

#vim /etc/nova/nova.conf

[cinder]

os_region_name = RegionOne

⑷ Start the services:

#systemctl restart openstack-nova-api.service

#systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

#systemctl restart openstack-cinder-api.service openstack-cinder-scheduler.service

9. Deploy the Cinder service on the block1 node:

⑴ Install and start the services:

#yum install -y lvm2 device-mapper-persistent-data

#systemctl enable lvm2-lvmetad.service ; systemctl restart lvm2-lvmetad.service

⑵ Configure LVM:

#pvcreate /dev/sdb

#vgcreate cinder-volumes /dev/sdb

#vim /etc/lvm/lvm.conf

devices {

...

filter = [ "a/sdb/", "r/.*/"]

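Note: if the system disk sda is itself partitioned with LVM, the extra step shown in the screenshot is also required. In the official guide this means adding sda to the filter as well, for example filter = [ "a/sda/", "a/sdb/", "r/.*/"].

⑶ Install and configure the components:

#yum install -y openstack-cinder targetcli python-keystone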

#vim /etc/cinder/cinder.conf

[database]

# ...

connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

Replace CINDER_DBPASS with the password.

[DEFAULT]

# ...

transport_url = rabbit://openstack:RABBIT_PASS@controller

Replace RABBIT_PASS with the password.

[DEFAULT]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_id = default

user_domain_id = default

project_name = service

username = cinder

password = CINDER_PASS

Replace CINDER_PASS with the password.

[DEFAULT]

# ...

my_ip = 10.0.0.41

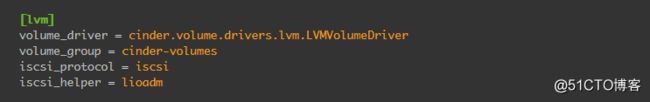

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-volumes

iscsi_protocol = iscsi

iscsi_helper = lioadm

Note: if the [lvm] section does not exist, create it.

[DEFAULT]

# ...

enabled_backends = lvm

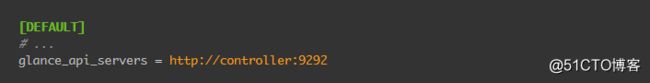

[DEFAULT]

# ...

glance_api_servers = http://controller:9292

[oslo_concurrency]

# ...

lock_path = /var/lib/cinder/tmp

⑷ Start the services:

#systemctl enable openstack-cinder-volume.service target.service

#systemctl restart openstack-cinder-volume.service target.service

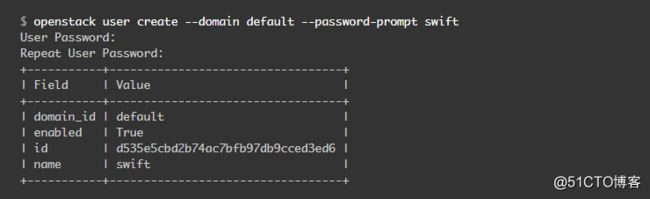

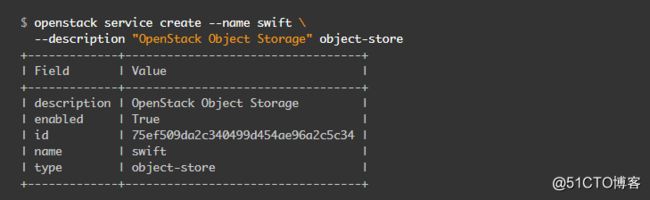

10. Deploy the Swift service on the controller node:

⑴ Create the credentials and API endpoints:

#. admin-openrc

#openstack user create --domain default --password-prompt swift

#openstack role add --project service --user swift admin

#openstack service create --name swift \

--description "OpenStack Object Storage" object-store

#openstack endpoint create --region RegionOne \

object-store public http://controller:8080/v1/AUTH_%\(project_id\)s

#openstack endpoint create --region RegionOne \

object-store internal http://controller:8080/v1/AUTH_%\(project_id\)s

#openstack endpoint create --region RegionOne \

object-store admin http://controller:8080/v1

⑵ Install and configure components:

#yum install -y openstack-swift-proxy python-swiftclient \

python-keystoneclient python-keystonemiddleware \

memcached

#curl -o /etc/swift/proxy-server.conf https://opendev.org/openstack/swift/raw/branch/master/etc/proxy-server.conf-sample

#vim /etc/swift/proxy-server.conf

[DEFAULT]

...

bind_port = 8080

user = swift

swift_dir = /etc/swift

[pipeline:main]

pipeline = catch_errors gatekeeper healthcheck proxy-logging cache container_sync bulk ratelimit authtoken keystoneauth container-quotas account-quotas slo dlo versioned_writes proxy-logging proxy-server

[app:proxy-server]

use = egg:swift#proxy

...

account_autocreate = True

[filter:keystoneauth]

use = egg:swift#keystoneauth

...

operator_roles = admin,user

[filter:authtoken]

paste.filter_factory = keystonemiddleware.auth_token:filter_factory

...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_id = default

user_domain_id = default

project_name = service

username = swift

password = SWIFT_PASS

delay_auth_decision = True

Replace SWIFT_PASS with the password of the swift user in the Identity service.

[filter:cache]

use = egg:swift#memcache

...

memcache_servers = controller:11211

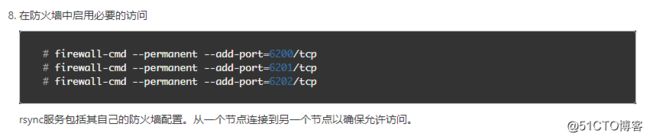

11. Deploy the Swift service on the object1 and object2 nodes:

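Perform the following steps on every Swift storage node. Note: attach two disks, sdb and sdc, to each of object1 and object2 beforehand, all with the same capacity.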

⑴ Prerequisites:

#yum install -y xfsprogs rsync

#mkfs.xfs /dev/sdb

#mkfs.xfs /dev/sdc

#mkdir -p /srv/node/sdb

#mkdir -p /srv/node/sdc

#blkid

#vim /etc/fstab

UUID=a97355b6-101a-4cff-9fb6-824b97e79bea /srv/node/sdb xfs noatime 0 2

UUID=150ea60e-7d66-4afb-a491-2f2db75d62cf /srv/node/sdc xfs noatime 0 2

#mount /srv/node/sdb

#mount /srv/node/sdc
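The UUIDs in the fstab lines above belong to my disks; yours will differ, which is why blkid is run first. One possible way to avoid copying UUIDs by hand is sketched below. `fstab_entry` is a hypothetical helper, and the mount options are simply the ones used above.

```shell
#!/usr/bin/env bash
# fstab_entry UUID DEVICE_NAME
# Print one /etc/fstab line for a Swift data disk mounted under /srv/node.
# Hypothetical helper for illustration.
fstab_entry() {
    local uuid="$1" dev="$2"
    [ -n "$uuid" ] || { echo "empty UUID for $dev" >&2; return 1; }
    printf 'UUID=%s /srv/node/%s xfs noatime 0 2\n' "$uuid" "$dev"
}

# Example (as root, after mkfs.xfs has been run on both disks):
#   for dev in sdb sdc; do
#       fstab_entry "$(blkid -s UUID -o value /dev/$dev)" "$dev" >> /etc/fstab
#   done
```

The helper refuses to print a line when blkid returns nothing, which would otherwise leave a broken entry in /etc/fstab.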

#vim /etc/rsyncd.conf

uid = swift

gid = swift

log file = /var/log/rsyncd.log

pid file = /var/run/rsyncd.pid

address = MANAGEMENT_INTERFACE_IP_ADDRESS

[account]

max connections = 2

path = /srv/node/

read only = False

lock file = /var/lock/account.lock

[container]

max connections = 2

path = /srv/node/

read only = False

lock file = /var/lock/container.lock

[object]

max connections = 2

path = /srv/node/

read only = False

lock file = /var/lock/object.lock

Replace MANAGEMENT_INTERFACE_IP_ADDRESS with 10.0.0.51 on object1 and 10.0.0.52 on object2.
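The same placeholder shows up again in the account, container, and object server files, so the substitution can be scripted. This is just a sketch: `render_mgmt_ip` is a hypothetical helper, and it assumes the file still contains the literal placeholder text.

```shell
#!/usr/bin/env bash
# render_mgmt_ip TEMPLATE IP
# Print TEMPLATE with every MANAGEMENT_INTERFACE_IP_ADDRESS replaced by IP.
# Hypothetical helper for illustration; it does not modify TEMPLATE.
render_mgmt_ip() {
    local template="$1" ip="$2"
    sed "s/MANAGEMENT_INTERFACE_IP_ADDRESS/${ip}/g" "$template"
}

# Example on object1 (use 10.0.0.52 on object2):
#   render_mgmt_ip /etc/rsyncd.conf 10.0.0.51 > /tmp/rsyncd.conf
#   mv /tmp/rsyncd.conf /etc/rsyncd.conf
```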

#systemctl enable rsyncd.service ; systemctl restart rsyncd.service

#yum install openstack-swift-account openstack-swift-container \

openstack-swift-object

#curl -o /etc/swift/account-server.conf https://opendev.org/openstack/swift/raw/branch/master/etc/account-server.conf-sample

#curl -o /etc/swift/container-server.conf https://opendev.org/openstack/swift/raw/branch/master/etc/container-server.conf-sample

#curl -o /etc/swift/object-server.conf https://opendev.org/openstack/swift/raw/branch/master/etc/object-server.conf-sample

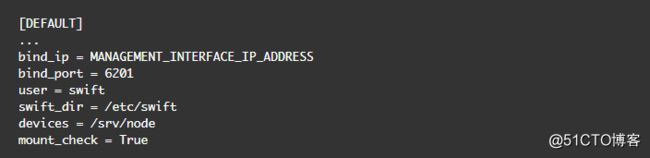

① Configure the account server:

#vim /etc/swift/account-server.conf

[DEFAULT]

...

bind_ip = MANAGEMENT_INTERFACE_IP_ADDRESS

bind_port = 6202

user = swift

swift_dir = /etc/swift

devices = /srv/node

mount_check = True

Replace MANAGEMENT_INTERFACE_IP_ADDRESS with 10.0.0.51 on object1 and 10.0.0.52 on object2.

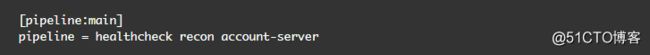

[pipeline:main]

pipeline = healthcheck recon account-server

[filter:recon]

use = egg:swift#recon

...

recon_cache_path = /var/cache/swift

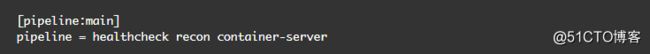

② Configure the container server:

#vim /etc/swift/container-server.conf

[DEFAULT]

...

bind_ip = MANAGEMENT_INTERFACE_IP_ADDRESS

bind_port = 6201

user = swift

swift_dir = /etc/swift

devices = /srv/node

mount_check = True

Replace MANAGEMENT_INTERFACE_IP_ADDRESS with 10.0.0.51 on object1 and 10.0.0.52 on object2.

[pipeline:main]

pipeline = healthcheck recon container-server

[filter:recon]

use = egg:swift#recon

...

recon_cache_path = /var/cache/swift

③ Configure the object server:

#vim /etc/swift/object-server.conf

[DEFAULT]

...

bind_ip = MANAGEMENT_INTERFACE_IP_ADDRESS

bind_port = 6200

user = swift

swift_dir = /etc/swift

devices = /srv/node

mount_check = True

Replace MANAGEMENT_INTERFACE_IP_ADDRESS with 10.0.0.51 on object1 and 10.0.0.52 on object2.

[pipeline:main]

pipeline = healthcheck recon object-server

[filter:recon]

use = egg:swift#recon

...

recon_cache_path = /var/cache/swift

recon_lock_path = /var/lock

#chown -R swift:swift /srv/node

#mkdir -p /var/cache/swift

#chown -R root:swift /var/cache/swift

#chmod -R 775 /var/cache/swift

12. Create and distribute the Swift rings on the controller node:

Run these steps on the controller node:

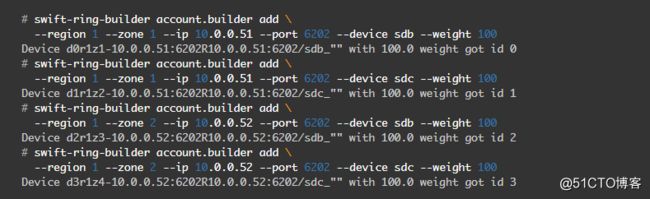

⑴ Create the account ring:

#cd /etc/swift

#swift-ring-builder account.builder create 10 3 1

# swift-ring-builder account.builder add \

--region 1 --zone 1 --ip 10.0.0.51 --port 6202 --device sdb --weight 100

# swift-ring-builder account.builder add \

--region 1 --zone 1 --ip 10.0.0.51 --port 6202 --device sdc --weight 100

# swift-ring-builder account.builder add \

--region 1 --zone 2 --ip 10.0.0.52 --port 6202 --device sdb --weight 100

# swift-ring-builder account.builder add \

--region 1 --zone 2 --ip 10.0.0.52 --port 6202 --device sdc --weight 100

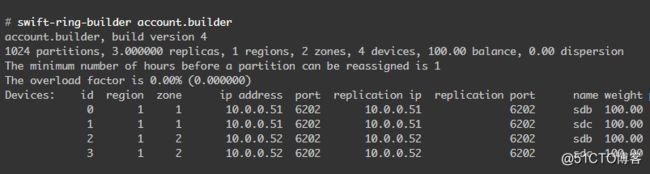

#swift-ring-builder account.builder

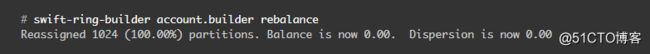

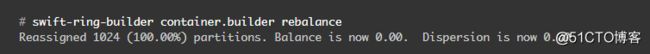

#swift-ring-builder account.builder rebalance
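The three numbers passed to `create` are the partition power, the replica count, and the minimum number of hours before a partition may be moved again. With `10 3 1` and the four equal-weight devices added above, the arithmetic can be sketched as follows; the function names are made up for this illustration, and an even split is only an approximation of what rebalance actually assigns.

```shell
#!/usr/bin/env bash
# ring_partitions PART_POWER: number of partitions in the ring (2^PART_POWER).
ring_partitions() {
    echo $(( 1 << $1 ))
}

# replicas_per_device PART_POWER REPLICAS DEVICES
# Partition replicas each device holds if they are spread evenly.
replicas_per_device() {
    local part_power="$1" replicas="$2" devices="$3"
    echo $(( (1 << part_power) * replicas / devices ))
}

ring_partitions 10          # 1024 partitions
replicas_per_device 10 3 4  # 3072 partition replicas over 4 devices = 768 each
```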

⑵ Create the container ring:

#cd /etc/swift

#swift-ring-builder container.builder create 10 3 1

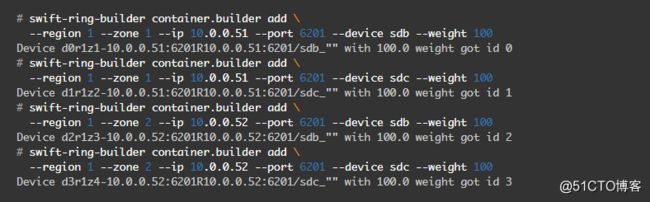

# swift-ring-builder container.builder add \

--region 1 --zone 1 --ip 10.0.0.51 --port 6201 --device sdb --weight 100

# swift-ring-builder container.builder add \

--region 1 --zone 1 --ip 10.0.0.51 --port 6201 --device sdc --weight 100

# swift-ring-builder container.builder add \

--region 1 --zone 2 --ip 10.0.0.52 --port 6201 --device sdb --weight 100

# swift-ring-builder container.builder add \

--region 1 --zone 2 --ip 10.0.0.52 --port 6201 --device sdc --weight 100

#swift-ring-builder container.builder

#swift-ring-builder container.builder rebalance

⑶ Create the object ring:

#cd /etc/swift

#swift-ring-builder object.builder create 10 3 1

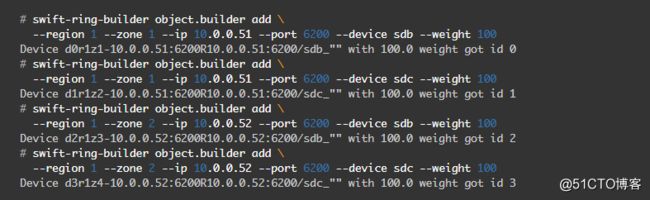

# swift-ring-builder object.builder add \

--region 1 --zone 1 --ip 10.0.0.51 --port 6200 --device sdb --weight 100

# swift-ring-builder object.builder add \

--region 1 --zone 1 --ip 10.0.0.51 --port 6200 --device sdc --weight 100

# swift-ring-builder object.builder add \

--region 1 --zone 2 --ip 10.0.0.52 --port 6200 --device sdb --weight 100

# swift-ring-builder object.builder add \

--region 1 --zone 2 --ip 10.0.0.52 --port 6200 --device sdc --weight 100

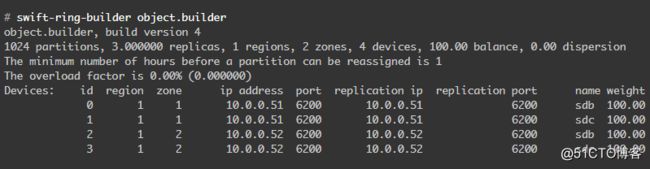

#swift-ring-builder object.builder

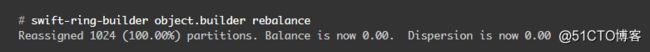

#swift-ring-builder object.builder rebalance
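The twelve `add` commands in the three subsections above follow one pattern: the ring name selects the port (account 6202, container 6201, object 6200), and each storage node gets its own zone. A dry-run sketch that prints them for review is shown below; `ring_add_cmds` is a hypothetical helper, and the IPs, devices, and weight are the ones from this deployment.

```shell
#!/usr/bin/env bash
# Print (do not run) every "swift-ring-builder ... add" command used above.
# ring_add_cmds is a hypothetical helper for illustration.
ring_add_cmds() {
    local ring port node zone dev
    for ring in account:6202 container:6201 object:6200; do
        port="${ring#*:}"      # part after the colon
        ring="${ring%%:*}"     # part before the colon
        zone=0
        for node in 10.0.0.51 10.0.0.52; do
            zone=$(( zone + 1 ))   # one zone per storage node
            for dev in sdb sdc; do
                echo "swift-ring-builder ${ring}.builder add --region 1 --zone ${zone} --ip ${node} --port ${port} --device ${dev} --weight 100"
            done
        done
    done
}

# Review the output, then from /etc/swift on the controller:
#   ring_add_cmds | sh
```

Each builder file must already exist (the `create` step) before the printed commands are executed, and each ring still needs its own `rebalance` afterwards.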

⑷ Distribute the ring files:

#cd /etc/swift

#scp account.ring.gz container.ring.gz object.ring.gz 10.0.0.51:/etc/swift

#scp account.ring.gz container.ring.gz object.ring.gz 10.0.0.52:/etc/swift

13. Finalize the Swift configuration on the controller node:

#curl -o /etc/swift/swift.conf \

https://opendev.org/openstack/swift/raw/branch/master/etc/swift.conf-sample

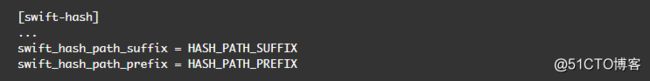

#vim /etc/swift/swift.conf

[swift-hash]

...

swift_hash_path_suffix = HASH_PATH_SUFFIX

swift_hash_path_prefix = HASH_PATH_PREFIX

Replace HASH_PATH_SUFFIX and HASH_PATH_PREFIX with unique values (think of them as passwords); I kept the defaults here.
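For anything beyond a lab, random values are the safer choice. One possible way to generate them is sketched below; `random_hex` and `swift_hash_lines` are hypothetical helpers, and the 16-byte length is an arbitrary assumption. Whatever values are chosen, keep them secret and do not change them once data has been stored, because they feed into how Swift hashes object paths.

```shell
#!/usr/bin/env bash
# random_hex: print 16 random bytes from /dev/urandom as 32 hex characters.
random_hex() {
    od -An -N16 -tx1 /dev/urandom | tr -d ' \n'
}

# swift_hash_lines: print the two [swift-hash] settings with fresh values.
# Hypothetical helper for illustration.
swift_hash_lines() {
    printf 'swift_hash_path_suffix = %s\n' "$(random_hex)"
    printf 'swift_hash_path_prefix = %s\n' "$(random_hex)"
}

# Example: print the lines, then paste them into /etc/swift/swift.conf
#   swift_hash_lines
```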

[storage-policy:0]

...

name = Policy-0

default = yes

Distribute swift.conf to every object storage node:

#scp swift.conf 10.0.0.51:/etc/swift

#scp swift.conf 10.0.0.52:/etc/swift

On the controller node and on object1 and object2, run:

#chown -R root:swift /etc/swift

On the controller node, run:

#systemctl enable openstack-swift-proxy.service memcached.service

#systemctl restart openstack-swift-proxy.service memcached.service

Start the services on object1 and object2:

# systemctl enable openstack-swift-account.service openstack-swift-account-auditor.service \

openstack-swift-account-reaper.service openstack-swift-account-replicator.service

# systemctl start openstack-swift-account.service openstack-swift-account-auditor.service \

openstack-swift-account-reaper.service openstack-swift-account-replicator.service

# systemctl enable openstack-swift-container.service \

openstack-swift-container-auditor.service openstack-swift-container-replicator.service \

openstack-swift-container-updater.service

# systemctl start openstack-swift-container.service \

openstack-swift-container-auditor.service openstack-swift-container-replicator.service \

openstack-swift-container-updater.service

# systemctl enable openstack-swift-object.service openstack-swift-object-auditor.service \

openstack-swift-object-replicator.service openstack-swift-object-updater.service

# systemctl start openstack-swift-object.service openstack-swift-object-auditor.service \

openstack-swift-object-replicator.service openstack-swift-object-updater.service
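The six systemctl commands above cover twelve units: one main server plus three background workers for each of account, container, and object. A sketch that builds the same unit list in a loop follows; `swift_storage_units` is a hypothetical helper, and the unit names are the ones used above.

```shell
#!/usr/bin/env bash
# Print the systemd unit names of all Swift storage services, one per line.
# swift_storage_units is a hypothetical helper for illustration.
swift_storage_units() {
    local svc worker workers
    for svc in account container object; do
        echo "openstack-swift-${svc}.service"
        case "$svc" in
            account) workers="auditor reaper replicator" ;;
            *)       workers="auditor replicator updater" ;;
        esac
        for worker in $workers; do
            echo "openstack-swift-${svc}-${worker}.service"
        done
    done
}

# Example on object1 and object2:
#   systemctl enable $(swift_storage_units)
#   systemctl start  $(swift_storage_units)
```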

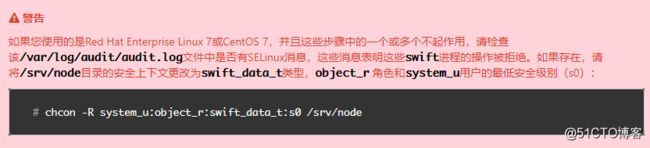

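Verify the installation from the controller (note: /srv/node exists only on the object nodes, so the chcon command below belongs there, and it only matters when SELinux is enabled):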

#chcon -R system_u:object_r:swift_data_t:s0 /srv/node

#. admin-openrc

Note: this is another pitfall. The official guide tells you to run . demo-openrc, which kept failing for me; run . admin-openrc here instead.

#swift stat

Create the container1 container:

#openstack container create container1

Upload a file (FILE must be a regular file, not a directory):

#echo test > FILE

#openstack object create container1 FILE

#openstack object list container1

Download:

#openstack object save container1 FILE
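To confirm that the round trip really preserved the data, the downloaded copy can be compared byte for byte with the original. This is a small sketch under the assumption that the download is saved under a different name (the `--file` option of `openstack object save`); `roundtrip_ok` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# roundtrip_ok ORIGINAL DOWNLOADED
# Succeed only if both files exist and are byte-for-byte identical.
# Hypothetical helper for illustration.
roundtrip_ok() {
    [ -f "$1" ] && [ -f "$2" ] && cmp -s "$1" "$2"
}

# Example on the controller:
#   openstack object save --file FILE.downloaded container1 FILE
#   roundtrip_ok FILE FILE.downloaded && echo "object storage round trip OK"
```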

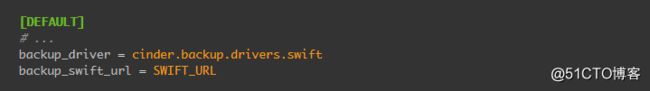

14. Install and configure the backup service on the block1 node:

#yum install -y openstack-cinder

#vim /etc/cinder/cinder.conf

[DEFAULT]

# ...

backup_driver = cinder.backup.drivers.swift

backup_swift_url = http://controller:8080/v1

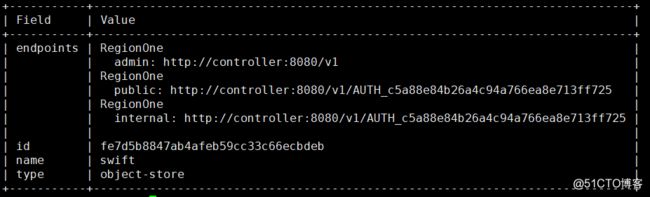

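The backup_swift_url value above takes the place of the SWIFT_URL placeholder in the official guide; look up the Object Storage URL on the controller node: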

#openstack catalog show object-store

#systemctl enable openstack-cinder-backup.service

#systemctl restart openstack-cinder-backup.service

Verification:

On the controller node:

#. admin-openrc

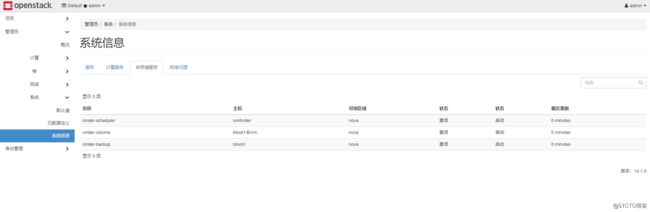

#openstack volume service list

With that, every OpenStack node is deployed. The next chapter creates the networks and launches an instance.

IV. Launch an instance:

1. Create the networks:

⑴ Create the provider (physical) network:

#. admin-openrc

#openstack network create --share --external \

--provider-physical-network provider \

--provider-network-type flat provider

Create the subnet:

#openstack subnet create --network provider \

--allocation-pool start=10.1.0.200,end=10.1.0.250 \

--dns-nameserver 114.114.114.114 --gateway 10.1.0.254 \

--subnet-range 10.1.0.0/24 provider

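The screenshot below is from the official guide. Size the subnet to match your own address range; it must be in the same network segment as the hosts.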

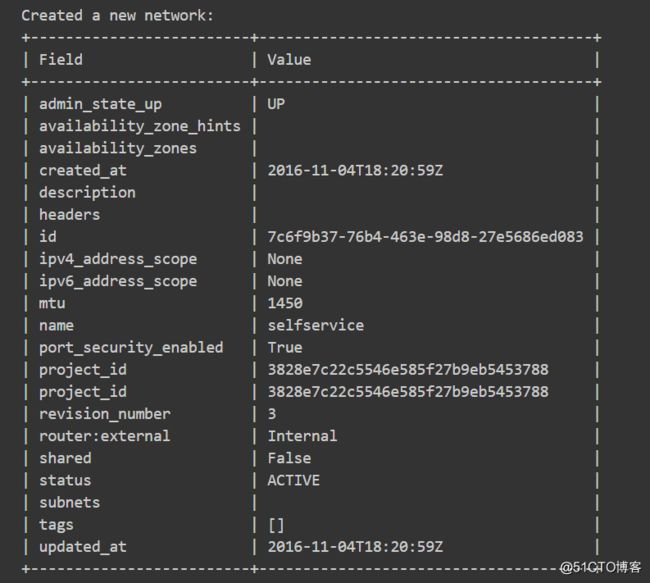

⑵ Create the internal (self-service) network:

#. demo-openrc //create it as the myuser user

#openstack network create selfservice

Create the subnet:

#openstack subnet create --network selfservice \

--dns-nameserver 114.114.114.114 --gateway 172.16.1.1 \

--subnet-range 172.16.1.0/24 selfservice

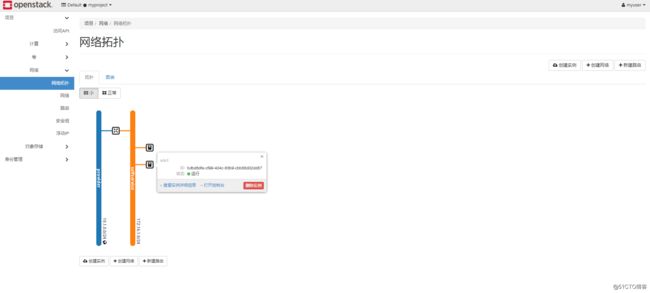

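The self-service network uses 172.16.1.0/24 with its gateway at 172.16.1.1. The DHCP server assigns each instance an IP address from 172.16.1.2 to 172.16.1.254. All instances use 114.114.114.114 as their DNS resolver.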

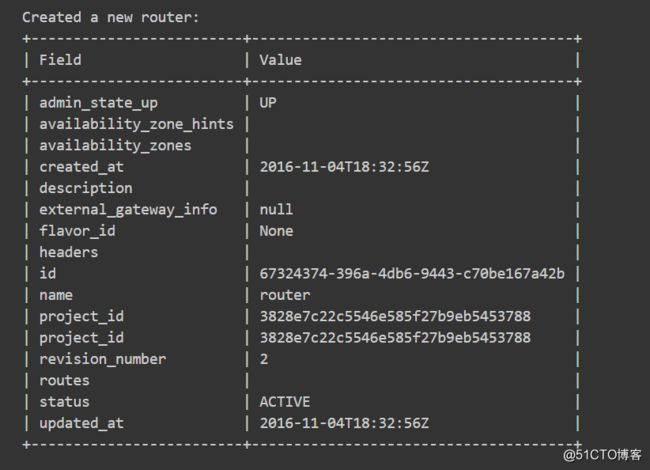

Create the router:

#openstack router create router

Add the self-service subnet as an interface on the router:

#openstack router add subnet router selfservice

Set a gateway on the provider network for the router:

#openstack router set router --external-gateway provider

Verification:

#. admin-openrc

#ip netns

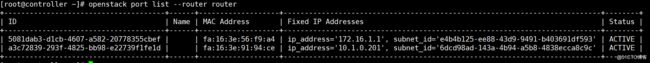

#openstack port list --router router

The listing shows that the router's port on the provider network has the address 10.1.0.201.

#ping -c 4 10.1.0.201

2. Create an instance:

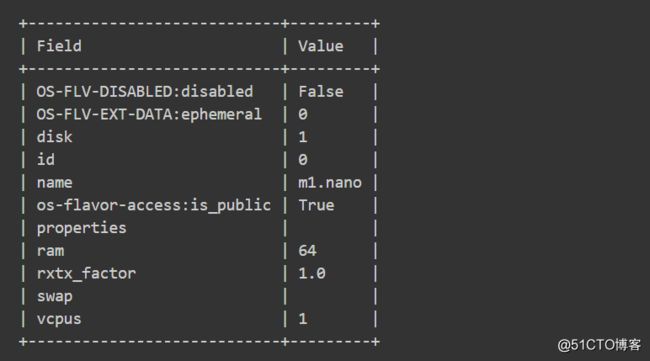

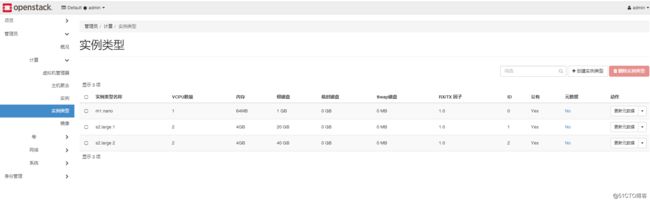

⑴ Create a flavor (instance type):

#openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

The Chinese translation of the docs renders "flavor" literally as a taste. Seriously? What is this, BBQ?

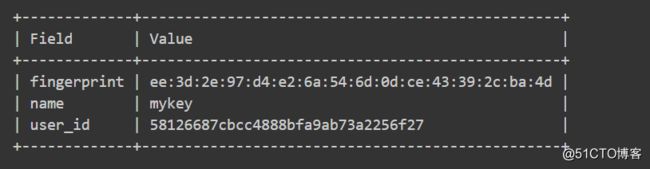

⑵ Create a key pair:

#. demo-openrc

#ssh-keygen -q -N "" //just press Enter at every prompt

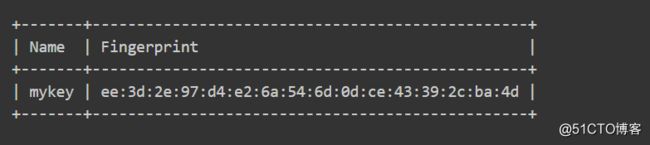

#openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

#openstack keypair list

⑶ Add security group rules:

#openstack security group rule create --proto icmp default

Allow SSH and RDP access:

#openstack security group rule create --proto tcp --dst-port 22 default

#openstack security group rule create --proto tcp --dst-port 3389 default

⑷ Launch the instance:

#. demo-openrc //use the myuser account

#openstack flavor list

"Flavor" again; now I'm craving lamb skewers.

#openstack image list //list the available images

#openstack network list //list the available networks

#openstack security group list //list the available security groups

Everything is in place; the instance can now be launched:

#openstack server create --flavor m1.nano --image cirros \

--nic net-id=selfservice --security-group default \

--key-name mykey vm1

Again the screenshot is the official one; create the instance to suit your own environment.

#openstack server list

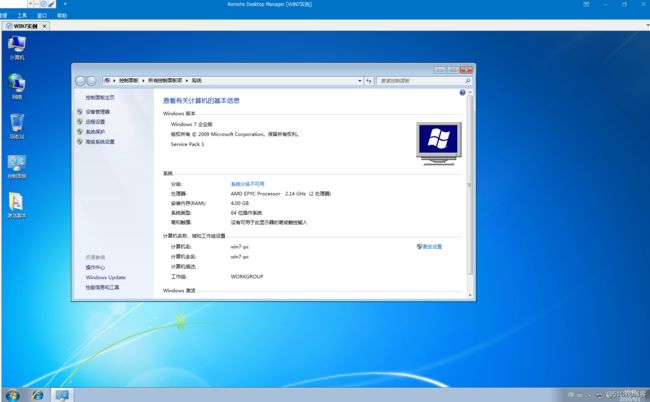

Some screenshots:

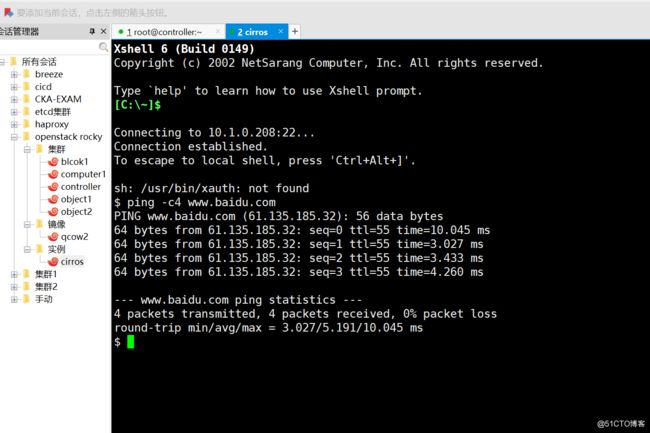

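A floating IP, 10.1.0.208, has been assigned.

The cirros instance logs in normally and can ping both the internal and external networks.

The instance can be reached over SSH at 10.1.0.208 and can access the external network.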

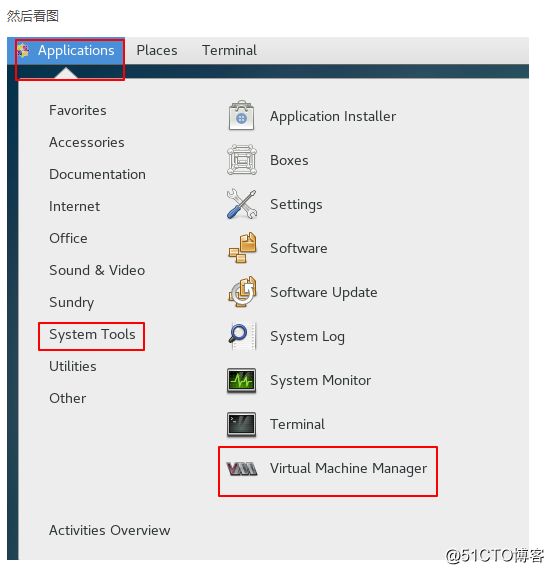

V. Build a Windows 7 QCOW2 image in a KVM virtual machine on CentOS:

Note: I had already built the image earlier, so rather than repeat the process I borrowed the screenshots here as well.

Original source: https://www.cnblogs.com/tcicy/p/7790956.html

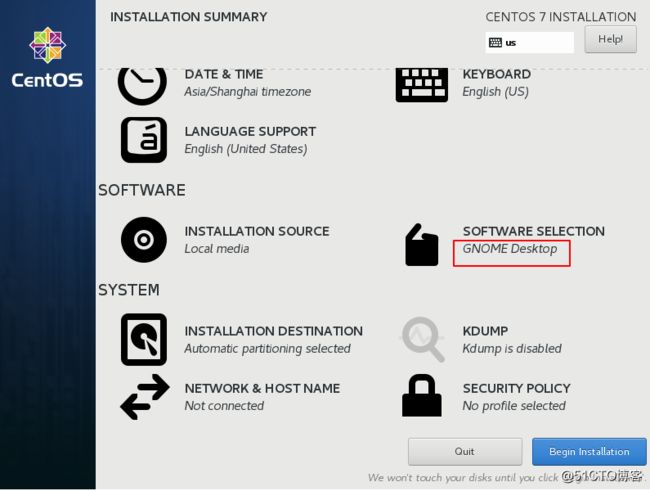

1. Install CentOS 7.5 or later in a virtual machine and configure the Aliyun YUM mirror:

⑴ Install the GNOME desktop:

⑵ After booting, install KVM and its dependencies:

#yum install -y qemu-kvm qemu-img virt-manager libvirt libvirt-python python-virtinst libvirt-client virt-install virt-viewer bridge-utils

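qemu-kvm: the QEMU emulator

qemu-img: the QEMU disk image manager

virt-install: a command-line tool for creating virtual machines

libvirt: provides the libvirtd daemon, which manages virtual machines and controls the hypervisor

libvirt-client: provides the client API for talking to the server, plus virsh, the command-line tool for managing virtual machines

virt-viewer: the graphical console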

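Note: on my CentOS 7.5 (1804) system python-virtinst could not be installed because the package was not found. In my testing this did not affect building the image, so it can be ignored.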

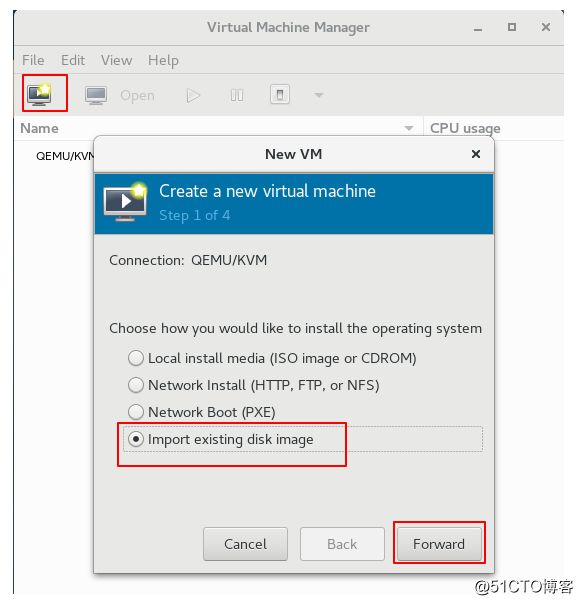

⑶ Create the QCOW2 file and download the drivers:

① Create the qcow2 file:

#mkdir /win7

#qemu-img create -f qcow2 -o size=40G /win7/windows7_64_40G

#chmod 777 /win7/*

Copy the win7.iso image you prepared into the /win7 directory.

② Download the driver files from:

Link: https://pan.baidu.com/s/12eF05geEgcmTeGmW-fETYw Password: 1ohe

Copy RHEV-toolsSetup_3.5_9.iso and virtio-win-1.1.16(disk driver).vfd into the /win7 directory.

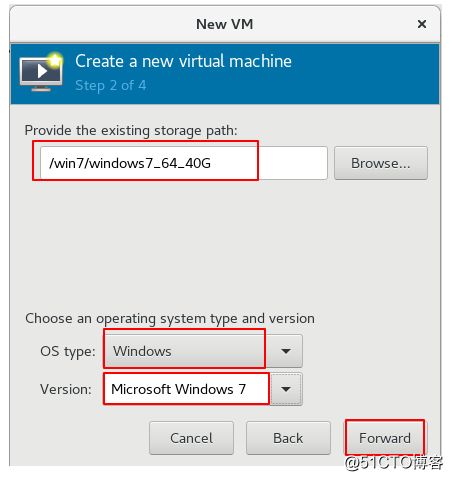

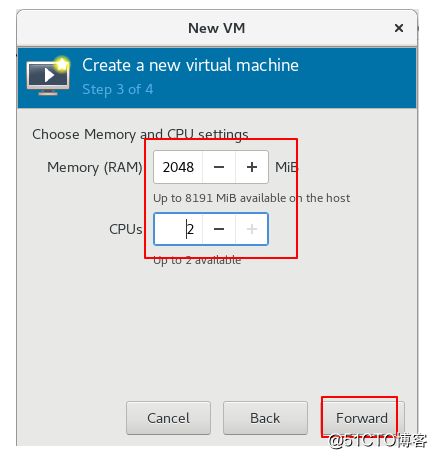

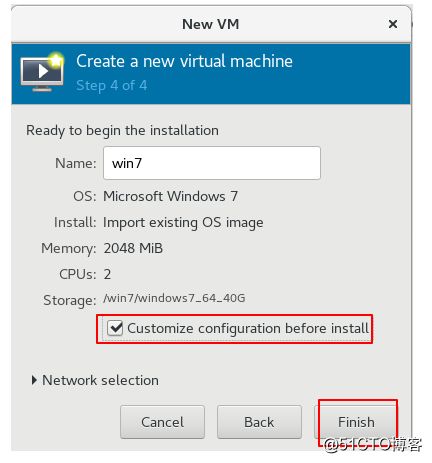

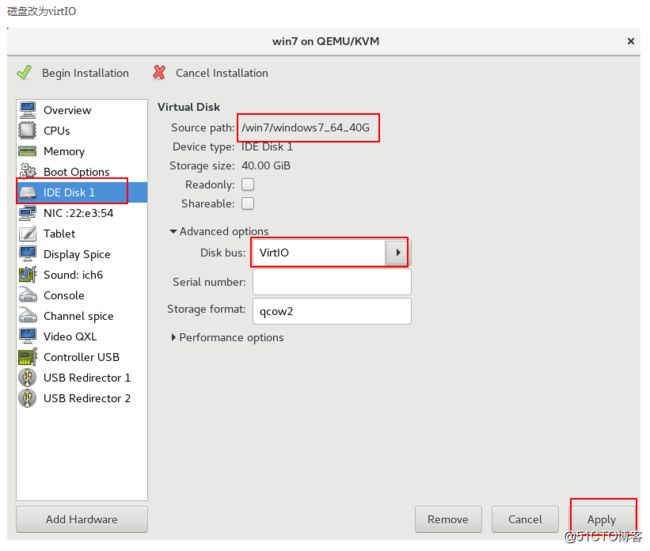

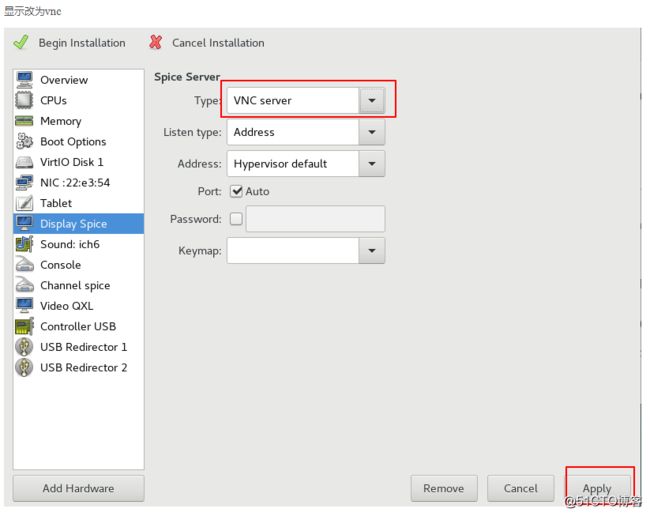

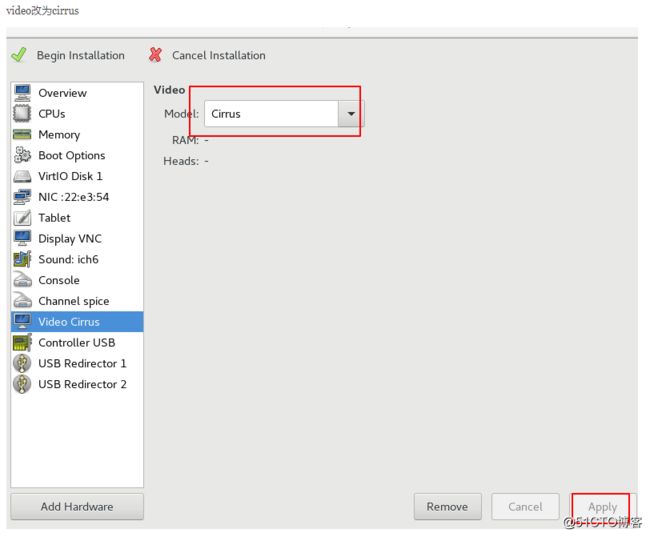

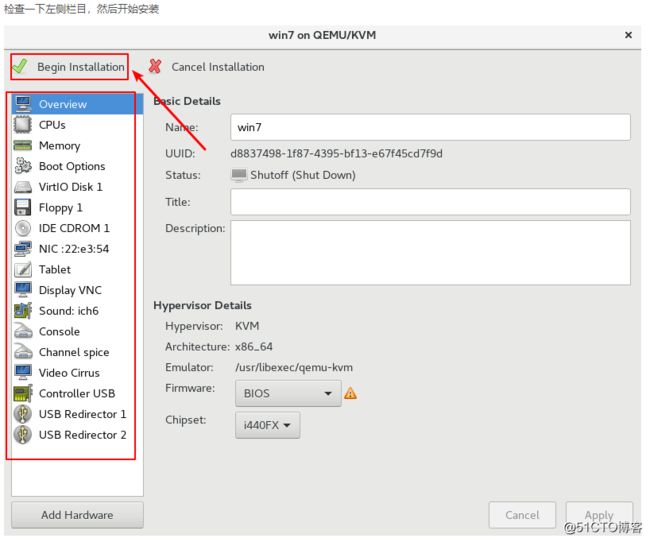

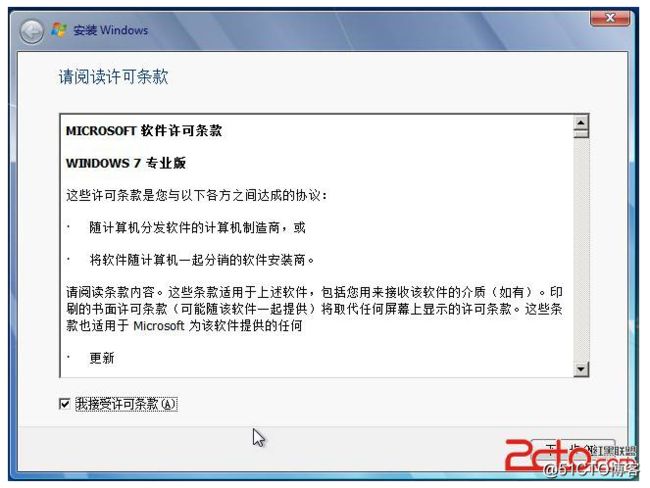

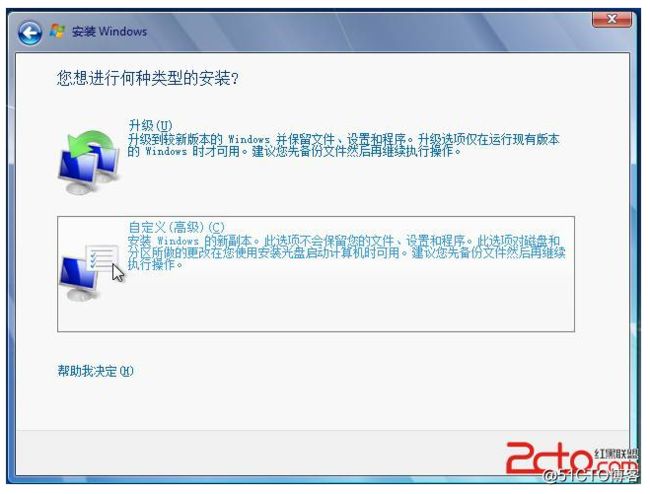

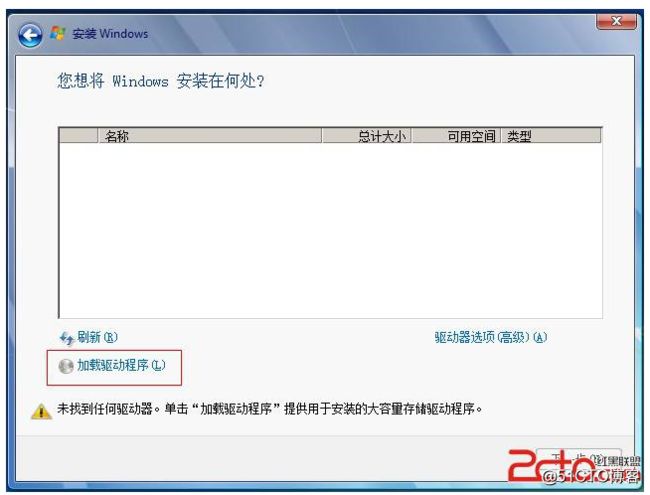

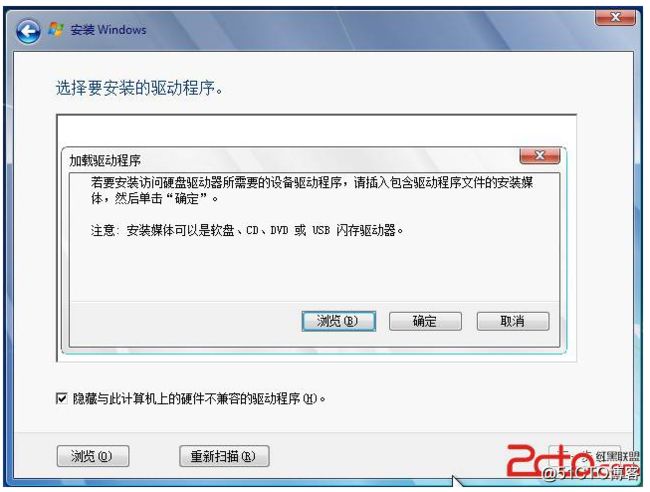

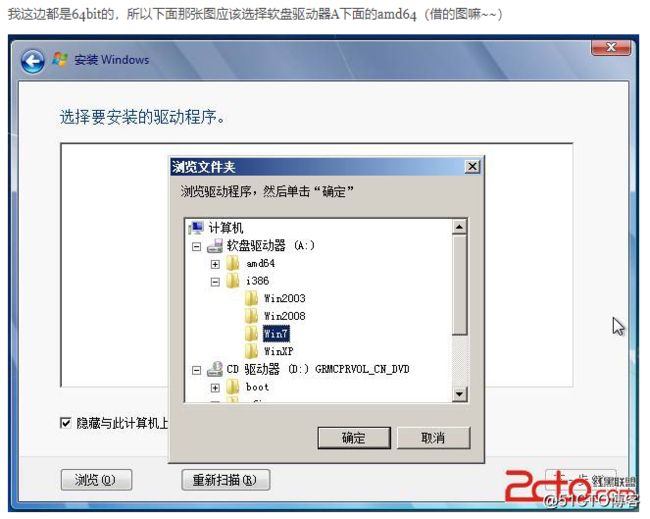

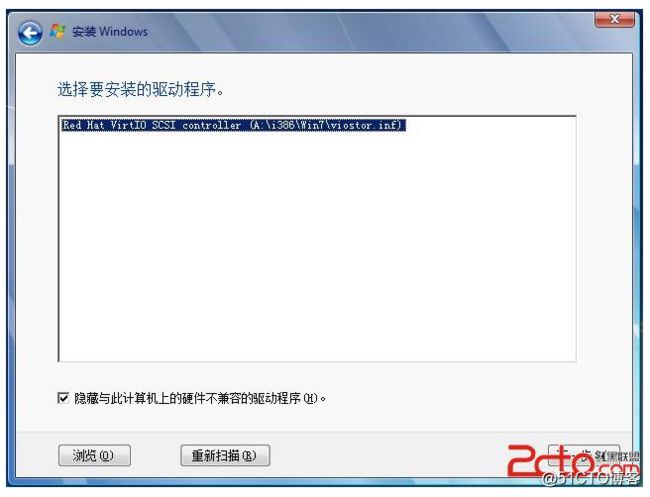

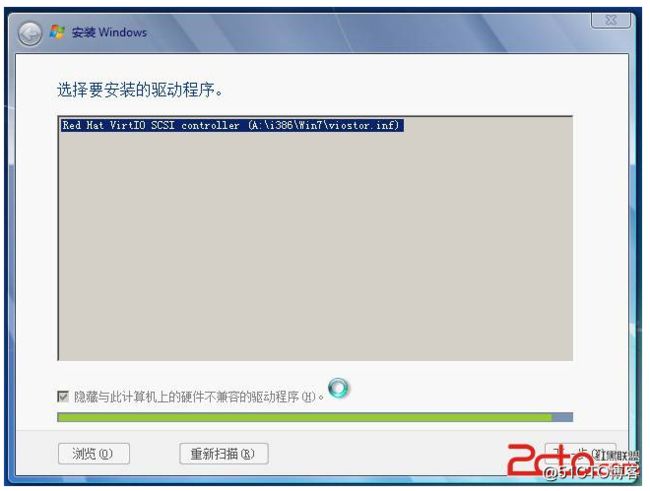

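⑷ Start the KVM virtual machine and build the image. From here on I rely on borrowed screenshots without much commentary; the pictures speak for themselves.

Enter the path of the qcow2 file created above.

Enter the path of virtio-win-1.1.16(disk driver).vfd.

Enter the path of the win7.iso image.

From this point on, the screenshots are borrowed from a source that had itself borrowed them.

Note that this is a 64-bit system, so choose the Win7 driver under amd64.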

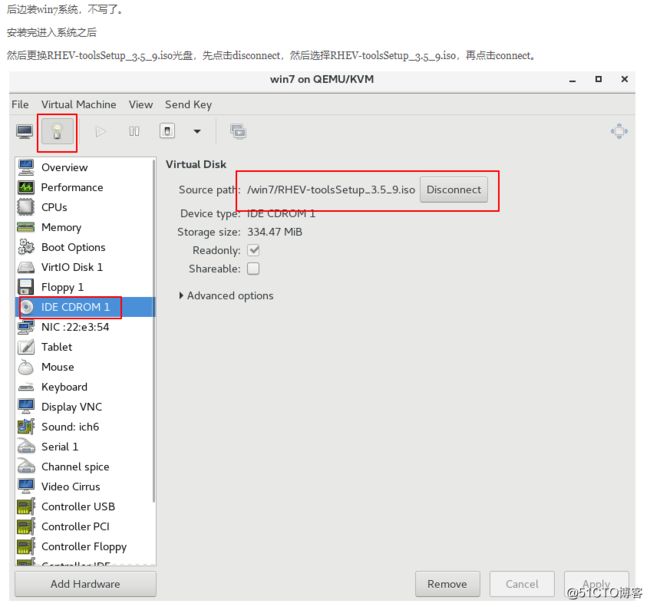

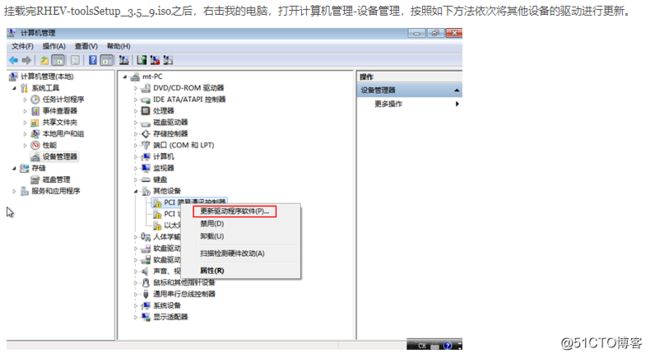

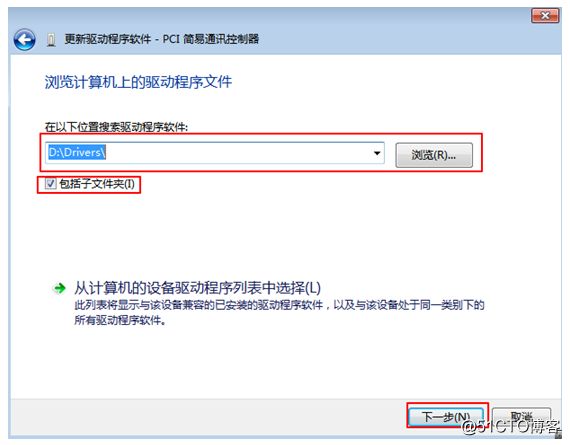

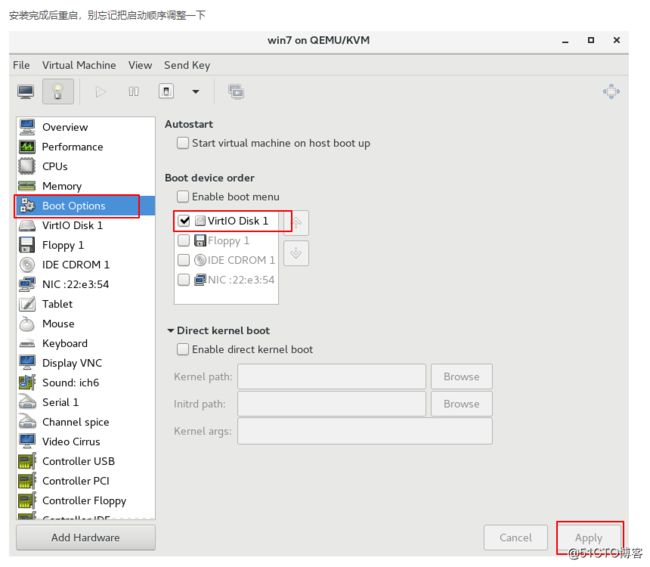

Once installation finishes and you are logged in, load the RHEV-toolsSetup_3.5_9.iso image in the IDE CDROM.

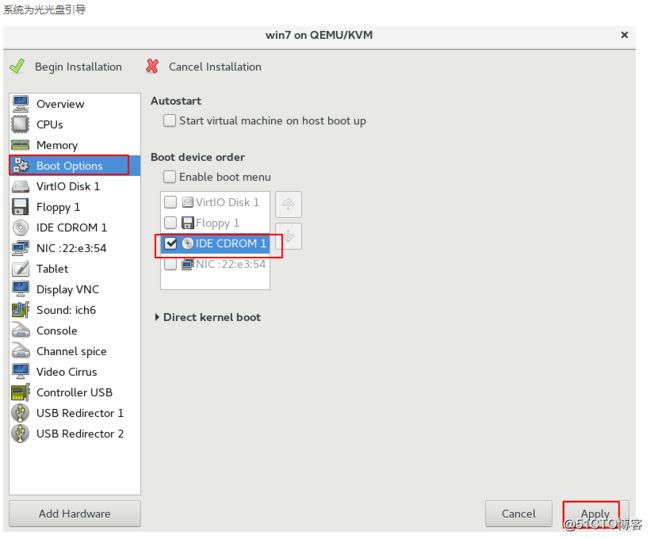

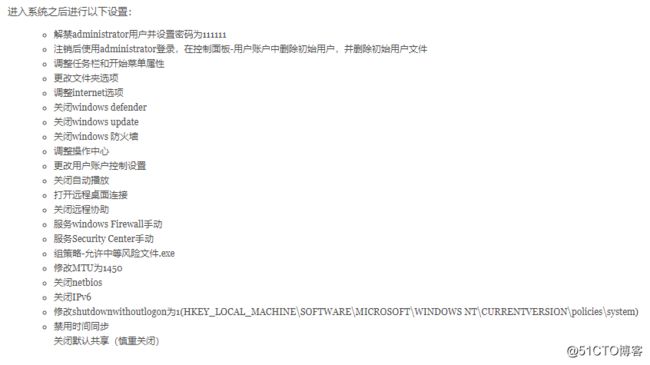

Before rebooting, change the boot device to VirtIO Disk:

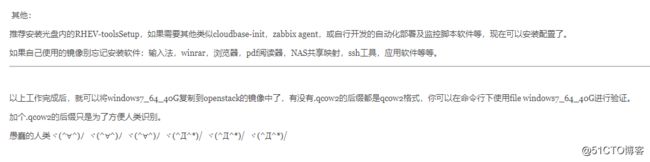

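After the reboot, the /win7/windows7_64_40G file is ready to be copied. The file name has no .qcow2 suffix, but the file is in qcow2 format with or without one; you can confirm this with "#file /win7/windows7_64_40G".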
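The format is identified by the file header, not by the name: a QCOW image starts with the bytes 'Q' 'F' 'I' 0xfb followed by a big-endian version number, which is 2 or 3 for qcow2. A minimal sketch of the same check in shell is shown below; `is_qcow2` is a hypothetical helper, and `qemu-img info` remains the more thorough tool.

```shell
#!/usr/bin/env bash
# is_qcow2 FILE
# Succeed if FILE starts with the QCOW magic bytes and a version of 2 or 3.
# Hypothetical helper for illustration.
is_qcow2() {
    local header
    # First 8 bytes as lowercase hex: 4 magic bytes + 4 version bytes.
    header=$(head -c 8 "$1" | od -An -tx1 | tr -d ' \n')
    case "$header" in
        514649fb00000002|514649fb00000003) return 0 ;;
        *) return 1 ;;
    esac
}

# Example:
#   is_qcow2 /win7/windows7_64_40G && echo "qcow2 image"
```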

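Copy the file out and upload it to the OpenStack platform; the image is now complete. Windows Server and other versions can be built the same way, as long as the matching drivers are downloaded beforehand.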

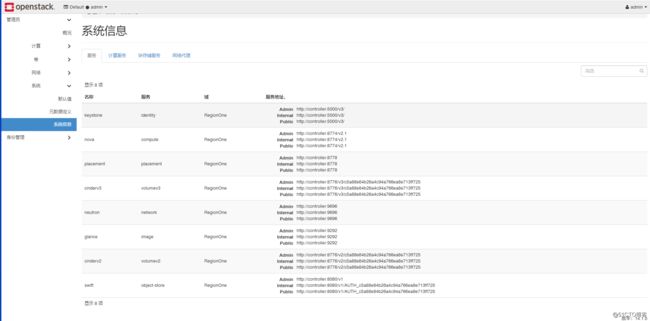

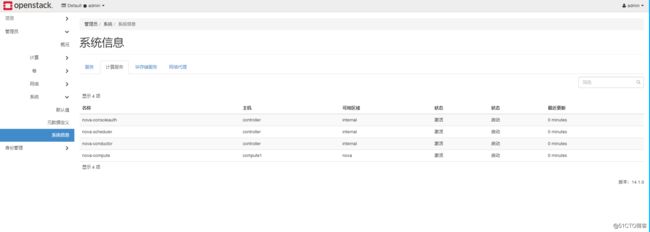

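VI. Screenshots of the running environment:

Summary: it took me a long time to get this deployment working. I ran into many pitfalls and problems along the way and nearly gave up, but with the help of books and online guides the deployment finally succeeded.

My advice is to search, think, and read more whenever you hit a problem, and a solution will turn up. Deploying OpenStack is a long process that calls for patience and care; keep summarizing and reflecting, and you will get there.

Here are links for bugs I hit frequently during deployment and use: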

Error when installing the nova compute node in OpenStack

http://www.mamicode.com/info-detail-2422788.html

"No such file or directory" when adding bridge-nf-call-ip6tables on CentOS 7

https://www.cnblogs.com/zejin2008/p/7102485.html

OpenStack connection error: net_mlx5: cannot load glue library: libibverbs.so.1

https://www.cnblogs.com/omgasw/p/11987504.html

Fixing the OpenStack dashboard error "SyntaxError: invalid syntax"

https://blog.csdn.net/obestboy/article/details/81195447

OpenStack console reports "server not found"

https://blog.51cto.com/xiaofeiji/1943553

Instance cannot find its disk at boot: Booting from Hard Disk... GRUB.

https://blog.csdn.net/song7999/article/details/80119010

Fixing the limit of 10 instances in OpenStack

https://blog.csdn.net/onesafe/article/details/50236863?utm_medium=distribute.pc_relevant_right.none-task-blog-BlogCommendFromBaidu-9.channel_param_right&depth_1-utm_source=distribute.pc_relevant_right.none-task-blog-BlogCommendFromBaidu-9.channel_param_right

Device /dev/sdb excluded by a filter

https://blog.csdn.net/lhl3620/article/details/104792408/

Fixing failed deletion of Cinder volumes in OpenStack

https://blog.csdn.net/u011521019/article/details/55854690?utm_source=blogxgwz7

did not finish being created even after we waited 189 seconds or 61 attempts. And its status is downloading

https://www.cnblogs.com/mrwuzs/p/10282436.html