Python Data Crawler Project

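Author: YRH

Date: 2020/9/26

I'm new to this, so if anything here is poorly written, corrections are welcome and please bear with me.

A few days ago someone in a study group posted a project. I was keen to take it on, but I was too slow and didn't get it; all I ended up with was the task list, which I used for practice. The project seemed like a good one, so I'm posting it on my blog for everyone to learn from.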

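I. Task List

Project name: crawling the National Natural Science Foundation of China Big Data Knowledge Management Service Portal

Content to crawl: funded projects (561,914 items)

Link: HTTP://KD.NSFC.GOV.CN/BASEQUERY/SUPPORTQUERY

Phase 1:

1. Filter by application code, funding category, and approval year.

2. Fields to crawl:

Project name, approval number, project category, principal investigator, approval year, funding amount, host institution, start and end dates, application code, keywords, research outputs, concluded project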

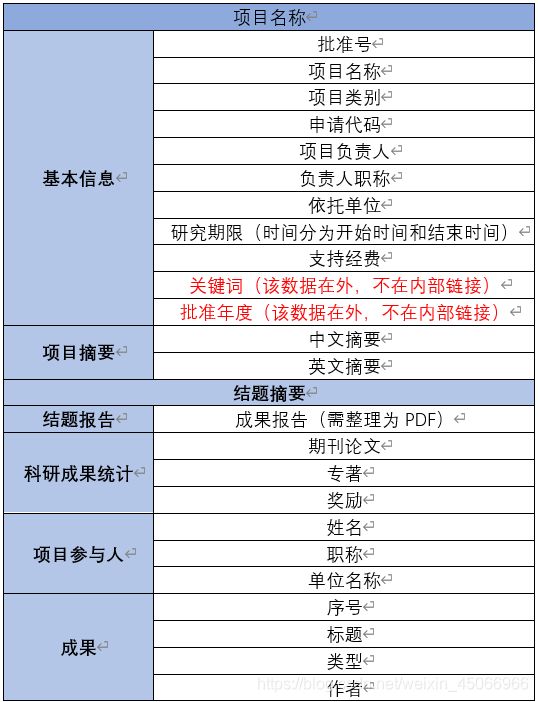

Phase 2: crawl the complete project information

1. Crawl from the link by approval number

2. Fields to crawl

II. Page Structure Analysis

1. Choosing an approach

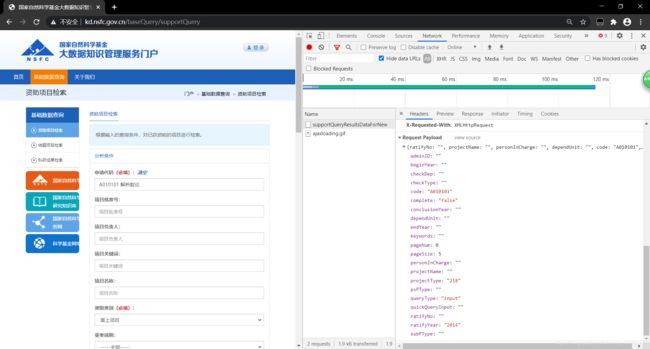

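When I first saw the page, my immediate thought was to automate the crawl with selenium. Inspecting the network requests, however, showed that the site fetches its data through POST requests.

So POSTing the form to http://kd.nsfc.gov.cn/baseQuery/data/supportQueryResultsDataForNew is all it takes to get the data.

In the end I went with requests.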
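The response body comes back as JSON text. Below is a small sketch of decoding it, assuming the layout that the script later in this post relies on (a `data` object holding a `resultsData` list of rows). The function name `extract_rows` and the sample body are invented for illustration; real rows carry more columns.

```python
import json


def extract_rows(text):
    """Decode the response body and return the list of result rows."""
    payload = json.loads(text)
    # Fall back to an empty list when "data" or "resultsData" is missing or null.
    return (payload.get("data") or {}).get("resultsData") or []


# Hypothetical response body, shaped like the one the script reads.
sample = '{"data": {"resultsData": [["id-1", "Sample project", "00000000"]]}}'
rows = extract_rows(sample)
print(rows[0][1])
```

Using `json.loads` here avoids running the server's text through `eval`, which would choke on JSON literals such as `null`.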

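2. Request body structure

As the page shows, a query needs three parameters: application code, funding category, and approval year. Their keys are code, projectType, and ratifyYear, so these three must be sent to retrieve any data. Even so, my requests kept failing. I tried adding a few more parameters and eventually found that queryType: "input" is also required; without it the server returns an error.

Before sending the parameters, check what format they need to be in. The Request Headers include Content-Type: application/json, which shows that the body is JSON, so the parameters must be serialized to JSON first.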
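To make the request format concrete, here is a minimal sketch of building the JSON body and headers described above. The helper names `build_payload` and `fetch`, the `timeout`, and the default paging values are my own illustrative choices; the `complete`, `pageNum` and `pageSize` fields are simply copied from the script later in this post, and I have not verified what the server does with them. `fetch` needs the third-party requests package and is defined but not called here.

```python
import json

# Endpoint quoted in the post.
URL = "http://kd.nsfc.gov.cn/baseQuery/data/supportQueryResultsDataForNew"


def build_payload(code, project_type, year, page_num=0, page_size=5):
    """Assemble the request body; queryType must be present."""
    return {
        "code": code,
        "projectType": project_type,
        "ratifyYear": str(year),
        "queryType": "input",
        "complete": "false",
        "pageNum": page_num,
        "pageSize": page_size,
    }


def fetch(payload, timeout=10):
    """Send the payload as a JSON body and return the decoded response."""
    import requests  # imported lazily so build_payload works without it

    headers = {"Content-Type": "application/json"}
    resp = requests.post(URL, data=json.dumps(payload), headers=headers, timeout=timeout)
    resp.raise_for_status()
    return resp.json()


# Serialize one query (application code A01, category 218, year 2019).
body = json.dumps(build_payload("A01", "218", 2019))
print(body)
```

Keeping the payload construction separate from the network call makes it easy to inspect exactly what will be sent before hitting the site.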

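III. Crawler Code

The site holds a lot of data, so I save it to a MySQL database. If you want to run my code, edit the database connection settings first.

Because of the volume, I only crawled the 2019 data and did not crawl the full set.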
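Since the records end up in a database, it may help to sketch how a row can be inserted with query parameters instead of pasting values into the SQL string, which breaks as soon as a project name contains a quote. To keep the example runnable without a MySQL server, it swaps in Python's built-in sqlite3 with an in-memory database; the table, its columns, and the sample record are made up for illustration. With PyMySQL the idea is the same, but the placeholder is `%s` rather than `?`.

```python
import sqlite3

# In-memory SQLite stands in for MySQL so the sketch runs anywhere.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE project (name TEXT, approval_no TEXT, year TEXT)")


def save_row(conn, name, approval_no, year):
    """Insert one record; placeholders let the driver handle quoting."""
    conn.execute(
        "INSERT INTO project (name, approval_no, year) VALUES (?, ?, ?)",
        (name, approval_no, year),
    )
    conn.commit()


# A made-up record whose name contains a single quote.
save_row(conn, "O'Brien's sample project", "00000000", "2019")
rows = conn.execute("SELECT name, approval_no, year FROM project").fetchall()
print(rows)
```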

# -*- coding: utf-8 -*-
# Author : YRH
# Date :
# Project :
# Tool : PyCharm
import json
import random
import time

import pymysql
import requests
import xlwt

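# The statement below creates the table; run it first if the table does not exist yet.
# Columns: 序号 (row id), 项目名称 (project name), 批单号 (approval number),
# 项目类别 (project category), 批准负责人 (principal investigator), 批准年度 (approval year),
# 资助经费 (funding), 依托单位 (host institution), 起始年月 (start and end dates),
# 申请代码 (application code)
"""CREATE TABLE IF NOT EXISTS `prodectData`(
    `序号` INT UNSIGNED AUTO_INCREMENT,
    `项目名称` VARCHAR(50) NOT NULL,
    `批单号` VARCHAR(20) NOT NULL,
    `项目类别` VARCHAR(20) NOT NULL,
    `批准负责人` VARCHAR(20) NOT NULL,
    `批准年度` VARCHAR(20) NOT NULL,
    `资助经费` VARCHAR(20) NOT NULL,
    `依托单位` VARCHAR(20) NOT NULL,
    `起始年月` VARCHAR(20) NOT NULL,
    `申请代码` VARCHAR(20) NOT NULL,
    PRIMARY KEY ( `序号` )
)ENGINE=InnoDB DEFAULT CHARSET=utf8;"""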

# Pool of User-Agent strings; one is picked at random for each request
user_agent = ["Mozilla/5.0 (Windows NT 10.0; WOW64)", 'Mozilla/5.0 (Windows NT 6.3; WOW64)',
              'Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11',
              'Mozilla/5.0 (Windows NT 6.3; WOW64; Trident/7.0; rv:11.0) like Gecko',
              'Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/28.0.1500.95 Safari/537.36',
              'Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET '
              'CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; rv:11.0) like Gecko)',
              'Mozilla/5.0 (Windows; U; Windows NT 5.2) Gecko/2008070208 Firefox/3.0.1',
              'Mozilla/5.0 (Windows; U; Windows NT 5.1) Gecko/20070309 Firefox/2.0.0.3',
              'Mozilla/5.0 (Windows; U; Windows NT 5.1) Gecko/20070803 Firefox/1.5.0.12',
              'Opera/9.27 (Windows NT 5.2; U; zh-cn)',
              'Mozilla/5.0 (Macintosh; PPC Mac OS X; U; en) Opera 8.0',
              'Opera/8.0 (Macintosh; PPC Mac OS X; U; en)',
              'Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.12) Gecko/20080219 Firefox/2.0.0.12 '
              'Navigator/9.0.0.6',
              'Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1; Win64; x64; Trident/4.0)',
              'Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1; Trident/4.0)',
              'Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0; SLCC2; .NET CLR 2.0.50727; '
              '.NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.2; .NET4.0C; .NET4.0E)',
              'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Maxthon/4.0.6.2000 '
              'Chrome/26.0.1410.43 Safari/537.1 ',
              'Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0; SLCC2; .NET CLR 2.0.50727; '
              '.NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.2; .NET4.0C; .NET4.0E; '
              'QQBrowser/7.3.9825.400)',
              'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:21.0) Gecko/20100101 Firefox/21.0 ',
              'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.92 '
              'Safari/537.1 LBBROWSER',
              'Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.1; WOW64; Trident/6.0; BIDUBrowser 2.x)',
              'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.11 '
              'TaoBrowser/3.0 Safari/536.11']

db = pymysql.connect(
    host="localhost",
    port=3306,
    user="root",  # database user name
    password="XXXXX",  # database password (replace with your own)
    database="zzProject",
    charset="utf8",
)
cur = db.cursor()

def spider(info):
    # Generate the project category IDs and application codes by looping
    # Exclusive upper bound of the two-digit first-level code for each letter
    limits = {"A": 10, "B": 9, "C": 22, "D": 8, "E": 14, "F": 8, "G": 5, "H": 31}
    projectType = ["218", "220", "222", "339", "429", "432", "433", "649", "579", "630",
                   "631", "632", "635", "51", "52", "2699", "70", "7161"]
    for y in [2019]:  # change the year here; to crawl several years, use the loop on the next line instead
        # for y in range(2000, 2021):
        print("Year: " + str(y))
        for p in projectType:
            print("Project category ID: " + p)
            for j, end in limits.items():
                for b in range(1, end):
                    code = j + str(b).zfill(2)  # first level, e.g. A01
                    pac(info, code, y, p)
                    for t in range(1, 10):
                        code2 = code + str(t).zfill(2)  # second level, e.g. A0101
                        pac(info, code2, y, p)
                        for k in range(1, 10):
                            code3 = code2 + str(k).zfill(2)  # third level, e.g. A010101
                            pac(info, code3, y, p)

def field(row, index):
    # Return row[index], or a single space when the row has no such column
    try:
        return row[index]
    except IndexError:
        return " "


def pac(info, code, year, projectType):
    url = "http://kd.nsfc.gov.cn/baseQuery/data/supportQueryResultsDataForNew"
    # Only the first page (pageNum 0) of five rows (pageSize 5) is requested for each query
    data = {
        "code": code, "ratifyYear": str(year), "projectType": projectType, "queryType": "input",
        "complete": "false", "pageNum": 0, "pageSize": 5
    }
    headers = {
        "User-Agent": random.choice(user_agent),
        "Content-Type": "application/json"
    }
    rep = requests.post(url, data=json.dumps(data), headers=headers)
    rep.encoding = rep.apparent_encoding
    text = rep.text
    text = text.replace("\ue06d", "").replace("\u2022", "")
    # Parse the body with json.loads rather than eval: eval would execute whatever
    # the server sends and raises NameError on JSON literals such as null or true
    result = json.loads(text)
    for d in result["data"]["resultsData"]:
        name = field(d, 1)  # project name
        num = field(d, 2)  # approval number
        itemCl = field(d, 3)  # project category
        itemLe = field(d, 5)  # principal investigator
        ratifyYear = field(d, 7)  # approval year
        funding = field(d, 6)
        money = str(funding) + "(万元)" if funding != " " else " "  # funding, in units of 10,000 yuan
        supOrg = field(d, 4)  # host institution
        startData = field(d, -2)  # start and end dates
        applyCode = field(d, -3)  # application code
        # info.append([name, num, itemCl, itemLe, ratifyYear, money, supOrg, startData, applyCode])  # use this line when saving to Excel
        # =====================================
        # Save to MySQL; the driver fills in the %s placeholders, so quotes in the data are safe
        try:
            op = "insert into prodectData (项目名称,批单号,项目类别,批准负责人,批准年度,资助经费,依托单位,起始年月," \
                 "申请代码) " \
                 "values (%s,%s,%s,%s,%s,%s,%s,%s,%s)"
            cur.execute(op, (str(name), str(num), str(itemCl), str(itemLe), str(ratifyYear), str(money),
                             str(supOrg), str(startData), str(applyCode)))
            db.commit()
        except pymysql.Error as e:
            # Report the failure instead of silently dropping the row
            db.rollback()
            print("Insert failed:", e)
        # ======================================
    time.sleep(0.5)  # pause between requests

def save(info):  # save the data to Excel
    print("save.....")
    workbook = xlwt.Workbook(encoding="utf-8")  # create the workbook object
    movieBook = workbook.add_sheet("sheet1")  # create the worksheet
    # Write the header row (same labels as the MySQL columns)
    head = ["项目名称", "批单号", "项目类别", "批准负责人", "批准年度", "资助经费", "依托单位", "起始年月", "申请代码"]
    for i in range(0, len(head)):
        movieBook.write(0, i, head[i])  # arguments: row, column, value
    # Write the data row by row
    y = 1
    for a in info:
        print("Saved row: " + str(y))
        for x in range(0, len(a)):
            movieBook.write(y, x, a[x])
        y += 1
    workbook.save("资助项目信息.xls")  # save the workbook

if __name__ == '__main__':
    info = []
    spider(info)
    # save(info)  # uncomment this call if you are saving to Excel
    db.close()
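The application codes the script loops over follow a simple pattern: a letter, then up to three two-digit levels (for example A01, A0101, A010101). As an optional refactor, the enumeration could be pulled into a generator so that it can be checked without sending any requests. The function name `application_codes` and its parameters are hypothetical, and the limits are passed in by the caller rather than taken from the site.

```python
def application_codes(prefix_limits, sub_limit=10):
    """Yield codes level by level: A01, A0101, A010101, ..."""
    for letter, end in prefix_limits.items():
        for first in range(1, end):
            code = f"{letter}{first:02d}"
            yield code
            for second in range(1, sub_limit):
                code2 = f"{code}{second:02d}"
                yield code2
                for third in range(1, sub_limit):
                    yield f"{code2}{third:02d}"


# Small limits keep the demo output short.
codes = list(application_codes({"A": 3}, sub_limit=3))
print(codes[:4])
print(len(codes))
```

The crawl loop could then iterate over `application_codes(...)` and issue one request per code, which keeps the code-building rules in one place.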

IV. Results

I'm new to this, so any pointers are welcome.