- nosql数据库技术与应用知识点

皆过客,揽星河

NoSQLnosql数据库大数据数据分析数据结构非关系型数据库

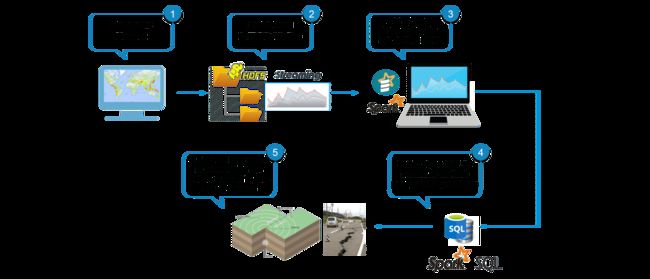

Nosql知识回顾大数据处理流程数据采集(flume、爬虫、传感器)数据存储(本门课程NoSQL所处的阶段)Hdfs、MongoDB、HBase等数据清洗(入仓)Hive等数据处理、分析(Spark、Flink等)数据可视化数据挖掘、机器学习应用(Python、SparkMLlib等)大数据时代存储的挑战(三高)高并发(同一时间很多人访问)高扩展(要求随时根据需求扩展存储)高效率(要求读写速度快)

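- Java: crawler frameworks

dingcho

Java, java, web crawler

1. Apache Nutch2 [reference link]: Nutch is an open-source search engine implemented in Java. It provides all the tools we need to run our own search engine, including full-text search and a web crawler. Nutch aims to make it easy and inexpensive for anyone to set up a world-class web search engine. To achieve this ambitious goal, Nutch must be able to fetch several billion web pages per month, maintain an index of those pages, run thousands of searches per second against the index files, and deliver high-quality search results. In short, Nutch supports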

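- The simplest way to host a static web page on a server (without nginx)

全能全知者

server, nginx, operations, frontend, html, notes

The simplest way to host a static web page on a server (without nginx): having to set up nginx every time you want to put a simple static page on a server is too much hassle, so serving simple static front-end pages directly with Apache is much more convenient. Check the status of the web server service: `sudo systemctl status httpd` (the Apache web server). If no web server is installed, install Apache: `sudo yum install httpd`. Start Apache: sudo systemctl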

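- A brief introduction to MapReduce

Android路上的人

Hadoop, distributed computing, mapreduce, distributed frameworks, hadoop

From today on, I am going to start learning another technology: Hadoop distributed computing, which is extremely popular right now. Many companies in China and abroad have adopted this platform to support their business growth, BAT (Baidu, Alibaba, Tencent) in China, for example; companies abroad are even further ahead in this area, too many to list. However, Hadoop, as an Apache open-source project, has a great many subprojects, such as HDFS, HBase, Hive, and Pig; one person's effort alone is far from enough to learn all of Hadoop thoroughly.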

- Hadoop

傲雪凌霜,松柏长青

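backend, big data, hadoop, big data, distributed

Apache Hadoop is an open-source distributed computing framework, mainly used to process massive datasets. It offers high scalability, fault tolerance, and efficient distributed storage and computation. The Hadoop core consists of four main modules: HDFS (the distributed file system), MapReduce (the distributed computing framework), YARN (resource management), and Hadoop Common (common utilities and libraries). 1. HDFS (Hadoop Distributed File System): HDFS is Hadoop's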
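To make the MapReduce model mentioned above more concrete, here is a minimal single-process sketch of the classic word count, split into map, shuffle, and reduce steps. It uses no Hadoop API: the class and method names (`MiniWordCount`, `map`, `shuffle`, `reduce`) are hypothetical, and it assumes ASCII words separated by non-word characters. In a real Hadoop job the shuffle moves data across the cluster and each phase runs in parallel on many nodes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.SortedMap;
import java.util.TreeMap;

/** Single-process illustration of MapReduce's map, shuffle, and reduce phases (no Hadoop APIs). */
final class MiniWordCount {
    private MiniWordCount() {}

    // Map phase: turn one input line into (word, 1) pairs.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : line.toLowerCase(Locale.ROOT).split("\\W+")) {
            if (!word.isEmpty()) {
                out.add(Map.entry(word, 1));
            }
        }
        return out;
    }

    // Shuffle phase: group values by key, sorted by key (Hadoop does this across the cluster).
    static SortedMap<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        SortedMap<String, List<Integer>> groups = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            groups.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return groups;
    }

    // Reduce phase: sum all values collected for one key.
    static int reduce(List<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    static SortedMap<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        SortedMap<String, Integer> result = new TreeMap<>();
        shuffle(pairs).forEach((word, ones) -> result.put(word, reduce(ones)));
        return result;
    }

    public static void main(String[] args) {
        System.out.println(wordCount(List.of("Hello world", "hello Hadoop")));
    }
}
```

For example, `wordCount(List.of("Hello world", "hello Hadoop"))` yields `{hadoop=1, hello=2, world=1}`.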

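- maven-assembly-plugin packaging example

带着二娃去遛弯

1. First, add the assembly packaging plugin to pom.xml: groupId `org.apache.maven.plugins`, artifactId `maven-assembly-plugin`, version `2.6`, with the descriptor `assembly/assembly.xml`, and an execution with id `make-assembly` bound to the `package` phase that runs the `single` goal. Notes: 1. The part you are most likely to need to change is the packaging configuration file path under the `descriptors` tag, i.e. the path to assembly.xml. 2. You can add multiple packaging configuration files to package in several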

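- Kafka: detailed analysis and applications

芊言芊语

kafka, distributed

Kafka is an open-source distributed event streaming platform, originally developed at LinkedIn in Scala and coordinated through ZooKeeper (recent releases can also run without ZooKeeper in KRaft mode). Kafka has since become an Apache Software Foundation project and is widely used for real-time big data processing. Thanks to its high throughput, persistence, distributed design, and reliability, Kafka has become an important tool for building real-time streaming data pipelines and stream processing applications. Kafka architecture: Kafka's architecture mainly consists of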

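- Sharing a Python-based e-book data collection and visualization project: a Hadoop e-book data analysis and recommendation system, Spark big data graduation project (source code, debugging, thesis, proposal, PPT)

计算机源码社

Python project, big data, big data, python, hadoop, CS graduation project topics, CS graduation project source code, data analysis, spark, graduation project

Author: 计算机源码社. About me: eight years of development experience, skilled in Java, Python, PHP, .NET, Node.js, Android, WeChat mini programs, web crawlers, big data, machine learning, and more; feel free to reach out with questions in these areas! Study materials, program development, technical Q&A, documentation and reports: if you need the source code, scan the QR code at the bottom of the article to contact me. Java projects, WeChat mini program projects, Android projects, Python projects, PHP projects, ASP.NET projects, Node.js projects, recommended topics, hands-on projects | p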

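- Java technologies and architectures: related documentation

圣心

java, architecture, programming languages

Java has many different technologies and architectures; here I list some common ones and provide related documentation resources. Spring Framework: Spring is an open-source, full-featured Java/Java EE framework released under the Apache License that provides a way to build enterprise applications. Official documentation: Spring Framework. Spring Boot: Spring Boot is a Spring subproject designed to simplify creating production-grade Spring applications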

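- Spark components: GraphX and Streaming

叶域

big data, spark, spark, big data, distributed

Spark components GraphX and Streaming. 1. Spark GraphX: 1.1 Main concepts of GraphX; 1.2 Core operations of GraphX; 1.3 Sample code; 1.4 Use cases of GraphX. 2. Spark Streaming: 2.1 Main concepts of Spark Streaming; 2.2 Sample code; 2.3 Integrating Spark Streaming; 2.4 Use cases of Spark Streaming. Spark GraphX is used for graph processing and graph-parallel computation. Graph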

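- Apache Shiro security framework (2): user authentication

heyrian

Java, shiro

Authentication: in Shiro, a user proves that they belong to the current system by providing principals (identity) and credentials (proof). The most common form of authentication is username/password. Before explaining authentication, let us first look at Subject and Realm, the two key concepts in Shiro authentication. Subject: a Subject represents the current user and internally holds that user's information. In Shiro, all Subjects are handed over to the SecurityManager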
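A rough plain-Java model of the roles described above may help: a token carries the principal (identity) and credential (proof), a realm looks up account data, and a subject represents the current user and delegates the check. This is a hypothetical illustration only: `ToyAuth`, `Token`, `InMemoryRealm`, and `Subject` are not Shiro classes, the SecurityManager layer that Shiro places between Subject and Realm is omitted, and the plaintext password map stands in for a real credential store (which should hold hashes).

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical model of Subject / Realm authentication; these are NOT Apache Shiro classes. */
final class ToyAuth {
    private ToyAuth() {}

    /** Principal (who you claim to be) plus credential (proof), like a username/password token. */
    static final class Token {
        final String principal;
        final char[] credential;

        Token(String principal, char[] credential) {
            this.principal = principal;
            this.credential = credential.clone();
        }
    }

    /** A "realm": a source of account data. Plaintext passwords are for illustration only. */
    static final class InMemoryRealm {
        private final Map<String, String> passwords;

        InMemoryRealm(Map<String, String> passwords) {
            this.passwords = new HashMap<>(passwords);
        }

        boolean authenticate(Token t) {
            String expected = passwords.get(t.principal);
            if (expected == null) {
                return false; // unknown principal
            }
            // Compare with MessageDigest.isEqual rather than String.equals.
            return MessageDigest.isEqual(
                    expected.getBytes(StandardCharsets.UTF_8),
                    new String(t.credential).getBytes(StandardCharsets.UTF_8));
        }
    }

    /** A "subject": the current user; it delegates the check and remembers who logged in. */
    static final class Subject {
        private final InMemoryRealm realm;
        private String principal; // null until a login succeeds

        Subject(InMemoryRealm realm) {
            this.realm = realm;
        }

        boolean login(Token t) {
            if (!realm.authenticate(t)) {
                return false;
            }
            principal = t.principal;
            return true;
        }

        boolean isAuthenticated() {
            return principal != null;
        }

        String getPrincipal() {
            return principal;
        }
    }

    public static void main(String[] args) {
        Subject user = new Subject(new InMemoryRealm(Map.of("alice", "secret")));
        System.out.println(user.login(new Token("alice", "wrong".toCharArray())));  // false
        System.out.println(user.login(new Token("alice", "secret".toCharArray()))); // true
    }
}
```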

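- Apache HBase basics (overview, physical architecture, logical architecture, data management, architectural features, HBase Shell)

May--J--Oldhu

HBase, HBase shell, hbase physical architecture, hbase logical architecture, hbase

NoSQL overview and Apache HBase basics. 1. HBase: 1. HBase overview; 2. History of HBase; 3. HBase use cases: 3.1 incremental data (time-series data); 3.2 information exchange (messaging); 3.3 content serving (web back-end applications); 3.4 example HBase use cases; 4. The Apache HBase ecosystem; 5. HBase physical architecture: 5.1 HMaster; 5.2 RegionServer; 5.3 Region and Table; 6. HBase logical architecture: Row; 7.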

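- Flume: a powerful tool for large-scale log collection and data transport

傲雪凌霜,松柏长青

backend, big data, flume, big data

Flume: a powerful tool for large-scale log collection and data transport. In the big data era, the constant growth of applications of every kind produces massive amounts of logs and data. This data is not only critical for monitoring business health; deeper analysis of it can also help companies make better decisions. Collecting, transporting, and storing this data efficiently has therefore become an important challenge. Today we take a closer look at Apache Flume and how it helps us meet these challenges. 1. Flume overview: Apache Flume is a distributed, reliable, scalable log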

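- Big data graduation project: Hadoop + Spark + Hive knowledge-graph rental data analysis and visualization dashboard, rental recommendation system, 58.com rental crawler, listing recommendation system, house price prediction system, CS graduation project, machine learning, deep learning, artificial intelligence

2401_84572577

programmers, big data, hadoop, artificial intelligence

After many years of development work and teaching myself many programming languages, I know how important learning resources are when picking up a new language. Over the years I have also collected a lot of useful Python material. I no longer need it myself, but for anyone preparing to learn Python on their own it may be a treasure that saves a great deal of time and effort. Stop learning haphazardly online; I have recently updated some of these resources, and as long as you follow me you can take everything in this giveaway. First I will explain how to use these materials; grab them at the end of the article. (1) Learning roadmaps for every Python direction (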

- Superset customization: source code analysis of DependencyList.tsx

aimmon

Superset customization, Superset, BI, customization, typescript, frontend

Feature path: `superset-frontend\src\dashboard\components\nativeFilters\FiltersConfigModal\FiltersConfigForm\DependencyList.tsx` `/** * Licensed to the Apache Software Foundation (ASF) under one * or more contributor license agre`

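- The most complete guide to Maven's pom.xml file ever

Meta999

Maven

Note: in the annotated file, the configuration nodes marked in red are the ones commonly used in everyday projects; study them carefully! Reposted because it is such a classic; feel free to bookmark it. The sample POM values include `xxx` placeholders, model version `4.0.0`, packaging `jar`, version `1.0-SNAPSHOT`, name `xxx-maven`, URL http://maven.apache.org, the description "A maven project to study maven.", and issue management `jira` at http://jira.baidu.com/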


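- Using the apache-pdfbox library to modify a PDF template and replace information

区块链攻城狮

pdf, contract template, pdf generation, contract generation

public String createSignFile(Long id) throws IOException {

// 1. Validate the company information

CompanyDO company = validateCompanyExists(id);

// 2. Validate the signing status

if (company.getSignStatus() != 0) {

throw exception(COMPANY_SIGN_STATUS_NOT_ZERO);

}

// 3. Get the contract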

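- Apache DataFusion Python bindings tutorial

柏赢安Simona

Apache DataFusion Python bindings tutorial. datafusion-python: Apache DataFusion Python Bindings. Project URL: https://gitcode.com/gh_mirrors/data/datafusion-python. Project introduction: Apache DataFusion is an in-memory query engine built on Apache Arrow that provides high-performance query processing. DataFusion's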

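- Load-testing a server and visualizing the results with Grafana

豆瑞瑞

grafana

Introduction. Repository code: GitCode, the open-source community and code hosting platform for developers worldwide. References: Welcome! - The Apache HTTP Server Project; Grafana | query, visualization, and alerting observability platform; https://prometheus.io/docs/introduction/overview/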

- The three modes of a Spark cluster

MelodyYN

#Spark, spark, hadoop, bigdata

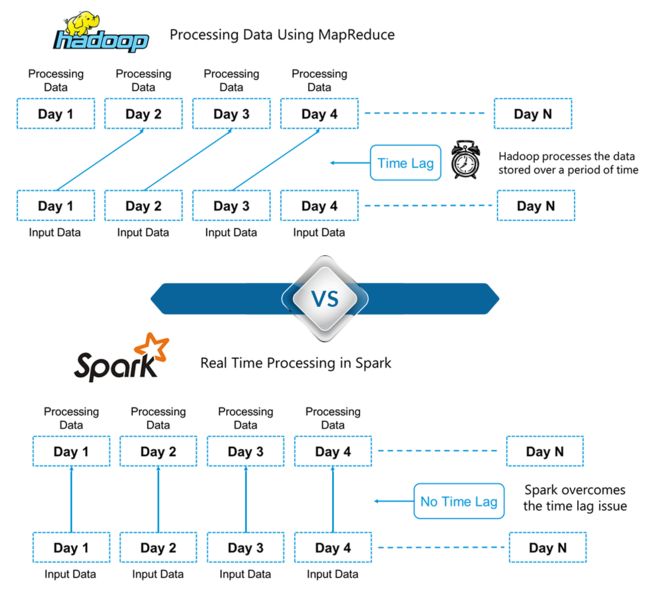

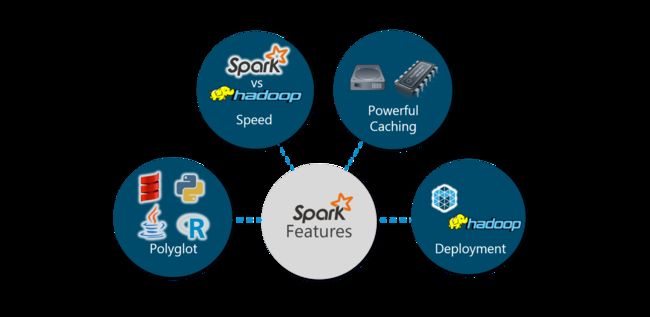

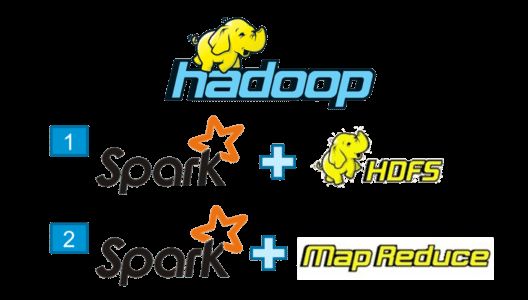

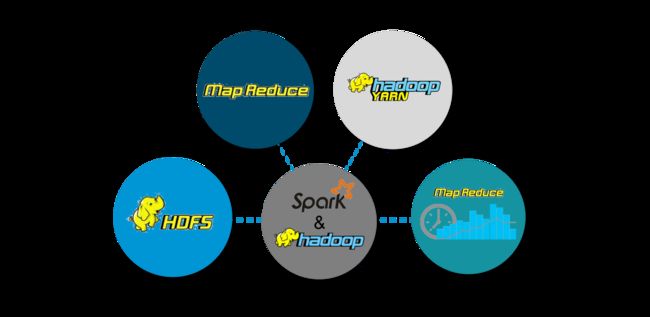

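Contents: 1. The origins of Spark (1.1 The evolution of Hadoop; 1.2 MapReduce vs. Spark); 2. Spark's built-in modules; 3. Spark run modes (3.1 Standalone mode: deployment, configuring the history server, configuring high availability, run modes; 3.2 YARN mode: installation and deployment, configuring the history server, run modes); 4. WordCount example. 1. The origins of Spark. Definition: Hadoop mainly solves the storage and the analytical computation of massive data. Spark is a memory-based, fast, general-purpose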

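- 2. JMeter installation and configuration, core directories, components, and scope

XXX-17

Jmeter, jmeter, software testing, API testing

1. JMeter installation, configuration, and core directories: JMeter is developed in Java, and Java needs a JDK environment. 1. Install the JDK and configure its environment variables. 2. JMeter only needs to be unzipped before use. 3. Double-click jmeter.bat in the D:\apache-jmeter-5.5\bin directory to start it. backups: directory for automatic backups; bin: startup and configuration files (jmeter.bat is the startup file, jmeter.propti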

- BindingException: Invalid bound statement (not found)

小卡车555

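MyBatis, mybatis, java, mysql

Fixing MyBatis binding exceptions: org.apache.ibatis.binding.BindingException: Invalid bound statement (not found). The usual cause is that the Mapper interface and the XML file do not match, so check that the package name, namespace, method names, and so on correspond. This needs a careful side-by-side comparison; I have often mistyped one or two letters and then spent a long time failing to find the error. Work through the following steps one by one: 1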

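- A comparison of big data processing frameworks in Java

省赚客app开发者

java, programming languages

A comparison of big data processing frameworks in Java. Hello everyone, I am the editor of 微赚淘客系统3.0, a programmer who skips long johns in winter and stays stylish even in the cold! Today we take a deep look at the big data processing frameworks commonly used in Java and compare them. Big data processing frameworks are at the core of modern data-driven applications; they help companies process and analyze massive data to extract valuable information. This article focuses on Apache Hadoop, Apache Spark, Apache Flink, and Apache Storm, four popular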

- NoClassDefFoundError: org.apache.poi.POIXMLDocument问题排查解决

qinmingjun718

apache

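java.lang.NoClassDefFoundError: org/apache/poi/POIXMLDocumentPart. The error is clear: the class POIXMLDocumentPart cannot be found. The cause is roughly that after POI was upgraded from an old 3.1.X version to POI 4.1.2, it became incompatible with org.apache.poi.xwpf.converter.core-1.0.6.jar, which produced this error. The main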
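When chasing a `NoClassDefFoundError` like this one, a quick first check is whether the class is visible on the runtime classpath at all and, if so, which jar supplies it (useful when two POI versions or an incompatible converter jar are mixed). The helper below is a hypothetical sketch built only on standard reflection (`Class.forName` with `initialize = false`, and `getProtectionDomain().getCodeSource()`); `ClasspathProbe` and its method names are made up for illustration.

```java
import java.security.CodeSource;

/** Hypothetical helper for diagnosing NoClassDefFoundError: is a class visible, and which jar provides it? */
final class ClasspathProbe {
    private ClasspathProbe() {}

    /** Loads the class (and its superclass chain) without running static initializers. */
    private static Class<?> load(String className) throws ClassNotFoundException {
        return Class.forName(className, false, ClasspathProbe.class.getClassLoader());
    }

    static boolean isLoadable(String className) {
        try {
            load(className);
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false; // class missing, or one of its dependencies is missing/incompatible
        }
    }

    /** Returns the jar/directory the class came from, "(JDK)" for bootstrap classes, or "(not found)". */
    static String sourceOf(String className) {
        try {
            CodeSource cs = load(className).getProtectionDomain().getCodeSource();
            return (cs == null || cs.getLocation() == null) ? "(JDK)" : cs.getLocation().toString();
        } catch (ClassNotFoundException | LinkageError e) {
            return "(not found)";
        }
    }

    public static void main(String[] args) {
        for (String name : new String[] {"java.lang.String", "org.apache.poi.POIXMLDocumentPart"}) {
            System.out.println(name + " -> " + sourceOf(name));
        }
    }
}
```

With only the JDK on the classpath, the POI class reports `(not found)`; run inside the project, the printed location shows which jar actually provides the class.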

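- Using POI to replace XWPFTableCell contents and set line spacing

RR369_yyh

javaUtil, java, poi

Use POI to read a Word document (.docx) and replace data. This is also a note on POI's API for setting line spacing, which took me dozens of minutes to find, argh!!! import org.apache.poi.xwpf.usermodel.*; import org.springframework.util.StringUtils; import java.io.File; import java.io.FileInputStream; im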

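- Demystifying Oozie Bundle: architecture, components, and core concepts

光剑书架上的书

computing, big data, AI, artificial intelligence, computational science, neural computing, deep learning, neural networks, big data, artificial intelligence, large language models, AI, AGI, LLM, Java, Python, architecture design, Agent, RPA

Demystifying Oozie Bundle: architecture, components, and core concepts. 1. Background: in the big data field, a data processing workflow usually consists of several complex jobs with dependencies between them. Apache Oozie, a workflow scheduling system, can manage such complex workflows effectively. An Oozie Bundle is a higher-level Oozie abstraction that groups, coordinates, and controls multiple related coordinator jobs, each of which schedules workflows. The main purpose of an Oozie Bundle is to organize multiple related jobs together and, based on the dependencies between them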

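- Apache POI usage

JH3073

apache

1. What is Apache POI: Apache POI is a free, open-source, cross-platform Java API written in Java. It gives Java programs the ability to read and write Microsoft Office file formats, and its most common use is working with Excel files. 2. POI structure: HSSF provides reading and writing of Microsoft Excel XLS files; XSSF provides reading and writing of Microsoft Excel OOXML XLSX files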

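- A detailed walkthrough of information gathering in penetration testing

卿酌南烛_b805

1. Scanning domains for vulnerabilities: domain vulnerability scanners include AWVS, APPSCAN, Netspark, WebInspect, Nmap, Nessus, 天镜, 明鉴, WVSS, RSAS, and others. 2. Subdomain discovery: (1) DNS zone transfer vulnerabilities; (2) search engines (Google, Bing, searching the C segment); (3) SSL certificate lookup sites: https://myssl.com/ssl.html and https://www.chinassl.net/ssltools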

- [LINUX] Installing Tomcat on Ubuntu

缘起性本空

linux, operations, server

Install the JDK: `apt install openjdk-8-jdk -y`. Enter the install path: `cd /home/a/`. Copy the Tomcat package: `cp /mnt/hgfs/Share/apache-tomcat-9.0.93.tar.gz .`. Extract the Tomcat package: `tar -xf apache-tomcat-9.0.93.tar.gz`. Enter the bin path: cd apach

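- The best e2e framework: using Cypress to keep product delivery stable

Node全栈

java, python, programming languages, software testing, html

We used to use Nightwatch a lot, but we have now switched to Cypress, arguably the best e2e framework available today. The reasons and the comparison are what this article covers. Background: the Apache APISIX Dashboard is designed to let users operate Apache APISIX as conveniently as possible through a front-end interface. Since the project was initialized it has accumulated 552 commits and shipped 10 releases. With the product iterating this quickly, ensuring the quality of the open-source product is especially important. To this end,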

- JS animation and HTML tags (continuously updated)

843977358

html, js, animation, media, opacity

1. jQuery effects - the animate() method. Change the height of a "div" element: $(".btn1").click(function(){ $("#box").animate({height:"300px

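- Spring MVC study notes

caoyong

springMVC

1. Setting up the development environment

a) Add the jar files: on top of the jars required for IoC, add spring-web.jar and spring-webmvc.jar

b) Configure the front controller in web.xml

`<servlet>`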


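- Setting Excel cell formats in POI

107x

poi, style, column width, merged cells, text wrapping

Source: http://apps.hi.baidu.com/share/detail/17249059

A summary of POI operations that may require setting Excel cell formats:

First, obtain the workbook object: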

HSSFWorkbook wb = new HSSFWorkbook();

HSSFSheet sheet = wb.createSheet();

HSSFCellStyle setBorder = wb.

- jQuery: the `this` argument is invalid when an A tag triggers a JS method

一炮送你回车库

jquery

The HTML is as follows:

`<td class=\"bord-r-n bord-l-n c-333\">`

`<a class=\"table-icon edit\" onclick=\"editTrValues(this);\">修改</a>`

`</td>"`


- md5

3213213333332132

MD5

import java.security.MessageDigest;

import java.security.NoSuchAlgorithmException;

public class MDFive {

public static void main(String[] args) {

String md5Str = "cq
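The excerpt stops partway through its `main` method, so here is a separate minimal sketch of computing an MD5 hex digest with the standard `java.security.MessageDigest` API. The class name `Md5Demo` and the input `"abc"` are illustrative assumptions (the original input string is cut off). MD5 is acceptable for non-security checksums but should not be used for password storage.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/** Minimal MD5 hex-digest helper using the standard MessageDigest API. */
final class Md5Demo {
    private Md5Demo() {}

    static String md5Hex(String input) {
        try {
            MessageDigest md = MessageDigest.getInstance("MD5");
            byte[] digest = md.digest(input.getBytes(StandardCharsets.UTF_8)); // 16 bytes
            StringBuilder hex = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                hex.append(String.format("%02x", b & 0xff)); // two lowercase hex digits per byte
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            // MD5 ships with standard JDKs, so this is not expected in practice.
            throw new IllegalStateException("MD5 not available", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(md5Hex("abc")); // 900150983cd24fb0d6963f7d28e17f72
    }
}
```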

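- Completely uninstalling Oracle 11g

sophia天雪

oracle, database, clean uninstall, registry cleanup

Completely uninstalling Oracle 11g

A. If the OUI uninstall tool is available:

Step 1: stop all running Oracle-related services;

Step 2: locate the OUI uninstall tool: in the "Start" menu, look in the "oracle_OraDb11g_home" folder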


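- How to deal with an Apache access.log file that grows too large

darkranger

apache

`CustomLog logs/access.log common`: with this directive the log file keeps growing without limit.

Simply comment out the directive above:

#CustomLog logs/access.log common

Add:

CustomLog "|bin/rotatelogs.exe -l logs/access-%Y-%m-%d.log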

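- Key steps for setting up Hadoop in standalone mode

aijuans

distributed

The Hadoop environment needs the sshd service running at all times, so the SSH service must be installed on the server. Taking Ubuntu Linux as an example, install it as follows:

sudo apt-get install ssh

sudo apt-get install rsync

Edit the HADOOP_HOME/conf/hadoop-env.sh file and set JAVA_HOME to the Java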

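- Some tips for using PL/SQL Developer

atongyeye

java, sql

1. Remember passwords

This is a controversial feature, because remembering passwords raises data security concerns. But for a development database, where the password may even be the same as the username, typing it every time is pointless, so you can consider letting PL/SQL Developer remember it. Location: Tools menu > Preferences > Oracle > Logon History > Store with password

2. Special Copy

In the SQL Window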

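- PHP: dynamically adding a new method to an object

bardo

method, dynamic addition, closures

If you come from Ruby or are familiar with it, you already know what dynamically adding a method to an object means... Ruby gives you a way to take an instantiated object and add an extra method to it.

OK! Enough about Ruby; let's talk about PHP.

PHP does not provide a "standard way" to do this, and it is not part of the core either...

But that doesn't mean we can't do it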

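- ThreadLocal and thread safety

bijian1013

java, java, multithreading, threadLocal

First, let's look at the two preconditions for a thread-safety problem:

1. Shared data: multiple threads access the same data.

2. Mutable shared data: multiple threads modify the shared data they access.

Example:

Define a piece of shared data: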

public static int a = 0;
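A shared `static int` like the one above meets both preconditions, so concurrent increments can be lost. `ThreadLocal` removes the first precondition by giving every thread its own copy. The sketch below is a hypothetical illustration (the class and method names are made up): each worker thread increments only its own counter, so every thread ends with exactly `times`, and `remove()` clears the value so it would not leak into later tasks if threads were pooled.

```java
import java.util.Arrays;

/** Hypothetical demo: each thread keeps its own counter in a ThreadLocal, so no data is shared. */
final class ThreadLocalDemo {
    private ThreadLocalDemo() {}

    // Every thread sees its own Integer, initialized to 0 the first time that thread calls get().
    private static final ThreadLocal<Integer> COUNTER = ThreadLocal.withInitial(() -> 0);

    /** Starts {@code threads} threads; each increments its own counter {@code times} times. */
    static int[] countPerThread(int threads, int times) {
        int[] results = new int[threads];
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final int id = t;
            workers[t] = new Thread(() -> {
                try {
                    for (int i = 0; i < times; i++) {
                        COUNTER.set(COUNTER.get() + 1); // only this thread can see this value
                    }
                    results[id] = COUNTER.get();
                } finally {
                    COUNTER.remove(); // clear it so a pooled thread would not carry it over
                }
            });
            workers[t].start();
        }
        try {
            for (Thread w : workers) {
                w.join(); // join() makes each worker's write to results visible here
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for workers", e);
        }
        return results;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(countPerThread(4, 1000))); // [1000, 1000, 1000, 1000]
    }
}
```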

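- Resolving Tomcat jar conflicts

征客丶

tomcat, Web

Environment:

Tomcat 7.0.6

win7 x64

Symptom: [in my case the conflicting jars were catalina.jar and tomcat-catalina-7.0.61.jar; I don't know whether other jar conflicts produce the same error]

SEVERE: End event threw exception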

java.lang.NoSuchMethodException: org.apache.catalina.dep

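- [Scala, part 3] Scala syntax learned from reading the Spark source code, part 1

bit1129

scala

Scala syntax 1. The classOf operator

In Scala, classOf[T] gives the Class object for T, equivalent to Java's T.class; for example, classOf[TextInputFormat] is equivalent to TextInputFormat.class

2. Default parameter values

defaultMinPartitions is a default value, similar to default parameter values in C++ methods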

- Java thread pool management

BlueSkator

java, thread pool, management mechanism


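JDK thread pools

1. Introduction

First: lower resource consumption. Reusing threads that already exist reduces the cost of creating and destroying threads. Second: faster response. When a task arrives, it can run immediately without waiting for a thread to be created. Third: better manageability. Threads are a scarce resource; creating them without limit not only consumes system resources but also reduces system stability, whereas a thread pool allows unified allocation, tuning, and monitoring.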
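As an illustration of those three points, here is a minimal sketch that reuses a small, bounded `ThreadPoolExecutor` to sum a range of integers in chunks. The pool size, keep-alive, queue capacity, and `CallerRunsPolicy` are arbitrary choices for the example rather than recommendations from the original article; the bounded queue and fixed thread count are what keep resource use under control.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/** Hypothetical example: sum 1..n in chunks on a small, bounded, reusable thread pool. */
final class ThreadPoolDemo {
    private ThreadPoolDemo() {}

    static long parallelSum(int n, int chunks) {
        // 4 core/max threads reused for all tasks, at most 100 queued tasks;
        // when the queue is full, CallerRunsPolicy runs the task in the submitting thread (back-pressure).
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                4, 4, 60L, TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(100),
                new ThreadPoolExecutor.CallerRunsPolicy());
        try {
            List<Future<Long>> futures = new ArrayList<>();
            int size = (n + chunks - 1) / chunks; // chunk length, rounded up
            for (int start = 1; start <= n; start += size) {
                final int lo = start;
                final int hi = Math.min(n, start + size - 1);
                futures.add(pool.submit(() -> {
                    long sum = 0;
                    for (int i = lo; i <= hi; i++) {
                        sum += i;
                    }
                    return sum;
                }));
            }
            long total = 0;
            for (Future<Long> f : futures) {
                total += f.get(); // waits for each chunk's result
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("task failed", e.getCause());
        } finally {
            pool.shutdown(); // stop accepting tasks; idle threads then exit
        }
    }

    public static void main(String[] args) {
        System.out.println(parallelSum(1000, 7)); // 500500
    }
}
```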

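- Using native SQL functions in HQL (Q&A)

BreakingBad

HQL, stored functions

Reposted from: http://www.iteye.com/problems/23775

Q:

During development I was querying with HQL (MySQL 5) and used MySQL's built-in function find_in_set(), which works very well for string matching, but I wrote it directly in the HQL statement (from ForumMemberInfo fm,ForumArea fa where find_in_set(fm.userId,f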
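For reference, MySQL's `FIND_IN_SET(str, strlist)` returns the 1-based position of `str` in the comma-separated `strlist`, or 0 if it is absent or `strlist` is empty. The hypothetical Java method below imitates that for in-memory strings, which can help when checking what a query is expected to match. It is a simplified sketch: it ignores SQL `NULL` handling, compares case-sensitively (MySQL follows the collation in use), and does not trim spaces around items.

```java
/** Hypothetical in-memory imitation of MySQL's FIND_IN_SET(str, strlist), for illustration only. */
final class FindInSet {
    private FindInSet() {}

    /**
     * Returns the 1-based position of {@code needle} among the comma-separated items of
     * {@code list}, or 0 if it is absent or the list is empty. NULLs are not modelled.
     */
    static int findInSet(String needle, String list) {
        if (list.isEmpty()) {
            return 0;
        }
        String[] items = list.split(",", -1); // limit -1 keeps empty trailing items
        for (int i = 0; i < items.length; i++) {
            if (items[i].equals(needle)) { // exact, case-sensitive, no trimming
                return i + 1;
            }
        }
        return 0;
    }

    public static void main(String[] args) {
        System.out.println(findInSet("b", "a,b,c,d")); // 2
    }
}
```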

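- Reading 研磨设计模式: code notes, Iterator pattern

bylijinnan

java, design patterns

Disclaimer: this article is only for my own reference and understanding; for the detailed analysis and source code, please see the original author's blog http://chjavach.iteye.com/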

import java.util.Arrays;

import java.util.List;

/**

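* The Iterator pattern provides a way to access the elements of an aggregate object sequentially without exposing its internal representation

*

* Personally, I think that, in order not to expose the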
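A minimal sketch of the pattern as the comment describes it: the aggregate keeps its array private and only hands out an `Iterator`, so callers can traverse it (including with a for-each loop) without knowing how it is stored. `NameCollection` and `joinAll` are hypothetical names, not taken from the book's code.

```java
import java.util.Iterator;
import java.util.NoSuchElementException;

/** Hypothetical aggregate: the backing array stays private; clients only get an Iterator. */
final class NameCollection implements Iterable<String> {
    private final String[] names; // internal representation, never handed out

    NameCollection(String... names) {
        this.names = names.clone(); // defensive copy so callers cannot modify our array
    }

    @Override
    public Iterator<String> iterator() {
        return new Iterator<String>() {
            private int cursor = 0; // index of the next element to return

            @Override
            public boolean hasNext() {
                return cursor < names.length;
            }

            @Override
            public String next() {
                if (!hasNext()) {
                    throw new NoSuchElementException();
                }
                return names[cursor++];
            }
        };
    }

    /** Client code: traverses via the Iterator (for-each) without knowing the storage. */
    static String joinAll(NameCollection collection) {
        StringBuilder sb = new StringBuilder();
        for (String name : collection) {
            if (sb.length() > 0) {
                sb.append(',');
            }
            sb.append(name);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(joinAll(new NameCollection("a", "b", "c"))); // a,b,c
    }
}
```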

- Common SQL

chenjunt3

oracle, sql, C++, c, C#

--NC database creation

CREATE TABLESPACE NNC_DATA01 DATAFILE 'E:\oracle\product\10.2.0\oradata\orcl\nnc_data01.dbf' SIZE 500M AUTOEXTEND ON NEXT 50M EXTENT MANAGEMENT LOCAL UNIFORM SIZE 256K ;

CREATE TABLESPA

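- Mathematics is the language of science and technology

comsci

work, activities, domain model

From elementary school to university we study mathematics, from learning what numbers are and reciting the multiplication table in elementary school to complex analysis and discrete mathematics at university. It seems we have mastered this knowledge, yet at work we rarely use it. Why?

Recently, while studying the source code of the open-source software CARROT2, I was once again struck by the unshakable foundational role of mathematics in computing. CARROT2 is a tool for automatic language classification (clustering), written in Java; it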

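- Manually installing the rzsz package on Linux

daizj

linux, sz, rz

1. Download rzsz-3.34.tar.gz: log in to Linux and use the command

wget http://freeware.sgi.com/source/rzsz/rzsz-3.48.tar.gz to download it.

2. Extract: tar zxvf rzsz-3.34.tar.gz

3. Install: cd rzsz-3.34 ; make posix. Note: installing this software differs from installing regular GNU software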

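- Reading the source: ArrayBlockingQueue

dieslrae

java

ArrayBlockingQueue is a thread-safe queue provided by the concurrent package that stores its elements in an array. It uses `takeIndex` and `putIndex` to record the dequeue and enqueue positions respectively, so that no elements have to be moved when dequeuing.

// On dequeue or enqueue, takeIndex or putIndex is incremented; when it reaches the end of the array it starts again from 0, ensuring the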
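The wrap-around indexing described above can be sketched as a simplified bounded blocking queue: one `ReentrantLock` with `notEmpty`/`notFull` conditions, a `count`, and `takeIndex`/`putIndex` that jump back to 0 at the end of the array instead of shifting elements. This is a hypothetical teaching version (`MiniArrayBlockingQueue`), not the JDK source; it omits iterators, timeouts, and fairness options.

```java
import java.util.Objects;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

/** Hypothetical, simplified bounded blocking queue with circular indexes; not the JDK source. */
final class MiniArrayBlockingQueue<E> {
    private final Object[] items;
    private int takeIndex; // slot the next take/poll reads from
    private int putIndex;  // slot the next put/offer writes to
    private int count;     // number of stored elements
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notEmpty = lock.newCondition();
    private final Condition notFull = lock.newCondition();

    MiniArrayBlockingQueue(int capacity) {
        if (capacity <= 0) {
            throw new IllegalArgumentException("capacity must be positive");
        }
        items = new Object[capacity];
    }

    // Caller must hold the lock and have checked that the queue is not full.
    private void enqueue(E e) {
        Objects.requireNonNull(e); // null is reserved to mean "empty" for poll()
        items[putIndex] = e;
        if (++putIndex == items.length) {
            putIndex = 0; // wrap around instead of shifting elements
        }
        count++;
        notEmpty.signal();
    }

    // Caller must hold the lock and have checked that count > 0.
    @SuppressWarnings("unchecked")
    private E dequeue() {
        E e = (E) items[takeIndex];
        items[takeIndex] = null; // drop the reference for GC
        if (++takeIndex == items.length) {
            takeIndex = 0;
        }
        count--;
        notFull.signal();
        return e;
    }

    /** Blocks while the queue is full. */
    void put(E e) throws InterruptedException {
        lock.lockInterruptibly();
        try {
            while (count == items.length) {
                notFull.await();
            }
            enqueue(e);
        } finally {
            lock.unlock();
        }
    }

    /** Blocks while the queue is empty. */
    E take() throws InterruptedException {
        lock.lockInterruptibly();
        try {
            while (count == 0) {
                notEmpty.await();
            }
            return dequeue();
        } finally {
            lock.unlock();
        }
    }

    /** Non-blocking insert: returns false if the queue is full. */
    boolean offer(E e) {
        lock.lock();
        try {
            if (count == items.length) {
                return false;
            }
            enqueue(e);
            return true;
        } finally {
            lock.unlock();
        }
    }

    /** Non-blocking removal: returns null if the queue is empty. */
    E poll() {
        lock.lock();
        try {
            return count == 0 ? null : dequeue();
        } finally {
            lock.unlock();
        }
    }
}
```

With capacity 2, offering 1 and 2 fills the array; after one `poll()`, offering 3 writes into slot 0 because `putIndex` has wrapped, and later polls still return 2 and then 3 in FIFO order.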

- C language study notes 9: defining and using enumerations

dcj3sjt126com

c

Defining an enumeration

`# include <stdio.h>`

enum WeekDay

{

MonDay, TuesDay, WednesDay, ThursDay, FriDay, SaturDay, SunDay

};

int main(void)

{

//int day; //defining day as int is not appropriate

enum WeekDay day = Wedne

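- Vagrant's three network configurations explained

dcj3sjt126com

vagrant

Forwarded port

Private network

Public network

Vagrant offers three network configurations in total; below we go through the pros and cons of each.

Port forwarding (Forwarded port), as the name suggests, maps a port on the host machine to a port on the virtual machine: a request to the host port is actually forwarded to the specified port on the VM. The syntax in the Vagrantfile is: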


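- 16. Performance optimization (final part)

frank1234

performance optimization

Performance tuning is a huge undertaking. Reaching the optimum requires weighing the trade-offs, according to the application scenario, across the macro architecture (e.g. splitting, redundancy, read/write separation, clustering, caching), software design (e.g. multithreaded parallelization, choosing suitable data structures), database design (sensible table design, summary tables, indexes, partitioning, splitting, redundancy, etc.), and the micro level (software configuration, how SQL statements are written, operating system configuration, etc.), and then validating the result with real tests.

Performance is a deep subject; my experience is limited and I feel I have only scratched the surface. I think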

- Word Search

hcx2013

search

Given a 2D board and a word, find if the word exists in the grid.

The word can be constructed from letters of sequentially adjacent cell, where "adjacent" cells are those horizontally or ve
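The usual approach to this problem is depth-first search with backtracking: start from every cell that matches the first letter, step to horizontally or vertically adjacent cells that match the next letter, and temporarily mark cells as visited so none is reused. The following is a standard sketch of that technique, not the original author's solution; `WordSearch` is a hypothetical class name, and it assumes the board never contains the `'\0'` character used as the visited marker.

```java
/** Standard DFS + backtracking sketch for the Word Search problem. */
final class WordSearch {
    private WordSearch() {}

    static boolean exist(char[][] board, String word) {
        if (word.isEmpty()) {
            return true;
        }
        for (int r = 0; r < board.length; r++) {
            for (int c = 0; c < board[r].length; c++) {
                if (dfs(board, word, 0, r, c)) {
                    return true;
                }
            }
        }
        return false;
    }

    // Tries to match word[k..] starting at cell (r, c).
    private static boolean dfs(char[][] b, String w, int k, int r, int c) {
        if (r < 0 || r >= b.length || c < 0 || c >= b[r].length || b[r][c] != w.charAt(k)) {
            return false;
        }
        if (k == w.length() - 1) {
            return true; // last letter matched
        }
        char saved = b[r][c];
        b[r][c] = '\0'; // mark visited so this cell is used at most once
        boolean found = dfs(b, w, k + 1, r + 1, c) || dfs(b, w, k + 1, r - 1, c)
                || dfs(b, w, k + 1, r, c + 1) || dfs(b, w, k + 1, r, c - 1);
        b[r][c] = saved; // undo the mark (backtrack)
        return found;
    }

    public static void main(String[] args) {
        char[][] board = {"ABCE".toCharArray(), "SFCS".toCharArray(), "ADEE".toCharArray()};
        System.out.println(exist(board, "ABCCED")); // true
        System.out.println(exist(board, "ABCB"));   // false
    }
}
```

With the board `ABCE / SFCS / ADEE`, `exist(board, "ABCCED")` and `exist(board, "SEE")` return true, while `exist(board, "ABCB")` returns false because the `B` cell cannot be reused.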

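- New features in Spring 4: Web development enhancements

jinnianshilongnian

spring, spring mvc, spring4

New features in Spring 4: generic-type-qualified dependency injection

New features in Spring 4: other improvements to the core container

New features in Spring 4: Web development enhancements

New features in Spring 4: integrating Bean Validation 1.1 (JSR-349) into Spring MVC

New features in Spring 4: the Groovy Bean Definition DSL

New features in Spring 4: a better Java generics API

New features in Spring 4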

- Installing and configuring Tengine on CentOS and enabling it at boot

liuxingguome

centos

yum install gcc-c++

yum install pcre pcre-devel

yum install zlib zlib-devel

yum install openssl openssl-devel

On Ubuntu you can install them like this:

sudo aptitude install libdmalloc-dev libcurl4-opens

- Chapter 14: Utility functions (part 1)

onestopweb

functions

index.html

`<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">`

`<html xmlns="http://www.w3.org/`

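- Xcelsius 2008 and SAP BW at a glance

blueoxygen

BO, Xcelsius

Xcelsius offers a rich variety of data connection options, of which BICS is the one dedicated to SAP BW. So how do Xcelsius's various connection types compare, and how does Xcelsius connect directly to a BEx Query? The following wiki article should give a comprehensive overview.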

http://wiki.sdn.sap.com/wiki/display/BOBJ/Xcelsius+2008+and+SAP+NetWeaver+BW+Co

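- Oracle tablespaces

tongsh6

oracle

In an Oracle database, each user is assigned a default tablespace. When a tablespace runs out of space, you can either enlarge its data files or add more data files, in the following ways:

1. Add a data file to the tablespace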

ALTER TABLESPACE "表空间的名字" ADD DATAFILE

'表空间的数据文件路径' SIZE 50M;


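- .NET Framework 4.0 installation fails

yangjuanjava

.net, windows

This morning .NET Framework 4.0 kept failing to install. I looked up many answers, none of them reliable, and finally found the solution.

It is related to Windows Update: after renaming its directory and installing again, the installation succeeded!

Download: http://www.microsoft.com/en-us/download/details.aspx?id=17113

Steps:

1. Run cmd and enter net stop WuAuServ

2. Click Start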