These notes cover server monitoring with Prometheus in two parts: servers that have joined a Kubernetes cluster, and servers that have not.

I. Kubernetes monitoring

1. Create a namespace

kubectl create namespace monitor

2. Deploy Prometheus

prometheus-rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/name: prometheus
  name: prometheus
  namespace: monitor
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - nodes/proxy
  - pods
  - services
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - extensions
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- nonResourceURLs:
  - "/metrics"
  verbs:
  - get
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: monitor
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: monitor

prometheus-config-kubernetes.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: monitor
data:
  prometheus.yml: |
    global:
    scrape_configs:
    - job_name: 'kubernetes-kubelet'
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      # scrape through the API server, which proxies the request to each node
      - target_label: __address__
        replacement: 192.168.11.210:8443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics
    - job_name: 'kubernetes-cadvisor'
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: 192.168.11.210:8443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: kube-state-metrics
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      # keep only endpoints whose Service carries the kube-state-metrics label
      - source_labels: [__meta_kubernetes_service_label_app_kubernetes_io_name]
        regex: kube-state-metrics
        replacement: $1
        action: keep
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: k8s_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: k8s_sname

prometheus.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: prometheus
  name: prometheus
  namespace: monitor
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus-server
  template:
    metadata:
      labels:
        app: prometheus-server
    spec:
      serviceAccountName: prometheus
      containers:
      - name: prometheus
        image: prom/prometheus:v2.22.0
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: "/etc/prometheus"
          name: config-prometheus
      imagePullSecrets:
      - name: authllzg
      volumes:
      - name: config-prometheus
        configMap:
          name: prometheus-config
---
kind: Service
apiVersion: v1
metadata:
  name: prometheus
  namespace: monitor
spec:
  selector:
    app: prometheus-server
  ports:
  - protocol: TCP
    port: 9090
    targetPort: 9090
    name: prom
  type: ClusterIP
---
# networking.k8s.io/v1beta1 Ingress was removed in Kubernetes 1.22
apiVersion: networking.k8s.io/v1beta1
#apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: prometheus
  namespace: monitor
spec:
  rules:
  - host: test-prometheus.bsg.com
    http:
      paths:
      - path: /
        backend:
          serviceName: prometheus
          servicePort: prom

Apply each of the configuration files above to Kubernetes. Applying them one at a time makes it easier to check whether each deployment succeeded.

kubectl apply -f prometheus-rbac.yaml
kubectl apply -f prometheus-config-kubernetes.yaml
kubectl apply -f prometheus.yaml
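The relabel_configs in prometheus-config-kubernetes.yaml can be hard to read at first, so here is a minimal Python sketch of what the two `replace` rules in the kubernetes-cadvisor job do to one discovered node. It is only an emulation for illustration, not Prometheus code: the helper name `relabel_replace`, the node name `k8s-worker1`, and the initial kubelet address are assumptions. It relies on two documented Prometheus behaviours: the regex is anchored at both ends, and a rule without `regex` or `source_labels` uses `(.*)` against an empty string.

```python
import re


def relabel_replace(labels, source_labels, regex, target_label, replacement, separator=";"):
    """Emulate one Prometheus relabel rule with action `replace` (illustrative helper)."""
    # Prometheus joins the source label values with the separator (";" by default).
    value = separator.join(labels.get(name, "") for name in source_labels)
    # Prometheus anchors the regex at both ends, which re.fullmatch mirrors.
    match = re.fullmatch(regex, value)
    if match is None:
        return labels  # no match: the rule leaves the labels untouched
    # Prometheus writes capture groups as ${1}; Python's expand() expects \g<1>.
    template = re.sub(r"\$\{(\d+)\}", r"\\g<\1>", replacement)
    updated = dict(labels)
    updated[target_label] = match.expand(template)
    return updated


# Hypothetical target as it might look right after node discovery.
target = {
    "__address__": "192.168.11.212:10250",
    "__meta_kubernetes_node_name": "k8s-worker1",
}

# Rule 1: no source_labels and the default regex, so __address__ is always overwritten.
target = relabel_replace(target, [], "(.*)", "__address__", "192.168.11.210:8443")

# Rule 2: the node name is captured and inserted into the API server proxy path.
target = relabel_replace(
    target,
    ["__meta_kubernetes_node_name"],
    "(.+)",
    "__metrics_path__",
    "/api/v1/nodes/${1}/proxy/metrics/cadvisor",
)

print("https://" + target["__address__"] + target["__metrics_path__"])
```

Every node ends up with the same `__address__`, and only the node name inside `__metrics_path__` differs, which is why a single API server address is enough for both the kubelet and cAdvisor jobs.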

3.部署kube-state-metrics

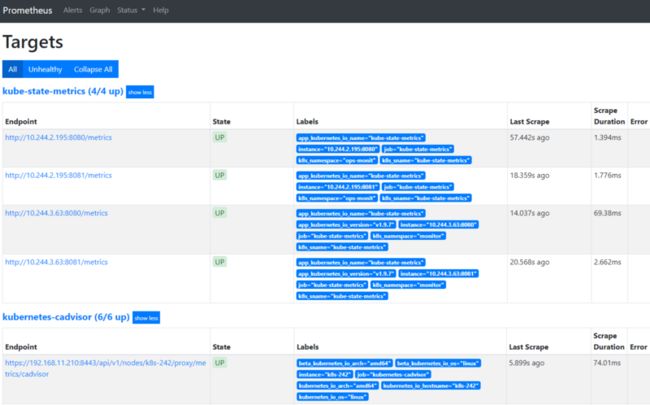

Prometheus需要能采集到cadvisor与kube-state-metrics的指标

由于cAdvisor作为kubelet内置的一部分程序可以直接使用,所以只需要部署kube-state-metrics,参考以下链接下方部分内容

https://grafana.com/grafana/d...

cluster-role-binding.yaml

cluster-role.yaml

service-account.yaml

参考链接模板

kube-state-metrics.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: v1.9.7
  name: kube-state-metrics
  namespace: monitor
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: kube-state-metrics
  template:
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: v1.9.7
    spec:
      containers:
      - image: quay.mirrors.ustc.edu.cn/coreos/kube-state-metrics:v1.9.7
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
          initialDelaySeconds: 5
          timeoutSeconds: 5
        name: kube-state-metrics
        ports:
        - containerPort: 8080
          name: http-metrics
        - containerPort: 8081
          name: telemetry
        readinessProbe:
          httpGet:
            path: /
            port: 8081
          initialDelaySeconds: 5
          timeoutSeconds: 5
      serviceAccountName: kube-state-metrics
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: v1.9.7
  name: kube-state-metrics
  namespace: monitor
  annotations:
    prometheus.io/scrape: "true" # added so that Prometheus can auto-discover this Service
spec:
  clusterIP: None
  ports:
  - name: http-metrics
    port: 8080
    targetPort: http-metrics
  - name: telemetry
    port: 8081
    targetPort: telemetry
  selector:
    app.kubernetes.io/name: kube-state-metrics

4. Deploy node-exporter

node-exporter.yaml

{
  "kind": "DaemonSet",
  "apiVersion": "apps/v1",
  "metadata": {
    "name": "node-exporter",
    "namespace": "monitor"
  },
  "spec": {
    "selector": {
      "matchLabels": {
        "daemon": "node-exporter",
        "grafanak8sapp": "true"
      }
    },
    "template": {
      "metadata": {
        "name": "node-exporter",
        "labels": {
          "daemon": "node-exporter",
          "grafanak8sapp": "true"
        }
      },
      "spec": {
        "volumes": [
          {
            "name": "proc",
            "hostPath": {
              "path": "/proc"
            }
          },
          {
            "name": "sys",
            "hostPath": {
              "path": "/sys"
            }
          }
        ],
        "containers": [
          {
            "name": "node-exporter",
            "image": "prom/node-exporter:v1.0.1",
            "args": [
              "--path.procfs=/proc_host",
              "--path.sysfs=/host_sys"
            ],
            "ports": [
              {
                "name": "node-exporter",
                "hostPort": 9100,
                "containerPort": 9100
              }
            ],
            "volumeMounts": [
              {
                "name": "sys",
                "readOnly": true,
                "mountPath": "/host_sys"
              },
              {
                "name": "proc",
                "readOnly": true,
                "mountPath": "/proc_host"
              }
            ],
            "imagePullPolicy": "IfNotPresent"
          }
        ],
        "restartPolicy": "Always",
        "hostNetwork": true,
        "hostPID": true
      }
    }
  }
}

kubectl apply -f node-exporter.yaml -o json

5. Deploy Grafana

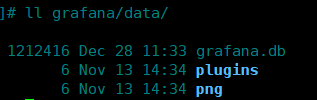

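Note: Grafana's data is stored on NFS, so set up the NFS server first, then create a StorageClass that is backed by it (the manifest below refers to it as monitor-store).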

grafana.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-pvc
  namespace: monitor
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: "40Gi"
  volumeName:
  storageClassName: monitor-store
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: grafana-server
  name: grafana-server
  namespace: monitor
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana-server
  template:
    metadata:
      labels:
        app: grafana-server
    spec:
      serviceAccountName: prometheus
      containers:
      - name: grafana
        image: grafana/grafana:7.3.4
        # IfNotPresent is an image pull policy; imagePullSecrets would name a registry Secret
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: "/var/lib/grafana"
          readOnly: false
          name: grafana-pvc
        #env:
        #- name: GF_INSTALL_PLUGINS
        #  value: "grafana-kubernetes-app"
      volumes:
      - name: grafana-pvc
        persistentVolumeClaim:
          claimName: grafana-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: grafana-server
  namespace: monitor
spec:
  selector:
    app: grafana-server
  ports:
  - protocol: TCP
    port: 3000
    name: grafana
---
# extensions/v1beta1 Ingress was removed in Kubernetes 1.22
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: grafana-server
  namespace: monitor
  #annotations:
  #  kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: test-grafana.bsg.com
    http:
      paths:
      - path: /
        backend:
          serviceName: grafana-server
          servicePort: grafana

6. Finally, configure Grafana

Add the data source.

Import the dashboard templates.

Two recommended templates (Grafana dashboard IDs):

13105 8919

Reference:

https://sre.ink/kube-state-me...
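The "add the data source" step in step 6 is normally done in the Grafana UI, but it can also be scripted against Grafana's HTTP API (`POST /api/datasources`). The sketch below only builds the request, so it can be inspected without touching a live server. Several values are assumptions rather than part of the original setup: the function name `build_datasource_request`, plain-HTTP access to the Ingress host `test-grafana.bsg.com`, an API key created beforehand in Grafana, and the in-cluster DNS name `prometheus.monitor.svc` for the Prometheus Service.

```python
import json
import urllib.request


def build_datasource_request(grafana_url, prometheus_url, api_key):
    """Build (but do not send) a request that registers Prometheus as a data source."""
    payload = {
        "name": "Prometheus",
        "type": "prometheus",
        "url": prometheus_url,
        "access": "proxy",   # Grafana's backend queries Prometheus on the user's behalf
        "isDefault": True,
    }
    return urllib.request.Request(
        url=grafana_url.rstrip("/") + "/api/datasources",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer " + api_key,
        },
        method="POST",
    )


# All three arguments are placeholders for illustration.
request = build_datasource_request(
    "http://test-grafana.bsg.com",
    "http://prometheus.monitor.svc:9090",
    "REPLACE_WITH_API_KEY",
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))

# To actually create the data source, send it:
# urllib.request.urlopen(request)
```

Because Grafana and Prometheus run in the same cluster here, pointing the data source at the Service name avoids a round trip through the Ingress.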

II. Server monitoring and alerting

1.docker-compose

docker-compose.yml

version: '3.1'
services:
  grafana:
    image: grafana/grafana:6.7.4
    restart: on-failure
    container_name: grafana
    environment:
      - GF_SERVER_ROOT_URL=http://192.168.11.229:3000
    volumes:
      - ./grafana/data:/var/lib/grafana:rw
    ports:
      - "3000:3000"
    user: "root"
  prometheus:
    image: prom/prometheus:v2.22.0
    restart: on-failure
    container_name: prometheus
    volumes:
      - ./prometheus/prometheus.yml:/etc/prometheus/prometheus.yml
      - ./prometheus/alert-rules.yml:/etc/prometheus/alert-rules.yml
      - ./prometheus/data:/prometheus:rw
    ports:
      - "9090:9090"
    user: "root"
    depends_on:
      - alertmanager
  alertmanager:
    image: prom/alertmanager:latest
    restart: on-failure
    container_name: alertmanager
    volumes:
      - ./alertmanager/alertmanager.yml:/etc/alertmanager/alertmanager.yml
    ports:
      - "9093:9093"
      - "9094:9094"
    depends_on:
      - dingtalk
  dingtalk:
    image: timonwong/prometheus-webhook-dingtalk:latest
    restart: on-failure
    container_name: dingtalk
    volumes:
      - ./alertmanager/config.yml:/etc/prometheus-webhook-dingtalk/config.yml
    ports:
      - "8060:8060"

2. alertmanager

alertmanager.yml

global:
  resolve_timeout: 5m
  smtp_smarthost: 'smtp.exmail.qq.com:465' # SMTP server of the mail provider; with SSL enabled the port is usually 465
  smtp_from: 'yc@bsg.com' # sender address
  smtp_auth_username: 'yc@bsg.com' # mailbox account
  smtp_auth_password: 'password' # mailbox password or authorization code
  smtp_require_tls: false
route:
  receiver: 'default'
  group_wait: 10s
  group_interval: 1m
  repeat_interval: 1h
  group_by: ['alertname']
inhibit_rules:
# a critical alert suppresses the warning with the same alertname and instance
- source_match:
    severity: 'critical'
  target_match:
    severity: 'warning'
  equal: ['alertname', 'instance']
receivers:
- name: 'default'
  email_configs:
  - to: 'yc@bsg.com'
    send_resolved: true
  webhook_configs:
  - url: 'http://192.168.11.229:8060/dingtalk/webhook/send'
    send_resolved: true

config.yml

targets:
  webhook:
    url: https://oapi.dingtalk.com/robot/send?access_token=xxxx # replace with the webhook URL of your DingTalk robot
    mention:
      all: true

4. prometheus

alert-rules.yml

groups:
- name: node-alert
  rules:
  - alert: NodeDown
    expr: up{job="node"} == 0
    for: 5m
    labels:
      severity: critical
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Instance has been down for 5 minutes"
      description: "instance: {{ $labels.instance }} down"
      value: "{{ $value }}"
  - alert: NodeCpuHigh
    expr: (1 - avg by (instance) (irate(node_cpu_seconds_total{job="node",mode="idle"}[5m]))) * 100 > 80
    for: 5m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "CPU usage is above 80%"
      description: "instance: {{ $labels.instance }} CPU usage is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeCpuIowaitHigh
    expr: avg by (instance) (irate(node_cpu_seconds_total{job="node",mode="iowait"}[5m])) * 100 > 50
    for: 5m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "CPU iowait is above 50%"
      description: "instance: {{ $labels.instance }} CPU iowait is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeLoad5High
    # on (instance) is required: node_load5 also carries the job label, the count does not
    expr: node_load5{job="node"} > on (instance) (count by (instance) (node_cpu_seconds_total{job="node",mode="system"})) * 1.2
    for: 5m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Load (5m) is above 1.2 times the number of CPU cores"
      description: "instance: {{ $labels.instance }} load (5m) is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeMemoryHigh
    expr: (1 - node_memory_MemAvailable_bytes{job="node"} / node_memory_MemTotal_bytes{job="node"}) * 100 > 90
    for: 5m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Memory usage is above 90%"
      description: "instance: {{ $labels.instance }} memory usage is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeDiskRootHigh
    # the expression takes the highest usage across all ext*/xfs filesystems of the instance
    expr: max((1 - node_filesystem_avail_bytes{job="node",fstype=~"ext.?|xfs"} / node_filesystem_size_bytes{job="node",fstype=~"ext.?|xfs"}) * 100)by(instance) > 85
    for: 5m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Disk (/ partition) usage is above 85%"
      description: "instance: {{ $labels.instance }} disk (/ partition) usage is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeDiskBootHigh
    expr: (1 - node_filesystem_avail_bytes{job="node",fstype=~"ext.*|xfs",mountpoint ="/boot"} / node_filesystem_size_bytes{job="node",fstype=~"ext.*|xfs",mountpoint ="/boot"}) * 100 > 80
    for: 5m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Disk (/boot partition) usage is above 80%"
      description: "instance: {{ $labels.instance }} disk (/boot partition) usage is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeDiskReadHigh
    expr: irate(node_disk_read_bytes_total{job="node"}[5m]) > 20 * (1024 ^ 2)
    for: 5m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Disk read throughput is above 20 MB/s"
      description: "instance: {{ $labels.instance }} disk read throughput is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeDiskWriteHigh
    expr: irate(node_disk_written_bytes_total{job="node"}[5m]) > 20 * (1024 ^ 2)
    for: 5m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Disk write throughput is above 20 MB/s"
      description: "instance: {{ $labels.instance }} disk write throughput is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeDiskReadRateCountHigh
    expr: irate(node_disk_reads_completed_total{job="node"}[2m]) > 3000
    for: 2m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Disk read rate is above 3000 IOPS"
      description: "instance: {{ $labels.instance }} disk read IOPS is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeDiskWriteRateCountHigh
    expr: irate(node_disk_writes_completed_total{job="node"}[5m]) > 3000
    for: 5m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Disk write rate is above 3000 IOPS"
      description: "instance: {{ $labels.instance }} disk write IOPS is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeInodeRootUsedPercentHigh
    expr: (1 - node_filesystem_files_free{job="node",fstype=~"ext4|xfs",mountpoint="/"} / node_filesystem_files{job="node",fstype=~"ext4|xfs",mountpoint="/"}) * 100 > 80
    for: 10m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Disk (/ partition) inode usage is above 80%"
      description: "instance: {{ $labels.instance }} disk (/ partition) inode usage is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeFilefdAllocatedPercentHigh
    expr: node_filefd_allocated{job="node"} / node_filefd_maximum{job="node"} * 100 > 80
    for: 10m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Allocated file descriptors are above 80% of the maximum"
      description: "instance: {{ $labels.instance }} file descriptor usage is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeNetworkNetinBitRateHigh
    expr: avg by (instance) (irate(node_network_receive_bytes_total{device=~"eth0|eth1|ens160|ens192|enp3s0"}[1m]) * 8) > 10 * (1024 ^ 2) * 8
    for: 3m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Network receive rate is above 10 MB/s"
      description: "instance: {{ $labels.instance }} network receive bit rate is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeNetworkNetoutBitRateHigh
    expr: avg by (instance) (irate(node_network_transmit_bytes_total{device=~"eth0|eth1|ens160|ens192|enp3s0"}[1m]) * 8) > 10 * (1024 ^ 2) * 8
    for: 3m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Network transmit rate is above 10 MB/s"
      description: "instance: {{ $labels.instance }} network transmit bit rate is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeNetworkNetinPacketErrorRateHigh
    expr: avg by (instance) (irate(node_network_receive_errs_total{device=~"eth0|eth1|ens160|ens192|enp3s0"}[1m])) > 15
    for: 3m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Network receive errors are above 15 packets/s"
      description: "instance: {{ $labels.instance }} receive error rate is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeNetworkNetoutPacketErrorRateHigh
    expr: avg by (instance) (irate(node_network_transmit_errs_total{device=~"eth0|eth1|ens160|ens192|enp3s0"}[1m])) > 15
    for: 3m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Network transmit errors are above 15 packets/s"
      description: "instance: {{ $labels.instance }} transmit error rate is too high (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeProcessBlockedHigh
    expr: node_procs_blocked{job="node"} > 10
    for: 10m
    labels:
      severity: warning
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "More than 10 processes are currently blocked"
      description: "instance: {{ $labels.instance }} has too many blocked processes (current value is {{ $value }})"
      value: "{{ $value }}"
  - alert: NodeTimeOffsetHigh
    expr: abs(node_timex_offset_seconds{job="node"}) > 3 * 60
    for: 2m
    labels:
      severity: info
      instance: "{{ $labels.instance }}"
    annotations:
      summary: "Node clock offset is above 3 minutes"
      description: "instance: {{ $labels.instance }} clock offset is too large (current value is {{ $value }})"
      value: "{{ $value }}"

prometheus.yml

# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
  - static_configs:
    - targets:
      - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"
  - "*rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: 'prometheus'
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
    - targets: ['localhost:9090']

  # add-2020/11/12
  - job_name: 'node'
    static_configs:
    - targets: ['192.168.11.235:9100'] #devops01
    - targets: ['192.168.11.237:9100'] #devops02
    - targets: ['192.168.11.236:9100'] #samba
    - targets: ['192.168.11.219:9100'] #middle01
    - targets: ['192.168.11.242:9100'] #k8s-master1
    - targets: ['192.168.11.212:9100'] #k8s-worker1
    - targets: ['192.168.11.213:9100'] #k8s-worker2
    - targets: ['192.168.11.214:9100'] #k8s-worker3
    - targets: ['192.168.11.223:9100'] #k8s-worker4

  - job_name: 'alertmanager'
    static_configs:
    - targets: ['192.168.11.229:9093']

  - job_name: 'kube-state-metrics'
    static_configs:
    - targets: ['192.168.11.242:30808']

This completes the server monitoring and alerting setup.
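Several thresholds in alert-rules.yml are written as arithmetic (`10 * (1024 ^ 2) * 8`, `20 * (1024 ^ 2)`, cores `* 1.2`), which makes the actual limits easy to misread. The following Python sketch works the same numbers out. The function names and the sample inputs are illustrative assumptions; only the formulas come from the rules.

```python
MIB = 1024 ** 2  # the rules use 1024 ^ 2, i.e. binary megabytes


def network_threshold_bits(mib_per_second=10):
    """Bits per second behind `10 * (1024 ^ 2) * 8` in the network rules."""
    return mib_per_second * MIB * 8


def disk_threshold_bytes(mib_per_second=20):
    """Bytes per second behind `20 * (1024 ^ 2)` in the disk throughput rules."""
    return mib_per_second * MIB


def load5_exceeds(load5, cpu_cores, factor=1.2):
    """NodeLoad5High condition: the 5-minute load is above factor times the core count."""
    return load5 > cpu_cores * factor


def cpu_usage_percent(idle_fractions):
    """NodeCpuHigh formula: (1 - average idle fraction across cores) * 100."""
    return (1 - sum(idle_fractions) / len(idle_fractions)) * 100


print(network_threshold_bits())   # 83886080 bits/s
print(disk_threshold_bytes())     # 20971520 bytes/s
print(load5_exceeds(5.0, 4))      # True: 5.0 is above 4 * 1.2
# Hypothetical 4-core node with two cores 25% idle and two fully busy.
print(cpu_usage_percent([0.25, 0.25, 0.0, 0.0]))  # 87.5, above the 80% threshold
```

Both sides of the network comparison are multiplied by 8, so that rule is equivalent to comparing the byte rate against 10 MiB/s directly.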