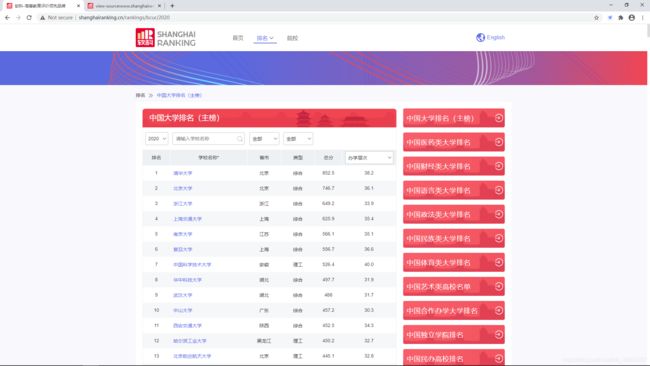

Scraping the ShanghaiRanking (软科) Best Chinese Universities Ranking from Shanghai Jiao Tong University

Link: source code download

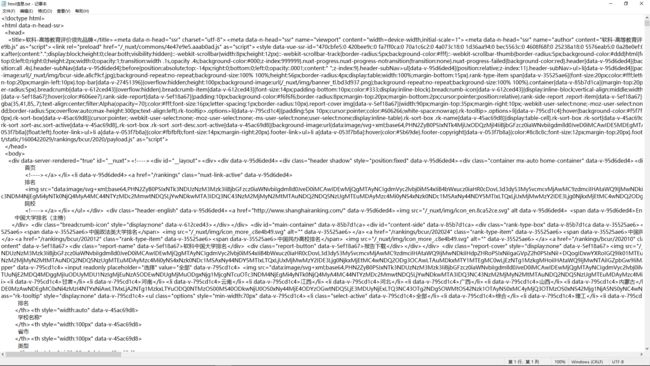

The code is shown below:

    # Scrape the ShanghaiRanking (软科) Best Chinese Universities Ranking
    import requests
    from bs4 import BeautifulSoup

    if __name__ == "__main__":
        destinationPath = "html信息.txt"
        allUniv = []
        # headers = {'User-Agent': 'Mozilla/5.0'}
        url = 'http://www.shanghairanking.cn/rankings/bcur/2020'
        # url = 'http://www.zuihaodaxue.cn/zuihaodaxuepaiming2016.html'
        try:
            # r = requests.get(url=url, headers=headers, timeout=30)
            r = requests.get(url=url, timeout=30)
            r.raise_for_status()
            r.encoding = 'utf-8'
            html = r.text
        except requests.RequestException:
            # Fall back to an empty page if the request fails
            html = ""

        # Note: fd = open(destinationPath, "w+") without an explicit encoding raised
        # UnicodeEncodeError: 'gbk' codec can't encode character '\xa9' in position 0: illegal multibyte sequence
        with open(destinationPath, "w+", encoding='utf-8') as fd:
            fd.write(html)
        # print(html)  # The output is truncated in VS Code, so the page is saved to a file instead

        soup = BeautifulSoup(html, "html.parser")
        # fillUnivList(soup)
        # printUnivList(10)

        # Column headers: rank, university name, province/city, type, total score.
        # chr(12288) is the full-width space, used as the fill character so the Chinese columns line up.
        header = "{0:{5}<10}{1:{5}^10}{2:{5}^12}{3:{5}^10}{4:{5}^10}".format("排名", "学校名称", "省市", "类型", "总分", chr(12288))
        print(header)

        data = soup.find_all('tr')
        for tr in data:  # Each row corresponds to one university
            ltd = tr.find_all('td')
            if len(ltd) == 0:  # Skip rows without <td> cells, such as the header row
                continue
            singleUniv = []
            # UniName = tr.find('a')
            # singleUniv = [UniName.string]
            for td in ltd:
                link = td.find("a")
                cell = link if link is not None else td
                # get_text() avoids the None that .string returns for cells with nested tags
                text = cell.get_text(strip=True)
                if text:
                    singleUniv.append(text)
            allUniv.append(singleUniv)
            # print(singleUniv)

        # num = len(allUniv)
        num = 30
        for u in allUniv[:num]:  # Slicing avoids an IndexError when fewer than num rows were parsed
            if len(u) < 5:  # Skip incomplete rows
                continue
            print("{0:<10}{1:{5}^20}{2:{5}<10}{3:{5}^4}{4:^33}".format(u[0], u[1], u[2], u[3], u[4], chr(12288)))
        print(header)
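The format strings in the code pass `chr(12288)` as the fill character. The sketch below isolates that trick to show why it helps: `chr(12288)` is U+3000, the full-width (ideographic) space, which in most monospaced CJK fonts occupies the same width as a Chinese character, whereas the default ASCII space is half as wide. The helper name `pad_center` and the sample values are hypothetical and only serve as illustration.

```python
FULLWIDTH_SPACE = chr(12288)  # U+3000, the full-width (ideographic) space


def pad_center(text, width, fill=FULLWIDTH_SPACE):
    """Center text in a field of `width` characters using `fill` as padding.

    Hypothetical helper: the fill character and the width are passed as
    nested replacement fields inside the format specification.
    """
    return "{0:{1}^{2}}".format(text, fill, width)


# Same field width, different fill characters.
ascii_padded = pad_center("清华大学", 10, " ")
fullwidth_padded = pad_center("清华大学", 10)

# Both strings have 10 characters, but only the second one is padded with
# characters that are as wide as the Chinese text on screen.
print(repr(ascii_padded))
print(repr(fullwidth_padded))
```

Both results contain the same number of characters. Only the rendered width differs, which is why columns of Chinese text padded with ASCII spaces tend to drift out of alignment in a terminal.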

Output: