(6) Elasticsearch Hands-On: Building a Production Log Collection System with ELK plus Kafka (Final Version)

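Preface:

The previous post, on setting up a single-instance Kafka for this production ELK log collection system, got Kafka running on the server.

Now it is time to integrate Kafka from the application code. In the earlier post on Logstash, which completed the basic ELK setup, I described a simple ELK log system that collects logs from the log files on each server. That works, but once the number of servers grows it becomes very tedious to maintain. So this time the logs are captured at the source, in the application, and collected through Kafka combined with Logstash. No matter how many servers the modules run on, a single machine running ELK plus Kafka is enough to collect all the logs, which gives truly global log management.

Our project is built on Spring Boot and Dubbo, and this post brings Kafka into it. The approach is outlined below.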

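Approach:

First integrate Kafka. The Java code acts as the producer and emits the logs, while Logstash acts as the consumer: it listens on the topics and forwards every message it receives to Elasticsearch, and Kibana then displays the logs.

The project has three kinds of logs: request and response logs of the HTTP API, request and response logs of the Dubbo layer, and global exception logs.

Because the project uses Spring Boot, API requests and responses are intercepted with AOP, the Dubbo logs are captured with global Dubbo filters, and exceptions are captured by a global exception handler.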

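Getting started:

Step 1: Integrate Kafka into Spring Boot.

1. Add the Maven dependency below. I am using Spring Boot 2.1.2.RELEASE, so no version is specified and the default managed by Spring Boot, spring-kafka 2.2.3.RELEASE, is pulled in.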

<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
</dependency>

2. Add the Kafka configuration as follows. It also contains the Elasticsearch settings; the Elasticsearch integration itself is not covered here.

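spring:
  data:
    elasticsearch:
      # cluster name
      cluster-name: duduyl-serach
      # node address
      cluster-nodes: ip:9300
  # avoids errors from the Elasticsearch health check
  elasticsearch:
    rest:
      uris: ["http://ip:9200"]
  kafka:
    bootstrap-servers: ip:9092

# Local IP address and Kafka topics. Index names must not contain uppercase letters,
# and Logstash uses the topic name as the index name, so the topics are lowercase too.
localhost:
  ip: localhost # default IP address
  topic: devlog # Kafka topic for API-layer requests
  backTopic: devbacklog # Kafka topic for Dubbo-layer requests

3. The Kafka producer Java code. Since the listener is Logstash, there is no consumer code on the Java side; how Logstash listens is covered further below.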

package medicalshare.framework.kafka;

import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.support.SendResult;
import org.springframework.util.concurrent.ListenableFuture;
import org.springframework.util.concurrent.ListenableFutureCallback;

/**
 * Producer. This class lives in a utility module, so other modules can only use it
 * once it is registered as a Spring bean explicitly: those packages are not
 * component-scanned, and @Component is only picked up in the package of the main
 * application class and its sub-packages.
 */
@Slf4j
public class KafkaSender {

    @Autowired
    private KafkaTemplate<String, Object> kafkaTemplate;

    /**
     * Sends a message to Kafka.
     *
     * @param topic target topic
     * @param obj   message body, already serialized to a string
     */
    public void send(String topic, String obj) {
        log.info("------------ message = {}", obj);
        // Send asynchronously and log the outcome in the callback
        ListenableFuture<SendResult<String, Object>> future = kafkaTemplate.send(topic, obj);
        future.addCallback(new ListenableFutureCallback<SendResult<String, Object>>() {
            @Override
            public void onFailure(Throwable throwable) {
                log.error("Produce: The message failed to be sent: " + throwable.getMessage());
            }

            @Override
            public void onSuccess(SendResult<String, Object> stringObjectSendResult) {
                // TODO business handling
                log.info("Produce: The message was sent successfully:");
                log.info("Produce: _+_+_+_+_+_+_+ result: " + stringObjectSendResult.toString());
            }
        });
    }
}

Step 2: Use AOP and filters in Spring Boot to capture request logs, Dubbo logs, and global exception logs.

1. An AOP aspect intercepts every request to the controller layer and publishes it to the Kafka topic through the send method of KafkaSender.

package medicalshare.framework.aop;

import com.alibaba.fastjson.JSONObject;
import lombok.extern.slf4j.Slf4j;
import medicalshare.framework.kafka.KafkaSender;
import medicalshare.framework.vo.user.ElkRequestVo;
import medicalshare.framework.vo.user.ElkResponseVo;
import org.apache.commons.lang3.StringUtils;
import org.aspectj.lang.JoinPoint;
import org.aspectj.lang.annotation.AfterReturning;
import org.aspectj.lang.annotation.Aspect;
import org.aspectj.lang.annotation.Before;
import org.aspectj.lang.reflect.MethodSignature;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.web.context.request.RequestContextHolder;
import org.springframework.web.context.request.ServletRequestAttributes;
import org.springframework.web.multipart.MultipartFile;

import javax.servlet.ServletRequest;
import javax.servlet.ServletResponse;
import javax.servlet.http.HttpServletRequest;
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.text.SimpleDateFormat;
import java.util.*;

/**
 * Intercepts requests and responses and ships them to ELK as logs. For the aspect to
 * take effect in framework-web it has to be registered in the Spring container;
 * annotating it with @Component only works when the class sits in the package of the
 * main class or below (learned the hard way).
 */
@Aspect
@Slf4j
public class AopLogAspect {

    @Value("${localhost.topic}")
    private String topic;

    @Autowired
    private KafkaSender kafkaSender;

    @Value("${localhost.ip}")
    public String LOCAL_IP; // local IP address

    public String DEFAULT_IP = "0:0:0:0:0:0:0:1"; // default IP address (IPv6 loopback)

    // Logs the request before the controller method runs
    @Before(value = "execution(* medicalshare.*.controller.*.*(..))")
    public void methodBefore(JoinPoint joinPoint) {
        ServletRequestAttributes requestAttributes = (ServletRequestAttributes) RequestContextHolder
                .getRequestAttributes();
        HttpServletRequest request = requestAttributes.getRequest();
        SimpleDateFormat df = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss"); // timestamp format
        Object[] args = joinPoint.getArgs();
        HashMap<String, Object> map = new HashMap<>();
        log.debug("controller arguments: {}", Arrays.toString(args));
        // In the two arrays below, argument values and argument names line up by index.
        String[] argNames = ((MethodSignature) joinPoint.getSignature()).getParameterNames(); // argument names
        for (int i = 0; i < args.length; i++) {
            if (args[i] instanceof ServletRequest || args[i] instanceof ServletResponse) {
                // ServletRequest cannot be serialized; exclude it, otherwise: java.lang.IllegalStateException:
                // It is illegal to call this method if the current request is not in asynchronous mode
                // (i.e. isAsyncStarted() returns false)
                // ServletResponse cannot be serialized either; exclude it, otherwise:
                // java.lang.IllegalStateException: getOutputStream() has already been called for this response
                continue;
            } else if (args[i] instanceof MultipartFile) {
                // For uploads, log only the original file name instead of the content
                MultipartFile arg = (MultipartFile) args[i];
                map.put(arg.getName(), arg.getOriginalFilename());
            } else {
                map.put(argNames[i], args[i]);
            }
        }
        ElkRequestVo elkRequestVo = new ElkRequestVo();
        if (!map.isEmpty()) {
            elkRequestVo.setRequest_args(map);
        }
        log.debug("logged arguments: {}", map);
        elkRequestVo.setRequest_time(df.format(new Date()));
        elkRequestVo.setRequest_url(request.getRequestURL().toString());
        elkRequestVo.setRequest_method(request.getMethod());
        elkRequestVo.setRequest_ip(getRealIpAddress(request));
        kafkaSender.send(topic, JSONObject.toJSONString(elkRequestVo));
    }

    // Logs the return value after the controller method has completed normally
    @AfterReturning(returning = "o", pointcut = "execution(* medicalshare.*.controller.*.*(..))")
    public void methodAfterReturing(Object o) {
        SimpleDateFormat df = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss"); // timestamp format
        ElkResponseVo elkResponseVo = new ElkResponseVo();
        elkResponseVo.setResponse_Data(o);
        elkResponseVo.setResponseTime(df.format(new Date()));
        kafkaSender.send(topic, JSONObject.toJSONString(elkResponseVo));
    }

    /**
     * Resolves the client IP address, looking at common proxy headers first.
     *
     * @param request current HTTP request
     * @return client IP address
     */
    public String getRealIpAddress(HttpServletRequest request) {
        String ip = request.getHeader("x-forwarded-for"); // Squid proxy
        if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {
            ip = request.getHeader("Proxy-Client-IP"); // Apache proxy
        }
        if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {
            ip = request.getHeader("WL-Proxy-Client-IP"); // WebLogic proxy
        }
        if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {
            ip = request.getHeader("HTTP_CLIENT_IP"); // some other proxies
        }
        if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {
            ip = request.getHeader("X-Real-IP"); // nginx proxy
        }
        // If no header supplied an address, fall back to request.getRemoteAddr()
        if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {
            ip = request.getRemoteAddr();
            if (StringUtils.equals(ip, LOCAL_IP) || StringUtils.equals(ip, DEFAULT_IP)) {
                // Local request: use the address configured on this host's network interface
                try {
                    ip = InetAddress.getLocalHost().getHostAddress();
                } catch (UnknownHostException e) {
                    // Keep the remote address; the original code would have hit a NullPointerException here
                    log.error("InetAddress getLocalHost error In HttpUtils getRealIpAddress: ", e);
                }
            }
        }
        // With several proxies the header holds a ','-separated list and the first entry is the client IP.
        // The original only split values longer than 15 characters, which missed short lists
        // such as "1.1.1.1,2.2.2.2".
        if (!StringUtils.isEmpty(ip) && ip.indexOf(",") > 0) {
            ip = ip.substring(0, ip.indexOf(",")).trim();
        }
        return ip;
    }
}
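The comma handling at the end of getRealIpAddress is easy to check on its own. Below is a minimal sketch, assuming a hypothetical helper class ForwardedForParser that is not part of the project. It splits on the first comma regardless of the value's length, so a short list such as 1.1.1.1,2.2.2.2 (15 characters) is handled too.

```java
public class ForwardedForParser {

    /**
     * Returns the first address of a comma-separated proxy header value,
     * or null when the value is missing, blank, or "unknown".
     */
    static String firstAddress(String headerValue) {
        if (headerValue == null) {
            return null;
        }
        String trimmed = headerValue.trim();
        if (trimmed.isEmpty() || "unknown".equalsIgnoreCase(trimmed)) {
            return null;
        }
        // With several proxies the client address comes first, the proxies after it.
        int comma = trimmed.indexOf(',');
        String first = comma >= 0 ? trimmed.substring(0, comma) : trimmed;
        return first.trim();
    }

    public static void main(String[] args) {
        System.out.println(firstAddress("203.0.113.7, 10.0.0.1, 10.0.0.2"));
        System.out.println(firstAddress("1.1.1.1,2.2.2.2"));
        System.out.println(firstAddress("unknown"));
    }
}
```

Keep in mind that these headers are set by the client or by proxies and can be forged, so the logged address is only as trustworthy as the proxy chain in front of the application.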

2. Dubbo filters capture the provider-side and consumer-side logs of the Dubbo layer.

package medicalshare.framework.dubbo;

import medicalshare.framework.kafka.KafkaSender;
import org.apache.commons.lang3.StringUtils;
import org.slf4j.MDC;
import com.alibaba.dubbo.rpc.Filter;
import com.alibaba.dubbo.rpc.Invocation;
import com.alibaba.dubbo.rpc.Invoker;
import com.alibaba.dubbo.rpc.Result;
import com.alibaba.dubbo.rpc.RpcException;
import com.alibaba.fastjson.JSONObject;
import lombok.extern.slf4j.Slf4j;
import medicalshare.framework.utils.FrameworkConstants;
import medicalshare.framework.utils.RandomGenerator;
import medicalshare.framework.utils.SpringContextUtils;

import java.util.HashMap;

@Slf4j
public class DubboLogProviderFilter implements Filter {

    // @Autowired
    // private KafkaSender kafkaSender;
    // Injection yields null here because the filter instance is not managed by Spring.
    // Workaround: look the bean up through the ApplicationContext.

    // @Value("${localhost.backtopic}")
    // private String backtopic; // cannot be injected either

    @Override
    public Result invoke(Invoker<?> invoker, Invocation invocation) throws RpcException {
        // Reuse the trace id passed by the consumer, or create one
        String traceid = invocation.getAttachment(FrameworkConstants.LOG_TRACEID);
        if (StringUtils.isBlank(traceid)) {
            traceid = RandomGenerator.randomString(12);
        }
        MDC.put(FrameworkConstants.LOG_TRACEID, traceid);
        String className = invoker.getInterface().getName();
        String methodName = invocation.getMethodName();
        long startTimeMillis = 0;
        HashMap<String, Object> hashMap1 = new HashMap<>();
        if (log.isInfoEnabled()) {
            startTimeMillis = System.currentTimeMillis();
            if ("true".equals(SpringContextUtils.getProperty("application.dubboLogDetail"))) {
                log.info("[dubbo-service-start][{}.{}][arguments={}]", className, methodName,
                        JSONObject.toJSONString(invocation.getArguments()));
                // The original stored the arguments under the methodName key, which was overwritten below
                hashMap1.put("dubbo-service-start-arguments", invocation.getArguments());
            } else {
                log.info("[dubbo-service-start][{}.{}]", className, methodName);
            }
            hashMap1.put("dubbo-service-start-className", className);
            hashMap1.put("dubbo-service-start-methodName", methodName);
        }
        Result result = invoker.invoke(invocation);
        HashMap<String, Object> hashMap2 = new HashMap<>();
        if (log.isInfoEnabled()) {
            if (result.hasException()) {
                log.error("[dubbo-service-end][{}.{}][exception]", className, methodName, result.getException());
                hashMap2.put("dubbo-service-end-exception", result.getException());
            } else {
                long execTimeMillis = System.currentTimeMillis() - startTimeMillis;
                if ("true".equals(SpringContextUtils.getProperty("application.dubboLogDetail"))) {
                    log.info("[dubbo-service-end][{}.{}(execTime={})][result={}]", className, methodName,
                            execTimeMillis, JSONObject.toJSONString(result.getValue()));
                    hashMap2.put("dubbo-service-end-result", result.getValue());
                } else {
                    log.info("[dubbo-service-end][{}.{}(execTime={})]", className, methodName, execTimeMillis);
                }
                hashMap2.put("dubbo-service-end-execTimeMillis", execTimeMillis);
            }
            hashMap2.put("dubbo-service-end-className", className);
            hashMap2.put("dubbo-service-end-methodName", methodName);
        }
        // Combine the start and end records into one message and publish it
        HashMap<String, Object> hashMap = new HashMap<>();
        hashMap.put("dubbo-service-start", hashMap1);
        hashMap.put("dubbo-service-end", hashMap2);
        KafkaSender kafkaSender = SpringContextUtils.getBean(KafkaSender.class);
        String backtopic = SpringContextUtils.getProperty("localhost.backtopic");
        kafkaSender.send(backtopic, JSONObject.toJSONString(hashMap));
        return result;
    }
}

package medicalshare.framework.dubbo;

import medicalshare.framework.kafka.KafkaSender;
import org.apache.commons.lang3.StringUtils;
import org.slf4j.MDC;
import com.alibaba.dubbo.rpc.Filter;
import com.alibaba.dubbo.rpc.Invocation;
import com.alibaba.dubbo.rpc.Invoker;
import com.alibaba.dubbo.rpc.Result;
import com.alibaba.dubbo.rpc.RpcException;
import com.alibaba.dubbo.rpc.RpcInvocation;
import com.alibaba.fastjson.JSONObject;
import lombok.extern.slf4j.Slf4j;
import medicalshare.framework.utils.FrameworkConstants;
import medicalshare.framework.utils.RandomGenerator;
import medicalshare.framework.utils.SpringContextUtils;

import java.util.HashMap;

@Slf4j
public class DubboLogConsumerFilter implements Filter {

    @Override
    public Result invoke(Invoker<?> invoker, Invocation invocation) throws RpcException {
        RpcInvocation rpcInvocation = (RpcInvocation) invocation;
        // Take the trace id from the logging context, or create one, and pass it on to the provider
        String traceid = MDC.get(FrameworkConstants.LOG_TRACEID);
        if (StringUtils.isBlank(traceid)) {
            traceid = RandomGenerator.randomString(12);
            MDC.put(FrameworkConstants.LOG_TRACEID, traceid);
        }
        rpcInvocation.setAttachment(FrameworkConstants.LOG_TRACEID, traceid);
        String className = invoker.getInterface().getName();
        String methodName = invocation.getMethodName();
        HashMap<String, Object> hashMap1 = new HashMap<>();
        if (log.isInfoEnabled()) {
            if ("true".equals(SpringContextUtils.getProperty("application.dubboLogDetail"))) {
                log.info("[dubbo-referer-start][{}.{}][arguments={}]", className, methodName,
                        JSONObject.toJSONString(invocation.getArguments()));
                // The original stored the arguments under the methodName key, which was overwritten below
                hashMap1.put("dubbo-service-start-arguments", invocation.getArguments());
            } else {
                log.info("[dubbo-referer-start][{}.{}]", className, methodName);
            }
            hashMap1.put("dubbo-service-start-className", className);
            hashMap1.put("dubbo-service-start-methodName", methodName);
        }
        HashMap<String, Object> hashMap2 = new HashMap<>();
        Result result = invoker.invoke(invocation);
        if (log.isInfoEnabled()) {
            if (result.hasException()) {
                log.error("[dubbo-referer-end][{}.{}][exception]", className, methodName, result.getException());
                hashMap2.put("dubbo-service-end-exception", result.getException());
            } else {
                if ("true".equals(SpringContextUtils.getProperty("application.dubboLogDetail"))) {
                    log.info("[dubbo-referer-end][{}.{}][result={}]", className, methodName,
                            JSONObject.toJSONString(result.getValue()));
                    hashMap2.put("dubbo-service-end-result", result.getValue());
                } else {
                    log.info("[dubbo-referer-end][{}.{}]", className, methodName);
                }
            }
            hashMap2.put("dubbo-service-end-className", className);
            hashMap2.put("dubbo-service-end-methodName", methodName);
        }
        // Combine the start and end records into one message and publish it
        HashMap<String, Object> hashMap = new HashMap<>();
        hashMap.put("dubbo-service-start", hashMap1);
        hashMap.put("dubbo-service-end", hashMap2);
        KafkaSender kafkaSender = SpringContextUtils.getBean(KafkaSender.class);
        String backtopic = SpringContextUtils.getProperty("localhost.backtopic");
        kafkaSender.send(backtopic, JSONObject.toJSONString(hashMap));
        return result;
    }
}

3. A global exception resolver publishes the exception logs.

package medicalshare.allweb.resolver;

import java.io.PrintWriter;
import java.io.StringWriter;
import java.util.HashMap;
import java.util.Map;

import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;

import com.alibaba.fastjson.JSONObject;
import medicalshare.framework.kafka.KafkaSender;
import org.slf4j.MDC;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.http.HttpStatus;
import org.springframework.stereotype.Component;
import org.springframework.web.servlet.HandlerExceptionResolver;
import org.springframework.web.servlet.ModelAndView;
import org.springframework.web.servlet.view.json.MappingJackson2JsonView;
import lombok.extern.slf4j.Slf4j;
import medicalshare.framework.exception.BusinessException;
import medicalshare.framework.utils.RedisUtils;

@Slf4j
@Component
public class AllWebExceptionResolver implements HandlerExceptionResolver {

    @Value("${application.showExceptionStackTrace:false}")
    private boolean showExceptionStackTrace;

    @Value("${localhost.topic}")
    private String topic;

    @Autowired
    private KafkaSender kafkaSender;

    @Override
    public ModelAndView resolveException(HttpServletRequest request, HttpServletResponse response, Object handler,
            Exception ex) {
        // Choose the response data according to the exception type
        String errorCode = "";
        String errorMessage = "";
        if (ex instanceof BusinessException) {
            log.warn(ex.getMessage(), ex);
            errorCode = ((BusinessException) ex).getErrorCode();
            errorMessage = ex.getMessage();
        } else {
            log.error(ex.getMessage(), ex);
            errorCode = "runtime";
            errorMessage = "系统异常";
        }
        // Keep the exception in Redis for a while so it is easy to look up
        String traceid = MDC.get("traceid");
        RedisUtils.set("Allweb_Exception_" + traceid, ex, 7200000);
        // Return the error information as JSON
        ModelAndView mv = new ModelAndView();
        mv.setStatus(HttpStatus.OK);
        mv.setView(new MappingJackson2JsonView());
        mv.addObject("success", false);
        mv.addObject("errorCode", errorCode);
        mv.addObject("errorMessage", errorMessage);
        mv.addObject("traceid", traceid);
        // Send the error information to ELK and return it to the front end
        Map<String, Object> jsonObject = new HashMap<>();
        if (showExceptionStackTrace) {
            StringWriter writer = new StringWriter();
            ex.printStackTrace(new PrintWriter(writer, true));
            String stackTrace = writer.toString();
            mv.addObject("stackTrace", stackTrace);
            jsonObject.put("stackTrace", stackTrace);
        }
        jsonObject.put("success", false);
        jsonObject.put("errorCode", errorCode);
        jsonObject.put("errorMessage", errorMessage);
        jsonObject.put("traceid", traceid); // the original key was "traceid:" with a stray colon
        kafkaSender.send(topic, JSONObject.toJSONString(jsonObject));
        return mv;
    }
}

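The classes above all send their logs out through Kafka.

Next, adjust the Logstash configuration and restart Logstash.

Step 3: Change the Logstash configuration so that it listens on the topics published to Kafka.

Go to /opt/logstash-6.4.3/config and edit the adlog.conf file created earlier with vi, replacing the input that read log files on the server with Kafka inputs.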

input {
  kafka {
    bootstrap_servers => "ip:9092"
    topics => ["devlog"]
    client_id => "devlog" # a client_id must be set, otherwise Logstash fails on startup
    #codec => "json"
    type => "devlog"
  }
  kafka {
    bootstrap_servers => "ip:9092"
    topics => ["testlog"]
    client_id => "testlog" # a client_id must be set, otherwise Logstash fails on startup
    #codec => "json"
    type => "testlog"
  }
  kafka {
    bootstrap_servers => "ip:9092"
    topics => ["uatlog"]
    client_id => "uatlog" # a client_id must be set, otherwise Logstash fails on startup
    #codec => "json"
    type => "uatlog"
  }
  kafka {
    bootstrap_servers => "ip:9092"
    topics => ["prodlog"]
    client_id => "prodlog" # a client_id must be set, otherwise Logstash fails on startup
    #codec => "json"
    type => "prodlog"
  }
  kafka {
    bootstrap_servers => "ip:9092"
    topics => ["devbacklog"]
    client_id => "devbacklog" # a client_id must be set, otherwise Logstash fails on startup
    #codec => "json"
    type => "devbacklog"
  }
  kafka {
    bootstrap_servers => "ip:9092"
    topics => ["testbacklog"]
    client_id => "testbacklog" # a client_id must be set, otherwise Logstash fails on startup
    #codec => "json"
    type => "testbacklog"
  }
  kafka {
    bootstrap_servers => "ip:9092"
    topics => ["uatbacklog"]
    client_id => "uatbacklog" # a client_id must be set, otherwise Logstash fails on startup
    #codec => "json"
    type => "uatbacklog"
  }
  kafka {
    bootstrap_servers => "ip:9092"
    topics => ["prodbacklog"]
    client_id => "prodbacklog" # a client_id must be set, otherwise Logstash fails on startup
    #codec => "json"
    type => "prodbacklog"
  }
}

filter {
  mutate {
    rename => { "[host][name]" => "host" }
  }
}

output {
  stdout { codec => rubydebug }
  #stdout { codec => json_lines }
  if [type] == "devlog" {
    elasticsearch {
      hosts => ["http://ip:9200"]
      index => "devlog-%{+YYYY.MM.dd}"
    }
  }
  if [type] == "testlog" {
    elasticsearch {
      hosts => ["http://ip:9200"]
      index => "testlog-%{+YYYY.MM.dd}"
    }
  }
  if [type] == "uatlog" {
    elasticsearch {
      hosts => ["http://ip:9200"]
      index => "uatlog-%{+YYYY.MM.dd}"
    }
  }
  if [type] == "prodlog" {
    elasticsearch {
      hosts => ["http://ip:9200"]
      index => "prodlog-%{+YYYY.MM.dd}"
    }
  }
  if [type] == "devbacklog" {
    elasticsearch {
      hosts => ["http://ip:9200"]
      index => "devbacklog-%{+YYYY.MM.dd}"
    }
  }
  if [type] == "testbacklog" {
    elasticsearch {
      hosts => ["http://ip:9200"]
      index => "testbacklog-%{+YYYY.MM.dd}"
    }
  }
  if [type] == "uatbacklog" {
    elasticsearch {
      hosts => ["http://ip:9200"]
      index => "uatbacklog-%{+YYYY.MM.dd}"
    }
  }
  if [type] == "prodbacklog" {
    elasticsearch {
      hosts => ["http://ip:9200"]
      index => "prodbacklog-%{+YYYY.MM.dd}"
    }
  }
}

Does that look scary, with so many blocks? No need to worry. There are this many only because I configured topics for four environments: development, testing, UAT (freeze), and production.

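So apart from the topic and the type (and the client_id, which repeats the topic name), the input blocks are identical. The type field is there to solve the problem of routing several topics to different indices: the if statements in the output section use it to decide which environment an event belongs to, so every environment gets its own index, which is convenient to manage in Kibana.

Within each environment there are API-layer logs (devlog for development) and Dubbo-layer logs (devbacklog for development).

Two kinds of topic times four environments gives the eight blocks.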
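Since the eight input blocks differ only in the topic name, they could also be generated rather than typed by hand. The following is a rough sketch, assuming a hypothetical helper class LogstashInputGenerator that is not part of the project. The environment prefixes and the log/backlog suffixes are taken from the configuration above, and ip:9092 is the same placeholder used there.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class LogstashInputGenerator {

    static final List<String> ENVIRONMENTS = Arrays.asList("dev", "test", "uat", "prod");
    static final List<String> LAYERS = Arrays.asList("log", "backlog");

    /** Builds the topic names: every layer suffix combined with every environment prefix. */
    static List<String> topics() {
        List<String> topics = new ArrayList<>();
        for (String layer : LAYERS) {
            for (String env : ENVIRONMENTS) {
                topics.add(env + layer);
            }
        }
        return topics;
    }

    /** Renders one kafka input block; topic, client_id and type all share the topic name. */
    static String inputStanza(String bootstrapServers, String topic) {
        return "  kafka {\n"
                + "    bootstrap_servers => \"" + bootstrapServers + "\"\n"
                + "    topics => [\"" + topic + "\"]\n"
                + "    client_id => \"" + topic + "\"\n"
                + "    type => \"" + topic + "\"\n"
                + "  }\n";
    }

    /** Renders the whole input section with one block per topic. */
    static String inputSection(String bootstrapServers) {
        StringBuilder sb = new StringBuilder("input {\n");
        for (String topic : topics()) {
            sb.append(inputStanza(bootstrapServers, topic));
        }
        return sb.append("}\n").toString();
    }

    public static void main(String[] args) {
        System.out.print(inputSection("ip:9092"));
    }
}
```

The output section could be produced the same way, with one if block per topic name.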

That's it, everything is in place!

Now call an API and look at the result in Kibana.

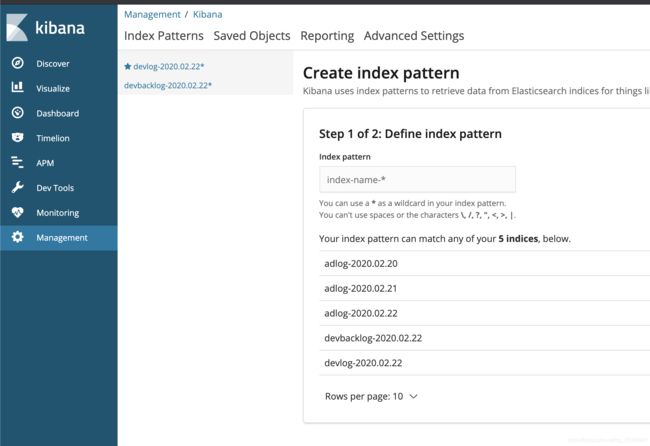

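After an API call in the development environment, the logs travel through Kafka to Logstash, which creates the development index devlog-2020.02.22 and the Dubbo-layer index devbacklog-2020.02.22. In Kibana, create an index pattern for them and search through it.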

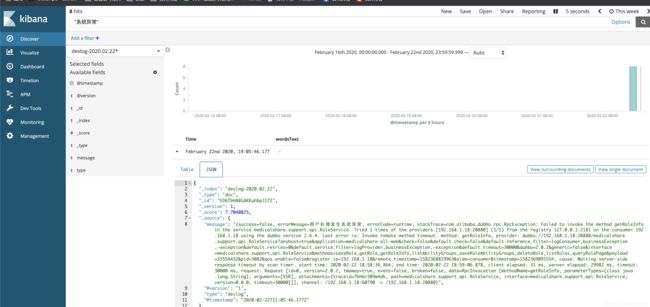

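Open Discover, select the index pattern, and search for "系统异常" (the "system exception" message set by the exception resolver).

The system exception entries show up in the results, so it works. From now on, to look for the logs of a given environment, create an index pattern for that environment and query it. Multiple servers and multiple environments are now managed globally through a single Kibana.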
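The index names seen in Kibana, such as devlog-2020.02.22, follow from the index => "devlog-%{+YYYY.MM.dd}" setting: Logstash formats the event's @timestamp, which is kept in UTC, and appends it to the prefix. As a small illustration (the class DailyIndexName and its method are hypothetical and exist only for this sketch), the same name can be computed in Java, together with a check for the lowercase rule that Elasticsearch applies to index names.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;
import java.util.Locale;

public class DailyIndexName {

    // Same layout as Logstash's %{+YYYY.MM.dd}, evaluated in UTC.
    private static final DateTimeFormatter DAY =
            DateTimeFormatter.ofPattern("yyyy.MM.dd", Locale.ROOT).withZone(ZoneOffset.UTC);

    /** Builds "<topic>-<yyyy.MM.dd>" and rejects topics containing uppercase letters. */
    static String of(String topic, Instant eventTime) {
        if (!topic.equals(topic.toLowerCase(Locale.ROOT))) {
            throw new IllegalArgumentException("index names must be lowercase: " + topic);
        }
        return topic + "-" + DAY.format(eventTime);
    }

    public static void main(String[] args) {
        System.out.println(of("devlog", Instant.parse("2020-02-22T08:30:00Z")));
    }
}
```

Because the date is taken in UTC, an event logged shortly after midnight local time in a zone ahead of UTC still lands in the previous day's index.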

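Summary:

It all reads smoothly above, but the actual setup ran into all sorts of problems. Fortunately they all got solved.

Some of the problems I ran into:

1. Configuring the kafka input in Logstash for several topics. Since the setting is called topics and takes a bracketed array, my first thought was to list all topics in one array. But then I did not know how to tell the events apart when writing to Elasticsearch, so I fell back to one input per topic, each with its own type, and if conditions in the output section that route each type to its own index name. It is verbose, but it works. I have heard that Filebeat can handle several topics and distinguish them on output through a directive; something to try later.

2. Logstash started fine, but as soon as an API was called and Logstash received the message from the Kafka topic, it reported the following error: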

[2020-02-21T15:03:23,947][WARN ][logstash.outputs.elasticsearch] Could not index event to Elasticsearch. {:status=>400, :action=>["index", {:_id=>nil, :_index=>"adlog-2020.02.21", :_type=>"doc", :_routing=>nil}, #], :response=>{"index"=>{"_index"=>"adlog-2020.02.21", "_type"=>"doc", "_id"=>"XD6OZnABGAKKuhbp3o0V", "status"=>400, "error"=>{"type"=>"mapper_parsing_exception", "reason"=>"failed to parse [response.response_content]", "caused_by"=>{"type"=>"illegal_state_exception", "reason"=>"Can't get text on a START_OBJECT at 1:111"}}}}}

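The cause was that the kafka input was configured with the json codec, while the message body sent to Kafka had not gone through JSONObject.toJSONString; it was an ordinary object converted to a string, which Logstash could not parse and therefore could not send to Elasticsearch. (The error text, Can't get text on a START_OBJECT, also suggests that response.response_content was already mapped as text in the index and then received a JSON object.) So I commented out codec => "json" in the inputs above. Leaving it enabled works as well, as long as everything the Java code sends is a JSON string produced by JSONObject; either approach is fine, the choice is yours. Note, though, that with the json codec the events reach Kibana parsed down to individual fields, and with many fields the tree layout is hard to read. Without it only a few fields arrive, with the payload as a single data field; the fields to display can be picked in the left panel, and the data value can be pasted into an online JSON viewer for inspection.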
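The difference between the two payload formats is easy to see in plain Java. The sketch below is only an illustration: the project itself uses fastjson's JSONObject.toJSONString, while the class PayloadFormatDemo and its tiny hand-written serializer (flat string maps only) are assumptions made to keep the example free of dependencies.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class PayloadFormatDemo {

    /** Minimal JSON writer for flat string maps; a stand-in for a real JSON library. */
    static String toJson(Map<String, String> fields) {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, String> e : fields.entrySet()) {
            if (!first) {
                sb.append(',');
            }
            first = false;
            sb.append('"').append(escape(e.getKey())).append("\":\"")
              .append(escape(e.getValue())).append('"');
        }
        return sb.append('}').toString();
    }

    /** Escapes backslashes and double quotes only; control characters are not handled. */
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    public static void main(String[] args) {
        Map<String, String> event = new LinkedHashMap<>();
        event.put("request_method", "GET");
        event.put("request_url", "/demo");
        // Map.toString() is not JSON: {request_method=GET, request_url=/demo}
        System.out.println(event);
        // A JSON string, which a json codec can parse: {"request_method":"GET","request_url":"/demo"}
        System.out.println(toJson(event));
    }
}
```

Whichever format is chosen, the producer side and the codec setting of the kafka input have to agree.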

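3. Fields annotated with @Autowired or @Value are always null inside the Dubbo filter. The filter instance is not created by the Spring container (Dubbo instantiates filters through its own SPI extension mechanism), so beans registered in Spring are not injected into it. The fix is to fetch the bean through the ApplicationContext. Here is a SpringContextUtils helper that can be used for this.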

package medicalshare.framework.utils;

import org.springframework.beans.BeansException;
import org.springframework.context.ApplicationContext;
import org.springframework.context.ApplicationContextAware;

/**
 * Provides static access to the ApplicationContext.
 */
public class SpringContextUtils implements ApplicationContextAware {

    private static ApplicationContext applicationContext;

    public static ApplicationContext getApplicationContext() {
        return applicationContext;
    }

    public static Object getBean(String name) {
        return applicationContext.getBean(name);
    }

    public static <T> T getBean(Class<T> requiredType) {
        return applicationContext.getBean(requiredType);
    }

    public static String getEnvironmentType() {
        return getProperty("application.environmentType");
    }

    public static String getProperty(String key) {
        return applicationContext.getEnvironment().getProperty(key);
    }

    public static String getProperty(String key, String defaultValue) {
        return applicationContext.getEnvironment().getProperty(key, defaultValue);
    }

    // Called by Spring once this class is registered as a bean; stores the context for static access
    @Override
    public void setApplicationContext(ApplicationContext applicationContext) throws BeansException {
        SpringContextUtils.applicationContext = applicationContext;
    }
}

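4. The AOP aspect never took effect: @Aspect seemed to do nothing and the classes in the target package were not intercepted. It turned out that @Aspect only marks the class as an aspect for the container to read, so the class itself still has to be registered in Spring. I had added @Component, but it had no effect, because the class's package is neither the package of the main class nor one of its sub-packages, so it was never scanned. To solve this without moving the class, I declared the bean in a @Configuration class as follows, which registers it in Spring and makes the aspect work.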

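import medicalshare.framework.aop.AopLogAspect;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class FrameworkWebAutoConfiguration {

    // Registers the aspect explicitly because its package is not component-scanned
    @Bean
    public AopLogAspect aopLogAspect() {
        return new AopLogAspect();
    }
}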

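5. Elasticsearch index names must be lowercase. I initially gave the Kafka topics uppercase names, and the index names were derived from the topics, so Logstash got errors when writing to Elasticsearch and the data was not stored.

6. ...I forgot the rest. Oops.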