Want to learn the Hive JDBC workflow?

I: Start Hadoop

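1. Configure the proxy-user properties in core-site.xml

Pay special attention to the two properties hadoop.proxyuser.<server username>.hosts and hadoop.proxyuser.<server username>.groups. The server username is the login name on the machine where Hadoop runs, so set it to your own login name. On my machine the username is mengday.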

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/usr/local/Cellar/hadoop/3.2.1/libexec/tmp</value>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:8020</value>
  </property>
  <property>
    <name>hadoop.proxyuser.mengday.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.mengday.groups</name>
    <value>*</value>
  </property>
</configuration>

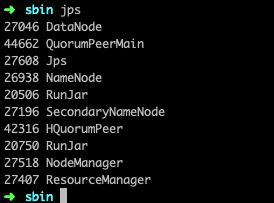
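To make the naming rule concrete, here is a minimal sketch that derives the two property names from a login name. The class and method names are made up for illustration; the only thing taken from the configuration above is the hadoop.proxyuser.<user>.hosts / .groups pattern and the * value.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper, not part of Hadoop: builds the two proxy-user
// property names for a given OS login name.
class ProxyUserProps {

    static Map<String, String> forUser(String user) {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("hadoop.proxyuser." + user + ".hosts", "*");
        props.put("hadoop.proxyuser." + user + ".groups", "*");
        return props;
    }

    public static void main(String[] args) {
        // user.name is the login name of the account running this JVM.
        String user = System.getProperty("user.name");
        forUser(user).forEach((name, value) ->
                System.out.println(name + " = " + value));
    }
}
```

Running it on the machine that hosts Hadoop prints the two names you would expect to find in core-site.xml for the current account.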

2. Start Hadoop

> cd /usr/local/Cellar/hadoop/3.2.1/sbin

> ./start-all.sh

> jps

Once startup finishes, check that the DataNode process actually came up; DataNode startup fails fairly often.
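The jps check can also be automated. The sketch below takes the text printed by jps and reports which daemons are missing. The list of expected daemons is my assumption about what start-all.sh launches on a single-node setup, and the class name is made up.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: finds expected Hadoop daemons that are absent from jps output.
class JpsCheck {

    // Assumption: the daemons start-all.sh normally launches on a single node.
    static final List<String> EXPECTED = Arrays.asList(
            "NameNode", "DataNode", "SecondaryNameNode", "ResourceManager", "NodeManager");

    // jps prints one "<pid> <name>" pair per line; other line shapes are ignored.
    static List<String> missing(String jpsOutput) {
        List<String> running = new ArrayList<>();
        for (String line : jpsOutput.split("\\R")) {
            String[] parts = line.trim().split("\\s+");
            if (parts.length == 2) {
                running.add(parts[1]);
            }
        }
        List<String> missing = new ArrayList<>(EXPECTED);
        missing.removeAll(running);
        return missing;
    }
}
```

If the returned list contains DataNode, the DataNode logs are the first place to look.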

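II: Configure hive-site.xml

Java connects to Hive through hiveserver2 over JDBC, the same way the beeline client does, so a working beeline connection is a good test before writing any Java. Getting hive-site.xml right is the most important step for that.

The javax.jdo.option.ConnectionURL property points at a database. It is best to drop the old metastore database, create a fresh one, and initialize its schema.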

mysql> create database metastore;

> cd /usr/local/Cellar/hive/3.1.2/libexec/bin

> schematool -initSchema -dbType mysql

hive-site.xml

<configuration>
  <property>
    <name>hive.metastore.local</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://localhost:9083</value>
    <description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost:3306/metastore?characterEncoding=UTF-8&amp;createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.cj.jdbc.Driver</value>
  </property>
  <!-- MySQL username -->
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <!-- MySQL password -->
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>root123</value>
  </property>
  <!-- Directory where Hive stores the map/reduce execution plans for each stage,
       as well as intermediate output. The default is /tmp/<user.name>/hive. In practice
       it is usually split by group, with each group creating its own tmp directory. -->
  <property>
    <name>hive.exec.local.scratchdir</name>
    <value>/tmp/hive</value>
  </property>
  <property>
    <name>hive.downloaded.resources.dir</name>
    <value>/tmp/hive</value>
  </property>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/data/hive/warehouse</value>
  </property>
  <property>
    <name>hive.metastore.event.db.notification.api.auth</name>
    <value>false</value>
  </property>
  <property>
    <name>hive.server2.active.passive.ha.enable</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.server2.transport.mode</name>
    <value>binary</value>
    <description>
      Expects one of [binary, http].
      Transport mode of HiveServer2.
    </description>
  </property>
  <property>
    <name>hive.server2.logging.operation.log.location</name>
    <value>/tmp/hive</value>
  </property>
  <property>
    <name>hive.hwi.listen.host</name>
    <value>0.0.0.0</value>
    <description>This is the host address the Hive Web Interface will listen on</description>
  </property>
  <property>
    <name>hive.server2.webui.host</name>
    <value>0.0.0.0</value>
    <description>The host address the HiveServer2 WebUI will listen on</description>
  </property>
</configuration>

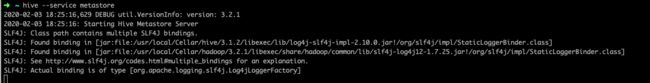
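A quick way to catch mistakes in this file before starting any service is to parse it yourself. The sketch below reads a Hadoop-style configuration document into a map using the JDK's built-in XML parser. The class name is made up, and this is not how Hive itself loads its configuration. One thing it will catch: a literal & inside a value, as in the MySQL connection URL, has to be written as &amp;amp; in the XML or the file is not well-formed.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper: parses <configuration><property><name/><value/></property>... XML.
class SiteXmlReader {

    static Map<String, String> parse(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            Map<String, String> props = new LinkedHashMap<>();
            NodeList nodes = doc.getElementsByTagName("property");
            for (int i = 0; i < nodes.getLength(); i++) {
                Element p = (Element) nodes.item(i);
                String name = p.getElementsByTagName("name").item(0).getTextContent().trim();
                String value = p.getElementsByTagName("value").item(0).getTextContent().trim();
                props.put(name, value);
            }
            return props;
        } catch (Exception e) {
            // Malformed XML (for example an unescaped &) ends up here.
            throw new IllegalArgumentException("configuration is not well-formed XML", e);
        }
    }
}
```

Feeding it the contents of hive-site.xml and printing the map shows exactly which values a parser sees, with &amp;amp; already decoded back to &.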

III: Start the metastore

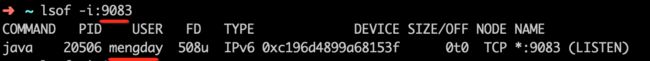

hiveserver2 must be running before you start beeline, and the metastore must be running before you start hiveserver2. The metastore listens on port 9083 by default.

> cd /usr/local/Cellar/hive/3.1.2/bin

> hive --service metastore &

After starting it, be sure to confirm that it actually came up.

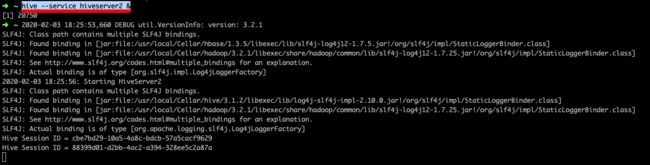

IV: Start hiveserver2

> cd /usr/local/Cellar/hive/3.1.2/bin

> hive --service hiveserver2 &

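hiveserver2 listens on port 10000 by default. After starting it, always check that port 10000 is actually open; when the configuration is wrong, the port usually never comes up. Whether port 10000 is listening decides whether beeline can connect.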

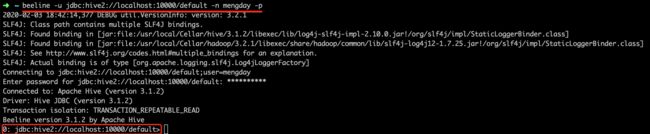
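Both port checks (9083 for the metastore, 10000 for hiveserver2) can be done from Java with a plain TCP connect. This is a minimal sketch with a made-up class name; it only tells you that something is listening on the port, not that the service behind it is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: checks whether a TCP port accepts connections.
class PortCheck {

    // Returns true if a TCP connection to host:port succeeds within timeoutMillis.
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("metastore   (9083):  " + isListening("localhost", 9083, 1000));
        System.out.println("hiveserver2 (10000): " + isListening("localhost", 10000, 1000));
    }
}
```

hiveserver2 can take a while to open its port after the process starts, so a false result right after launch is worth retrying before you go hunting for configuration errors.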

V: Start beeline

> cd /usr/local/Cellar/hive/3.1.2/bin

> beeline -u jdbc:hive2://localhost:10000/default -n mengday -p

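- -u: the connection URL, in the form jdbc:hive2://<host name or IP>:<port>/<database name>. The port defaults to 10000 and can be changed with `hiveserver2 --hiveconf hive.server2.thrift.port=14000`. default is the database that ships with Hive.

- -n: the login account name on the server where Hive runs. Here it is mengday, the login user on my Mac. The name must match the mengday in hadoop.proxyuser.mengday.hosts and hadoop.proxyuser.mengday.groups in core-site.xml.

- -p: the password for that user name.

When you see the prompt 0: jdbc:hive2://localhost:10000/default>, the connection succeeded.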
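The same URL is what the Java client passes to the driver later on, so it helps to see how it is assembled. A minimal sketch, with made-up class and method names, following the jdbc:hive2://<host>:<port>/<database> pattern described above:

```java
// Hypothetical helper: assembles a HiveServer2 JDBC URL from its parts.
class HiveJdbcUrl {

    // Default hiveserver2 port, as stated in the text.
    static final int DEFAULT_PORT = 10000;

    static String build(String host, int port, String database) {
        return "jdbc:hive2://" + host + ":" + port + "/" + database;
    }

    static String build(String host, String database) {
        return build(host, DEFAULT_PORT, database);
    }

    public static void main(String[] args) {
        System.out.println(build("localhost", "default"));
        // After changing hive.server2.thrift.port to 14000:
        System.out.println(build("localhost", 14000, "default"));
    }
}
```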

VI: Hive JDBC

1. Add the dependency

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>3.1.2</version>

</dependency>

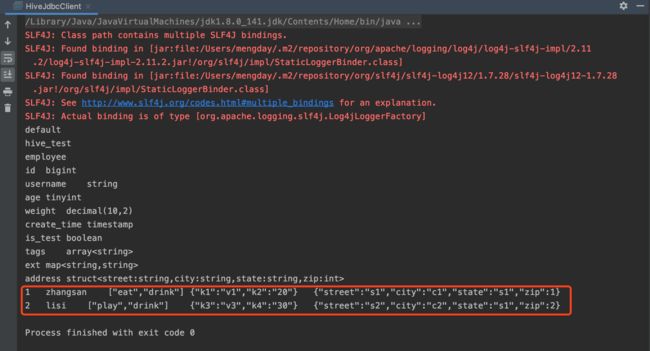

2. Prepare the data

/data/employee.txt

1,zhangsan,28,60.66,2020-02-01 10:00:00,true,eat#drink,k1:v1#k2:20,s1#c1#s1#1

2,lisi,29,60.66,2020-02-01 11:00:00,false,play#drink,k3:v3#k4:30,s2#c2#s1#2
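Each row uses three levels of delimiters, matching the row format declared in the create table statement in the Java code: ',' between fields, '#' between collection items, and ':' between a map key and its value. The sketch below splits one sample row the same way in plain Java, just to show which part of the line ends up in which column. It is an illustration with made-up names, not how Hive parses the file internally.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper: splits a sample row with the same three delimiters.
class EmployeeLine {

    // Fields are separated by ','.
    static List<String> fields(String line) {
        return Arrays.asList(line.split(","));
    }

    // Array and struct members are separated by '#'.
    static List<String> items(String field) {
        return Arrays.asList(field.split("#"));
    }

    // Map entries are separated by '#', key and value by ':'.
    static Map<String, String> mapOf(String field) {
        Map<String, String> result = new LinkedHashMap<>();
        for (String entry : field.split("#")) {
            String[] kv = entry.split(":", 2);
            result.put(kv[0], kv.length > 1 ? kv[1] : null);
        }
        return result;
    }

    public static void main(String[] args) {
        String line = "1,zhangsan,28,60.66,2020-02-01 10:00:00,true,eat#drink,k1:v1#k2:20,s1#c1#s1#1";
        List<String> f = fields(line);
        System.out.println("tags    = " + items(f.get(6)));
        System.out.println("ext     = " + mapOf(f.get(7)));
        System.out.println("address = " + items(f.get(8)));
    }
}
```

The timestamp contains ':' characters too, but they are left alone because ':' only has meaning inside the map column.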

3. Java

import java.sql.*;

public class HiveJdbcClient {

    private static String url = "jdbc:hive2://localhost:10000/default";
    private static String driverName = "org.apache.hive.jdbc.HiveDriver";
    private static String user = "mengday";
    private static String password = "password for user";

    private static Connection conn = null;
    private static Statement stmt = null;
    private static ResultSet rs = null;

    // Load the driver and open one shared connection and statement.
    static {
        try {
            Class.forName(driverName);
            conn = DriverManager.getConnection(url, user, password);
            stmt = conn.createStatement();
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    public static void init() throws Exception {
        stmt.execute("drop database if exists hive_test");
        stmt.execute("create database hive_test");

        rs = stmt.executeQuery("show databases");
        while (rs.next()) {
            System.out.println(rs.getString(1));
        }

        stmt.execute("drop table if exists employee");

        // NOTE: the type parameters of array, map and struct were missing from the
        // original listing. The element types are inferred from the sample data,
        // and the struct field names are placeholders.
        String sql = "create table if not exists employee(" +
                " id bigint, " +
                " username string, " +
                " age tinyint, " +
                " weight decimal(10, 2), " +
                " create_time timestamp, " +
                " is_test boolean, " +
                " tags array<string>, " +
                " ext map<string,string>, " +
                " address struct<street:string, city:string, state:string, zip:int> " +
                " ) " +
                " row format delimited " +
                " fields terminated by ',' " +
                " collection items terminated by '#' " +
                " map keys terminated by ':' " +
                " lines terminated by '\n'";
        stmt.execute(sql);

        rs = stmt.executeQuery("show tables");
        while (rs.next()) {
            System.out.println(rs.getString(1));
        }

        rs = stmt.executeQuery("desc employee");
        while (rs.next()) {
            System.out.println(rs.getString(1) + "\t" + rs.getString(2));
        }
    }

    private static void load() throws Exception {
        // Load the data
        String filePath = "/data/employee.txt";
        stmt.execute("load data local inpath '" + filePath + "' overwrite into table employee");

        // Query the data
        rs = stmt.executeQuery("select * from employee");
        while (rs.next()) {
            System.out.println(rs.getLong("id") + "\t"
                    + rs.getString("username") + "\t"
                    + rs.getObject("tags") + "\t"
                    + rs.getObject("ext") + "\t"
                    + rs.getObject("address")
            );
        }
    }

    private static void close() throws Exception {
        if (rs != null) {
            rs.close();
        }
        if (stmt != null) {
            stmt.close();
        }
        if (conn != null) {
            conn.close();
        }
    }

    public static void main(String[] args) throws Exception {
        init();
        load();
        close();
    }
}
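The client above keeps the connection, statement, and result set in static fields and closes them at the end of main, so they are not closed if a query throws first. As an alternative sketch (my own variation, not part of the original client), the same query can be wrapped in try-with-resources, which closes all three in reverse order even on failure. The class and method names are made up; the URL, user, and table come from the example above, and the hive-jdbc dependency is assumed to be on the classpath.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

// Hypothetical variation of the client: resources are scoped to one method call.
class EmployeeQuery {

    static void printUsernames(String url, String user, String password) throws SQLException {
        // Resources declared here are closed automatically, last one first.
        try (Connection conn = DriverManager.getConnection(url, user, password);
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery("select id, username from employee")) {
            while (rs.next()) {
                System.out.println(rs.getLong("id") + "\t" + rs.getString("username"));
            }
        }
    }

    public static void main(String[] args) throws SQLException {
        // Pass the password as the first argument instead of hard-coding it.
        String password = args.length > 0 ? args[0] : "";
        printUsernames("jdbc:hive2://localhost:10000/default", "mengday", password);
    }
}
```

If the driver is missing from the classpath or hiveserver2 is not reachable, getConnection throws a SQLException instead of leaving a null connection behind, which makes startup problems easier to spot.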