Big Data Series: Spark Study Notes

1. About Spark

- 2009: Spark was born in UC Berkeley's AMPLab. At that point it was only an experimental project with very little code, a lightweight framework.

- 2010: Berkeley officially open-sourced the Spark project.

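- June 2013: Spark became an Apache Software Foundation project and entered a phase of rapid development. Third-party developers contributed a large amount of code, and the community was very active.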

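- February 2014: Spark became a top-level Apache project. At the same time, the big data company Cloudera announced increased investment in Spark as a replacement for MapReduce.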

- April 2014: the big data company MapR joined the Spark camp, and Apache Mahout abandoned MapReduce in favor of Spark as its compute engine.

- May 2014: Spark 1.0.0 was released.

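- 2015: Spark became increasingly popular in China's IT industry. More and more companies began deploying Spark to replace MR2, Hive, Storm and other traditional big data parallel computing frameworks.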

2. What is Spark?

- Apache Spark™ is a unified analytics engine for large-scale data processing.


- Spark is a general-purpose, in-memory parallel computing framework designed to make data analysis faster.

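- Spark bundles general-purpose frameworks for the main workloads of the big data field:

  - Spark Core (offline batch computing)

  - Spark SQL (interactive queries)

  - Spark Streaming (real-time computing)

  - Spark MLlib (machine learning)

  - Spark GraphX (graph computing)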

3. Can Spark Replace Hadoop?

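Not entirely.

Spark Core can replace MR for offline computing, but data storage still relies on HDFS.

Spark + Hadoop is the most popular combination in the big data field, and the most promising one for the future.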

4. Features of Spark

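- Speed

  - Up to 100× faster than MR for in-memory computation

  - More than 10× faster than MR for on-disk computation

- Ease of use

  - APIs for Java, Scala, Python and R

- One-stop solution

  - Spark Core (offline computing)

  - Spark SQL (interactive queries)

  - Spark Streaming (real-time computing)

  - …

- Runs on several cluster managers

  - YARN

  - Mesos

  - Standalone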

5. Drawbacks of Spark

- The JVM's memory overhead is large: 1 GB of data can consume around 5 GB of memory (Project Tungsten tries to address this).

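- There is no effective way for different Spark applications to share memory. Project Tachyon (later renamed Alluxio) tries to introduce distributed memory management so that different Spark applications can share cached data.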

6. Spark vs. MR

6.1 Limitations of MR

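- Low-level abstraction: everything must be hand-coded, which makes it hard to use

- Only two operations, map and reduce, so it lacks expressiveness

- A job has only two phases, map and reduce. Complex computations must be split into many chained jobs, and the dependencies between jobs are managed by the developer.

- Intermediate results (the output of reduce) are also written to HDFS

- High latency: suitable only for batch processing, with poor support for interactive and real-time data processing

- Poor performance for iterative data processing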
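To make the two-phase limitation concrete, here is a minimal sketch in plain Scala (no Hadoop involved) of a question that needs two chained MapReduce jobs: "which words occur N times?". The names `runJob` and `wordsByCount` are hypothetical, as is the in-memory `storage` map, which stands in for HDFS paths. The developer wires the jobs together by hand, and job 2 has to re-read and re-parse job 1's text output.

```scala
import scala.collection.mutable

object ChainedMapReduce {
  // One MapReduce job: a user map function, a framework-side group-by-key (the shuffle),
  // then a user reduce function. Input and output are lines of text, as with HDFS files.
  def runJob[K, V](input: Seq[String])(mapper: String => Seq[(K, V)])(
      reducer: (K, Seq[V]) => String): Seq[String] =
    input.flatMap(mapper).groupBy(_._1).toSeq.map { case (k, kvs) => reducer(k, kvs.map(_._2)) }.sorted

  // "Which words occur N times?" needs two jobs. The developer chains them by hand,
  // and job 2 can only start once job 1's output has been fully written out.
  def wordsByCount(input: Seq[String]): (Seq[String], Seq[String]) = {
    val storage = mutable.Map.empty[String, Seq[String]] // hypothetical stand-in for HDFS paths

    // Job 1: word count, written out as "word<TAB>count" lines
    storage("/tmp/job1") = runJob[String, Int](input)(
      line => line.split(",").toSeq.map(w => (w, 1)))(
      (word, ones) => s"$word\t${ones.sum}")

    // Job 2: re-read and re-parse job 1's output, then group words by their count
    storage("/tmp/job2") = runJob[Int, String](storage("/tmp/job1"))(
      line => { val parts = line.split("\t"); Seq((parts(1).toInt, parts(0))) })(
      (count, words) => s"$count\t${words.sorted.mkString(",")}")

    (storage("/tmp/job1"), storage("/tmp/job2"))
  }
}
```

For the input lines "a,b" and "b,c", job 1 produces "a 1", "b 2", "c 1", and job 2 turns that into "1 a,c" and "2 b".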

6.2 What Problems Does Spark Solve?

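- Low-level abstraction: everything must be hand-coded, which makes it hard to use

  - Spark abstracts data as RDDs (Resilient Distributed Datasets)

- Only two operations, map and reduce, so it lacks expressiveness

  - Spark provides many operators

- A job has only two phases, map and reduce

  - A Spark job can have multiple stages

- Intermediate results are also stored on HDFS (slow)

  - Spark keeps intermediate results in memory; when they have to be written out, they go to local disk rather than HDFS

- High latency: suitable only for batch processing, with poor support for interactive and real-time data processing

  - Spark SQL and Spark Streaming address these problems

- Poor performance for iterative data processing

  - Caching data in memory improves the performance of iterative computations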
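Here is a minimal sketch of why in-memory caching helps iterative algorithms. It is plain Scala with no Spark dependency.

- `withoutCache` re-reads its input on every iteration, as chained MR jobs would.
- `withCache` loads the input once and reuses it, which is roughly the role `rdd.cache()` plays in Spark.

The dataset, the gradient-step update and all names are hypothetical. Each function returns the final estimate together with how many times the input was loaded.

```scala
object IterativeCaching {
  // Hypothetical dataset; imagine reading it is expensive (e.g. parsing files from HDFS)
  private val source = Vector(1.0, 2.0, 3.0, 4.0)

  // One gradient step that moves the estimate halfway toward the mean of the points
  def step(points: Vector[Double], estimate: Double): Double =
    estimate + 0.5 * points.map(_ - estimate).sum / points.size

  // MR-style: every iteration is a separate job that reads its input again.
  // Returns (final estimate, number of times the input was loaded).
  def withoutCache(iterations: Int): (Double, Int) = {
    var loads = 0
    def load(): Vector[Double] = { loads += 1; source }
    val estimate = (1 to iterations).foldLeft(0.0)((est, _) => step(load(), est))
    (estimate, loads)
  }

  // Spark-style: load once, keep the data in memory (the role rdd.cache() plays)
  // and reuse it in every iteration.
  def withCache(iterations: Int): (Double, Int) = {
    var loads = 0
    def load(): Vector[Double] = { loads += 1; source }
    val cached = load()
    val estimate = (1 to iterations).foldLeft(0.0)((est, _) => step(cached, est))
    (estimate, loads)
  }
}
```

`withoutCache(10)` loads the input 10 times, while `withCache(10)` loads it once. Both compute the same estimate, which approaches the mean of 2.5 as iterations increase.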

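Therefore, replacing Hadoop MapReduce with a new generation of big data processing platforms is the direction the technology is moving in. Among these new-generation platforms, Spark is currently the most widely recognized and supported.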

7. Spark Versions

- Spark 1.6.3: Scala 2.10.5

- Spark 2.2.0: Scala 2.11.8 (recommended for new projects)

- Hadoop 2.7.5
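Spark's artifacts are published per Scala binary version, so an application should be compiled against the Scala version its Spark release was built with. Below is a minimal build.sbt sketch for Spark 2.2.0. It assumes sbt as the build tool, which these notes do not name.

```scala
// build.sbt (sketch): Spark 2.2.0 is built against Scala 2.11
scalaVersion := "2.11.8"

// %% appends the Scala binary version, so this resolves to spark-core_2.11;
// "provided" because the cluster already ships the Spark jars
libraryDependencies += "org.apache.spark" %% "spark-core" % "2.2.0" % "provided"
```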

8. Installing Spark in Standalone Mode (Single Node)

- Prepare the package spark-2.2.0-bin-hadoop2.7.tgz

$ tar -zxvf spark-2.2.0-bin-hadoop2.7.tgz -C /opt/
$ mv /opt/spark-2.2.0-bin-hadoop2.7 /opt/spark

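- Edit conf/spark-env.sh (create it from conf/spark-env.sh.template). In Spark 2.x, SPARK_MASTER_IP still works but is deprecated in favor of SPARK_MASTER_HOST.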

export JAVA_HOME=/opt/jdk
export SPARK_MASTER_IP=hdp01
export SPARK_MASTER_PORT=7077
export SPARK_WORKER_CORES=4
export SPARK_WORKER_INSTANCES=1
export SPARK_WORKER_MEMORY=2g
export HADOOP_CONF_DIR=/opt/hadoop/etc/hadoop

- Configure environment variables (in /etc/profile, then run source /etc/profile)

# Configure environment variables for Spark
export SPARK_HOME=/opt/spark
export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

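- Start Spark in standalone mode. Spark's sbin/start-all.sh has the same name as Hadoop's start-all.sh, so call it by its full path:

$ $SPARK_HOME/sbin/start-all.sh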

- Check the startup status in the Master web UI

http://hdp01:8080

9. Installing a Distributed Spark Cluster

- Configure spark-env.sh

[root@hdp01 /opt/spark/conf]

export JAVA_HOME=/opt/jdk
# Host of the master
export SPARK_MASTER_IP=hdp01
# Port used for communication with the master
export SPARK_MASTER_PORT=7077
# Number of CPU cores Spark may use on each worker
export SPARK_WORKER_CORES=4
# One worker per host
export SPARK_WORKER_INSTANCES=1
# Memory used by each worker: 2 GB
export SPARK_WORKER_MEMORY=2g
# Directory containing Hadoop's configuration files
export HADOOP_CONF_DIR=/opt/hadoop/etc/hadoop

- Configure conf/slaves (one worker hostname per line)

[root@hdp01 /opt/spark/conf]

hdp03
hdp04
hdp05

- Distribute Spark to the other nodes

[root@hdp01 /opt/spark/conf]

$ scp -r /opt/spark hdp02:/opt/
$ scp -r /opt/spark hdp03:/opt/
$ scp -r /opt/spark hdp04:/opt/
$ scp -r /opt/spark hdp05:/opt/

- Distribute the environment variables configured on hdp01 (then run source /etc/profile on each node)


[root@hdp01 /]

$ scp -r /etc/profile hdp02:/etc/
$ scp -r /etc/profile hdp03:/etc/
$ scp -r /etc/profile hdp04:/etc/
$ scp -r /etc/profile hdp05:/etc/

- Start Spark

[root@hdp01 /]

$ $SPARK_HOME/sbin/start-all.sh
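With the settings above, the cluster has three workers (hdp03, hdp04 and hdp05), each offering 4 cores and 2 GB. An application can later request cores, for example with spark-shell's --total-executor-cores. The standalone Master then spreads those cores across the workers by default, since spark.deploy.spreadOut is true. The sketch below is a simplified model of that behavior in plain Scala, not the Master's actual code. It ignores details such as --executor-cores and the order in which the Master visits workers. `SpreadOutAllocation` and `allocate` are hypothetical names.

```scala
object SpreadOutAllocation {
  // A worker as set up in spark-env.sh: cores and memory (MB) available for executors
  final case class Worker(host: String, cores: Int, memoryMb: Int)

  // Simplified model of spread-out scheduling: hand out one core at a time, round-robin
  // over workers that have enough memory for an executor, until the requested total is
  // reached or no free cores remain. Returns host -> cores for its (single) executor.
  def allocate(workers: Seq[Worker], totalCores: Option[Int], executorMemoryMb: Int): Map[String, Int] = {
    val usable = workers.filter(w => w.memoryMb >= executorMemoryMb && w.cores > 0).toVector
    val assigned = Array.fill(usable.size)(0)
    // None means "no core limit given": take every free core
    var remaining = math.min(totalCores.getOrElse(Int.MaxValue), usable.map(_.cores).sum)
    var i = 0
    while (remaining > 0) {
      if (assigned(i) < usable(i).cores) {
        assigned(i) += 1
        remaining -= 1
      }
      i = (i + 1) % usable.size
    }
    usable.indices.filter(j => assigned(j) > 0).map(j => usable(j).host -> assigned(j)).toMap
  }
}
```

For the three workers here, requesting 6 cores with 1 GB executors gives 2 cores on each worker. With no limit, each worker contributes all 4 of its cores, 12 in total. Asking for 4 GB executors yields nothing, because no worker has that much memory.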

10. Configuring a Highly Available Spark Cluster

First stop the running Spark cluster:

$ $SPARK_HOME/sbin/stop-all.sh

- Edit spark-env.sh

# Comment out the following two lines
#export SPARK_MASTER_IP=hdp01
#export SPARK_MASTER_PORT=7077

- Add the following (it assumes a ZooKeeper ensemble running on hdp03, hdp04 and hdp05, port 2181)

export SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=hdp03:2181,hdp04:2181,hdp05:2181 -Dspark.deploy.zookeeper.dir=/spark"

- Distribute the modified configuration

$ scp /opt/spark/conf/spark-env.sh hdp02:/opt/spark/conf
$ scp /opt/spark/conf/spark-env.sh hdp03:/opt/spark/conf
$ scp /opt/spark/conf/spark-env.sh hdp04:/opt/spark/conf
$ scp /opt/spark/conf/spark-env.sh hdp05:/opt/spark/conf

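- Start the cluster: start the master and workers on hdp01, then start a second master on hdp02 as a standby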

[root@hdp01 /]

$ $SPARK_HOME/sbin/start-all.sh

[root@hdp02 /]

$ start-master.sh
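Once two masters are running, clients should not point only at hdp01. Spark's standalone mode accepts a comma-separated list of masters in the master URL (spark://host1:port1,host2:port2), so a driver can find whichever master is currently active. A small helper that builds such a URL follows; `MasterUrl.standalone` is a hypothetical name.

```scala
object MasterUrl {
  // Builds a standalone master URL. For a ZooKeeper-backed HA setup, list every master,
  // comma-separated: spark://host1:port1,host2:port2
  def standalone(masters: Seq[String], port: Int = 7077): String = {
    require(masters.nonEmpty, "at least one master host is required")
    masters.map(host => s"$host:$port").mkString("spark://", ",", "")
  }
}
```

`MasterUrl.standalone(Seq("hdp01", "hdp02"))` gives spark://hdp01:7077,hdp02:7077, which can be passed to spark-shell --master.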

11. Your First Spark Shell Program

$ spark-shell --master spark://hdp01:7077

# When starting spark-shell, you can specify the resources the application uses (total cores across the cluster, memory per executor)

$ spark-shell --master spark://hdp01:7077 --total-executor-cores 6 --executor-memory 1g

# If these are not specified, the application uses all cores on every worker by default, and 1 GB of memory per executor

scala> sc.textFile("hdfs://ns1/sparktest/").flatMap(_.split(",")).map((_,1)).reduceByKey(_+_).collect

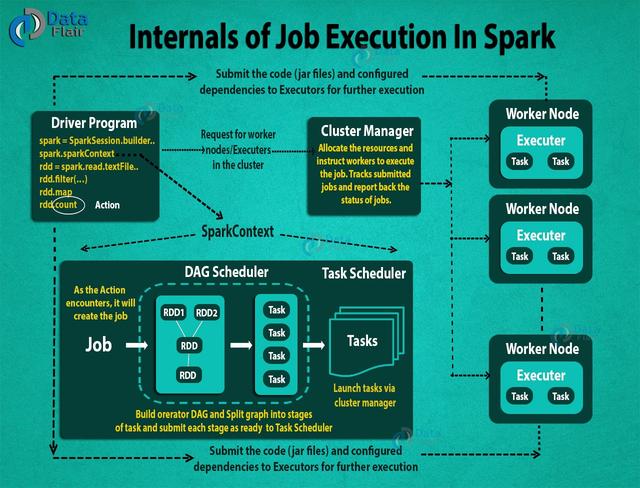
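The one-liner above is a word count. Here is the same pipeline on a local Scala Seq, with no Spark dependency, to show what it computes. Scala's standard collections have no reduceByKey, so `groupBy` followed by a per-key `reduce` stands in for it. The final sort is added only to make the result deterministic. `LocalWordCount` is a hypothetical name, and the sample lines are made up.

```scala
object LocalWordCount {
  // Same pipeline as the spark-shell one-liner, on a local Seq of lines:
  // flatMap(_.split(",")) -> map((_, 1)) -> reduceByKey(_ + _) -> collect
  def wordCount(lines: Seq[String]): Seq[(String, Int)] = {
    val pairs = lines.flatMap(_.split(",").toSeq).map((_, 1)) // one (word, 1) pair per word
    val reduced = pairs.groupBy(_._1).map { case (word, ps) =>
      word -> ps.map(_._2).reduce(_ + _) // stands in for reduceByKey(_ + _)
    }
    reduced.toSeq.sortBy(_._1) // sorted only to make the output deterministic
  }
}
```

For the lines "hello,spark" and "hello,hadoop", this returns (hadoop,1), (hello,2), (spark,1). That matches the pairs the spark-shell version collects, though Spark does not guarantee their order.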

12. Roles in Spark

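- Master

  - Receives requests from submitted applications

  - Allocates resources (launches executors on the workers)

- Worker

  - The executors on a worker run the tasks

- Driver (the process launched by spark-submit)

  - Submits the Spark application to the master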

13. General Process of Submitting a Spark Application

If you find anything incorrect, or would like to share more about the topics above, feedback is welcome.

Translated from developpaper.com