Scraping 51job (前程无忧) job postings with Selenium + Scrapy

Goal:

Scrape the job postings that the 51job (前程无忧) site returns for the search keyword "python".

Analysis

URL of the first results page:

'https://search.51job.com/list/000000,000000,0000,00,9,99,Python,2,1.html'

URL for any other page:

'https://search.51job.com/list/000000,000000,0000,00,9,99,Python,2,page.html', where page is the page number
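Since only the last field of the path changes, the list-page URLs can be generated from a template. The following is a minimal sketch of that idea; the names LIST_URL and build_list_urls, and the optional max_pages cap, are assumptions made for illustration and are not part of the original spider.

```python
# Template copied from the URLs above; only the page number varies.
LIST_URL = "https://search.51job.com/list/000000,000000,0000,00,9,99,Python,2,{page}.html"


def build_list_urls(total_pages, max_pages=None):
    """Return the list-page URLs for pages 1..total_pages, optionally capped at max_pages."""
    last = total_pages if max_pages is None else min(total_pages, max_pages)
    return [LIST_URL.format(page=n) for n in range(1, last + 1)]


urls = build_list_urls(total_pages=3)
print(urls[0])   # first page
print(urls[-1])  # last page
```

Passing max_pages is a convenient way to limit a test run without touching the page count read from the site.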

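Problems

- Scraping the first page directly yields no job data: saving the response as an HTML file and opening it confirms that the job listings really are missing from it.

- The total number of pages changes over time, so the page count cannot be hard-coded.

- The list pages and the detail pages are served from different domains, so the allowed domains need attention.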
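The domain problem is easy to get wrong. To the best of my knowledge, Scrapy's offsite filtering accepts a request when its host equals an entry in allowed_domains or is a subdomain of one, so an entry with an extra 'www.' prefix matches nothing on search.51job.com. The sketch below imitates that rule with the standard library only; host_allowed is a hypothetical helper written for illustration, not a Scrapy API.

```python
from urllib.parse import urlparse


def host_allowed(url, allowed_domains):
    """Hypothetical helper: True if the URL's host equals, or is a subdomain of, an allowed domain."""
    host = urlparse(url).hostname or ""
    return any(host == d or host.endswith("." + d) for d in allowed_domains)


list_url = "https://search.51job.com/list/000000,000000,0000,00,9,99,Python,2,1.html"

print(host_allowed(list_url, ["www.search.51job.com"]))  # entry is longer than the real host
print(host_allowed(list_url, ["search.51job.com"]))      # exact host
print(host_allowed(list_url, ["51job.com"]))             # parent domain covers subdomains
```

Listing the parent domain once is the simplest choice when both the list and detail subdomains should be allowed.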

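Solution

1. Use Selenium to drive a real browser so that the job listings and the total page count can be read from the rendered page.

2. Once the page count is known, append the list-page URLs to start_urls dynamically.

3. Let Selenium load each list page in turn; on every visit, save the detail-page links of all jobs on that page into a list.

4. The detail pages do not need Selenium, so they can be fetched quickly.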
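Steps 1 and 2 depend on turning the pager text into an integer. The exact text of the pager element is not shown in this post, so the sample strings below are assumptions. As a sketch, taking the first run of digits with a regular expression is less fragile than relying on the position of a space; parse_page_count is a hypothetical helper name.

```python
import re


def parse_page_count(page_text):
    """Return the first integer found in the pager text, or None if there is none."""
    match = re.search(r"\d+", page_text)
    return int(match.group()) if match else None


# Sample strings are made up for illustration; the real pager text may differ.
for sample in ["共 725 页", "共725页", ""]:
    print(repr(sample), "->", parse_page_count(sample))
```

Returning None instead of raising lets the caller decide whether to stop the crawl or fall back to a single page.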

Implementation

    import scrapy
    from lxml import etree
    from selenium import webdriver
    from selenium.common.exceptions import TimeoutException
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    from items import My51JobItem


    class My51jobspiderSpider(scrapy.Spider):
        name = 'my51jobSpider'
        # List pages and detail pages live on different subdomains.
        # No 'www.' prefix: 'www.search.51job.com' would not match 'search.51job.com'.
        allowed_domains = ['search.51job.com', 'jobs.51job.com']
        # Start from the first page
        start_urls = ['https://search.51job.com/list/000000,000000,0000,00,9,99,Python,2,1.html']

        def __init__(self, *args, **kwargs):
            super().__init__(*args, **kwargs)
            # Selenium 3 style; newer Selenium 4 releases expect a Service object instead of executable_path
            self.driver = webdriver.Chrome(executable_path=r"D:\programs\chrome_driver\chromedriver.exe")

        def closed(self, reason):
            # Called by Scrapy when the spider finishes: shut the browser down
            self.driver.quit()

        def parse(self, response):
            self.driver.get(self.start_urls[0])
            # Get the total number of pages
            try:
                element = WebDriverWait(self.driver, 5).until(
                    EC.presence_of_element_located(
                        (By.XPATH, "//div[@class='j_page']//div[@class='p_in']/span")))
            except TimeoutException:
                print("Failed to get the page count")
                return
            page_text = element.get_attribute('innerText')
            print("Page count text:", page_text)
            # The number is expected to be the second space-separated token
            page_num = int(page_text.split(' ')[1])
            # Rebuild the URL list: append pages 2..N, capped at the first 50 pages
            for i in range(1, min(page_num, 50)):
                url = 'https://search.51job.com/list/000000,000000,0000,00,9,99,Python,2,{}.html'.format(i + 1)
                print(url)
                self.start_urls.append(url)
            # Collect the detail-page links of all jobs
            page_list = []
            for url in self.start_urls:
                print("Parsing list page:", url)
                self.driver.get(url)
                # Wait for the job list to appear
                try:
                    element = WebDriverWait(self.driver, 5).until(
                        EC.presence_of_element_located(
                            (By.XPATH, "//div[@class='j_joblist']")))
                except TimeoutException:
                    print("Failed to load the job list:", url)
                    continue
                # Save the detail-page link of every job on this page
                for link in element.find_elements(By.XPATH, ".//div[@class='e']/a"):
                    page_list.append(link.get_attribute("href"))
            # Request the detail pages through Scrapy (no Selenium needed)
            for url in page_list:
                print(url)
                yield scrapy.Request(url=url, callback=self.detail, dont_filter=True)
            print("All detail requests have been sent")

        def detail(self, response):
            # Parse one job-detail page
            print("Parsing {} ...".format(response.url))
            html = etree.HTML(response.text)
            cn = html.xpath('//div[@class="tHeader tHjob"]//div[@class="cn"]')[0]
            # Job title
            title = cn.xpath('./h1/@title')
            if title:
                title = title[0]
            # Salary
            salary = cn.xpath('./strong/text()')
            if salary:
                salary = salary[0]
            # Company name
            cname = cn.xpath('.//p[@class="cname"]//a[1]/@title')
            if cname:
                cname = cname[0]
            # Company page URL
            caddr = cn.xpath('.//p[@class="cname"]//a[1]/@href')
            if caddr:
                caddr = caddr[0]
            # Summary line of the posting
            msg = cn.xpath('.//p[@class="msg ltype"]/@title')
            if msg:
                msg = msg[0]
            # Job description (list of text nodes)
            detail = html.xpath('//div[@class="tCompany_main"]//div[@class="bmsg job_msg inbox"]//text()')
            # Company information (list of text nodes)
            company = html.xpath('//div[@class="tCompany_sidebar"]//div[@class="com_tag"]//text()')
            # Build the item
            item = My51JobItem(title=title, salary=salary, cname=cname, caddr=caddr,
                               msg=msg, detail=detail, company=company)
            print("Parsing finished")
            yield item
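The detail callback repeats the same pattern for every field: take element 0 if the XPath result list is non-empty. One possible tidy-up, shown here only as a sketch, is a small helper that also returns a default, so that a missing field becomes None rather than an empty list. The name first is hypothetical, and plain lists stand in for XPath results.

```python
def first(values, default=None):
    """Return the first element of an XPath-style result list, or default if it is empty."""
    return values[0] if values else default


# Plain lists stand in for what cn.xpath(...) would return.
print(first(["Python Developer"]))
print(first([]))
print(first([], default=""))
```

With such a helper each field would become a single line, for example title = first(cn.xpath('./h1/@title')).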

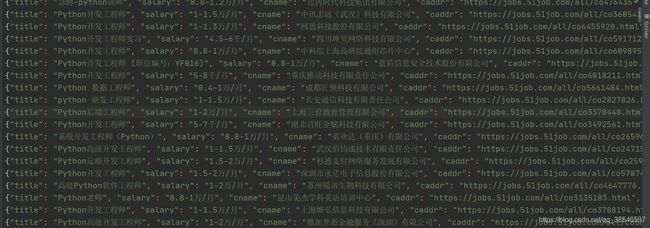

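Summary

1. Most of the time is spent opening the list pages: parsing each page takes about 1 s, and there are more than 700 pages in total.

2. Only the first 50 pages were scraped here; that took about 1 minute and saved more than 2,500 job postings.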