Puppo,柯基犬:使用Unity ML-Agents工具包的可爱重载

Building a game is a creative process that involves many challenging steps including defining the game concept and logic, building assets and animations, specifying NPC behaviors, tuning difficulty and balance and, finally, testing the game with real players before launch. We believe machine learning can be used across the entire creative process and in today’s blog post we will focus on one of these challenges: specifying the behavior of an NPC.

制作游戏是一个创造性的过程,涉及许多挑战性步骤,包括定义游戏概念和逻辑,构建资产和动画,指定NPC行为,调整难度和平衡,最后在发布前与真实玩家测试游戏。 我们相信机器学习可以在整个创作过程中使用,在今天的博客文章中,我们将重点关注以下挑战之一:指定NPC的行为。

Traditionally, the behavior of an NPC is hard-coded using scripting and behavior trees. These (typically long) lists of rules process information about the surroundings of the NPC (called observations) to dictate its next action. These rules can be time-consuming to write and maintain as the game evolves. Reinforcement learning provides a promising, alternative framework for defining the behavior of an NPC. More specifically, instead of defining the observation to action mapping by hand, you can simply train your NPC by providing it with rewards when it achieves the desired goal.

传统上,使用脚本和行为树对NPC的行为进行硬编码。 这些(通常很长)的规则列表处理有关NPC周围环境的信息(称为观察值),以指示其下一步行动。 随着游戏的发展,编写和维护这些规则可能会非常耗时。 强化学习为定义NPC的行为提供了一个有希望的替代框架。 更具体地说,您无需手动定义观察到操作的映射,而可以通过在NPC实现预期目标时为其提供奖励来简单地训练它。

好小狗,坏小狗的方法 (The good puppy, bad puppy method)

Training an NPC using reinforcement learning is quite similar to how we train a puppy to play fetch. We present the puppy with a treat and then throw the stick. At first, the puppy wanders around not sure what to do, until it eventually picks up the stick and brings it back, promptly getting a treat. After a few sessions, the puppy learns that retrieving a stick is the best way to get a treat and continues to do so.

使用强化学习训练NPC与我们训练小狗玩取情的方法非常相似。 我们给小狗吃点心,然后扔棍子。 刚开始时,小狗四处游荡,不确定该怎么做,直到它最终捡起棍子并把它带回来,并Swift得到治疗。 经过几次训练后,这只小狗得知,取回棍子是获得治疗的最佳方法,并将继续这样做。

That is precisely how reinforcement learning works in training the behavior of an NPC. We provide our NPC with a reward whenever it completes a task correctly. Through multiple simulations of the game (the equivalent of many fetch sessions), the NPC builds an internal model of what action it needs to perform at each instance to maximize its reward, which results in the ideal, desired behavior. Thus, instead of creating and maintaining low-level actions for each observation of the NPC, we only need to provide a high-level reward when a task is completed correctly and the NPC learns the appropriate low-level behavior.

这正是强化学习在训练NPC行为方面的工作方式。 每当NPC正确完成任务时,我们都会给予奖励。 通过对游戏进行多次模拟(相当于许多获取会话),NPC建立了一个内部模型,该模型需要在每个实例上执行什么动作以最大化其奖励,从而产生理想的期望行为。 因此,我们无需为每次对NPC的观察都创建和维护低级动作,而只需要在正确完成任务并且NPC学习适当的低级行为时提供高级奖励即可。

小狗柯基犬 (Puppo, The Corgi)

演示地址

To showcase the effectiveness of this technique, we built a demo game, “Puppo (read as ‘Pup-o’), The Corgi”, and presented it at Unite Berlin. It is a mobile game where you play fetch with a cute little corgi. Throw a stick to Puppo by swiping on the screen and Puppo brings it back. While the higher-level game logic uses traditional scripting, the corgi learns to walk, run, jump and fetch the stick using reinforcement learning. Instead of using animation or scripted behaviors, the movements of the corgi are trained solely with reinforcement learning. Not only does it look super cute, but the corgi’s motion is driven by the physics engine exclusively. This means for instance that the motion of the corgi can be affected by surrounding RigidBodies.

为了展示该技术的有效性,我们制作了一个演示游戏“ Puppo(读作'Pup-o'),Corgi” ,并在Unite Berlin上展示了该游戏。 这是一款手机游戏,您可以在其中与可爱的小柯基犬玩取球。 在屏幕上滑动即可向Puppo投掷棍子,然后Puppo将其带回。 较高级别的游戏逻辑使用传统脚本编写时,柯基犬使用强化学习来学习走路,奔跑,跳跃和拿起棍子。 代替使用动画或脚本行为,只通过强化学习来训练柯基犬的运动。 它不仅看起来超级可爱,而且柯基犬的动作完全由物理引擎驱动。 例如,这意味着柯基犬的运动会受到周围刚体的影响。

Puppo became so popular at Unite Berlin that many developers asked us how we made it. That’s why we decided to write this blog post and release the project for you to try it out yourself.

Puppo在Unite Berlin变得如此受欢迎,以至于许多开发者问我们如何做到这一点。 这就是为什么我们决定撰写此博客文章并发布该项目供您自己尝试的原因。

Download the Unity Project

下载Unity项目

To get started, we will cover the requirements and preliminary work that you need to do to train the corgi. Then, we will share our experience in training it. Finally, we will go over the steps we took to create a game with Puppo as its hero.

首先,我们将介绍您训练柯基犬所需的要求和初步工作。 然后,我们将分享我们的培训经验。 最后,我们将介绍制作以Puppo为英雄的游戏的步骤。

前期工作 (Preliminary work)

Before we get into the details, let’s define a few important notions in reinforcement learning. The goal of reinforcement learning is to learn a policy for an agent. An agent is an entity that interacts with its environment: Every learning step, the agent collects observations about the state of the environment, performs an action, and gets a reward for that action. The policy defines how an agent acts based on the observations it perceives. We can develop a policy by rewarding the agent when his behavior is appropriate.

在深入探讨细节之前,让我们先定义一些强化学习中的重要概念 。 强化学习的目的是为代理商学习策略 。 代理是与环境互动的实体:在每个学习步骤中 ,代理都会收集有关环境状态的观察结果 ,执行一项操作 ,并为该操作获得奖励 。 该策略根据代理所感知的观察来定义其行为方式。 当代理人的行为适当时,我们可以通过奖励其制定政策。

In our case, the environment is the game scene and the agent is Puppo. Puppo needs to learn a policy so it can play fetch with us. Similar to how we train a real dog with treats to fetch sticks, we can train Puppo by rewarding it appropriately.

在我们的案例中,环境是游戏场景,代理商是Puppo。 Puppo需要学习一项政策,以便可以与我们合作。 类似于我们训练真正的狗以获取棍棒的方式,我们可以通过适当奖励它来训练Puppo。

We used a ragdoll to create Puppo and its legs are driven by joint motors. Therefore, for Puppo to learn how to get to the target, it must first learn how to rotate the joint motors so that it can move.

我们使用布娃娃创建了Puppo,它的腿由关节电机驱动。 因此,为了让Puppo学习如何到达目标,它必须首先学习如何旋转关节电机以使其运动。

A real dog uses vision and other senses to orient itself and to decide where to go. Puppo follows the same methodology. It collects observations about the scene such as proximity to the target, the relative position between itself and the target and the orientation of its own legs, so it can decide what action to take next. In Puppo’s case, the action describes how to rotate the joint motors in order to move.

一只真正的狗会使用视觉和其他感官来定向自己并决定要去哪里。 Puppo遵循相同的方法。 它收集有关场景的观察信息,例如与目标的接近程度,自身与目标之间的相对位置以及自己腿部的方向,因此可以决定下一步要采取的动作。 在Puppo的情况下,该动作描述了如何旋转关节电动机以使其运动。

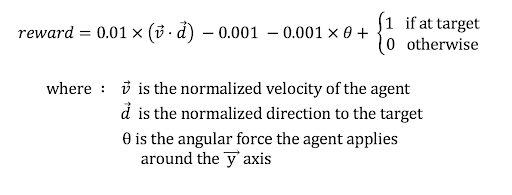

After each action Puppo performs, we give a reward to the agent. The reward is comprised of:

在Puppo执行每个动作后,我们会向代理商给予奖励。 奖励包括:

Orientation Bonus: We reward Puppo when it is moving towards the target. To do so, we use the Vector3.Dot() method.

定向奖励:当Puppo向目标前进时,我们会对其进行奖励。 为此,我们使用Vector3.Dot()方法。

Time Penalty: We give a fixed penalty (negative reward) to Puppo at every action. This way, Puppo will learn to get the stick as fast as possible to avoid a heavy time penalty.

时间处罚:我们会在每次行动中对Puppo给予固定的罚款(负奖励)。 这样,Puppo将学会尽可能快地获得控制杆,以避免繁重的时间损失。

Rotation Penalty: We penalize Puppo for trying to spin too much. A real dog would be dizzy if it spins too much. To make it look real, we penalize Puppo when it turns around too fast.

轮换处罚:我们对Puppo尝试旋转过多表示惩罚。 如果真狗旋转太多,就会头晕。 为了使它看起来真实,我们会在Puppo旋转得太快时对其进行惩罚。

Getting to the target Reward: Most importantly, we reward Puppo for getting to the target.

达到目标奖励:最重要的是,我们奖励Puppo达到目标。

火车Puppo (Train Puppo)

Now Puppo is ready to learn. It took us two hours on a laptop for the dog to learn to run towards the target efficiently. During the training process, we noticed one interesting behavior. The dog learned to walk rather quickly in about 1 min. Then, as the training continued, the dog learned to run. Soon after, it began to flip over when it tried to make a sudden turn while running. Fortunately, the dog learned how to get back up just as a real dog will do. This clumsy behavior is so cute that you could stop the training at this point and use it directly in the game.

现在,Puppo准备学习。 狗用笔记本电脑花了两个小时才能让狗学会有效地向目标奔跑。 在培训过程中,我们注意到了一种有趣的行为。 这只狗在大约1分钟内学会了很快走路。 然后,随着训练的继续,狗学会了奔跑。 不久之后,当它试图在奔跑时突然转弯时,它开始翻转。 幸运的是,这只狗学会了如何像真正的狗一样站起来。 这种笨拙的行为非常可爱,您可以在此时停止训练并直接在游戏中使用它。

If you are interested in training Puppo yourself, you can follow the instruction in the project. It includes detail steps on how to set up the training and what parameters you should choose. For a more detailed tutorial on how to train agents, please visit the ML-Agents documentation site.

如果您想自己培训Puppo,可以按照项目中的说明进行操作。 它包括有关如何设置培训以及应该选择哪些参数的详细步骤。 有关如何培训代理的更详细的教程,请访问ML-Agents文档站点 。

用Puppo创建游戏 (Create a game with Puppo)

To create “Puppo, The Corgi” game, we need to define the game logic that lets a player interact with the trained model. Because Puppo has learned to run to a target, we need to implement the logic that changes the target for Puppo within the game.

要创建“ Puppo,科基犬”游戏,我们需要定义游戏逻辑,以允许玩家与训练有素的模型进行交互。 由于Puppo已经学会了奔向目标,因此我们需要在游戏中实现更改Puppo目标的逻辑。

In game mode, we set the target to be the stick right after the player has thrown it. When Puppo arrives at the stick, we change Puppo’s target to the player’s position in the scene so that Puppo returns the stick to the player. We do this because it’s much easier to train Puppo to move to a target while defining the game flow logic with a script. It’s our belief that Machine Learning and traditional game development methods can be combined to get the best of both approaches. “Puppo, The Corgi” project includes a pre-trained model for the corgi that you can use immediately and even deploy on mobile devices.

在游戏模式下,我们将目标设置为在玩家扔出棍子后立即将其作为棍子。 当Puppo到达摇杆时,我们会将Puppo的目标更改为场景中玩家的位置,以便Puppo将摇杆返回给玩家。 我们这样做是因为训练Puppo使其更容易移动到目标,同时用脚本定义游戏流程逻辑。 我们相信,可以将机器学习和传统游戏开发方法结合起来,以充分利用这两种方法。 “ Puppo,柯基犬”项目包含针对柯基犬的预训练模型,您可以立即使用该模型,甚至可以将其部署在移动设备上。

下一步 (Next Steps)

We hope this blog post has shed some light on what is achievable with the ML-Agents Toolkit for game development.

我们希望这篇博文能够阐明ML-Agents工具包可用于游戏开发的功能。

Want to dive deep into the code of this project? We released the project and you can download it here. To learn more about how to use the ML-Agents Toolkit, you can find our official documentation and a step-by-step beginner’s guide here. If you are interested in getting a deeper understanding of the math, algorithms, and theories behind reinforcement learning, there is a Reinforcement Learning Nanodegree we offer in partnership with Udacity.

是否想深入研究该项目的代码? 我们发布了该项目,您可以在此处下载。 要了解有关如何使用ML-Agents工具包的更多信息,您可以在此处找到我们的官方文档和分步入门指南 。 如果您想对加深学习背后的数学,算法和理论有更深入的了解,我们将与Udacity合作提供“ 加深学习纳米学位” 。

We would love to hear about your experience using the ML-Agents Toolkit for your games. Feel free to reach out to us on our GitHub issues page or email us directly.

我们很想听听您使用ML-Agents工具包进行游戏的经验 。 请随时在我们的GitHub问题页面上与我们联系,或直接给我们发送电子邮件 。

Happy creating!

创建愉快!

翻译自: https://blogs.unity3d.com/2018/10/02/puppo-the-corgi-cuteness-overload-with-the-unity-ml-agents-toolkit/