爬取智联招聘上24座热门城市中Java招聘信息

一、确定URL及其传递的参数

获取上海中Java的招聘信息url:

通过对比得知,url中传递了三个参数,jl代表城市的编号,kw代表职业,p代表当前在招聘页面的第几页

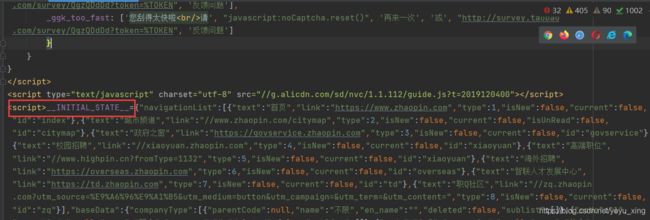

二、判断数据是否动态显示

执行以下代码后,然后在开发工具中打开浏览器查看页面

import requests

from fake_useragent import UserAgent

headers = {

'User-Agent': UserAgent().random

}

url = 'https://sou.zhaopin.com/?jl=530&kw=Java%E5%BC%80%E5%8F%91&p=1'

res = requests.get(url=url, headers=headers)

res.encoding = res.apparent_encoding

text = res.text

with open('zl_src_code.html', 'w', encoding='utf-8') as f:

f.write(text)

三、使用cookie或selenium登录爬取

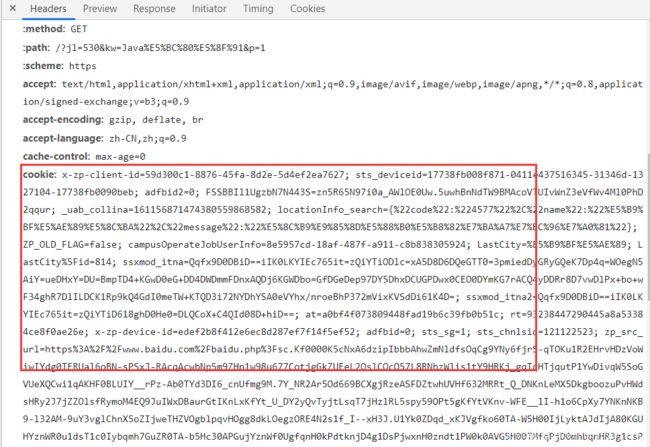

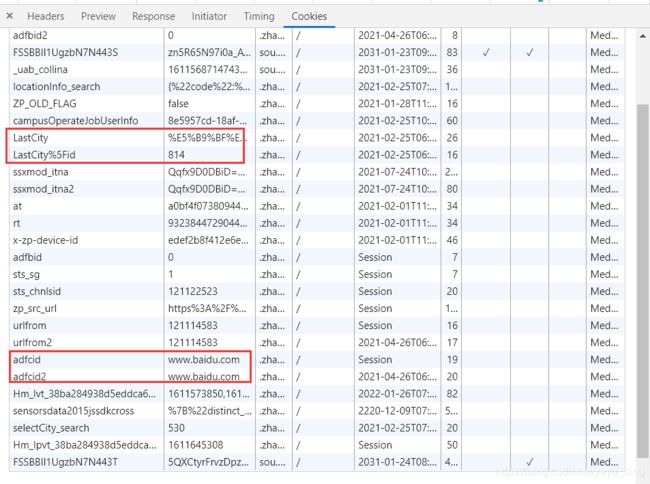

在抓包工具中获取cookie

观察得知部分参数可以剔除:

将所有的 cooklie参数粘贴进文件中,并删除以上说明的无用的cookie参数:

![]()

执行以下代码生成cookie字典(因为之后要在scrapy项目中设置cookie,因此cookie文件为绝对路径)

def get_cookie():

with open(r'E:\Code\Python\2021\PythonDemo\zl\zl\zl_cookie.txt', 'r', encoding='utf-8') as f:

cookie_str = f.read()

cookies = dict()

for item in cookie_str.split('; '):

cookies[item.split('=')[0]] = item.split('=')[1]

return cookies

验证cookie登录是否成功,执行以下代码

import requests

from fake_useragent import UserAgent

headers = {

'User-Agent': UserAgent().random

}

def get_cookie():

with open(r'E:\Code\Python\2021\PythonDemo\zl\zl\zl_cookie.txt', 'r', encoding='utf-8') as f:

cookie_str = f.read()

cookies = dict()

for item in cookie_str.split('; '):

cookies[item.split('=')[0]] = item.split('=')[1]

return cookies

url = 'https://sou.zhaopin.com/?jl=530&kw=Java%E5%BC%80%E5%8F%91&p=1'

res = requests.get(url=url, headers=headers, cookies=get_cookie())

res.encoding = res.apparent_encoding

text = res.text

with open('zl_src_code.html', 'w', encoding='utf-8') as f:

f.write(text)

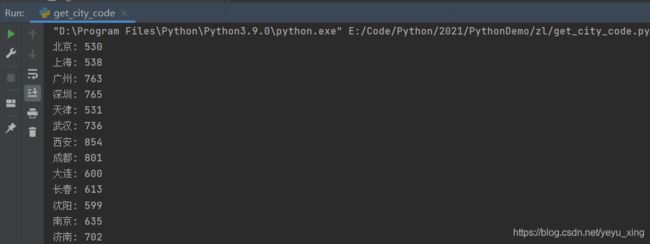

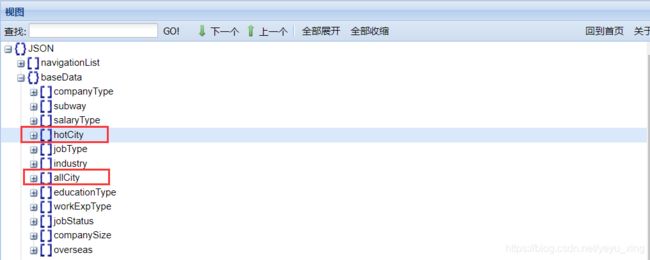

四、利用cookie保持登录状态后,并获取24座热门城市及其编号

通过观察之前爬取的源码可知,数据由js变量动态接收然后显示在网页中,且数据格式为json形式,因此需要解析json

通过json在线工具查看json结构:

编写Python代码如下:

import requests

from fake_useragent import UserAgent

from bs4 import BeautifulSoup

import json

def get_cookie():

with open(r'E:\Code\Python\2021\PythonDemo\zl\zl\zl_cookie.txt', 'r', encoding='utf-8') as f:

cookie_str = f.read()

cookies = dict()

for item in cookie_str.split('; '):

cookies[item.split('=')[0]] = item.split('=')[1]

return cookies

headers = {

'User-Agent': UserAgent().random

} # 设置随机请求头

url = 'https://sou.zhaopin.com/?jl=530&kw=Java%E5%BC%80%E5%8F%91&p=1' # url中的中文参数已被url编码

res = requests.get(url=url, headers=headers, cookies=get_cookie())

res.encoding = res.apparent_encoding # 自动解析网页编码

text = res.text

soup = BeautifulSoup(text, 'html.parser')

jsons_str = soup.select('body > script:nth-child(10)')[0].string[18:] # 这里使用BeautifulSoup获取热门城市的json数据。xpath试了,获取不到

for city in json.loads(jsons_str)['baseData']['hotCity']:

print('{}: {}'.format(city['name'], city['code']))

from redis import Redis

class RedisConnection:

host = '127.0.0.1'

port = 6379

decode_responses = True

# password = '5180'

@classmethod

def getConnection(cls):

conn = None

try:

conn = Redis(

host=cls.host,

port=cls.port,

decode_responses=cls.decode_responses,

# password=cls.password

)

except Exception as e:

print(e)

return conn

@classmethod

def close(cls, conn):

if not conn:

conn.close()

将代码改进并将城市名称和城市号保存进redis中:

import requests

from fake_useragent import UserAgent

from bs4 import BeautifulSoup

import json

from connection import RedisConnection

def get_cookie():

with open(r'E:\Code\Python\2021\PythonDemo\zl\zl\zl_cookie.txt', 'r', encoding='utf-8') as f:

cookie_str = f.read()

cookies = dict()

for item in cookie_str.split('; '):

cookies[item.split('=')[0]] = item.split('=')[1]

return cookies

headers = {

'User-Agent': UserAgent().random

} # 设置随机请求头

url = 'https://sou.zhaopin.com/?jl=530&kw=Java%E5%BC%80%E5%8F%91&p=1' # url中的中文参数已被url编码

res = requests.get(url=url, headers=headers, cookies=get_cookie())

res.encoding = res.apparent_encoding # 自动解析网页编码

text = res.text

soup = BeautifulSoup(text, 'html.parser')

jsons_str = soup.select('body > script:nth-child(10)')[0].string[18:] # 这里使用BeautifulSoup获取热门城市的json数据。xpath试了,获取不到

conn = RedisConnection.getConnection()

for city in json.loads(jsons_str)['baseData']['hotCity']:

conn.hset('zl_hotCity', city['name'], city['code'])

print('{}: {}'.format(city['name'], city['code']))

RedisConnection.close(conn)

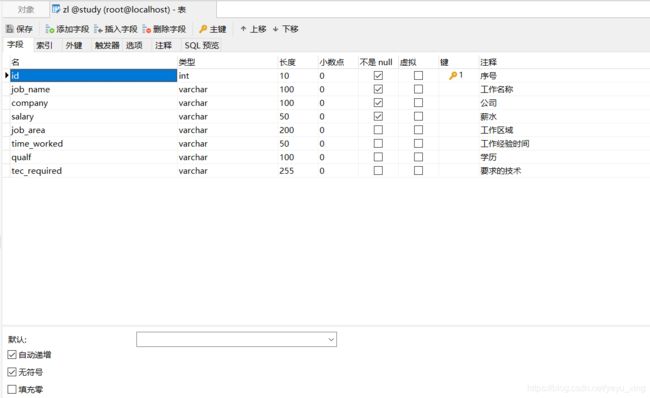

五、编写scrapy

(一):准备工作

执行scrapy startproject zl创建scrapy项目

执行scrapy genspider zlCrawler www.xxx.com创建爬虫文件

创建如下所示的study数据库和mysql表:

(二)、更改项目配置文件settings.py:

# Scrapy settings for zl project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://docs.scrapy.org/en/latest/topics/settings.html

# https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

from fake_useragent import UserAgent

BOT_NAME = 'zl'

SPIDER_MODULES = ['zl.spiders']

NEWSPIDER_MODULE = 'zl.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

USER_AGENT = UserAgent().random

# Obey robots.txt rules

ROBOTSTXT_OBEY = False

LOG_LEVEL = 'ERROR'

# Configure item pipelines

# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

'zl.pipelines.ZlPipeline': 300,

}

(三)、更改items.py文件,确定爬取字段

# Define here the models for your scraped items

#

# See documentation in:

# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy

class ZlItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

job_name = scrapy.Field() # 工作名称

company = scrapy.Field() # 公司

salary = scrapy.Field() # 薪水

job_area = scrapy.Field() # 工作区域

time_worked = scrapy.Field() # 工作时间经验

qualf = scrapy.Field() # 学历

tec_required = scrapy.Field() # 要求的技术

(四)、创建dbutil包和connection.py文件

connection.py文件内容如下:

from pymysql import connect

from redis import Redis

class MysqlConnection:

host = '127.0.0.1'

port = 3306

user = 'root'

password = 'qwe12333'

db = 'study'

charset = 'utf8'

@classmethod

def getConnection(cls):

conn = None

try:

conn = connect(

host=cls.host,

port=cls.port,

user=cls.user,

password=cls.password,

db=cls.db,

charset=cls.charset

)

except Exception as e:

print(e)

return conn

@classmethod

def close(cls, conn):

if conn:

conn.close()

class RedisConnection:

host = '127.0.0.1'

port = 6379

decode_responses = True

# password = '5180'

@classmethod

def getConnection(cls):

conn = None

try:

conn = Redis(

host=cls.host,

port=cls.port,

decode_responses=cls.decode_responses,

# password=cls.password

)

except Exception as e:

print(e)

return conn

@classmethod

def close(cls, conn):

if conn:

conn.close()

(五)、更改zlCrawler.py文件,编写爬虫代码

import scrapy

from zl.items import ZlItem

from zl.dbutil.connection import RedisConnection

def get_cookie():

with open(r'E:\Code\Python\2021\PythonDemo\zl\zl\zl_cookie.txt', 'r', encoding='utf-8') as f:

cookie_str = f.read() # 读取cookie文件,注意为绝对路径

cookies = dict()

for item in cookie_str.split('; '):

cookies[item.split('=')[0]] = item.split('=')[1] # 生成cookie字典

return cookies

class ZlcrawlerSpider(scrapy.Spider):

name = 'zlCrawler'

# allowed_domains = ['www.xxx.com']

start_urls = []

def __init__(self, **kwargs):

super().__init__(**kwargs)

self.url = 'https://sou.zhaopin.com/?jl={}&kw=Java%E5%BC%80%E5%8F%91&p={}'

self.cookies = get_cookie()

self.item = ZlItem()

self.redis_conn = RedisConnection.getConnection() # 获取redis连接

self.zl_hotCity = self.redis_conn.hgetall('zl_hotCity') # 获取指定hash的全部值

RedisConnection.close(self.redis_conn) # 释放连接

def start_requests(self): # 该方法主要是传递url和封装cookie

for city_name in self.zl_hotCity:

city_code = self.zl_hotCity[city_name]

yield scrapy.Request(self.url.format(city_code, 1), cookies=self.cookies,

meta={

'city_code': city_code, 'page_num': 1})

def parse(self, response):

next_page = response.xpath('//div[@class="soupager"]/button[2]/@class').extract_first()

if next_page is not None: # 可能会因为访问过多导致ip被禁,状态码是200,但是没数据,因此提前判断

for row in response.xpath('//div[@class="joblist-box__item clearfix"]'):

self.item['job_name'] = row.xpath(

'.//span[@class="iteminfo__line1__jobname__name"]/text()').extract_first()

self.item['company'] = row.xpath(

'.//span[@class="iteminfo__line1__compname__name"]/text()').extract_first()

self.item['salary'] = row.xpath(

'.//p[@class="iteminfo__line2__jobdesc__salary"]/text()').extract_first().strip()

self.item['job_area'] = row.xpath(

'.//ul[@class="iteminfo__line2__jobdesc__demand"]/li[1]/text()').extract_first()

self.item['time_worked'] = row.xpath(

'.//ul[@class="iteminfo__line2__jobdesc__demand"]/li[2]/text()').extract_first()

self.item['qualf'] = row.xpath(

'.//ul[@class="iteminfo__line2__jobdesc__demand"]/li[3]/text()').extract_first()

self.item['tec_required'] = ' '.join(

row.xpath('.//div[@class="iteminfo__line3__welfare__item"]/text()').extract())

yield self.item

city_code = response.meta['city_code']

page_num = response.meta['page_num']

if 'disable' not in next_page:

yield scrapy.Request(url=self.url.format(city_code, page_num + 1),

meta={

'city_code': city_code, 'page_num': page_num + 1},

callback=self.parse, cookies=self.cookies)

可能会因为访问过多导致ip被禁,状态码是200,但是没数据,等待一个小时候才可以正常访问

(六)、更改pipelines.py文件,用于保存数据进mysql

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

# useful for handling different item types with a single interface

from itemadapter import ItemAdapter

from zl.dbutil.connection import MysqlConnection

from time import time

class ZlPipeline:

def __init__(self):

self.start_time = time()

self.conn = MysqlConnection.getConnection()

self.cursor = self.conn.cursor()

self.sql = 'insert into zl values(null, %s, %s, %s, %s, %s, %s, %s);'

self.count = 0

def process_item(self, item, spider):

try:

self.count += self.cursor.execute(self.sql,

(item['job_name'], item['company'], item['salary'], item['job_area'],

item['time_worked'],

item['qualf'], item['tec_required']))

except Exception as e:

self.conn.rollback()

if self.count % 10 == 0:

self.conn.commit()

print('{}: {}--{}'.format(self.count, item['company'], item['job_name']))

def close_spider(self, spider):

if self.cursor:

self.cursor.close()

self.conn.commit()

MysqlConnection.close(self.conn)

print('耗时:{}秒'.format(time() - self.start_time))

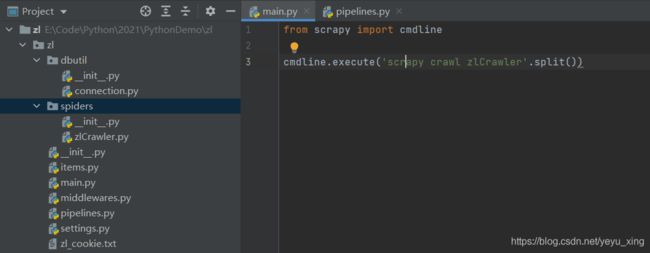

(七)、创建main.py文件,用于启动scrapy

from scrapy import cmdline

cmdline.execute('scrapy crawl zlCrawler'.split())