RNN (Recurrent Neural Networks)

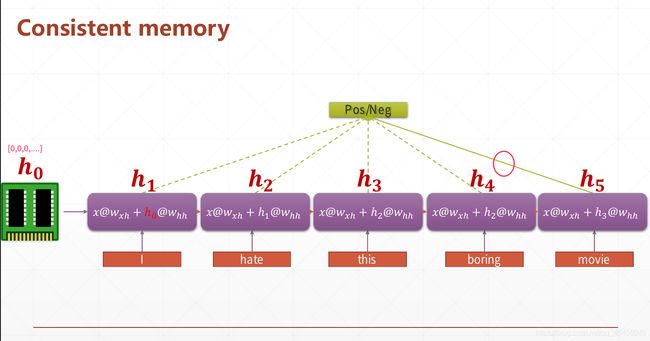

In the model above, every word gets its own linear layer. As the number of words grows, the number of parameters becomes very large.
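A quick count makes the growth concrete. Suppose, purely for illustration, that each word is a 100-dim vector mapped to 64 features (the sizes used in the transcripts further down). One independent linear layer per word costs `100*64 + 64` weights per position, so the total scales with the number of words. A single recurrent cell reused at every step (W_xh, W_hh, b) has a fixed size. The function names are hypothetical.

```python
# Hypothetical sizes, borrowed from the examples later in these notes.
INPUT_DIM = 100  # feature size of each word vector
UNITS = 64       # output / hidden size


def per_word_linear_params(num_words: int) -> int:
    """One independent Dense(INPUT_DIM -> UNITS) layer per word position."""
    return num_words * (INPUT_DIM * UNITS + UNITS)


def shared_rnn_cell_params() -> int:
    """One cell reused at every step: W_xh, W_hh and b, independent of length."""
    return INPUT_DIM * UNITS + UNITS * UNITS + UNITS


for n in (10, 80, 1000):
    print(f"{n:>5} words: per-word layers = {per_word_linear_params(n):>9,}, "
          f"shared cell = {shared_rnn_cell_params():,}")
```

For 80 words this gives 517,120 weights for the per-word layers versus 10,560 for the shared cell, and only the first number keeps growing with sentence length.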

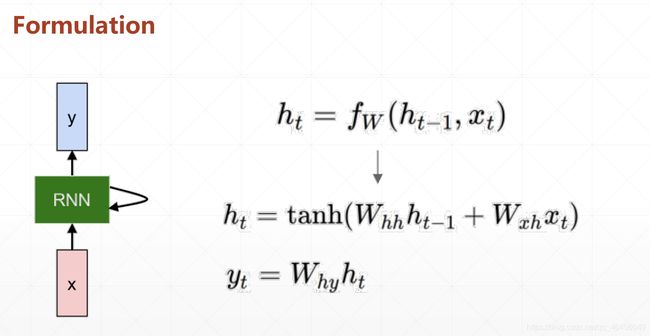

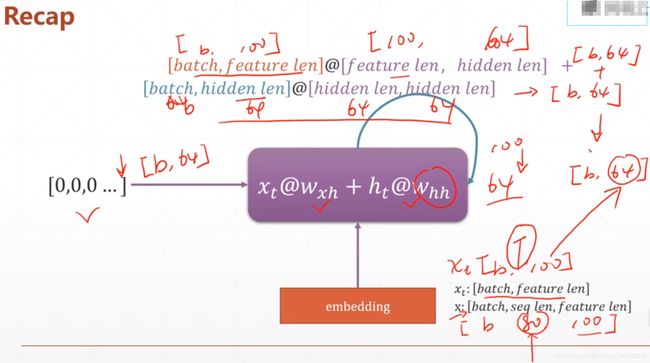

Mathematical form

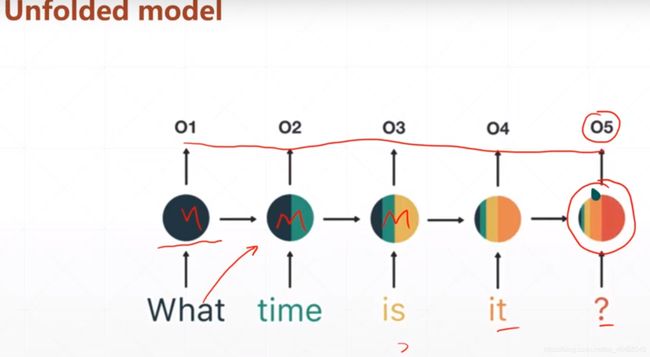
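For reference, this is the recurrence that Keras' `SimpleRNNCell` computes with its default `tanh` activation; the notation in the figure above may differ slightly. Inputs are treated as row vectors, which matches the `kernel` shape `(input_dim, units)` printed below, and for this cell the output at each step is the hidden state itself.

```latex
\begin{aligned}
h_t &= \tanh\left(x_t W_{xh} + h_{t-1} W_{hh} + b\right), \qquad h_0 = 0,\\
\mathrm{out}_t &= h_t,
\end{aligned}
\qquad
x_t \in \mathbb{R}^{1 \times d},\;
W_{xh} \in \mathbb{R}^{d \times u},\;
W_{hh} \in \mathbb{R}^{u \times u},\;
b \in \mathbb{R}^{u}
```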

Unrolling along the time dimension

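# Assumed setup from earlier cells (not shown): import tensorflow as tf; from tensorflow.keras import layers, Sequential

In [4]: cell = layers.SimpleRNNCell(3)  # a cell performs the forward computation for one time step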

In [5]: cell.build(input_shape=(None, 4))

In [6]: cell.trainable_variables

Out[6]:

[<tf.Variable 'kernel:0' shape=(4, 3) dtype=float32, numpy=
array([[-0.62658715, 0.2633381 , 0.52798474],
       [-0.61995786, 0.06983739, 0.5513731 ],
       [ 0.38611555, -0.4026158 , 0.03712767],
       [ 0.30363703, -0.17313945, -0.49760813]], dtype=float32)>,
 <tf.Variable 'recurrent_kernel:0' shape=(3, 3) dtype=float32, numpy=
array([[-0.7433469 , 0.27049097, -0.611776 ],
       [-0.3911615 , -0.9176911 , 0.0695377 ],
       [ 0.54261214, -0.29099387, -0.7879687 ]], dtype=float32)>,
 <tf.Variable 'bias:0' shape=(3,) dtype=float32, numpy=array([0., 0., 0.], dtype=float32)>]

In [7]: # 'kernel' is Wxh, 'recurrent_kernel' is Whh, 'bias' is b
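To make the roles of the three variables concrete, here is a NumPy sketch of one cell step with the same shapes (input dim 4, 3 units). The weights are random stand-ins, not the values printed above, and `simple_rnn_step` is a hypothetical name. It assumes the default `tanh` activation.

```python
import numpy as np

rng = np.random.default_rng(0)

input_dim, units, batch = 4, 3, 2
kernel = rng.standard_normal((input_dim, units))        # W_xh: (4, 3)
recurrent_kernel = rng.standard_normal((units, units))  # W_hh: (3, 3)
bias = np.zeros(units)                                  # b: (3,), zero-initialised like above


def simple_rnn_step(x_t, h_prev):
    """One time step: h_t = tanh(x_t @ W_xh + h_prev @ W_hh + b)."""
    return np.tanh(x_t @ kernel + h_prev @ recurrent_kernel + bias)


x_t = rng.standard_normal((batch, input_dim))  # one time step of input: [b, 4]
h0 = np.zeros((batch, units))                  # initial hidden state: [b, 3]
h1 = simple_rnn_step(x_t, h0)
print(h1.shape)  # (2, 3)
```

With a zero initial state and zero bias, the first step reduces to `tanh(x_t @ kernel)`. The recurrent weights only start to matter from the second step on.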

Single layer RNN Cell

In [8]: x = tf.random.normal([4, 80, 100])  # [batch, time steps, feature dim]

In [9]: xt0 = x[:,0,:]  # first time step, shape [4, 100]

In [10]: cell = tf.keras.layers.SimpleRNNCell(64)

In [11]: out, ht1 = cell(xt0, [tf.zeros([4, 64])])

In [12]: out.shape, ht1[0].shape # ht1 = [out]

Out[12]: (TensorShape([4, 64]), TensorShape([4, 64]))

In [13]: id(out), id(ht1[0])

Out[13]: (140385529293816, 140385529293816)
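The identical `id`s show that for `SimpleRNNCell` the output *is* the new hidden state: when the state is passed as a list, as here, the cell returns `(output, [output])`. Below is a NumPy sketch of that return convention. `SimpleRNNCellSketch` is a hypothetical stand-in, not the Keras class, and its weights are random.

```python
import numpy as np


class SimpleRNNCellSketch:
    """Hypothetical NumPy stand-in that mimics SimpleRNNCell's (output, states) call."""

    def __init__(self, input_dim, units, seed=0):
        rng = np.random.default_rng(seed)
        self.kernel = rng.standard_normal((input_dim, units)) * 0.1       # W_xh
        self.recurrent_kernel = rng.standard_normal((units, units)) * 0.1 # W_hh
        self.bias = np.zeros(units)                                       # b

    def __call__(self, x_t, states):
        h_prev = states[0]
        h = np.tanh(x_t @ self.kernel + h_prev @ self.recurrent_kernel + self.bias)
        # Output and new state are the very same array, like id(out) == id(ht1[0]).
        return h, [h]


cell = SimpleRNNCellSketch(input_dim=100, units=64)
xt0 = np.random.default_rng(1).standard_normal((4, 100))
out, ht1 = cell(xt0, [np.zeros((4, 64))])
print(out.shape, ht1[0].shape, out is ht1[0])  # (4, 64) (4, 64) True
```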

W, b

In [14]: cell = layers.SimpleRNNCell(64)

In [16]: cell.build(input_shape=(None, 100))

In [17]: cell.trainable_variables

Out[17]:

[<tf.Variable 'kernel:0' shape=(100, 64) dtype=float32, numpy=
array([[-0.0462963 , 0.18592049, -0.07400571, ..., 0.02941154,
        -0.05667783, 0.17601068],
       [ 0.02097672, -0.06260185, 0.07964225, ..., -0.11110415,
        -0.01080528, -0.00902247],
       [-0.05255362, 0.07717623, -0.11303762, ..., -0.05901927,
        -0.03984712, -0.10676748],
       ...,
       [-0.10032847, 0.13400845, -0.09042987, ..., -0.05995423,
         0.01902728, -0.18547705],
       [-0.1514959 , 0.03897026, -0.01399444, ..., -0.01285194,
        -0.02200188, -0.08484551],
       [ 0.18662949, 0.12275948, -0.0774735 , ..., 0.16830678,
         0.11057346, 0.04651706]], dtype=float32)>,
 <tf.Variable 'recurrent_kernel:0' shape=(64, 64) dtype=float32, numpy=
array([[-0.03017783, 0.00358709, 0.10952011, ..., 0.13098714,
        -0.01387545, -0.04514761],
       [ 0.05304666, -0.12605345, 0.01283378, ..., -0.15707201,
         0.00664928, 0.12498017],
       [-0.11184912, -0.04400529, -0.12750888, ..., 0.12323189,
         0.03086963, -0.06987697],
       ...,
       [ 0.0790165 , 0.18564737, 0.00124895, ..., -0.08405672,
        -0.16828664, 0.21780132],
       [-0.18108547, 0.03274943, -0.08180425, ..., -0.0022919 ,
        -0.00342036, -0.10432611],
       [-0.2050607 , 0.04017716, -0.15770806, ..., 0.00416686,
        -0.15942793, 0.22969675]], dtype=float32)>,
 <tf.Variable 'bias:0' shape=(64,) dtype=float32, numpy=
array([0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.,
       0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.,
       0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.,
       0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.], dtype=float32)>]

In [18]: # Wxh:(100, 64), Whh:(64, 64), bias:(64, )
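The shapes follow a simple rule. For `units` hidden units and inputs of size `input_dim`, `kernel` is `(input_dim, units)`, `recurrent_kernel` is `(units, units)`, and `bias` is `(units,)`. Below, a small helper with a hypothetical name reproduces both printouts in these notes and counts their parameters.

```python
import math


def simple_rnn_cell_shapes(input_dim: int, units: int) -> dict:
    """Shapes of SimpleRNNCell's kernel (W_xh), recurrent_kernel (W_hh) and bias."""
    return {
        "kernel": (input_dim, units),
        "recurrent_kernel": (units, units),
        "bias": (units,),
    }


def num_params(shapes: dict) -> int:
    """Total number of scalars across all weight tensors."""
    return sum(math.prod(shape) for shape in shapes.values())


small = simple_rnn_cell_shapes(4, 3)     # the SimpleRNNCell(3) example
large = simple_rnn_cell_shapes(100, 64)  # the SimpleRNNCell(64) example
print(num_params(small), num_params(large))  # 24 10560
```

Neither count depends on the sequence length (80 here). The same three tensors are reused at every time step.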

In [19]: # Multi-layer RNN: two stacked cells, one time step

In [20]: x = tf.random.normal([4, 80, 100])

In [21]: Xt0 = x[:,0,:]

In [22]: cell0 = tf.keras.layers.SimpleRNNCell(64)

In [23]: cell2 = tf.keras.layers.SimpleRNNCell(64)

In [24]: state0 = [tf.zeros([4, 64])]

In [25]: state2 = [tf.zeros([4, 64])]

In [26]: out0, state0 = cell0(Xt0, state0)

In [27]: out2, state2 = cell2(out0, state2)

In [28]: out2.shape, state2[0].shape

Out[28]: (TensorShape([4, 64]), TensorShape([4, 64]))
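When cells are stacked, the second cell's input is the first cell's output. Its `kernel` is therefore built as `(64, 64)` rather than `(100, 64)`. Below is a NumPy sketch of this single-time-step stack, using random stand-in weights, a `tanh` activation, and a hypothetical `make_cell` helper.

```python
import numpy as np

rng = np.random.default_rng(0)


def make_cell(input_dim, units):
    """Return (kernel, recurrent_kernel, bias) with SimpleRNNCell-style shapes."""
    return (rng.standard_normal((input_dim, units)) * 0.1,
            rng.standard_normal((units, units)) * 0.1,
            np.zeros(units))


def step(params, x_t, h_prev):
    kernel, recurrent_kernel, bias = params
    return np.tanh(x_t @ kernel + h_prev @ recurrent_kernel + bias)


x = rng.standard_normal((4, 80, 100))  # [batch, time, features]
xt0 = x[:, 0, :]                       # first time step: [4, 100]

cell0 = make_cell(100, 64)  # layer 0 reads the 100-dim inputs
cell2 = make_cell(64, 64)   # the next layer reads layer 0's 64-dim output

state0 = np.zeros((4, 64))
state2 = np.zeros((4, 64))
out0 = state0 = step(cell0, xt0, state0)   # output == new state
out2 = state2 = step(cell2, out0, state2)
print(cell0[0].shape, cell2[0].shape, out2.shape)  # (100, 64) (64, 64) (4, 64)
```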

Multi-layer RNN over all time steps

In [29]: x.shape

Out[29]: TensorShape([4, 80, 100])

In [30]: state0 = [tf.zeros([4, 64])]

In [31]: state1 = [tf.zeros([4, 64])]

In [32]: cell0 = tf.keras.layers.SimpleRNNCell(64)

In [33]: cell1 = tf.keras.layers.SimpleRNNCell(64)

In [34]: for word in tf.unstack(x, axis=1):  # 80 tensors of shape [b, 100]
    ...:     # h_t = tanh(x_t @ Wxh + h_{t-1} @ Whh + b)
    ...:     # out0: [b, 64]
    ...:     out0, state0 = cell0(word, state0)
    ...:     # out1: [b, 64]
    ...:     out1, state1 = cell1(out0, state1)
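This loop is the unrolled RNN. The same two cells, and therefore the same weights, are applied at all 80 steps, and each layer carries its own state forward. Below is a NumPy version of the same loop, in which iterating over `np.moveaxis(x, 1, 0)` stands in for `tf.unstack(x, axis=1)`. The weights are random stand-ins and the helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, steps, feat, units = 4, 80, 100, 64


def init_cell(input_dim):
    """Hypothetical helper: (W_xh, W_hh, b) for a cell with `units` outputs."""
    return (rng.standard_normal((input_dim, units)) * 0.1,
            rng.standard_normal((units, units)) * 0.1,
            np.zeros(units))


def cell_step(params, x_t, h_prev):
    w_xh, w_hh, b = params
    return np.tanh(x_t @ w_xh + h_prev @ w_hh + b)


x = rng.standard_normal((batch, steps, feat))
cell0, cell1 = init_cell(feat), init_cell(units)
state0 = np.zeros((batch, units))
state1 = np.zeros((batch, units))

outputs1 = []
for word in np.moveaxis(x, 1, 0):              # 80 slices of shape [b, 100]
    state0 = cell_step(cell0, word, state0)    # out0 == new state0: [b, 64]
    state1 = cell_step(cell1, state0, state1)  # out1 == new state1: [b, 64]
    outputs1.append(state1)

print(len(outputs1), state1.shape)  # 80 (4, 64)
```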

RNN Layer

The layer splits the input along the time axis and loops the cell over every step automatically.

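# return_sequences=True: the layer returns its output (= its state, for SimpleRNN) at every time step, [b, 80, 64], and that sequence is the input to the next layer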

In [37]: rnn = Sequential([layers.SimpleRNN(64, dropout=0.5, return_sequences=True, unroll=True),
    ...:                   layers.SimpleRNN(64, dropout=0.5, unroll=True)])

In [38]: x.shape

Out[38]: TensorShape([4, 80, 100])

In [40]: x = rnn(x)

In [41]: x.shape

Out[41]: TensorShape([4, 64])
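`return_sequences` only changes what a layer hands back: either every step's output stacked as `[b, 80, 64]`, or just the last step's `[b, 64]`. The first layer sets it to `True` because the second `SimpleRNN` expects a 3-D `[batch, time, features]` input. The last layer keeps the default `False`, which yields the `[4, 64]` above. Below is a NumPy sketch of that behaviour. `simple_rnn_layer` is a hypothetical function, and dropout and `unroll` are left out.

```python
import numpy as np

rng = np.random.default_rng(0)


def simple_rnn_layer(x, units, return_sequences=False):
    """Run one SimpleRNN-style layer over x: [batch, time, features]."""
    batch, steps, feat = x.shape
    # Fresh random weights on every call; a real layer keeps them as trainable variables.
    w_xh = rng.standard_normal((feat, units)) * 0.1
    w_hh = rng.standard_normal((units, units)) * 0.1
    b = np.zeros(units)
    h = np.zeros((batch, units))
    outputs = []
    for t in range(steps):
        h = np.tanh(x[:, t, :] @ w_xh + h @ w_hh + b)
        outputs.append(h)
    if return_sequences:
        return np.stack(outputs, axis=1)  # every step: [batch, time, units]
    return h                              # last step only: [batch, units]


x = rng.standard_normal((4, 80, 100))
seq = simple_rnn_layer(x, 64, return_sequences=True)  # first layer: [4, 80, 64]
last = simple_rnn_layer(seq, 64)                       # second layer: [4, 64]
print(seq.shape, last.shape)  # (4, 80, 64) (4, 64)
```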