Scraping a Novel with the requests Module

A procedural Python script that scrapes a novel from a website and saves it as a text file

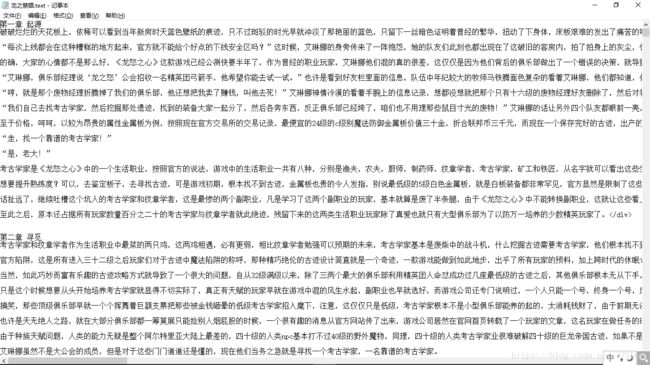


The code is fairly simple. The steps are as follows:

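1. Import `requests` (and `re`, which the later steps use for regular expressions)

```python
import requests
import re
```

2. Send an HTTP GET request (as a browser does) to fetch the HTML source of the novel's index page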

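```python
novel_url = 'http://www.xs4.cc/book/9/3802/'
response = requests.get(novel_url)
# Decode the response as UTF-8 so the Chinese text is not garbled
response.encoding = 'utf-8'
html = response.text
```

3. Use regular expressions to extract each chapter's title and URL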

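```python
# Assumed markup: the chapter list sits inside <div id="list">...</div>
div = re.findall(r'<div id="list">.*?</div>', html, re.S)[0]
# Assumed markup: each chapter is a link <a href="url">title</a>,
# so findall returns a list of (url, title) tuples
chapter_list = re.findall(r'<a href="(.*?)">(.*?)</a>', div)
```

4. Loop over the chapter list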

```python
for chapter_content in chapter_list:
    chapter_title = chapter_content[1]
    chapter_url = chapter_content[0]
    # The hrefs are site-relative, so prepend the domain
    chapter_url = 'http://www.xs4.cc%s' % chapter_url
    print(chapter_url, chapter_title)
```
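The regex steps above can be tried offline against a small HTML string, which makes it easier to check a pattern before pointing it at the live site. This is a sketch only: the sample markup (`<div id="list">`, `<a href="...">`) is an assumption about how such an index page might look, not copied from xs4.cc, `parse_chapter_list` is a hypothetical helper, and the standard library's `urllib.parse.urljoin` stands in for the `'http://www.xs4.cc%s'` string formatting used above.

```python
import re
from urllib.parse import urljoin

# Hypothetical sample of a chapter index page; the real site's markup may differ
SAMPLE_INDEX = """
<div id="list">
  <a href="/book/9/3802/1.html">Chapter 1</a>
  <a href="/book/9/3802/2.html">Chapter 2</a>
</div>
"""


def parse_chapter_list(html, base_url):
    """Return a list of (absolute_url, title) pairs from the chapter index HTML."""
    # re.S lets '.' match newlines, so the non-greedy match can span the whole <div>
    div = re.findall(r'<div id="list">.*?</div>', html, re.S)[0]
    # With two groups, findall returns (href, title) tuples
    links = re.findall(r'<a href="(.*?)">(.*?)</a>', div)
    # urljoin resolves site-relative hrefs against the index page URL
    return [(urljoin(base_url, href), title) for href, title in links]


chapters = parse_chapter_list(SAMPLE_INDEX, 'http://www.xs4.cc/book/9/3802/')
for url, title in chapters:
    print(url, title)
```

`urljoin` also copes with hrefs that are already absolute, which plain string concatenation does not.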

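5. Extract the novel's title and create a text file named after it, into which the chapter contents are written later (this runs once, before the loop in step 4)

```python
# Assumed markup: the book title is in <h1>...</h1>
title = re.findall(r'<h1>(.*?)</h1>', html)[0]
fb = open('%s.txt' % title, 'w', encoding='utf-8')
```

6. Extract the content of each chapter (steps 6 to 8 run inside the loop from step 4)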

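```python
chapter_download = requests.get(chapter_url)
chapter_download.encoding = 'utf-8'
chapter_html = chapter_download.text
# Assumed markup: the chapter text sits in <div id="content">...</div>;
# re.S lets '.' match newlines so the whole multi-line body is captured
chapter_content_download = re.findall(r'id="content">(.*?)</div>', chapter_html, re.S)[0]
```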
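Chapter bodies usually span several lines, which is why the content regex is called with `re.S`. A minimal check on a made-up sample (`SAMPLE_CHAPTER` is hypothetical, not real page content):

```python
import re

# Hypothetical chapter page fragment; the text spans two lines
SAMPLE_CHAPTER = '<div id="content">First line<br />\nSecond line</div>'

pattern = r'id="content">(.*?)</div>'

# Without re.S, '.' stops at the newline, so nothing matches across lines
without_s = re.findall(pattern, SAMPLE_CHAPTER)
# With re.S (DOTALL), '.' also matches '\n' and the whole body is captured
with_s = re.findall(pattern, SAMPLE_CHAPTER, re.S)

print(without_s)
print(with_s)
```

Without `re.S`, `findall` returns an empty list here, and the `[0]` in step 6 would raise an `IndexError`.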

7. Save the novel content

```python
print('Saving %s' % chapter_title)
fb.write(chapter_title)
fb.write('\n')
fb.write(chapter_content_download)
fb.write('\n')
```
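The `fb` file opened in step 5 is never closed. Opening the file in a `with` block guarantees it is flushed and closed once writing is done. A minimal sketch, where `save_chapter` is a hypothetical helper and mode `'a'` appends each chapter to the same file:

```python
import os
import tempfile


def save_chapter(path, title, content):
    """Append one chapter (title line, then body) to a UTF-8 text file."""
    # 'with' closes the file automatically, even if a write raises
    with open(path, 'a', encoding='utf-8') as f:
        f.write(title + '\n')
        f.write(content + '\n')


# Demo in a temporary directory so nothing is left behind
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'novel.txt')
    save_chapter(path, 'Chapter 1', 'Text of chapter 1')
    save_chapter(path, 'Chapter 2', 'Text of chapter 2')
    with open(path, encoding='utf-8') as f:
        saved = f.read()

print(saved)
```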

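8. Clean the data (this must run before the content is written in step 7, otherwise the HTML leftovers end up in the file)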

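```python
# Strip leftover space characters and line-break tags from the chapter HTML
chapter_content_download = chapter_content_download.replace(' ', '')
chapter_content_download = chapter_content_download.replace(' ', '')
# Assumed: the pages use <br /> for line breaks
chapter_content_download = chapter_content_download.replace('<br />', '')
```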
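The chained `replace` calls remove specific leftovers one at a time. An alternative sketch, assuming the chapter HTML contains entities such as `&nbsp;` and `<br>`-style tags (not confirmed for this site): `html.unescape` decodes every entity in one pass and a regex catches all `<br>` variants. Instead of deleting line breaks, it turns them into newlines so paragraphs stay separate. `clean_content` is a hypothetical name.

```python
import html
import re


def clean_content(raw):
    """Turn a chapter's HTML fragment into plain text (assumed markup)."""
    # Convert <br>, <br/>, <br /> into newlines so paragraph breaks survive
    text = re.sub(r'<br\s*/?>', '\n', raw)
    # Decode HTML entities such as &nbsp; (becomes '\xa0') and &amp;
    text = html.unescape(text)
    # Drop non-breaking and full-width spaces often used for indentation
    text = text.replace('\xa0', '').replace('\u3000', '')
    # Collapse runs of blank lines into a single newline and trim the ends
    text = re.sub(r'\n\s*\n+', '\n', text)
    return text.strip()


raw = '&nbsp;&nbsp;&nbsp;&nbsp;First paragraph.<br /><br />&nbsp;&nbsp;&nbsp;&nbsp;Second &amp; last.'
print(clean_content(raw))
```

If you paste this into the script above, rename its `html` variable, since it would shadow the `html` module.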

----------------------------------------------Function-based implementation------------------------------------------------

```python
import requests
import re


# Get the chapter titles and URLs
def get_chapter_list():
    response = requests.get('http://www.xs4.cc/book/9/3802/')
    response.encoding = 'utf-8'
    html = response.text
    # Assumed markup: the chapter list sits inside <div id="list">...</div>
    div = re.findall(r'<div id="list">.*?</div>', html, re.S)[0]
    # Assumed markup: each chapter is <a href="url">title</a> -> (url, title) tuples
    chapter_list = re.findall(r'<a href="(.*?)">(.*?)</a>', div)
    return chapter_list


# Get the content of one chapter
def chapter_download(chapter_url):
    chapter_dl = requests.get(chapter_url)
    chapter_dl.encoding = 'utf-8'
    chapter_html = chapter_dl.text
    # Assumed markup: the chapter text sits in <div id="content">...</div>
    chapter_content_download = re.findall(r'id="content">(.*?)</div>', chapter_html, re.S)[0]
    # Clean the data
    chapter_content_download = chapter_content_download.replace(' ', '')
    chapter_content_download = chapter_content_download.replace(' ', '')
    # Assumed: the pages use <br /> for line breaks
    chapter_content_download = chapter_content_download.replace('<br />', '')
    return chapter_content_download
```

# 循环章节,建立章节文本存取小说内容

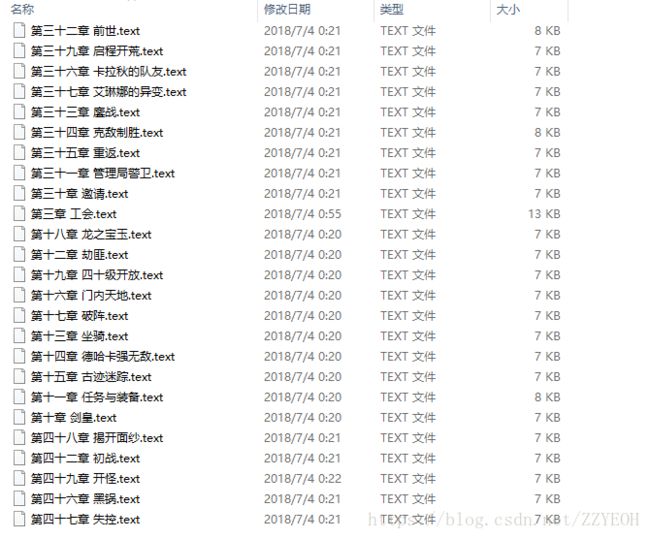

for chapter_url,chapter_title in get_chapter_list():

chapter_url = 'http://www.xs4.cc%s' % chapter_url

print(chapter_url,chapter_title)

# 数据持久化

print('正在保存 %s' % chapter_title)

fn = open('%s.text' % chapter_title, 'a+', encoding='utf-8')

fn.write(chapter_download(chapter_url))

Each chapter is saved to its own text file, named after `chapter_title`.
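Naming files by `chapter_title` alone has two practical pitfalls: the files do not list in reading order, and a title containing characters such as `/` or `?` cannot be used as a file name on some systems. A sketch of one possible fix, using a hypothetical `chapter_filename` helper and a hypothetical numbering scheme (the loop would iterate with `enumerate(get_chapter_list(), start=1)`):

```python
import re


def chapter_filename(index, title):
    """Build a file name like '0001 Chapter 1.txt' (hypothetical naming scheme)."""
    # Replace characters that Windows and/or POSIX forbid in file names
    safe_title = re.sub(r'[\\/:*?"<>|]', '_', title).strip()
    # Zero-padding keeps the files sorted in reading order in a directory listing
    return '%04d %s.txt' % (index, safe_title)


titles = ['Chapter 1: Awakening', 'Chapter 2/3?', 'Chapter 10']
names = [chapter_filename(i, t) for i, t in enumerate(titles, start=1)]
print(names)
```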

Here is the novel --> 龙之禁锢

Summary:

The code is short, but I still ran into a few obstacles along the way. Next I'll consolidate the fundamentals and keep learning!