Flink Explained, Part 4: The Flink Streaming API. Understand the Flink streaming API in depth, one line of code at a time!

Contents

Streaming API

I. Environment

1.1 getExecutionEnvironment

1.2 createLocalEnvironment

1.3 createRemoteEnvironment

II. Source

2.1 Reading data from a collection

2.2 Reading data from a file

2.3 Using a Kafka message queue as the source

2.4 Custom Source

III. Transform

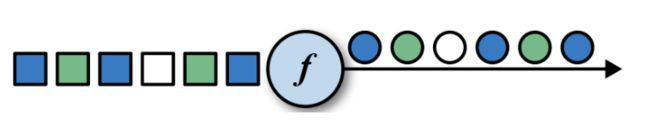

3.1 map

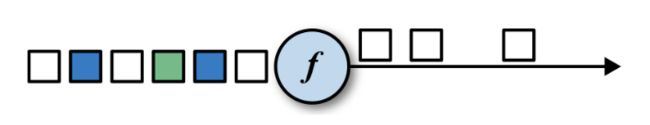

3.2 flatMap

3.3 Filter

3.4 KeyBy

3.5 Rolling aggregation operators

3.6 Reduce

Quick recap:

3.7 Split and Select

3.8 Connect and CoMap

3.9 Union

Summary:

IV. Supported data types

4.1 Basic data types

4.2 Java and Scala tuples

4.3 Scala case classes

4.4 Java POJOs (plain old Java objects)

4.5 Others (Arrays, Lists, Maps, Enums, etc.)

V. Implementing UDFs: finer-grained control over the stream

5.1 Function classes

5.2 Lambda functions

5.3 Rich functions

VI. Sink

6.1 Kafka

6.2 Redis

6.3 Elasticsearch

6.4 Custom JDBC sink

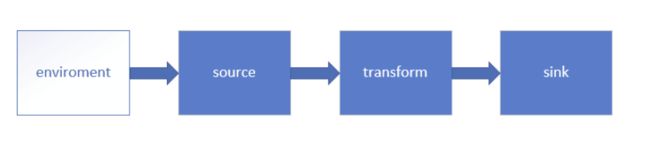

Streaming API

I. Environment

1.1 getExecutionEnvironment

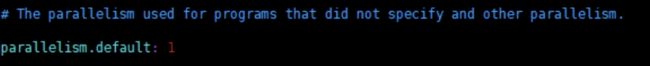

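Creates an execution environment, which represents the context in which the current program is executed. If the program is invoked standalone, this method returns a local execution environment. If the program is submitted to a cluster through the command-line client, it returns that cluster's execution environment. In other words, getExecutionEnvironment decides which environment to return based on how the program is run, and it is the most common way to create an execution environment.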

ExecutionEnvironment env = ExecutionEnvironment.getExecutionEnvironment();

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

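If no parallelism is set, a job submitted to a cluster uses the parallelism.default setting from flink-conf.yaml, which is 1 by default. When the program runs locally, for example from an IDE, the local environment instead defaults to the number of available CPU cores.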

1.2 createLocalEnvironment

Returns a local execution environment. The default parallelism can be passed as an argument when calling it.

LocalStreamEnvironment env = StreamExecutionEnvironment.createLocalEnvironment(1);

1.3 createRemoteEnvironment

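Returns a cluster execution environment that submits the JAR to a remote server. When calling it, you specify the JobManager's IP (or hostname) and port, as well as the JAR to run on the cluster.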

StreamExecutionEnvironment env = StreamExecutionEnvironment.createRemoteEnvironment("jobmanager-hostname", 6123, "YOURPATH/WordCount.jar");

II. Source

2.1 Reading data from a collection

package com.dongda.source;

import com.dongda.beans.SensorReading;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import java.util.Arrays;

public class SourceTest1_Collection {

public static void main(String[] args) throws Exception {

// 1. Source: read data from a collection

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

DataStream<SensorReading> sensorDataStream = env.fromCollection(Arrays.asList(

new SensorReading("sensor_1", 1547718199L, 35.8),

new SensorReading("sensor_6", 1547718201L, 15.4),

new SensorReading("sensor_7", 1547718202L, 6.7),

new SensorReading("sensor_10", 1547718205L, 38.1)

));

// 2. Print

sensorDataStream.print();

// 3. Execute the job

env.execute();

}

}

2.2 Reading data from a file

Create a sensor.txt file under src/main/resources so that it can be read:

sensor_1,1547718199,35.8

sensor_6,1547718201,15.4

sensor_7,1547718202,6.7

sensor_10,1547718205,38.1
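The examples import com.dongda.beans.SensorReading, but the article never shows that class. Below is a minimal sketch of such a bean. It assumes only what the examples actually use: a constructor taking (id, timestamp, temperature) and the getters getId(), getTimestamp() and getTemperature(). The static parse method is a hypothetical helper for one line of sensor.txt. It trims every field because Long.parseLong rejects surrounding spaces. Without trimming, a line written as "sensor_1, 1547718199, 35.8" would make the new Long(fields[1]) calls used in later examples throw a NumberFormatException.

```java
// Sketch of the SensorReading bean the examples import from com.dongda.beans.
// In the real project it would be a public class in its own file (SensorReading.java),
// because Flink's POJO rules require a public class; it is package-private here only
// so that this snippet compiles as a single file.
class SensorReading {
    private String id;
    private Long timestamp;
    private Double temperature;

    // Flink's POJO rules also require a public no-argument constructor.
    public SensorReading() {
    }

    public SensorReading(String id, Long timestamp, Double temperature) {
        this.id = id;
        this.timestamp = timestamp;
        this.temperature = temperature;
    }

    public String getId() { return id; }
    public void setId(String id) { this.id = id; }
    public Long getTimestamp() { return timestamp; }
    public void setTimestamp(Long timestamp) { this.timestamp = timestamp; }
    public Double getTemperature() { return temperature; }
    public void setTemperature(Double temperature) { this.temperature = temperature; }

    // Hypothetical helper: parses one line of sensor.txt, e.g. "sensor_1,1547718199,35.8".
    // Every field is trimmed, because Long.parseLong (unlike Double.parseDouble)
    // rejects leading or trailing spaces.
    public static SensorReading parse(String line) {
        String[] fields = line.split(",");
        return new SensorReading(
                fields[0].trim(),
                Long.parseLong(fields[1].trim()),
                Double.parseDouble(fields[2].trim()));
    }

    @Override
    public String toString() {
        return "SensorReading{id='" + id + "', timestamp=" + timestamp + ", temperature=" + temperature + "}";
    }

    public static void main(String[] args) {
        // A space after each comma is tolerated thanks to trim()
        System.out.println(parse("sensor_1, 1547718199, 35.8"));
    }
}
```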

package com.dongda.source;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class SourceTest2_File {

public static void main(String[] args) throws Exception {

// 1. Source: read data from a file

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// Read the file line by line as a stream of strings

DataStream<String> sensorDataStream = env.readTextFile("/Users/haitaoyou/developer/flink/src/main/resources/sensor.txt");

// 2. Print

sensorDataStream.print();

// 3. Execute the job

env.execute();

}

}

2.3 Using a Kafka message queue as the source

Add the Kafka connector dependency to the pom:

groupId: org.apache.flink, artifactId: flink-connector-kafka-0.11_2.12, version: 1.10.1

package com.dongda.source;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011;

import java.util.Properties;

public class SourceTest3_Kafka {

public static void main(String[] args) throws Exception {

// 1. Source: read data from Kafka

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// Kafka configuration properties

Properties properties = new Properties();

properties.setProperty("bootstrap.servers", "localhost:9092");

properties.setProperty("group.id", "consumer-group");

properties.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

properties.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

properties.setProperty("auto.offset.reset", "latest");

// Read data from the Kafka topic "sensor"

DataStream<String> sensorDataStream = env.addSource(new FlinkKafkaConsumer011<String>("sensor", new SimpleStringSchema(), properties));

// 2. Print

sensorDataStream.print();

// 3. Execute the job

env.execute();

}

}

2.4 Custom Source

package com.dongda.source;

import com.dongda.beans.SensorReading;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.source.SourceFunction;

import java.util.HashMap;

import java.util.Random;

public class SourceTest4_UDF {

public static void main(String[] args) throws Exception {

// 1. Source: a user-defined source

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// Add the custom source

DataStream<SensorReading> sensorDataStream = env.addSource(new MySensorSource());

// 2. Print

sensorDataStream.print();

// 3. Execute the job

env.execute();

}

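private static class MySensorSource implements SourceFunction<SensorReading> {

// Flag that controls data generation; volatile because cancel() is called from a different thread than run()

private volatile boolean running = true;

public void run(SourceContext<SensorReading> sourceContext) throws Exception {

// Random number generator

Random random = new Random();

// Set the initial temperatures of 10 sensors

HashMap<String, Double> sensorTempMap = new HashMap<>();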

for (int i = 0; i < 10; i++) {

sensorTempMap.put("sensor_" + (i + 1), 60 + random.nextGaussian() * 20);

}

while (running) {

for (String sensorId : sensorTempMap.keySet()) {

// Let the temperature fluctuate randomly around its current value

double newtemp = sensorTempMap.get(sensorId) + random.nextGaussian();

sensorTempMap.put(sensorId,newtemp);

sourceContext.collect(new SensorReading(sensorId,System.currentTimeMillis(),newtemp));

}

Thread.sleep(1000L);

}

}

public void cancel() {

// Stop the while loop in run()

running = false;

}

}

}
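Flink calls cancel() from a different thread than the one executing run(). The flag should therefore be volatile, and cancel() has to actually clear it; otherwise cancelling the job leaves the loop spinning. The following plain-Java sketch simulates that contract without any Flink classes. RandomTemperatureSource is a hypothetical stand-in for MySensorSource, a Consumer replaces SourceContext, and the calling thread plays the role of the framework that cancels the source.

```java
import java.util.List;
import java.util.Random;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

// Plain-Java simulation of the SourceFunction run()/cancel() contract; no Flink classes are used.
class CancellableSourceDemo {

    // Stand-in for MySensorSource: run() keeps emitting until cancel() clears the flag.
    static class RandomTemperatureSource {
        // volatile, so the loop in run() sees the write made by cancel() on another thread
        private volatile boolean running = true;

        void run(Consumer<Double> collector) throws InterruptedException {
            Random random = new Random();
            double temperature = 60.0;
            while (running) {
                temperature += random.nextGaussian(); // random fluctuation, as in MySensorSource
                collector.accept(temperature);        // plays the role of sourceContext.collect(...)
                Thread.sleep(10);
            }
        }

        void cancel() {
            running = false;
        }
    }

    // Runs the source on a worker thread, cancels it after `millis` ms (as the framework would
    // when the job is cancelled) and returns everything it emitted.
    static List<Double> runFor(long millis) {
        RandomTemperatureSource source = new RandomTemperatureSource();
        List<Double> emitted = new CopyOnWriteArrayList<>();
        Thread worker = new Thread(() -> {
            try {
                source.run(emitted::add);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        try {
            worker.start();
            Thread.sleep(millis);
            source.cancel();
            worker.join(1000); // the loop exits on its next check of the flag
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        if (worker.isAlive()) {
            throw new IllegalStateException("source kept running after cancel()");
        }
        return emitted;
    }

    public static void main(String[] args) {
        System.out.println("emitted " + runFor(100).size() + " readings before cancel()");
    }
}
```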

III. Transform

Transformation operators

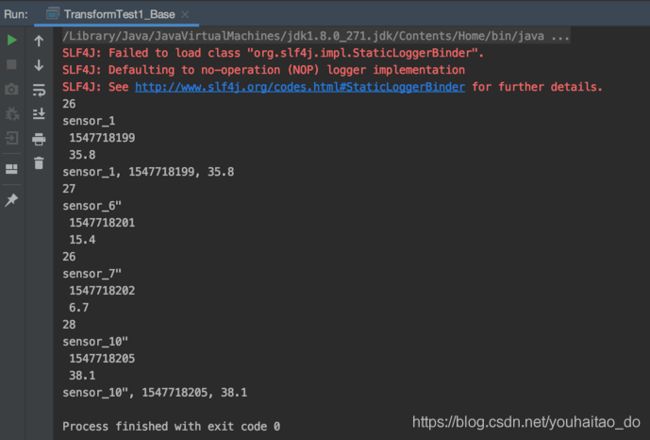

3.1 map

3.2 flatMap

3.3 Filter

package com.dongda.transform;

import org.apache.flink.api.common.JobExecutionResult;

import org.apache.flink.api.common.functions.FilterFunction;

import org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.util.Collector;

public class TransformTest1_Base {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1); // Set the global parallelism to 1 so the printed output is easier to follow

// Read data from a file

DataStream<String> inputStream = env.readTextFile("/Users/haitaoyou/developer/flink/src/main/resources/sensor.txt");

// 1. map: convert each String into its length

DataStream<Integer> mapStream = inputStream.map(new MapFunction<String, Integer>() {

public Integer map(String s) throws Exception {

return s.length();

}

});

// 2. flatMap: split each line into its fields by comma

DataStream<String> flatMapStream = inputStream.flatMap(new FlatMapFunction<String, String>() {

public void flatMap(String s, Collector<String> collector) throws Exception {

String[] fields = s.split(",");

for (String field : fields) {

collector.collect(field);

}

}

});

// 3. filter: keep the records whose id starts with "sensor_1" (note that this also matches sensor_10)

DataStream<String> filterStream = inputStream.filter(new FilterFunction<String>() {

public boolean filter(String s) throws Exception {

return s.startsWith("sensor_1");

}

});

// Print the results

mapStream.print();

flatMapStream.print();

filterStream.print();

// Execute the job

env.execute();

}

}

3.4 KeyBy

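DataStream → KeyedStream: logically splits a stream into disjoint partitions, each containing the elements that share the same key. Internally this is implemented by hashing the key.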

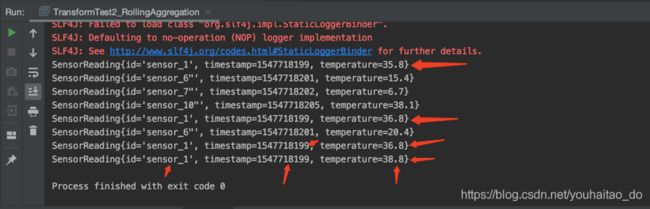

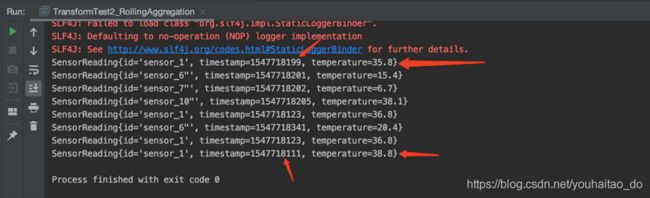
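Here is a simplified plain-Java sketch that makes "hash partitioning by key" concrete. It routes each line of sensor.txt to one of N partitions based on the hash of its sensor id. It is a model, not Flink's code. Flink first assigns keys to key groups using a murmur hash of hashCode(), and then maps key groups to parallel subtasks. The property that matters is the same in both cases: records with equal keys always end up in the same partition. This is also why a key type needs a deterministic hashCode().

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Simplified model of keyBy: each record goes to the partition derived from its key's hash.
// Flink itself first maps keys to "key groups" and then key groups to subtasks;
// that extra step is skipped here.
class KeyByPartitionDemo {

    static int partitionFor(String key, int parallelism) {
        // floorMod keeps the index in [0, parallelism) even when hashCode() is negative
        return Math.floorMod(key.hashCode(), parallelism);
    }

    // Routes lines of the form "id,timestamp,temperature" by their id.
    static Map<Integer, List<String>> partition(List<String> lines, int parallelism) {
        Map<Integer, List<String>> partitions = new TreeMap<>();
        for (String line : lines) {
            String key = line.split(",")[0].trim();
            partitions.computeIfAbsent(partitionFor(key, parallelism), p -> new ArrayList<>()).add(line);
        }
        return partitions;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "sensor_1,1547718199,35.8", "sensor_6,1547718201,15.4",
                "sensor_7,1547718202,6.7", "sensor_10,1547718205,38.1",
                "sensor_1,1547718123,36.8", "sensor_6,1547718341,20.4");
        // Every sensor_1 line ends up in the same partition, and likewise for each other id.
        partition(lines, 4).forEach((p, records) -> System.out.println(p + " -> " + records));
    }
}
```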

3.5 Rolling aggregation operators

These operators aggregate each sub-stream (that is, each key) of a KeyedStream.

sum()

min()

max()

minBy()

maxBy()

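The difference between min and minBy (or max and maxBy) is a little awkward to put into words. min/max only update the aggregated field and keep the other fields from the first record seen for the key. minBy/maxBy instead return the whole record that holds the minimum/maximum. Let's go straight to the code and the output!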

sensor.txt

sensor_1,1547718199,35.8

sensor_6,1547718201,15.4

sensor_7,1547718202,6.7

sensor_10,1547718205,38.1

sensor_1,1547718123,36.8

sensor_6,1547718341,20.4

sensor_1,1547718239,12.8

sensor_1,1547718111,38.8

1. max()

package com.dongda.transform;

import com.dongda.beans.SensorReading;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.java.tuple.Tuple;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class TransformTest2_RollingAggregation {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1); // Set the global parallelism to 1 so the printed output is easier to follow

// Read data from a file

DataStream<String> inputStream = env.readTextFile("/Users/haitaoyou/developer/flink/src/main/resources/sensor.txt");

// DataStream<SensorReading> dataStream = inputStream.map(new MapFunction<String, SensorReading>() {

// public SensorReading map(String s) throws Exception {

// String[] fields = s.split(",");

// return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

// }

// });

// Lambda version of the map above

DataStream<SensorReading> dataStream = inputStream.map(line ->{

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

});

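// Key the stream by sensor id

KeyedStream<SensorReading, Tuple> keyedStream = dataStream.keyBy("id");

// Alternative: key by a method reference

// KeyedStream<SensorReading, String> keyedStream1 = dataStream.keyBy(SensorReading::getId);

// Rolling aggregation: take the current maximum temperature

SingleOutputStreamOperator<SensorReading> resultStream = keyedStream.max("temperature");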

resultStream.print();

env.execute();

}

}

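The output is shown below and corresponds to sensor.txt. Because this is a rolling aggregation, take sensor_1 as an example. When its first record arrives, the maximum is 35.8. When its second record (36.8) arrives, the temperature field changes to the new maximum 36.8, but the timestamp does not change! It stays at 1547718199, the timestamp of the first sensor_1 record. The same pattern continues for the later records.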

2. maxBy()

package com.dongda.transform;

import com.dongda.beans.SensorReading;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.java.tuple.Tuple;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class TransformTest2_RollingAggregation {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1); // Set the global parallelism to 1 so the printed output is easier to follow

// Read data from a file

DataStream<String> inputStream = env.readTextFile("/Users/haitaoyou/developer/flink/src/main/resources/sensor.txt");

// DataStream<SensorReading> dataStream = inputStream.map(new MapFunction<String, SensorReading>() {

// public SensorReading map(String s) throws Exception {

// String[] fields = s.split(",");

// return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

// }

// });

// Lambda version of the map above

DataStream<SensorReading> dataStream = inputStream.map(line ->{

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

});

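// Key the stream by sensor id

KeyedStream<SensorReading, Tuple> keyedStream = dataStream.keyBy("id");

// Alternative: key by a method reference

// KeyedStream<SensorReading, String> keyedStream1 = dataStream.keyBy(SensorReading::getId);

// Rolling aggregation: emit the whole record that holds the current maximum temperature

SingleOutputStreamOperator<SensorReading> resultStream = keyedStream.maxBy("temperature");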

resultStream.print();

env.execute();

}

}

The output is shown below. This time we get the entire record that holds the maximum temperature!
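The same difference can be reproduced in plain Java. This sketch models a rolling aggregation on the four sensor_1 lines of sensor.txt and emits one result per incoming record. In the max model, the state starts as the first record and only its temperature field is overwritten. In the maxBy model, the state is replaced by the whole record that holds the new maximum; on ties the earlier record is kept, which matches Flink's default for maxBy. The sketch mirrors the behavior described above, not Flink's internal aggregator, and the class names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java model of rolling max("temperature") vs maxBy("temperature") for a single key.
class RollingMaxDemo {

    static final class Reading {
        final String id;
        final long timestamp;
        final double temperature;

        Reading(String id, long timestamp, double temperature) {
            this.id = id;
            this.timestamp = timestamp;
            this.temperature = temperature;
        }

        @Override
        public String toString() {
            return id + "," + timestamp + "," + temperature;
        }
    }

    // max: the state starts as the first record; later records only overwrite the temperature field.
    static List<Reading> rollingMax(List<Reading> input) {
        List<Reading> out = new ArrayList<>();
        Reading state = null;
        for (Reading r : input) {
            if (state == null) {
                state = r;
            } else if (r.temperature > state.temperature) {
                state = new Reading(state.id, state.timestamp, r.temperature); // timestamp is NOT updated
            }
            out.add(state); // a rolling aggregation emits one result per input record
        }
        return out;
    }

    // maxBy: the state is the whole record holding the maximum (the earlier record wins ties).
    static List<Reading> rollingMaxBy(List<Reading> input) {
        List<Reading> out = new ArrayList<>();
        Reading state = null;
        for (Reading r : input) {
            if (state == null || r.temperature > state.temperature) {
                state = r;
            }
            out.add(state);
        }
        return out;
    }

    // The sensor_1 lines of sensor.txt, in file order.
    static List<Reading> sensor1() {
        return Arrays.asList(
                new Reading("sensor_1", 1547718199L, 35.8),
                new Reading("sensor_1", 1547718123L, 36.8),
                new Reading("sensor_1", 1547718239L, 12.8),
                new Reading("sensor_1", 1547718111L, 38.8));
    }

    public static void main(String[] args) {
        System.out.println("max:   " + rollingMax(sensor1()));
        System.out.println("maxBy: " + rollingMaxBy(sensor1()));
    }
}
```

Tracing the four records by hand: max ends with timestamp 1547718199 and temperature 38.8, while maxBy ends with the complete record 1547718111, 38.8.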

3.6 Reduce

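KeyedStream → DataStream: an aggregation over a keyed stream that combines the current element with the result of the previous aggregation to produce a new value. The returned stream contains the result of every aggregation step, not just the final result.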

package com.dongda.transform;

import com.dongda.beans.SensorReading;

import org.apache.flink.api.common.functions.ReduceFunction;

import org.apache.flink.api.java.tuple.Tuple;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class TransformTest3_Reduce {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1); // Set the global parallelism to 1 so the printed output is easier to follow

// Read data from a file

DataStream<String> inputStream = env.readTextFile("/Users/haitaoyou/developer/flink/src/main/resources/sensor.txt");

// Lambda version: convert each line into a SensorReading

DataStream<SensorReading> dataStream = inputStream.map(line ->{

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

});

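// Key the stream by sensor id

KeyedStream<SensorReading, Tuple> keyedStream = dataStream.keyBy("id");

// Alternative: key by a method reference

// KeyedStream<SensorReading, String> keyedStream1 = dataStream.keyBy(SensorReading::getId);

// reduce: keep the maximum temperature together with the timestamp of the most recent record

SingleOutputStreamOperator<SensorReading> reduce = keyedStream.reduce(new ReduceFunction<SensorReading>() {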

@Override

public SensorReading reduce(SensorReading sensorReading, SensorReading t1) throws Exception {

return new SensorReading(sensorReading.getId(), t1.getTimestamp(), Math.max(sensorReading.getTemperature(), t1.getTemperature()));

}

});

reduce.print();

env.execute();

}

}

The output is shown below: reduce keeps the maximum temperature together with the timestamp of the most recently arrived record.
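Replaying the ReduceFunction above in plain Java makes the rolling behavior visible. The first record for a key is emitted unchanged, and each later record is combined with the previous result. Note that the reducer takes the timestamp of the newly arrived record, which is not necessarily the largest timestamp: in sensor.txt the sensor_1 timestamps are out of order. Reading and rollingReduce are hypothetical names used only for this sketch.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.BinaryOperator;

// Plain-Java model of a rolling reduce on one key (no Flink involved).
class RollingReduceDemo {

    static final class Reading {
        final String id;
        final long timestamp;
        final double temperature;

        Reading(String id, long timestamp, double temperature) {
            this.id = id;
            this.timestamp = timestamp;
            this.temperature = temperature;
        }

        @Override
        public String toString() {
            return id + "," + timestamp + "," + temperature;
        }
    }

    // Same logic as the ReduceFunction in the example: keep the maximum temperature,
    // take the timestamp of the newly arrived record.
    static final BinaryOperator<Reading> MAX_TEMP_LATEST_TS = (previous, current) ->
            new Reading(previous.id, current.timestamp, Math.max(previous.temperature, current.temperature));

    // The first record is emitted unchanged; every later record is combined with the previous result.
    static List<Reading> rollingReduce(List<Reading> input, BinaryOperator<Reading> reducer) {
        List<Reading> out = new ArrayList<>();
        Reading result = null;
        for (Reading r : input) {
            result = (result == null) ? r : reducer.apply(result, r);
            out.add(result);
        }
        return out;
    }

    // The sensor_1 lines of sensor.txt, in file order.
    static List<Reading> sensor1() {
        return Arrays.asList(
                new Reading("sensor_1", 1547718199L, 35.8),
                new Reading("sensor_1", 1547718123L, 36.8),
                new Reading("sensor_1", 1547718239L, 12.8),
                new Reading("sensor_1", 1547718111L, 38.8));
    }

    public static void main(String[] args) {
        rollingReduce(sensor1(), MAX_TEMP_LATEST_TS).forEach(System.out::println);
    }
}
```

For the sensor_1 lines, the emitted timestamps are 1547718199, 1547718123, 1547718239 and 1547718111, and the temperatures are 35.8, 36.8, 36.8 and 38.8.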

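Quick recap:

So far we have covered the basic transformations and the slightly more complex aggregations. Most big-data processing really comes down to these two kinds of operations, map and reduce. Either the result depends only on the current element, so you apply a simple transformation, or it depends on earlier data and on state, so you aggregate or compute statistics. What else is there? The operators introduced next, in sections 3.7 to 3.9, form another category. They work on multiple streams, so they are usually grouped together as multi-stream transformation operators. Let's get started!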

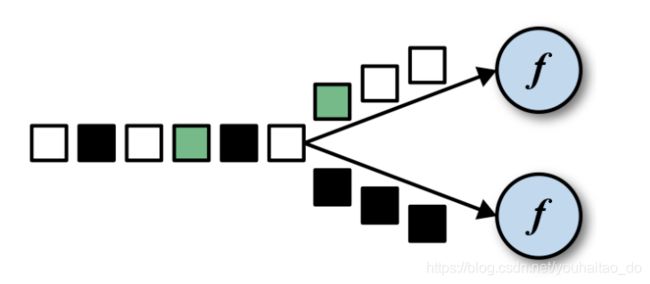

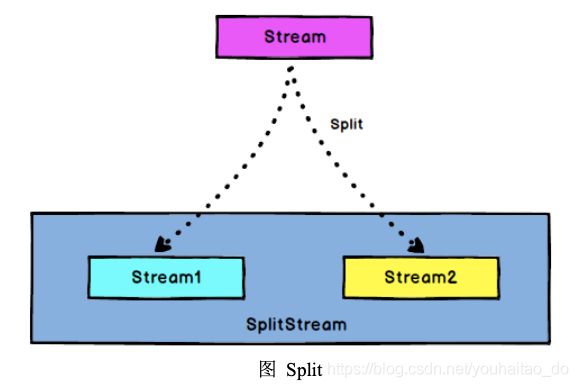

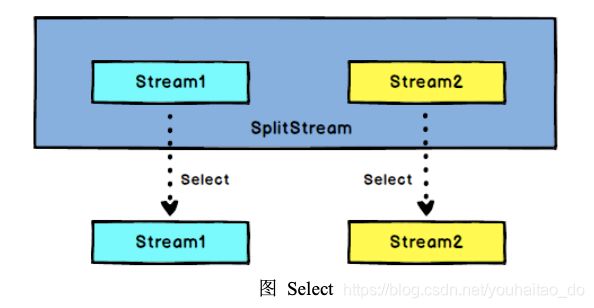

3.7 Split and Select

Split

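DataStream → SplitStream: splits a DataStream into two or more DataStreams according to some criteria. Nominally Split turns one stream into two, but a SplitStream is in fact still a single stream. So what does Split actually do? It classifies the data by some characteristic and stamps each element with a tag (a selection marker). Everything stays in the same stream, but each element now carries a tag that reflects its characteristics. The next step picks elements out by tag, which yields the separate streams. In other words, a Split must always be followed by a Select to form a complete split operation. (Note that split/select was deprecated in later Flink releases and removed in Flink 1.12, where side outputs are the recommended way to split a stream.)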

Select

Calling select on the SplitStream extracts elements by tag and yields the individual DataStreams; that completes the workflow.

SplitStream → DataStream: obtains one or more DataStreams from a SplitStream.
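The "stamp first, then select" idea can be sketched in plain Java. split only attaches tags to each element and keeps everything in one list; select then filters that list by tag. The 30-degree threshold and the temperatures come from the example below and from sensor.txt. Tagged, split and select here are hypothetical names that model the idea; they are not the Flink API. Because an OutputSelector returns an Iterable of names, an element could in principle carry several tags, but this sketch gives each element exactly one.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Plain-Java model of split/select: elements are stamped with tags but stay in ONE list,
// and select() picks them out again by tag.
class SplitSelectDemo {

    // An element plus the tags an OutputSelector would return for it.
    static final class Tagged {
        final double temperature;
        final List<String> tags;

        Tagged(double temperature, List<String> tags) {
            this.temperature = temperature;
            this.tags = tags;
        }
    }

    // "split": stamp each reading "high" (> 30 degrees) or "low", without creating new lists.
    static List<Tagged> split(List<Double> temperatures) {
        List<Tagged> stamped = new ArrayList<>();
        for (double t : temperatures) {
            stamped.add(new Tagged(t, Collections.singletonList(t > 30 ? "high" : "low")));
        }
        return stamped;
    }

    // "select": keep the elements carrying at least one of the requested tags.
    static List<Double> select(List<Tagged> stamped, String... names) {
        Set<String> wanted = new HashSet<>(Arrays.asList(names));
        List<Double> out = new ArrayList<>();
        for (Tagged element : stamped) {
            if (!Collections.disjoint(element.tags, wanted)) {
                out.add(element.temperature);
            }
        }
        return out;
    }

    // Temperatures from sensor.txt, in file order.
    static List<Double> sample() {
        return Arrays.asList(35.8, 15.4, 6.7, 38.1, 36.8, 20.4, 12.8, 38.8);
    }

    public static void main(String[] args) {
        List<Tagged> stamped = split(sample());
        System.out.println("high: " + select(stamped, "high"));
        System.out.println("low:  " + select(stamped, "low"));
        System.out.println("all:  " + select(stamped, "high", "low"));
    }
}
```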

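Multi-stream transformation, requirement: split the sensor data by temperature (with 30 degrees as the threshold) into two streams, a low-temperature stream and a high-temperature stream.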

package com.dongda.transform;

import com.dongda.beans.SensorReading;

import org.apache.flink.streaming.api.collector.selector.OutputSelector;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SplitStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import java.util.Collections;

public class TransformTest4_MultipleStreams {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1); // Set the global parallelism to 1 so the printed output is easier to follow

// Read data from a file

DataStream<String> inputStream = env.readTextFile("/Users/haitaoyou/developer/flink/src/main/resources/sensor.txt");

// Lambda version: convert each line into a SensorReading

DataStream<SensorReading> dataStream = inputStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

});

// Split: stamp each reading "high" (above 30 degrees) or "low"

SplitStream<SensorReading> splitStream = dataStream.split(new OutputSelector<SensorReading>() {

@Override

public Iterable<String> select(SensorReading sensorReading) {

return (sensorReading.getTemperature() > 30) ? Collections.singletonList("high") : Collections.singletonList("low");

}

});

// Select: pick the streams back out by tag

DataStream<SensorReading> highTemStream = splitStream.select("high");

DataStream<SensorReading> lowTemStream = splitStream.select("low");

DataStream<SensorReading> allTemStream = splitStream.select("high", "low");

highTemStream.print("high");

lowTemStream.print("low");

allTemStream.print("all");

env.execute();

}

}

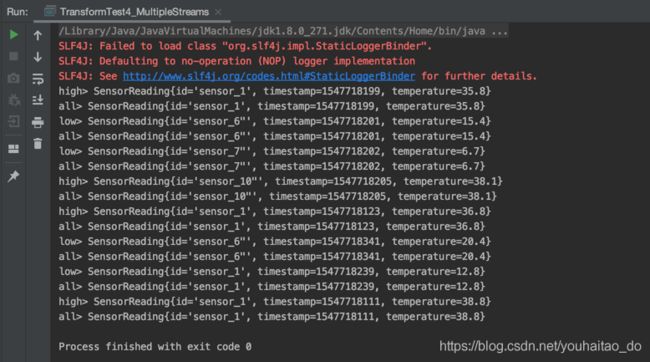

The output is as follows:

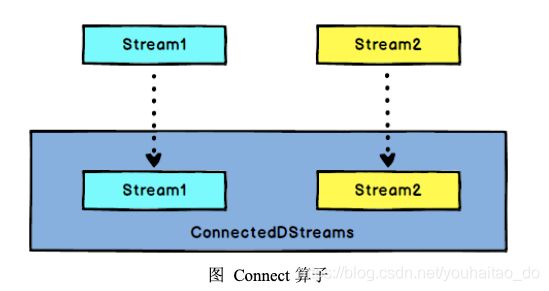

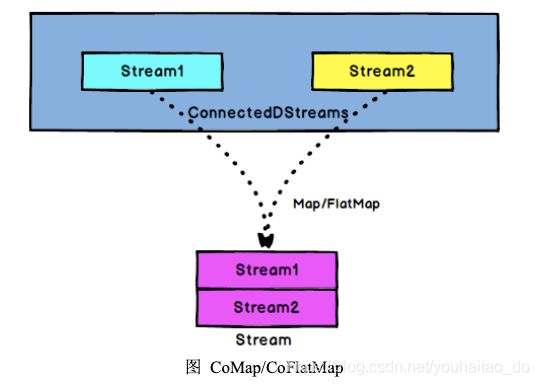

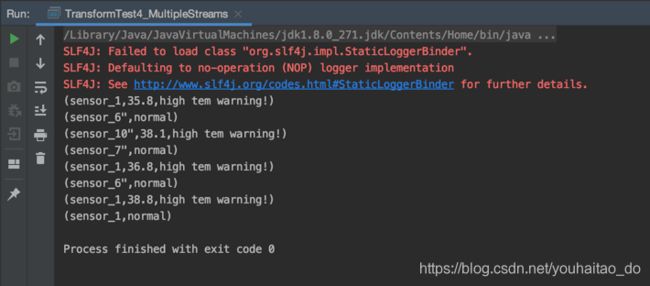

3.8 Connect and CoMap

Connect

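DataStream, DataStream → ConnectedStreams: connects two data streams while preserving their types. After being connected, the two streams are merely placed in the same stream. Internally each one keeps its own data and form unchanged, and the two streams remain independent of each other.

CoMap, CoFlatMap

ConnectedStreams → DataStream: operates on ConnectedStreams and works like map and flatMap, applying a separate map or flatMap to each of the two streams inside the ConnectedStreams.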
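Conceptually, a CoMap is a pair of functions, one per input type, that map both sides of a ConnectedStreams to one common output type. The plain-Java sketch below models this with two java.util.function.Function instances applied to two lists of different types. CoMapper is a hypothetical class, and the message texts are illustrative. Flink handles records from both inputs in arrival order; the sketch simply processes the first input and then the second.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Plain-Java model of connect + CoMap: two inputs of different types, one function per input,
// and a single output type.
class CoMapDemo {

    static final class CoMapper<IN1, IN2, OUT> {
        private final Function<IN1, OUT> map1; // applied to elements of the first input
        private final Function<IN2, OUT> map2; // applied to elements of the second input

        CoMapper(Function<IN1, OUT> map1, Function<IN2, OUT> map2) {
            this.map1 = map1;
            this.map2 = map2;
        }

        // Sequential for simplicity; both inputs keep their own types until they are mapped.
        List<OUT> apply(List<IN1> first, List<IN2> second) {
            List<OUT> out = new ArrayList<>();
            for (IN1 a : first) out.add(map1.apply(a));
            for (IN2 b : second) out.add(map2.apply(b));
            return out;
        }
    }

    static List<String> statusMessages() {
        // First input: (id, temperature) pairs, like the Tuple2<String, Double> warning stream
        List<Map.Entry<String, Double>> warnings = new ArrayList<>();
        warnings.add(new SimpleEntry<String, Double>("sensor_1", 35.8));
        warnings.add(new SimpleEntry<String, Double>("sensor_10", 38.1));
        // Second input: ids standing in for the low-temperature SensorReadings
        List<String> lowIds = Arrays.asList("sensor_6", "sensor_7");

        CoMapper<Map.Entry<String, Double>, String, String> coMap =
                new CoMapper<Map.Entry<String, Double>, String, String>(
                        w -> w.getKey() + " high temp warning: " + w.getValue(),
                        id -> id + " normal");
        return coMap.apply(warnings, lowIds);
    }

    public static void main(String[] args) {
        statusMessages().forEach(System.out::println);
    }
}
```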

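Requirement: merge streams with connect. Convert the high-temperature stream into 2-tuples, connect it with the low-temperature stream, and then output status information.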

package com.dongda.transform;

import com.dongda.beans.SensorReading;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.collector.selector.OutputSelector;

import org.apache.flink.streaming.api.datastream.ConnectedStreams;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.datastream.SplitStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.co.CoMapFunction;

import java.util.Collections;

public class TransformTest4_MultipleStreams {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1); // Set the global parallelism to 1 so the printed output is easier to follow

// Read data from a file

DataStream<String> inputStream = env.readTextFile("/Users/haitaoyou/developer/flink/src/main/resources/sensor.txt");

// Lambda version: convert each line into a SensorReading

DataStream<SensorReading> dataStream = inputStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

});

// 1. Split: stamp each reading "high" (above 30 degrees) or "low"

SplitStream<SensorReading> splitStream = dataStream.split(new OutputSelector<SensorReading>() {

@Override

public Iterable<String> select(SensorReading sensorReading) {

return (sensorReading.getTemperature() > 30) ? Collections.singletonList("high") : Collections.singletonList("low");

}

});

DataStream<SensorReading> highTemStream = splitStream.select("high");

DataStream<SensorReading> lowTemStream = splitStream.select("low");

DataStream<SensorReading> allTemStream = splitStream.select("high", "low");

// highTemStream.print("high");

// lowTemStream.print("low");

// allTemStream.print("all");

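// 2. Merge with connect: convert the high-temperature stream into 2-tuples, connect it with the low-temperature stream, and output status information

SingleOutputStreamOperator<Tuple2<String, Double>> warningStream = highTemStream.map(new MapFunction<SensorReading, Tuple2<String, Double>>() {

@Override

public Tuple2<String, Double> map(SensorReading sensorReading) throws Exception {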

return new Tuple2<>(sensorReading.getId(), sensorReading.getTemperature());

}

});

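ConnectedStreams<Tuple2<String, Double>, SensorReading> connectedStream = warningStream.connect(lowTemStream);

// 3. CoMap: map1 handles the high-temperature tuples, map2 the low-temperature readings (the status strings are illustrative)

SingleOutputStreamOperator<Object> resultStream = connectedStream.map(new CoMapFunction<Tuple2<String, Double>, SensorReading, Object>() {

@Override

public Object map1(Tuple2<String, Double> value) throws Exception {

// High-temperature side: (id, temperature, warning)

return new Tuple3<>(value.f0, value.f1, "high temp warning");

}

@Override

public Object map2(SensorReading value) throws Exception {

// Low-temperature side: (id, status)

return new Tuple2<>(value.getId(), "normal");

}

});

resultStream.print();

env.execute();

}

}

The output is as follows: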

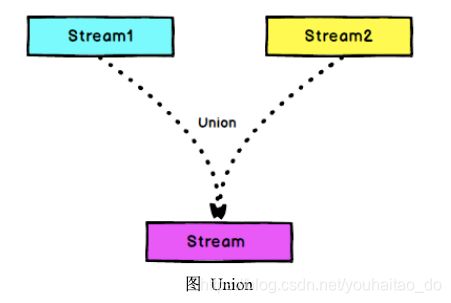

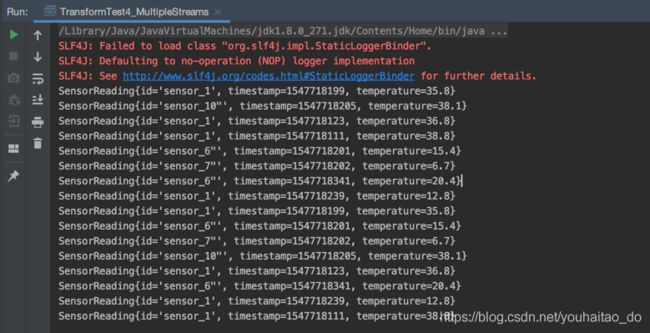

3.9 Union

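We have just seen connect, which merges two streams. connect is very flexible: the two streams can have different data types, so all kinds of data can be brought together and then processed in one place (a bit like "one country, two systems"). But it also has a limitation: it can only connect two streams, which is why the CoMap only implements map1 and map2. So what if we want to merge more than two streams? Union! Unlike connect, it doesn't need a map afterwards, but it requires all merged streams to have the same data type.

To sum up, each has its own strengths. union can merge many streams, but they must all have the same data type. connect allows different data types and converts them afterwards, which makes it very flexible. In practice, connect is used somewhat more often.

DataStream → DataStream: performs a union of two or more DataStreams, producing a new DataStream that contains all the elements of all the input DataStreams.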
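A plain-Java sketch of union's contract follows. Every input must have the same element type; the generic signature enforces this here, just as DataStream<T>.union accepts only DataStream<T> arguments. The result contains every element of every input. In particular, union does not remove duplicates, so merging the high, low and all streams from the split example would deliver each reading twice. The sketch simply concatenates its inputs, whereas Flink interleaves them as records arrive. UnionDemo is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java model of union: same element type for every input, no deduplication.
class UnionDemo {

    // Mirrors the shape of DataStream<T>#union(DataStream<T>...): all inputs share T.
    @SafeVarargs
    static <T> List<T> union(List<T> first, List<T>... others) {
        List<T> out = new ArrayList<>(first);
        for (List<T> other : others) {
            out.addAll(other); // every element of every input is kept, duplicates included
        }
        return out;
    }

    public static void main(String[] args) {
        List<Double> high = Arrays.asList(35.8, 38.1, 36.8, 38.8);
        List<Double> low = Arrays.asList(15.4, 6.7, 20.4, 12.8);
        List<Double> all = new ArrayList<>(high);
        all.addAll(low);
        // 4 + 4 + 8 elements: each reading appears twice in the result
        System.out.println(union(high, low, all).size());
    }
}
```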


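Summary:

At this point we can summarize a bit. Why are all these transformation operators called the DataStream API? Because the basic data structure is DataStream. Its generic type parameter defines the type of the elements inside, and that element type may change during a transformation. Which operations change the stream's own data structure? Simple transformations do not. After keyBy, a DataStream becomes a KeyedStream. A KeyedStream is essentially still a DataStream (KeyedStream is a subclass of DataStream), but it offers many methods that a plain DataStream cannot call, such as the aggregation operations.

The diagram of these type conversions is shown below; DataStream is at its core.

IV. Supported data types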

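A Flink streaming application processes streams of events represented as data objects, so Flink must be able to handle these objects internally. They need to be serialized and deserialized so they can be sent over the network, or read from state backends, checkpoints and savepoints. To do this efficiently, Flink needs to know exactly which data types the application processes. Flink uses the concept of type information to represent data types, and it generates a specific serializer, deserializer and comparator for each data type.

Flink also has a type extraction system that analyzes the input and return types of functions to obtain type information automatically, and from it the serializers and deserializers. In some cases, however, such as lambda functions or generic types, the type information must be provided explicitly for the application to work correctly or to improve its performance.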
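The root cause for lambdas is Java's type erasure: type arguments exist at compile time but mostly disappear at runtime, so reflection-based type extraction cannot always recover them. The plain-Java sketch below demonstrates two facts behind this. First, ArrayList<String> and ArrayList<Integer> share one runtime class. Second, an anonymous class still records its generic interface, while the class generated for a lambda does not. In Flink, the usual remedy is to add a type hint with returns(...), for example .returns(Types.TUPLE(Types.STRING, Types.INT)). ErasureDemo is a hypothetical name.

```java
import java.lang.reflect.ParameterizedType;
import java.lang.reflect.Type;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.Function;

// Illustrates Java type erasure, the reason Flink sometimes cannot infer types from lambdas.
class ErasureDemo {

    // An anonymous class records its generic supertype in its class file...
    static final Function<String, List<String>> ANONYMOUS = new Function<String, List<String>>() {
        @Override
        public List<String> apply(String s) {
            return Collections.singletonList(s);
        }
    };

    // ...whereas the class generated for a lambda implements only the raw interface.
    static final Function<String, List<String>> LAMBDA = s -> Collections.singletonList(s);

    // True if the runtime class of fn still exposes type arguments for the interfaces it implements.
    static boolean hasGenericInterfaceInfo(Object fn) {
        for (Type t : fn.getClass().getGenericInterfaces()) {
            if (t instanceof ParameterizedType) {
                return true;
            }
        }
        return false;
    }

    // Different type arguments, same runtime class.
    static boolean sameRuntimeClass() {
        return new ArrayList<String>().getClass() == new ArrayList<Integer>().getClass();
    }

    public static void main(String[] args) {
        System.out.println("ArrayList<String> and ArrayList<Integer> share a class: " + sameRuntimeClass());
        System.out.println("anonymous class keeps type arguments: " + hasGenericInterfaceInfo(ANONYMOUS));
        System.out.println("lambda keeps type arguments: " + hasGenericInterfaceInfo(LAMBDA));
    }
}
```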

Flink supports all common data types in Java and Scala. The most widely used types are the following.

4.1 Basic data types

Flink supports all Java and Scala basic data types: Int, Double, Long, String, ...

DataStream<Integer> numberStream = env.fromElements(1, 2, 3, 4);

numberStream.map(data -> data * 2);

4.2 Java and Scala tuples

DataStream<Tuple2<String, Integer>> personStream = env.fromElements(

new Tuple2<>("Adam", 17),

new Tuple2<>("Sarah", 23) );

personStream.filter(p -> p.f1 > 18);

4.3 Scala case classes

case class Person(name: String, age: Int)

val persons: DataStream[Person] = env.fromElements(Person("Adam", 17), Person("Sarah", 23) )

persons.filter(p => p.age > 18)

4.4 Java POJOs (plain old Java objects)

public class Person {

public String name;

public int age;

public Person() {}

public Person(String name, int age) {

this.name = name;

this.age = age;

}

}

DataStream<Person> persons = env.fromElements(new Person("Alex", 42), new Person("Wendy", 23));

4.5 Others (Arrays, Lists, Maps, Enums, etc.)

Flink also supports some special-purpose Java and Scala types, such as Java's ArrayList, HashMap, Enum, and so on.

V. Implementing UDFs: finer-grained control over the stream

5.1 Function classes

Flink exposes interfaces for all UDFs (as interfaces or abstract classes), for example MapFunction, FilterFunction, ProcessFunction, and so on.
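Flink's function interfaces all extend java.io.Serializable, because the function object is serialized on the client and deserialized in every parallel task. That is why a value passed to a constructor and stored in a field, like the keyword in the KeyWordFilter example in this section, is still available at runtime. It is also why such fields must themselves be serializable. The plain-Java round trip below illustrates this. SerializableFilter is a hypothetical stand-in for FilterFunction, and plain Java serialization stands in for Flink's deployment mechanism.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Plain-Java illustration of why function objects must be Serializable.
class FunctionShippingDemo {

    // Hypothetical stand-in for Flink's FilterFunction<T> (which also extends Serializable).
    interface SerializableFilter<T> extends Serializable {
        boolean filter(T value);
    }

    // Same shape as a keyword filter: the constructor argument lives in a field.
    static final class KeyWordFilter implements SerializableFilter<String> {
        private final String keyWord;

        KeyWordFilter(String keyWord) {
            this.keyWord = keyWord;
        }

        @Override
        public boolean filter(String value) {
            return value.contains(keyWord);
        }
    }

    // Serializes and deserializes an object, roughly what happens when a job is deployed to workers.
    @SuppressWarnings("unchecked")
    static <T extends Serializable> T roundTrip(T obj) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(obj);
            }
            try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
                return (T) in.readObject();
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        SerializableFilter<String> shipped = roundTrip(new KeyWordFilter("flink"));
        System.out.println(shipped.filter("learning flink")); // the keyword survived the round trip
        System.out.println(shipped.filter("learning spark"));
    }
}
```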

The following example implements the FilterFunction interface:

DataStream<String> flinkTweets = tweets.filter(new FlinkFilter());

public static class FlinkFilter implements FilterFunction<String> {

@Override

public boolean filter(String value) throws Exception {

return value.contains("flink");

}

}

The function can also be implemented as an anonymous class:

DataStream<String> flinkTweets = tweets.filter(new FilterFunction<String>() {

@Override

public boolean filter(String value) throws Exception {

return value.contains("flink");

}

});

The string "flink" that we filter on can also be passed in as a parameter:

DataStream<String> tweets = env.readTextFile("INPUT_FILE");

DataStream<String> flinkTweets = tweets.filter(new KeyWordFilter("flink"));

public static class KeyWordFilter implements FilterFunction<String> {

private String keyWord;

KeyWordFilter(String keyWord) {

this.keyWord = keyWord;

}

@Override

public boolean filter(String value) throws Exception {

return value.contains(this.keyWord);

}

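}

5.2 Lambda functions

DataStream<String> tweets = env.readTextFile("INPUT_FILE");

DataStream<String> flinkTweets = tweets.filter(tweet -> tweet.contains("flink"));

5.3 Rich functions

A "rich function" is a function-class interface provided by the DataStream API, and every Flink function class has a Rich version. It differs from a regular function in that it can access the context of the runtime environment and has lifecycle methods, which lets it implement more complex functionality.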

RichMapFunction

RichFlatMapFunction

RichFilterFunction

...

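Rich functions have a notion of lifecycle. The typical lifecycle methods are:

open() is the initialization method of a rich function. It is called before an operator such as map or filter is invoked.

close() is the last method called in the lifecycle and is used for cleanup work.

getRuntimeContext() provides information from the function's RuntimeContext, such as the parallelism with which the function is executed, the task name, and state.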
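The lifecycle can be modeled in plain Java to make the call order concrete. Each parallel instance has its own copy of the function: open() runs once per instance before any record, map() runs once per record routed to that instance, and close() runs once at the end. So in the example below, which sets the parallelism to 4, "open" and "close" should each be printed four times. LifecycleMapper and the round-robin distribution of records are assumptions of this sketch; they do not reflect how Flink routes data.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java model of the rich-function lifecycle across parallel instances (no Flink involved).
class RichLifecycleDemo {

    // Hypothetical stand-in for a RichMapFunction; it records every lifecycle call it receives.
    static final class LifecycleMapper {
        final List<String> calls = new ArrayList<>();
        private final int subtaskIndex; // what getRuntimeContext().getIndexOfThisSubtask() would return

        LifecycleMapper(int subtaskIndex) {
            this.subtaskIndex = subtaskIndex;
        }

        void open() { calls.add("open"); }   // e.g. open a connection

        String map(String id) {
            calls.add("map");
            return "(" + id + "," + subtaskIndex + ")";
        }

        void close() { calls.add("close"); } // e.g. release the connection
    }

    // One instance per subtask; records are dealt out round-robin (an assumption of this model).
    static List<LifecycleMapper> run(List<String> records, int parallelism) {
        List<LifecycleMapper> instances = new ArrayList<>();
        for (int i = 0; i < parallelism; i++) {
            instances.add(new LifecycleMapper(i));
        }
        instances.forEach(LifecycleMapper::open);  // before any record
        for (int i = 0; i < records.size(); i++) {
            System.out.println(instances.get(i % parallelism).map(records.get(i)));
        }
        instances.forEach(LifecycleMapper::close); // after the last record
        return instances;
    }

    static long count(List<LifecycleMapper> instances, String call) {
        return instances.stream().flatMap(m -> m.calls.stream()).filter(call::equals).count();
    }

    public static void main(String[] args) {
        List<String> ids = Arrays.asList("sensor_1", "sensor_6", "sensor_7", "sensor_10",
                "sensor_1", "sensor_6", "sensor_1", "sensor_1");
        List<LifecycleMapper> instances = run(ids, 4);
        System.out.println("open: " + count(instances, "open") + ", map: " + count(instances, "map")
                + ", close: " + count(instances, "close"));
    }
}
```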

package com.dongda.transform;

import com.dongda.beans.SensorReading;

import org.apache.flink.api.common.functions.RichMapFunction;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class TransformTest5_RichFunction {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

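env.setParallelism(4); // Parallelism 4, so the output shows which of the 4 subtasks processed each record

// Read data from a file

DataStream<String> inputStream = env.readTextFile("/Users/haitaoyou/developer/flink/src/main/resources/sensor.txt");

// Lambda version: convert each line into a SensorReading

DataStream<SensorReading> dataStream = inputStream.map(line -> {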

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

});

DataStream<Tuple2<String, Integer>> resultStream = dataStream.map(new MyMapper());

resultStream.print();

env.execute();

}

private static class MyMapper extends RichMapFunction<SensorReading, Tuple2<String, Integer>> {

@Override

public Tuple2<String, Integer> map(SensorReading sensorReading) throws Exception {

return new Tuple2<>(sensorReading.getId(),getRuntimeContext().getIndexOfThisSubtask());

}

@Override

public void open(Configuration parameters) throws Exception {

// Initialization work goes here, e.g. establishing a connection to HDFS

System.out.println("open");

}

@Override

public void close() throws Exception {

// Cleanup work goes here, e.g. closing the connection to HDFS

System.out.println("close");

}

}

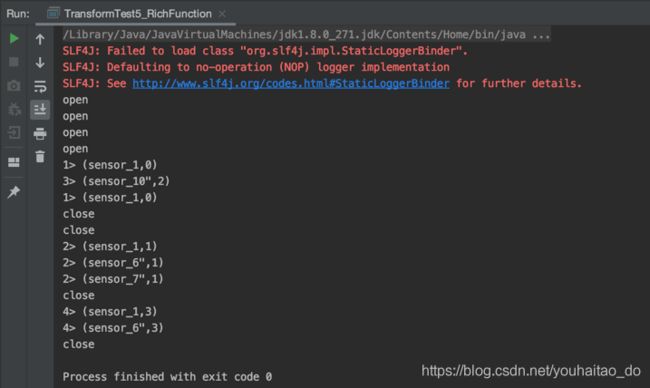

} 输出结果如下:

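VI. Sink

Flink has no method like Spark's foreach that lets users iterate over the data. All output to external systems has to go through a Sink. The final output of a job is produced in a way similar to the following: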

stream.addSink(new MySink(xxxx))

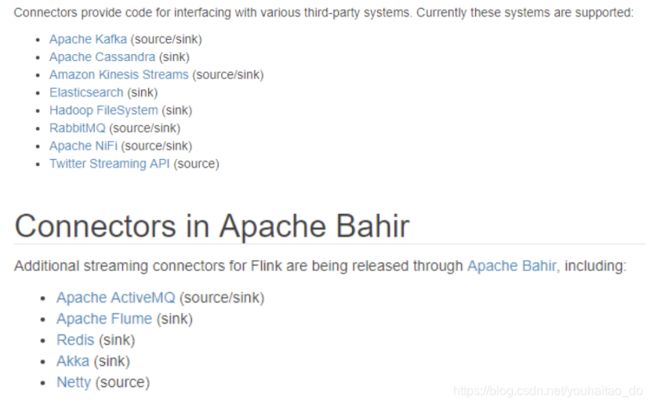

Flink officially provides sinks for a number of frameworks; for anything else, you need to implement a custom sink.

6.1 Kafka

package com.dongda.sink;

import com.dongda.beans.SensorReading;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer011;

public class SinkTest1_Kafka {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

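env.setParallelism(1); // Set the global parallelism to 1 so the printed output is easier to follow

// Read data from a file

DataStream<String> inputStream = env.readTextFile("/Users/haitaoyou/developer/flink/src/main/resources/sensor.txt");

// Lambda version: parse each line into a SensorReading and convert it to a String

DataStream<String> dataStream = inputStream.map(line -> {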

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2])).toString();

});

// Add the Kafka sink, writing to topic "sinkTest" on the same broker as the Kafka source example

dataStream.addSink(new FlinkKafkaProducer011<String>("localhost:9092", "sinkTest", new SimpleStringSchema()));

env.execute();

}

}

6.2 Redis

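Requirement: write the incoming sensor data into Redis in real time, keeping only the latest value for each sensor.

Add the pom dependency:

groupId: org.apache.bahir, artifactId: flink-connector-redis_2.11, version: 1.0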

package com.dongda.sink;

import com.dongda.beans.SensorReading;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.redis.RedisSink;

import org.apache.flink.streaming.connectors.redis.common.config.FlinkJedisPoolConfig;

import org.apache.flink.streaming.connectors.redis.common.mapper.RedisCommand;

import org.apache.flink.streaming.connectors.redis.common.mapper.RedisCommandDescription;

import org.apache.flink.streaming.connectors.redis.common.mapper.RedisMapper;

public class SinkTest2_Redis {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

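env.setParallelism(1); // Set the global parallelism to 1 so the printed output is easier to follow

// Read data from a file

DataStream<String> inputStream = env.readTextFile("/Users/haitaoyou/developer/flink/src/main/resources/sensor.txt");

// Lambda version: convert each line into a SensorReading

DataStream<SensorReading> dataStream = inputStream.map(line -> {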

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

});

// Define the Jedis connection configuration

FlinkJedisPoolConfig config = new FlinkJedisPoolConfig.Builder()

.setHost("localhost")

.setPort(6379)

.build();

dataStream.addSink(new RedisSink<>(config, new MyRedisMapper()));

env.execute();

}

// Custom RedisMapper

private static class MyRedisMapper implements RedisMapper<SensorReading> {

// Define the Redis command used to save the data: store it as a hash, i.e. HSET sensor_temp <id> <temperature>

@Override

public RedisCommandDescription getCommandDescription() { // Describes which Redis command is sent

return new RedisCommandDescription(RedisCommand.HSET,"sensor_temp");

}

@Override

public String getKeyFromData(SensorReading sensorReading) {

return sensorReading.getId();

}

@Override

public String getValueFromData(SensorReading sensorReading) {

return sensorReading.getTemperature().toString();

}

}

}

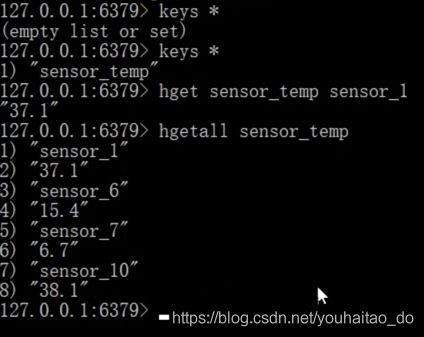

The output is as follows:

6.3 Elasticsearch

Add the pom dependency:

groupId: org.apache.flink, artifactId: flink-connector-elasticsearch6_2.12, version: 1.10.1

package com.dongda.sink;

import com.dongda.beans.SensorReading;

import org.apache.flink.api.common.functions.RuntimeContext;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.elasticsearch.ElasticsearchSinkFunction;

import org.apache.flink.streaming.connectors.elasticsearch.RequestIndexer;

import org.apache.flink.streaming.connectors.elasticsearch6.ElasticsearchSink;

import org.apache.http.HttpHost;

import org.elasticsearch.action.index.IndexRequest;

import org.elasticsearch.client.Requests;

import java.util.ArrayList;

import java.util.HashMap;

public class SinkTest3_ElasticSearch {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1); // Set the global parallelism to 1 so the printed output is easier to follow

// Read data from a file

DataStream<String> inputStream = env.readTextFile("/Users/haitaoyou/developer/flink/src/main/resources/sensor.txt");

// Lambda version: convert each line into a SensorReading

DataStream<SensorReading> dataStream = inputStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

});

// Define the Elasticsearch connection configuration

ArrayList<HttpHost> httpHosts = new ArrayList<>();

httpHosts.add(new HttpHost("localhost",9200));

dataStream.addSink(new ElasticsearchSink.Builder<>(httpHosts, new MyEsSinkFunction()).build());

env.execute();

}

// Custom ElasticsearchSinkFunction that performs the writes to Elasticsearch

private static class MyEsSinkFunction implements ElasticsearchSinkFunction<SensorReading> {

@Override

public void process(SensorReading sensorReading, RuntimeContext runtimeContext, RequestIndexer requestIndexer) {

// 1. Define the document source to be written

HashMap<String, String> dataSource = new HashMap<>();

dataSource.put("id",sensorReading.getId());

dataSource.put("temp",sensorReading.getTemperature().toString());

dataSource.put("ts",sensorReading.getTimestamp().toString());

// 2. Create the index request that serves as the write command sent to Elasticsearch

IndexRequest indexRequest = Requests.indexRequest()

.index("sensor")

.type("readingdata")

.source(dataSource);

// 3. Send the request through the RequestIndexer

requestIndexer.add(indexRequest);

}

}

}

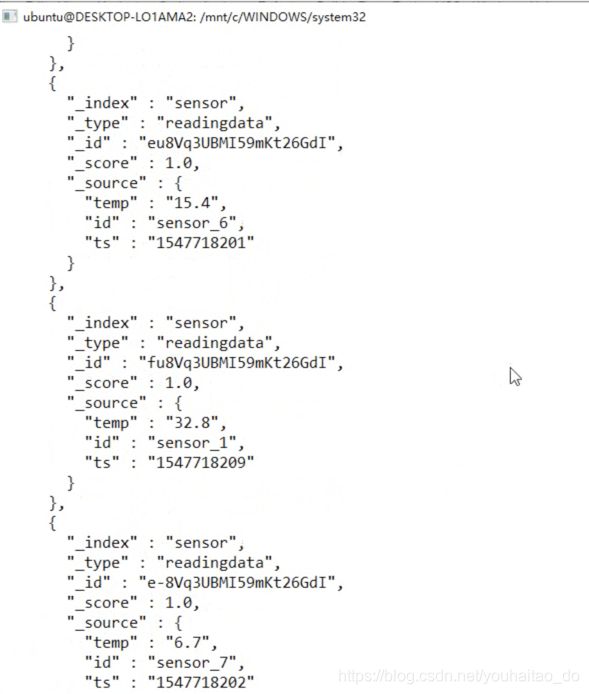

The output is as follows:

Command executed:

Result:

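6.4 Custom JDBC sink

The previous scenarios inserted data into Kafka, Redis and Elasticsearch. What if we want to write the data directly into MySQL? This need does come up in production, because the business database is often MySQL. However, Flink 1.10 offers no ready-made MySQL connector that we can use directly (later versions do), so we write a custom Sink instead.

Add the mysql-connector pom dependency:

groupId: mysql, artifactId: mysql-connector-java, version: 5.1.44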

package com.dongda.sink;

import com.dongda.beans.SensorReading;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

import org.apache.flink.streaming.api.functions.sink.SinkFunction;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.PreparedStatement;

public class SinkTest4_Jdbc {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1); // Set the global parallelism to 1 so the printed output is easier to follow

// Read data from a file

DataStream<String> inputStream = env.readTextFile("/Users/haitaoyou/developer/flink/src/main/resources/sensor.txt");

// Lambda version: convert each line into a SensorReading

DataStream<SensorReading> dataStream = inputStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

});

dataStream.addSink(new MyJdbcSink());

env.execute();

}

// Custom SinkFunction

private static class MyJdbcSink extends RichSinkFunction<SensorReading> {

// Declare the connection and the prepared statements

Connection connection=null;

PreparedStatement insertStmt=null;

PreparedStatement updateStmt=null;

@Override

public void open(Configuration parameters) throws Exception {

connection= DriverManager.getConnection("jdbc:mysql://localhost:3306/test","root","123456");

insertStmt=connection.prepareStatement("insert into sensor_temp(id,temp) values(?,?)");

updateStmt=connection.prepareStatement("update sensor_temp set temp= ? where id = ?");

}

// For each incoming record, use the connection to execute SQL

@Override

public void invoke(SensorReading value, Context context) throws Exception {

// Run the update first; if no row was updated, insert instead

updateStmt.setDouble(1,value.getTemperature());

updateStmt.setString(2,value.getId());

updateStmt.execute();

if (updateStmt.getUpdateCount()==0){

insertStmt.setString(1,value.getId());

insertStmt.setDouble(2,value.getTemperature());

insertStmt.execute();

}

}

// Clean up at the end: close the statements and the connection

@Override

public void close() throws Exception {

insertStmt.close();

updateStmt.close();

connection.close();

}

}

}

The output shows that each row holds the latest data.
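The update-then-insert logic in invoke() can be checked without a database. In the sketch below, a Map is a hypothetical in-memory stand-in for the sensor_temp table, and the lines from sensor.txt are replayed in order. An update that touches no rows falls through to an insert, so the table ends up with one row per sensor holding the most recently received temperature. Note that update-then-insert is not atomic; with a primary key on id, MySQL's INSERT ... ON DUPLICATE KEY UPDATE is a common single-statement alternative.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Plain-Java check of MyJdbcSink's update-then-insert logic. The Map stands in for the
// sensor_temp table; no JDBC driver or database is involved.
class UpsertSinkDemo {
    final Map<String, Double> table = new LinkedHashMap<>(); // id -> temp
    int inserts = 0;
    int updates = 0;

    // Like "update sensor_temp set temp = ? where id = ?": returns the number of rows updated.
    int executeUpdate(String id, double temp) {
        if (!table.containsKey(id)) {
            return 0;
        }
        table.put(id, temp);
        return 1;
    }

    // Like "insert into sensor_temp(id, temp) values(?, ?)".
    void executeInsert(String id, double temp) {
        table.put(id, temp);
    }

    // Same control flow as invoke(): try the update first, insert only if no row was updated.
    void invoke(String id, double temp) {
        if (executeUpdate(id, temp) == 0) {
            executeInsert(id, temp);
            inserts++;
        } else {
            updates++;
        }
    }

    // Replays "id,timestamp,temperature" lines through the sink in order.
    static UpsertSinkDemo replay(String... lines) {
        UpsertSinkDemo sink = new UpsertSinkDemo();
        for (String line : lines) {
            String[] f = line.split(",");
            sink.invoke(f[0].trim(), Double.parseDouble(f[2].trim()));
        }
        return sink;
    }

    public static void main(String[] args) {
        UpsertSinkDemo sink = replay(
                "sensor_1,1547718199,35.8", "sensor_6,1547718201,15.4", "sensor_7,1547718202,6.7",
                "sensor_10,1547718205,38.1", "sensor_1,1547718123,36.8", "sensor_6,1547718341,20.4",
                "sensor_1,1547718239,12.8", "sensor_1,1547718111,38.8");
        System.out.println(sink.table + " inserts=" + sink.inserts + " updates=" + sink.updates);
    }
}
```

With the eight lines of sensor.txt, the table ends with sensor_1 = 38.8, sensor_6 = 20.4, sensor_7 = 6.7 and sensor_10 = 38.1, after 4 inserts and 4 updates.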