Paddle Image Segmentation from Beginner to Practice (Part 2): FCN and Unet

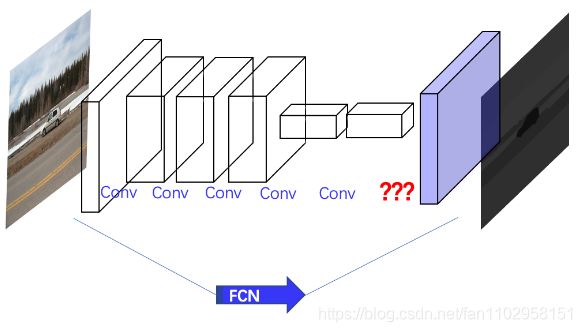

Fully Convolutional Networks (FCN)

What is an FCN? A fully convolutional network is a network without fully connected (FC) layers.

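How is semantic segmentation done? Semantic segmentation is pixel-level classification: every pixel is assigned a class label.

Pixel-level classification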

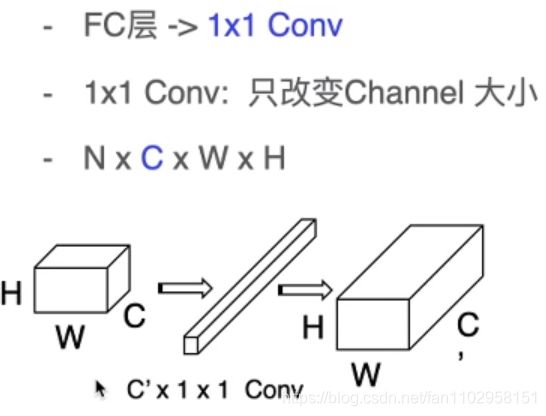

How does it relate to image classification? Replace the FC layers of a classification network with Conv layers.
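To see why this replacement works, here is a small NumPy sketch with made-up sizes (an illustration, not Paddle code; `conv2d_valid` is a hypothetical helper). An FC layer applied to a flattened C x H x W feature map computes exactly what a convolution with C x H x W kernels computes on that map, and the convolution can additionally slide over a larger map to produce a spatial score map. This is the idea behind `fc6 = Conv2D(512, 4096, 7)` in the FCN8s code, which takes the place of VGG's `Linear(input_dim=512 * 7 * 7, output_dim=4096)`.

```python
import numpy as np

def conv2d_valid(x, kernels):
    """Naive 'valid' convolution (cross-correlation, stride 1, no padding).
    x: (C, H, W), kernels: (O, C, kh, kw) -> (O, H - kh + 1, W - kw + 1)."""
    O, C, kh, kw = kernels.shape
    _, H, W = x.shape
    out = np.zeros((O, H - kh + 1, W - kw + 1))
    for i in range(H - kh + 1):
        for j in range(W - kw + 1):
            patch = x[:, i:i + kh, j:j + kw]
            out[:, i, j] = np.tensordot(kernels, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out

rng = np.random.default_rng(0)
C, H, W, num_out = 4, 3, 3, 5
x = rng.standard_normal((C, H, W))                   # feature map entering the FC layer
weight = rng.standard_normal((num_out, C * H * W))   # FC weight matrix

# FC layer: flatten the map, then a matrix-vector product
fc_out = weight @ x.reshape(-1)

# The same weights reshaped into num_out kernels of size C x H x W
kernels = weight.reshape(num_out, C, H, W)
conv_out = conv2d_valid(x, kernels)                  # shape (num_out, 1, 1)
print(np.allclose(conv_out[:, 0, 0], fc_out))        # True

# Unlike the FC layer, the conv also accepts a larger input and returns a score map
x_big = rng.standard_normal((C, H + 2, W + 2))
score_map = conv2d_valid(x_big, kernels)
print(score_map.shape)                               # (5, 3, 3)
```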

1x1 Conv

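A 1x1 convolution changes only the channel dimension ("C"): it reduces or increases the number of channels, while H and W stay the same.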
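In other words, a 1x1 convolution is a matrix multiplication over the channel axis, applied independently at every pixel. A NumPy sketch with arbitrary sizes (64 input channels reduced to 16) checks this:

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_normal((64, 8, 8))    # (C_in, H, W)
w = rng.standard_normal((16, 64))      # 1x1 kernels: (C_out, C_in)

# 1x1 conv: mix channels at every pixel (h, w) separately
y = np.einsum('oc,chw->ohw', w, x)

# Equivalent: flatten the pixels, do one matrix product, reshape back
y2 = (w @ x.reshape(64, -1)).reshape(16, 8, 8)

print(y.shape)                                 # (16, 8, 8): only C changed
print(np.allclose(y, y2))                      # True
print(np.allclose(y[:, 3, 5], w @ x[:, 3, 5])) # True: one pixel = one mat-vec product
```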

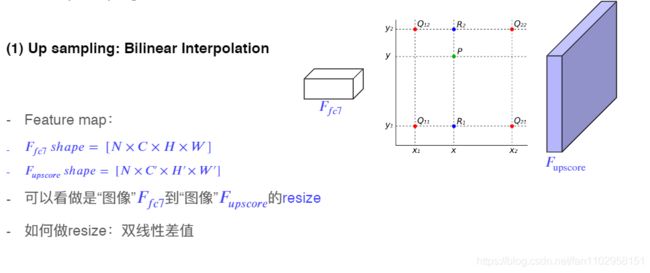

Upsample

Purpose: restore a feature map whose H and W have been shrunk back to the same size as the input image.

Up-sampling

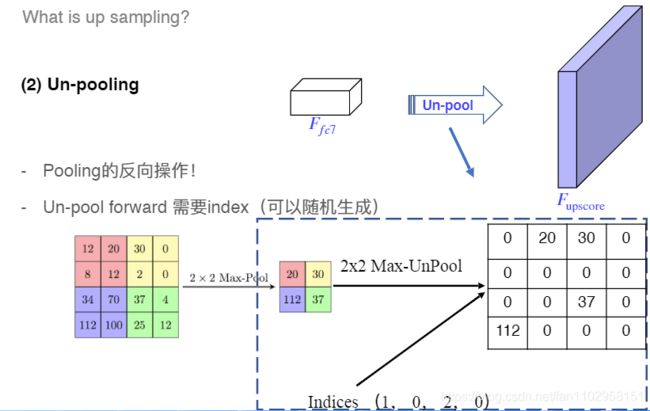
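One simple up-sampling scheme is nearest-neighbour interpolation: each value is copied into a `scale x scale` block. A minimal NumPy sketch for a (C, H, W) array (`upsample_nearest` is a hypothetical helper name):

```python
import numpy as np

def upsample_nearest(x, scale=2):
    """Nearest-neighbour up-sampling of a (C, H, W) array by an integer factor."""
    return x.repeat(scale, axis=1).repeat(scale, axis=2)

x = np.array([[[1, 2],
               [3, 4]]])
print(upsample_nearest(x)[0])
# [[1 1 2 2]
#  [1 1 2 2]
#  [3 3 4 4]
#  [3 3 4 4]]
```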

Un-pooling

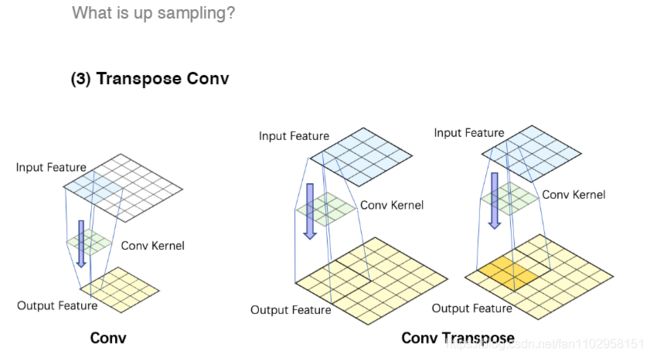

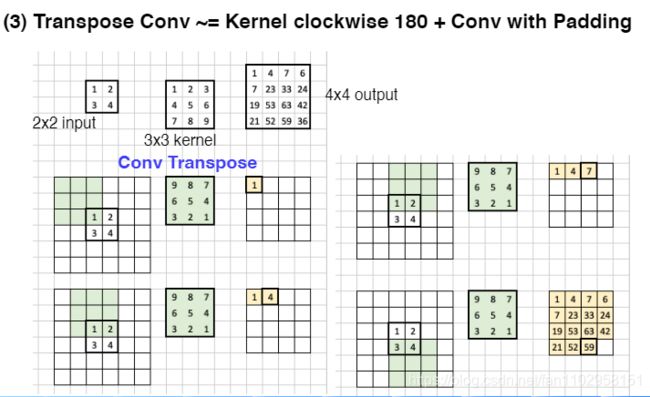
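Max un-pooling is commonly implemented by remembering where each maximum came from during max pooling and writing the pooled values back to those positions, with zeros everywhere else. The sketch below assumes 2x2 windows, stride 2 and a single-channel map whose sides are divisible by 2; both helper names are hypothetical.

```python
import numpy as np

def max_pool_with_indices(x, k=2):
    """k x k max pooling (stride k) of a (H, W) array; also returns argmax positions."""
    H, W = x.shape
    pooled = np.zeros((H // k, W // k), dtype=x.dtype)
    indices = np.zeros((H // k, W // k, 2), dtype=int)
    for i in range(H // k):
        for j in range(W // k):
            window = x[i * k:(i + 1) * k, j * k:(j + 1) * k]
            r, c = np.unravel_index(np.argmax(window), window.shape)
            pooled[i, j] = window[r, c]
            indices[i, j] = (i * k + r, j * k + c)   # position in the input map
    return pooled, indices

def max_unpool(pooled, indices, out_shape):
    """Put each pooled value back where it came from; all other positions are 0."""
    out = np.zeros(out_shape, dtype=pooled.dtype)
    for i in range(pooled.shape[0]):
        for j in range(pooled.shape[1]):
            r, c = indices[i, j]
            out[r, c] = pooled[i, j]
    return out

x = np.array([[1, 5, 2, 0],
              [3, 4, 8, 1],
              [0, 2, 1, 1],
              [7, 1, 3, 6]])
pooled, idx = max_pool_with_indices(x)
unpooled = max_unpool(pooled, idx, x.shape)
print(pooled)     # [[5 8]
                  #  [7 6]]
print(unpooled)   # [[0 5 0 0]
                  #  [0 0 8 0]
                  #  [0 0 0 0]
                  #  [7 0 0 6]]
```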

Transpose Conv

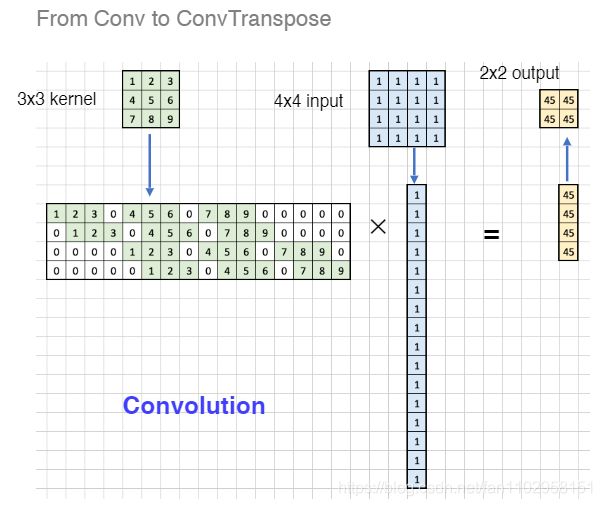

In practice, computers carry out convolution by converting it into a matrix multiplication:

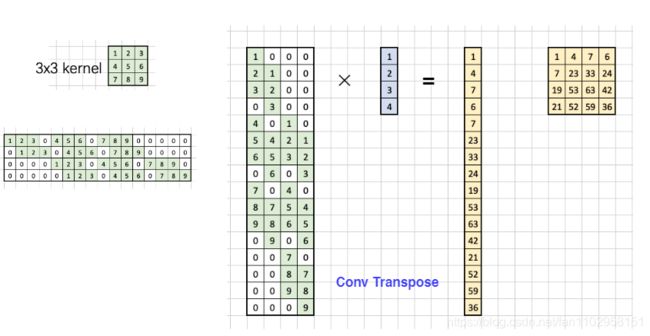

Likewise, when transposed convolution (deconvolution) is converted into a matrix operation, it becomes:

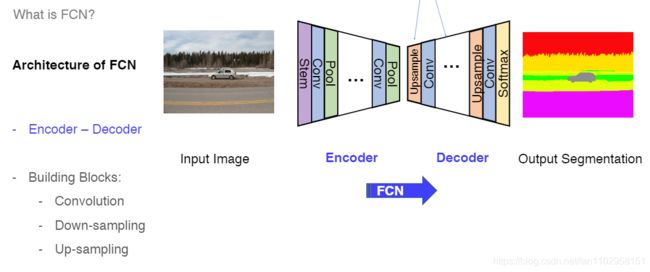

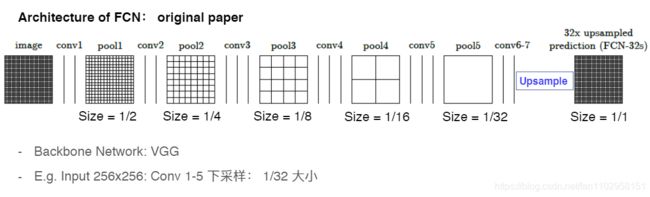

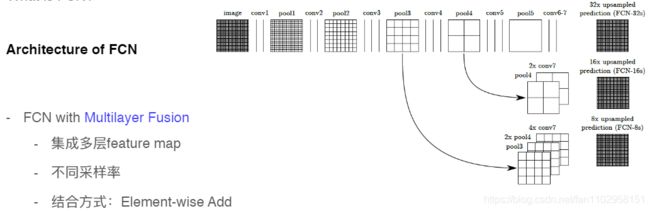
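The matrix view can be checked numerically. This NumPy sketch assumes stride 1 and no padding; `conv_matrix` and `transposed_conv` are hypothetical helpers. It builds the matrix C for a 3x3 kernel on a 4x4 input, so the convolution maps 16 values to 4. Multiplying by the transpose C^T maps 4 values back to 16 and matches a direct transposed convolution, in which every input value scatters a scaled copy of the kernel into the output. Note that C^T restores the shape, not the original values: a transposed convolution is not an inverse convolution.

```python
import numpy as np

def conv_matrix(kernel, in_size):
    """Matrix C with C @ x.ravel() == 'valid' stride-1 convolution
    (cross-correlation) of an in_size x in_size input with a k x k kernel."""
    k = kernel.shape[0]
    out_size = in_size - k + 1
    C = np.zeros((out_size * out_size, in_size * in_size))
    for i in range(out_size):
        for j in range(out_size):
            row = np.zeros((in_size, in_size))
            row[i:i + k, j:j + k] = kernel        # kernel placed under output pixel (i, j)
            C[i * out_size + j] = row.ravel()
    return C

def transposed_conv(y, kernel):
    """Direct transposed convolution (stride 1, no padding)."""
    k = kernel.shape[0]
    n = y.shape[0]
    out = np.zeros((n + k - 1, n + k - 1))
    for i in range(n):
        for j in range(n):
            out[i:i + k, j:j + k] += y[i, j] * kernel   # scatter a scaled kernel
    return out

kernel = np.arange(1, 10, dtype=float).reshape(3, 3)
x = np.arange(16, dtype=float).reshape(4, 4)

C = conv_matrix(kernel, 4)                 # shape (4, 16)
y = (C @ x.ravel()).reshape(2, 2)          # convolution: 16 -> 4 values
x_up = (C.T @ y.ravel()).reshape(4, 4)     # transposed convolution: 4 -> 16 values

print(C.shape)                                     # (4, 16)
print(np.allclose(x_up, transposed_conv(y, kernel)))  # True
```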

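FCN Architecture

Encoder and Decoder

The input image first passes through convolution and pooling layers (the encoder), which produce a feature map with much smaller height and width. Convolution and up-sampling layers (the decoder) then restore it to an output map that has the same height and width as the input image and one channel per class.

For good segmentation, classifying a pixel should not rely on that pixel alone; it should also use information from the surrounding pixels, or even from the whole image. However, the surrounding context can suggest different answers depending on how large a neighbourhood is considered. To find the "right" answer reliably, the safest approach is to combine information from neighbourhoods of several sizes, which is why FCN uses a "Multilayer Fusion" structure.

In short, feature maps of the same size but with different receptive fields, one from the decoder and one from the encoder, are added together element-wise.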
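A NumPy sketch of FCN-8s-style fusion, under simplifying assumptions: random arrays stand in for the class-score maps predicted from pool5 (1/32), pool4 (1/16) and pool3 (1/8), and nearest-neighbour up-sampling stands in for FCN's learned transposed convolutions.

```python
import numpy as np

def upsample(score, factor):
    """Nearest-neighbour up-sampling of a (num_classes, H, W) map
    (a stand-in for FCN's learned transposed convolutions)."""
    return score.repeat(factor, axis=1).repeat(factor, axis=2)

num_classes, size = 3, 64
rng = np.random.default_rng(0)
# Hypothetical class-score maps from three encoder depths
score_pool5 = rng.standard_normal((num_classes, size // 32, size // 32))  # 2x2, largest receptive field
score_pool4 = rng.standard_normal((num_classes, size // 16, size // 16))  # 4x4
score_pool3 = rng.standard_normal((num_classes, size // 8, size // 8))    # 8x8, finest detail

fused_16 = upsample(score_pool5, 2) + score_pool4   # same size, element-wise sum
fused_8 = upsample(fused_16, 2) + score_pool3
scores = upsample(fused_8, 8)                       # back to the input size
labels = scores.argmax(axis=0)                      # pixel-level classification
print(fused_8.shape, labels.shape)                  # (3, 8, 8) (64, 64)
```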

Network code (PaddlePaddle)

backbone VGG

```python
import numpy as np
import paddle.fluid as fluid
from paddle.fluid.dygraph import to_variable
from paddle.fluid.dygraph import Conv2D
from paddle.fluid.dygraph import Dropout
from paddle.fluid.dygraph import BatchNorm
from paddle.fluid.dygraph import Pool2D
from paddle.fluid.dygraph import Linear

# Paths of pretrained weights; uncomment the entries you have downloaded
model_path = {
    # 'vgg16': './vgg16',
    # 'vgg16bn': './vgg16_bn',
    # 'vgg19': './vgg19',
    # 'vgg19bn': './vgg19_bn'
}


class ConvBNLayer(fluid.dygraph.Layer):
    """Conv2D with 'same' padding, optionally followed by BatchNorm."""
    def __init__(self,
                 num_channels,
                 num_filters,
                 filter_size=3,
                 stride=1,
                 groups=1,
                 use_bn=True,
                 act='relu',
                 name=None):
        super(ConvBNLayer, self).__init__(name)
        self.use_bn = use_bn
        if use_bn:
            # the activation is applied after BatchNorm
            self.conv = Conv2D(num_channels=num_channels,
                               num_filters=num_filters,
                               filter_size=filter_size,
                               stride=stride,
                               padding=(filter_size - 1) // 2,
                               groups=groups,
                               act=None,
                               bias_attr=None)
            self.bn = BatchNorm(num_filters, act=act)
        else:
            self.conv = Conv2D(num_channels=num_channels,
                               num_filters=num_filters,
                               filter_size=filter_size,
                               stride=stride,
                               padding=(filter_size - 1) // 2,
                               groups=groups,
                               act=act,
                               bias_attr=None)

    def forward(self, inputs):
        y = self.conv(inputs)
        if self.use_bn:
            y = self.bn(y)
        return y


class VGG(fluid.dygraph.Layer):
    def __init__(self, layers=16, use_bn=False, num_classes=1000):
        super(VGG, self).__init__()
        self.layers = layers
        self.use_bn = use_bn
        supported_layers = [16, 19]
        assert layers in supported_layers

        # number of conv layers in each of the 5 stages
        if layers == 16:
            depth = [2, 2, 3, 3, 3]
        elif layers == 19:
            depth = [2, 2, 4, 4, 4]

        num_channels = [3, 64, 128, 256, 512]
        num_filters = [64, 128, 256, 512, 512]

        self.layer1 = fluid.dygraph.Sequential(*self.make_layer(num_channels[0], num_filters[0], depth[0], use_bn, name='layer1'))
        self.layer2 = fluid.dygraph.Sequential(*self.make_layer(num_channels[1], num_filters[1], depth[1], use_bn, name='layer2'))
        self.layer3 = fluid.dygraph.Sequential(*self.make_layer(num_channels[2], num_filters[2], depth[2], use_bn, name='layer3'))
        self.layer4 = fluid.dygraph.Sequential(*self.make_layer(num_channels[3], num_filters[3], depth[3], use_bn, name='layer4'))
        self.layer5 = fluid.dygraph.Sequential(*self.make_layer(num_channels[4], num_filters[4], depth[4], use_bn, name='layer5'))

        self.classifier = fluid.dygraph.Sequential(
            Linear(input_dim=512 * 7 * 7, output_dim=4096, act='relu'),
            Dropout(),
            Linear(input_dim=4096, output_dim=4096, act='relu'),
            Dropout(),
            Linear(input_dim=4096, output_dim=num_classes))
        self.out_dim = 512 * 7 * 7

    def forward(self, inputs):
        x = self.layer1(inputs)
        x = fluid.layers.pool2d(x, pool_size=2, pool_stride=2)  # 1/2
        x = self.layer2(x)
        x = fluid.layers.pool2d(x, pool_size=2, pool_stride=2)  # 1/4
        x = self.layer3(x)
        x = fluid.layers.pool2d(x, pool_size=2, pool_stride=2)  # 1/8
        x = self.layer4(x)
        x = fluid.layers.pool2d(x, pool_size=2, pool_stride=2)  # 1/16
        x = self.layer5(x)
        x = fluid.layers.pool2d(x, pool_size=2, pool_stride=2)  # 1/32
        x = fluid.layers.adaptive_pool2d(x, pool_size=(7, 7), pool_type='avg')
        x = fluid.layers.reshape(x, shape=[-1, self.out_dim])
        x = self.classifier(x)
        return x

    def make_layer(self, num_channels, num_filters, depth, use_bn, name=None):
        layers = []
        layers.append(ConvBNLayer(num_channels, num_filters, use_bn=use_bn, name=f'{name}.0'))
        for i in range(1, depth):
            layers.append(ConvBNLayer(num_filters, num_filters, use_bn=use_bn, name=f'{name}.{i}'))
        return layers


def VGG16(pretrained=False):
    model = VGG(layers=16)
    if pretrained:
        model_dict, _ = fluid.load_dygraph(model_path['vgg16'])
        model.set_dict(model_dict)
    return model


def VGG16BN(pretrained=False):
    model = VGG(layers=16, use_bn=True)
    if pretrained:
        model_dict, _ = fluid.load_dygraph(model_path['vgg16bn'])
        model.set_dict(model_dict)
    return model


def VGG19(pretrained=False):
    model = VGG(layers=19)
    if pretrained:
        model_dict, _ = fluid.load_dygraph(model_path['vgg19'])
        model.set_dict(model_dict)
    return model


def VGG19BN(pretrained=False):
    model = VGG(layers=19, use_bn=True)
    if pretrained:
        model_dict, _ = fluid.load_dygraph(model_path['vgg19bn'])
        model.set_dict(model_dict)
    return model


def main():
    with fluid.dygraph.guard():
        x_data = np.random.rand(2, 3, 224, 224).astype(np.float32)
        x = to_variable(x_data)

        model = VGG16()
        model.eval()
        pred = model(x)
        print('vgg16: pred.shape = ', pred.shape)

        model = VGG16BN()
        model.eval()
        pred = model(x)
        print('vgg16bn: pred.shape = ', pred.shape)

        model = VGG19()
        model.eval()
        pred = model(x)
        print('vgg19: pred.shape = ', pred.shape)

        model = VGG19BN()
        model.eval()
        pred = model(x)
        print('vgg19bn: pred.shape = ', pred.shape)


if __name__ == "__main__":
    main()
```

FCN8s

```python
import numpy as np
import paddle.fluid as fluid
from paddle.fluid.dygraph import to_variable
from paddle.fluid.dygraph import Conv2D
from paddle.fluid.dygraph import Conv2DTranspose
from paddle.fluid.dygraph import Dropout
from paddle.fluid.dygraph import BatchNorm
from paddle.fluid.dygraph import Pool2D
from paddle.fluid.dygraph import Linear
from vgg import VGG16BN  # the VGG backbone code above, saved as vgg.py


# create fcn8s model
class FCN8s(fluid.dygraph.Layer):
    def __init__(self, num_classes=59):
        super(FCN8s, self).__init__()
        backbone = VGG16BN(pretrained=False)

        self.layer1 = backbone.layer1
        # pad the first conv by 100 so that, for small input images, the feature
        # map does not vanish by the time it is pooled down to the middle layers
        self.layer1[0].conv._padding = [100, 100]
        self.pool1 = Pool2D(pool_size=2, pool_stride=2, ceil_mode=True)
        self.layer2 = backbone.layer2
        self.pool2 = Pool2D(pool_size=2, pool_stride=2, ceil_mode=True)
        self.layer3 = backbone.layer3
        self.pool3 = Pool2D(pool_size=2, pool_stride=2, ceil_mode=True)
        self.layer4 = backbone.layer4
        self.pool4 = Pool2D(pool_size=2, pool_stride=2, ceil_mode=True)
        self.layer5 = backbone.layer5
        self.pool5 = Pool2D(pool_size=2, pool_stride=2, ceil_mode=True)

        # VGG's fully connected layers rewritten as convolutions
        self.fc6 = Conv2D(512, 4096, 7, act='relu')
        self.fc7 = Conv2D(4096, 4096, 1, act='relu')
        self.drop6 = Dropout()
        self.drop7 = Dropout()

        # 1x1 convs that turn features into per-class scores
        self.score = Conv2D(4096, num_classes, 1)
        self.score_pool3 = Conv2D(256, num_classes, 1)
        self.score_pool4 = Conv2D(512, num_classes, 1)

        # transposed convs: 2x, 2x and finally 8x up-sampling
        self.up_output = Conv2DTranspose(num_channels=num_classes,
                                         num_filters=num_classes,
                                         filter_size=4,
                                         stride=2,
                                         bias_attr=False)
        self.up_pool4 = Conv2DTranspose(num_channels=num_classes,
                                        num_filters=num_classes,
                                        filter_size=4,
                                        stride=2,
                                        bias_attr=False)
        self.up_final = Conv2DTranspose(num_channels=num_classes,
                                        num_filters=num_classes,
                                        filter_size=16,
                                        stride=8,
                                        bias_attr=False)

    def forward(self, inputs):
        x = self.layer1(inputs)
        x = self.pool1(x)  # 1/2
        x = self.layer2(x)
        x = self.pool2(x)  # 1/4
        x = self.layer3(x)
        x = self.pool3(x)  # 1/8
        pool3 = x
        x = self.layer4(x)
        x = self.pool4(x)  # 1/16
        pool4 = x
        x = self.layer5(x)
        x = self.pool5(x)  # 1/32

        x = self.fc6(x)
        x = self.drop6(x)
        x = self.fc7(x)
        x = self.drop7(x)
        x = self.score(x)
        x = self.up_output(x)
        up_output = x  # 1/16

        # crop the pool4 scores to up_output's size (offset 5) and fuse by addition
        x = self.score_pool4(pool4)
        x = x[:, :, 5:5 + up_output.shape[2], 5:5 + up_output.shape[3]]
        up_pool4 = x
        x = up_pool4 + up_output
        x = self.up_pool4(x)
        up_pool4 = x  # 1/8

        # crop the pool3 scores (offset 9) and fuse by addition
        x = self.score_pool3(pool3)
        x = x[:, :, 9:9 + up_pool4.shape[2], 9:9 + up_pool4.shape[3]]
        up_pool3 = x  # 1/8
        x = up_pool3 + up_pool4

        # up-sample 8x and crop (offset 31) back to the input size
        x = self.up_final(x)
        x = x[:, :, 31:31 + inputs.shape[2], 31:31 + inputs.shape[3]]
        return x


def main():
    with fluid.dygraph.guard():
        x_data = np.random.rand(2, 3, 512, 512).astype(np.float32)
        x = to_variable(x_data)
        model = FCN8s(num_classes=59)
        model.eval()
        pred = model(x)
        print(pred.shape)


if __name__ == '__main__':
    main()
```

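Why pad by 100?

For a 1x1 input image, the feature maps have the following sizes:

| Stage | Output size |
| --- | --- |
| L1 + Pool1 | 100x100 |
| L2 + Pool2 | 50x50 |
| L3 + Pool3 | 25x25 |
| L4 + Pool4 | 13x13 |
| L5 + Pool5 | 7x7 |
| L6 + L7 (fc6, fc7) | 1x1 |

The padding ensures that, even when the feature map is at its smallest, it does not vanish completely: fc6 is a 7x7 convolution without padding, so it needs an input of at least 7x7.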
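The table can be reproduced with a few lines of Python. This sketch assumes the layout of the FCN8s code above: conv1_1 is a 3x3 conv with padding `first_pad`, all other 3x3 convs keep the size, the five poolings are 2x2/stride 2 with `ceil_mode=True`, and fc6 is a 7x7 conv without padding (the helper names are hypothetical). It also shows that with VGG's usual padding of 1, a 100x100 input would leave fc6 without a valid output.

```python
import math

def pool_sizes(s, first_pad):
    """Feature-map size after pool1..pool5 for an s x s input."""
    h = s + 2 * first_pad - 3 + 1          # conv1_1: 3x3 conv with padding first_pad
    sizes = []
    for _ in range(5):                      # later 3x3 convs (padding 1) keep the size
        h = math.ceil((h - 2) / 2) + 1      # 2x2 max pool, stride 2, ceil_mode=True
        sizes.append(h)
    return sizes

def fc6_output_size(s, first_pad):
    return pool_sizes(s, first_pad)[-1] - 7 + 1   # fc6: 7x7 conv, no padding

print(pool_sizes(1, 100))         # [100, 50, 25, 13, 7]
print(fc6_output_size(1, 100))    # 1
print(fc6_output_size(100, 1))    # -2: no valid output without the extra padding
print(fc6_output_size(100, 100))  # 4
```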

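Why crop at offsets [5, 9, 31]?

Convolution and pooling change the spatial size as

$$H_{out} = \left\lfloor \frac{H_{in} + 2P - K}{\text{stride}} \right\rfloor + 1$$

(pooling with `ceil_mode=True` rounds up instead of down), while a transposed convolution changes it as

$$H_{out} = (H_{in} - 1) \cdot \text{stride} - 2P + K$$

where $K$ is the kernel size and $P$ the padding.

Let the output of L5+Pool5 have size $H$. fc6 is a 7x7 conv without padding, so it outputs $H - 6$; fc7 and the score layer are 1x1 convs and keep that size. Then:

Tconv1 (up_output, $K = 4$, stride 2): $(H - 7) \cdot 2 + 4 = 2H - 10$. The output of Pool4 is $2H$, so the crop offset is $10 / 2 = 5$.

Tconv2 (up_pool4, $K = 4$, stride 2): $(2H - 11) \cdot 2 + 4 = 4H - 18$. The output of Pool3 is $4H$, so the crop offset is $18 / 2 = 9$.

Tconv3 (up_final, $K = 16$, stride 8): $(4H - 19) \cdot 8 + 16 = 32H - 136$. With padding 100, conv1_1 turns an $S \times S$ input into $S + 200 - 3 + 1 = S + 198$, which equals $32H$ (ignoring rounding), so the crop offset is $(198 - 136) / 2 = 31$.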
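The offsets can also be checked programmatically. This sketch traces the spatial sizes of the FCN8s code above with the two size formulas (the helper names are hypothetical), then verifies that each crop window fits inside the map it is taken from and that the final crop returns exactly the input size.

```python
import math

def conv_out(h, k, stride=1, pad=0):
    return (h + 2 * pad - k) // stride + 1

def pool_out(h):
    return math.ceil((h - 2) / 2) + 1             # 2x2 max pool, stride 2, ceil_mode=True

def tconv_out(h, k, stride, pad=0):
    return (h - 1) * stride - 2 * pad + k

def fcn8s_sizes(s):
    """Sizes of pool3, pool4, up_output, up_pool4 and up_final for an s x s input."""
    h = conv_out(s, 3, pad=100)                   # conv1_1 -> s + 198 (other 3x3 convs keep the size)
    h = pool_out(pool_out(h))                     # pool1, pool2
    pool3 = h = pool_out(h)
    pool4 = h = pool_out(h)
    h = pool_out(h)                               # pool5: H
    h = conv_out(h, 7)                            # fc6 -> H - 6 (fc7 and score are 1x1)
    up_output = tconv_out(h, 4, 2)                # 2H - 10
    up_pool4 = tconv_out(up_output, 4, 2)         # 4H - 18
    up_final = tconv_out(up_pool4, 16, 8)         # 32H - 136
    return pool3, pool4, up_output, up_pool4, up_final

def crops_fit(s):
    pool3, pool4, up_output, up_pool4, up_final = fcn8s_sizes(s)
    return (5 + up_output <= pool4 and
            9 + up_pool4 <= pool3 and
            31 + s <= up_final)

print(fcn8s_sizes(512))                           # (89, 45, 36, 74, 600)
print(all(crops_fit(s) for s in range(1, 1025)))  # True
```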

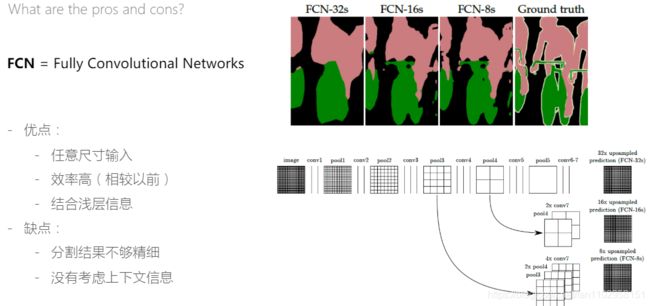

Pros and Cons of FCN

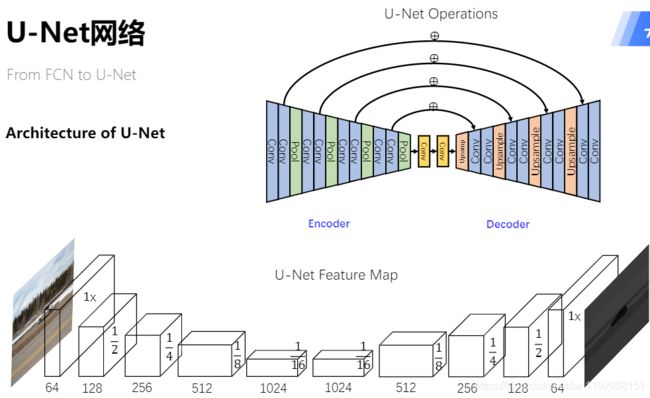

Unet

Key feature: the element-wise addition used by FCN is replaced by concatenation along the channel (C) dimension.
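In NumPy terms, the difference looks like this (channel-first arrays without a batch axis, so channels are `axis=0`; the Unet code in this article uses NCHW tensors and therefore concatenates on `axis=1`). The feature sizes are arbitrary.

```python
import numpy as np

decoder_feat = np.ones((64, 32, 32))        # (C, H, W) up-sampled decoder feature
encoder_feat = np.full((64, 32, 32), 2.0)   # skip feature from the encoder, same H and W

fcn_style = decoder_feat + encoder_feat                           # needs equal C, stays 64 channels
unet_style = np.concatenate([encoder_feat, decoder_feat], axis=0) # channels stack: 64 + 64

print(fcn_style.shape)    # (64, 32, 32)
print(unet_style.shape)   # (128, 32, 32)
```

Because concatenation doubles the channel count, the first conv after each Unet skip connection takes twice as many input channels.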

Code

backbone ResNet

```python
import numpy as np
import paddle.fluid as fluid
from paddle.fluid.dygraph import to_variable
from paddle.fluid.dygraph import Conv2D
from paddle.fluid.dygraph import BatchNorm
from paddle.fluid.dygraph import Pool2D
from paddle.fluid.dygraph import Linear

model_path = {'ResNet18': './resnet18',
              'ResNet34': './resnet34',
              'ResNet50': './resnet50',
              'ResNet101': './resnet101',
              'ResNet152': './resnet152'}


class ConvBNLayer(fluid.dygraph.Layer):
    """Conv2D (no bias) + BatchNorm, with optional dilation."""
    def __init__(self,
                 num_channels,
                 num_filters,
                 filter_size,
                 stride=1,
                 groups=1,
                 act=None,
                 dilation=1,
                 padding=None,
                 name=None):
        super(ConvBNLayer, self).__init__(name)
        if padding is None:
            # 'same' padding for odd filter sizes
            padding = (filter_size - 1) // 2
        self.conv = Conv2D(num_channels=num_channels,
                           num_filters=num_filters,
                           filter_size=filter_size,
                           stride=stride,
                           padding=padding,
                           groups=groups,
                           act=None,
                           dilation=dilation,
                           bias_attr=False)
        self.bn = BatchNorm(num_filters, act=act)

    def forward(self, inputs):
        y = self.conv(inputs)
        y = self.bn(y)
        return y


class BasicBlock(fluid.dygraph.Layer):
    expansion = 1  # expand ratio for last conv output channel in each block

    def __init__(self,
                 num_channels,
                 num_filters,
                 stride=1,
                 shortcut=True,
                 dilation=1,
                 padding=None,
                 name=None):
        # dilation and padding are needed because make_layer passes them to every block
        super(BasicBlock, self).__init__(name)
        self.conv0 = ConvBNLayer(num_channels=num_channels,
                                 num_filters=num_filters,
                                 filter_size=3,
                                 stride=stride,
                                 act='relu',
                                 dilation=dilation,
                                 padding=padding,
                                 name=name)
        self.conv1 = ConvBNLayer(num_channels=num_filters,
                                 num_filters=num_filters,
                                 filter_size=3,
                                 act=None,
                                 dilation=dilation,
                                 padding=padding,
                                 name=name)
        if not shortcut:
            # 1x1 projection when the input and output shapes differ
            self.short = ConvBNLayer(num_channels=num_channels,
                                     num_filters=num_filters,
                                     filter_size=1,
                                     stride=stride,
                                     act=None,
                                     name=name)
        self.shortcut = shortcut

    def forward(self, inputs):
        conv0 = self.conv0(inputs)
        conv1 = self.conv1(conv0)
        short = inputs if self.shortcut else self.short(inputs)
        y = fluid.layers.elementwise_add(x=short, y=conv1, act='relu')
        return y


class BottleneckBlock(fluid.dygraph.Layer):
    expansion = 4

    def __init__(self,
                 num_channels,
                 num_filters,
                 stride=1,
                 shortcut=True,
                 dilation=1,
                 padding=None,
                 name=None):
        super(BottleneckBlock, self).__init__(name)
        # 1x1 reduce -> 3x3 (strided or dilated) -> 1x1 expand (x4)
        self.conv0 = ConvBNLayer(num_channels=num_channels,
                                 num_filters=num_filters,
                                 filter_size=1,
                                 act='relu')
        self.conv1 = ConvBNLayer(num_channels=num_filters,
                                 num_filters=num_filters,
                                 filter_size=3,
                                 stride=stride,
                                 padding=padding,
                                 act='relu',
                                 dilation=dilation)
        self.conv2 = ConvBNLayer(num_channels=num_filters,
                                 num_filters=num_filters * 4,
                                 filter_size=1,
                                 stride=1)
        if not shortcut:
            self.short = ConvBNLayer(num_channels=num_channels,
                                     num_filters=num_filters * 4,
                                     filter_size=1,
                                     stride=stride)
        self.shortcut = shortcut
        self.num_channel_out = num_filters * 4

    def forward(self, inputs):
        conv0 = self.conv0(inputs)
        conv1 = self.conv1(conv0)
        conv2 = self.conv2(conv1)
        short = inputs if self.shortcut else self.short(inputs)
        y = fluid.layers.elementwise_add(x=short, y=conv2, act='relu')
        return y


class ResNet(fluid.dygraph.Layer):
    def __init__(self, layers=50, num_classes=1000):
        super(ResNet, self).__init__()
        self.layers = layers
        supported_layers = [18, 34, 50, 101, 152]
        assert layers in supported_layers

        if layers == 18:
            depth = [2, 2, 2, 2]
        elif layers == 34:
            depth = [3, 4, 6, 3]
        elif layers == 50:
            depth = [3, 4, 6, 3]
        elif layers == 101:
            depth = [3, 4, 23, 3]
        elif layers == 152:
            depth = [3, 8, 36, 3]

        if layers < 50:
            num_channels = [64, 64, 128, 256]
        else:
            num_channels = [64, 256, 512, 1024]
        num_filters = [64, 128, 256, 512]

        self.conv = ConvBNLayer(num_channels=3,
                                num_filters=64,
                                filter_size=7,
                                stride=2,
                                act='relu')
        self.pool2d_max = Pool2D(pool_size=3,
                                 pool_stride=2,
                                 pool_padding=1,
                                 pool_type='max')

        if layers < 50:
            block = BasicBlock
            l1_shortcut = True
        else:
            block = BottleneckBlock
            l1_shortcut = False

        self.layer1 = fluid.dygraph.Sequential(
            *self.make_layer(block, num_channels[0], num_filters[0], depth[0],
                             stride=1, shortcut=l1_shortcut, name='layer1'))
        self.layer2 = fluid.dygraph.Sequential(
            *self.make_layer(block, num_channels[1], num_filters[1], depth[1],
                             stride=2, name='layer2'))
        # layer3 and layer4 keep stride 1 and use dilation instead (output stride 8)
        self.layer3 = fluid.dygraph.Sequential(
            *self.make_layer(block, num_channels[2], num_filters[2], depth[2],
                             stride=1, name='layer3', dilation=2))
        self.layer4 = fluid.dygraph.Sequential(
            *self.make_layer(block, num_channels[3], num_filters[3], depth[3],
                             stride=1, name='layer4', dilation=4))

        self.last_pool = Pool2D(pool_size=7,  # ignored if global_pooling is True
                                global_pooling=True,
                                pool_type='avg')
        self.fc = Linear(input_dim=num_filters[-1] * block.expansion,
                         output_dim=num_classes,
                         act=None)
        self.out_dim = num_filters[-1] * block.expansion

    def forward(self, inputs):
        x = self.conv(inputs)
        x = self.pool2d_max(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = self.last_pool(x)
        x = fluid.layers.reshape(x, shape=[-1, self.out_dim])
        x = self.fc(x)
        return x

    def make_layer(self, block, num_channels, num_filters, depth, stride,
                   dilation=1, shortcut=False, name=None):
        layers = []
        # padding = dilation keeps the size of a dilated 3x3 conv
        padding = dilation if dilation > 1 else None
        layers.append(block(num_channels,
                            num_filters,
                            stride=stride,
                            shortcut=shortcut,
                            dilation=dilation,
                            padding=padding,
                            name=f'{name}.0'))
        for i in range(1, depth):
            layers.append(block(num_filters * block.expansion,
                                num_filters,
                                stride=1,
                                dilation=dilation,
                                padding=padding,
                                name=f'{name}.{i}'))
        return layers


def ResNet18(pretrained=False):
    model = ResNet(layers=18)
    if pretrained:
        model_state, _ = fluid.load_dygraph(model_path['ResNet18'])
        model.set_dict(model_state)
    return model


def ResNet34(pretrained=False):
    model = ResNet(layers=34)
    if pretrained:
        model_state, _ = fluid.load_dygraph(model_path['ResNet34'])
        model.set_dict(model_state)
    return model


def ResNet50(pretrained=False):
    model = ResNet(layers=50)
    if pretrained:
        model_state, _ = fluid.load_dygraph(model_path['ResNet50'])
        model.set_dict(model_state)
    return model


def ResNet101(pretrained=False):
    model = ResNet(layers=101)
    if pretrained:
        model_state, _ = fluid.load_dygraph(model_path['ResNet101'])
        model.set_dict(model_state)
    return model


def ResNet152(pretrained=False):
    model = ResNet(layers=152)
    if pretrained:
        model_state, _ = fluid.load_dygraph(model_path['ResNet152'])
        model.set_dict(model_state)
    return model


def main():
    with fluid.dygraph.guard():
        x_data = np.random.rand(2, 3, 512, 512).astype(np.float32)
        x = to_variable(x_data)

        # ResNet18/34/101/152 can be tested the same way
        model = ResNet50()
        model.eval()
        pred = model(x)
        print('dilated resnet50: pred.shape = ', pred.shape)


if __name__ == "__main__":
    main()
```
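In the dilated ResNet above, layer3 and layer4 keep stride 1 and use dilation 2 and 4 instead of downsampling, and `make_layer` sets `padding=dilation`. The following Python sketch (hypothetical helpers built on the standard convolution size formula, where a dilated k x k kernel spans `dilation * (k - 1) + 1` pixels) shows why that padding keeps a 3x3 dilated conv size-preserving, and traces a 512x512 input to the 64x64 map that reaches the global pooling (output stride 8).

```python
def conv_out_size(h, k, stride=1, padding=0, dilation=1):
    """Output size of a convolution (or floor-mode pooling)."""
    effective_k = dilation * (k - 1) + 1
    return (h + 2 * padding - effective_k) // stride + 1

def dilated_resnet_output(s):
    """Spatial size before global pooling in the dilated ResNet above."""
    h = conv_out_size(s, 7, stride=2, padding=3)   # stem: 7x7 conv, stride 2
    h = conv_out_size(h, 3, stride=2, padding=1)   # 3x3 max pool, stride 2
    # layer1: stride 1, size unchanged
    h = conv_out_size(h, 3, stride=2, padding=1)   # first 3x3 conv of layer2, stride 2
    for d in (2, 4):                               # layer3, layer4: stride 1, dilated
        h = conv_out_size(h, 3, stride=1, padding=d, dilation=d)
    return h

for d in (1, 2, 4):
    print(d, conv_out_size(64, 3, padding=d, dilation=d))  # 64 for every d
print(dilated_resnet_output(512))                          # 64, i.e. 512 / 8
```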

Unet

```python
import numpy as np
import paddle
import paddle.fluid as fluid
from paddle.fluid.dygraph import to_variable
from paddle.fluid.dygraph import Layer
from paddle.fluid.dygraph import Conv2D
from paddle.fluid.dygraph import BatchNorm
from paddle.fluid.dygraph import Pool2D
from paddle.fluid.dygraph import Conv2DTranspose


class Encoder(Layer):
    def __init__(self, num_channels, num_filters):
        super(Encoder, self).__init__()
        # encoder block:
        #   3x3 conv + bn + relu
        #   3x3 conv + bn + relu
        #   2x2 max pool
        # forward returns the features before and after pooling
        self.conv1 = Conv2D(num_channels,
                            num_filters,
                            filter_size=3,
                            stride=1,
                            padding=1)
        self.bn1 = BatchNorm(num_filters, act='relu')
        self.conv2 = Conv2D(num_filters,
                            num_filters,
                            filter_size=3,
                            stride=1,
                            padding=1)
        self.bn2 = BatchNorm(num_filters, act='relu')
        self.pool = Pool2D(pool_size=2, pool_stride=2, pool_type='max', ceil_mode=True)

    def forward(self, inputs):
        x = self.conv1(inputs)
        x = self.bn1(x)
        x = self.conv2(x)
        x = self.bn2(x)
        x_pooled = self.pool(x)
        return x, x_pooled  # x is kept for the skip connection


class Decoder(Layer):
    def __init__(self, num_channels, num_filters):
        super(Decoder, self).__init__()
        # decoder block:
        #   2x2 transpose conv (makes the feature map 2x larger)
        #   3x3 conv + bn + relu
        #   3x3 conv + bn + relu
        self.up = Conv2DTranspose(num_channels=num_channels,
                                  num_filters=num_filters,
                                  filter_size=2,
                                  stride=2)
        # conv1 sees skip + up-sampled channels (2 * num_filters == num_channels here)
        self.conv1 = Conv2D(num_channels,
                            num_filters,
                            filter_size=3,
                            stride=1,
                            padding=1)
        self.bn1 = BatchNorm(num_filters, act='relu')
        self.conv2 = Conv2D(num_filters,
                            num_filters,
                            filter_size=3,
                            stride=1,
                            padding=1)
        self.bn2 = BatchNorm(num_filters, act='relu')

    def forward(self, inputs_prev, inputs):
        # up-sample, pad to the size of the skip feature, then concatenate
        x = self.up(inputs)
        h_diff = inputs_prev.shape[2] - x.shape[2]
        w_diff = inputs_prev.shape[3] - x.shape[3]
        # paddings = [top, bottom, left, right]; split each difference between two sides
        x = fluid.layers.pad2d(x, paddings=[h_diff // 2, h_diff - h_diff // 2,
                                            w_diff // 2, w_diff - w_diff // 2])
        # concatenate along the channel dimension (NCHW)
        x = fluid.layers.concat([inputs_prev, x], axis=1)
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.conv2(x)
        x = self.bn2(x)
        return x


class UNet(Layer):
    def __init__(self, num_classes=59):
        super(UNet, self).__init__()
        # encoder: 3->64->128->256->512
        # mid: 512->1024->1024 (two 1x1 conv + bn + relu)
        self.down1 = Encoder(num_channels=3, num_filters=64)
        self.down2 = Encoder(num_channels=64, num_filters=128)
        self.down3 = Encoder(num_channels=128, num_filters=256)
        self.down4 = Encoder(num_channels=256, num_filters=512)

        self.mid_conv1 = Conv2D(512, 1024, filter_size=1, padding=0, stride=1)
        self.mid_bn1 = BatchNorm(1024, act='relu')
        self.mid_conv2 = Conv2D(1024, 1024, filter_size=1, stride=1, padding=0)
        self.mid_bn2 = BatchNorm(1024, act='relu')

        # the decoder input channels are twice the encoder's because the
        # feature maps are concatenated along the channel dimension
        self.up4 = Decoder(1024, 512)
        self.up3 = Decoder(512, 256)
        self.up2 = Decoder(256, 128)
        self.up1 = Decoder(128, 64)

        # 1x1 conv producing per-class scores
        self.last_conv = Conv2D(num_channels=64, num_filters=num_classes, filter_size=1)

    def forward(self, inputs):
        # the prints trace the feature-map shapes
        x1, x = self.down1(inputs)
        print(x1.shape, x.shape)
        x2, x = self.down2(x)
        print(x2.shape, x.shape)
        x3, x = self.down3(x)
        print(x3.shape, x.shape)
        x4, x = self.down4(x)
        print(x4.shape, x.shape)

        # middle layers
        x = self.mid_conv1(x)
        x = self.mid_bn1(x)
        x = self.mid_conv2(x)
        x = self.mid_bn2(x)
        print(x4.shape, x.shape)

        x = self.up4(x4, x)
        print(x3.shape, x.shape)
        x = self.up3(x3, x)
        print(x2.shape, x.shape)
        x = self.up2(x2, x)
        print(x1.shape, x.shape)
        x = self.up1(x1, x)
        print(x.shape)

        x = self.last_conv(x)
        return x


def main():
    # use GPU 0 if Paddle was built with CUDA, otherwise the CPU
    place = fluid.CUDAPlace(0) if fluid.is_compiled_with_cuda() else fluid.CPUPlace()
    with fluid.dygraph.guard(place):
        model = UNet(num_classes=59)
        x_data = np.random.rand(1, 3, 123, 123).astype(np.float32)
        inputs = to_variable(x_data)
        pred = model(inputs)
        print(pred.shape)


if __name__ == "__main__":
    main()
```
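To see what `h_diff` and `w_diff` look like in practice, the sketch below (plain Python, hypothetical helper names) traces the spatial sizes of the UNet above for a square input. Because the encoder pools with `ceil_mode=True`, the 2x up-sampled map is either equal to the skip feature or 1 pixel larger (when the skip size is odd), so for the 123x123 test input the differences are 0 or -1. A negative padding therefore reaches `pad2d` for odd sizes; whether it is accepted and treated as a crop may depend on the Paddle version, so cropping the up-sampled map with slicing, as the FCN8s code does, is a safe alternative.

```python
import math

def unet_sizes(s, depth=4):
    """Skip-feature sizes and the h_diff values seen by Decoder.forward for an s x s input."""
    skips, h = [], s
    for _ in range(depth):
        skips.append(h)                     # Encoder returns the map before pooling
        h = math.ceil((h - 2) / 2) + 1      # 2x2 max pool, stride 2, ceil_mode=True
    # the two 1x1 convs in the middle keep the size
    diffs = []
    for skip in reversed(skips):
        up = (h - 1) * 2 + 2                # 2x2 transposed conv, stride 2 -> 2h
        diffs.append(skip - up)             # h_diff in Decoder.forward
        h = skip                            # assumes pad2d aligns x with the skip feature
    return skips, diffs

def split_padding(diff):
    """[before, after] as passed to pad2d; the two sides add up to diff."""
    return [diff // 2, diff - diff // 2]

print(unet_sizes(123))                      # ([123, 62, 31, 16], [0, -1, 0, -1])
print(unet_sizes(128))                      # ([128, 64, 32, 16], [0, 0, 0, 0])
print(split_padding(3), split_padding(-1))  # [1, 2] [-1, 0]
```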
