Training a DeepLabv3 model (PyTorch version) on Cityscapes on a remote Linux server and testing it on images

References

https://blog.csdn.net/qq_45389690/article/details/111591713?utm_medium=distribute.pc_relevant_download.none-task-blog-baidujs-2.nonecase&depth_1-utm_source=distribute.pc_relevant_download.none-task-blog-baidujs-2.nonecase

https://blog.csdn.net/weixin_41919571/article/details/107906066

Code

https://github.com/jfzhang95/pytorch-deeplab-xception

Problem

ImportError: No module named pycocotools.coco

Solution

https://blog.csdn.net/u011961856/article/details/77676461

https://blog.csdn.net/joejeanjean/article/details/78839318?utm_medium=distribute.pc_relevant.none-task-blog-baidujs_title-6&spm=1001.2101.3001.4242

https://blog.csdn.net/haiyonghao/article/details/80472713?utm_medium=distribute.pc_relevant.none-task-blog-baidujs_title-11&spm=1001.2101.3001.4242

Make sure to copy every file in the PythonAPI directory except setup.py into the pytorch-deeplab-xception-master folder.
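If you'd rather not copy by hand, a small helper like the hypothetical `copy_except` below does the same thing. It is a sketch: it copies plain files and subfolders (such as the `pycocotools` package folder) alike and skips only `setup.py`; the paths you pass in are your own.

```python
import os
import shutil


def copy_except(src_dir, dst_dir, skip=("setup.py",)):
    """Copy every entry of src_dir into dst_dir except the names in `skip`."""
    copied = []
    for name in sorted(os.listdir(src_dir)):
        if name in skip:
            continue
        src = os.path.join(src_dir, name)
        dst = os.path.join(dst_dir, name)
        if os.path.isdir(src):
            # copytree refuses to overwrite, so remove an older copy first
            if os.path.exists(dst):
                shutil.rmtree(dst)
            shutil.copytree(src, dst)
        else:
            shutil.copy2(src, dst)  # copy2 also keeps timestamps
        copied.append(name)
    return copied
```

Usage (hypothetical paths, adjust to your server): `copy_except("cocoapi/PythonAPI", "pytorch-deeplab-xception-master")`.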

Problem

ImportError: No module named 'Queue'

Solution

https://blog.csdn.net/DarrenXf/article/details/82962412
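In Python 3 the standard-library module `Queue` was renamed `queue`, which is the usual cause of this error. Assuming that's the case here, a version-agnostic import is one common workaround (a sketch, not necessarily the exact change the linked post makes):

```python
try:
    import queue  # Python 3 module name
except ImportError:
    import Queue as queue  # Python 2 fallback

# Either way, the rest of the code can use the same name.
jobs = queue.Queue()
jobs.put("sample")
```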

Problem

from utils.loss import SegmentationLosses

ImportError: No module named loss

Reference

https://blog.csdn.net/Diliduluw/article/details/103742766

Solution

Create an empty __init__.py file in the utils folder.
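In Python 2 a directory is only importable as a package if it contains an `__init__.py` (Python 3.3+ relaxes this with namespace packages). The hypothetical helper below creates the empty file only if it's missing, so an existing `__init__.py` is left untouched; it uses plain `open` so it works on Python 2 as well.

```python
import os


def ensure_package(folder):
    """Create an empty __init__.py in `folder` (if missing) so it imports as a package."""
    init_path = os.path.join(folder, "__init__.py")
    if not os.path.exists(init_path):
        open(init_path, "w").close()  # an empty file is enough
    return init_path
```

Usage, from the repo root: `ensure_package("utils")`.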

Problem

AttributeError: 'module' object has no attribute 'kaiming_normal_'

Reference

https://blog.csdn.net/songchunxiao1991/article/details/83104893?utm_medium=distribute.pc_relevant_t0.none-task-blog-BlogCommendFromMachineLearnPai2-1.control&depth_1-utm_source=distribute.pc_relevant_t0.none-task-blog-BlogCommendFromMachineLearnPai2-1.control

https://blog.csdn.net/Aug0st/article/details/42707709

Then I deleted all the .pyc files instead (mainly because I didn't dare update Python27\Lib\urllib2.pyc, for fear of breaking other algorithms).

Command used: find /dir -name "*.pyc" | xargs rm -rf
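The same clean-up can be done from Python, which sidesteps shell-quoting problems (curly quotes pasted into a command, filenames with spaces). `remove_pyc` is a hypothetical helper; as with the `find` command, point it at the project folder rather than the whole Python installation.

```python
import os


def remove_pyc(root):
    """Delete every *.pyc file under `root` and return how many were removed."""
    removed = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            if filename.endswith(".pyc"):
                os.remove(os.path.join(dirpath, filename))
                removed += 1
    return removed
```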

But that didn't help.

So I set up a separate environment with Python 3.5 and PyTorch 0.4.1.
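Switching to PyTorch 0.4.1 fits the usual cause of the `kaiming_normal_` error: the in-place initializers with a trailing underscore (such as `torch.nn.init.kaiming_normal_`) were added in PyTorch 0.4, while older releases only have `kaiming_normal`. Upgrading, as above, is the clean fix. If you're stuck on an old version, a lookup like the hypothetical `resolve_kaiming_normal` below picks whichever name exists:

```python
def resolve_kaiming_normal(init_module):
    """Return the Kaiming-normal initializer that `init_module` provides.

    PyTorch >= 0.4 calls it `kaiming_normal_` (trailing underscore);
    older versions only have `kaiming_normal`.
    """
    for attr in ("kaiming_normal_", "kaiming_normal"):
        fn = getattr(init_module, attr, None)
        if fn is not None:
            return fn
    raise AttributeError("init module has neither kaiming_normal_ nor kaiming_normal")
```

Usage: `resolve_kaiming_normal(torch.nn.init)(conv.weight)` in place of a direct `torch.nn.init.kaiming_normal_(conv.weight)` call.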

Problem

RuntimeError: CUDA error: out of memory

Solution

In train.py, change the default of batch-size to 2 (default=2).
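Editing the default in train.py works, but an argparse default can also be overridden per run, so passing `--batch-size 2` on the command line should have the same effect without editing the file. The parser below is a hypothetical, stripped-down stand-in (not the repo's actual train.py) that shows how the default and an override interact:

```python
import argparse


def build_parser():
    """Hypothetical minimal parser with a --batch-size option."""
    parser = argparse.ArgumentParser(description="batch-size sketch")
    # A smaller batch needs less GPU memory per step, which is what avoids the OOM.
    parser.add_argument('--batch-size', type=int, default=2,
                        help='input batch size for training (default: 2)')
    return parser
```

argparse turns `--batch-size` into the attribute `batch_size`, so `build_parser().parse_args([]).batch_size` is the default and `parse_args(['--batch-size', '4'])` overrides it.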

Problem

ValueError: Expected more than 1 value per channel when training, got input size [1, 256, 1, 1]

Reference

https://blog.csdn.net/weixin_43925119/article/details/109755329

https://www.cnblogs.com/zmbreathing/p/pyTorch_BN_error.html

https://blog.csdn.net/sinat_39307513/article/details/87917537

https://blog.csdn.net/qq_42079689/article/details/102587401?utm_medium=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-2.control&depth_1-utm_source=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-2.control

https://blog.csdn.net/jining11/article/details/111478935?utm_medium=distribute.pc_relevant.none-task-blog-baidujs_title-2&spm=1001.2101.3001.4242

https://blog.csdn.net/qq_36321330/article/details/108954588?utm_medium=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-2.control&depth_1-utm_source=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-2.control

https://blog.csdn.net/qq_34124009/article/details/109100053?utm_medium=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-4.control&depth_1-utm_source=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-4.control

https://blog.csdn.net/qq_21230831/article/details/103711545?utm_medium=distribute.pc_relevant.none-task-blog-OPENSEARCH-7.control&depth_1-utm_source=distribute.pc_relevant.none-task-blog-OPENSEARCH-7.control

https://blog.csdn.net/lrs1353281004/article/details/108262018?utm_medium=distribute.pc_relevant.none-task-blog-baidujs_title-7&spm=1001.2101.3001.4242

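The model contains nn.BatchNorm layers. In training mode, BatchNorm needs more than one value per channel to compute the current batch's mean and std (which also update the running statistics). Here the input is [1, 256, 1, 1], i.e. a single sample with a 1x1 feature map, so each channel has only one value. This happens when the number of samples divided by batch_size leaves a remainder of exactly 1: the last batch then contains just one sample.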
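A quick check like the one below can save a long run that crashes at the end of the first epoch. `last_batch_size` and `safe_batch_sizes` are hypothetical helpers. The 2975 used in the usage note is the size of the standard Cityscapes fine-annotation training split; substitute your own count if yours differs.

```python
def last_batch_size(num_samples, batch_size):
    """Size of the final batch when num_samples are split into batches of batch_size."""
    remainder = num_samples % batch_size
    return remainder if remainder else batch_size


def safe_batch_sizes(num_samples, candidates):
    """Batch sizes (>= 2) whose final batch is not a lone sample."""
    return [b for b in candidates
            if b >= 2 and last_batch_size(num_samples, b) != 1]
```

With 2975 images, batch size 2 leaves a single image in the last batch (2975 = 2 × 1487 + 1), which fits the error above, while 5 divides it exactly (2975 = 5 × 595). `safe_batch_sizes(2975, range(1, 7))` returns `[3, 4, 5, 6]`.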

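Solution

Change batch_size so that the division doesn't leave a remainder of 1. But when I changed it to 5, I ran out of GPU memory again.

So I went to /usr/local/lib/python3.5/dist-packages/torch/utils/data/dataloader.py and set drop_last=True in it.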
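Editing torch's installed dataloader.py changes the default for every project using that interpreter. A narrower alternative is to pass `drop_last=True` to the `DataLoader` that serves the training set (in this repo, presumably wherever the training `DataLoader` is built under `dataloaders/`; the exact location is an assumption). The pure-Python sketch below only mimics what `drop_last` does so the effect can be checked without a GPU; `batches` is a hypothetical helper, not part of PyTorch.

```python
def batches(items, batch_size, drop_last=False):
    """Split `items` into consecutive batches, like a DataLoader without shuffling.

    With drop_last=True the incomplete final batch is discarded, which is
    what keeps a lone sample from reaching the BatchNorm layers.
    """
    result = []
    for start in range(0, len(items), batch_size):
        batch = items[start:start + batch_size]
        if drop_last and len(batch) < batch_size:
            break
        result.append(batch)
    return result

# In the real training code the equivalent is a keyword argument, e.g.
# DataLoader(train_set, batch_size=args.batch_size, shuffle=True, drop_last=True)
```

For five samples and batch size 2, the batch sizes are `[2, 2, 1]` by default and `[2, 2]` with `drop_last=True`.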

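Since I couldn't open that file directly on the remote server, I had to copy it to my own machine, edit it, and copy it back.

Training is sooo slow!!!

Once it finished, I ran a demo to test it: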

```python
import argparse
import os
import time

import torch
from PIL import Image
from torchvision import transforms
from torchvision.utils import make_grid, save_image

from modeling.deeplab import *
from dataloaders import custom_transforms as tr
from dataloaders.utils import *


def main():
    parser = argparse.ArgumentParser(description="PyTorch DeeplabV3Plus Demo")
    parser.add_argument('--in-path', type=str, required=True,
                        help='directory containing the images to test')
    parser.add_argument('--out-path', type=str, required=True,
                        help='directory to save the mask images')
    parser.add_argument('--backbone', type=str, default='xception',
                        choices=['resnet', 'xception', 'drn', 'mobilenet'],
                        help='backbone name (default: xception)')
    parser.add_argument('--ckpt', type=str, default='deeplab-xception.pth',
                        help='saved model')
    parser.add_argument('--out-stride', type=int, default=16,
                        help='network output stride (default: 16)')
    parser.add_argument('--no-cuda', action='store_true', default=False,
                        help='disables CUDA')
    parser.add_argument('--gpu-ids', type=str, default='0',
                        help='which GPUs to use, must be a comma-separated '
                             'list of integers only (default=0)')
    parser.add_argument('--dataset', type=str, default='cityscapes',
                        choices=['pascal', 'coco', 'cityscapes', 'invoice'],
                        help='dataset name (default: cityscapes)')
    parser.add_argument('--crop-size', type=int, default=513,
                        help='crop image size')
    parser.add_argument('--num_classes', type=int, default=19,
                        help='number of classes (default: 19)')
    parser.add_argument('--sync-bn', type=bool, default=None,
                        help='whether to use sync bn (default: auto)')
    parser.add_argument('--freeze-bn', type=bool, default=False,
                        help='whether to freeze bn parameters (default: False)')
    args = parser.parse_args()

    args.cuda = not args.no_cuda and torch.cuda.is_available()
    if args.cuda:
        try:
            args.gpu_ids = [int(s) for s in args.gpu_ids.split(',')]
        except ValueError:
            raise ValueError('Argument --gpu_ids must be a comma-separated list of integers only')

    if args.sync_bn is None:
        # Sync BN only makes sense with more than one GPU
        args.sync_bn = args.cuda and len(args.gpu_ids) > 1

    model_s_time = time.time()
    model = DeepLab(num_classes=args.num_classes,
                    backbone=args.backbone,
                    output_stride=args.out_stride,
                    sync_bn=args.sync_bn,
                    freeze_bn=args.freeze_bn)
    ckpt = torch.load(args.ckpt, map_location='cpu')
    model.load_state_dict(ckpt['state_dict'])
    if args.cuda:  # only move to the GPU when CUDA is actually in use
        model = model.cuda()
    model.eval()  # inference mode: BatchNorm uses its running statistics
    print("model load time is {}".format(time.time() - model_s_time))

    composed_transforms = transforms.Compose([
        tr.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
        tr.ToTensor()])

    os.makedirs(args.out_path, exist_ok=True)
    for name in os.listdir(args.in_path):
        s_time = time.time()
        img_path = os.path.join(args.in_path, name)
        image = Image.open(img_path).convert('RGB')
        # The custom transforms expect a 'label' entry; the image itself is
        # reused as a dummy grayscale label since no ground truth is needed here.
        target = Image.open(img_path).convert('L')
        sample = {'image': image, 'label': target}
        tensor_in = composed_transforms(sample)['image'].unsqueeze(0)
        if args.cuda:
            tensor_in = tensor_in.cuda()
        with torch.no_grad():
            output = model(tensor_in)
        pred = torch.max(output[:3], 1)[1].detach().cpu().numpy()
        # Pass the dataset explicitly so the matching color palette is used
        # (the helper's default palette may not be Cityscapes').
        # range= was dropped: make_grid ignores it when normalize=False.
        grid_image = make_grid(decode_seg_map_sequence(pred, dataset=args.dataset),
                               3, normalize=False)
        out_name = "{}_mask.png".format(os.path.splitext(name)[0])
        save_image(grid_image, os.path.join(args.out_path, out_name))
        print("image:{} time: {} ".format(name, time.time() - s_time))

    print("Images saved in out_path.")


if __name__ == "__main__":
    main()

# python demo.py --in-path your_file --out-path your_dst_file --ckpt /home/ubuntu/pytorch-deeplab-xception-master/run/cityscapes/deeplab-xception/model_best.pth.tar --backbone xception
```

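Remember to change the model, dataset, and so on in the code to match your own setup.

My goal was to use this Cityscapes-trained model to segment my own street-view images, but the colors in the segmentation output were completely different from Cityscapes'.

Then I noticed that my original images were JPGs, so I converted them to PNG (since the Cityscapes images are PNGs):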

```python
import os

from PIL import Image

input_folder = r'C:/Users/wo/Desktop/yaogaishuju/human8'      # source folder containing the .jpg images
output_folder = r'C:/Users/wo/Desktop/yaogaishuju/human8png'  # output folder for the .png copies
os.makedirs(output_folder, exist_ok=True)

# Collect the .jpg files in the top-level source folder
jpg_files = [f for f in os.listdir(input_folder) if f.lower().endswith('.jpg')]
print(jpg_files)

for filename in jpg_files:
    stem = os.path.splitext(filename)[0]  # keeps dots inside the name, unlike split('.')[0]
    img = Image.open(os.path.join(input_folder, filename))
    img.save(os.path.join(output_folder, stem + '.png'))  # PIL infers PNG from the extension
```

Then, in demo.py, I changed

`image = Image.open(args.in_path+"/"+name).convert('RGB')`

to

`image = Image.open(args.in_path+"/"+name).convert('RGBA')`

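Sure enough, it raised an error:

ValueError: operands could not be broadcast together with shapes (576,768,4) (3,) (576,768,4)

The dimensions don't match: the image now has 4 channels (RGBA), but the Normalize mean/std only have 3 values.

There was too much to change, so I switched back to 'RGB'.

So converting to PNG was pointless: the input is three-channel RGB either way, and the colors of the segmentation output didn't change at all.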

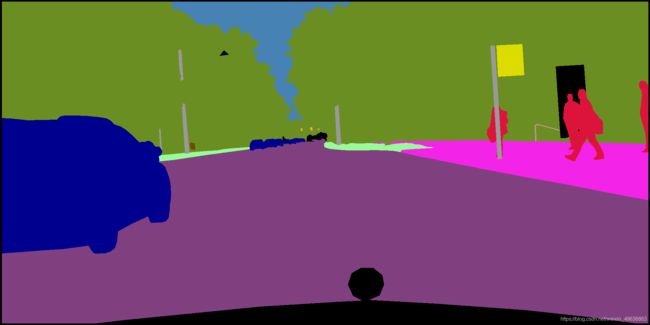

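Can anyone tell me why the street-view segmentations I get from the network are colored differently from the Cityscapes labels??????

Why do my segmentation colors look like this mess?????

This is freaking ridiculous.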
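One likely explanation, assuming the repo's `dataloaders/utils.py` is the upstream version: `decode_seg_map_sequence(label_masks, dataset='pascal')` chooses its color palette from the `dataset` argument, and the default is Pascal VOC. If it's called without a `dataset` argument, the 19 Cityscapes class indices get painted with Pascal VOC colors, so road (class 0) comes out black instead of purple. The JPG/PNG format of the input plays no part in which palette is used. The sketch below illustrates the palette lookup with plain Python lists; the Cityscapes colors are the standard 19-class train-ID colors, and only the first few Pascal VOC colors are listed for comparison.

```python
# Standard Cityscapes colors for the 19 training classes (train IDs 0..18)
CITYSCAPES_PALETTE = [
    (128, 64, 128),   # 0 road
    (244, 35, 232),   # 1 sidewalk
    (70, 70, 70),     # 2 building
    (102, 102, 156),  # 3 wall
    (190, 153, 153),  # 4 fence
    (153, 153, 153),  # 5 pole
    (250, 170, 30),   # 6 traffic light
    (220, 220, 0),    # 7 traffic sign
    (107, 142, 35),   # 8 vegetation
    (152, 251, 152),  # 9 terrain
    (0, 130, 180),    # 10 sky
    (220, 20, 60),    # 11 person
    (255, 0, 0),      # 12 rider
    (0, 0, 142),      # 13 car
    (0, 0, 70),       # 14 truck
    (0, 60, 100),     # 15 bus
    (0, 80, 100),     # 16 train
    (0, 0, 230),      # 17 motorcycle
    (119, 11, 32),    # 18 bicycle
]

# First entries of the Pascal VOC palette, for comparison
PASCAL_PALETTE_HEAD = [
    (0, 0, 0),        # 0 background
    (128, 0, 0),      # 1 aeroplane
    (0, 128, 0),      # 2 bicycle
    (128, 128, 0),    # 3 bird
]


def colorize(label_map, palette):
    """Map a 2-D grid of class indices to RGB tuples using `palette`."""
    return [[palette[label] for label in row] for row in label_map]
```

The same predicted label map `[[0, 2], [3, 0]]` (road, building, wall, road) comes out purple/gray with the Cityscapes palette but black/green/olive with the Pascal one. In the demo, the matching fix is to call `decode_seg_map_sequence(..., dataset='cityscapes')`.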