[NLP] 3: The word2vec library and a worked example on the Sogou full-web news dataset

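Hands-on with the word2vec library on a Chinese corpus

- 1. Obtaining the corpus

- 2. Reading the useful content from the dat file and generating a txt file

- 3. Word segmentation

- 4. Building word vectors

- Summary

The approach follows the post "word2vec构建中文词向量" (Building Chinese word vectors with word2vec). That post used Linux; this one uses Windows 10.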

1. Obtaining the corpus

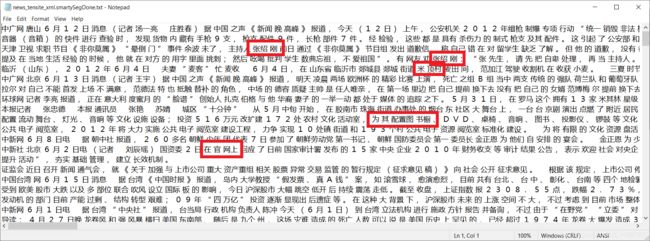

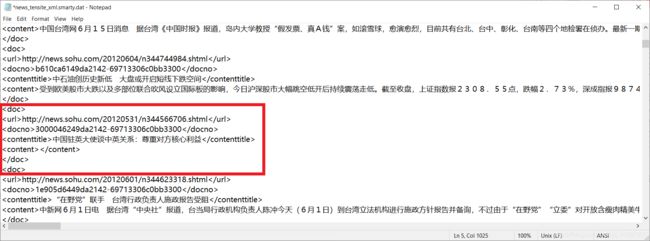

在搜狗实验室注册并下载全网新闻数据(SogouCA),完整版共711MB,格式为tar.gz格式,格式如下所示:

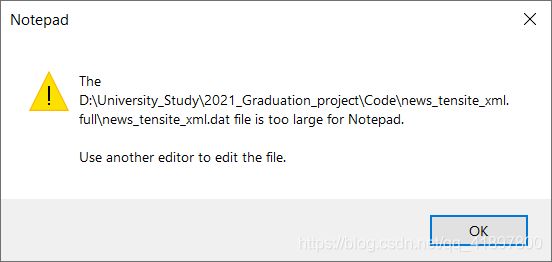

解压之后得到一个名为"news_tensite_xml.dat"的文件,解压后1.43G,太大了记事本打不开:

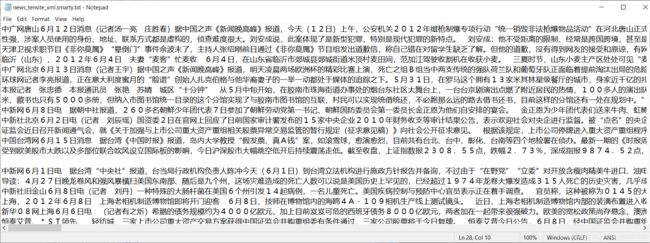

下载样例数据集"news_tensite_xml.smarty.dat"看一下,长这样:

pycharm读取文件路径时,和win10直接复制粘贴过去的\和/符号是反的,参考PyCharm中批量查找及替换,利用快捷键ctrl+ r(replace)可以进行替换
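Instead of swapping the separators by hand, Python can normalize the path itself. The snippet below is a minimal sketch using the standard-library `pathlib`; the path in it is a made-up placeholder, not the author's actual directory.

```python
from pathlib import PureWindowsPath

# Hypothetical path, as it would look when copied from Windows Explorer.
# The r prefix makes this a raw string, so backslashes are kept literally.
copied = r"E:\data\news_tensite_xml.smarty.dat"

# as_posix() rewrites the separators as forward slashes,
# which open() also accepts on Windows.
normalized = PureWindowsPath(copied).as_posix()
print(normalized)
```

Keeping the `r` prefix on any pasted Windows path, as the code in this post already does, is usually enough on its own.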

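2. Reading the useful content from the dat file and generating a txt file

See the post "python读取dat文件" (Reading dat files in Python).

The code is as follows: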

f = open(r'...your path/news_tensite_xml.smarty.dat',encoding='utf-8')

print(f.read())

f.close()

Error:

UnicodeDecodeError: 'utf-8' codec can't decode byte 0xb9 in position 127: invalid start byte

Fix: change the argument to encoding='gb18030'.
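The same fix can be written as a small fallback routine that tries a list of encodings in order. This is only a sketch: the helper name `read_text` and the candidate list are assumptions made for illustration, and the demo writes a tiny temporary file rather than touching the real corpus.

```python
import os
import tempfile

def read_text(path, encodings=("utf-8", "gb18030")):
    """Return (text, encoding) for the first encoding that decodes the whole file."""
    with open(path, "rb") as f:
        raw = f.read()
    for enc in encodings:
        try:
            return raw.decode(enc), enc
        except UnicodeDecodeError:
            continue  # try the next candidate
    raise ValueError("none of the candidate encodings could decode " + str(path))

# Demo on a throwaway file that holds GB18030-encoded text.
with tempfile.NamedTemporaryFile(delete=False, suffix=".dat") as tmp:
    tmp.write("搜狗新闻".encode("gb18030"))
text, used = read_text(tmp.name)
os.remove(tmp.name)
print(used, text)
```

Like the original snippet, this reads the whole file into memory, which is fine for the sample file but heavy for the 1.43 GB full dataset.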

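Strip the `<content>` and `</content>` tags:

print(item.repalce('<content>' ,'').replace('</content>',''))

Error:

'str' object has no attribute 'repalce'

Fix: it was a typo; the method is replace.

The generated txt file looks like this:

You can see some useless blank lines. They are there because the original corpus itself contains entries whose content is empty.

Fixing these by hand one at a time is obviously out of the question for a dataset this large. The fix is to change * to + in the regular expression so that it must match at least one character; the resulting txt looks much cleaner.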
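The difference between the two quantifiers can be seen on a two-line sample. This is a sketch under assumptions: the `<content>` tag name follows the usual SogouCA layout, the sample text is invented, and a capture group is used here to drop the tags, whereas the script in this post removes them with replace.

```python
import re

# Invented sample: one empty entry and one real entry, each on its own line.
sample = "<content></content>\n<content>搜狗新闻</content>\n"

# *? accepts zero characters, so the empty entry is matched as ''.
loose = re.findall(r"<content>(.*?)</content>", sample)

# +? needs at least one character, so the empty entry is skipped.
strict = re.findall(r"<content>(.+?)</content>", sample)

print(loose)
print(strict)
```

This relies on every entry sitting on its own line. Because `.` does not match a newline by default, a match cannot run from an empty entry into the following one.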

3. 分词

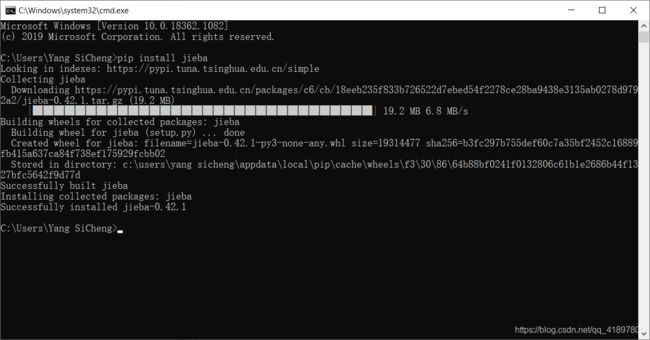

打开命令行,输入:

pip install jieba

这篇博客有jieba分词的详解,最后实现分词并导出txt效果如下:

Building prefix dict from the default dictionary ...

Loading model from cache C:\Users\YANGSI~1\AppData\Local\Temp\jieba.cache

Loading model cost 0.744 seconds.

Prefix dict has been built successfully.

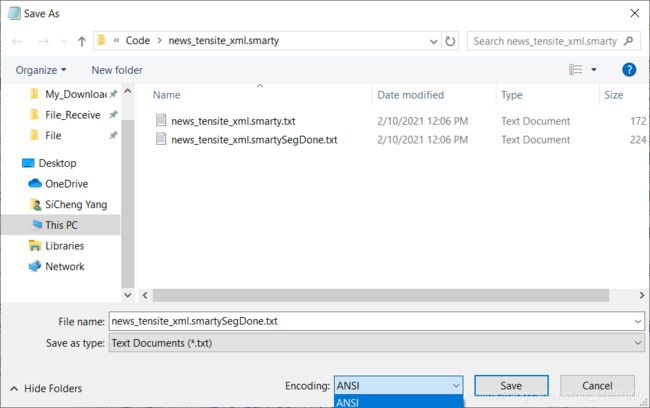
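For a large corpus it can help to segment line by line instead of holding every line in a list. The generator below is a sketch of that idea. The name `segment_lines` is hypothetical, and the tokenizer is passed in as a parameter so the example runs without jieba; the demo uses `list`, which simply splits a string into characters.

```python
def segment_lines(lines, cut):
    """Yield each non-empty line as space-separated tokens produced by cut()."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        tokens = [tok for tok in cut(line) if tok.strip()]
        yield " ".join(tokens)

# Stand-in tokenizer for the demo: one token per character.
demo = list(segment_lines(["搜狗新闻\n", "\n", "词向量\n"], cut=list))
print(demo)
```

With jieba installed, passing `jieba.cut` as `cut` should give real word segmentation, and the yielded strings can be written to the output file one at a time.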

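Note that encoding='gb18030' has to be declared every time the file is read or saved, which is tedious. One option is to change the format to UTF-8 directly when using Save As:

The UTF-8 file needs transcoding as well, and a 'gbk'-encoded file is required; it can be generated with the following code: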

fpath = '...your path/news_tensite_xml.smartySegDone_ANSI.txt'

file = open(fpath, 'r', encoding='gb18030')

newfpath = '...your path/news_tensite_xml.smartySegDone_gbk.txt'

newf = open(newfpath, 'w')

newf.write(file.read().replace('\ue40c','\t'))

file.close()

newf.close()
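The conversion can also be done one line at a time, with both encodings stated explicitly, so that it does not depend on the system default encoding or on available memory. The helper `transcode` and its parameters are assumptions for illustration, and the demo runs on a small temporary file.

```python
import os
import shutil
import tempfile

def transcode(src, dst, src_enc="gb18030", dst_enc="utf-8", errors="strict"):
    """Copy src to dst line by line, re-encoding from src_enc to dst_enc."""
    with open(src, "r", encoding=src_enc) as fin, \
         open(dst, "w", encoding=dst_enc, errors=errors, newline="") as fout:
        for line in fin:
            fout.write(line)

# Demo: write a GB18030 file, convert it to UTF-8, then read it back.
workdir = tempfile.mkdtemp()
src_path = os.path.join(workdir, "seg_gb18030.txt")
dst_path = os.path.join(workdir, "seg_utf8.txt")
with open(src_path, "w", encoding="gb18030", newline="") as f:
    f.write("搜狗 新闻\n")
transcode(src_path, dst_path)
with open(dst_path, "r", encoding="utf-8") as f:
    round_trip = f.read()
shutil.rmtree(workdir)
print(round_trip)
```

When the target is gbk rather than UTF-8, characters that gbk cannot represent still need handling, either by replacing them beforehand as the code above does with '\ue40c', or by passing `errors="replace"`.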

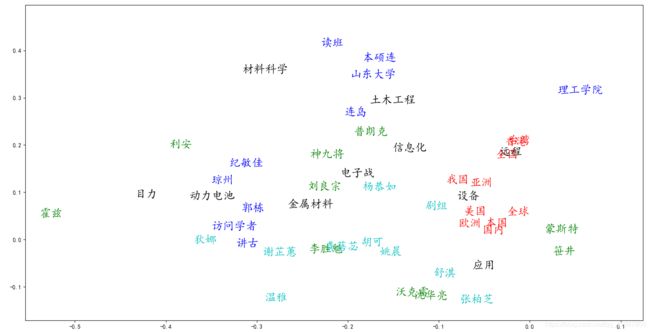

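4. Building word vectors

Training word2vec needs roughly 3 GB of additional memory, about 3.5 GB in total. Segmentation takes about an hour and finally produces the file "news_tensite_xmlSegDone.txt".

An error came up while training the word2vec model (the traceback is from loading the .bin file):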

File "E:\ProgramData\Anaconda3\lib\site-packages\word2vec\wordvectors.py", line 221, in from_binary

vocab[i] = word.decode(encoding)

UnicodeDecodeError: 'utf-8' codec can't decode byte 0xd3 in position 0: invalid continuation byte

Trying UTF-8 data here did not work either. See the article "解决 'utf-8' codec can't decode bytes in position 96-07: unexpected end of data".

See this article for how to check the encoding. The code is as follows:

import chardet

path = '...your path/news_tensite_xml.smartyWord2Vec.bin'

f = open(path,'rb')

data = f.read()

print(chardet.detect(data))

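Here is the interesting part: the .bin file turns out to be UTF-8 encoded as well, and Notepad can open it, so it is not a binary file at all. Following the previous blog post, the fix is:

Add the parameter binary=True when training. The blog post being followed is simply wrong on this point: training does not succeed as written, and the model has no cosine attribute either. The rest continues from the previous blog post.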
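A quick way to tell the two output formats apart without extra libraries is to look at the first vector row. The sketch below assumes the conventional word2vec file layout: a header line with the vocabulary size and vector size, then one row per word, holding either text numbers or raw 32-bit floats. The function name and the tiny demo files are invented for illustration.

```python
import os
import shutil
import struct
import tempfile

def looks_like_text_vectors(path):
    """Guess whether a word2vec output file stores its vectors as plain text."""
    with open(path, "rb") as f:
        header = f.readline().split()     # expected: vocab size, vector size
        first_row = f.readline().split()  # text format: word, then numbers
    try:
        dim = int(header[1].decode("ascii"))
        values = [float(tok.decode("utf-8")) for tok in first_row[1:]]
    except (IndexError, ValueError):
        return False  # raw float bytes do not parse as text numbers
    return len(values) == dim

# Demo files: one word with a 3-dimensional vector, in each format.
workdir = tempfile.mkdtemp()
text_path = os.path.join(workdir, "text_vectors.bin")
binary_path = os.path.join(workdir, "binary_vectors.bin")
with open(text_path, "wb") as f:
    f.write("1 3\n加拿大 0.5 -1.25 2.0\n".encode("utf-8"))
with open(binary_path, "wb") as f:
    f.write(b"1 3\n" + "加拿大 ".encode("utf-8")
            + struct.pack("<3f", 0.5, -1.25, 2.0) + b"\n")
text_result = looks_like_text_vectors(text_path)
binary_result = looks_like_text_vectors(binary_path)
shutil.rmtree(workdir)
print(text_result, binary_result)
```

A file that passes this check was most likely written without binary=True, which would fit the observation that Notepad can open it.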

model = word2vec.load('...your path/news_tensite_xmlWord2Vec.bin')

print(model.vectors) # inspect the word vectors

[[-0.17513306 0.06612565 0.02054873 ... -0.04957951 -0.10750175

-0.0037561 ]

[ 0.05434967 -0.0818748 -0.02526366 ... 0.05428585 -0.18850493

0.21659254]

[-0.05116278 0.17824839 0.03333148 ... 0.01466534 -0.19820313

0.24522921]

...

[-0.12983356 0.161402 0.07890003 ... 0.15807958 -0.14913535

-0.00403439]

[-0.06313889 -0.05563448 -0.06698888 ... -0.05643742 -0.12080646

-0.0428595 ]

[ 0.01240269 0.0436162 -0.07861283 ... -0.08935557 -0.01481815

-0.07193998]]

index = 1000

print(model.vocab[index]) # look up a word in the vocabulary

亿美元

indexes = model.similar('加拿大')

for index in indexes[0]:

    print(model.vocab[index])

美国

卡尔加里

英国

新加坡

德国

蒙特利尔

洛杉矶

秘鲁

田纳西州

索马里

indexes = model.similar('清华大学')

for index in indexes[0]:

    print(model.vocab[index])

系主任

上海财经大学

山东大学

英文系

中国人民大学

中国政法大学

研究生院

宁波大学

复旦大学

博士生

indexes = model.similar('爱因斯坦')

for index in indexes[0]:

    print(model.vocab[index])

国民生产

儒家

强军

先入为主

唯心主义

唯物主义

玄烨

论文

恩格斯

别国
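To make the neighbor lists above less of a black box, here is a small pure-Python sketch of nearest-neighbor lookup by cosine similarity. It assumes cosine is the measure being used; it is not the word2vec library's own implementation. The vocabulary and the 2-dimensional vectors are made up.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

def most_similar(word, vocab, vectors, n=2):
    """Return the n words whose vectors are closest to the vector of word."""
    query = vectors[vocab.index(word)]
    scored = [(cosine(query, vec), other)
              for other, vec in zip(vocab, vectors) if other != word]
    scored.sort(reverse=True)  # highest similarity first
    return [other for _, other in scored[:n]]

# Toy data, invented for the example.
vocab = ["加拿大", "美国", "论文"]
vectors = [[1.0, 0.0], [0.8, 0.6], [0.0, 1.0]]
neighbors = most_similar("加拿大", vocab, vectors, n=2)
print(neighbors)
```

A real model does the same ranking over the whole vocabulary, so the neighbors it returns depend on how often and in what contexts a word appears in the corpus.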

Error:

RuntimeWarning: Glyph 20122 missing from current font. font.set_text(s, 0, flags=flags)

Fix:

matplotlib.rcParams['font.sans-serif'] = ['KaiTi']

For the minus sign:

RuntimeWarning: Glyph 8722 missing from current font.

font.set_text(s, 0, flags=flags)

Fix:

matplotlib.rcParams['axes.unicode_minus'] = False # render the minus sign correctly

# -*- coding:utf-8 -*-

import re

import jieba

import word2vec

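# open the dat file

f = open(r'...your path/news_tensite_xml.dat',encoding='gb18030')

file = f.read()

f.close()

# extract every <content>...</content> element; .+? skips the empty ones

regex = '<content>.+?</content>'

match = re.findall(regex, file)

# create a new txt file

new_file_path = '...your path/news_tensite_xml.txt'

f = open(new_file_path, 'w', encoding='gb18030')

# write the content, one item per line, with the tags stripped

for item in match:

    f.write(item.replace('<content>' ,'').replace('</content>','') + '\n')

f.close()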

# open the generated txt

filepath = '...your path/news_tensite_xml.txt'

fileTrainRead = []

with open(filepath, encoding='gb18030') as file:

    for line in file:

        fileTrainRead.append(line)

# path of the new txt that will hold the segmented text

new_file_path = '...your path/news_tensite_xmlSegDone.txt'

fileTrainSeg = []

for i in range(len(fileTrainRead)):

    fileTrainSeg.append(' '.join(jieba.cut(fileTrainRead[i],cut_all=False)))

file = open(new_file_path, 'w', encoding='gb18030')

for item in fileTrainSeg:

    file.write(item)

# close the file so everything is flushed to disk before it is read again

file.close()

# gb18030 to UTF-8

fpath = '...your path/news_tensite_xmlSegDone.txt'

file = open(fpath, 'r', encoding='gb18030')

newfpath = '...your path/news_tensite_xmlSegDone_UTF8.txt'

newf = open(newfpath, 'w', encoding='utf-8')

newf.write(file.read().replace('\ue40c','\t'))

file.close()

newf.close()

# word2vec

word2vec.word2vec('...your path/news_tensite_xmlSegDone_UTF8.txt','...your path/news_tensite_xmlWord2Vec.bin', size=100, binary=True, verbose=True)

model = word2vec.load('...your path/news_tensite_xmlWord2Vec.bin')

print(model.vectors) # inspect the word vectors

index = 1000

print(model.vocab[index]) # look up a word in the vocabulary

indexes = model.similar('爱因斯坦')

for index in indexes[0]:

    print(model.vocab[index])

# PCA dimensionality reduction for visualization

from sklearn.decomposition import PCA

X_reduced = PCA(n_components=2).fit_transform(model.vectors) # reduce the word vectors to 2 dimensions

index1,metrics1 = model.similar('中国') # pick some center words and look up their similar words

index2,metrics2 = model.similar('清华')

index3,metrics3 = model.similar('牛顿')

index4,metrics4 = model.similar('自动化')

index5,metrics5 = model.similar('刘亦菲')

import matplotlib.pyplot as plt

import matplotlib

matplotlib.rcParams['font.sans-serif'] = ['KaiTi']

matplotlib.rcParams['axes.unicode_minus'] = False # render the minus sign correctly

fig = plt.figure()

ax = fig.add_subplot(111)

for i in index1:

    ax.text(X_reduced[i][0],X_reduced[i][1], model.vocab[i],color='r', fontsize=20)

for i in index2:

    ax.text(X_reduced[i][0],X_reduced[i][1], model.vocab[i],color='b', fontsize=20)

for i in index3:

    ax.text(X_reduced[i][0],X_reduced[i][1], model.vocab[i],color='g', fontsize=20)

for i in index4:

    ax.text(X_reduced[i][0],X_reduced[i][1], model.vocab[i],color='k', fontsize=20)

for i in index5:

    ax.text(X_reduced[i][0],X_reduced[i][1], model.vocab[i],color='c', fontsize=20)

plt.show()

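Summary

This was a hands-on run of the word2vec library on a Chinese corpus. The original blog post had some problems, which are corrected here. The next step is to learn how to build word2vec models with the gensim library, on both English and Chinese corpora.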