Scraping 51job Job Listings with the Python Scrapy Framework and Saving Them to a Database


————————————————————————————————

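Copyright notice: this is an original article by CSDN blogger 「杠精运动员」, licensed under CC 4.0 BY-SA. When reposting, include a link to the original source and this notice.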

Original article: https://blog.csdn.net/CN_Nidus/article/details/109695639

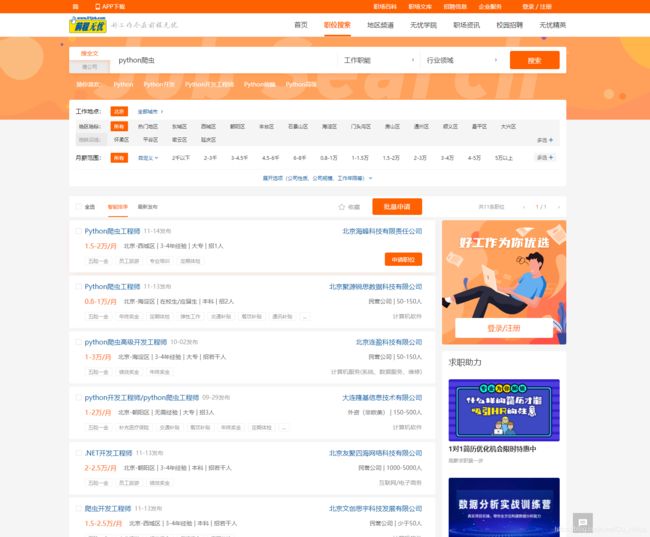

Project requirements

- Use the Scrapy framework to practice scraping job postings from the 51Job site and save them to a database (sqlite3 in this example).

Tools

python == 3.7

pycharm == 2020.2.3

sqlite == 3.33.0

scrapy == 2.4.0

Fields scraped

- Job title, salary, publish date, work location, job requirements, company name, company size, benefits, and detail-page link.

Approach

- Page URL: https://search.51job.com/list/010000,000000,0000,00,9,99,python%25E7%2588%25AC%25E8%2599%25AB,2,1.html?lang=c&postchannel=0000&workyear=99&cotype=99&degreefrom=99&jobterm=99&companysize=99&ord_field=0&dibiaoid=0&line=&welfare=

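- Analysis: changing just two key parameters in the URL is enough to customize the search and to paginate. The first is the URL-encoded keyword (here `python%25E7%2588%25AC%25E8%2599%25AB`, i.e. "python爬虫"). The second is the page number, the last value before `.html`.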

- View the page source.

- Analysis: every job listing shown on the page is embedded as JSON inside a `<script>` tag.
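Both observations can be checked offline before writing any Scrapy code. The sketch below is plain Python, not the author's code. `build_search_url` and `extract_search_result` are hypothetical helper names, and the query string is copied from the sample URL. The regular expression assumes the JSON object is followed by `</script>`. The sample URL encodes the keyword twice (`%25` is an escaped `%`), whereas the spider below encodes it only once. This sketch follows the spider, and which form the site expects has not been verified here.

```python
import json
import re
from urllib.parse import quote

# Query string copied from the sample URL above, with "&degreefrom=99" restored.
QUERY = ("lang=c&postchannel=0000&workyear=99&cotype=99&degreefrom=99"
         "&jobterm=99&companysize=99&ord_field=0&dibiaoid=0&line=&welfare=")

# Assumption: the page contains `window.__SEARCH_RESULT__ = {...}</script>`
# (an optional trailing ";" is tolerated); the live markup may differ.
SEARCH_RESULT_RE = re.compile(
    r"window\.__SEARCH_RESULT__\s*=\s*(\{.*?\})\s*;?\s*</script>", re.S)


def build_search_url(keyword: str, page: int = 1) -> str:
    """Return the search URL for `keyword` on result page `page`."""
    # The keyword is the 7th comma-separated value in the path and the page
    # number is the last one before ".html"; the keyword is URL-encoded once.
    return ("https://search.51job.com/list/010000,000000,0000,00,9,99,"
            f"{quote(keyword)},2,{page}.html?{QUERY}")


def extract_search_result(html: str) -> dict:
    """Parse the JSON object assigned to window.__SEARCH_RESULT__."""
    match = SEARCH_RESULT_RE.search(html)
    if match is None:
        raise ValueError("window.__SEARCH_RESULT__ not found in page")
    return json.loads(match.group(1))


if __name__ == "__main__":
    print(build_search_url("python爬虫", 2))
    sample = ('<script>window.__SEARCH_RESULT__ = '
              '{"engine_search_result": [{"job_title": "demo"}]}</script>')
    print(extract_search_result(sample)["engine_search_result"][0]["job_title"])
```

The non-greedy `\{.*?\}` combined with the `</script>` anchor stops at the brace that closes the object, even when string values contain `}`.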

Full code

- Define the Items

```python
import scrapy


class Job51Item(scrapy.Item):
    jobname = scrapy.Field()
    salary = scrapy.Field()
    pubdate = scrapy.Field()
    job_adress = scrapy.Field()
    requirement = scrapy.Field()
    company_name = scrapy.Field()
    company_size = scrapy.Field()
    welfare = scrapy.Field()
    url = scrapy.Field()
```

- Define the Pipeline

```python
import sqlite3


class Job51Pipeline:
    def open_spider(self, spider):
        # Connect to the SQLite database file
        self.conn = sqlite3.connect('D:\\OfficeLib\\JetBrains\\PyCharmFiles\\PycharmProjects\\MyScrapy\\db.sqlite3')
        self.cur = self.conn.cursor()
        # Counter shared with the spider for progress messages
        spider.index = 1

    def close_spider(self, spider):
        # Close the database
        self.cur.close()
        self.conn.close()

    def process_item(self, item, spider):
        # Use ? placeholders so sqlite3 escapes the values itself;
        # building the SQL with str.format() breaks on values containing quotes.
        insert_sql = ('insert into job51 (jobname, salary, pubdate, job_adress, requirement, '
                      'company_name, company_size, welfare, url) '
                      'values (?, ?, ?, ?, ?, ?, ?, ?, ?)')
        self.cur.execute(insert_sql, (
            item["jobname"],
            item["salary"],
            item["pubdate"],
            item["job_adress"],
            item["requirement"],
            item["company_name"],
            item["company_size"],
            item["welfare"],
            item["url"],
        ))
        self.conn.commit()
        print("Item {} saved".format(spider.index))
        spider.index += 1
        return item
```
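The pipeline assumes a `job51` table already exists in `db.sqlite3`, but the article does not show how it was created. The sketch below gives a hypothetical schema whose column names match the pipeline's INSERT. The `id` column and the TEXT types are assumptions. It also includes a helper, `save_row` (also hypothetical), that inserts with `?` placeholders so that values containing quotes are stored as-is. It runs against an in-memory database.

```python
import sqlite3

# Hypothetical schema: the article never shows how table job51 was created.
# Column names match the pipeline's INSERT; the id column and TEXT types are assumptions.
CREATE_JOB51 = """
CREATE TABLE IF NOT EXISTS job51 (
    id           INTEGER PRIMARY KEY AUTOINCREMENT,
    jobname      TEXT,
    salary       TEXT,
    pubdate      TEXT,
    job_adress   TEXT,
    requirement  TEXT,
    company_name TEXT,
    company_size TEXT,
    welfare      TEXT,
    url          TEXT
)
"""

COLUMNS = ("jobname", "salary", "pubdate", "job_adress", "requirement",
           "company_name", "company_size", "welfare", "url")


def save_row(conn: sqlite3.Connection, row: dict) -> None:
    """Insert one scraped record; missing fields are stored as ""."""
    sql = "INSERT INTO job51 ({}) VALUES ({})".format(
        ", ".join(COLUMNS), ", ".join("?" * len(COLUMNS)))
    conn.execute(sql, [row.get(col, "") for col in COLUMNS])
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(CREATE_JOB51)
    save_row(conn, {"jobname": 'Python "crawler" engineer'})
    print(conn.execute("SELECT jobname, salary FROM job51").fetchall())
```

Running `CREATE_JOB51` once against the real database file, for example from `open_spider`, would let the pipeline start from an empty file.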

- Spider code

```python
# -*- coding: utf-8 -*-
import json
import re
from urllib.parse import quote

import scrapy

from MyScrapy.items import Job51Item


class Job51Spider(scrapy.Spider):
    name = 'Job51'
    allowed_domains = ['51job.com']

    # Assign the pipeline for this spider
    custom_settings = {
        'ITEM_PIPELINES': {'MyScrapy.pipelines.Job51Pipeline': 299}
    }

    # Choose what to scrape; input() runs once, when the spider class is loaded
    infokey = input("Enter the job title to scrape: ")
    print("Starting the crawl, please wait...")
    infokey_encode = quote(infokey)  # URL-encode the keyword

    # {} placeholders: URL-encoded keyword, then page number
    base_url = 'https://search.51job.com/list/010000,000000,0000,00,9,99,{},2,{}.html?' \
               'lang=c&postchannel=0000&workyear=99&cotype=99&degreefrom=99&jobterm=99' \
               '&companysize=99&ord_field=0&dibiaoid=0&line=&welfare='
    start_urls = [base_url.format(infokey_encode, 1)]

    def parse(self, response, **kwargs):
        # Decode the GBK-encoded response and pull out the embedded JSON
        body = response.body.decode('gbk')
        data_json = re.findall(r'window.__SEARCH_RESULT__ =(.+)}', body)[0] + "}"
        data_py = json.loads(data_json)

        for data in data_py['engine_search_result']:
            # self.index is created by Job51Pipeline.open_spider
            print("Scraping item {}...".format(self.index))
            # Create a new item per posting instead of reusing one shared object
            item = Job51Item()
            item["jobname"] = data["job_title"]
            item["salary"] = data["providesalary_text"]  # "" when not provided
            item["pubdate"] = data["updatedate"]
            item["job_adress"] = data["workarea_text"]
            item["requirement"] = "、".join(data["attribute_text"])
            item["company_name"] = data["company_name"]
            item["company_size"] = data["companysize_text"]
            item["welfare"] = "、".join(data["jobwelf_list"])  # "" for an empty list
            item["url"] = data["job_href"]
            yield item

        # Request pages 2-5 so that only the first 5 pages are scraped.
        # Every parse() call yields these again; Scrapy's duplicate filter drops the repeats.
        for i in range(2, 6):
            next_url = self.base_url.format(self.infokey_encode, i)
            yield scrapy.Request(url=next_url, callback=self.parse)
```
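The per-record logic of `parse` can be pulled out into a plain function so the field mapping can be checked without sending requests to 51job. `record_to_fields` is a hypothetical helper, and the JSON keys are the ones the spider reads. Whether every record carries all of these keys is not verified here, so this sketch falls back to empty strings for missing or empty values.

```python
# Hypothetical helper mirroring the field mapping in Job51Spider.parse.
# Missing or empty values fall back to "" (an assumption about the data).
def record_to_fields(data: dict) -> dict:
    """Map one engine_search_result entry onto the Job51Item field names."""
    return {
        "jobname": data.get("job_title") or "",
        "salary": data.get("providesalary_text") or "",
        "pubdate": data.get("updatedate") or "",
        "job_adress": data.get("workarea_text") or "",
        # List values are joined with "、", as in the spider
        "requirement": "、".join(data.get("attribute_text") or []),
        "company_name": data.get("company_name") or "",
        "company_size": data.get("companysize_text") or "",
        "welfare": "、".join(data.get("jobwelf_list") or []),
        "url": data.get("job_href") or "",
    }


if __name__ == "__main__":
    # Invented record for illustration only; real records may have other keys.
    sample = {
        "job_title": "Python crawler engineer",
        "providesalary_text": "",
        "attribute_text": ["Beijing", "3-4 years", "Bachelor"],
        "jobwelf_list": [],
        "job_href": "https://jobs.51job.com/example.html",
    }
    print(record_to_fields(sample))
```

Inside `parse`, the item could then be built as `Job51Item(**record_to_fields(data))`, because a Scrapy `Item` accepts field values as keyword arguments.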

- Settings

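```python
# Request delay; with RANDOMIZE_DOWNLOAD_DELAY, Scrapy waits a random
# 0.5x to 1.5x DOWNLOAD_DELAY between requests (1.5 to 4.5 s here)
DOWNLOAD_DELAY = 3
RANDOMIZE_DOWNLOAD_DELAY = True

# User-agent list; several entries are allowed. Scrapy's own USER_AGENT setting
# normally holds a single string; RandomUserAgentMiddleware (priority 542, after
# the built-in UserAgentMiddleware at 500) overwrites the header with one entry.
USER_AGENT = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/14.0.835.163 Safari/535.1",
]

# Do not obey robots.txt
ROBOTSTXT_OBEY = False

# Enable the downloader middleware
DOWNLOADER_MIDDLEWARES = {
    'MyScrapy.middlewares.RandomUserAgentMiddleware': 542,
}

# Enable the pipeline
ITEM_PIPELINES = {
    'MyScrapy.pipelines.Job51Pipeline': 299,
}
```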

- Downloader middleware that sets a random user-agent

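```python
import random


class RandomUserAgentMiddleware:
    def process_request(self, request, spider):
        # Pick one user agent at random from the USER_AGENT list in settings.py
        useragent = random.choice(spider.settings.get("USER_AGENT"))
        request.headers["User-Agent"] = useragent
        # Returning None lets the request continue through the middleware chain
```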

Results

Closing remarks

Scraping carries risks! Collect data with care!


This article is for study and exchange purposes only; do not use it for anything else!