Learning Python: Web Crawlers (Part 5), High-Performance Crawlers: Single-Threaded, Multithreaded, Multiprocess, Thread-Pool and Coroutine-Pool Crawlers

I. Single-threaded crawler:

1. 爬取糗事百科段子:

页面url是: https://www.qiushibaike.com/8hr/page/1/

思路分析:

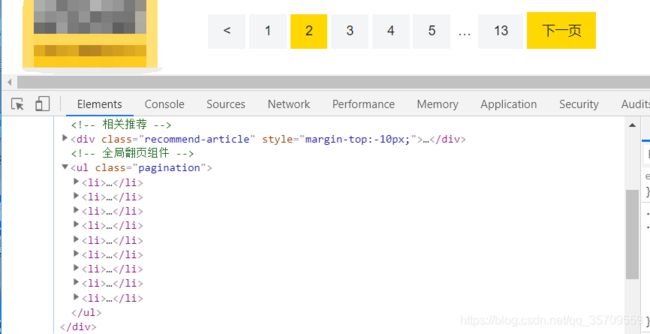

(1) 确定url地址:

url地址的规律很明显,一共13页的url地址:

(2)确定数据的位置:

数据都在class="recommend-article"的div下的ul的li中,在这个区域中,url地址对应的响应和elements相同
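The site may have changed since this was written, so it can help to test the XPath expressions offline first. The sketch below runs the same expressions the crawler uses against a small hand-written HTML fragment. The fragment (`SAMPLE_HTML`) and the helper name `extract_items` are assumptions. They only mimic the structure described above and are not the real page markup.

```python
from lxml import etree

# Hypothetical HTML that only mimics the structure described above;
# the real page markup may differ.
SAMPLE_HTML = """
<div id="content"><div><div></div><div><div class="recommend-article"><ul>
  <li><div><a class="recmd-content" href="#">  first joke  </a>
      <div><a class="recmd-user" href="#"><span>alice</span></a></div></div></li>
  <li><div><a class="recmd-content" href="#">second joke</a>
      <div><a class="recmd-user" href="#"><span>bob</span></a></div></div></li>
</ul></div></div></div></div>
"""


def extract_items(html_str):
    """Apply the crawler's XPath expressions to an HTML string (hypothetical helper)."""
    html = etree.HTML(html_str)
    items = []
    for li in html.xpath('//*[@id="content"]/div/div[2]/div/ul/li'):
        item = {
            "user_name": li.xpath('./div/div/a[@class="recmd-user"]/span/text()'),
            "content": [t.strip() for t in li.xpath('./div/a[@class="recmd-content"]/text()')],
        }
        if item["user_name"] or item["content"]:
            items.append(item)
    return items


for item in extract_items(SAMPLE_HTML):
    print(item)
```

If the expressions return empty lists against the live page, the page structure has probably changed and the XPath needs updating.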

2. Qiushibaike crawler implementation:

```python
# coding=utf-8
import time

import requests
from lxml import etree


class QiuBai:
    def __init__(self):
        self.temp_url = "https://www.qiushibaike.com/8hr/page/{}"
        self.headers = {
            "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.139 Safari/537.36"}

    def get_url_list(self):
        """Build the list of URLs (pages 1 to 13)."""
        return [self.temp_url.format(i) for i in range(1, 14)]

    def parse_url(self, url):
        """Send the request and return the decoded response body."""
        response = requests.get(url=url, headers=self.headers)
        return response.content.decode()

    def get_content_list(self, html_str):
        """Extract the data."""
        html = etree.HTML(html_str)
        li_list = html.xpath('//*[@id="content"]/div/div[2]/div/ul/li')
        content_list = []
        for li in li_list:
            item = {}
            item['user_name'] = li.xpath('./div/div/a[@class="recmd-user"]/span/text()')
            item['content'] = [i.strip() for i in li.xpath('./div/a[@class="recmd-content"]/text()')]
            if item['user_name'] or item['content']:
                content_list.append(item)
        return content_list

    def save_content_list(self, content_list):
        """Save the data (here it is only printed)."""
        for content in content_list:
            print(content)

    def run(self):
        """Main logic."""
        # 1. Build the URL list
        url_list = self.get_url_list()
        # 2. Iterate over it, sending requests and getting responses
        for url in url_list:
            html_str = self.parse_url(url)
            # 3. Extract the data
            content_list = self.get_content_list(html_str)
            # 4. Save
            self.save_content_list(content_list)


if __name__ == "__main__":
    t1 = time.time()
    qiushi = QiuBai()
    qiushi.run()
    print("total cost:", time.time() - t1)
```

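3. Summary

- Build a single-threaded crawler first, as the baseline for the multithreaded version
- Crawl the Qiushibaike front page
- Parse the Qiushibaike page data
- Crawl all Qiushibaike pages
- Save the data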
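The implementation above only prints each item in `save_content_list`. To actually persist the data, one simple option is to append each item as one line of JSON (the JSON Lines format). This is an assumption, not the author's approach. The default file name `qiushibaike.jsonl` is hypothetical.

```python
import json


def save_content_list(content_list, path="qiushibaike.jsonl"):
    """Append each item as one JSON object per line (JSON Lines).

    `path` is a hypothetical default file name, not taken from the original.
    """
    # Append mode, because run() calls the saver once per page;
    # ensure_ascii=False keeps Chinese text readable in the file.
    with open(path, "a", encoding="utf-8") as f:
        for content in content_list:
            f.write(json.dumps(content, ensure_ascii=False) + "\n")
```

Inside the `QiuBai` class, the method body would stay the same, with `self` added as the first parameter.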

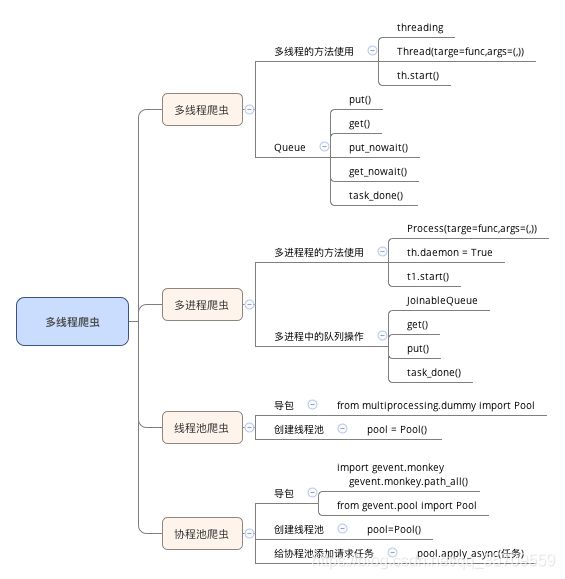

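II. Multithreaded crawler:

1. Multithreading recap:

In Python 3, child threads (and child processes) do not end just because the main thread (or main process) has reached the end of its code. By default the program keeps running until they finish.

To have the child threads terminated when the main thread ends, make them daemon threads. A daemon thread is treated as unimportant: once the main thread ends, the daemon threads end with it.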

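```python
import threading

def func(n):  # placeholder task function
    print("working on", n)

t1 = threading.Thread(target=func, args=(1,))
t1.daemon = True  # daemon thread (setDaemon() is deprecated since Python 3.10)
t1.start()  # start the thread
```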

2. Queue module recap:

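```python
from queue import Queue

q = Queue(maxsize=100)  # maxsize is the maximum queue length
item = {}

q.put_nowait(item)  # put without waiting; raises queue.Full if the queue is full
q.put(item)         # put; blocks and waits while the queue is full
q.get_nowait()      # get without waiting; raises queue.Empty if the queue is empty
q.get()             # get; blocks and waits while the queue is empty
q.qsize()           # number of items currently in the queue

# The queue keeps a counter of unfinished tasks (initially 0):
# put() increases it by 1, task_done() decreases it by 1,
# and get() alone does NOT decrease it, so pair every get() with task_done().
q.task_done()
q.task_done()

# join() blocks the calling (main) thread while the counter is not 0
# and lets it continue once the counter drops to 0.
q.join()
```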
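A small producer/consumer sketch shows the put/get/task_done/join rules above. Three daemon worker threads square numbers, which is a hypothetical stand-in for real work and not part of the original crawler. The main thread uses `q.join()` to wait until every item has been marked done.

```python
import threading
from queue import Queue


def worker(q, results):
    """Consume items forever; as a daemon thread it ends together with the main thread."""
    while True:
        n = q.get()             # blocks while the queue is empty
        results.append(n * n)   # hypothetical stand-in for real work
        q.task_done()           # pairs with the get() above: counter - 1


q = Queue()
results = []
for _ in range(3):
    threading.Thread(target=worker, args=(q, results), daemon=True).start()

for n in range(10):
    q.put(n)                    # counter + 1 per item

q.join()                        # returns only after task_done() was called 10 times
print(sorted(results))          # [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
```

The worker loops never exit on their own. Making them daemon threads is what lets the script finish once `q.join()` returns.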

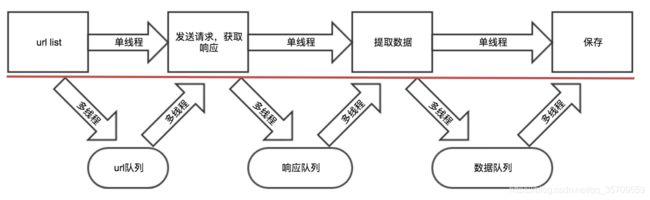

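3. Design of the multithreaded version:

Each step of the single-threaded crawler becomes its own thread (or group of threads), and neighbouring steps exchange data through queues. A URL thread fills url_queue. Three request threads move pages from url_queue to html_queue. A parsing thread moves results from html_queue to content_list_queue, and a saving thread consumes content_list_queue. The main thread then waits on the queues with join().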

4. Implementation:

```python
# coding=utf-8
import threading
import time
from queue import Queue

import requests
from lxml import etree


class QiuBai:
    def __init__(self):
        self.temp_url = "https://www.qiushibaike.com/8hr/page/{}"
        self.headers = {
            "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.139 Safari/537.36"}
        self.url_queue = Queue()
        self.html_queue = Queue()
        self.content_list_queue = Queue()

    def get_url_list(self):
        """Put the URLs of pages 1 to 13 into url_queue."""
        for i in range(1, 14):
            self.url_queue.put(self.temp_url.format(i))

    def parse_url(self):
        """Send requests and put the responses into html_queue."""
        # This loop never ends on its own, so the thread runs as a daemon thread
        while True:
            url = self.url_queue.get()
            response = requests.get(url, headers=self.headers)
            self.html_queue.put(response.content.decode())
            self.url_queue.task_done()  # only after the put into the next queue

    def get_content_list(self):
        """Extract the data."""
        while True:
            html_str = self.html_queue.get()
            html = etree.HTML(html_str)
            li_list = html.xpath('//*[@id="content"]/div/div[2]/div/ul/li')
            content_list = []
            for li in li_list:
                item = {}
                item['user_name'] = li.xpath('./div/div/a[@class="recmd-user"]/span/text()')
                item['content'] = [i.strip() for i in li.xpath('./div/a[@class="recmd-content"]/text()')]
                if item['user_name'] or item['content']:
                    content_list.append(item)
            self.content_list_queue.put(content_list)
            self.html_queue.task_done()

    def save_content_list(self):
        """Save the data (here it is only printed)."""
        while True:
            content_list = self.content_list_queue.get()
            for content in content_list:
                print(content)
            self.content_list_queue.task_done()

    def run(self):
        """Main logic."""
        thread_list = []
        # 1. Prepare the URLs
        t_url = threading.Thread(target=self.get_url_list)
        thread_list.append(t_url)
        # 2. Send requests with three threads
        for _ in range(3):
            t_parse = threading.Thread(target=self.parse_url)
            thread_list.append(t_parse)
        # 3. Extract the data
        t_content = threading.Thread(target=self.get_content_list)
        thread_list.append(t_content)
        # 4. Save
        t_save = threading.Thread(target=self.save_content_list)
        thread_list.append(t_save)

        for t in thread_list:
            t.daemon = True  # make the child thread a daemon thread (setDaemon() is deprecated)
            t.start()

        # Wait until every URL has been queued; otherwise url_queue.join()
        # could return at once while the queue is still empty.
        t_url.join()
        for q in [self.url_queue, self.html_queue, self.content_list_queue]:
            q.join()  # block the main thread until this queue's counter is 0
        print("main thread finished")


if __name__ == "__main__":
    t1 = time.time()
    qiushi = QiuBai()
    qiushi.run()
    print("total cost:", time.time() - t1)
```

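Notes:

- put() increases the queue's counter by 1, but get() alone does not decrease it. Only task_done() does, so every get() must be paired with a task_done().

- task_done() must not be called before the put() into the next queue. Otherwise the current queue's counter can reach 0 before the next queue has received the data, and the program may end while data is still unprocessed.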
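The second note is easiest to see in a stripped-down two-stage pipeline. In this sketch, `fetch` and `save` are hypothetical stand-ins for the request and save steps, and no network access is needed. Each stage puts its result into the next queue before calling `task_done()`, and the main thread joins the queues in pipeline order.

```python
import threading
from queue import Queue

url_queue, html_queue = Queue(), Queue()
saved = []


def fetch():
    """Stage 1: hypothetical stand-in for requests.get()."""
    while True:
        url = url_queue.get()
        html_queue.put(f"<html>{url}</html>")  # hand off to the next stage FIRST...
        url_queue.task_done()                  # ...then mark this item as done


def save():
    """Stage 2: hypothetical stand-in for parsing + saving."""
    while True:
        html = html_queue.get()
        saved.append(html)
        html_queue.task_done()


for target in (fetch, fetch, save):
    threading.Thread(target=target, daemon=True).start()

for i in range(1, 14):
    url_queue.put(f"page/{i}")

# Join in pipeline order: every page has already been put into html_queue
# by the time url_queue.join() returns, so html_queue.join() waits for all of them.
for q in (url_queue, html_queue):
    q.join()
print(len(saved))  # 13
```

If `url_queue.task_done()` were moved above the `html_queue.put(...)` line, `url_queue.join()` could return before the last page reached `html_queue`. `html_queue.join()` might then return early, and the script could finish with fewer than 13 pages saved.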

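III. Multiprocess crawler:

In CPython, no matter how many threads a process starts, only one of them executes Python bytecode at any given moment because of the GIL (Global Interpreter Lock). The threads therefore effectively share a single CPU core. This is a characteristic of CPython rather than strictly a flaw: threads still speed up I/O-bound work such as network requests, because the GIL is released while a thread waits on I/O.

To take advantage of multiple CPU cores, use multiple processes instead. The idea is the same as with multiple threads; only the API differs.

1. Multiprocessing recap: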

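```python
from multiprocessing import Process

def func(n):  # placeholder task function
    print("working on", n)

if __name__ == "__main__":
    t1 = Process(target=func, args=(1,))  # the keyword is args, not arg
    t1.daemon = True  # daemon process: terminated when the main process exits
    t1.start()        # the process only starts running here
```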

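2. Using queues with multiple processes:

A regular queue.Queue is not shared between processes. Each process ends up with its own copy (or, with the spawn start method, the queue cannot be passed to the child at all), so a consumer process can block forever waiting for data that was put into another process's copy. Use JoinableQueue from multiprocessing instead; it is used in the same way as the queue.Queue used with threads.

3. Implementation: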

```python
# coding=utf-8
import time
from multiprocessing import JoinableQueue as Queue
from multiprocessing import Process

import requests
from lxml import etree


class QiuBai:
    """Multiprocess Qiushibaike crawler"""

    def __init__(self):
        self.temp_url = "https://www.qiushibaike.com/8hr/page/{}"
        self.headers = {
            "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.139 Safari/537.36"}
        self.url_queue = Queue()
        self.html_queue = Queue()
        self.content_list_queue = Queue()

    def get_url_list(self):
        """Put the URLs of pages 1 to 13 into url_queue."""
        for i in range(1, 14):
            self.url_queue.put(self.temp_url.format(i))

    def parse_url(self):
        """Send requests and put the responses into html_queue."""
        # This loop never ends on its own, so the process runs as a daemon process
        while True:
            url = self.url_queue.get()
            response = requests.get(url, headers=self.headers)
            self.html_queue.put(response.content.decode())
            self.url_queue.task_done()

    def get_content_list(self):
        """Extract the data."""
        while True:
            html_str = self.html_queue.get()
            html = etree.HTML(html_str)
            li_list = html.xpath('//*[@id="content"]/div/div[2]/div/ul/li')
            content_list = []
            for li in li_list:
                item = {}
                item['user_name'] = li.xpath('./div/div/a[@class="recmd-user"]/span/text()')
                item['content'] = [i.strip() for i in li.xpath('./div/a[@class="recmd-content"]/text()')]
                if item['user_name'] or item['content']:
                    content_list.append(item)
            self.content_list_queue.put(content_list)
            self.html_queue.task_done()

    def save_content_list(self):
        """Save the data (here it is only printed)."""
        while True:
            content_list = self.content_list_queue.get()
            for content in content_list:
                print(content)
            self.content_list_queue.task_done()

    def run(self):
        """Main logic."""
        process_list = []
        # 1. Prepare the URLs
        t_url = Process(target=self.get_url_list)
        process_list.append(t_url)
        # 2. Send requests with five processes
        for _ in range(5):
            t_parse = Process(target=self.parse_url)
            process_list.append(t_parse)
        # 3. Extract the data
        t_content = Process(target=self.get_content_list)
        process_list.append(t_content)
        # 4. Save
        t_save = Process(target=self.save_content_list)
        process_list.append(t_save)

        for t in process_list:
            t.daemon = True  # make the child process a daemon process
            t.start()

        # Wait until every URL has been queued before joining the queues
        t_url.join()
        for q in [self.url_queue, self.html_queue, self.content_list_queue]:
            q.join()  # block the main process until this queue's counter is 0
        print("main process finished")


if __name__ == "__main__":
    t1 = time.time()
    qiushi = QiuBai()
    qiushi.run()
    print("total cost:", time.time() - t1)
```

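In the code above, the JoinableQueue provided by multiprocessing creates a joinable queue that is shared between processes. It works like multiprocessing.Queue, but it also lets the consumers of items notify the producers that an item has been processed successfully. This notification is implemented with a shared semaphore and condition variable. Accordingly, the queue supports task_done() and join() just like queue.Queue.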
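The counter behaviour of JoinableQueue can be checked in a single process before relying on it across processes. The sketch below only exercises `put()`, `get()`, `task_done()` and `join()` in the main process. In the crawler, the same calls are made from different processes.

```python
from multiprocessing import JoinableQueue

# No child processes are started here, so no `if __name__ == "__main__":` guard is needed.
q = JoinableQueue()
for i in range(3):
    q.put(i)                        # unfinished-task counter: +1 per put()

got = [q.get() for _ in range(3)]   # get() alone does not change the counter
for _ in got:
    q.task_done()                   # counter: -1 per processed item

q.join()                            # returns at once, because the counter is back to 0
print(got)                          # [0, 1, 2]
```

Calling `task_done()` more times than there were `put()` calls raises `ValueError`, so each item should be acknowledged exactly once.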

4. Summary:

- Import: from multiprocessing import Process
- Create a process: Process(target=self.get_url_list)
- Put into a queue: put
- Get from a queue: get
- Make it a daemon process: t.daemon=True
- Block the main process: q.join()
- For communication between processes, use: from multiprocessing import JoinableQueue as Queue

IV. Thread-pool crawler:

1. How to use a thread pool:

(1) Create a thread pool object:

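```python
from multiprocessing.dummy import Pool

pool = Pool(processes=3)  # if processes is omitted, the default is the number of CPUs

# Relevant lines from the Pool source code:
#     if processes is None:
#         processes = os.cpu_count() or 1
# How `or` works here: the value before `or` is used by default;
# if it is falsy (os.cpu_count() may return None), the value after `or` is used.
```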

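Here is a quick check of the `or` fallback and of the thread pool itself. `multiprocessing.dummy.Pool` has the same API as `multiprocessing.Pool` but runs tasks in threads. The `square` function is only a hypothetical placeholder task.

```python
import os
from multiprocessing.dummy import Pool  # same API as multiprocessing.Pool, backed by threads

# `a or b` evaluates to a if a is truthy, otherwise to b
print(None or 1)                    # 1: the fallback used when os.cpu_count() returns None
default_size = os.cpu_count() or 1
print(default_size)                 # the default pool size on this machine


def square(n):
    """Hypothetical placeholder task."""
    return n * n


with Pool(processes=3) as pool:     # 3 worker threads
    squares = pool.map(square, range(5))
print(squares)                      # [0, 1, 4, 9, 16]
```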
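(2) Combine sending the request, extracting the data and saving into one function, then hand it to the thread pool for asynchronous execution with pool.apply_async(func):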

```python
def exetute_requests_item_save(self):
    url = self.queue.get()
    html_str = self.parse_url(url)
    content_list = self.get_content_list(html_str)
    self.save_content_list(content_list)
    self.total_response_num += 1

# in another method of the class:
self.pool.apply_async(self.exetute_requests_item_save)
```
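`apply_async` does not wait for the task. It returns an `AsyncResult` object immediately, and the result can be collected later with `.get()`. In the minimal sketch below, `slow_double` is a hypothetical stand-in for one request-extract-save cycle.

```python
import time
from multiprocessing.dummy import Pool


def slow_double(n):
    """Hypothetical stand-in for a network request."""
    time.sleep(0.1)
    return n * 2


pool = Pool(processes=3)
async_result = pool.apply_async(slow_double, args=(21,))      # returns an AsyncResult at once
print("ready right after submitting:", async_result.ready())  # most likely False
value = async_result.get(timeout=5)  # blocks until the task has finished
print(value)  # 42
pool.close()  # no more tasks may be submitted
pool.join()   # wait for the worker threads to exit
```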

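(3) Add a callback:

apply_async runs a function asynchronously, but only once. To make it run repeatedly, pass a callback. Whenever the task finishes, _callback submits the same task again with itself as the callback, so the chain keeps going much like a recursive call. An exit condition is therefore needed to stop the chain.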

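```python
def _callback(self, temp):
    # temp receives the return value of the finished task (unused here)
    if self.is_running:
        self.pool.apply_async(self.exetute_requests_item_save, callback=self._callback)

# Start the first task; every time it finishes, _callback submits it again
self.pool.apply_async(self.exetute_requests_item_save, callback=self._callback)
```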

(4) Decide when the program ends:

The program can end once the number of responses received equals the number of URLs:

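```python
while True:
    time.sleep(0.0001)  # sleep briefly so the loop does not spin the CPU at 100%
    if self.total_response_num >= self.total_requests_num:
        self.is_running = False
        break

self.pool.close()  # close the pool: no new tasks can be submitted
# self.pool.join()  # wait for all worker threads to exit
```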
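Steps (2) to (4) can be combined into a small offline sketch of the callback-chain pattern. The class `CallbackChainDemo` and its "work" (multiplying by 10) are hypothetical stand-ins, not the author's crawler. Two choices here are assumptions added for safety. First, tasks use `get_nowait()`, so no pool thread blocks forever on an empty queue. Second, one lock guards the counters and `is_running`, so no callback can submit a task after the pool is closed.

```python
import threading
import time
from multiprocessing.dummy import Pool
from queue import Empty, Queue


class CallbackChainDemo:
    """Hypothetical offline demo of the apply_async + callback pattern."""

    def __init__(self, items, concurrency=2):
        self.queue = Queue()
        for item in items:
            self.queue.put(item)
        self.total_requests_num = len(items)
        self.total_response_num = 0
        self.results = []
        self.lock = threading.Lock()  # guards the counter, results and is_running
        self.pool = Pool(processes=4)
        self.concurrency = concurrency
        self.is_running = True

    def execute_item(self):
        try:
            item = self.queue.get_nowait()  # never block a pool thread
        except Empty:
            return
        result = item * 10  # hypothetical stand-in for request + extract + save
        with self.lock:
            self.results.append(result)
            self.total_response_num += 1

    def _callback(self, _):
        # Runs after each task finishes: re-submit while there is work left.
        with self.lock:
            if self.is_running and not self.queue.empty():
                self.pool.apply_async(self.execute_item, callback=self._callback)

    def run(self):
        for _ in range(self.concurrency):  # number of concurrent chains
            self.pool.apply_async(self.execute_item, callback=self._callback)
        while True:
            time.sleep(0.001)  # avoid a hot busy-wait loop
            with self.lock:
                if self.total_response_num >= self.total_requests_num:
                    self.is_running = False  # exit condition for the callbacks
                    break
        self.pool.close()  # safe: no callback can submit after the flag flip
        self.pool.join()   # wait for tasks that are still in flight
        return self.results


demo = CallbackChainDemo(range(13))
print(sorted(demo.run()))
```

The `concurrency` argument plays the same role as `range(2)` in the full implementation below: it sets how many chains run at the same time.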

2. Complete thread-pool crawler:

```python
# coding=utf-8
import threading
import time
from multiprocessing.dummy import Pool
from queue import Queue

import requests
from lxml import etree


class QiuBai:
    """Thread-pool Qiushibaike crawler"""

    def __init__(self):
        self.temp_url = "https://www.qiushibaike.com/8hr/page/{}"
        self.headers = {
            "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.139 Safari/537.36"}
        self.queue = Queue()
        self.pool = Pool(processes=6)
        self.is_running = True
        self.total_requests_num = 0
        self.total_response_num = 0
        self.lock = threading.Lock()  # several threads update total_response_num

    def get_url_list(self):
        print("preparing URLs")
        for i in range(1, 14):
            self.queue.put(self.temp_url.format(i))
            self.total_requests_num += 1

    def parse_url(self, url):
        """Send the request and return the decoded response body."""
        return requests.get(url, headers=self.headers).content.decode()

    def get_content_list(self, html_str):
        """Extract the data."""
        html = etree.HTML(html_str)
        li_list = html.xpath('//*[@id="content"]/div/div[2]/div/ul/li')
        content_list = []
        for li in li_list:
            item = {}
            item['user_name'] = li.xpath('./div/div/a[@class="recmd-user"]/span/text()')
            item['content'] = [i.strip() for i in li.xpath('./div/a[@class="recmd-content"]/text()')]
            if item['user_name'] or item['content']:
                content_list.append(item)
        return content_list

    def save_content_list(self, content_list):
        """Save the data (here it is only printed)."""
        for content in content_list:
            print(content)

    def exetute_requests_item_save(self):
        """One unit of work: request, extract, save."""
        url = self.queue.get()
        html_str = self.parse_url(url)
        content_list = self.get_content_list(html_str)
        self.save_content_list(content_list)
        with self.lock:
            self.total_response_num += 1

    def _callback(self, temp):
        if self.is_running:
            self.pool.apply_async(self.exetute_requests_item_save, callback=self._callback)

    def run(self):
        """Main logic."""
        self.get_url_list()
        for i in range(2):  # controls the concurrency
            self.pool.apply_async(self.exetute_requests_item_save, callback=self._callback)
        while True:  # keep the main thread alive until all responses are in
            time.sleep(0.0001)
            if self.total_response_num >= self.total_requests_num:
                self.is_running = False
                break


if __name__ == "__main__":
    t1 = time.time()
    qiushi = QiuBai()
    qiushi.run()
    print("total cost:", time.time() - t1)
```

3. Summary:

- Import the thread pool: from multiprocessing.dummy import Pool
- Create a thread pool: pool = Pool(processes=3)
- Submit a task asynchronously: pool.apply_async(func)
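The original does not use it, but the standard library also offers `concurrent.futures.ThreadPoolExecutor` (since Python 3.2), which many people find simpler than `multiprocessing.dummy` for thread pools. Below is a minimal sketch. `fake_crawl` is a hypothetical stand-in for `parse_url` + `get_content_list`, so no network access is needed.

```python
from concurrent.futures import ThreadPoolExecutor


def fake_crawl(url):
    """Hypothetical stand-in for parse_url + get_content_list."""
    return f"parsed {url}"


urls = [f"https://www.qiushibaike.com/8hr/page/{i}" for i in range(1, 14)]

# max_workers plays the role of Pool(processes=...); leaving the with-block
# waits for every submitted task, so no manual end condition is needed.
with ThreadPoolExecutor(max_workers=3) as executor:
    results = list(executor.map(fake_crawl, urls))  # results keep the input order

print(len(results), results[0])
```

Because `executor.map` returns results only after all tasks finish, no callback chain or counter is required. That also means there is no place to stream items to disk as they arrive unless you use `executor.submit` with `as_completed` instead.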

V. Coroutine-pool crawler:

1. Using the coroutine pool module:

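```python
from gevent import monkey
monkey.patch_all()  # patch blocking stdlib calls (sockets, time.sleep, ...) before importing requests etc.

from gevent.pool import Pool

pool = Pool(6)  # gevent's Pool takes a size: at most 6 greenlets run at once
```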

2. Complete coroutine-pool crawler:

```python
# coding=utf-8
from gevent import monkey
monkey.patch_all()  # must run before the other imports so that blocking calls become cooperative

import time
from queue import Queue

import requests
from gevent.pool import Pool
from lxml import etree


class QiuBai:
    """Coroutine-pool Qiushibaike crawler"""

    def __init__(self):
        self.temp_url = "https://www.qiushibaike.com/8hr/page/{}"
        self.headers = {
            "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.139 Safari/537.36"}
        self.queue = Queue()
        self.pool = Pool(6)  # gevent's Pool takes `size`, not `processes`
        self.total_requests_num = 0
        self.total_response_num = 0
        self.is_running = True

    def get_url_list(self):
        print("preparing URLs")
        for i in range(1, 14):
            self.queue.put(self.temp_url.format(i))
            self.total_requests_num += 1

    def parse_url(self, url):
        """Send the request and return the decoded response body."""
        return requests.get(url, headers=self.headers).content.decode()

    def get_content_list(self, html_str):
        """Extract the data."""
        html = etree.HTML(html_str)
        li_list = html.xpath('//*[@id="content"]/div/div[2]/div/ul/li')
        content_list = []
        for li in li_list:
            item = {}
            item['user_name'] = li.xpath('./div/div/a[@class="recmd-user"]/span/text()')
            item['content'] = [i.strip() for i in li.xpath('./div/a[@class="recmd-content"]/text()')]
            if item['user_name'] or item['content']:
                content_list.append(item)
        return content_list

    def save_content_list(self, content_list):
        """Save the data (here it is only printed)."""
        for content in content_list:
            print(content)

    def exetute_requests_item_save(self):
        """One unit of work: request, extract, save."""
        url = self.queue.get()
        html_str = self.parse_url(url)
        content_list = self.get_content_list(html_str)
        self.save_content_list(content_list)
        self.total_response_num += 1

    def _callback(self, temp):
        if self.is_running:
            self.pool.apply_async(self.exetute_requests_item_save, callback=self._callback)

    def run(self):
        """Main logic."""
        self.get_url_list()
        for i in range(2):  # controls the concurrency
            self.pool.apply_async(self.exetute_requests_item_save, callback=self._callback)
        while True:  # keep the main greenlet alive until all responses are in
            time.sleep(0.0001)  # patched sleep: also lets the other greenlets run
            if self.total_response_num >= self.total_requests_num:
                self.is_running = False
                break


if __name__ == "__main__":
    t1 = time.time()
    qiushi = QiuBai()
    qiushi.run()
    print("total cost:", time.time() - t1)
```

3. Summary

- Import the coroutine pool: from gevent.pool import Pool
- Submit a task asynchronously: pool.apply_async()
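The coroutine pool above relies on gevent. As a standard-library alternative, the same "at most N tasks at a time" idea can be sketched with `asyncio` and `asyncio.Semaphore`. This is a different technique from the author's gevent approach and is shown only for comparison. `fake_fetch` is a hypothetical stand-in that uses `asyncio.sleep` instead of a real HTTP request, since `requests` is not asyncio-aware.

```python
import asyncio


async def fake_fetch(url, sem, stats):
    """Hypothetical stand-in for an HTTP request; asyncio.sleep simulates I/O."""
    async with sem:  # at most `limit` coroutines are inside this block at once
        stats["running"] += 1
        stats["peak"] = max(stats["peak"], stats["running"])
        await asyncio.sleep(0.01)
        stats["running"] -= 1
        return f"parsed {url}"


async def crawl(urls, limit=6):
    sem = asyncio.Semaphore(limit)  # plays the role of the pool size
    stats = {"running": 0, "peak": 0}
    results = await asyncio.gather(*(fake_fetch(u, sem, stats) for u in urls))
    return results, stats["peak"]


urls = [f"https://www.qiushibaike.com/8hr/page/{i}" for i in range(1, 14)]
results, peak = asyncio.run(crawl(urls))
print(len(results), peak)
```

`asyncio.gather` returns the results in the order of `urls`. The semaphore caps concurrency much as the pool size does in `gevent.pool.Pool(6)`.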