用于CMFD的BusterNet神经网络介绍

BusterNet图像篡改检测介绍

- 文章说明

- 双分支结构

-

- Mani-Det branch:

-

- 大致流程

- mask decoder模块

-

- BN-Incep层

- 到这里,该分支的框架就介绍完毕了,基于tf2的代码实现如下:

- Simi-Det branch

- Fusion Modle

- 总结与后续说明:

文章说明

BusterNet是2018年,由 Wael Abd-Almageed等人提出的,针对CMFD问题的端到端的深度神经网络解决方案,本文对其结构和其python模型代码(基于TensorFlow2框架)进行说明和简单讲解,对其思路进行总结和简单扩展,对相关概念进行介绍,用于交流学习,给初学者入门提供借鉴。

- 原文《BusterNet: Detecting Copy-Move Image Forgery with Source/Target Localization》可知知网查到,作者也在github中提供了模型的原码。

下面直接进入正题:

双分支结构

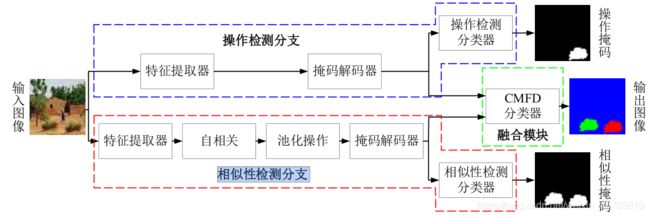

busternet的一大特点即是双分支结构,分为操作检测分支(Mani-Det branch)和相似性检测分支( Simi-Det branch),如下图所示(摘自busternet原论文,看不懂不要紧,下面我慢慢介绍):

中文版大致为(摘自学长毕设,在此再次对资源提供者表示感谢,我们总是站在巨人的肩膀上哈哈):

中文版的图示仅帮助简易理解,讲解介绍以原作者英文图示为主!!

Mani-Det branch:

大致流程

** 输入图像(256,256,3)**到CNN feature extractor,这里的特征提取器使用的是VGG16神经网络的前4块结构,输出(16,16,512)的特征信息,为了提高输出结果的分辨率采用mask decoder模块(该模块将在下面介绍),得到(256,256,6)的输出,最后通过Binary Classifier(一个3*3的2D卷积层,+sigmoid激活函数)得到(256,256,1)的输出结果,即为aux1;

mask decoder模块

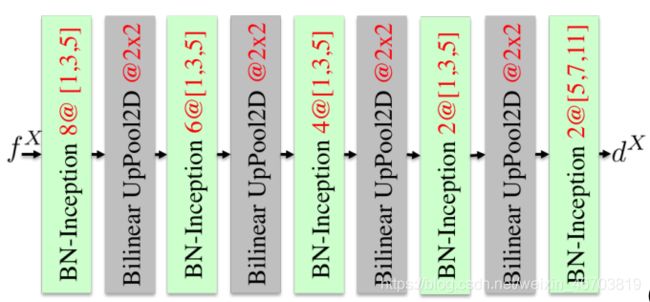

该模块是由BN-Inception与BilinerUpPool2D交替组成,如图:

BN-Incep层进行BN标准化操作,BilinerUpPool2D(2*2的核)作扩展操作,每次对宽和高的分辨率提高一倍,4个则提高16倍,即16 *16=256输出;

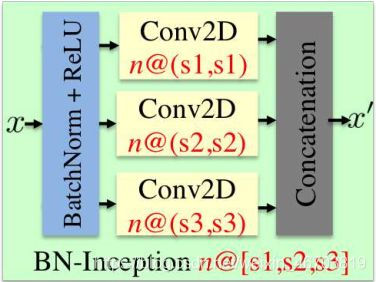

BN-Incep层

如下所示:可见是先BN操作,在并行经过3个卷积层后进行con-cat连接操作。(图中n=8、6、4、2、2,可见特征维度也不断下降,s1和s2和s3先为(1,3,5)最后一次为5,7,11,具体为什么这样设置作者也没说清楚,经验吧,有懂得大佬可以评论或者发私信给我哦)

python代码实现如下:

def BnInception(x, nb_inc=16, inc_filt_list=[(1,1), (3,3), (5,5)], name='uinc') :

'''Basic Google inception module with batch normalization

Input:

x = tensor4d, (n_samples, n_rows, n_cols, n_feats)

nb_inc = int, number of filters in individual Conv2D

inc_filt_list = list of kernel sizes, individual Conv2D kernel size

name = str, name of module

Output:

xn = tensor4d, (n_samples, n_rows, n_cols, n_new_feats)

'''

uc_list = []

for idx, ftuple in enumerate( inc_filt_list ) :

uc = Conv2D( nb_inc, ftuple, activation='linear', padding='same', name=name+'_c%d' % idx)(x)

uc_list.append(uc)

if ( len( uc_list ) > 1 ) :

uc_merge = Concatenate( axis=-1, name=name+'_merge')(uc_list)

else :

uc_merge = uc_list[0]

uc_norm = BatchNormalization(name=name+'_bn')(uc_merge)

xn = Activation('relu', name=name+'_re')(uc_norm)

return xn

到这里,该分支的框架就介绍完毕了,基于tf2的代码实现如下:

def create_cmfd_manipulation_branch( img_shape=(256,256,3),

name='maniDet' ) :

'''Create the manipulation branch for copy-move forgery detection

'''

#---------------------------------------------------------

# Input

#---------------------------------------------------------

img_input = Input( shape = img_shape, name = name+'_in' )

#---------------------------------------------------------

# VGG16 Conv Featex

#---------------------------------------------------------

bname = name + '_cnn'

# Block 1

x1 = Conv2D(64, (3, 3), activation='relu', padding='same', name=bname+'_b1c1')(img_input)

x1 = Conv2D(64, (3, 3), activation='relu', padding='same', name=bname+'_b1c2')(x1)

x1 = MaxPooling2D((2, 2), strides=(2, 2), name=bname+'_b1p')(x1)

# Block 2

x2 = Conv2D(128, (3, 3), activation='relu', padding='same', name=bname+'_b2c1')(x1)

x2 = Conv2D(128, (3, 3), activation='relu', padding='same', name=bname+'_b2c2')(x2)

x2 = MaxPooling2D((2, 2), strides=(2, 2), name=bname+'_b2p')(x2)

# Block 3

x3 = Conv2D(256, (3, 3), activation='relu', padding='same', name=bname+'_b3c1')(x2)

x3 = Conv2D(256, (3, 3), activation='relu', padding='same', name=bname+'_b3c2')(x3)

x3 = Conv2D(256, (3, 3), activation='relu', padding='same', name=bname+'_b3c3')(x3)

x3 = MaxPooling2D((2, 2), strides=(2, 2), name=bname+'_b3p')(x3)

# Block 4

x4 = Conv2D(512, (3, 3), activation='relu', padding='same', name=bname+'_b4c1')(x3)

x4 = Conv2D(512, (3, 3), activation='relu', padding='same', name=bname+'_b4c2')(x4)

x4 = Conv2D(512, (3, 3), activation='relu', padding='same', name=bname+'_b4c3')(x4)

x4 = MaxPooling2D((2, 2), strides=(2, 2), name=bname+'_b4p')(x4)

#---------------------------------------------------------

# Deconvolution Network

#---------------------------------------------------------

patch_list = [(1,1),(3,3),(5,5)]

bname = name + '_dconv'

# MultiPatch Featex

f16 = BnInception( x4, 8, patch_list, name =bname+'_mpf')

# Deconv x2

f32 = BilinearUpSampling2D(name=bname+'_bx2')( f16 )

dx32 = BnInception( f32, 6, patch_list, name=bname+'_dx2')

# Deconv x4

f64 = BilinearUpSampling2D(name=bname+'_bx4')( dx32 )

dx64 = BnInception( f64, 4, patch_list, name=bname+'_dx4')

# Deconv x8

f128 = BilinearUpSampling2D(name=bname+'_bx8')( dx64 )

dx128 = BnInception( f128, 2, patch_list, name=bname+'_dx8')

# Deconv x16

f256 = BilinearUpSampling2D(name=bname+'_bx16')( dx128 )

dx256 = BnInception( f256, 2, [(5,5),(7,7),(11,11)], name=bname+'_dx16')

#---------------------------------------------------------

# Output for Auxiliary Task

#---------------------------------------------------------

pred_mask = Conv2D(1, (3,3), activation='sigmoid', name=bname+'_pred_mask', padding='same')(dx256)

#---------------------------------------------------------

# End to End

#---------------------------------------------------------

model = Model(inputs=img_input, outputs=pred_mask, name = bname)

return model

Simi-Det branch

- **input(256,256,3)**进行CNN特征提取(也是VGG16的前四块)输出特征信息(16,16,512),

- 在进行自相关操作(皮尔逊相关性)得到(16,16,256)的自相关信息【这里16*16代表每个特征点,每个特征点有256个相关信息(即每一个点与其它点的相关性,16 * 16=256)】;

- ☆☆再经过Percentile Pool进行统计信息提取,该层对自相关信息进行了固定长度的有序化处理,作者说是便于后续DNN,既克服了图片分辨率不同而导致的维度变化的不确定性,也起到了对输出固定维度的作用。得到(16,16,100)的输出,其中100可理解为你想固定的排序长度,这里其实对之前的256维信息进行了压缩,因为我们其实只需要让网络找到突变点即可,举个例子:

一个特征数列为【1,1,1,2,3,4,4,5,5,9,9,10,10,11】,我们对其排序信息进行压缩为【1,2,3,4,5,9,10,11】可以更快的找到突变信息,即这里的5到9的突变位置,这是我们所关心的。 - 然后就是MaskDecoder操作和BinaryClassifier操作,和上一分支类似。

Fusion Modle

融合模块综合考虑两个分支的输出的特征,输出最终能够区分目标区域和源区域的掩码。-需要说明的是,两个分支输入到融合模块的特征并不是它们各自二进制分类器输出的二进制掩码,而是前一个的掩码解码器输出的特征。-两个分支的二进制分类器只在分支训练时激活,不参与融合模块的最终输出。融合模块的具体工作是,将两个分支的输出特征叠接,再用 BN-Inception 模型融合,最后通过一个三层的 3 *3 的卷积滤波器输出最终的彩色掩码(256,256,3)。

总结与后续说明:

下一步会介绍对原论文模型的experiments验证,因为原论文没有给相关代码,所以还是很值得分享的,在下一篇也会介绍机器学习相关的几个性能指标及计算方法及编程实现,我们下一篇见哦!!