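Object-Oriented Python: Text Analysis and Data Visualization of Character Relationships and Appearance Frequency in Romance of the Three Kingdoms, Water Margin, and Dream of the Red Chamber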

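This post uses Python to analyze text and visualize the results. It relies mainly on the jieba, wordcloud, matplotlib, networkx, and pandas libraries; the other libraries appear in the code.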

1. First, prepare the text of the three novels.

2. Prepare a stop-word list.

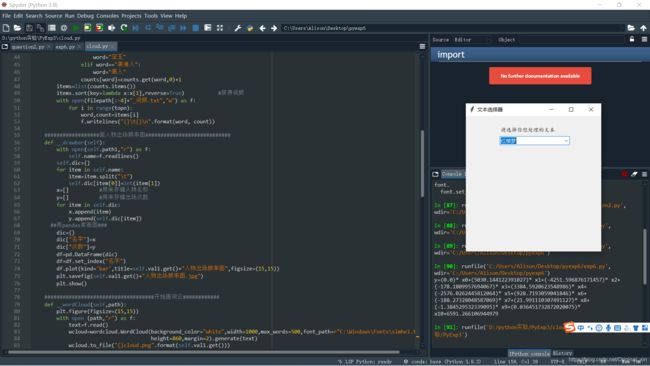
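As a rough sketch of how the stop-word list is used, the snippet below loads it into a set and filters a token list. It assumes the file holds one stop word per line, which is how the program below reads it; `load_stopwords` and `filter_tokens` are hypothetical helper names, not part of the original program.

```python
def load_stopwords(path, encoding="utf-8"):
    """Return the stop words in `path` as a set (assumes one word per line)."""
    with open(path, "r", encoding=encoding) as f:
        # A set makes each `word in stopwords` check constant time.
        return {line.strip() for line in f if line.strip()}


def filter_tokens(tokens, stopwords):
    """Drop single-character tokens and stop words, as the main program does."""
    return [t for t in tokens if len(t) > 1 and t not in stopwords]
```

In the full program the tokens would come from `jieba.lcut(text)`; any list of strings works for trying the helpers out.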

The code is as follows:

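import jieba
import wordcloud
import matplotlib
import matplotlib.pyplot as plt
import networkx as nx
import pandas as pd
import tkinter as tk
import tkinter.ttk as ttk

# Use the SimHei font so that Chinese labels render correctly in matplotlib.
matplotlib.rcParams['font.sans-serif'] = ['SimHei']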

class Text:

    ################### Word frequency ###################

    # Read the full text of the selected novel.
    def __getText(self):
        with open(self.path, "r", encoding="UTF-8") as f:
            self.__text = f.read()

    # Load the stop words (one per line) into a set for fast lookup.
    def __stopwordslist(self):
        with open(self.stoppath, "r", encoding="UTF-8") as f:
            self.stopwords = {line.strip() for line in f}

    # Count word frequencies and write the topn most frequent words to a file.
    def __wordFreq(self, filepath, topn, text):
        words = jieba.lcut(text.strip())
        counts = {}
        self.__stopwordslist()
        for word in words:
            if len(word) == 1:  # skip single-character tokens
                continue
            elif word not in self.stopwords:
                # Merge different names for the same character.
                if word == "凤姐儿":
                    word = "凤姐"
                elif word == "林黛玉" or word == "林妹妹" or word == "黛玉笑":
                    word = "黛玉"
                elif word == "宝二爷":
                    word = "宝玉"
                elif word == "袭道人":
                    word = "袭人"
                counts[word] = counts.get(word, 0) + 1
        items = list(counts.items())
        items.sort(key=lambda x: x[1], reverse=True)  # sort by frequency, descending
        with open(filepath[:-4] + "_词频.txt", "w") as f:
            # Slicing avoids an IndexError when fewer than topn words remain.
            for word, count in items[:topn]:
                f.write("{}\t{}\n".format(word, count))

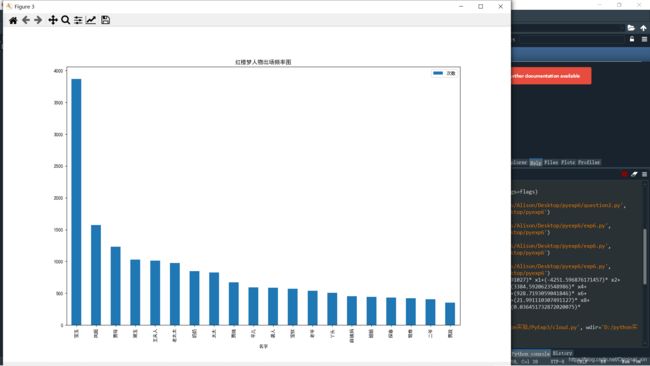

    ################### Bar chart of character appearance frequency ###################

    def __drawbar(self):
        # Each line of the frequency file has the form "name<TAB>count".
        with open(self.path1, "r") as f:
            self.name = f.readlines()
        self.dic = {}
        for item in self.name:
            item = item.split("\t")
            self.dic[item[0]] = int(item[1])
        x = list(self.dic.keys())    # character names
        y = list(self.dic.values())  # number of appearances
        # Plot with pandas.
        df = pd.DataFrame({"名字": x, "次数": y})
        df = df.set_index("名字")
        df.plot(kind='bar', title=self.val1.get() + "人物出场频率图", figsize=(15, 15))
        plt.savefig(self.val1.get() + "人物出场频率图.jpg")
        plt.show()

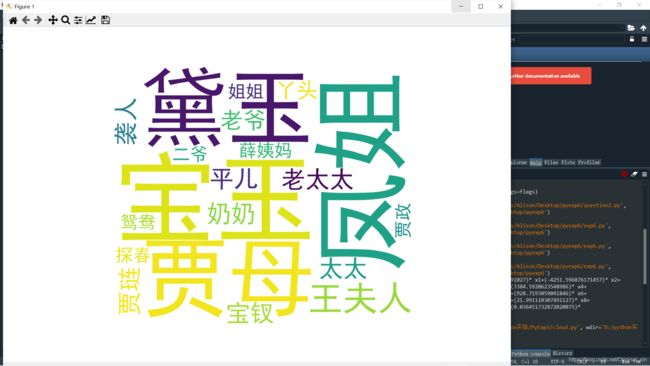

    ################### Word cloud ###################

    def __wordCloud(self, path):
        plt.figure(figsize=(15, 15))
        with open(path, "r") as f:
            text = f.read()
        # font_path must point to a font that contains Chinese glyphs.
        wcloud = wordcloud.WordCloud(background_color="white", width=1000, height=860,
                                     max_words=500, margin=2,
                                     font_path=r"C:\Windows\Fonts\simhei.ttf").generate(text)
        wcloud.to_file("{}cloud.png".format(self.val1.get()))
        plt.imshow(wcloud)
        plt.axis('off')
        plt.show()

    ################### Find character relationships in the text ###################

    def __getrelations(self):
        if self.val1.get() == '红楼梦':
            self.Names = ["宝玉", "凤姐", "贾母", "王夫人", "老太太", "袭人", "贾琏", "平儿", "宝钗", "薛姨妈",
                          "探春", "鸳鸯", "贾政", "晴雯", "湘云", "刘姥姥", "邢夫人", "贾珍", "紫鹃", "香菱",
                          "尤氏", "薛蟠", "贾赦"]
        elif self.val1.get() == '水浒传':
            self.Names = ["宋江", "李逵", "吴用", "公孙胜", "关胜", "林冲", "秦明", "呼延", "花荣", "柴进",
                          "燕青", "朱仝", "鲁智深", "武松", "董平", "李俊", "卢俊义", "晁盖", "戴宗"]
        elif self.val1.get() == "三国演义":
            self.Names = ["刘备", "刘禅", "关公", "张飞", "赵云", "诸葛亮", "徐庶", "马良", "黄忠", "玄德", "曹丕", "孙权",
                          "司马懿", "周瑜", "孔明", "吕布", "袁绍", "马超", "魏延", "姜维", "马岱", "庞德"]
        with open(self.path, 'r', encoding="UTF-8") as f:
            s = f.read()
        self.relations = {}
        self.lst_para = s.split('\n')  # split the text into paragraphs
        # Two characters are related if they appear in the same paragraph.
        # Each pair is stored under one key only, (name1, name2) or (name2, name1).
        for text in self.lst_para:
            for name1 in self.Names:
                if name1 in text:
                    for name2 in self.Names:
                        if name2 in text and name1 != name2 and (name2, name1) not in self.relations:
                            self.relations[(name1, name2)] = self.relations.get((name1, name2), 0) + 1
        self.maxRela = max(self.relations.values())  # largest co-occurrence count
        # Normalize the counts to the range (0, 1].
        self.relations = {k: v / self.maxRela for k, v in self.relations.items()}

    ################### Draw the character relationship graph ###################

    def __getmap(self):
        plt.figure(figsize=(15, 15))
        self.G = nx.Graph()
        # Add one weighted edge to G for every pair in relations.
        for k, v in self.relations.items():
            self.G.add_edge(k[0], k[1], weight=v)
        # Edges with weight greater than 0.6.
        self.elarge = [(u, v) for (u, v, d) in self.G.edges(data=True) if d['weight'] > 0.6]
        # Edges with weight greater than 0.3 and at most 0.6.
        self.emidle = [(u, v) for (u, v, d) in self.G.edges(data=True) if 0.3 < d['weight'] <= 0.6]
        # Edges with weight of at most 0.3.
        self.esmall = [(u, v) for (u, v, d) in self.G.edges(data=True) if d['weight'] <= 0.3]
        # Lay the nodes out on a circle.
        self.pos = nx.circular_layout(self.G)
        nx.draw_networkx_nodes(self.G, self.pos, alpha=0.6, node_size=800)
        # alpha is the opacity, width is the line width of the edges.
        nx.draw_networkx_edges(self.G, self.pos, edgelist=self.elarge, width=2.5, alpha=0.9, edge_color='g')
        nx.draw_networkx_edges(self.G, self.pos, edgelist=self.emidle, width=1.5, alpha=0.6, edge_color='y')
        nx.draw_networkx_edges(self.G, self.pos, edgelist=self.esmall, width=1, alpha=0.2, edge_color='b', style='dashed')
        nx.draw_networkx_labels(self.G, self.pos, font_size=12)
        plt.axis('off')
        plt.title("{}主要人物关系网络".format(self.val1.get()))
        plt.show()

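    ################### Call the methods above ###################

    # Event handler: runs the whole pipeline for the selected novel.
    def __getvar(self, event):
        self.path = self.val1.get() + ".txt"          # path of the novel's text
        self.__getText()                              # read the text file
        self.__wordFreq(self.path, 20, self.__text)   # write the 20 most frequent words
        self.path1 = self.val1.get() + '_词频.txt'     # path of the frequency file
        self.__wordCloud(self.path1)                  # draw the word cloud
        self.__getrelations()                         # extract character relationships
        self.__getmap()                               # draw the relationship graph
        self.__drawbar()                              # draw the frequency bar chart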

    ################### Constructor: builds the GUI ###################

    def __init__(self):
        self.window = tk.Tk()
        self.window.geometry("400x400")
        self.window.title("文本选择器")
        self.txt = ["红楼梦", "水浒传", "三国演义"]
        self.val1 = tk.StringVar()
        self.cb = ttk.Combobox(self.window, textvariable=self.val1)
        self.cb['values'] = self.txt
        self.cb.place(x=100, y=60)
        # Run the analysis whenever the user picks an entry from the drop-down list.
        self.cb.bind("<<ComboboxSelected>>", self.__getvar)
        self.stoppath = "C:/Users/Alison/Desktop/PyExp3/stop_words.txt"  # path of the stop-word file
        # place() returns None, so create the label first and position it afterwards.
        self.lab1 = tk.Label(self.window, text="请选择你想处理的文本", font="楷体")
        self.lab1.place(x=100, y=30)
        self.window.mainloop()

def main():
    txt = Text()

if __name__ == "__main__":
    main()

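The results are as follows:

Because the program has to analyze the full text, count word frequencies, and draw the word cloud and the other figures, it runs rather slowly. This is something I have not improved yet, so suggestions are very welcome!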
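One possible way to trim the relationship step, offered only as a sketch: find the names present in each paragraph once, then count every unordered pair with `itertools.combinations`, instead of scanning the paragraph again for each pair of names. The helper names `cooccurrence` and `normalize` are hypothetical and not part of the original program; the counting rule (one count per paragraph in which both names appear) is meant to match the nested loops above.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(paragraphs, names):
    """Count, for each pair of names, the paragraphs in which both appear."""
    names = list(dict.fromkeys(names))  # drop duplicate names, keep order
    counts = Counter()
    for para in paragraphs:
        # One substring scan per name, instead of one per pair of names.
        present = [n for n in names if n in para]
        # combinations() yields each unordered pair exactly once.
        for a, b in combinations(present, 2):
            counts[(a, b)] += 1
    return counts


def normalize(counts):
    """Scale the counts so that the largest becomes 1.0."""
    if not counts:
        return {}
    top = max(counts.values())
    return {pair: c / top for pair, c in counts.items()}
```

For example, `normalize(cooccurrence(text.split("\n"), names))` would produce a dictionary in the same shape as `self.relations`. Whether this noticeably shortens the total run time is untested here; word segmentation and plotting may well dominate.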