Chapter 10 Artificial Neural Networks with Keras

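Contents

- Chapter 10 Artificial Neural Networks with Keras

- 10.2.5 Building Dynamic Models with the Subclassing API

- 10.2.6 Saving and Restoring a Model

- 10.2.7 Using Callbacks During Training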

10.2.5 Building Dynamic Models with the Subclassing API

# Print version information

import sys, time

import sklearn

import tensorflow

from tensorflow import keras

print(f"time: {time.strftime('%Y-%m-%d %H:%M:%S', time.localtime())}")

print(f"python version: {sys.version.split(' ')[0]}")

print(f"sklearn version: {sklearn.__version__}")

print(f"tensorflow version: {tensorflow.__version__}")

print(f"keras version: {keras.__version__}")

time: 2021-02-14 18:22:59

python version: 3.8.6

sklearn version: 0.24.1

tensorflow version: 2.4.1

keras version: 2.4.0

# Load the California housing dataset

from sklearn.datasets import fetch_california_housing

housing = fetch_california_housing()

# Split the dataset into training, validation, and test sets

from sklearn.model_selection import train_test_split

X_train_full, X_test, y_train_full, y_test = train_test_split(housing.data, housing.target)

X_train_full.shape, X_test.shape

((15480, 8), (5160, 8))

X_train, X_valid, y_train, y_valid = train_test_split(X_train_full, y_train_full)
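Neither `train_test_split` call passes `test_size`, so each split keeps scikit-learn's default of 25% for the held-out part. The arithmetic below is a sketch that assumes that default (`test_size=0.25`, test size rounded up, train size rounded down) and Keras's default `batch_size=32`; it reproduces the shapes printed above and the `363/363` and `162/162` step counts that appear in the training and evaluation logs. The helper names `split_sizes` and `steps_per_epoch` are hypothetical.

```python
import math

def split_sizes(n_samples, test_size=0.25):
    """Hypothetical helper mimicking how train_test_split sizes a float test_size split."""
    n_test = math.ceil(test_size * n_samples)         # test part is rounded up
    n_train = math.floor((1 - test_size) * n_samples) # train part is rounded down
    return n_train, n_test

def steps_per_epoch(n_samples, batch_size=32):
    # The last, partial batch is still processed, so round up
    return math.ceil(n_samples / batch_size)

n_total = 20640                                  # samples in the California housing dataset
n_train_full, n_test = split_sizes(n_total)      # first split
n_train, n_valid = split_sizes(n_train_full)     # second split of the training part

print(n_train_full, n_test)
print(n_train, n_valid)
print(steps_per_epoch(n_train), steps_per_epoch(n_test))
```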

# Standardize the features: shift to zero mean and scale to unit variance

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

X_train = scaler.fit_transform(X_train)

X_valid = scaler.transform(X_valid)

X_test = scaler.transform(X_test)

X_train_A, X_train_B = X_train[:, :5], X_train[:, 2:8]

X_valid_A, X_valid_B = X_valid[:, :5], X_valid[:, 2:8]

X_test_A, X_test_B = X_test[:, :5], X_test[:, 2:8]
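Two details of the cell above are easy to miss: the scaler's mean and standard deviation are computed on the training set only (`fit_transform`) and then reused for the validation and test sets (`transform`), and the two model inputs overlap, since input A takes feature columns 0 to 4 and input B takes columns 2 to 7. The sketch below shows both in plain Python; the one-column numbers are illustrative values, not taken from the dataset, and it assumes, as `StandardScaler` does, the population standard deviation (ddof=0).

```python
import statistics

# Illustrative single-feature data (not from the dataset)
train_col = [1.0, 2.0, 3.0, 4.0, 5.0]
valid_col = [2.0, 6.0]

# "fit": statistics come from the training data only (population std, ddof=0)
mean = statistics.fmean(train_col)
std = statistics.pstdev(train_col)

def transform(col):
    return [(x - mean) / std for x in col]

train_scaled = transform(train_col)
valid_scaled = transform(valid_col)   # reuses the training mean and std

# Column split used above: input A = features 0-4, input B = features 2-7
feature_names = ["MedInc", "HouseAge", "AveRooms", "AveBedrms",
                 "Population", "AveOccup", "Latitude", "Longitude"]
wide_A = feature_names[:5]
deep_B = feature_names[2:8]
print(wide_A)
print(deep_B)
print(sorted(set(wide_A) & set(deep_B)))  # features fed to both inputs
```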

# Subclass keras.Model and override the __init__ and call methods

class WideAndDeepModel(keras.Model):
    def __init__(self, units=30, activation="relu", **kwargs):
        super().__init__(**kwargs)  # handles standard arguments such as name
        self.hidden1 = keras.layers.Dense(units, activation=activation)
        self.hidden2 = keras.layers.Dense(units, activation=activation)
        # A Model object already has an `output` attribute, which cannot be
        # overwritten, so the output layers are named main_output and aux_output
        self.main_output = keras.layers.Dense(1)
        self.aux_output = keras.layers.Dense(1)

    def call(self, inputs):
        input_A, input_B = inputs              # wide input, deep input
        hidden1 = self.hidden1(input_B)
        hidden2 = self.hidden2(hidden1)
        concat = keras.layers.concatenate([input_A, hidden2])
        main_output = self.main_output(concat)
        aux_output = self.aux_output(hidden2)  # auxiliary output from the deep path only
        return main_output, aux_output

model = WideAndDeepModel()
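Because `call()` is ordinary Python, its data flow can be read line by line, and it could just as well contain loops or `if` statements, which is what makes subclassed models "dynamic". The sketch below re-implements the same forward pass without Keras, using hypothetical helpers (`relu`, `dense`, `wide_and_deep_call`) and tiny hand-picked weights so the two returned outputs are easy to check by hand. It illustrates the computation only; it is not how Keras implements `Dense`.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def dense(x, weights, bias):
    # weights[i][j] is the weight from input i to output unit j
    return [sum(x[i] * weights[i][j] for i in range(len(x))) + bias[j]
            for j in range(len(bias))]

def wide_and_deep_call(input_A, input_B, params):
    """Mirror of WideAndDeepModel.call: deep path on B, concat with A, two heads."""
    hidden1 = relu(dense(input_B, *params["hidden1"]))
    hidden2 = relu(dense(hidden1, *params["hidden2"]))
    concat = input_A + hidden2                  # list concatenation plays the role of concatenate()
    main_output = dense(concat, *params["main_output"])
    aux_output = dense(hidden2, *params["aux_output"])
    return main_output, aux_output

# Hand-picked (weights, bias) pairs: identity hidden layers, summing output heads
params = {
    "hidden1": ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),
    "hidden2": ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),
    "main_output": ([[1.0], [1.0], [1.0], [1.0]], [0.0]),
    "aux_output": ([[1.0], [1.0]], [0.5]),
}
main, aux = wide_and_deep_call([1.0, 2.0], [3.0, -4.0], params)
print(main, aux)
```

Here ReLU zeroes the negative feature of input B, the main head sums input A with the deep features, and the auxiliary head sees only the deep features.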

# Compile the model: one MSE loss per output, weighted 0.9 (main) and 0.1 (auxiliary)

model.compile(loss="mse", loss_weights=[0.9, 0.1],
              optimizer=keras.optimizers.SGD(learning_rate=1e-3))

# Train the model (each output gets its own copy of the targets)

history = model.fit([X_train_A, X_train_B], [y_train, y_train],

epochs=20,

validation_data=([X_valid_A, X_valid_B], [y_valid, y_valid]))

Epoch 1/20

363/363 [==============================] - 2s 4ms/step - loss: 3.0409 - output_1_loss: 2.8772 - output_2_loss: 4.5144 - val_loss: 1.1048 - val_output_1_loss: 0.8933 - val_output_2_loss: 3.0085

Epoch 2/20

363/363 [==============================] - 1s 2ms/step - loss: 1.0514 - output_1_loss: 0.8602 - output_2_loss: 2.7720 - val_loss: 0.8614 - val_output_1_loss: 0.7490 - val_output_2_loss: 1.8729

Epoch 3/20

363/363 [==============================] - 1s 2ms/step - loss: 0.8523 - output_1_loss: 0.7476 - output_2_loss: 1.7945 - val_loss: 0.7644 - val_output_1_loss: 0.6872 - val_output_2_loss: 1.4589

Epoch 4/20

363/363 [==============================] - 1s 3ms/step - loss: 0.7669 - output_1_loss: 0.6899 - output_2_loss: 1.4597 - val_loss: 0.7120 - val_output_1_loss: 0.6462 - val_output_2_loss: 1.3041

Epoch 5/20

363/363 [==============================] - 1s 2ms/step - loss: 0.6953 - output_1_loss: 0.6266 - output_2_loss: 1.3131 - val_loss: 0.6779 - val_output_1_loss: 0.6154 - val_output_2_loss: 1.2399

Epoch 6/20

363/363 [==============================] - 1s 2ms/step - loss: 0.6776 - output_1_loss: 0.6099 - output_2_loss: 1.2869 - val_loss: 0.6519 - val_output_1_loss: 0.5908 - val_output_2_loss: 1.2020

Epoch 7/20

363/363 [==============================] - 1s 2ms/step - loss: 0.6376 - output_1_loss: 0.5725 - output_2_loss: 1.2243 - val_loss: 0.6320 - val_output_1_loss: 0.5719 - val_output_2_loss: 1.1729

......

Epoch 18/20

363/363 [==============================] - 1s 2ms/step - loss: 0.5389 - output_1_loss: 0.4894 - output_2_loss: 0.9848 - val_loss: 0.5397 - val_output_1_loss: 0.4922 - val_output_2_loss: 0.9673

Epoch 19/20

363/363 [==============================] - 1s 2ms/step - loss: 0.5459 - output_1_loss: 0.4958 - output_2_loss: 0.9968 - val_loss: 0.5352 - val_output_1_loss: 0.4887 - val_output_2_loss: 0.9539

Epoch 20/20

363/363 [==============================] - 1s 2ms/step - loss: 0.5520 - output_1_loss: 0.5046 - output_2_loss: 0.9791 - val_loss: 0.5319 - val_output_1_loss: 0.4864 - val_output_2_loss: 0.9412

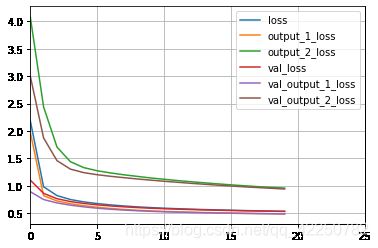

# Plot the learning curves

import matplotlib.pyplot as plt

import pandas as pd

pd.DataFrame(history.history).plot()

plt.gca().set_xlim(0, 25)

plt.grid(True)

plt.show()
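`history.history` is a plain dict that maps each metric name (`loss`, `output_1_loss`, `val_loss`, ...) to a list with one value per epoch; `pd.DataFrame` turns every key into a column, which is why `plot()` draws one curve per metric. A sketch of reading the dict directly, using a dict built by hand from the first seven epochs of the log above (only two of the real keys, values rounded as printed); `best_epoch` is a hypothetical helper:

```python
# First seven epochs of the training log above (rounded as printed)
history_dict = {
    "loss":     [3.0409, 1.0514, 0.8523, 0.7669, 0.6953, 0.6776, 0.6376],
    "val_loss": [1.1048, 0.8614, 0.7644, 0.7120, 0.6779, 0.6519, 0.6320],
}

def best_epoch(history, metric="val_loss"):
    """Return (1-based epoch, value) of the lowest value of `metric`."""
    values = history[metric]
    idx = min(range(len(values)), key=values.__getitem__)
    return idx + 1, values[idx]   # Keras numbers epochs from 1

print(best_epoch(history_dict))
```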

# Evaluate the model: returns the weighted total loss followed by each output's loss

total_loss, main_loss, aux_loss = model.evaluate([X_test_A, X_test_B],
                                                 [y_test, y_test])

total_loss, main_loss, aux_loss

162/162 [==============================] - 0s 1ms/step - loss: 0.5262 - output_1_loss: 0.4818 - output_2_loss: 0.9257

(0.5261973738670349, 0.4818061888217926, 0.9257184267044067)
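The first value returned by `evaluate()` is the weighted sum of the per-output losses, using the `loss_weights=[0.9, 0.1]` passed to `compile()`; the log labels the two heads `output_1` and `output_2`. Recomputing it from the printed numbers confirms this (they agree to about seven decimal places; Keras computes in float32). The same relation holds in the training log, for example in epoch 1.

```python
loss_weights = [0.9, 0.1]             # as passed to model.compile

# Test-set values copied from the evaluate() output above
test_main_loss = 0.4818061888217926  # output_1_loss
test_aux_loss = 0.9257184267044067   # output_2_loss
reported_total = 0.5261973738670349  # loss

weighted_total = loss_weights[0] * test_main_loss + loss_weights[1] * test_aux_loss
print(weighted_total)

# Epoch 1 of training, values rounded to 4 decimals in the log
epoch1_total = loss_weights[0] * 2.8772 + loss_weights[1] * 4.5144
print(epoch1_total)   # compare with "loss: 3.0409"
```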

# Make predictions with the model (the auxiliary output is discarded)

X_new = X_test[:3]

y_new = y_test[:3]

X_new_A, X_new_B = X_new[:, :5], X_new[:, 2:8]

y_pred_main, _ = model.predict([X_new_A, X_new_B])

print("y_pred_main: ", y_pred_main)

print("y_new: ", y_new)

y_pred_main: [[3.2975657]

[1.542799 ]

[3.1939414]]

y_new: [5.00001 1.176 2.7 ]
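The targets are median house values in units of $100,000, and 5.00001 is the largest target value in this dataset, where prices are capped, so the biggest miss here falls on a capped sample. A short sketch that measures the per-sample errors of the three main-output predictions printed above:

```python
# Values copied from the printed predictions and targets above
y_pred_main = [3.2975657, 1.542799, 3.1939414]
y_new = [5.00001, 1.176, 2.7]

abs_errors = [abs(p - t) for p, t in zip(y_pred_main, y_new)]
mse_3 = sum(e ** 2 for e in abs_errors) / len(abs_errors)  # MSE over just these 3 samples

print([round(e, 4) for e in abs_errors])
print(round(mse_3, 4))
```

Three samples are far too few to judge the model; the test-set MSE from `evaluate()` is the meaningful figure.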

10.2.6 Saving and Restoring a Model

# Build the model

model = keras.models.Sequential([

keras.layers.Dense(30, activation="relu", input_shape=[X_train.shape[1]]),

keras.layers.Dense(30, activation="relu"),

keras.layers.Dense(1)

])

# Compile the model

model.compile(loss="mse", optimizer=keras.optimizers.SGD(learning_rate=1e-3))

# Train the model

history = model.fit(X_train, y_train, epochs=10,

validation_data=(X_valid, y_valid))

# Evaluate the model

mse_test = model.evaluate(X_test, y_test)

print("mse_test: ", mse_test)

Epoch 1/10

363/363 [==============================] - 1s 3ms/step - loss: 3.2555 - val_loss: 0.8640

Epoch 2/10

363/363 [==============================] - 1s 2ms/step - loss: 0.8450 - val_loss: 0.7112

Epoch 3/10

363/363 [==============================] - 1s 2ms/step - loss: 0.7088 - val_loss: 0.6526

Epoch 4/10

363/363 [==============================] - 1s 2ms/step - loss: 0.6903 - val_loss: 0.6138

Epoch 5/10

363/363 [==============================] - 1s 2ms/step - loss: 0.6188 - val_loss: 0.5863

Epoch 6/10

363/363 [==============================] - 1s 2ms/step - loss: 0.5757 - val_loss: 0.5633

Epoch 7/10

363/363 [==============================] - 1s 2ms/step - loss: 0.5636 - val_loss: 0.5453

Epoch 8/10

363/363 [==============================] - 1s 2ms/step - loss: 0.5557 - val_loss: 0.5307

Epoch 9/10

363/363 [==============================] - 1s 2ms/step - loss: 0.5313 - val_loss: 0.5172

Epoch 10/10

363/363 [==============================] - 1s 3ms/step - loss: 0.5174 - val_loss: 0.5071

162/162 [==============================] - 0s 1ms/step - loss: 0.4962

mse_test: 0.49622637033462524

# Predict

y_pred = model.predict(X_new)

y_pred

array([[3.8223999],

[1.1989222],

[2.8776248]], dtype=float32)

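# Save the whole model (architecture and weights) to an HDF5 file. This only works for models built with the Sequential or Functional API, not for subclassed models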

model.save("keras_model_1.hdf5")

# Restore the model and check that it gives the same predictions

model = keras.models.load_model("keras_model_1.hdf5")

y_pred = model.predict(X_new)

print(y_pred)

[[3.8223999]

[1.1989222]

[2.8776248]]

# Save and load only the model weights (parameters)

model.save_weights("keras_model_weights.ckpt")

# Load the weights

model.load_weights("keras_model_weights.ckpt")

10.2.7 Using Callbacks During Training

# Clear the model instances created earlier and reset the Keras global state

keras.backend.clear_session()

model = keras.models.Sequential([

keras.layers.Dense(30, activation="relu", input_shape=[X_train.shape[1]]),

keras.layers.Dense(30, activation="relu"),

keras.layers.Dense(1)

])

model.compile(loss='mse', optimizer=keras.optimizers.SGD(learning_rate=1e-3))

# Save the model with ModelCheckpoint; save_best_only=True only overwrites the file when the validation loss improves

checkpoint_cb = keras.callbacks.ModelCheckpoint("model-10-2-6-a.h5",

save_best_only=True)

# Train the model with the callback

history = model.fit(X_train, y_train, epochs=20,

validation_data=(X_valid, y_valid),

callbacks=[checkpoint_cb])

Epoch 1/20

363/363 [==============================] - 1s 2ms/step - loss: 4.7894 - val_loss: 1.1104

Epoch 2/20

363/363 [==============================] - 1s 2ms/step - loss: 1.0420 - val_loss: 0.7272

Epoch 3/20

363/363 [==============================] - 1s 2ms/step - loss: 0.6886 - val_loss: 0.6323

Epoch 4/20

363/363 [==============================] - 1s 2ms/step - loss: 0.6318 - val_loss: 0.5915

Epoch 5/20

363/363 [==============================] - 1s 2ms/step - loss: 0.5957 - val_loss: 0.5648

......

Epoch 16/20

363/363 [==============================] - 1s 2ms/step - loss: 0.4862 - val_loss: 0.4741

Epoch 17/20

363/363 [==============================] - 1s 2ms/step - loss: 0.4779 - val_loss: 0.4701

Epoch 18/20

363/363 [==============================] - 1s 2ms/step - loss: 0.4694 - val_loss: 0.4674

Epoch 19/20

363/363 [==============================] - 1s 2ms/step - loss: 0.4539 - val_loss: 0.4641

Epoch 20/20

363/363 [==============================] - 1s 2ms/step - loss: 0.4638 - val_loss: 0.4627
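With `save_best_only=True`, the checkpoint file is overwritten only when the monitored quantity (`val_loss` by default) improves, so loading it afterwards yields the best model seen rather than the last one. The class below is a hypothetical pure-Python stand-in for that decision rule, driven by made-up validation losses; it records which epochs would trigger a save instead of writing any file.

```python
class BestOnlySaver:
    """Hypothetical stand-in for ModelCheckpoint(save_best_only=True) monitoring val_loss."""

    def __init__(self):
        self.best = float("inf")
        self.saved_epochs = []

    def on_epoch_end(self, epoch, logs):
        current = logs["val_loss"]
        if current < self.best:                   # improvement: overwrite the checkpoint
            self.best = current
            self.saved_epochs.append(epoch + 1)   # 1-based epoch number
        # otherwise the file keeps the earlier, better model

saver = BestOnlySaver()
for epoch, val_loss in enumerate([0.60, 0.55, 0.57, 0.52, 0.53]):  # illustrative values
    saver.on_epoch_end(epoch, {"val_loss": val_loss})

print(saver.saved_epochs, saver.best)
```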

# Load the best model saved by the checkpoint callback

model = keras.models.load_model("model-10-2-6-a.h5")

# Evaluate the model

mse_test = model.evaluate(X_test, y_test)

162/162 [==============================] - 0s 1ms/step - loss: 0.4517

model.compile(loss="mse", optimizer=keras.optimizers.SGD(learning_rate=1e-5))

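# Early-stopping callback: interrupt training when the validation loss has made no progress for `patience` consecutive epochs; when training stops early, restore_best_weights=True rolls the model back to the weights from its best epoch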

early_stopping_cb = keras.callbacks.EarlyStopping(patience=10,

restore_best_weights=True)

history = model.fit(X_train, y_train, epochs=100,

validation_data=(X_valid, y_valid),

callbacks=[early_stopping_cb])

Epoch 1/100

363/363 [==============================] - 1s 3ms/step - loss: 0.4480 - val_loss: 0.4626

Epoch 2/100

363/363 [==============================] - 1s 2ms/step - loss: 0.4500 - val_loss: 0.4624

Epoch 3/100

363/363 [==============================] - 1s 2ms/step - loss: 0.4630 - val_loss: 0.4622

......

Epoch 94/100

363/363 [==============================] - 1s 2ms/step - loss: 0.4510 - val_loss: 0.4587

Epoch 95/100

363/363 [==============================] - 1s 2ms/step - loss: 0.4665 - val_loss: 0.4587

Epoch 96/100

363/363 [==============================] - 1s 2ms/step - loss: 0.4491 - val_loss: 0.4587

Epoch 97/100

363/363 [==============================] - 1s 2ms/step - loss: 0.4598 - val_loss: 0.4586

Epoch 98/100

363/363 [==============================] - 1s 2ms/step - loss: 0.4369 - val_loss: 0.4586

Epoch 99/100

363/363 [==============================] - 1s 2ms/step - loss: 0.4415 - val_loss: 0.4586

Epoch 100/100

363/363 [==============================] - 1s 2ms/step - loss: 0.4774 - val_loss: 0.4585
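The patience rule can be sketched in a few lines of plain Python. `run_early_stopping` is a hypothetical helper that assumes the default `min_delta=0` (any decrease counts as progress) and returns the epoch at which training ends together with the best epoch, whose weights `restore_best_weights=True` would bring back. It also suggests why the 100-epoch run above never stopped early: its `val_loss` kept edging down (0.4626 at epoch 1, 0.4585 at epoch 100), so the patience counter apparently never reached 10.

```python
def run_early_stopping(val_losses, patience):
    """Hypothetical sketch of EarlyStopping(monitor="val_loss", patience=patience)."""
    best, best_epoch, wait = float("inf"), None, 0
    for epoch, current in enumerate(val_losses, start=1):
        if current < best:              # progress: remember it and reset the counter
            best, best_epoch, wait = current, epoch, 0
        else:
            wait += 1
            if wait >= patience:        # patience exhausted: stop training here
                return epoch, best_epoch
    return len(val_losses), best_epoch  # ran every epoch without stopping

# Illustrative losses: improves until epoch 3, then stalls for 3 epochs
print(run_early_stopping([0.50, 0.48, 0.47, 0.49, 0.48, 0.47], patience=3))
# Steadily (if slowly) improving losses never trigger a stop
print(run_early_stopping([0.4626, 0.4624, 0.4622], patience=10))
```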

# Custom callback class

class PrintValTrainRatioCallback(keras.callbacks.Callback):
    def on_epoch_end(self, epoch, logs=None):
        # Print at the end of every epoch
        print(f"=== logs: {logs} type: {type(logs)}")
        print(f"=== val/train: {logs['val_loss'] / logs['loss']}")

val_train_ratio_cb = PrintValTrainRatioCallback()

history = model.fit(X_train, y_train, epochs=10,

validation_data=(X_valid, y_valid),

callbacks=[val_train_ratio_cb]

)

Epoch 1/10

363/363 [==============================] - 1s 3ms/step - loss: 0.4538 - val_loss: 0.4585

=== logs: {'loss': 0.45377272367477417, 'val_loss': 0.4585181474685669} type: <class 'dict'>

=== val/train: 1.010457710537035

Epoch 2/10

363/363 [==============================] - 1s 2ms/step - loss: 0.4537 - val_loss: 0.4585

=== logs: {'loss': 0.4537428021430969, 'val_loss': 0.458490788936615} type: <class 'dict'>

=== val/train: 1.010464048732217

Epoch 3/10

363/363 [==============================] - 1s 2ms/step - loss: 0.4537 - val_loss: 0.4585

=== logs: {'loss': 0.4537127912044525, 'val_loss': 0.4584639370441437} type: <class 'dict'>

=== val/train: 1.0104717035353543

Epoch 4/10

363/363 [==============================] - 1s 2ms/step - loss: 0.4537 - val_loss: 0.4584

=== logs: {'loss': 0.45368272066116333, 'val_loss': 0.45843762159347534} type: <class 'dict'>

=== val/train: 1.0104806745237787

Epoch 5/10

363/363 [==============================] - 1s 2ms/step - loss: 0.4537 - val_loss: 0.4584

=== logs: {'loss': 0.4536520540714264, 'val_loss': 0.4584110975265503} type: <class 'dict'>

=== val/train: 1.0104905145086693

Epoch 6/10

363/363 [==============================] - 1s 2ms/step - loss: 0.4536 - val_loss: 0.4584

=== logs: {'loss': 0.4536226987838745, 'val_loss': 0.45838460326194763} type: <class 'dict'>

=== val/train: 1.010497500435581

Epoch 7/10

363/363 [==============================] - 1s 2ms/step - loss: 0.4536 - val_loss: 0.4584

=== logs: {'loss': 0.4535929262638092, 'val_loss': 0.4583580195903778} type: <class 'dict'>

=== val/train: 1.010505219659879

Epoch 8/10

363/363 [==============================] - 1s 2ms/step - loss: 0.4536 - val_loss: 0.4583

=== logs: {'loss': 0.4535619616508484, 'val_loss': 0.4583314061164856} type: <class 'dict'>

=== val/train: 1.010515530112529

Epoch 9/10

363/363 [==============================] - 1s 3ms/step - loss: 0.4535 - val_loss: 0.4583

=== logs: {'loss': 0.4535328149795532, 'val_loss': 0.45830458402633667} type: <class 'dict'>

=== val/train: 1.0105213313991372

Epoch 10/10

363/363 [==============================] - 1s 2ms/step - loss: 0.4535 - val_loss: 0.4583

=== logs: {'loss': 0.4535016119480133, 'val_loss': 0.4582781195640564} type: <class 'dict'>

=== val/train: 1.0105325041636029
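The hook mechanism behind custom callbacks is simple: after each epoch, `fit` passes the epoch index and a `logs` dict of that epoch's metrics (here `loss` and `val_loss`) to every callback's `on_epoch_end`. A pure-Python sketch of that dispatch, with a hypothetical `MiniCallback` base class and `fake_fit` loop standing in for Keras (the real `keras.callbacks.Callback` has many more hooks), replays the first two `logs` dicts printed above and recovers the same ratios:

```python
class MiniCallback:
    """Hypothetical minimal base class standing in for keras.callbacks.Callback."""
    def on_epoch_end(self, epoch, logs=None):
        pass

class RatioRecorder(MiniCallback):
    def __init__(self):
        self.ratios = []

    def on_epoch_end(self, epoch, logs=None):
        logs = logs or {}
        ratio = logs["val_loss"] / logs["loss"]
        self.ratios.append(ratio)
        print(f"epoch {epoch + 1}: val/train = {ratio:.4f}")

def fake_fit(epoch_logs, callbacks):
    """Stand-in for model.fit: hand each epoch's logs dict to every callback."""
    for epoch, logs in enumerate(epoch_logs):
        for cb in callbacks:
            cb.on_epoch_end(epoch, logs)

# logs dicts copied from the first two epochs printed above
epoch_logs = [
    {"loss": 0.45377272367477417, "val_loss": 0.4585181474685669},
    {"loss": 0.4537428021430969, "val_loss": 0.458490788936615},
]
recorder = RatioRecorder()
fake_fit(epoch_logs, [recorder])
```

A ratio staying just above 1 means the validation loss is only slightly higher than the training loss, so the model shows little sign of overfitting at this stage.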