Spark Source Code Analysis: How Standalone Cluster Mode Launches the Driver and Executors

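This article walks through the Spark source code step by step so that you can see exactly how Spark launches the driver and at what point it launches executors, rather than relying on the misleading explanations sometimes given in training courses.

The demonstration uses the π-estimation example (SparkPi) that ships with Spark 3.0.1, scheduled in Standalone Cluster mode.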

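The demo steps are as follows:

Start the master, IP: 169.254.150.140

Start the worker, passing the argument spark://169.254.150.140:7077

Environment variables: while debugging you may hit java.lang.IllegalStateException: Cannot find any build directories. Setting the following environment variables resolves it.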

SPARK_HOME=D:\spark-3.0.1-bin-hadoop2.7

SCALA_HOME=E:\install_soft\scala-2.11\scala

SPARK_SCALA_VERSION=scala-2.11

Result when run from IntelliJ IDEA on Windows: Pi is roughly 3.1419757098785492

1. Running spark-submit:

exec ${SPARK_HOME}/bin/**spark-class** **org.apache.spark.deploy.SparkSubmit** "$@"

2. Running spark-class:

build_command() {

$RUNNER -Xmx128m $SPARK_LAUNCHER_OPTS -cp $LAUNCH_CLASSPATH **org.apache.spark.launcher.Main** "$@"

printf "%d\0" $?

}

Arguments passed to the Main class as "$@":

org.apache.spark.deploy.SparkSubmit --class org.apache.spark.examples.SparkPi --master spark://169.254.150.140:7077 --deploy-mode cluster D:\spark-examples_2.12-3.0.1.jar

After the Main class finishes, spark-class executes the following command:

E:\jdk1.8.0_181\bin\java -cp "D:\spark-3.0.1-bin-hadoop2.7\conf;D:\spark-3.0.1-bin-hadoop2.7\jars\*" org.apache.spark.deploy.SparkSubmit --master spark://169.254.150.140:7077 --deploy-mode cluster --class org.apache.spark.examples.SparkPi D:\spark-examples_2.12-3.0.1.jar

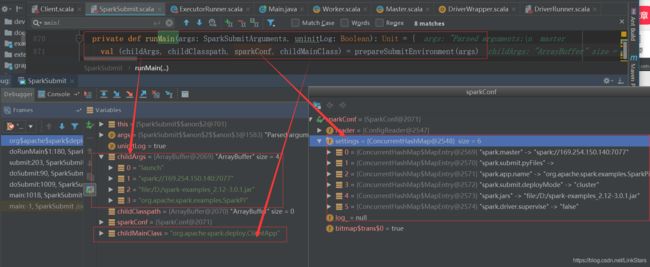
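The hand-off between `org.apache.spark.launcher.Main` and the `spark-class` script can be sketched roughly as follows. On Unix-like systems the launcher prints each argument of the final command followed by a NUL character, and the script reads them back into an array before running it. This is only a minimal sketch under that assumption: `LaunchCommandSketch`, `buildJavaCommand`, `encode`, and `decode` are hypothetical names, not part of Spark, and the real launcher also handles JVM options, environment variables, and Windows quoting.

```scala
object LaunchCommandSketch {
  // NUL character used as the argument terminator (assumption: Unix-style output).
  private val Nul: Char = 0.toChar

  // Assemble "java -cp <classpath> <mainClass> <args...>" as a list of arguments.
  def buildJavaCommand(
      javaExecutable: String,
      classpath: Seq[String],
      mainClass: String,
      appArgs: Seq[String],
      pathSeparator: String): List[String] =
    List(javaExecutable, "-cp", classpath.mkString(pathSeparator), mainClass) ++ appArgs

  // Print-side: terminate every argument with NUL so spaces inside arguments survive.
  def encode(command: Seq[String]): String = command.map(_ + Nul).mkString

  // Read-side: split on NUL to recover the argument list.
  // Note: this toy decoder would drop trailing empty arguments.
  def decode(output: String): List[String] = output.split(Nul).toList
}
```

Round-tripping a command through `encode` and `decode` returns the original argument list, which is the property the shell script relies on when it rebuilds the command.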

3. SparkSubmit class:

Pass the same arguments as above:

--class org.apache.spark.examples.SparkPi --master spark://169.254.150.140:7077 --deploy-mode cluster D:\spark-examples_2.12-3.0.1.jar

val submit = new SparkSubmit()

submit.doSubmit(args)

submit(args: SparkSubmitArguments, uninitLog: Boolean)

doRunMain()

runMain(args, uninitLog)

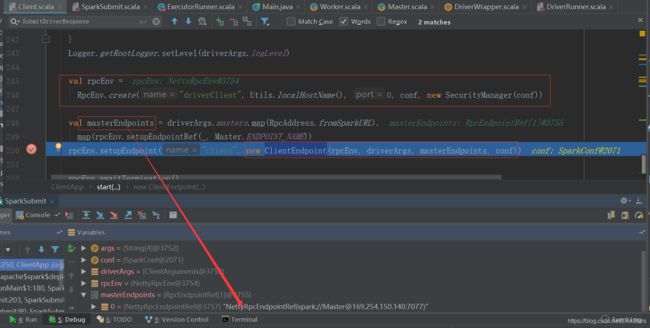
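Why `runMain` ends up in `ClientApp` rather than in the user's own class can be sketched as below. To the best of my reading of Spark 3.0.x, `SparkSubmit` picks a "child main class" while preparing the submit environment: in standalone cluster mode it is `org.apache.spark.deploy.ClientApp` (or the REST client when `spark.master.rest.enabled` is true), and in client mode it is the user's main class. The object and method names here are hypothetical, and only the standalone case is modelled.

```scala
object ChildMainClassSketch {
  val ClientApp = "org.apache.spark.deploy.ClientApp"
  val RestClientApp = "org.apache.spark.deploy.rest.RestSubmissionClientApp"

  // Assumes the master URL is a standalone one (spark://...); other cluster
  // managers choose different classes and are deliberately not modelled here.
  def standaloneChildMainClass(
      deployMode: String,
      userMainClass: String,
      useRest: Boolean = false): String =
    if (deployMode == "cluster") {
      if (useRest) RestClientApp else ClientApp
    } else {
      // Client mode: the user's main class runs directly in the submitting JVM.
      userMainClass
    }
}
```

With `--deploy-mode cluster` and the REST gateway disabled, the sketch returns `org.apache.spark.deploy.ClientApp`, which is the class examined in the next section.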

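4. ClientApp class:

start(args: Array[String], conf: SparkConf)

This starts a Netty-based RPC environment and connects to the master. Note that this step does not create the driver; it only creates the driverClient.

rpcEnv.setupEndpoint("client", new ClientEndpoint(rpcEnv, driverArgs, masterEndpoints, conf)) registers the endpoint, which causes the endpoint's onStart method to be invoked. For why onStart gets called, see my article: https://blog.csdn.net/LinkStars/article/details/112982187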

override def onStart(): Unit = {

driverArgs.cmd match {

case "launch" =>

**val mainClass = "org.apache.spark.deploy.worker.DriverWrapper"** // the class that will launch the driver

val **command** = new Command(mainClass,

Seq("{{WORKER_URL}}", "{{USER_JAR}}", driverArgs.mainClass) ++ driverArgs.driverOptions,

sys.env, classPathEntries, libraryPathEntries, javaOpts)

val **driverDescription** = new DriverDescription(

driverArgs.jarUrl,

driverArgs.memory,

driverArgs.cores,

driverArgs.supervise,

**command**,

driverResourceReqs)

asyncSendToMasterAndForwardReply[SubmitDriverResponse]( **RequestSubmitDriver**(**driverDescription**)) // send the request to the master

}
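Why `onStart` runs as soon as an endpoint is set up can be illustrated with a toy model. In Spark's RPC layer, registering an endpoint creates an inbox whose first queued message is an "on start" marker, so `onStart` is always handled before any ordinary message. The sketch below imitates that ordering in a single thread; `ToyRpcEnv`, `ToyEndpoint`, and the message types are hypothetical stand-ins, not Spark classes.

```scala
import scala.collection.mutable

object EndpointLifecycleSketch {
  sealed trait InboxMessage
  case object OnStart extends InboxMessage
  final case class UserMessage(content: String) extends InboxMessage

  trait ToyEndpoint {
    def onStart(): Unit
    def receive(content: String): Unit
  }

  final class ToyRpcEnv {
    private val endpoints =
      mutable.LinkedHashMap.empty[String, (ToyEndpoint, mutable.Queue[InboxMessage])]

    // Registering an endpoint creates its inbox with OnStart already queued.
    def setupEndpoint(name: String, endpoint: ToyEndpoint): Unit =
      endpoints(name) = (endpoint, mutable.Queue[InboxMessage](OnStart))

    // Ordinary messages are appended behind whatever is already in the inbox.
    def send(name: String, content: String): Unit =
      endpoints(name)._2.enqueue(UserMessage(content))

    // Drain every inbox in FIFO order, dispatching to the endpoint callbacks.
    def processAll(): Unit =
      endpoints.values.foreach { case (endpoint, inbox) =>
        while (inbox.nonEmpty) {
          inbox.dequeue() match {
            case OnStart => endpoint.onStart()
            case UserMessage(content) => endpoint.receive(content)
          }
        }
      }
  }
}
```

Because the start marker is queued at registration time, an endpoint in this model always sees `onStart` first, even if messages were sent to it before the inbox was processed.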

6. Master class:

The master receives the RequestSubmitDriver message:

21/02/17 12:40:50 INFO Master: Driver submitted org.apache.spark.deploy.worker.DriverWrapper

21/02/17 12:40:50 INFO Master: Launching driver driver-20210217124050-0001 on worker worker-20210217113948-169.254.150.140-60650

21/02/17 12:40:54 INFO Master: Registering app Spark Pi

case **RequestSubmitDriver**(description) =>

logInfo("Driver submitted " + description.command.**mainClass**) //Driver submitted org.apache.spark.deploy.worker.**DriverWrapper**

val driver = createDriver(description)

persistenceEngine.addDriver(driver)

waitingDrivers += driver

drivers.add(driver)

**schedule()** // calls **launchDriver(worker, driver)**: finds a worker with enough free resources and sends it a message via worker.endpoint.send(LaunchDriver(driver.id, driver.desc, driver.resources))

context.reply(SubmitDriverResponse(self, true, Some(driver.id),

s"Driver successfully submitted as ${driver.id}"))
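The driver-placement part of `schedule()` can be sketched as a first-fit search over workers with enough free cores and memory. This is a simplified model under stated assumptions: the real Master shuffles the alive workers and walks them round-robin, while this sketch scans them in the given order so the result is deterministic. `ToyWorker`, `ToyDriver`, and `placeDrivers` are hypothetical names.

```scala
object DriverSchedulingSketch {
  final case class ToyWorker(id: String, coresFree: Int, memoryFreeMb: Int)
  final case class ToyDriver(id: String, cores: Int, memoryMb: Int)

  // Returns (driverId -> workerId placements, drivers that are still waiting).
  def placeDrivers(
      waiting: List[ToyDriver],
      workers: List[ToyWorker]): (Map[String, String], List[ToyDriver]) = {
    val start = (workers, Map.empty[String, String], List.empty[ToyDriver])
    val (_, placed, pending) = waiting.foldLeft(start) {
      case ((ws, placedSoFar, pendingSoFar), driver) =>
        // First worker that can satisfy both the core and the memory request.
        ws.indexWhere(w => w.coresFree >= driver.cores && w.memoryFreeMb >= driver.memoryMb) match {
          case -1 =>
            (ws, placedSoFar, pendingSoFar :+ driver)
          case i =>
            val w = ws(i)
            // Reserve the resources so later drivers see the reduced capacity.
            val updated = w.copy(
              coresFree = w.coresFree - driver.cores,
              memoryFreeMb = w.memoryFreeMb - driver.memoryMb)
            (ws.updated(i, updated), placedSoFar + (driver.id -> w.id), pendingSoFar)
        }
    }
    (placed, pending)
  }
}
```

A driver that fits on no worker stays in the waiting list, which mirrors how `waitingDrivers` is only shrunk when a launch actually happens.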

7. Worker class:

case LaunchDriver(driverId, driverDesc, resources_) =>

logInfo(s"Asked to launch driver $driverId")

val driver = new DriverRunner(

conf,

driverId,

workDir,

sparkHome,

driverDesc.copy(command = Worker.maybeUpdateSSLSettings(driverDesc.command, conf)),

self,

workerUri,

securityMgr,

resources_)

drivers(driverId) = driver

**driver.start()** // starts a thread that calls **prepareAndRunDriver**()

coresUsed += driverDesc.cores

memoryUsed += driverDesc.mem

addResourcesUsed(resources_)

8. DriverRunner class:

private[worker] def prepareAndRunDriver(): Int = {

val driverDir = createWorkingDirectory()

val localJarFilename = downloadUserJar(driverDir)

val resourceFileOpt = prepareResourcesFile(SPARK_DRIVER_PREFIX, resources, driverDir)

def substituteVariables(argument: String): String = argument match {

case "{{WORKER_URL}}" => workerUrl

case "{{USER_JAR}}" => localJarFilename

case other => other

}

// config resource file for driver, which would be used to load resources when driver starts up

val javaOpts = driverDesc.command.javaOpts ++ resourceFileOpt.map(f =>

Seq(s"-D${DRIVER_RESOURCES_FILE.key}=${f.getAbsolutePath}")).getOrElse(Seq.empty)

// TODO: If we add ability to submit multiple jars they should also be added here

val builder = CommandUtils.buildProcessBuilder(driverDesc.command.copy(javaOpts = javaOpts),

securityManager, driverDesc.mem, sparkHome.getAbsolutePath, substituteVariables)

**runDriver(builder, driverDir, driverDesc.supervise)** // this goes on to call runCommandWithRetry(), which starts the DriverWrapper process and logs the command via logInfo("**Launch Command**: " + redactedCommand)

}
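The placeholder mechanism connects the client and the worker: the client cannot know the worker URL or the local jar path in advance, so it submits the literal tokens `{{WORKER_URL}}` and `{{USER_JAR}}`, and the worker fills them in just before launching. A minimal sketch of that substitution, using a hypothetical `PlaceholderSketch` object and made-up example values:

```scala
object PlaceholderSketch {
  // Curried so the worker-specific values are fixed once and the resulting
  // function can be mapped over every command-line argument.
  def substitute(workerUrl: String, localJar: String)(argument: String): String =
    argument match {
      case "{{WORKER_URL}}" => workerUrl
      case "{{USER_JAR}}" => localJar
      case other => other // ordinary arguments pass through unchanged
    }

  // Arguments as the client would submit them, placeholders included.
  val submitted: List[String] =
    List("{{WORKER_URL}}", "{{USER_JAR}}", "org.apache.spark.examples.SparkPi")

  // Example values only; a real worker supplies its own URL and download path.
  val resolved: List[String] =
    submitted.map(substitute("spark://Worker@192.0.2.10:7078", "/tmp/work/driver-0/app.jar"))
}
```

After substitution the three arguments line up with what `DriverWrapper.main` pattern-matches on: worker URL, user jar, then the user's main class.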

Resulting log output:

21/02/17 12:40:51 INFO DriverRunner: Launch Command: "E:\jdk1.8.0_181\bin\java" "-cp" "D:\spark-3.0.1-bin-hadoop2.7\conf;D:\spark-3.0.1-bin-hadoop2.7\jars\*" "-Xmx1024M" "-Dspark.jars=file:/D:/spark-examples_2.12-3.0.1.jar" "-Dspark.driver.supervise=false" "-Dspark.master=spark://169.254.150.140:7077" "-Dspark.app.name=org.apache.spark.examples.SparkPi" "-Dspark.submit.deployMode=cluster" "-Dspark.submit.pyFiles=" "-Dspark.rpc.askTimeout=10s" "org.apache.spark.deploy.worker.DriverWrapper" "spark://Worker@169.254.150.140:60650" "D:\spark-3.0.1-bin-hadoop2.7\work\driver-20210217124050-0001\spark-examples_2.12-3.0.1.jar" "org.apache.spark.examples.SparkPi"

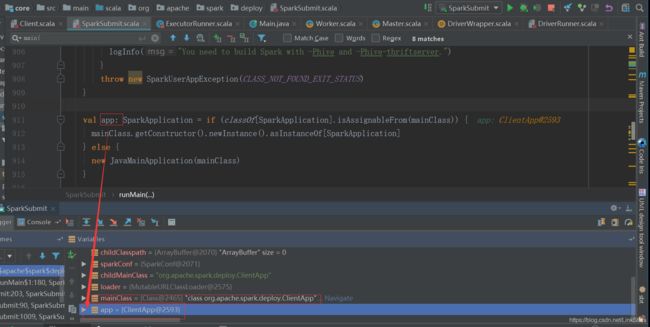

9. DriverWrapper class:

def main(args: Array[String]): Unit = {

args.toList match {

case workerUrl :: userJar :: mainClass :: extraArgs =>

val conf = new SparkConf()

val host: String = Utils.localHostName()

val port: Int = sys.props.getOrElse(config.DRIVER_PORT.key, "0").toInt

val rpcEnv = RpcEnv.create("**Driver**", host, port, conf, new SecurityManager(conf)) // start the **driver** RPC environment

logInfo(s"Driver address: ${rpcEnv.address}")

rpcEnv.setupEndpoint("**workerWatcher**", new WorkerWatcher(rpcEnv, workerUrl))

val currentLoader = Thread.currentThread.getContextClassLoader

val userJarUrl = new File(userJar).toURI().toURL()

val loader =

if (sys.props.getOrElse(config.DRIVER_USER_CLASS_PATH_FIRST.key, "false").toBoolean) {

new ChildFirstURLClassLoader(Array(userJarUrl), currentLoader)

} else {

new MutableURLClassLoader(Array(userJarUrl), currentLoader)

}

Thread.currentThread.setContextClassLoader(loader)

setupDependencies(loader, userJar)

// Delegate to supplied main class

val clazz = Utils.classForName(**mainClass**)

**val mainMethod = clazz.getMethod("main", classOf[Array[String]])**

**mainMethod.invoke(null, extraArgs.toArray[String])** // runs the main method of org.apache.spark.examples.SparkPi, which in turn creates the **SparkContext**

rpcEnv.shutdown()
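The "delegate to supplied main class" step is plain Java reflection: look the class up by name, fetch a static method, and invoke it with a `null` receiver. The sketch below shows the same pattern on a JDK class so that it runs without Spark. It is an illustration only: `ReflectiveMainSketch` and `invokeStatic` are hypothetical names, and the method here takes a single `String` rather than the `Array[String]` that a real `main` takes.

```scala
object ReflectiveMainSketch {
  // Look up `className` through the thread's context class loader and call
  // the static method `methodName(String)` on it.
  def invokeStatic(className: String, methodName: String, arg: String): AnyRef = {
    val loader = Thread.currentThread.getContextClassLoader
    val clazz = Class.forName(className, true, loader)
    val method = clazz.getMethod(methodName, classOf[String])
    // A null receiver is what marks this as a static call.
    method.invoke(null, arg)
  }
}
```

For example, `ReflectiveMainSketch.invokeStatic("java.lang.Integer", "parseInt", "42")` goes through the same lookup-then-invoke sequence that `DriverWrapper` uses to enter the user's application.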

10. SparkContext class:

val (sched, ts) = SparkContext.createTaskScheduler(this, master, deployMode)

createTaskScheduler(...){

case SPARK_REGEX(sparkUrl) =>

checkResourcesPerTask(clusterMode = true, None)

val **scheduler** = new **TaskSchedulerImpl**(sc)

val masterUrls = sparkUrl.split(",").map("spark://" + _)

val **backend** = new **StandaloneSchedulerBackend**(scheduler, sc, masterUrls)

}
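The master-URL handling in this branch can be tried in isolation. The sketch assumes the regex behind `SPARK_REGEX` is `spark://(.*)` and reproduces the split-and-prefix step that turns a comma-separated list of masters into individual URLs; `MasterUrlSketch` and `masterUrls` are hypothetical names.

```scala
object MasterUrlSketch {
  // Assumed to mirror the pattern used for standalone master URLs.
  private val SparkRegex = """spark://(.*)""".r

  // Some(list of master URLs) for a standalone master string, None otherwise.
  def masterUrls(master: String): Option[List[String]] = master match {
    case SparkRegex(sparkUrl) =>
      // "host1:7077,host2:7077" becomes one spark:// URL per master.
      Some(sparkUrl.split(",").map("spark://" + _).toList)
    case _ => None
  }
}
```

A single-master URL such as the one used in this article simply yields a one-element list, while a high-availability setup with several masters yields one URL per master.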

11. TaskSchedulerImpl class:

backend.start()

12. StandaloneSchedulerBackend class:

override def start(): Unit = {

val command = Command("org.apache.spark.executor.**CoarseGrainedExecutorBackend**",

args, sc.executorEnvs, classPathEntries ++ testingClassPath, libraryPathEntries, javaOpts)

val appDesc = ApplicationDescription(sc.appName, maxCores, sc.executorMemory, command,

webUrl, sc.eventLogDir, sc.eventLogCodec, coresPerExecutor, initialExecutorLimit,

resourceReqsPerExecutor = executorResourceReqs)

client = new **StandaloneAppClient**(sc.env.rpcEnv, masters, appDesc, this, conf)

**client.start()**

launcherBackend.setState(SparkAppHandle.State.SUBMITTED)

waitForRegistration()

launcherBackend.setState(SparkAppHandle.State.RUNNING)

}

13. StandaloneAppClient class: setting up the endpoint triggers onStart()

def **start**(): Unit = {

// Just launch an rpcEndpoint; it will call back into the listener.

endpoint.set(rpcEnv.setupEndpoint("AppClient", new **ClientEndpoint**(rpcEnv)))

}

14. ClientEndpoint class:

override def **onStart**(): Unit = {

try {

**registerWithMaster(1)** // calls tryRegisterAllMasters() to register the app with the master

} catch {

case e: Exception =>

logWarning("Failed to connect to master", e)

markDisconnected()

stop()

}

private def **tryRegisterAllMasters**(): Array[JFuture[_]] = {

for (masterAddress <- masterRpcAddresses) yield {

registerMasterThreadPool.submit(new Runnable {

override def run(): Unit = try {

if (registered.get) {

return

}

logInfo("Connecting to master " + masterAddress.toSparkURL + "...")

val masterRef = rpcEnv.setupEndpointRef(masterAddress, Master.ENDPOINT_NAME)

**masterRef**.send(**RegisterApplication**(appDescription, self))

} catch {

case ie: InterruptedException => // Cancelled

case NonFatal(e) => logWarning(s"Failed to connect to master $masterAddress", e)

}

})

}

}
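The shape of `tryRegisterAllMasters` (one task per master on a thread pool, each task bailing out early if registration has already succeeded) can be sketched with plain `java.util.concurrent`. This is a toy model: instead of sending an RPC, each task just reports which master it would have contacted, and `RegisterAllMastersSketch` is a hypothetical name.

```scala
import java.util.concurrent.{Callable, Executors, TimeUnit}
import java.util.concurrent.atomic.AtomicBoolean

object RegisterAllMastersSketch {
  // Returns the set of masters that a registration attempt was made against.
  def tryRegisterAll(masters: List[String], registered: AtomicBoolean): Set[String] = {
    val pool = Executors.newFixedThreadPool(math.max(1, masters.size))
    try {
      val futures = masters.map { master =>
        pool.submit(new Callable[Option[String]] {
          override def call(): Option[String] =
            // Skip the attempt if another master has already accepted us.
            if (registered.get) None else Some(master)
        })
      }
      // Wait for every attempt; the real code keeps the futures so they can be cancelled.
      futures.flatMap(_.get(5, TimeUnit.SECONDS).toList).toSet
    } finally {
      pool.shutdown()
    }
  }
}
```

When the `registered` flag is already set, no master is contacted at all, which is the purpose of the `registered.get` check at the top of each task.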

15. Master class:

case **RegisterApplication**(description, driver) =>

// TODO Prevent repeated registrations from some driver

if (state == RecoveryState.STANDBY) {

// ignore, don't send response

} else {

logInfo("Registering app " + description.name)

val app = createApplication(description, driver)

**registerApplication**(app)

logInfo("Registered app " + description.name + " with ID " + app.id)

persistenceEngine.addApplication(app)

**driver**.send(**RegisteredApplication**(app.id, self))

**schedule**() // schedules and launches the executors

}
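How the master decides the number of cores each worker contributes can be approximated with a small model. As far as I understand it, with `spark.deploy.spreadOut` enabled (the default) cores are handed out one at a time across workers, and with it disabled each worker is filled before moving to the next. The sketch below is a deliberately simplified assumption of that behaviour: it ignores memory, `spark.executor.cores`, and executor limits, and `ExecutorCoreSketch` is a hypothetical name.

```scala
object ExecutorCoreSketch {
  // Returns how many cores each worker is assigned, in worker order.
  def assignCores(requested: Int, freeCores: List[Int], spreadOut: Boolean): List[Int] = {
    val assigned = Array.fill(freeCores.length)(0)
    // Never try to hand out more cores than the cluster has free.
    var remaining = math.min(requested, freeCores.sum)
    var i = 0
    while (remaining > 0) {
      if (assigned(i) < freeCores(i)) {
        // Spread out: one core per visit. Consolidate: take as much as fits.
        val step = if (spreadOut) 1 else math.min(remaining, freeCores(i))
        assigned(i) += step
        remaining -= step
      }
      i = (i + 1) % freeCores.length
    }
    assigned.toList
  }
}
```

For a request of 4 cores on two workers with 4 free cores each, the spread-out mode gives 2 cores per worker, while the consolidated mode puts all 4 on the first worker.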

16. Worker class:

case **LaunchExecutor**(masterUrl, appId, execId, appDesc, cores_, memory_, resources_) =>

if (masterUrl != activeMasterUrl) {

logWarning("Invalid Master (" + masterUrl + ") attempted to launch executor.")

} else {

try {

logInfo("Asked to launch executor %s/%d for %s".format(appId, execId, appDesc.name)) // Worker: Asked to launch executor app-20210217124054-0001/0 for Spark Pi

logInfo(s"workercommand-------------------: ${appDesc.command}")

// Output:

// Worker: workercommand------------------:Command(org.apache.spark.executor.**CoarseGrainedExecutorBackend**,List(--driver-url, spark://CoarseGrainedScheduler@LAPTOP-TC12SF9P:61716, --executor-id, {{EXECUTOR_ID}}, --hostname, {{HOSTNAME}}, --cores, {{CORES}}, --app-id, {{APP_ID}}, --worker-url, {{WORKER_URL}}),Map(SPARK_USER -> yqb, SPARK_EXECUTOR_MEMORY -> 1024m),List(),List(),ArraySeq(-Dspark.driver.port=61716, -Dspark.rpc.askTimeout=10s))

val manager = new **ExecutorRunner**(

appId,

execId,

appDesc.copy(command = Worker.maybeUpdateSSLSettings(appDesc.command, conf)),

cores_,

memory_,

self,

workerId,

webUi.scheme,

host,

webUi.boundPort,

publicAddress,

sparkHome,

executorDir,

workerUri,

conf,

appLocalDirs,

ExecutorState.LAUNCHING,

resources_)

executors(appId + "/" + execId) = manager

**manager.start()**

coresUsed += cores_

memoryUsed += memory_

addResourcesUsed(resources_)

17. ExecutorRunner class:

val builder = CommandUtils.buildProcessBuilder(subsCommand, new SecurityManager(conf),

memory, sparkHome.getAbsolutePath, substituteVariables)

val command = builder.command()

logInfo(s"command:--------------- -------------${command}")

process = builder.start()

Command that gets executed:

21/02/17 12:40:54 INFO ExecutorRunner: command:--------------- -------------[E:\jdk1.8.0_181\bin\java, -cp, D:\spark-3.0.1-bin-hadoop2.7\conf;D:\spark-3.0.1-bin-hadoop2.7\jars\*, -Xmx1024M, -Dspark.driver.port=61716, -Dspark.rpc.askTimeout=10s, org.apache.spark.executor.CoarseGrainedExecutorBackend, --driver-url, spark://CoarseGrainedScheduler@LAPTOP-TC12SF9P:61716, --executor-id, 0, --hostname, 169.254.150.140, --cores, 7, --app-id, app-20210217124054-0001, --worker-url, spark://Worker@169.254.150.140:60650]
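The order of arguments in that log line (java binary, classpath, heap size, JVM options, main class, application arguments) can be reproduced with a plain `java.lang.ProcessBuilder`. The sketch below only assembles the command and does not start a process; `ExecutorCommandSketch` and its parameters are hypothetical, and the real `CommandUtils.buildProcessBuilder` also sets up environment variables and library paths.

```scala
object ExecutorCommandSketch {
  // Assemble a JVM launch command in the same order as the logged executor command.
  def buildProcessBuilder(
      javaBin: String,
      classpath: String,
      memoryMb: Int,
      javaOpts: Seq[String],
      mainClass: String,
      args: Seq[String]): ProcessBuilder = {
    val command =
      Seq(javaBin, "-cp", classpath, s"-Xmx${memoryMb}M") ++ javaOpts ++ Seq(mainClass) ++ args
    // Nothing is executed until start() is called on the returned builder.
    new ProcessBuilder(command: _*)
  }
}
```

Calling `builder.command()` on the result returns the argument list, which is what the extra `logInfo` line in the snippet above prints before `builder.start()` spawns the executor JVM.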

18. CoarseGrainedExecutorBackend class:

env.rpcEnv.setupEndpoint("**Executor**",

backendCreateFn(env.rpcEnv, arguments, env, cfg.resourceProfile)) // triggers onStart, which sends RegisterExecutor to the driver

override def **onStart**(): Unit = {

logInfo("Connecting to driver: " + driverUrl)

try {

_resources = parseOrFindResources(resourcesFileOpt)

} catch {

case NonFatal(e) =>

exitExecutor(1, "Unable to create executor due to " + e.getMessage, e)

}

rpcEnv.asyncSetupEndpointRefByURI(driverUrl).flatMap {

ref =>

// This is a very fast action so we can use "ThreadUtils.sameThread"

driver = Some(ref)

ref.ask[Boolean](**RegisterExecutor**(executorId, self, hostname, cores, extractLogUrls,

extractAttributes, _resources, resourceProfile.id))

}(ThreadUtils.sameThread).onComplete {

case Success(_) =>

self.send(RegisteredExecutor)

case Failure(e) =>

exitExecutor(1, s"Cannot register with driver: $driverUrl", e, notifyDriver = false)

}(ThreadUtils.sameThread)

}
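The registration handshake in `onStart` follows an ask-then-react pattern: the executor asks the driver to register it, and only on success does it mark itself as registered; on failure it exits. The sketch below models that with `scala.concurrent.Future`, blocking on the result for simplicity. `ToyDriver` and `register` are hypothetical, and the duplicate-ID rejection is my assumption about one way the driver side can fail the request.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._
import scala.util.{Failure, Success, Try}

object RegistrationSketch {
  // Stand-in for the driver endpoint: accepts each executor ID only once.
  final class ToyDriver {
    private val executors = scala.collection.mutable.Set.empty[String]

    def ask(executorId: String): Future[Boolean] =
      if (executors.add(executorId)) Future.successful(true)
      else Future.failed(new IllegalStateException(s"Duplicate executor ID: $executorId"))
  }

  // Executor side: returns the follow-up action as a string for illustration.
  def register(driver: ToyDriver, executorId: String): String =
    Try(Await.result(driver.ask(executorId), 5.seconds)) match {
      case Success(_) => "RegisteredExecutor" // real code: self.send(RegisteredExecutor)
      case Failure(e) => s"exit(1): ${e.getMessage}" // real code: exitExecutor(1, ...)
    }
}
```

Registering the same executor ID twice yields `RegisteredExecutor` the first time and an exit message the second time, matching the two branches of `onComplete` in the original code.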

Author: 小亦

Study hard and make progress every day.