手写字识别——可视化训练过程

数据集介绍:

Keras里已经封装好了mnist数据集(包含6000张训练数据,1000张测试数据),图片大小为28x28。一行代码就可以从keras里导入进来,第一次导入时间长点,请慢慢等待。

from keras.datasets import mnist

导入各种包

from keras.layers import Dense, Dropout, Convolution2D

from keras.layers import MaxPooling2D

from keras import Sequential

from keras.datasets import mnist

from keras.optimizers import Adam

from keras.utils import np_utils

from keras.layers import Flatten

import matplotlib.pyplot as plt

数据归一化

(X_train, Y_train), (X_test, Y_test) = mnist.load_data()

X_train = X_train.reshape(-1, 28, 28, 1)/255.0

X_test = X_test.reshape(-1, 28, 28, 1)/255.0

Y_train = np_utils.to_categorical(Y_train, num_classes=10)

Y_test = np_utils.to_categorical(Y_test, num_classes=10)

使用keras搭建网络模型

手写字属于10分类,最后一层全连接层和分类数相匹配,两层全连接层中间加Dropout层,按比例丢弃神经元,抑制过拟合。

model = Sequential()

model.add(Convolution2D(12, kernel_size=5, activation='relu', strides=1,

padding='same', input_shape=(28, 28, 1)))

model.add(MaxPooling2D(pool_size=2, strides=2, padding='same'))

model.add(Convolution2D(64, 5, activation='relu', strides=1,

padding='same'))

model.add(MaxPooling2D(pool_size=2, strides=2, padding='same'))

model.add(Flatten())

model.add(Dense(1024, activation='relu'))

model.add(Dropout(0.5))

model.add(Dense(10, activation='softmax'))

编译训练模型

可以自定义优化器的学习率,我选用是是Adam优化器,它相对于其他优化器可以实现快速找到全局最小。损失函数选择的是交叉熵损失。

adam = Adam(lr=1e-4)

model.compile(optimizer='adam',

loss='categorical_crossentropy',

metrics=['accuracy'])

history = model.fit(X_train, Y_train, epochs=10, batch_size=32, verbose=2, validation_data=(X_test, Y_test))

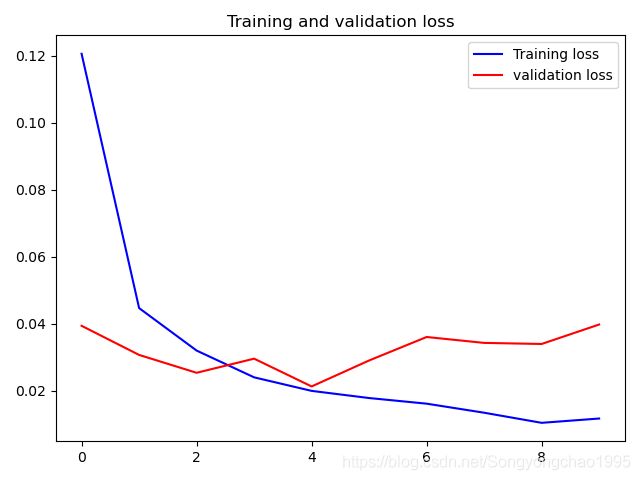

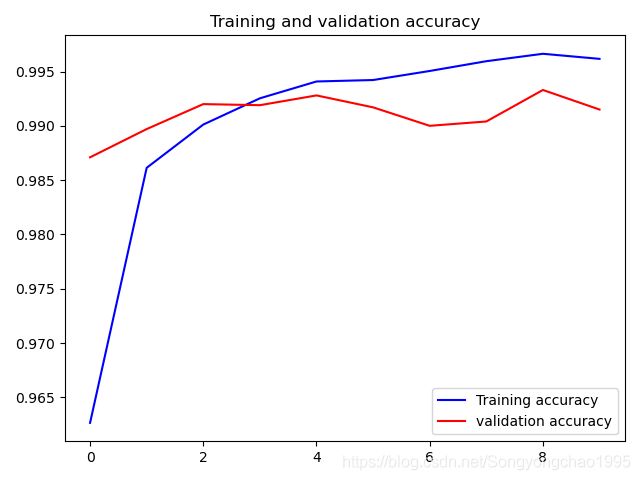

使用matplotlib绘制训练的准确率和损失

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'b', label='Training accuracy')

plt.plot(epochs, val_acc, 'r', label='validation accuracy')

plt.title('Training and validation accuracy')

plt.legend(loc='lower right')

plt.figure()

plt.plot(epochs, loss, 'b', label='Training loss')

plt.plot(epochs, val_loss, 'r', label='validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

结果

由于时间问题,我只训练了10个epochs,验证准确率已到99.3%

完整代码

from keras.layers import Dense, Dropout, Convolution2D

from keras.layers import MaxPooling2D

from keras import Sequential

from keras.datasets import mnist

from keras.optimizers import Adam

from keras.utils import np_utils

from keras.layers import Flatten

import matplotlib.pyplot as plt

(X_train, Y_train), (X_test, Y_test) = mnist.load_data()

X_train = X_train.reshape(-1, 28, 28, 1)/255.0

X_test = X_test.reshape(-1, 28, 28, 1)/255.0

Y_train = np_utils.to_categorical(Y_train, num_classes=10)

Y_test = np_utils.to_categorical(Y_test, num_classes=10)

model = Sequential()

model.add(Convolution2D(12, kernel_size=5, activation='relu', strides=1,

padding='same', input_shape=(28, 28, 1)))

model.add(MaxPooling2D(pool_size=2, strides=2, padding='same'))

model.add(Convolution2D(64, 5, activation='relu', strides=1,

padding='same'))

model.add(MaxPooling2D(pool_size=2, strides=2, padding='same'))

model.add(Flatten())

model.add(Dense(1024, activation='relu'))

model.add(Dropout(0.5))

model.add(Dense(10, activation='softmax'))

adam = Adam(lr=1e-4)

model.compile(optimizer='adam',

loss='categorical_crossentropy',

metrics=['accuracy'])

history = model.fit(X_train, Y_train, epochs=10, batch_size=32, verbose=2, validation_data=(X_test, Y_test))

# loss, accuracy = score = model.evaluate(X_test, Y_test)

# print('test loss', loss)

# print('test accuracy', accuracy)

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'b', label='Training accuracy')

plt.plot(epochs, val_acc, 'r', label='validation accuracy')

plt.title('Training and validation accuracy')

plt.legend(loc='lower right')

plt.figure()

plt.plot(epochs, loss, 'b', label='Training loss')

plt.plot(epochs, val_loss, 'r', label='validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()