Scraping the Weibo Hot Search List with a Python Crawler

Environment

Python 3.7.4

bs4==0.0.1

xlwt==1.3.0

urllib3==1.24.2

re, urllib (standard library)

Initialization

def __init__(self):
    # Each pattern is expected to capture two groups: (relative URL, title)
    self.hotSearchPattern = re.compile(r'(.*?)')
    self.advertisementPattern = re.compile(r'(.*?)')
    self.url = 'https://s.weibo.com/top/summary'
    self.prefixUrl = 'https://s.weibo.com'
    self.headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.61 Safari/537.36"
    }

Fetching the page

def crawlPage(self, url):
    # Send the request with a browser-like User-Agent header
    request = urllib.request.Request(headers=self.headers, url=url)
    page = None
    try:
        response = urllib.request.urlopen(request)
        page = response.read().decode('utf-8')
    except urllib.error.URLError as e:
        # page stays None when the request fails
        print("Get page fail!")
        print(e)
    return page
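The request above depends on sending a browser-like User-Agent header. As a minimal sketch of the same idea outside the class, assuming the hypothetical helper names `build_request` and `fetch` and an added `timeout` argument that the original code does not use:

```python
import urllib.error
import urllib.request

# Hypothetical default; any browser-like User-Agent string could be used here.
DEFAULT_USER_AGENT = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"


def build_request(url, user_agent=DEFAULT_USER_AGENT):
    """Build a Request that carries a browser-like User-Agent header."""
    return urllib.request.Request(url=url, headers={"User-Agent": user_agent})


def fetch(url, timeout=10):
    """Return the decoded page, or None if urlopen raises URLError."""
    try:
        with urllib.request.urlopen(build_request(url), timeout=timeout) as response:
            return response.read().decode("utf-8")
    except urllib.error.URLError as e:
        print("Get page fail!")
        print(e)
        return None
```

Calling `fetch('https://s.weibo.com/top/summary')` would then return the page text or `None`, mirroring what `crawlPage` does.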

Extracting the data

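def extractData(self):
    page = self.crawlPage(self.url)
    hotSearchList = []
    if page is None:
        # Nothing to parse if the request failed
        return hotSearchList
    beautifulSoup = BeautifulSoup(page, 'html.parser')
    for tag in beautifulSoup.find_all('td', class_='td-02'):
        tag = str(tag)
        item = re.findall(self.hotSearchPattern, tag)
        if len(item) == 0:
            # Fall back to the advertisement pattern
            item = re.findall(self.advertisementPattern, tag)
        if len(item) == 0:
            # Skip cells that match neither pattern
            continue
        # item[0] is (relative URL, title); store [title, absolute URL]
        hotSearchList.append([item[0][1], self.prefixUrl + item[0][0]])
    return hotSearchList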
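When a pattern has two capture groups, `re.findall` returns a list of tuples, which is why the loop reads `item[0][0]` as the link and `item[0][1]` as the title. The sketch below illustrates this on a made-up cell. The sample markup and the pattern are assumptions for illustration only and are not taken from Weibo's actual page.

```python
import re

# Hypothetical pattern with two groups: (relative URL, title).
link_pattern = re.compile(r'<a href="(.*?)"[^>]*>(.*?)</a>')

# Made-up table cell, shaped like a td with class "td-02".
sample_cell = '<td class="td-02"><a href="/weibo?q=example" target="_blank">Example topic</a></td>'

prefix_url = 'https://s.weibo.com'

item = re.findall(link_pattern, sample_cell)
# findall yields one tuple per match, with one entry per capture group.
record = [item[0][1], prefix_url + item[0][0]]
print(record)
```

The resulting `record` has the same `[title, absolute URL]` shape as the entries appended to `hotSearchList`.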

Exporting to an XLS file

class Exporter():
    def __init__(self):
        self.workbook = xlwt.Workbook(encoding='utf-8')

    def exportXLS(self, columns, data, path, sheetName):
        if len(data) == 0:
            print("Please get data first!")
            return
        sheet = self.workbook.add_sheet(sheetName)
        # Row 0 holds the column labels
        for column in range(0, len(columns)):
            sheet.write(0, column, columns[column])
        # Data rows start at row 1
        for i in range(1, len(data) + 1):
            for column in range(0, len(columns)):
                sheet.write(i, column, data[i - 1][column])
        self.workbook.save(path)
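The two loops in `exportXLS` put the labels in row 0 and each data record in the rows that follow. A small sketch of that indexing without xlwt, assuming a hypothetical helper `layout_cells` that only lists the (row, column, value) triples that would be written:

```python
def layout_cells(columns, data):
    """List the (row, column, value) triples in the order they would be written."""
    cells = []
    # Header row: one label per column in row 0.
    for column in range(len(columns)):
        cells.append((0, column, columns[column]))
    # Data rows: record i - 1 goes to sheet row i.
    for i in range(1, len(data) + 1):
        for column in range(len(columns)):
            cells.append((i, column, data[i - 1][column]))
    return cells


cells = layout_cells(['Word', 'URL'],
                     [['Example topic', 'https://s.weibo.com/weibo?q=example']])
for cell in cells:
    print(cell)
```

Each triple corresponds to one `sheet.write(row, column, value)` call in the exporter.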

Full code

import re
import urllib.error
import urllib.request

import xlwt
from bs4 import BeautifulSoup

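class Spider():
    '''
    Description:
        Spider program that crawls the Weibo hot search ranking list
    Attributes:
        hotSearchPattern, advertisementPattern: compiled patterns for the links
        url: the URL of the hot search page
        prefixUrl: the prefix added to relative links
        headers: the request headers
    '''

    def __init__(self):
        # Each pattern is expected to capture two groups: (relative URL, title)
        self.hotSearchPattern = re.compile(r'(.*?)')
        self.advertisementPattern = re.compile(r'(.*?)')
        self.url = 'https://s.weibo.com/top/summary'
        self.prefixUrl = 'https://s.weibo.com'
        self.headers = {
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.61 Safari/537.36"
        }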

    def crawlPage(self, url):
        '''
        Description:
            Crawl the page at the given URL
        Args:
            url: the URL of the page to fetch
        Returns:
            the decoded page, or None if the request fails
        '''
        request = urllib.request.Request(headers=self.headers, url=url)
        page = None
        try:
            response = urllib.request.urlopen(request)
            page = response.read().decode('utf-8')
        except urllib.error.URLError as e:
            print("Get page fail!")
            print(e)
        return page

    def extractData(self):
        '''
        Description:
            Extract data from the hot search page
        Args:
            None
        Returns:
            list of [title, URL] pairs extracted from the page
        '''
        page = self.crawlPage(self.url)
        hotSearchList = []
        if page is None:
            # Nothing to parse if the request failed
            return hotSearchList
        beautifulSoup = BeautifulSoup(page, 'html.parser')
        for tag in beautifulSoup.find_all('td', class_='td-02'):
            tag = str(tag)
            item = re.findall(self.hotSearchPattern, tag)
            if len(item) == 0:
                # Fall back to the advertisement pattern
                item = re.findall(self.advertisementPattern, tag)
            if len(item) == 0:
                # Skip cells that match neither pattern
                continue
            # item[0] is (relative URL, title); store [title, absolute URL]
            hotSearchList.append([item[0][1], self.prefixUrl + item[0][0]])
        return hotSearchList

class Exporter():
    '''
    Description:
        Export column labels and a list of rows to an XLS file
    Attributes:
        workbook: the xlwt workbook that is written to
    '''

    def __init__(self):
        self.workbook = xlwt.Workbook(encoding='utf-8')

    def exportXLS(self, columns, data, path, sheetName):
        '''
        Description:
            Export column labels and a list of rows to an XLS file
        Args:
            columns: the label of each column
            data: the list of data rows
            path: the path where the XLS file is saved
            sheetName: the name of the sheet created in the XLS file
        Returns:
            None
        '''
        if len(data) == 0:
            print("Please get data first!")
            return
        sheet = self.workbook.add_sheet(sheetName)
        # Row 0 holds the column labels
        for column in range(0, len(columns)):
            sheet.write(0, column, columns[column])
        # Data rows start at row 1
        for i in range(1, len(data) + 1):
            for column in range(0, len(columns)):
                sheet.write(i, column, data[i - 1][column])
        self.workbook.save(path)

if __name__ == "__main__":
    spider = Spider()
    hotSearchList = spider.extractData()
    for hotSearch in hotSearchList:
        print("%s %s" % (hotSearch[0], hotSearch[1]))

    columns = ['Word', 'URL']
    sheetName = 'HotSearch'
    path = './HotSearch.xls'
    exporter = Exporter()
    exporter.exportXLS(columns, hotSearchList, path, sheetName)

Output

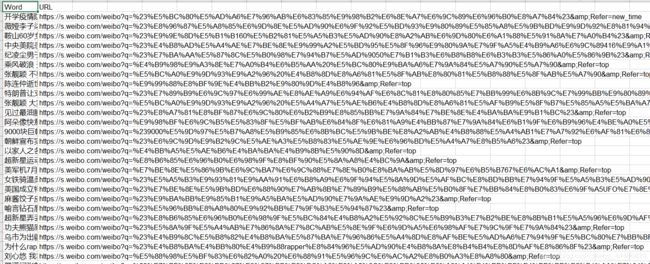

XLS file result

Closing notes

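- My knowledge is limited, so there are bound to be oversights. Readers are welcome to point out mistakes at any time, so that no one is misled.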