【Kafka】Kafka Streams: simulating a real-time stock-market dashboard

Contents


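- Kafka producer: simulating stock trade prices

- Computing per-stock price statistics with Kafka Streams

- Storing the real-time data in Redis for throughput

- Rendering the live chart with ECharts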

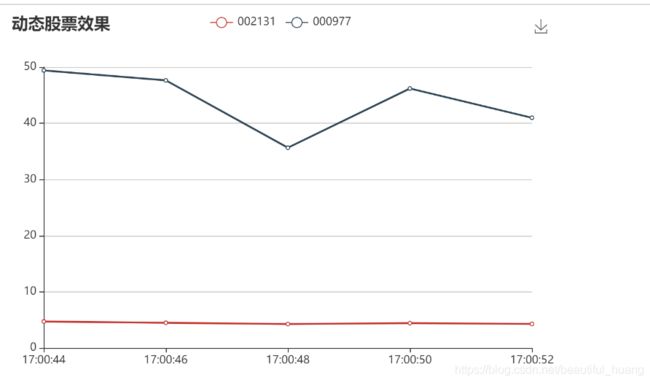

- 效果图

Kafka producer: simulating stock trade prices

package com.njbdqn.services;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerConfig;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.common.serialization.FloatSerializer;

import org.apache.kafka.common.serialization.StringSerializer;

import java.util.Properties;

import java.util.Random;

/**

* @Author: Stephen

* @Date: 2020/3/3 15:51

* @Content: Simulates stock-market trade prices

*/

public class SendMsg {

public static void main(String[] args) throws InterruptedException {

Properties prop = new Properties();

prop.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.56.122:9092");

prop.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class);

prop.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, FloatSerializer.class);

Random rand = new Random();

KafkaProducer<String,Float> prod = new KafkaProducer<String,Float>(prop);

while (true){

for (int i=0;i<50;i++){

float lc = 30+20*rand.nextFloat();

float lou = 4+rand.nextFloat();

// Simulated trade price for Liou (002131), roughly 4 to 5

ProducerRecord<String, Float> lousend = new ProducerRecord<String, Float>("play01", "002131", lou);

// Simulated trade price for Inspur (000977), roughly 30 to 50

ProducerRecord<String, Float> lcsend = new ProducerRecord<String, Float>("play01", "000977", lc);

prod.send(lcsend);

Thread.sleep(250);

prod.send(lousend);

Thread.sleep(250);

}

prod.flush();

}

}

}
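SendMsg needs a reachable broker before it does anything. As a broker-free sketch (an illustration, not part of the original project), the class below replays the same schedule in plain Java: one Inspur (000977) price and then one Liou (002131) price per loop iteration, 250 ms apart, drawn from the same `Random` ranges. `TickSimulator` and `Tick` are hypothetical names, and the pauses are recorded as offsets instead of being slept.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

// Broker-free sketch of SendMsg's message schedule. TickSimulator and Tick are
// hypothetical names; nothing here talks to Kafka.
public class TickSimulator {

    // One simulated trade: stock code, price, and offset (ms) from the first send.
    public static final class Tick {
        public final String code;
        public final float price;
        public final long offsetMs;

        public Tick(String code, float price, long offsetMs) {
            this.code = code;
            this.price = price;
            this.offsetMs = offsetMs;
        }

        @Override
        public String toString() {
            return code + " @" + offsetMs + "ms: " + price;
        }
    }

    // Produces `iterations` pairs of ticks with the same price ranges and ordering as
    // SendMsg, but records the 250 ms pauses as offsets instead of sleeping.
    public static List<Tick> generate(int iterations, long seed) {
        Random rand = new Random(seed);
        List<Tick> ticks = new ArrayList<>();
        long t = 0;
        for (int i = 0; i < iterations; i++) {
            float lc = 30 + 20 * rand.nextFloat(); // Inspur (000977): about 30 to 50
            float lou = 4 + rand.nextFloat();      // Liou (002131): about 4 to 5
            ticks.add(new Tick("000977", lc, t));  // sent first
            t += 250;
            ticks.add(new Tick("002131", lou, t)); // sent 250 ms later
            t += 250;
        }
        return ticks;
    }

    public static void main(String[] args) {
        for (Tick tick : generate(3, 42L)) {
            System.out.println(tick);
        }
    }
}
```

Because each stock gets one record every 500 ms, a 2-second window in CountPrice should typically collect about four records per stock.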

Computing per-stock price statistics with Kafka Streams

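An entity class, Agg, holds each stock's aggregated price statistics (sum, average, count); serializer and deserializer classes for it follow.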

package com.njbdqn.servers;

/**

* @Author: Stephen

* @Date: 2020/3/4 11:42

* @Content:

*/

public class Agg {

private float sum=0;

private float avg=0;

private float count=0;

public float getSum() {

return sum;

}

public void setSum(float sum) {

this.sum = sum;

}

public float getAvg() {

return avg;

}

public void setAvg(float avg) {

this.avg = avg;

}

public float getCount() {

return count;

}

public void setCount(float count) {

this.count = count;

}

@Override

public String toString() {

return "Agg{" +

"sum=" + sum +

", avg=" + avg +

", count=" + count +

'}';

}

}

Implementing the Kafka Serializer and Deserializer interfaces for Agg

package com.njbdqn.servers;

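import com.fasterxml.jackson.core.JsonProcessingException;

import com.fasterxml.jackson.databind.ObjectMapper;

import org.apache.kafka.common.errors.SerializationException;

import org.apache.kafka.common.serialization.Serializer;

import java.util.Map;

/**

* @Author: Stephen

* @Date: 2020/3/4 11:43

* @Content:

*/

public class AggSeriallizer implements Serializer<Agg> {

// ObjectMapper is thread-safe once configured, so one instance is reused for every record

private final ObjectMapper mapper = new ObjectMapper();

@Override

public void configure(Map<String, ?> map, boolean b) {

}

@Override

public byte[] serialize(String s, Agg agg) {

// Kafka passes null for tombstones; keep null as null

if (agg == null) {

return null;

}

try {

return mapper.writeValueAsBytes(agg);

} catch (JsonProcessingException e) {

// Fail loudly instead of silently writing a null value

throw new SerializationException("Failed to serialize Agg", e);

}

}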

@Override

public void close() {

}

}

package com.njbdqn.servers;

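import com.fasterxml.jackson.databind.ObjectMapper;

import org.apache.kafka.common.errors.SerializationException;

import org.apache.kafka.common.serialization.Deserializer;

import java.io.IOException;

import java.util.Map;

/**

* @Author: Stephen

* @Date: 2020/3/4 11:47

* @Content:

*/

public class AggDeseriallizer implements Deserializer<Agg> {

// Reuse one thread-safe ObjectMapper instead of creating one per record

private final ObjectMapper om = new ObjectMapper();

@Override

public void configure(Map<String, ?> map, boolean b) {

}

@Override

public Agg deserialize(String s, byte[] bytes) {

// Null bytes (tombstones) map to a null Agg

if (bytes == null) {

return null;

}

try {

return om.readValue(bytes, Agg.class);

} catch (IOException e) {

// Fail loudly instead of handing a null aggregate to the Aggregator

throw new SerializationException("Failed to deserialize Agg", e);

}

}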

@Override

public void close() {

}

}
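Agg keeps `sum` and `count` alongside `avg` so the average can be updated one record at a time without storing past prices. The dependency-free sketch below mirrors the three steps that the Aggregator in CountPrice applies to Agg; `RunningAgg` is a hypothetical stand-in with the same three float fields, so the example compiles without Kafka or Jackson.

```java
// Dependency-free sketch of the per-record update CountPrice's Aggregator applies to Agg.
// RunningAgg is a hypothetical stand-in with the same three float fields.
public class RunningAgg {
    private float sum = 0;
    private float count = 0;
    private float avg = 0;

    // Same three steps as the Aggregator: add to sum, increment count, recompute avg.
    public RunningAgg add(float newValue) {
        sum += newValue;
        count += 1;
        avg = sum / count;
        return this;
    }

    public float getSum() { return sum; }
    public float getCount() { return count; }
    public float getAvg() { return avg; }

    public static void main(String[] args) {
        RunningAgg agg = new RunningAgg();
        for (float price : new float[] {4.0f, 5.0f, 6.0f}) {
            agg.add(price);
            System.out.println("sum=" + agg.getSum() + " count=" + agg.getCount() + " avg=" + agg.getAvg());
        }
    }
}
```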

The stream job that computes each stock's price statistics

package com.njbdqn.servers;

import com.fasterxml.jackson.core.JsonProcessingException;

import com.fasterxml.jackson.databind.ObjectMapper;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.common.serialization.Serdes;

import org.apache.kafka.common.utils.Bytes;

import org.apache.kafka.streams.KafkaStreams;

import org.apache.kafka.streams.StreamsBuilder;

import org.apache.kafka.streams.StreamsConfig;

import org.apache.kafka.streams.Topology;

import org.apache.kafka.streams.kstream.*;

import org.apache.kafka.streams.state.WindowStore;

import redis.clients.jedis.Jedis;

import java.time.Duration;

import java.util.Properties;

import java.util.concurrent.CountDownLatch;

/**

* @Author: Stephen

* @Date: 2020/3/3 16:27

* @Content: Computes per-stock price statistics with Kafka Streams

*/

public class CountPrice {

public static void main(String[] args) {

Properties prop = new Properties();

prop.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.56.122:9092");

prop.put(StreamsConfig.APPLICATION_ID_CONFIG,"play2");

prop.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());

prop.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG,Serdes.Float().getClass());

prop.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG,"earliest");

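// Uncomment to disable record caching, so every aggregate update is forwarded downstream immediately

// prop.put(StreamsConfig.CACHE_MAX_BYTES_BUFFERING_CONFIG,0);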

final StreamsBuilder builder = new StreamsBuilder();

// Build the processing topology

KStream<String,Float> play01 = builder.stream("play01");

KTable<Windowed<String>,Agg> tab = play01.groupByKey()

.windowedBy(TimeWindows.of(Duration.ofSeconds(2)))

.aggregate(

new Initializer<Agg>() {

@Override

public Agg apply() {

return new Agg();

}

},

new Aggregator<String, Float, Agg>() {

@Override

public Agg apply(String key, Float newValue, Agg aggValue) {

// System.out.println("Key: "+key+"..... new value: "+newValue+"..... previous aggregate: "+aggValue);

float cnum = aggValue.getSum()+newValue;

aggValue.setSum(cnum);

aggValue.setCount(aggValue.getCount()+1);

aggValue.setAvg(cnum/(aggValue.getCount()));

return aggValue;

}

},

Materialized.<String,Agg, WindowStore<Bytes,byte[]>>as("tmp-stream-store")

.withValueSerde(Serdes.serdeFrom(new AggSeriallizer(),new AggDeseriallizer()))

);

// A single Jedis connection is not thread-safe; this works with the default single stream thread

final Jedis jedis = new Jedis("192.168.56.122");

final ObjectMapper om = new ObjectMapper();

tab.toStream().foreach((k,v)->

{

try {

// Append one JSON record per window update to the Redis list gp2; timestamp is the window end (epoch ms)

jedis.rpush("gp2","{\"shareid\":\""+k.key()+"\",\"infos\":"+om.writeValueAsString(v)

+",\"timestamp\":\""+k.window().end()+"\"}");

} catch (JsonProcessingException e) {

e.printStackTrace();

}

//System.out.println(k.toString().replaceAll("",""));

}

);

final Topology topo = builder.build();

final KafkaStreams streams = new KafkaStreams(topo, prop);

final CountDownLatch latch = new CountDownLatch(1);

Runtime.getRuntime().addShutdownHook(new Thread("hw"){

@Override

public void run() {

streams.close();

latch.countDown();

}

});

try {

streams.start();

latch.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}
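`TimeWindows.of(Duration.ofSeconds(2))` defines tumbling windows: non-overlapping 2-second buckets aligned to the epoch, chosen by each record's timestamp. The in-memory sketch below shows that bucketing and the per-window sum and count. `TumblingWindowDemo` is a hypothetical name, a `TreeMap` stands in for the `tmp-stream-store` window store, and non-negative timestamps are assumed. The timestamp the stream job writes to Redis corresponds to `windowEnd` here.

```java
import java.util.Map;
import java.util.TreeMap;

// In-memory sketch of 2-second tumbling windows keyed by (stock code, window start).
// TumblingWindowDemo is a hypothetical name; Kafka Streams keeps the equivalent state
// in the window store named tmp-stream-store instead of a TreeMap.
public class TumblingWindowDemo {
    static final long WINDOW_MS = 2_000L; // matches Duration.ofSeconds(2)

    // Epoch-aligned window start (assumes timestampMs >= 0): each timestamp lands in one window.
    static long windowStart(long timestampMs) {
        return timestampMs - (timestampMs % WINDOW_MS);
    }

    // Exclusive end of the window containing timestampMs.
    static long windowEnd(long timestampMs) {
        return windowStart(timestampMs) + WINDOW_MS;
    }

    // Returns "code@windowStart" -> {sum, count}; the average is sum / count.
    static Map<String, float[]> aggregate(String[] codes, long[] timestampsMs, float[] prices) {
        Map<String, float[]> windows = new TreeMap<>();
        for (int i = 0; i < codes.length; i++) {
            String key = codes[i] + "@" + windowStart(timestampsMs[i]);
            float[] acc = windows.computeIfAbsent(key, k -> new float[2]);
            acc[0] += prices[i];
            acc[1] += 1;
        }
        return windows;
    }

    public static void main(String[] args) {
        String[] codes = {"000977", "000977", "000977"};
        long[] ts = {1583300000100L, 1583300001900L, 1583300002000L};
        float[] prices = {30f, 40f, 50f};
        aggregate(codes, ts, prices).forEach((key, acc) ->
                System.out.println(key + " avg=" + acc[0] / acc[1]));
    }
}
```

Note that `toStream()` forwards every update of a window, not only its final value, so gp2 can hold several entries for the same window; how many depends on record caching and the commit interval.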

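Storing the real-time data in Redis for throughput

The stream job above appends every window result to the Redis list gp2. The Spring Boot service below exposes slices of that list over HTTP at /data so the chart page can poll it.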

package com.njbdqn.mykafkatoech.controller;

import com.njbdqn.mykafkatoech.services.ReadService;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.web.bind.annotation.CrossOrigin;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RequestParam;

import org.springframework.web.bind.annotation.RestController;

import java.util.List;

/**

* @Author: Stephen

* @Date: 2020/3/4 17:42

* @Content:

*/

@RestController

@CrossOrigin("*")

public class InitCtrl {

@Autowired

private ReadService readService;

// GET /data?begin=...&size=... returns `size` entries of gp2 starting at index `begin`

@RequestMapping("/data")

public List<String> findData(@RequestParam("begin") int begin, @RequestParam("size") int size){

return readService.getData(begin,size);

}

}

package com.njbdqn.mykafkatoech.services;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.data.redis.core.StringRedisTemplate;

import org.springframework.stereotype.Service;

import java.util.List;

/**

* @Author: Stephen

* @Date: 2020/3/4 17:32

* @Content:

*/

@Service

public class ReadService {

@Autowired

private StringRedisTemplate temp;

public List<String> getData(int begin, int count){

// LRANGE's stop index is inclusive, so the last index is begin + count - 1

int over = begin+count-1;

// Fetch that slice of the Redis list gp2

return temp.opsForList().range("gp2",begin,over);

}

}

package com.njbdqn.mykafkatoech;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication

public class MykafkatoechApplication {

public static void main(String[] args) {

SpringApplication.run(MykafkatoechApplication.class, args);

}

}
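`opsForList().range(key, start, end)` issues Redis `LRANGE`, whose stop index is inclusive; that is why ReadService computes `over = begin + count - 1`. Out-of-range indexes do not raise errors: a stop past the tail is treated as the last element, and a start past the tail returns an empty list. The sketch below emulates those rules for non-negative indexes only; `LrangeDemo` is a hypothetical name, and real LRANGE also accepts negative indexes counted from the tail.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Pure-JDK emulation of Redis LRANGE for non-negative indexes only; LrangeDemo is a
// hypothetical name. Real LRANGE also accepts negative indexes counted from the tail.
public class LrangeDemo {

    // Inclusive [start, stop]; a stop past the tail is clamped, a start past it yields [].
    static List<String> lrange(List<String> list, int start, int stop) {
        if (start < 0 || stop < 0) {
            throw new IllegalArgumentException("negative indexes are not modeled in this sketch");
        }
        List<String> out = new ArrayList<>();
        int last = Math.min(stop, list.size() - 1);
        for (int i = start; i <= last; i++) {
            out.add(list.get(i));
        }
        return out;
    }

    // Same arithmetic as ReadService.getData: `count` elements starting at `begin`.
    static List<String> getData(List<String> list, int begin, int count) {
        int over = begin + count - 1;
        return lrange(list, begin, over);
    }

    public static void main(String[] args) {
        List<String> gp2 = Arrays.asList("r0", "r1", "r2", "r3", "r4");
        System.out.println(getData(gp2, 1, 3));  // [r1, r2, r3]
        System.out.println(getData(gp2, 3, 10)); // [r3, r4] (stop clamped to the tail)
        System.out.println(getData(gp2, 7, 2));  // [] (start past the tail)
    }
}
```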

Rendering the live chart with ECharts

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<title>ECharts</title>

<!-- Load jQuery and echarts.js -->

<script src="js/jquery.min.js"></script>

<script src="js/echarts.min.js"></script>

</head>

<body>

<!-- A DOM container with an explicit width and height for ECharts -->

<div id="main" style="width: 600px;height:400px;"></div>

<script type="text/javascript">

// Initialize the ECharts instance on the prepared DOM element

var myChart = echarts.init(document.getElementById('main'));

// Chart options; the x-axis and series data start empty and are filled in by loadData

var option = {

title: {

text: 'Live stock prices'

},

tooltip: {

trigger: 'axis'

},

legend: {

data: ['002131','000977']

},

grid: {

left: '3%',

right: '4%',

bottom: '3%',

containLabel: true

},

toolbox: {

feature: {

saveAsImage: {

}

}

},

xAxis: {

type: 'category',

boundaryGap: false,

data: []

},

yAxis: {

type: 'value'

},

series: [

{

name: '002131',

type: 'line',


data: []

},

{

name: '000977',

type: 'line',


data: []

}

]

};

// Render the chart with the options above

myChart.setOption(option);

// Global cursor: the Redis list index where the next request starts

var start =1;

var loadData = function(){

// 调用ajax

$.ajax({

url:'http://localhost:8080/data',

data:{

"begin":start,"size":10},

type:'get',

dataType:'JSON',

success:function (res) {

console.log(res);

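// Walk the returned array of JSON strings

// x-axis labels

var x1 =[];

// Series data: series1 for 000977, series2 for 002131

var series1=[];

var series2=[];

for (var i = 0; i < res.length; i++){

// Parse the JSON string into an object

var gp = JSON.parse(res[i]);

// 000977 records feed series1 and also supply the x-axis labels

if (gp.shareid=="000977"){

// Format the window timestamp as HH:mm:ss for the x-axis

x1.push(dateFormat(gp.timestamp));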

series1.push(gp.infos.avg);

}else{

series2.push(gp.infos.avg);

}

}

console.log(series1.length,series2.length,x1.length)

// Push the filled arrays into the chart

myChart.setOption({

xAxis:{

data:x1.reverse()},

series:[

{

name: '000977',

data: series1.reverse()

},

{

name: '002131',

data: series2.reverse()

}

]

})

}

})

start+=2;

}

var dateFormat = function(ctime){

// ctime is the epoch-millisecond timestamp, sent as a string

var d = new Date(Number(ctime));

var hour = d.getHours()<10?"0"+d.getHours():d.getHours();

var mins = d.getMinutes()<10?"0"+d.getMinutes():d.getMinutes();

var sec = d.getSeconds()<10?"0"+d.getSeconds():d.getSeconds();

return hour +":"+mins+":"+sec;

}

setInterval(function(){

loadData();

},3000);

</script>

</body>

</html>
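Every 3 seconds loadData asks for 10 entries of gp2 starting at `start`, then advances `start` by 2, so consecutive requests overlap by 8 entries and the chart shifts forward by two records per tick. The Java sketch below (kept in Java to match the rest of the post; `DashboardPollingDemo` is a hypothetical name) computes those request ranges and mirrors dateFormat. Unlike dateFormat, which uses the browser's local time zone, it takes the zone as a parameter.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

// Sketch of the page's polling arithmetic and time formatting.
// DashboardPollingDemo is a hypothetical name.
public class DashboardPollingDemo {
    static final int PAGE_SIZE = 10; // "size":10 in loadData
    static final int STEP = 2;       // start += 2 after every request

    // Inclusive Redis index range requested by the n-th poll (n = 0, 1, 2, ...).
    static int[] pollRange(int initialStart, int n) {
        int begin = initialStart + STEP * n;
        return new int[] {begin, begin + PAGE_SIZE - 1};
    }

    // Counterpart of dateFormat: epoch milliseconds -> "HH:mm:ss" in the given zone.
    static String formatTime(long epochMillis, ZoneId zone) {
        return DateTimeFormatter.ofPattern("HH:mm:ss")
                .withZone(zone)
                .format(Instant.ofEpochMilli(epochMillis));
    }

    public static void main(String[] args) {
        for (int n = 0; n < 3; n++) {
            int[] r = pollRange(1, n);
            System.out.println("poll " + n + ": indexes " + r[0] + ".." + r[1]);
        }
        System.out.println(formatTime(1583300002000L, ZoneId.of("UTC")));
    }
}
```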