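Ingress controller installation summary

This post summarizes my experience setting up ingress-nginx, the ingress controller recommended by the Kubernetes project, in a bare-metal environment. My Kubernetes cluster runs on private servers, and I also needed an IP address (rather than a domain name) as the external entry point. I searched through a lot of material online without finding anything satisfactory, and in the end I got it working by following the official guide. So here I summarize the bare-metal installation guide from the official site (reading the English docs was hard work, so I am writing it down, haha). Corrections are welcome if anything here is wrong.

Let's get started.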

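Ingress controllers

There are many kinds of ingress controllers. I tried two of them: Traefik and ingress-nginx, the controller recommended by the Kubernetes project. Both are among the more popular ingress controllers today. Traefik is gaining popularity because it talks to the Kubernetes API in real time, integrates directly with common microservice systems, and can reconfigure itself automatically and dynamically.

After installing both, I found that with Traefik I could only set up a unified entry point to Kubernetes services through a domain name (possibly because of its rule-based routing), whereas the NGINX controller supports a unified entry point by either domain name or IP.

Note: ingress-nginx is the ingress controller provided by the Kubernetes project, while nginx-ingress is the one provided by NGINX itself. Both support a domain name or an IP as the unified entry point for Kubernetes services; only the Ingress configuration differs somewhat.

Because I need to reach services in Kubernetes by IP, I chose the Kubernetes project's ingress-nginx controller. The rest of this post covers its installation.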

Note: The default configuration watches Ingress objects in all namespaces. The --watch-namespace flag restricts the watch scope to one specific namespace.
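As a rough sketch of that restriction, the fragment below adds --watch-namespace to the controller container's args. The namespace name test is an assumption borrowed from the Ingress example at the end of this post; the other args are the ones from my deploy.yaml, and this is a fragment rather than a complete manifest.

```yaml
# Fragment of the ingress-nginx-controller Deployment (not a complete manifest).
# Assumption: only Ingress objects in the "test" namespace should be watched.
spec:
  template:
    spec:
      containers:
        - name: controller
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --ingress-class=nginx
            - --configmap=ingress-nginx/ingress-nginx-controller
            - --watch-namespace=test   # limit the watch scope to one namespace
            # keep the remaining args from deploy.yaml unchanged
```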

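Warning: If multiple Ingress objects define different paths for the same host, the ingress controller merges those definitions.

Danger: The admission webhook requires connectivity between the Kubernetes API server and the ingress controller. If you use network policies or additional firewalls, please allow access to port 8443.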
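If the cluster enforces NetworkPolicy, a policy along the following lines could open the webhook port. This is only a sketch: the policy name is hypothetical, the labels are the ones from my deploy.yaml, and ports 80 and 443 are listed as well because a policy that selects the controller Pods would otherwise block regular traffic to them.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-ingress-nginx-traffic   # hypothetical name
  namespace: ingress-nginx
spec:
  # Select the controller Pods by the labels used in deploy.yaml.
  podSelector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/component: controller
  policyTypes:
    - Ingress
  ingress:
    # No "from" clause: traffic from any source is allowed on these ports.
    - ports:
        - protocol: TCP
          port: 8443   # admission webhook, called by the API server
        - protocol: TCP
          port: 80     # HTTP traffic
        - protocol: TCP
          port: 443    # HTTPS traffic
```

A host-level firewall is a separate matter and would need its own rule for port 8443.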

References: the Overview and Get started sections of https://github.com/kubernetes/ingress-nginx

https://kubernetes.github.io/ingress-nginx/deploy/ (ingress-nginx installation guide)

https://kubernetes.github.io/ingress-nginx/deploy/baremetal/

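Because the cluster runs on my own servers, I followed the bare-metal installation method (the official site covers the other methods, so I will not repeat them here). If the cluster were hosted on a cloud such as GKE or AWS, the ingress controller could simply use a load balancer IP address as the unified entry point, and the cloud provider would allocate that IP automatically. On your own servers, you have to follow the Bare-metal considerations section to set up the load balancer IP yourself.

The bare-metal installation uses NodePort by default, that is, the Service type of the ingress controller is set to NodePort.

Installation steps: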

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/baremetal/deploy.yaml

Below is my modified deploy.yaml:

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
---
# Source: ingress-nginx/templates/controller-serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:

---

# Source: ingress-nginx/templates/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - update
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - extensions
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
---
# Source: ingress-nginx/templates/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - configmaps
      - pods
      - secrets
      - endpoints
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - update
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - configmaps
    resourceNames:
      - ingress-controller-leader-nginx
    verbs:
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ''
    resources:
      - endpoints
    verbs:
      - create
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
---
# Source: ingress-nginx/templates/controller-rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-service-webhook.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  type: ClusterIP
  ports:
    - name: https-webhook
      port: 443
      targetPort: webhook
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  # externalTrafficPolicy: Local
  # type: LoadBalancer
  # loadBalancerIP: 10.245.5.2
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
      nodePort: 30278
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
      nodePort: 30579
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  # replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      dnsPolicy: ClusterFirstWithHostNet
      containers:
        - name: controller
          image: xx.xx.xx.xx/kubernetes/ingress/nginx-ingress-controller:0.32.0
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --ingress-class=nginx
            - --configmap=ingress-nginx/ingress-nginx-controller
            # - --publish-service=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            timeoutSeconds: 1
            successThreshold: 1
            failureThreshold: 5
          readinessProbe:
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            timeoutSeconds: 1
            successThreshold: 1
            failureThreshold: 3
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
              # hostPort: 80
            - name: https
              containerPort: 443
              protocol: TCP
              # hostPort: 443
            - name: webhook
              containerPort: 8443
              protocol: TCP
              # hostPort: 8443
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission

---

# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml
# before changing this value, check the required kubernetes version
# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites
apiVersion: admissionregistration.k8s.io/v1beta1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  name: ingress-nginx-admission
webhooks:
  - name: validate.nginx.ingress.kubernetes.io
    rules:
      - apiGroups:
          - networking.k8s.io
        apiVersions:
          - v1beta1
        operations:
          - CREATE
          - UPDATE
        resources:
          - ingresses
    failurePolicy: Fail
    sideEffects: None
    admissionReviewVersions:
      - v1
      - v1beta1
    clientConfig:
      service:
        namespace: ingress-nginx
        name: ingress-nginx-controller-admission
        path: /networking/v1beta1/ingresses

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - admissionregistration.k8s.io
    resources:
      - validatingwebhookconfigurations
    verbs:
      - get
      - update
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - secrets
    verbs:
      - get
      - create
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-create
  annotations:
    helm.sh/hook: pre-install,pre-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
spec:
  template:
    metadata:
      name: ingress-nginx-admission-create
      labels:
        helm.sh/chart: ingress-nginx-2.0.3
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 0.32.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: create
          image: xx.xx.xx.xx/kubernetes/ingress/kube-webhook-certgen:v1.2.0
          imagePullPolicy: IfNotPresent
          args:
            - create
            - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.ingress-nginx.svc
            - --namespace=ingress-nginx
            - --secret-name=ingress-nginx-admission
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-patch
  annotations:
    helm.sh/hook: post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-2.0.3
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.32.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
spec:
  template:
    metadata:
      name: ingress-nginx-admission-patch
      labels:
        helm.sh/chart: ingress-nginx-2.0.3
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 0.32.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: patch
          image: xx.xx.xx.xx/kubernetes/ingress/kube-webhook-certgen:v1.2.0
          imagePullPolicy: IfNotPresent
          args:
            - patch
            - --webhook-name=ingress-nginx-admission
            - --namespace=ingress-nginx
            - --patch-mutating=false
            - --secret-name=ingress-nginx-admission
            - --patch-failure-policy=Fail
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000

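In fact, once the ingress controller is deployed with the steps above, internal services can already be reached at master-IP:NodePort/ingress-path, but there is no load balancing yet.

In traditional cloud environments, where network load balancers are available on demand, a single Kubernetes manifest is enough to give external clients a single point of contact to the NGINX Ingress controller and, indirectly, to any application running inside the cluster. Bare-metal environments lack this commodity and need a slightly different setup to offer external consumers the same kind of access.

For Kubernetes clusters running on bare metal, the official documentation describes several recommended approaches to deploying the NGINX Ingress controller. They are covered one by one below.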

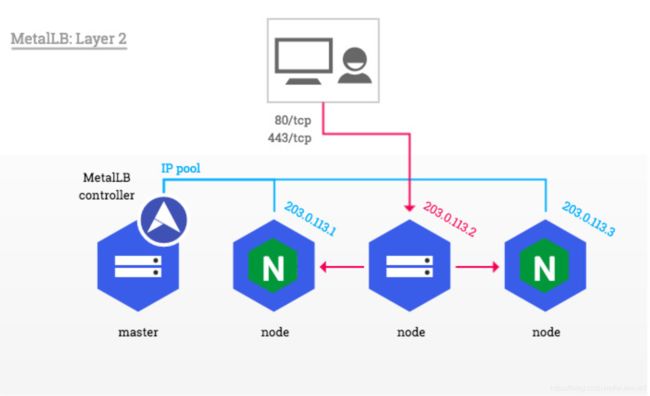

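1. A pure software solution: MetalLB

MetalLB provides a network load-balancer implementation for Kubernetes clusters that do not run on a supported cloud provider, effectively allowing the use of LoadBalancer Services within any cluster.

This section demonstrates how to use the Layer 2 configuration mode of MetalLB together with the NGINX Ingress controller in a Kubernetes cluster that has publicly accessible nodes. In this mode, one node attracts all the traffic for the ingress-nginx Service IP.

Note: the other supported configuration modes are not described here.

Warning: MetalLB was in beta at the time of writing.

MetalLB can be deployed with a simple Kubernetes manifest or with Helm. See the MetalLB site, https://metallb.universe.tf/installation/, for the installation details, which I will not repeat here.

MetalLB requires a pool of IP addresses so that it can take ownership of the ingress-nginx Service. The pool is defined in a ConfigMap named config, located in the same namespace as the MetalLB controller. The addresses in this pool must be dedicated to MetalLB: you cannot reuse the Kubernetes node IPs or IPs handed out by a DHCP server.

Example: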

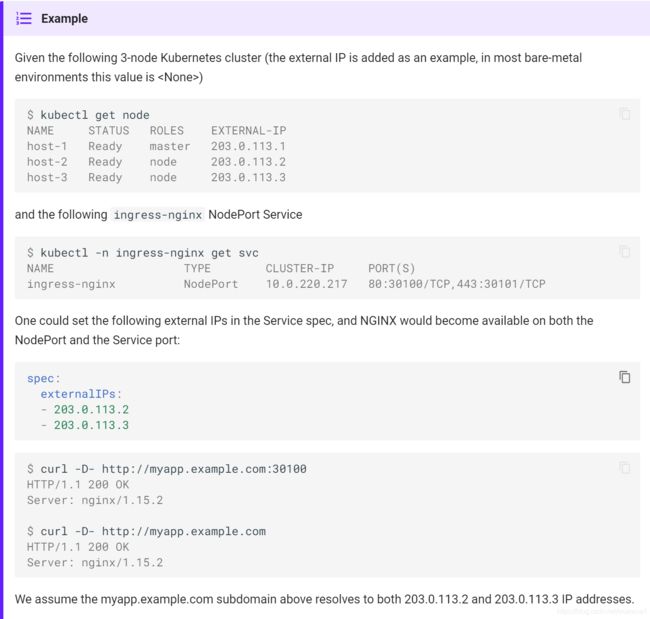

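Given the following 3-node Kubernetes cluster (the external IP is added as an example; in most bare-metal environments this value is <none>):

$ kubectl get node
NAME     STATUS   ROLES    EXTERNAL-IP
host-1   Ready    master   203.0.113.1
host-2   Ready    node     203.0.113.2
host-3   Ready    node     203.0.113.3

After the following ConfigMap is created, MetalLB takes ownership of one of the IP addresses in the pool and updates the loadBalancer IP field of the ingress-nginx Service accordingly.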

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 203.0.113.10-203.0.113.15

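$ kubectl -n ingress-nginx get svc
NAME                   TYPE           CLUSTER-IP     EXTERNAL-IP    PORT(S)
default-http-backend   ClusterIP      10.0.64.249    <none>         80/TCP
ingress-nginx          LoadBalancer   10.0.220.217   203.0.113.10   80:30100/TCP,443:30101/TCP

As soon as MetalLB sets the external IP address of the ingress-nginx LoadBalancer Service, the corresponding entries are created in the iptables NAT table, and the node holding the selected IP address starts responding to HTTP requests on the ports configured in the LoadBalancer Service.

Tip: To preserve the source IP address in HTTP requests sent to NGINX, the Local traffic policy must be used. Traffic policies are described in more detail at https://metallb.universe.tf/usage/#traffic-policies.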
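A minimal sketch of what the tip amounts to, assuming the Service name, namespace and selector labels from my deploy.yaml above, with the type switched to LoadBalancer so that MetalLB can assign the address:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: LoadBalancer
  # Local keeps the client source IP; only nodes that run a controller Pod
  # receive traffic for this Service.
  externalTrafficPolicy: Local
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
```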

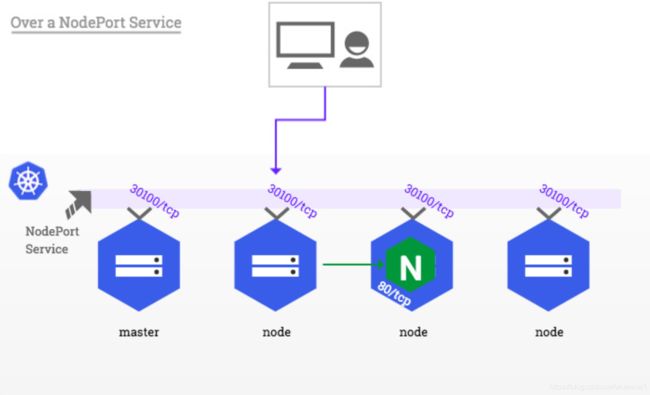

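2. Over a NodePort Service

Because of its simplicity, this is the setup a user gets by default when following the steps in the installation guide.

In this configuration, the NGINX container stays isolated from the host network, so it can safely bind to any port, including the standard HTTP ports 80 and 443. However, because of that container namespace isolation, a client outside the cluster network (for example on the public internet) cannot reach Ingress hosts directly on ports 80 and 443. Instead, the external client must append the NodePort allocated to the ingress-nginx Service to its HTTP requests.

Example:

Given the NodePort 30100 allocated to the ingress-nginx Service:

$ kubectl -n ingress-nginx get svc
NAME                   TYPE        CLUSTER-IP     PORT(S)
default-http-backend   ClusterIP   10.0.64.249    80/TCP
ingress-nginx          NodePort    10.0.220.217   80:30100/TCP,443:30101/TCP

and a cluster node with the public IP address 203.0.113.2 (the external IP is added as an example; in most bare-metal environments this value is <none>):

$ kubectl get node
NAME     STATUS   ROLES    EXTERNAL-IP
host-1   Ready    master   203.0.113.1
host-2   Ready    node     203.0.113.2
host-3   Ready    node     203.0.113.3

a client reaches an Ingress with host: myapp.example.com at http://myapp.example.com:30100, where the myapp.example.com subdomain resolves to the 203.0.113.2 IP address.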

---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

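Impact on the host system:

It may be tempting to reconfigure the NodePort range with the --service-node-port-range API server flag so that it covers low-numbered ports and can expose 80 and 443. Doing so may cause unexpected problems, including (but not limited to) the use of ports otherwise reserved for system daemons and the need to grant kube-proxy privileges it would otherwise not require. This practice is therefore discouraged.

This approach has a few other limitations: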

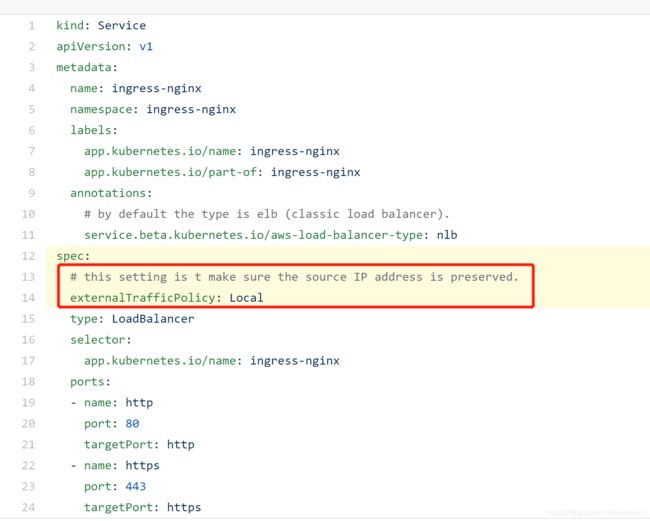

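(1) Source IP address

Services of type NodePort perform source address translation by default. From the perspective of NGINX, this means the source IP of an HTTP request is always the IP address of the Kubernetes node that received the request.

The recommended way to preserve the source IP in a NodePort setup is to set the externalTrafficPolicy field of the ingress-nginx Service spec to Local.

Note: This setting effectively drops packets sent to Kubernetes nodes that do not run any instance of the NGINX Ingress controller. Consider assigning the NGINX Pods to specific nodes (https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/) to control which nodes the NGINX Ingress controller should or should not be scheduled on.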
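A minimal sketch of both suggestions, assuming the Service and Deployment names from my deploy.yaml. The node label ingress-ready=true is a hypothetical label made up for illustration; the chosen nodes would have to be labelled with it first. Both documents are fragments that show only the fields being discussed.

```yaml
# Fragment 1: the controller Service preserves the client source IP.
# Ports and selector stay as in deploy.yaml.
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: NodePort
  externalTrafficPolicy: Local   # nodes without a controller Pod drop the traffic
---
# Fragment 2: pin the controller Pods to selected nodes.
# Assumption: those nodes carry the hypothetical label ingress-ready=true.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  template:
    spec:
      nodeSelector:
        kubernetes.io/os: linux
        ingress-ready: "true"
```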

Example:

Given the following 3-node Kubernetes cluster (the external IP is added as an example; in most bare-metal environments this value is <none>):

$ kubectl get node
NAME     STATUS   ROLES    EXTERNAL-IP
host-1   Ready    master   203.0.113.1
host-2   Ready    node     203.0.113.2
host-3   Ready    node     203.0.113.3

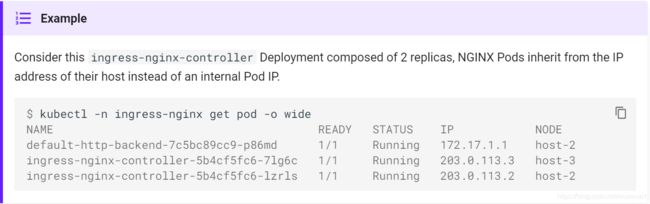

and an ingress-nginx-controller Deployment with 2 replicas:

$ kubectl -n ingress-nginx get pod -o wide
NAME                                       READY   STATUS    IP           NODE
default-http-backend-7c5bc89cc9-p86md      1/1     Running   172.17.1.1   host-2
ingress-nginx-controller-cf9ff8c96-8vvf8   1/1     Running   172.17.0.3   host-3
ingress-nginx-controller-cf9ff8c96-pxsds   1/1     Running   172.17.1.4   host-2

Requests sent to host-2 and host-3 are forwarded to NGINX and the original client IP is preserved, while requests sent to host-1 are dropped because no NGINX replica runs on that node.

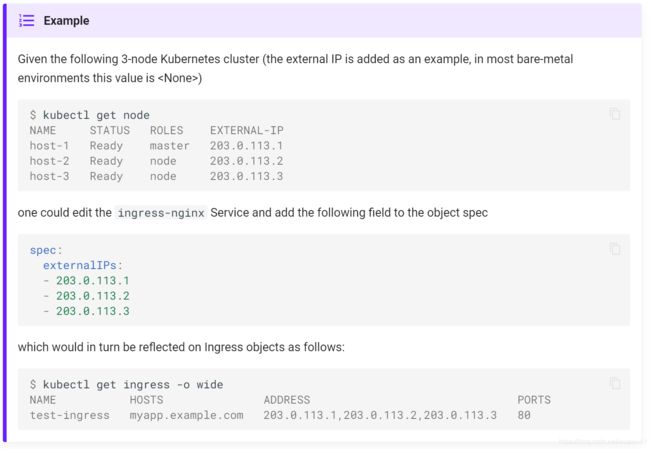

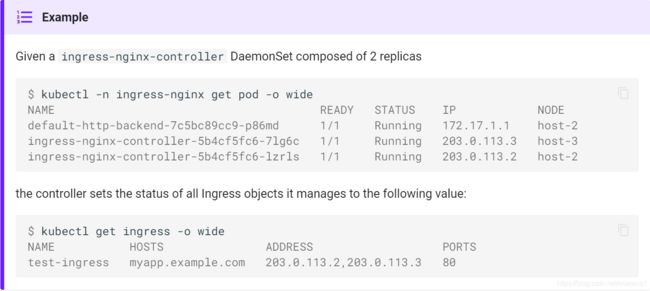

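(2) Ingress status

Because NodePort Services, by definition, do not get a LoadBalancerIP assigned, the NGINX Ingress controller cannot update the status of the Ingress objects it manages.

$ kubectl get ingress
NAME           HOSTS               ADDRESS   PORTS
test-ingress   myapp.example.com             80

Although no load balancer provides a public IP address to the NGINX Ingress controller, the status of all managed Ingress objects can still be forced to update by setting the externalIPs field of the ingress-nginx Service.

Note: Setting externalIPs does more than just let the NGINX Ingress controller update the status of Ingress objects. Read about this option on the Services page of the official Kubernetes documentation, as well as the External IPs section of this post, for more information.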
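As a sketch, the field could be set as below, using the example node addresses from the tables above. I am assuming the controller runs with --publish-service pointing at this Service (that flag is commented out in my deploy.yaml), since that is how the controller learns which Service to read the addresses from.

```yaml
# Fragment of the ingress-nginx-controller Service (ports and selector omitted).
spec:
  type: NodePort
  externalIPs:
    # Example node addresses from this post; replace with your own node IPs.
    - 203.0.113.1
    - 203.0.113.2
    - 203.0.113.3
```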

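(3) Redirects

Because NGINX is not aware of the port translation performed by the NodePort Service, backend applications are responsible for generating redirect URLs that take into account the URL used by external clients, including the NodePort.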

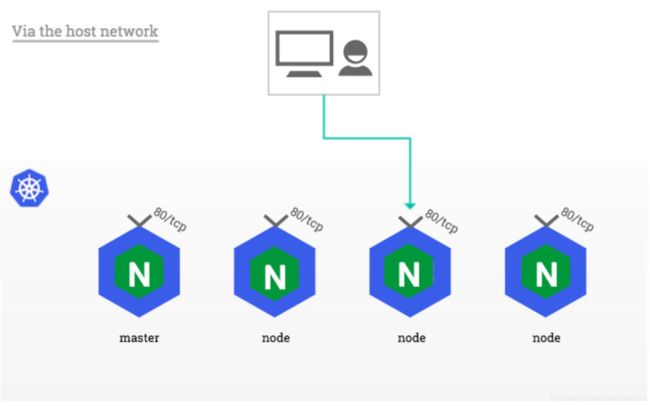

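3. Via the host network

In a setup where no external load balancer is available and NodePorts are not an option, the ingress-nginx Pods can be configured to use the network of the host they run on instead of a dedicated network namespace. The benefit of this approach is that the NGINX Ingress controller can bind ports 80 and 443 directly to the network interfaces of the Kubernetes nodes, without the extra network translation imposed by NodePort Services.

Note: This approach does not use any Service object to expose the NGINX Ingress controller. If such an ingress-nginx Service exists in the target cluster, it is recommended to delete it.

This is done by enabling the hostNetwork option in the Pod spec.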

template:
  spec:
    hostNetwork: true

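Security considerations:

Enabling this option exposes every system daemon to the NGINX Ingress controller on any network interface, including the host's loopback. Please evaluate carefully the impact this may have on the security of your system.

One major limitation of this deployment approach is that only a single NGINX Ingress controller Pod can be scheduled on each cluster node, because binding the same port several times on the same network interface is technically impossible. Pods that cannot be scheduled for this reason fail with the following event: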

$ kubectl -n ingress-nginx describe pod
...
Events:
  Type     Reason            From               Message
  ----     ------            ----               -------
  Warning  FailedScheduling  default-scheduler  0/3 nodes are available: 3 node(s) didn't have free ports for the requested pod ports.

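One way to ensure that only schedulable Pods are created is to deploy the NGINX Ingress controller as a DaemonSet instead of a traditional Deployment.

Info: A DaemonSet schedules exactly one Pod of a given type per cluster node, masters included, unless a node is configured to repel those Pods. See the DaemonSet documentation for more information.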
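A rough sketch of such a DaemonSet, assuming the labels, image, ServiceAccount and args from my deploy.yaml. The admission webhook flags, probes and resource requests are left out to keep it short, so treat this as a starting point rather than a drop-in replacement for the Deployment.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      hostNetwork: true                    # bind 80/443 directly on the node
      dnsPolicy: ClusterFirstWithHostNet   # keep cluster DNS with hostNetwork
      serviceAccountName: ingress-nginx
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: controller
          image: xx.xx.xx.xx/kubernetes/ingress/nginx-ingress-controller:0.32.0
          imagePullPolicy: IfNotPresent
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --ingress-class=nginx
            - --configmap=ingress-nginx/ingress-nginx-controller
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE   # needed to bind ports below 1024
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
```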

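As with NodePort, this approach has a few quirks to be aware of:

(1) DNS resolution

Pods configured with hostNetwork: true do not use the internal DNS resolver (such as kube-dns or CoreDNS) unless their dnsPolicy spec field is set to ClusterFirstWithHostNet. Consider using this setting if NGINX needs to resolve internal names for any reason.

(2) Ingress status

Because no Service exposes the NGINX Ingress controller in a host-network configuration, the --publish-service flag used in standard cloud setups does not apply, and the status of all Ingress objects stays blank.

$ kubectl get ingress
NAME           HOSTS               ADDRESS   PORTS
test-ingress   myapp.example.com             80

Instead, and because bare-metal nodes usually have no external IP, the --report-node-internal-ip-address flag must be enabled. It sets the status of all Ingress objects to the internal IP addresses of all nodes running the NGINX Ingress controller.

Note: Alternatively, the address written to Ingress objects can be overridden with the --publish-status-address flag. See Command line arguments for details.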
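The fragment below sketches where that flag would go in a host-network setup. The other args are the ones from my deploy.yaml, and the address in the commented alternative is just the example node IP used throughout this post.

```yaml
# Fragment of the controller Pod template (not a complete manifest).
spec:
  template:
    spec:
      hostNetwork: true
      containers:
        - name: controller
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --ingress-class=nginx
            - --configmap=ingress-nginx/ingress-nginx-controller
            - --report-node-internal-ip-address   # publish node internal IPs in the Ingress status
            # Alternative: publish a fixed address instead of the node IPs.
            # - --publish-status-address=203.0.113.2
```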

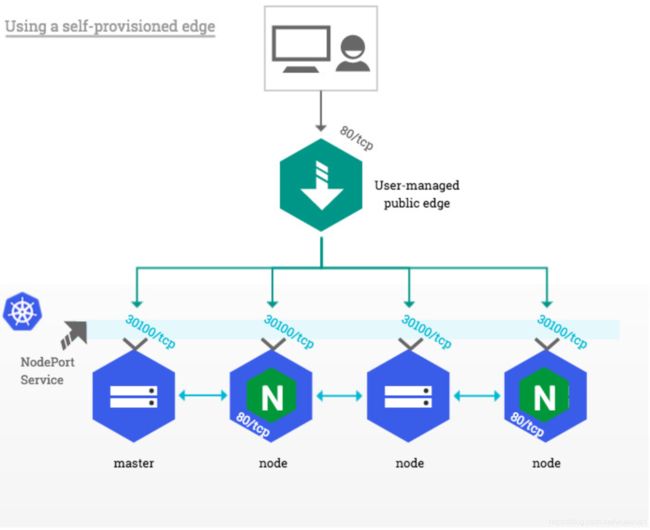

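4. Using a self-provisioned edge

Similar to cloud environments, this deployment approach requires an edge network component that provides a public entry point to the Kubernetes cluster. The edge component can be hardware (for example a vendor appliance) or software (for example HAProxy), and it is usually managed outside the Kubernetes cluster by the operations team.

This approach builds on the NodePort Service setup described above, with one significant difference: external clients do not access the cluster nodes directly, only the edge component does. This is particularly suitable for private Kubernetes clusters where none of the nodes has a public IP address.

On the edge side, the only prerequisite is to dedicate a public IP address that forwards all HTTP traffic to the Kubernetes nodes and/or masters. Incoming traffic on TCP ports 80 and 443 is forwarded to the corresponding HTTP and HTTPS NodePort on the target nodes, as shown in the following diagram:

5. External IPs

Danger: source IP address

This method does not allow the source IP of HTTP requests to be preserved in any way, so it is not recommended despite its apparent simplicity.

The externalIPs Service option was already mentioned in the NodePort section.

According to the Services page of the official Kubernetes documentation, the externalIPs option causes kube-proxy to route traffic sent to arbitrary IP addresses, on the Service ports, to the endpoints of that Service. These IP addresses must belong to the target node.

Summary: after reading the installation notes and caveats in the official documentation, I ended up using the self-provisioned edge approach for the unified entry point, with Nginx as the edge. I also pinned the NodePort of the NGINX Ingress controller to a fixed port (nodePort: 30278 in the ingress controller Service), so that redeploying the ingress controller cannot change the NodePort and force me to update the edge Nginx configuration.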

Example Ingress configuration:

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: hello-web-test
  namespace: test
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
    - http:
        paths:
          - path: /test
            backend:
              serviceName: hellotest
              servicePort: 8190
          - path: /test2
            backend:
              serviceName: hellotest
              servicePort: 8190

With this in place, the hellotest service can be reached at the edge Nginx IP followed by /test. I mapped port 80 of the edge Nginx to port 30278 on the Kubernetes master IP.
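One caveat for newer clusters: the extensions/v1beta1 Ingress API used above was removed in Kubernetes 1.22. The sketch below shows how the same rules might look under networking.k8s.io/v1. It assumes a controller release that supports the v1 API (as far as I know, the 0.32.0 release used in this post predates that) and an IngressClass named nginx, neither of which is part of my setup.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-web-test
  namespace: test
spec:
  ingressClassName: nginx   # assumption: an IngressClass named "nginx" exists
  rules:
    - http:
        paths:
          - path: /test
            pathType: Prefix   # required in the v1 API
            backend:
              service:
                name: hellotest
                port:
                  number: 8190
          - path: /test2
            pathType: Prefix
            backend:
              service:
                name: hellotest
                port:
                  number: 8190
```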