Prepare the data to import

Create a table in MySQL and insert data

-- Create tables
-- Student table
DROP TABLE IF EXISTS student;
CREATE TABLE IF NOT EXISTS student (
  s_id VARCHAR(20) COMMENT 'student ID',
  s_name VARCHAR(20) NOT NULL DEFAULT '' COMMENT 'student name',
  s_birth VARCHAR(20) NOT NULL DEFAULT '' COMMENT 'date of birth',
  s_sex VARCHAR(10) NOT NULL DEFAULT '' COMMENT 'student sex',
  PRIMARY KEY (s_id)
) DEFAULT CHARSET = utf8;

-- Insert test data into the student table
INSERT INTO student VALUES
  ('01', '赵雷', '1990-01-01', '男'),
  ('02', '钱电', '1990-12-21', '男'),
  ('03', '孙风', '1990-05-20', '男'),
  ('04', '李云', '1990-08-06', '男'),
  ('05', '周梅', '1991-12-01', '女'),
  ('06', '吴兰', '1992-03-01', '女'),
  ('07', '郑竹', '1989-07-01', '女'),
  ('08', '王菊', '1990-01-20', '女');

Prepare the data to export

Upload customer.csv to any directory in HDFS.
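The original customer.csv is not shown. Below is a minimal sketch of this step that writes a small stand-in file and uploads it. It assumes Sqoop2's default CSV intermediate format, where fields are comma-separated and text values are enclosed in single quotes. It also assumes three columns matching the customer_from_hdfs table created later. The rows and the HDFS directory /sqoop2/customer are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Sketch: write a small customer.csv and upload it to HDFS.
# Rows are hypothetical sample data (c_id, c_name, c_country); text fields are
# single-quoted, assuming Sqoop2's default CSV format.
make_customer_csv() {
  local out="${1:-customer.csv}"
  cat > "$out" <<'EOF'
'1','Alice','China'
'2','Bob','USA'
'3','Carol','UK'
EOF
}

make_customer_csv customer.csv

# Upload only if the HDFS client is on PATH.
# /sqoop2/customer is a hypothetical directory; any HDFS directory works.
if command -v hdfs >/dev/null 2>&1; then
  hdfs dfs -mkdir -p /sqoop2/customer
  hdfs dfs -put -f customer.csv /sqoop2/customer/
  hdfs dfs -ls /sqoop2/customer
fi
```

Use the directory you choose here as the input directory of the export job.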

Start the required services

# Start HDFS
start-dfs.sh
# Start YARN
start-yarn.sh
# Start the MapReduce JobHistory server
mr-jobhistory-daemon.sh start historyserver
# Start the Sqoop2 server
sqoop2-server start
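To confirm the services came up, you can compare the output of jps (shipped with the JDK) against the expected daemon names. The default list below makes two assumptions:

- Every daemon runs on this machine (single-node setup).
- Sqoop 1.99.7 runs its server as SqoopJettyServer.

On a multi-node cluster, DataNode and NodeManager run on the worker nodes, so pass your own list of names instead.

```shell
#!/usr/bin/env bash
# Reads `jps` output on stdin and reports any expected daemon that is missing.
# Usage: jps | check_daemons [Name ...]
# The default names assume a single-node setup with Sqoop 1.99.7.
check_daemons() {
  local expected=("$@")
  if [ ${#expected[@]} -eq 0 ]; then
    expected=(NameNode DataNode ResourceManager NodeManager JobHistoryServer SqoopJettyServer)
  fi
  local running missing=0 name
  running=$(cat)
  for name in "${expected[@]}"; do
    # -w keeps "NameNode" from matching inside "SecondaryNameNode"
    if ! grep -qw -- "$name" <<< "$running"; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

if command -v jps >/dev/null 2>&1; then
  jps | check_daemons || echo "some daemons are not running"
fi
```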

Make sure the MySQL JDBC driver jar is in the SQOOP_SERVER_EXTRA_LIB directory before the Sqoop2 server is started.
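A minimal sketch of putting the driver in place. The paths are hypothetical: a Connector/J 5.1.x jar named mysql-connector-java-5.1.47.jar (5.1.x uses the driver class com.mysql.jdbc.Driver, as in the link below) and an extra-lib directory /opt/sqoop2/extra-lib. SQOOP_SERVER_EXTRA_LIB has to be set in the environment of the process that starts the server, so the server is restarted after the copy.

```shell
#!/usr/bin/env bash
# Copy a JDBC driver jar into the Sqoop2 server's extra-lib directory.
# Usage: install_jdbc_driver <jar> <extra-lib-dir>
install_jdbc_driver() {
  local jar="$1" dir="$2"
  if [ ! -f "$jar" ]; then
    echo "driver jar not found: $jar" >&2
    return 1
  fi
  mkdir -p "$dir"
  cp "$jar" "$dir/"
}

# Hypothetical locations; adjust to your installation.
export SQOOP_SERVER_EXTRA_LIB=/opt/sqoop2/extra-lib
DRIVER_JAR=mysql-connector-java-5.1.47.jar

if [ -f "$DRIVER_JAR" ] && command -v sqoop2-server >/dev/null 2>&1; then
  install_jdbc_driver "$DRIVER_JAR" "$SQOOP_SERVER_EXTRA_LIB"
  # Restart so the server picks up the jar at startup.
  sqoop2-server stop
  sqoop2-server start
fi
```

To keep the variable set in new shells, put the export line in your shell profile.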

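1. Create the MySQL link

Create a link that reads data from MySQL.

Run the following in sqoop2-shell.

List the available connectors:

show connector

If you need an additional connector, add its jar to the SQOOP_SERVER_EXTRA_LIB directory.

Then create the link: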

create link -c generic-jdbc-connector

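Name: jdbc_link

Driver class: com.mysql.jdbc.Driver

Connection String: jdbc:mysql://192.168.5.155:3306/test3

Username: root

Password: sa

Identifier enclose: enter a backtick (`) or a space; do not just press Enter. The default is a double quote, and MySQL rejects SQL that encloses table and column names in double quotes.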
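The link prompts are easiest to answer in an interactive sqoop2-shell session. Read-only checks, however, can be scripted with the shell's batch mode, which passes a script file to sqoop2-shell. This sketch makes three assumptions: the server runs on host master, it uses Sqoop2's default port 12000, and its webapp name is sqoop. The script file name check_links.sqoop is hypothetical.

```shell
#!/usr/bin/env bash
# Write a sqoop2-shell batch script that points the client at the server and
# lists connectors and links, then run it if sqoop2-shell is installed.
# Host "master", port 12000 and webapp "sqoop" are assumptions.
write_check_script() {
  local out="$1" host="${2:-master}" port="${3:-12000}"
  cat > "$out" <<EOF
set server --host $host --port $port --webapp sqoop
show connector
show link
EOF
}

write_check_script check_links.sqoop
if command -v sqoop2-shell >/dev/null 2>&1; then
  sqoop2-shell check_links.sqoop
fi
```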

2. Create the HDFS link

Create a link for writing data to (and reading data from) a directory in HDFS.

create link -c hdfs-connector

Name: hdfs_link

URI: hdfs://master:9000/

Conf directory: /opt/hadoop/hadoop-2.7.6/etc/hadoop
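The Conf directory should point at the Hadoop configuration directory, the one holding core-site.xml and hdfs-site.xml. Its fs.defaultFS should name the same NameNode as the URI above. Below is a quick pre-check using the paths from this example; check_hadoop_conf is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Check that a Hadoop conf directory has core-site.xml and hdfs-site.xml and
# that core-site.xml mentions the same NameNode URI as the HDFS link.
# Usage: check_hadoop_conf <conf-dir> <hdfs-uri>
check_hadoop_conf() {
  local dir="$1" uri="${2%/}"   # drop a trailing slash: hdfs://master:9000/ -> hdfs://master:9000
  local f
  for f in core-site.xml hdfs-site.xml; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing $dir/$f" >&2
      return 1
    fi
  done
  if ! grep -qF -- "$uri" "$dir/core-site.xml"; then
    echo "core-site.xml does not mention $uri" >&2
    return 1
  fi
}

CONF_DIR=/opt/hadoop/hadoop-2.7.6/etc/hadoop
if [ -d "$CONF_DIR" ]; then
  check_hadoop_conf "$CONF_DIR" hdfs://master:9000/ && echo "conf dir looks OK"
fi
```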

3. Create the jobs

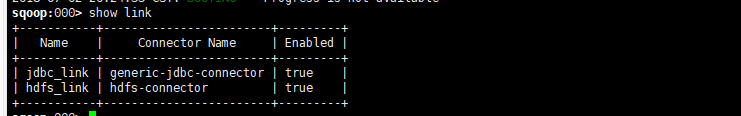

List the links:

show link

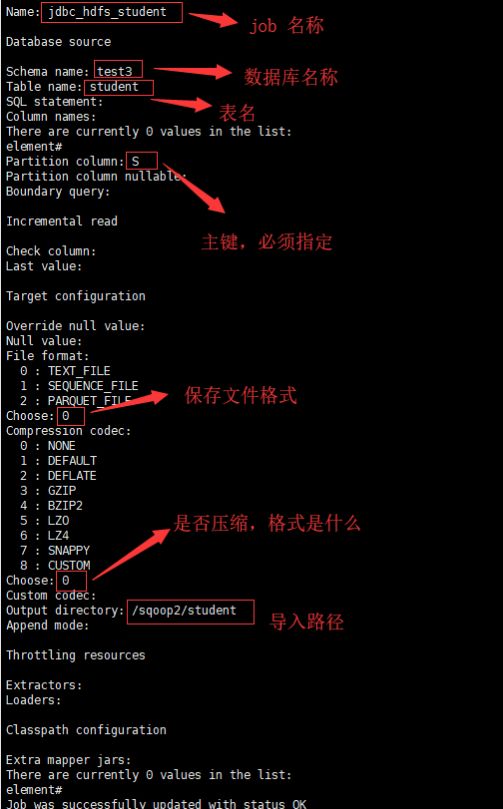

3.1 Create the import job

from jdbc to hdfs

create job -f jdbc_link -t hdfs_link

Use the FROM job configuration of the connector behind jdbc_link to set how data is read, and the TO job configuration of the connector behind hdfs_link to set how it is stored.

Name: jdbc_hdfs_student

Table name: student

Partition column: s_id

Output directory: /sqoop2/test1
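Sqoop2 uses the partition column to split the table read across parallel extractors. It queries the column's minimum and maximum and gives each extractor a sub-range. The function below is only a simplified equal-width split for an integer column, meant to illustrate the idea. It is not Sqoop2's own partitioner, which also handles text columns such as s_id and NULL values in its own way.

```shell
#!/usr/bin/env bash
# Simplified equal-width range split for an integer partition column.
# Prints one WHERE-style condition per partition; the last range is closed
# at max so the maximum value is included.
# Usage: split_ranges <min> <max> <partitions>
split_ranges() {
  local min=$1 max=$2 n=$3
  local span=$(( max - min + 1 ))
  local i lo hi
  for (( i = 0; i < n; i++ )); do
    lo=$(( min + span * i / n ))
    hi=$(( min + span * (i + 1) / n ))
    if (( i == n - 1 )); then
      echo "$lo <= col AND col <= $max"
    else
      echo "$lo <= col AND col < $hi"
    fi
  done
}

# Eight keys (1..8, as if s_id were numeric) split across three extractors:
split_ranges 1 8 3
# 1 <= col AND col < 3
# 3 <= col AND col < 6
# 6 <= col AND col <= 8
```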

3.2 Create the export job

from hdfs to jdbc

The target table must already exist in MySQL, so first run the following in MySQL:

CREATE TABLE customer_from_hdfs (
  c_id VARCHAR(20) COMMENT 'customer ID',
  c_name VARCHAR(20) NOT NULL DEFAULT '' COMMENT 'name',
  c_country VARCHAR(20) COMMENT 'country'
);

Then run in sqoop2-shell:

create job -f hdfs_link -t jdbc_link

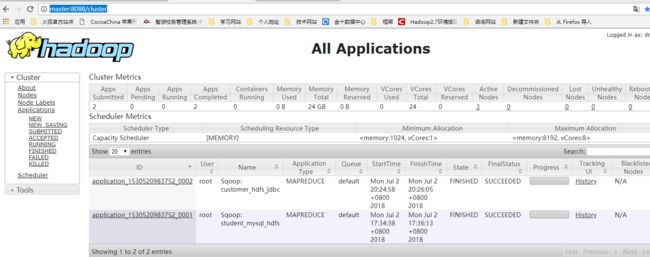

4. Run the jobs

- Run the import job:

start job -n jdbc_hdfs_student

- Run the export job:

start job -n customer_hdfs_jdbc

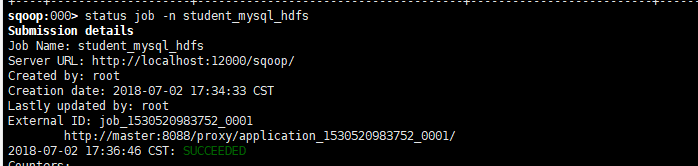

5. Check the job status

status job -n jdbc_hdfs_student
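Once the status reports success, the results can be checked from the command line. This sketch uses the import output directory /sqoop2/test1 and the MySQL host, database and user from the JDBC link above. count_records is a hypothetical helper, and it assumes the default text-file output with one record per line.

```shell
#!/usr/bin/env bash
# Count non-empty lines read from stdin (one record per line).
count_records() {
  # grep -c prints 0 and exits 1 when nothing matches; keep the status at 0
  grep -c . || true
}

if command -v hdfs >/dev/null 2>&1; then
  # HDFS expands the quoted glob itself; expect 8 records for the 8 student rows
  echo "imported records: $(hdfs dfs -cat '/sqoop2/test1/*' | count_records)"
fi

# mysql -p prompts for the password (sa here), so only run this interactively
if command -v mysql >/dev/null 2>&1 && [ -t 0 ]; then
  mysql -h 192.168.5.155 -u root -p test3 -e 'SELECT COUNT(*) FROM customer_from_hdfs;'
fi
```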