大数据5_03_Flume进阶-内部原理-案例

4 Flume进阶

4.1 Flume事务

事务的4个特点是:ACID:原子性,一致性,隔离性,持久性

flume的事务分成两个部分:

- 第一部分是put事务

- doput会将数据写入临时缓冲区putlist,docommit会检查channel内存队列是否可以合并,如果可以则正常写入channel,如果失败,会回滚数据

- 第二部分是take事务

- dotake会将数据取到临时缓冲区takelist,docommit正常写出数据,还会清空临时缓冲区的takelist,如果失败,会回滚,把临时缓冲区takelist的数据归还给channel内存

4.2 Flume Agent内部原理

Channel Processor

将事件传给拦截器Interceptor;事务的管理。

一般都是自定义拦截器,拦截器需要实现flume的Interceptor接口,实现方法,然后将jar包放到flume的lib下,然后在配置文件中设置拦截器a1.sources.r1.interceptors = i1

a1.sources.r1.interceptors.i1.type = 全类名$Builder。

自定义Source的时候不需要写Channel Processor部分。

Channel Selector

Channel选择器,主要有两种策略:

一是默认的Replicating(复制)

- 复制策略是指,将事件传给多个目的地,目的地sink的类型可能不同。(一个source–> 多个channel–> 多个sink)

二是多路复用策略Multiplexing

多路复用策略,一般需要结合Interceptor拦截器一起使用,根据文件类型的不同发送到不同的channel中

其原理是根据event中Header的key值进行过滤。

Sink Processor

sink processor,sink组有三种策略:

- 一是默认DefaultSinkProcessor,(channel–>sink)

- 二是负载均衡LoadBalancingProcessor(sink group)

- 三是故障转移FailoverSinkProcessor(sink group)

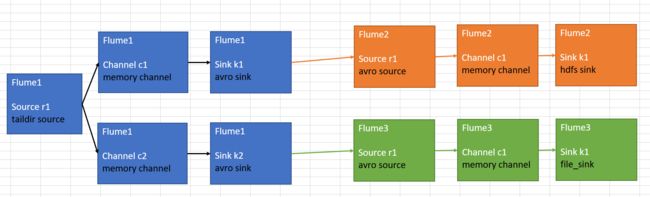

4.3 复制策略 Replicating

案例1:flume1监控hive的日志文件,flume1将日志更新内容传给flume2,flume2将内容输出到HDFS;flume1将日志更新传给flume3,flume3将内容输出到本地文件系统。

步骤1:在hadoop102上创建/opt/module/flume/job/group1,创建三个配置文件

[atguigu@hadoop102 job] mkdir group1

[atguigu@hadoop102 job] touch group1/flume1.conf

[atguigu@hadoop102 job] touch group1/flume2.conf

[atguigu@hadoop102 job] touch group1/flume3.conf

步骤2:配置文件

flume1.conf(taildir-memory-avro)

a1.sources.r1.selector.type = replicating不写也可以,系统默认就是replicating模式

# Name the components on this agent

a1.sources = r1

a1.sinks = k1 k2

a1.channels = c1 c2

# 将数据流复制给所有channel

a1.sources.r1.selector.type = replicating

# Describe/configure the source

a1.sources.r1.type = TAILDIR

a1.sources.r1.positionFile = /opt/module/flume/data/taildir_position11.json

a1.sources.r1.filegroups = f1

a1.sources.r1.filegroups.f1 = /opt/module/hive/logs/hive.log

# Describe the sink

# sink端的avro是一个数据发送者

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = hadoop102

a1.sinks.k1.port = 4141

a1.sinks.k2.type = avro

a1.sinks.k2.hostname = hadoop102

a1.sinks.k2.port = 4142

# Describe the channel

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

a1.channels.c2.type = memory

a1.channels.c2.capacity = 1000

a1.channels.c2.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1 c2

a1.sinks.k1.channel = c1

a1.sinks.k2.channel = c2

flume2.conf(avro-memory-hdfs)

# Name the components on this agent

a2.sources = r1

a2.sinks = k1

a2.channels = c1

# Describe/configure the source

# source端的avro是一个数据接收服务

a2.sources.r1.type = avro

a2.sources.r1.bind = hadoop102

a2.sources.r1.port = 4141

# Describe the sink

a2.sinks.k1.type = hdfs

a2.sinks.k1.hdfs.path = hdfs://hadoop102:9820/flume2/%Y%m%d/%H

#上传文件的前缀

a2.sinks.k1.hdfs.filePrefix = flume2-

#是否按照时间滚动文件夹

a2.sinks.k1.hdfs.round = true

#多少时间单位创建一个新的文件夹

a2.sinks.k1.hdfs.roundValue = 1

#重新定义时间单位

a2.sinks.k1.hdfs.roundUnit = hour

#是否使用本地时间戳

a2.sinks.k1.hdfs.useLocalTimeStamp = true

#积攒多少个Event才flush到HDFS一次

a2.sinks.k1.hdfs.batchSize = 100

#设置文件类型,可支持压缩

a2.sinks.k1.hdfs.fileType = DataStream

#多久生成一个新的文件

a2.sinks.k1.hdfs.rollInterval = 600

#设置每个文件的滚动大小大概是128M

a2.sinks.k1.hdfs.rollSize = 134217700

#文件的滚动与Event数量无关

a2.sinks.k1.hdfs.rollCount = 0

# Describe the channel

a2.channels.c1.type = memory

a2.channels.c1.capacity = 1000

a2.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a2.sources.r1.channels = c1

a2.sinks.k1.channel = c1

flume3.conf(avro-memory-file_roll)

# Name the components on this agent

a3.sources = r1

a3.sinks = k1

a3.channels = c2

# Describe/configure the source

a3.sources.r1.type = avro

a3.sources.r1.bind = hadoop102

a3.sources.r1.port = 4142

# Describe the sink

a3.sinks.k1.type = file_roll

a3.sinks.k1.sink.directory = /opt/module/data/flume3

# Describe the channel

a3.channels.c2.type = memory

a3.channels.c2.capacity = 1000

a3.channels.c2.transactionCapacity = 100

# Bind the source and sink to the channel

a3.sources.r1.channels = c2

a3.sinks.k1.channel = c2

注意:输出的本地目录必须是已经存在的目录,如果该目录不存在,并不会创建新的目录。

步骤3:执行启动命令

[atguigu@hadoop102 flume]$ bin/flume-ng agent --conf conf/ --name a3 --conf-file job/group1/flume-flume-dir.conf

[atguigu@hadoop102 flume]$ bin/flume-ng agent --conf conf/ --name a2 --conf-file job/group1/flume-flume-hdfs.conf

[atguigu@hadoop102 flume]$ bin/flume-ng agent --conf conf/ --name a1 --conf-file job/group1/flume-file-flume.conf

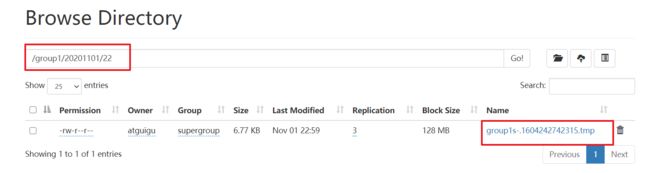

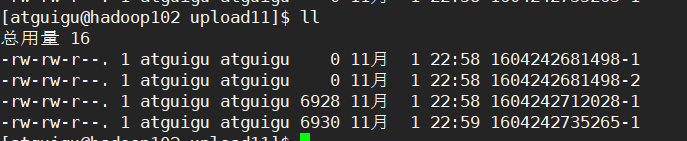

步骤4:操作hive,查看HDFS和本地文件系统结果

4.4 多路复用策略 Multipexing

需要自定义Interceptor拦截器,配置channel selector为multiplexing模式

步骤1:创建maven工程,导入pom依赖

<dependencies>

<dependency>

<groupId>org.apache.flumegroupId>

<artifactId>flume-ng-coreartifactId>

<version>1.9.0version>

dependency>

dependencies>

步骤2:创建自定义的Interceptor(继承flume的Interceptor类)

public class CustomInterceptor implements Interceptor {

private List<Event> addHeaderEvents ;

@Override

public void initialize() {

addHeaderEvents = new ArrayList<>();

}

@Override

public Event intercept(Event event) {

//1 获取事件的头部信息

Map<String, String> headers = event.getHeaders();

//2 获取事件的body信息

String body = new String(event.getBody());

//3 根据body中是否含有“hello”来决定怎样添加头信息

if (body.contains("hello")){

//4 添加头部信息

headers.put("type", "hello");

}else {

headers.put("type", "bigdata");

}

return event;

}

@Override

public List<Event> intercept(List<Event> events) {

//1 清空集合

addHeaderEvents.clear();

//2 遍历events

for (Event event : events) {

addHeaderEvents.add(intercept(event));

}

return addHeaderEvents;

}

@Override

public void close() {

}

public static class Builder implements Interceptor.Builder{

@Override

public Interceptor build() {

return new CustomInterceptor();

}

@Override

public void configure(Context context) {

}

}

}

步骤3:打包,放到flume的lib目录下。

步骤4:配置flume配置文件

在hadoop102的job/group22下创建flume1.conf

# Name

a1.sources = r1

a1.channels = c1 c2

a1.sinks = k1 k2

# Source

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

# Interceptor

a1.sources.r1.interceptors = i1

a1.sources.r1.interceptors.i1.type = com.codejiwei.flume.interceptor.CustomInterceptor$Builder

# Channel Selector

a1.sources.r1.selector.type = multiplexing

a1.sources.r1.selector.header = type

a1.sources.r1.selector.mapping.hello = c1

a1.sources.r1.selector.mapping.bigdata = c2

# Channel

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

a1.channels.c2.type = memory

a1.channels.c2.capacity = 1000

a1.channels.c2.transactionCapacity = 100

# Sink

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = hadoop103

a1.sinks.k1.port = 4545

a1.sinks.k2.type = avro

a1.sinks.k2.hostname = hadoop104

a1.sinks.k2.port = 4546

# Bind

a1.sources.r1.channels = c1 c2

a1.sinks.k1.channel = c1

a1.sinks.k2.channel = c2

在hadoop103的job/group22下创建flume2.conf

# Name

a2.sources = r1

a2.channels = c1

a2.sinks = k1

# Source

a2.sources.r1.type = avro

a2.sources.r1.bind = hadoop103

a2.sources.r1.port = 4545

# Channel

a2.channels.c1.type = memory

a2.channels.c1.capacity = 1000

a2.channels.c1.transactionCapacity = 100

# Sink

a2.sinks.k1.type = logger

# Bind

a2.sources.r1.channels = c1

a2.sinks.k1.channel = c1

在hadoop104的job/group22下创建flume3.conf

# Name

a3.sources = r1

a3.channels = c1

a3.sinks = k1

# Source

a3.sources.r1.type = avro

a3.sources.r1.bind = hadoop104

a3.sources.r1.port = 4546

# Channel

a3.channels.c1.type = memory

a3.channels.c1.capacity = 1000

a3.channels.c1.transactionCapacity = 100

# Sink

a3.sinks.k1.type = logger

# Bind

a3.sources.r1.channels = c1

a3.sinks.k1.channel = c1

步骤5:在hadoop102上使用netcat发送信息

[atguigu@hadoop102 job]$ nc localhost 44444

helloword

helloaaa

nihao

hellobbb

nihaoaaa

hadoop103上的结果

hadoop104上的结果

4.5 故障转移策略 Failover

- 故障转移是必须给sink指明优先级,数据进入优先级高的sink。

- 当某一个挂掉,可以将数据发送到另一个。

a1.sinkgroups = g1

a1.sinkgroups.g1.sinks = k1 k2

a1.sinkgroups.g1.processor.type = failover

a1.sinkgroups.g1.processor.priority.k1 = 5

a1.sinkgroups.g1.processor.priority.k2 = 10

a1.sinkgroups.g1.processor.maxpenalty = 10000

步骤1:在hadoop102、hadoop103、hadoop104上分别创建配置文件。

配置文件:

hadoop102,flume1.conf

# Name

a1.sources = r1

a1.channels = c1

a1.sinks = k1 k2

a1.sinkgroups = g1

# Source

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

# Channel

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Sink

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = hadoop103

a1.sinks.k1.port = 4545

a1.sinks.k2.type = avro

a1.sinks.k2.hostname = hadoop104

a1.sinks.k2.port = 4546

# SinkGroup

a1.sinkgroups.g1.sinks = k1 k2

a1.sinkgroups.g1.processor.type = failover

a1.sinkgroups.g1.processor.priority.k1 = 10

a1.sinkgroups.g1.processor.priority.k2 = 5

a1.sinkgroups.g1.processor.maxpenalty = 10000

# Bind

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

a1.sinks.k2.channel = c1

hadoop103,flume2.conf

# Name

a2.sources = r1

a2.channels = c1

a2.sinks = k1

# Source

a2.sources.r1.type = avro

a2.sources.r1.bind = hadoop103

a2.sources.r1.port = 4545

# Channel

a2.channels.c1.type = memory

a2.channels.c1.capacity = 1000

a2.channels.c1.transactionCapacity = 100

# Sink

a2.sinks.k1.type = logger

# Bind

a2.sources.r1.channels = c1

a2.sinks.k1.channel = c1

hadoop104,flume3.conf

# Name

a3.sources = r1

a3.channels = c1

a3.sinks = k1

# Source

a3.sources.r1.type = avro

a3.sources.r1.bind = hadoop104

a3.sources.r1.port = 4546

# Channel

a3.channels.c1.type = memory

a3.channels.c1.capacity = 1000

a3.channels.c1.transactionCapacity = 100

# Sink

a3.sinks.k1.type = logger

# Bind

a3.sources.r1.channels = c1

a3.sinks.k1.channel = c1

步骤2:在hadoop102、hadoop103、hadoop104上开启flume-ng agent;在hadoop102上开启nc 发送数据。

可以看到,数据发送给优先级高的下端flume,当某一个flume挂掉后,会发送给另一个flume。

4.6 负载均衡策略 LoadBalancing

负载均衡是指一个channel有多个sink,缓解某一个sink的压力。

processor.selector的类型有random和round_robin。

负载均衡也可以实现故障的一个转移。

a1.sinkgroups = g1

a1.sinkgroups.g1.sinks = k1 k2

a1.sinkgroups.g1.processor.type = load_balance

a1.sinkgroups.g1.processor.backoff = true

a1.sinkgroups.g1.processor.selector = random

步骤1:在hadoop102、hadoop103、hadoop104上分别创建配置文件。

配置文件:

hadoop102,flume1.conf

# Name

a1.sources = r1

a1.channels = c1

a1.sinks = k1 k2

a1.sinkgroups = g1

# Source

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

# Channel

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Sink

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = hadoop103

a1.sinks.k1.port = 4545

a1.sinks.k2.type = avro

a1.sinks.k2.hostname = hadoop104

a1.sinks.k2.port = 4546

# SinkGroup

a1.sinkgroups.g1.sinks = k1 k2

a1.sinkgroups.g1.processor.type = load_balance

# Bind

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

a1.sinks.k2.channel = c1

hadoop103,flume2.conf

# Name

a2.sources = r1

a2.channels = c1

a2.sinks = k1

# Source

a2.sources.r1.type = avro

a2.sources.r1.bind = hadoop103

a2.sources.r1.port = 4545

# Channel

a2.channels.c1.type = memory

a2.channels.c1.capacity = 1000

a2.channels.c1.transactionCapacity = 100

# Sink

a2.sinks.k1.type = logger

# Bind

a2.sources.r1.channels = c1

a2.sinks.k1.channel = c1

hadoop104,flume3.conf

# Name

a3.sources = r1

a3.channels = c1

a3.sinks = k1

# Source

a3.sources.r1.type = avro

a3.sources.r1.bind = hadoop104

a3.sources.r1.port = 4546

# Channel

a3.channels.c1.type = memory

a3.channels.c1.capacity = 1000

a3.channels.c1.transactionCapacity = 100

# Sink

a3.sinks.k1.type = logger

# Bind

a3.sources.r1.channels = c1

a3.sinks.k1.channel = c1

步骤2:在hadoop102、hadoop103、hadoop104上开启flume-ng agent;在hadoop102上开启nc 发送数据。

可以看到,数据随机的发送到不同的下端flume,当设置为round-robin就是轮询的发送给下端的flume。

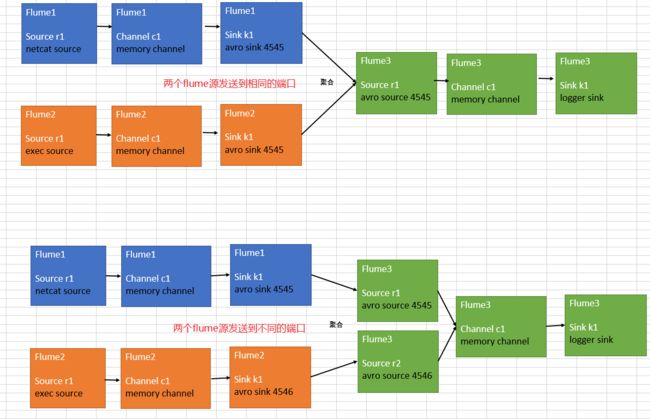

4.7 聚合操作

聚合有两种方式聚合:

- 在flume1和flume2的sink发送给两个不同的端口

- flume1和flume2的sink发送给相同的端口

方式 1:

flume1.conf

# Name

a1.sources = r1

a1.channels = c1

a1.sinks = k1

# Source

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

# Channel

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Sink

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = hadoop104

a1.sinks.k1.port = 4545

# Bind

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

flume2.conf

# Name

a2.sources = r1

a2.channels = c1

a2.sinks = k1

# Source

a2.sources.r1.type = exec

a2.sources.r1.command = tail -F /opt/module/flume/data/file1.txt

# Channel

a2.channels.c1.type = memory

a2.channels.c1.capacity = 1000

a2.channels.c1.transactionCapacity = 100

# Sink

a2.sinks.k1.type = avro

a2.sinks.k1.hostname = hadoop104

a2.sinks.k1.port = 4545

# Bind

a2.sources.r1.channels = c1

a2.sinks.k1.channel = c1

flume3.conf

# Name

a3.sources = r1

a3.channels = c1

a3.sinks = k1

# Source

a3.sources.r1.type = avro

a3.sources.r1.bind = hadoop104

a3.sources.r1.port = 4545

# Channel

a3.channels.c1.type = memory

a3.channels.c1.capacity = 1000

a3.channels.c1.transactionCapacity = 100

# Sink

a3.sinks.k1.type = logger

# Bind

a3.sources.r1.channels = c1

a3.sinks.k1.channel = c1

方式2:

flume1.conf

# Name

a1.sources = r1

a1.channels = c1

a1.sinks = k1

# Source

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

# Channel

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Sink

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = hadoop104

a1.sinks.k1.port = 4545

# Bind

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

flume2.conf

# Name

a2.sources = r1

a2.channels = c1

a2.sinks = k1

# Source

a2.sources.r1.type = exec

a2.sources.r1.command = tail -F /opt/module/flume/data/file1.txt

# Channel

a2.channels.c1.type = memory

a2.channels.c1.capacity = 1000

a2.channels.c1.transactionCapacity = 100

# Sink

a2.sinks.k1.type = avro

a2.sinks.k1.hostname = hadoop104

a2.sinks.k1.port = 4546

# Bind

a2.sources.r1.channels = c1

a2.sinks.k1.channel = c1

flume3.conf

# Name

a3.sources = r1 r2

a3.channels = c1

a3.sinks = k1

# Source

a3.sources.r1.type = avro

a3.sources.r1.bind = hadoop104

a3.sources.r1.port = 4545

a3.sources.r2.type = avro

a3.sources.r2.bind = hadoop104

a3.sources.r2.port = 4546

# Channel

a3.channels.c1.type = memory

a3.channels.c1.capacity = 1000

a3.channels.c1.transactionCapacity = 100

# Sink

a3.sinks.k1.type = logger

# Bind

a3.sources.r1.channels = c1

a3.sources.r2.channels = c1

a3.sinks.k1.channel = c1