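Unfortunately, the configuration code in Fabric turns out to be the hardest to follow of all the modules. It is not so much complex as logically tangled: one piece of configuration gets converted back and forth over and over, to the point where it is hard to keep reading even with patience. That said, 1.4 is a big improvement over 1.0, even though it is still messy. The next few posts all touch on configuration to some degree. Forgive me for not going into much detail; frankly, I have not figured it all out myself. But that is how reading source code goes: understanding the overall flow matters far more than the details.

We start with channel creation. The preparation was covered in the outputChannelCreateTx post, which produced the read/write set of the configuration needed to create a channel. The key question for this post is: how does that configuration take effect?

Some background: after configtxgen has done its work, running channel creation on the peer side sends this configuration to the orderer through the broadcast client (see the broadcast post). So we start from the orderer.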

Orderer

func (bh *Handler) ProcessMessage(msg *cb.Envelope, addr string) (resp *ab.BroadcastResponse) {

...

chdr, isConfig, processor, err := bh.SupportRegistrar.BroadcastChannelSupport(msg)

...

if !isConfig {

...

} else { // isConfig

...

config, configSeq, err := processor.ProcessConfigUpdateMsg(msg)

...

if err = processor.WaitReady(); err != nil { ... }

...

err = processor.Configure(config, configSeq)

...

}

...

return &ab.BroadcastResponse{Status: cb.Status_SUCCESS}

}

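- This is where the orderer handles a broadcast. The first step is to pick the channel support that matches the incoming message. In our scenario, (1) the message type is HeaderType_CONFIG_UPDATE and (2) we are creating a channel, so the target channel does not exist yet.

- Consequently, the processor we get back is the system channel.

- Next, let's see what ProcessConfigUpdateMsg does.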
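Before moving on, here is a rough sketch of the dispatch decision described above. It is not Fabric's code: `route`, `HeaderType`, and the string "processors" are hypothetical names made up for illustration, and the real lookup works on channel headers and ChainSupport objects.

```go
package main

import "fmt"

// HeaderType is a stand-in for cb.HeaderType; the values are illustrative.
type HeaderType int

const (
	EndorserTransaction HeaderType = iota
	ConfigUpdate
)

// route is a hypothetical, simplified version of the lookup done for a
// broadcast message: it reports whether the message is a config message
// and which processor should handle it.
func route(t HeaderType, channelID string, known map[string]bool) (isConfig bool, processor string) {
	isConfig = t == ConfigUpdate
	if known[channelID] {
		return isConfig, "channel:" + channelID
	}
	// The channel does not exist yet, so fall back to the system channel.
	return isConfig, "system channel"
}

func main() {
	known := map[string]bool{"byfn-sys-channel": true}
	isConfig, processor := route(ConfigUpdate, "mychannel", known)
	fmt.Println(isConfig, processor)
}
```

A config update for a channel the orderer has never seen therefore ends up at the system channel, which is exactly the channel-creation case.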

ProcessConfigUpdateMsg

func (s *SystemChannel) ProcessConfigUpdateMsg(envConfigUpdate *cb.Envelope) (config *cb.Envelope, configSeq uint64, err error) {

channelID, err := utils.ChannelID(envConfigUpdate)

bundle, err := s.templator.NewChannelConfig(envConfigUpdate)

newChannelConfigEnv, err := bundle.ConfigtxValidator().ProposeConfigUpdate(envConfigUpdate)

newChannelEnvConfig, err := utils.CreateSignedEnvelope(cb.HeaderType_CONFIG, channelID, s.support.Signer(), newChannelConfigEnv, msgVersion, epoch)

wrappedOrdererTransaction, err := utils.CreateSignedEnvelope(cb.HeaderType_ORDERER_TRANSACTION, s.support.ChainID(), s.support.Signer(), newChannelEnvConfig, msgVersion, epoch)

err = s.StandardChannel.filters.Apply(wrappedOrdererTransaction)

return wrappedOrdererTransaction, s.support.Sequence(), nil

}

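- What does templator.NewChannelConfig do? It builds a template channel configuration based on the configuration of the orderer's system channel.

- bundle.ConfigtxValidator().ProposeConfigUpdate(envConfigUpdate) merges the template with the incoming config update and produces a complete channel configuration.

- The result is first wrapped in a HeaderType_CONFIG envelope, which is in turn wrapped in a HeaderType_ORDERER_TRANSACTION envelope addressed to the system channel.

- Finally the envelope is run through a set of filters. The system channel has its own filter set:

  - The signing identity of the envelope must not be expired.

  - The message size must not exceed the limit.

  - The /Channel/Writers policy must be satisfied, which may be as permissive as any member, depending on your configuration.

  - The number of channels an orderer can maintain is limited (MaxChannelsCount), so this checks whether the limit has been exceeded.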

func CreateSystemChannelFilters(chainCreator ChainCreator, ledgerResources channelconfig.Resources) *RuleSet {

ordererConfig, ok := ledgerResources.OrdererConfig()

if !ok {

logger.Panicf("Cannot create system channel filters without orderer config")

}

return NewRuleSet([]Rule{

EmptyRejectRule,

NewExpirationRejectRule(ledgerResources),

NewSizeFilter(ordererConfig),

NewSigFilter(policies.ChannelWriters, ledgerResources),

NewSystemChannelFilter(ledgerResources, chainCreator),

})

}
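The filter chain is easier to picture with a toy version. The sketch below is my own simplification, not the msgprocessor package: `Rule`, `rejectEmpty`, `maxSize`, and `applyRules` are hypothetical names, and a message is reduced to its raw payload.

```go
package main

import (
	"errors"
	"fmt"
)

// Rule inspects a message payload and returns an error to reject it.
type Rule func(payload []byte) error

// rejectEmpty rejects messages with no payload.
func rejectEmpty(payload []byte) error {
	if len(payload) == 0 {
		return errors.New("empty message")
	}
	return nil
}

// maxSize builds a rule that rejects payloads larger than limit bytes.
func maxSize(limit int) Rule {
	return func(payload []byte) error {
		if len(payload) > limit {
			return fmt.Errorf("message is %d bytes, limit is %d", len(payload), limit)
		}
		return nil
	}
}

// applyRules runs the rules in order and stops at the first rejection.
func applyRules(rules []Rule, payload []byte) error {
	for _, rule := range rules {
		if err := rule(payload); err != nil {
			return err
		}
	}
	return nil
}

func main() {
	rules := []Rule{rejectEmpty, maxSize(8)}
	fmt.Println(applyRules(rules, []byte("ok")))
	fmt.Println(applyRules(rules, nil))
	fmt.Println(applyRules(rules, []byte("far too large")))
}
```

The order of the rules matters: cheap structural checks come first, so an obviously bad message is rejected before any expensive signature or policy evaluation would run.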

Configure

if msg.configSeq < seq {

msg.configMsg, _, err = ch.support.ProcessConfigMsg(msg.configMsg)

if err != nil {

logger.Warningf("Discarding bad config message: %s", err)

continue

}

}

batch := ch.support.BlockCutter().Cut()

if batch != nil {

block := ch.support.CreateNextBlock(batch)

ch.support.WriteBlock(block, nil)

}

block := ch.support.CreateNextBlock([]*cb.Envelope{msg.configMsg})

ch.support.WriteConfigBlock(block, nil)

timer = nil

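- To keep things simple we only follow the solo flow; the other consensus implementations are similar. The ultimate purpose of Configure is to hand the envelope to solo for processing, and the snippet above is the part of solo's processing loop that handles a config message. With Kafka and etcd/raft, the message is not only processed locally but also distributed to the other orderers so that they process it in sync.

- if msg.configSeq < seq: notice how this resembles what we saw earlier, since ProcessConfigMsg is called again. Normally this branch is not taken. In other words, the path from the orderer receiving the config update, to generating the full channel config, to handing it over to solo usually runs without interference. If, however, another config change happens to land in between, the channel config generated earlier has to be regenerated.

- Next, before the config message is handled, the pending messages are cut, that is, packed into a new block and written. In other words, a config message always gets a block of its own.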
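The "config gets its own block" rule can be sketched in a few lines. This is a toy model under my own assumptions: `chain`, `order`, `cut`, and `configure` are hypothetical names, messages are plain strings, and there is no batch timer or size limit.

```go
package main

import "fmt"

// chain is a toy stand-in for a consenter's state: messages waiting to be
// cut into a block, plus the blocks written so far.
type chain struct {
	pending []string
	blocks  [][]string
}

// order queues a normal message.
func (c *chain) order(tx string) { c.pending = append(c.pending, tx) }

// cut returns the pending messages and clears the queue.
func (c *chain) cut() []string {
	batch := c.pending
	c.pending = nil
	return batch
}

// configure flushes whatever is pending into its own block first, then
// writes the config message alone in the next block.
func (c *chain) configure(cfg string) {
	if batch := c.cut(); batch != nil {
		c.blocks = append(c.blocks, batch)
	}
	c.blocks = append(c.blocks, []string{cfg})
}

func main() {
	c := &chain{}
	c.order("tx1")
	c.order("tx2")
	c.configure("config")
	fmt.Println(c.blocks)
}
```

Keeping the config message isolated means every block is validated under exactly one configuration, which is presumably why the real code bothers to cut first.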

CreateNextBlock

func (bw *BlockWriter) CreateNextBlock(messages []*cb.Envelope) *cb.Block {

previousBlockHash := bw.lastBlock.Header.Hash()

data := &cb.BlockData{

Data: make([][]byte, len(messages)),

}

var err error

for i, msg := range messages {

data.Data[i], err = proto.Marshal(msg)

if err != nil {

logger.Panicf("Could not marshal envelope: %s", err)

}

}

block := cb.NewBlock(bw.lastBlock.Header.Number+1, previousBlockHash)

block.Header.DataHash = data.Hash()

block.Data = data

return block

}

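- As you can see, the complete channel config generated earlier, i.e. the envelope, is the key content of the block.

- In the channel-creation scenario, the block built here lives on the system channel and carries the channel-creation transaction. The first block of the new channel, its genesis block, is built from the embedded config envelope in newChain, shown further below.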
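To make the chaining in CreateNextBlock concrete, here is a stripped-down sketch. It is an assumption-laden simplification: `Header`, `Block`, `hashData`, and `nextBlock` are hypothetical, and the header hash is a plain SHA-256 over concatenated fields rather than Fabric's actual header encoding.

```go
package main

import (
	"bytes"
	"crypto/sha256"
	"encoding/binary"
	"fmt"
)

// Header holds the three fields that link one block to the previous one.
type Header struct {
	Number   uint64
	PrevHash []byte
	DataHash []byte
}

// Block is a header plus the serialized envelopes.
type Block struct {
	Header Header
	Data   [][]byte
}

// Hash digests the header fields (simplified encoding, not Fabric's).
func (h Header) Hash() []byte {
	var num [8]byte
	binary.BigEndian.PutUint64(num[:], h.Number)
	d := sha256.New()
	d.Write(num[:])
	d.Write(h.PrevHash)
	d.Write(h.DataHash)
	return d.Sum(nil)
}

// hashData digests the concatenated block data.
func hashData(data [][]byte) []byte {
	sum := sha256.Sum256(bytes.Join(data, nil))
	return sum[:]
}

// nextBlock builds the successor of last from the given messages.
func nextBlock(last Block, data [][]byte) Block {
	return Block{
		Header: Header{
			Number:   last.Header.Number + 1,
			PrevHash: last.Header.Hash(),
			DataHash: hashData(data),
		},
		Data: data,
	}
}

func main() {
	genesisData := [][]byte{[]byte("genesis config")}
	genesis := Block{Header: Header{DataHash: hashData(genesisData)}, Data: genesisData}
	b := nextBlock(genesis, [][]byte{[]byte("config envelope")})
	fmt.Printf("block %d, prev=%x\n", b.Header.Number, b.Header.PrevHash[:4])
}
```

Because each header embeds the hash of the previous header, tampering with any earlier block changes every hash after it.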

WriteConfigBlock

func (bw *BlockWriter) WriteConfigBlock(block *cb.Block, encodedMetadataValue []byte) {

...

switch chdr.Type {

case int32(cb.HeaderType_ORDERER_TRANSACTION):

newChannelConfig, err := utils.UnmarshalEnvelope(payload.Data)

if err != nil {

logger.Panicf("Told to write a config block with new channel, but did not have config update embedded: %s", err)

}

bw.registrar.newChain(newChannelConfig)

case int32(cb.HeaderType_CONFIG):

...

}

bw.WriteBlock(block, encodedMetadataValue)

}

- First we meet HeaderType_ORDERER_TRANSACTION again. The handling is simple: go straight to registrar.newChain.

- Note the registrar: it holds every channel the orderer maintains and is the key to Fabric's multi-channel support.

newChain

func (r *Registrar) newChain(configtx *cb.Envelope) {

r.lock.Lock()

defer r.lock.Unlock()

ledgerResources := r.newLedgerResources(configtx)

// If we have no blocks, we need to create the genesis block ourselves.

if ledgerResources.Height() == 0 {

ledgerResources.Append(blockledger.CreateNextBlock(ledgerResources, []*cb.Envelope{configtx}))

}

// Copy the map to allow concurrent reads from broadcast/deliver while the new chainSupport is

newChains := make(map[string]*ChainSupport)

for key, value := range r.chains {

newChains[key] = value

}

cs := newChainSupport(r, ledgerResources, r.consenters, r.signer, r.blockcutterMetrics)

chainID := ledgerResources.ConfigtxValidator().ChainID()

logger.Infof("Created and starting new chain %s", chainID)

newChains[string(chainID)] = cs

cs.start()

r.chains = newChains

}

There are four steps here: (1) prepare the ledgerResources, (2) create the ChainSupport, (3) start the ChainSupport, and (4) update the registrar's chains map.
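The map copy in newChain is a copy-on-write pattern. Here is a minimal sketch of the idea, with hypothetical names (`registry`, `get`, `add`) and strings standing in for ChainSupport values; how the real Registrar synchronizes its readers is not shown in the excerpt, so the read lock below is my assumption.

```go
package main

import (
	"fmt"
	"sync"
)

// registry is a toy stand-in for the orderer's Registrar.
type registry struct {
	lock   sync.RWMutex
	chains map[string]string
}

// get looks up a channel under the read lock.
func (r *registry) get(id string) (string, bool) {
	r.lock.RLock()
	defer r.lock.RUnlock()
	chain, ok := r.chains[id]
	return chain, ok
}

// add never mutates the published map. It copies the entries into a new
// map, adds the new channel, and swaps the map in as the last step.
func (r *registry) add(id, chain string) {
	r.lock.Lock()
	defer r.lock.Unlock()
	next := make(map[string]string, len(r.chains)+1)
	for k, v := range r.chains {
		next[k] = v
	}
	next[id] = chain
	r.chains = next
}

func main() {
	r := &registry{chains: map[string]string{"byfn-sys-channel": "system"}}
	r.add("mychannel", "application")
	fmt.Println(r.get("mychannel"))
}
```

The point of the copy is that anyone still holding the old map keeps seeing a consistent set of channels while the new channel is being set up.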

LedgerResource

func (r *Registrar) newLedgerResources(configTx *cb.Envelope) *ledgerResources {

...

configEnvelope, err := configtx.UnmarshalConfigEnvelope(payload.Data)

if err != nil {

logger.Panicf("Error umarshaling config envelope from payload data: %s", err)

}

bundle, err := channelconfig.NewBundle(chdr.ChannelId, configEnvelope.Config)

if err != nil {

logger.Panicf("Error creating channelconfig bundle: %s", err)

}

checkResourcesOrPanic(bundle)

ledger, err := r.ledgerFactory.GetOrCreate(chdr.ChannelId)

if err != nil {

logger.Panicf("Error getting ledger for %s", chdr.ChannelId)

}

return &ledgerResources{

configResources: &configResources{

mutableResources: channelconfig.NewBundleSource(bundle, r.callbacks...),

},

ReadWriter: ledger,

}

}

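More familiar-looking code: it takes the configEnvelope produced by ProcessConfigUpdateMsg and converts it back into a bundle.

- A word on the bundle: it is the view class over the channel configuration. Its structure is dumped at the end of this post, which gives a feel for what a bundle contains.

- Whenever the orderer or a peer needs to interact with the channel configuration, it does so through the bundle.

- Bundles and envelopes get converted back and forth so often that it is dizzying. It helps to know the three uses of the configuration:

  - syncing it to other members

  - generating the config block

  - looking up config items inside the system

- The first two are fine with an envelope. But for interacting with the configuration directly, efficiently, and conveniently, an envelope is too cumbersome: imagine unwrapping it layer by layer every time. That is why the bundle exists.

- Each channel has exactly one current bundle; every config update replaces it via Update.

ledgerFactory.GetOrCreate prepares the underlying ledger. This was covered before, so I won't repeat it.

- The bundle generated above is the full, complete configuration, and the BundleSource holds the channel's current configuration. All interaction between the orderer or peer and the channel configuration goes through it.

- channelconfig.NewBundleSource is where this configuration is installed for the channel. At this point the orderer-side configuration is fully in place.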
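The "one current bundle per channel, replaced on update" idea boils down to an atomically swapped pointer. The sketch below is modeled loosely on that idea; `config`, `configSource`, `update`, and `stable` are hypothetical names, and the two-field config is just enough to show the behavior.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// config is a tiny immutable stand-in for a bundle.
type config struct {
	sequence     uint64
	batchTimeout string
}

// configSource always points at the latest config.
type configSource struct {
	current   atomic.Value // always holds a *config
	callbacks []func(*config)
}

// newConfigSource installs the initial config, which also fires the callbacks.
func newConfigSource(initial *config, callbacks ...func(*config)) *configSource {
	cs := &configSource{callbacks: callbacks}
	cs.update(initial)
	return cs
}

// update swaps in a new config and notifies every callback.
func (cs *configSource) update(next *config) {
	cs.current.Store(next)
	for _, cb := range cs.callbacks {
		cb(next)
	}
}

// stable returns a snapshot that later updates cannot change.
func (cs *configSource) stable() *config {
	return cs.current.Load().(*config)
}

func main() {
	src := newConfigSource(&config{sequence: 0, batchTimeout: "2s"})
	snapshot := src.stable()
	src.update(&config{sequence: 1, batchTimeout: "5s"})
	fmt.Println(snapshot.sequence, src.stable().sequence)
}
```

A caller that needs several related values should take one snapshot and read all of them from it, otherwise an update in between could mix values from two different configurations.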

newChainSupport

func newChainSupport(

registrar *Registrar,

ledgerResources *ledgerResources,

consenters map[string]consensus.Consenter,

signer crypto.LocalSigner,

blockcutterMetrics *blockcutter.Metrics,

) *ChainSupport {

// Read in the last block and metadata for the channel

lastBlock := blockledger.GetBlock(ledgerResources, ledgerResources.Height()-1)

metadata, err := utils.GetMetadataFromBlock(lastBlock, cb.BlockMetadataIndex_ORDERER)

// Assuming a block created with cb.NewBlock(), this should not

// error even if the orderer metadata is an empty byte slice

if err != nil {

logger.Fatalf("[channel: %s] Error extracting orderer metadata: %s", ledgerResources.ConfigtxValidator().ChainID(), err)

}

// Construct limited support needed as a parameter for additional support

cs := &ChainSupport{

ledgerResources: ledgerResources,

LocalSigner: signer,

cutter: blockcutter.NewReceiverImpl(

ledgerResources.ConfigtxValidator().ChainID(),

ledgerResources,

blockcutterMetrics,

),

}

// When ConsortiumsConfig exists, it is the system channel

_, cs.systemChannel = ledgerResources.ConsortiumsConfig()

// Set up the msgprocessor

cs.Processor = msgprocessor.NewStandardChannel(cs, msgprocessor.CreateStandardChannelFilters(cs))

// Set up the block writer

cs.BlockWriter = newBlockWriter(lastBlock, registrar, cs)

// TODO Identify recovery after crash in the middle of consensus-type migration

if cs.detectMigration(lastBlock) {

// We do this because the last block after migration (COMMIT/CONTEXT) carries Kafka metadata.

// This prevents the code down the line from unmarshaling it as Raft, and panicking.

metadata.Value = nil

logger.Debugf("[channel: %s] Consensus-type migration: restart on to Raft, resetting Kafka block metadata", cs.ChainID())

}

// Set up the consenter

consenterType := ledgerResources.SharedConfig().ConsensusType()

consenter, ok := consenters[consenterType]

if !ok {

logger.Panicf("Error retrieving consenter of type: %s", consenterType)

}

cs.Chain, err = consenter.HandleChain(cs, metadata)

if err != nil {

logger.Panicf("[channel: %s] Error creating consenter: %s", cs.ChainID(), err)

}

logger.Debugf("[channel: %s] Done creating channel support resources", cs.ChainID())

return cs

}

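ChainSupport can be seen as a synonym for a channel. Let's look at what creating one involves.

- StandardChannel is the counterpart of SystemChannel. When a channel is being created, SystemChannel handles the config update message. Once the channel exists, StandardChannel takes over; that is analyzed in a later post.

- The channel's consensus service among the orderers also has to be initialized.

- The rest (the signing identity, the block writer, ledgerResources, the cutter, and so on) needs no repetition; anyone who has read the earlier posts knows what they do.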
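The consenter setup is essentially a registry lookup keyed by the consensus type from the channel config. A minimal sketch under my own assumptions follows: the `Consenter` interface here is hypothetical and much smaller than the real one, and it returns an error where newChainSupport panics.

```go
package main

import "fmt"

// Consenter is a hypothetical, reduced version of a consensus plugin.
type Consenter interface {
	HandleChain(channelID string) (string, error)
}

// solo is a toy consenter that just describes the chain it would run.
type solo struct{}

func (solo) HandleChain(channelID string) (string, error) {
	return "solo chain for " + channelID, nil
}

// startChain picks the consenter registered for the channel's consensus
// type and asks it to create the chain for that channel.
func startChain(consenters map[string]Consenter, consensusType, channelID string) (string, error) {
	consenter, ok := consenters[consensusType]
	if !ok {
		return "", fmt.Errorf("no consenter registered for type %q", consensusType)
	}
	return consenter.HandleChain(channelID)
}

func main() {
	consenters := map[string]Consenter{"solo": solo{}}
	fmt.Println(startChain(consenters, "solo", "mychannel"))
	fmt.Println(startChain(consenters, "kafka", "mychannel"))
}
```

Since the type comes from the channel configuration, different channels on the same orderer can in principle be wired to different consenters, as long as each type is registered.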

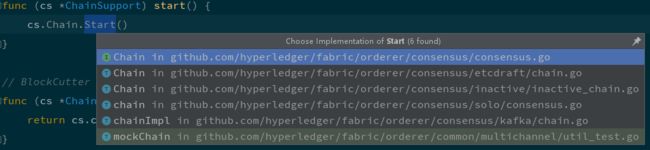

Next the ChainSupport is started, which means the channel officially begins running.

ChainSupport.start

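Just a brief mention here; see the orderer consensus post for the details.

- Starting mainly does a few things:

  - receive the messages sent by peers

  - hand them to the orderer module to produce blocks

  - reach consensus within the orderer cluster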

WriteBlock

bw.WriteBlock(block, encodedMetadataValue)

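The underlying ledger was initialized earlier. This call writes the config block that carries the channel-creation transaction to the system channel's local ledger; the new channel's genesis block was already appended to the channel's own ledger inside newChain. After that, the consensus mechanism makes sure the block is synced to the other orderers.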

Peer

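So far we have covered how the channel-creation request flows through the orderer. But don't forget that the peer is the one that started it all. It does not simply tell the orderer "create a channel" and walk away; there are follow-up steps. Let's take a look.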

func executeCreate(cf *ChannelCmdFactory) error {

err := sendCreateChainTransaction(cf)

if err != nil {

return err

}

block, err := getGenesisBlock(cf)

if err != nil {

return err

}

b, err := proto.Marshal(block)

if err != nil {

return err

}

file := channelID + ".block"

if outputBlock != common.UndefinedParamValue {

file = outputBlock

}

err = ioutil.WriteFile(file, b, 0644)

if err != nil {

return err

}

return nil

}

- sendCreateChainTransaction sends the request to the orderer.

- getGenesisBlock is the key step.

- Finally the block is written to a local block file.

getGenesisBlock

func getGenesisBlock(cf *ChannelCmdFactory) (*cb.Block, error) {

timer := time.NewTimer(timeout)

defer timer.Stop()

for {

select {

case <-timer.C:

cf.DeliverClient.Close()

return nil, errors.New("timeout waiting for channel creation")

default:

if block, err := cf.DeliverClient.GetSpecifiedBlock(0); err != nil {

cf.DeliverClient.Close()

cf, err = InitCmdFactory(EndorserNotRequired, PeerDeliverNotRequired, OrdererRequired)

if err != nil {

return nil, errors.WithMessage(err, "failed connecting")

}

time.Sleep(200 * time.Millisecond)

} else {

cf.DeliverClient.Close()

return block, nil

}

}

}

}

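- If this is unfamiliar, see the earlier Deliver post. In short, Deliver is the service through which a peer pulls blocks from the orderer.

- Here the genesis block is requested from the orderer. Success means the orderer has finished processing and persisted the block to the ledger. The block fetched is the channel's first block (block 0), i.e. the genesis block, which holds the complete channel configuration that all the work above produced.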
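The retry loop in getGenesisBlock is a general "poll until ready or time out" pattern. Here is a self-contained sketch of it; `pollUntil` and `errNotReady` are hypothetical names, the fetch function is a stub, and the reconnect step of the real code is left out.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// errNotReady is a made-up sentinel for "the block is not there yet".
var errNotReady = errors.New("block not available yet")

// pollUntil calls fetch until it succeeds or the timeout expires, sleeping
// for the given interval between attempts. Durations are in milliseconds.
func pollUntil(timeoutMs, intervalMs int, fetch func() (string, error)) (string, error) {
	deadline := time.NewTimer(time.Duration(timeoutMs) * time.Millisecond)
	defer deadline.Stop()
	for {
		select {
		case <-deadline.C:
			return "", errors.New("timeout waiting for channel creation")
		default:
			block, err := fetch()
			if err == nil {
				return block, nil
			}
			time.Sleep(time.Duration(intervalMs) * time.Millisecond)
		}
	}
}

func main() {
	attempts := 0
	block, err := pollUntil(1000, 10, func() (string, error) {
		attempts++
		if attempts < 3 {
			return "", errNotReady
		}
		return "genesis block", nil
	})
	fmt.Println(block, err, attempts)
}
```

The select with a default branch checks the deadline without blocking, so the loop keeps retrying and only gives up once the timer has fired.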

Summary

Looking back at the three files involved in the flow up to channel creation, things are much clearer now.

configtxgen -profile TwoOrgsOrdererGenesis -channelID byfn-sys-channel -outputBlock ./channel-artifacts/genesis.block

This can be understood as the system-level genesis.block, which is provided to the orderer.

    TwoOrgsOrdererGenesis:
        <<: *ChannelDefaults
        Orderer:
            <<: *OrdererDefaults
            Organizations:
                - *OrdererOrg
        Consortiums:
            SampleConsortium:
                Organizations:
                    - *Org1
                    - *Org2
                    - *Org3

configtxgen -profile TwoOrgsChannel -outputCreateChannelTx ./channel-artifacts/channel.tx -channelID $CHANNEL_NAME

This one generates the configuration file for a specific channel, i.e. for a specific ledger.

    TwoOrgsChannel:
        Consortium: SampleConsortium
        Application:
            <<: *ApplicationDefaults
            Organizations:
                - *Org1
                - *Org2
                - *Org3

peer channel create -o orderer.example.com:7050 -c mychannel -f ./channel-artifacts/channel.tx

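- Running this produces mychannel.block.

- And as we now know, this step merges the system-level configuration with the channel configuration to produce one complete configuration, which becomes the channel's genesis block.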
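As a mental model of that merge, think of overlaying the channel's update on top of a template derived from the system channel. The sketch below is deliberately naive and entirely my own: `mergeConfig` is hypothetical, the keys and values are illustrative, and the real ProposeConfigUpdate also deals with versions, mod policies, and signatures, none of which appear here.

```go
package main

import "fmt"

// mergeConfig overlays the update on the template and returns a new,
// complete config. Neither input map is modified.
func mergeConfig(template, update map[string]string) map[string]string {
	full := make(map[string]string, len(template)+len(update))
	for k, v := range template {
		full[k] = v
	}
	for k, v := range update {
		full[k] = v
	}
	return full
}

func main() {
	// Template: what the system channel contributes (illustrative keys).
	template := map[string]string{
		"/Channel/Orderer/ConsensusType": "solo",
		"/Channel/Orderer/BatchTimeout":  "2s",
		"/Channel/Consortium":            "SampleConsortium",
	}
	// Update: what the channel creation transaction contributes.
	update := map[string]string{
		"/Channel/Application/Organizations": "Org1,Org2,Org3",
	}
	full := mergeConfig(template, update)
	fmt.Println(len(full), full["/Channel/Orderer/ConsensusType"])
}
```

The orderer section comes from the system channel and the application section from channel.tx, which matches the two configtxgen profiles shown above.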

Appendix

BundleSource

As you can see, this class is a view over the channel configuration. Much nicer to use than an envelope, isn't it?

type BundleSource struct {

bundle atomic.Value

callbacks []BundleActor

}

// BundleActor performs an operation based on the given bundle

type BundleActor func(bundle *Bundle)

// NewBundleSource creates a new BundleSource with an initial Bundle value

// The callbacks will be invoked whenever the Update method is called for the

// BundleSource. Note, these callbacks are called immediately before this function

// returns.

func NewBundleSource(bundle *Bundle, callbacks ...BundleActor) *BundleSource {

bs := &BundleSource{

callbacks: callbacks,

}

bs.Update(bundle)

return bs

}

// Update sets a new bundle as the bundle source and calls any registered callbacks

func (bs *BundleSource) Update(newBundle *Bundle) {

bs.bundle.Store(newBundle)

for _, callback := range bs.callbacks {

callback(newBundle)

}

}

// StableBundle returns a pointer to a stable Bundle.

// It is stable because calls to its assorted methods will always return the same

// result, as the underlying data structures are immutable. For instance, calling

// BundleSource.Orderer() and BundleSource.MSPManager() to get first the list of orderer

// orgs, then querying the MSP for those org definitions could result in a bug because an

// update might replace the underlying Bundle in between. Therefore, for operations

// which require consistency between the Bundle calls, the caller should first retrieve

// a StableBundle, then operate on it.

func (bs *BundleSource) StableBundle() *Bundle {

return bs.bundle.Load().(*Bundle)

}

// PolicyManager returns the policy manager constructed for this config

func (bs *BundleSource) PolicyManager() policies.Manager {

return bs.StableBundle().policyManager

}

// MSPManager returns the MSP manager constructed for this config

func (bs *BundleSource) MSPManager() msp.MSPManager {

return bs.StableBundle().mspManager

}

// ChannelConfig returns the config.Channel for the chain

func (bs *BundleSource) ChannelConfig() Channel {

return bs.StableBundle().ChannelConfig()

}

// OrdererConfig returns the config.Orderer for the channel

// and whether the Orderer config exists

func (bs *BundleSource) OrdererConfig() (Orderer, bool) {

return bs.StableBundle().OrdererConfig()

}

// ConsortiumsConfig() returns the config.Consortiums for the channel

// and whether the consortiums config exists

func (bs *BundleSource) ConsortiumsConfig() (Consortiums, bool) {

return bs.StableBundle().ConsortiumsConfig()

}

// ApplicationConfig returns the Application config for the channel

// and whether the Application config exists

func (bs *BundleSource) ApplicationConfig() (Application, bool) {

return bs.StableBundle().ApplicationConfig()

}

// ConfigtxValidator returns the configtx.Validator for the channel

func (bs *BundleSource) ConfigtxValidator() configtx.Validator {

return bs.StableBundle().ConfigtxValidator()

}

// ValidateNew passes through to the current bundle

func (bs *BundleSource) ValidateNew(resources Resources) error {

return bs.StableBundle().ValidateNew(resources)

}

Bundle

channelconfig.Bundle{

policyManager: &policies.ManagerImpl{

path: "Channel",

policies: {

"Orderer/Writers": &policies.implicitMetaPolicy{

threshold: 0,

subPolicies: {

},

managers: {

},

subPolicyName: "Writers",

},

"Orderer/Admins": &policies.implicitMetaPolicy{

threshold: 0,

subPolicies: {

},

managers: {

},

subPolicyName: "Admins",

},

"Application/ChannelCreationPolicy": &policies.implicitMetaPolicy{

threshold: 0,

subPolicies: {

},

managers: {

},

subPolicyName: "Admins",

},

"Admins": &policies.implicitMetaPolicy{

threshold: 2,

subPolicies: {

&policies.policyLogger{

policy: &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

policyName: "/Channel/Orderer/Admins",

},

"Admins",

},

managers: {

"Orderer": &policies.ManagerImpl{

path: "Channel/Orderer",

policies: {

"BlockValidation": &policies.implicitMetaPolicy{

threshold: 0,

subPolicies: {

},

managers: {

},

subPolicyName: "Writers",

},

"Readers": &policies.implicitMetaPolicy{

threshold: 0,

subPolicies: {

},

managers: {

},

subPolicyName: "Readers",

},

"Writers": &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

"Admins": &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

},

managers: {

},

},

"Application": &policies.ManagerImpl{

path: "Channel/Application",

policies: {

"ChannelCreationPolicy": &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

},

managers: {

},

},

},

subPolicyName: "Admins",

},

"Readers": &policies.implicitMetaPolicy{

threshold: 1,

subPolicies: {

&policies.policyLogger{

policy: &policies.implicitMetaPolicy{

threshold: 0,

subPolicies: {

},

managers: {

},

subPolicyName: "Readers",

},

policyName: "/Channel/Orderer/Readers",

},

"Readers",

},

managers: {

"Orderer": &policies.ManagerImpl{

path: "Channel/Orderer",

policies: {

"BlockValidation": &policies.implicitMetaPolicy{

threshold: 0,

subPolicies: {

},

managers: {

},

subPolicyName: "Writers",

},

"Readers": &policies.implicitMetaPolicy{

threshold: 0,

subPolicies: {

},

managers: {

},

subPolicyName: "Readers",

},

"Writers": &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

"Admins": &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

},

managers: {

},

},

"Application": &policies.ManagerImpl{

path: "Channel/Application",

policies: {

"ChannelCreationPolicy": &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

},

managers: {

},

},

},

subPolicyName: "Readers",

},

"Writers": &policies.implicitMetaPolicy{

threshold: 1,

subPolicies: {

&policies.policyLogger{

policy: &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

policyName: "/Channel/Orderer/Writers",

},

"Writers",

},

managers: {

"Application": &policies.ManagerImpl{

path: "Channel/Application",

policies: {

"ChannelCreationPolicy": &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

},

managers: {

},

},

"Orderer": &policies.ManagerImpl{

path: "Channel/Orderer",

policies: {

"BlockValidation": &policies.implicitMetaPolicy{

threshold: 0,

subPolicies: {

},

managers: {

},

subPolicyName: "Writers",

},

"Readers": &policies.implicitMetaPolicy{

threshold: 0,

subPolicies: {

},

managers: {

},

subPolicyName: "Readers",

},

"Writers": &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

"Admins": &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

},

managers: {

},

},

},

subPolicyName: "Writers",

},

"Orderer/BlockValidation": &policies.implicitMetaPolicy{

threshold: 0,

subPolicies: {

},

managers: {

},

subPolicyName: "Writers",

},

"Orderer/Readers": &policies.implicitMetaPolicy{

threshold: 0,

subPolicies: {

},

managers: {

},

subPolicyName: "Readers",

},

},

managers: {

"Orderer": &policies.ManagerImpl{

path: "Channel/Orderer",

policies: {

"BlockValidation": &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

"Readers": &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

"Writers": &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

"Admins": &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

},

managers: {

},

},

"Application": &policies.ManagerImpl{

path: "Channel/Application",

policies: {

"ChannelCreationPolicy": &policies.implicitMetaPolicy{(CYCLIC REFERENCE)},

},

managers: {

},

},

},

},

mspManager: nil,

channelConfig: &channelconfig.ChannelConfig{

protos: &channelconfig.ChannelProtos{

HashingAlgorithm: &common.HashingAlgorithm{

Name: "SHA256",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

BlockDataHashingStructure: &common.BlockDataHashingStructure{

Width: 0xffffffff,

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

OrdererAddresses: &common.OrdererAddresses{

Addresses: {"127.0.0.1:7050"},

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

Consortium: &common.Consortium{

Name: "SampleConsortium",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

Capabilities: &common.Capabilities{

Capabilities: {

"V1_3": &common.Capability{},

},

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

},

hashingAlgorithm: func([]uint8) []uint8 {...},

mspManager: &msp.mspManagerImpl{

mspsMap: {

},

mspsByProviders: {

},

up: true,

},

appConfig: &channelconfig.ApplicationConfig{

applicationOrgs: {

},

protos: &channelconfig.ApplicationProtos{

ACLs: &peer.ACLs{},

Capabilities: &common.Capabilities{},

},

},

ordererConfig: &channelconfig.OrdererConfig{

protos: &channelconfig.OrdererProtos{

ConsensusType: &orderer.ConsensusType{

Type: "solo",

Metadata: nil,

MigrationState: 0,

MigrationContext: 0x0,

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

BatchSize: &orderer.BatchSize{

MaxMessageCount: 0xa,

AbsoluteMaxBytes: 0xa00000,

PreferredMaxBytes: 0x80000,

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

BatchTimeout: &orderer.BatchTimeout{

Timeout: "2s",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

KafkaBrokers: &orderer.KafkaBrokers{},

ChannelRestrictions: &orderer.ChannelRestrictions{},

Capabilities: &common.Capabilities{

Capabilities: {

"V1_1": &common.Capability{},

},

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

},

orgs: {

},

batchTimeout: 2000000000,

},

consortiumsConfig: (*channelconfig.ConsortiumsConfig)(nil),

},

configtxManager: &configtx.ValidatorImpl{

channelID: "test-new-chain",

sequence: 0x0,

configMap: {

"[Policy] /Channel/Orderer/Writers": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: (*common.ConfigValue)(nil),

ConfigPolicy: &common.ConfigPolicy{

Version: 0x0,

Policy: &common.Policy{

Type: 3,

Value: {0xa, 0x7, 0x57, 0x72, 0x69, 0x74, 0x65, 0x72, 0x73},

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

key: "Writers",

path: {"Channel", "Orderer"},

},

"[Group] /Channel/Application": {

ConfigGroup: &common.ConfigGroup{

Version: 0x0,

Groups: {

},

Values: {

},

Policies: {

"ChannelCreationPolicy": &common.ConfigPolicy{

Version: 0x0,

Policy: &common.Policy{

Type: 3,

Value: {0xa, 0x6, 0x41, 0x64, 0x6d, 0x69, 0x6e, 0x73},

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ModPolicy: "",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

},

ModPolicy: "ChannelCreationPolicy",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ConfigValue: (*common.ConfigValue)(nil),

ConfigPolicy: (*common.ConfigPolicy)(nil),

key: "Application",

path: {"Channel"},

},

"[Value] /Channel/Orderer/BatchSize": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: &common.ConfigValue{

Version: 0x0,

Value: {0x8, 0xa, 0x10, 0x80, 0x80, 0x80, 0x5, 0x18, 0x80, 0x80, 0x20},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ConfigPolicy: (*common.ConfigPolicy)(nil),

key: "BatchSize",

path: {"Channel", "Orderer"},

},

"[Policy] /Channel/Orderer/BlockValidation": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: (*common.ConfigValue)(nil),

ConfigPolicy: &common.ConfigPolicy{

Version: 0x0,

Policy: &common.Policy{

Type: 3,

Value: {0xa, 0x7, 0x57, 0x72, 0x69, 0x74, 0x65, 0x72, 0x73},

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

key: "BlockValidation",

path: {"Channel", "Orderer"},

},

"[Policy] /Channel/Orderer/Admins": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: (*common.ConfigValue)(nil),

ConfigPolicy: &common.ConfigPolicy{

Version: 0x0,

Policy: &common.Policy{

Type: 3,

Value: {0xa, 0x6, 0x41, 0x64, 0x6d, 0x69, 0x6e, 0x73, 0x10, 0x2},

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

key: "Admins",

path: {"Channel", "Orderer"},

},

"[Policy] /Channel/Readers": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: (*common.ConfigValue)(nil),

ConfigPolicy: &common.ConfigPolicy{

Version: 0x0,

Policy: &common.Policy{

Type: 3,

Value: {0xa, 0x7, 0x52, 0x65, 0x61, 0x64, 0x65, 0x72, 0x73},

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

key: "Readers",

path: {"Channel"},

},

"[Policy] /Channel/Writers": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: (*common.ConfigValue)(nil),

ConfigPolicy: &common.ConfigPolicy{

Version: 0x0,

Policy: &common.Policy{

Type: 3,

Value: {0xa, 0x7, 0x57, 0x72, 0x69, 0x74, 0x65, 0x72, 0x73},

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

key: "Writers",

path: {"Channel"},

},

"[Value] /Channel/Orderer/ConsensusType": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: &common.ConfigValue{

Version: 0x0,

Value: {0xa, 0x4, 0x73, 0x6f, 0x6c, 0x6f},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ConfigPolicy: (*common.ConfigPolicy)(nil),

key: "ConsensusType",

path: {"Channel", "Orderer"},

},

"[Value] /Channel/Orderer/Capabilities": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: &common.ConfigValue{

Version: 0x0,

Value: {0xa, 0x8, 0xa, 0x4, 0x56, 0x31, 0x5f, 0x31, 0x12, 0x0},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ConfigPolicy: (*common.ConfigPolicy)(nil),

key: "Capabilities",

path: {"Channel", "Orderer"},

},

"[Value] /Channel/Orderer/BatchTimeout": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: &common.ConfigValue{

Version: 0x0,

Value: {0xa, 0x2, 0x32, 0x73},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ConfigPolicy: (*common.ConfigPolicy)(nil),

key: "BatchTimeout",

path: {"Channel", "Orderer"},

},

"[Value] /Channel/HashingAlgorithm": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: &common.ConfigValue{

Version: 0x0,

Value: {0xa, 0x6, 0x53, 0x48, 0x41, 0x32, 0x35, 0x36},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ConfigPolicy: (*common.ConfigPolicy)(nil),

key: "HashingAlgorithm",

path: {"Channel"},

},

"[Group] /Channel/Orderer": {

ConfigGroup: &common.ConfigGroup{

Version: 0x0,

Groups: {},

Values: {

"BatchTimeout": &common.ConfigValue{(CYCLIC REFERENCE)},

"ChannelRestrictions": &common.ConfigValue{

Version: 0x0,

Value: nil,

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

"Capabilities": &common.ConfigValue{(CYCLIC REFERENCE)},

"ConsensusType": &common.ConfigValue{(CYCLIC REFERENCE)},

"BatchSize": &common.ConfigValue{(CYCLIC REFERENCE)},

},

Policies: {

"BlockValidation": &common.ConfigPolicy{(CYCLIC REFERENCE)},

"Readers": &common.ConfigPolicy{

Version: 0x0,

Policy: &common.Policy{

Type: 3,

Value: {0xa, 0x7, 0x52, 0x65, 0x61, 0x64, 0x65, 0x72, 0x73},

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

"Writers": &common.ConfigPolicy{(CYCLIC REFERENCE)},

"Admins": &common.ConfigPolicy{(CYCLIC REFERENCE)},

},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ConfigValue: (*common.ConfigValue)(nil),

ConfigPolicy: (*common.ConfigPolicy)(nil),

key: "Orderer",

path: {"Channel"},

},

"[Value] /Channel/Orderer/ChannelRestrictions": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: &common.ConfigValue{

Version: 0x0,

Value: nil,

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ConfigPolicy: (*common.ConfigPolicy)(nil),

key: "ChannelRestrictions",

path: {"Channel", "Orderer"},

},

"[Policy] /Channel/Orderer/Readers": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: (*common.ConfigValue)(nil),

ConfigPolicy: &common.ConfigPolicy{

Version: 0x0,

Policy: &common.Policy{

Type: 3,

Value: {0xa, 0x7, 0x52, 0x65, 0x61, 0x64, 0x65, 0x72, 0x73},

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

key: "Readers",

path: {"Channel", "Orderer"},

},

"[Value] /Channel/OrdererAddresses": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: &common.ConfigValue{

Version: 0x0,

Value: {0xa, 0xe, 0x31, 0x32, 0x37, 0x2e, 0x30, 0x2e, 0x30, 0x2e, 0x31, 0x3a, 0x37, 0x30, 0x35, 0x30},

ModPolicy: "/Channel/Orderer/Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ConfigPolicy: (*common.ConfigPolicy)(nil),

key: "OrdererAddresses",

path: {"Channel"},

},

"[Value] /Channel/Consortium": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: &common.ConfigValue{

Version: 0x0,

Value: {0xa, 0x10, 0x53, 0x61, 0x6d, 0x70, 0x6c, 0x65, 0x43, 0x6f, 0x6e, 0x73, 0x6f, 0x72, 0x74, 0x69, 0x75, 0x6d},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ConfigPolicy: (*common.ConfigPolicy)(nil),

key: "Consortium",

path: {"Channel"},

},

"[Value] /Channel/Capabilities": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: &common.ConfigValue{

Version: 0x0,

Value: {0xa, 0x8, 0xa, 0x4, 0x56, 0x31, 0x5f, 0x33, 0x12, 0x0},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ConfigPolicy: (*common.ConfigPolicy)(nil),

key: "Capabilities",

path: {"Channel"},

},

"[Group] /Channel": {

ConfigGroup: &common.ConfigGroup{

Version: 0x0,

Groups: {

"Orderer": &common.ConfigGroup{(CYCLIC REFERENCE)},

"Application": &common.ConfigGroup{(CYCLIC REFERENCE)},

},

Values: {

"HashingAlgorithm": &common.ConfigValue{(CYCLIC REFERENCE)},

"BlockDataHashingStructure": &common.ConfigValue{

Version: 0x0,

Value: {0x8, 0xff, 0xff, 0xff, 0xff, 0xf},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

"OrdererAddresses": &common.ConfigValue{(CYCLIC REFERENCE)},

"Consortium": &common.ConfigValue{(CYCLIC REFERENCE)},

"Capabilities": &common.ConfigValue{(CYCLIC REFERENCE)},

},

Policies: {

"Readers": &common.ConfigPolicy{(CYCLIC REFERENCE)},

"Writers": &common.ConfigPolicy{(CYCLIC REFERENCE)},

"Admins": &common.ConfigPolicy{

Version: 0x0,

Policy: &common.Policy{

Type: 3,

Value: {0xa, 0x6, 0x41, 0x64, 0x6d, 0x69, 0x6e, 0x73, 0x10, 0x2},

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ConfigValue: (*common.ConfigValue)(nil),

ConfigPolicy: (*common.ConfigPolicy)(nil),

key: "Channel",

path: {},

},

"[Policy] /Channel/Application/ChannelCreationPolicy": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: (*common.ConfigValue)(nil),

ConfigPolicy: &common.ConfigPolicy{

Version: 0x0,

Policy: &common.Policy{

Type: 3,

Value: {0xa, 0x6, 0x41, 0x64, 0x6d, 0x69, 0x6e, 0x73},

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ModPolicy: "",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

key: "ChannelCreationPolicy",

path: {"Channel", "Application"},

},

"[Value] /Channel/BlockDataHashingStructure": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: &common.ConfigValue{

Version: 0x0,

Value: {0x8, 0xff, 0xff, 0xff, 0xff, 0xf},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ConfigPolicy: (*common.ConfigPolicy)(nil),

key: "BlockDataHashingStructure",

path: {"Channel"},

},

"[Policy] /Channel/Admins": {

ConfigGroup: (*common.ConfigGroup)(nil),

ConfigValue: (*common.ConfigValue)(nil),

ConfigPolicy: &common.ConfigPolicy{

Version: 0x0,

Policy: &common.Policy{

Type: 3,

Value: {0xa, 0x6, 0x41, 0x64, 0x6d, 0x69, 0x6e, 0x73, 0x10, 0x2},

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

ModPolicy: "Admins",

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

key: "Admins",

path: {"Channel"},

},

},

configProto: &common.Config{

Sequence: 0x0,

ChannelGroup: &common.ConfigGroup{(CYCLIC REFERENCE)},

XXX_NoUnkeyedLiteral: struct {}{},

XXX_unrecognized: nil,

XXX_sizecache: 0,

},

namespace: "Channel",

pm: &policies.ManagerImpl{(CYCLIC REFERENCE)},

},