5. Regression Algorithms: Debugging the Gradient-Descent Logistic Regression Code

Solving logistic regression with gradient descent

《跟着迪哥学Python数据分析与机器学习实战》

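Error 1

path = 'data' + os.sep + 'LogiReg_data.txt'

#os.sep is the path separator of the current platform: '\' on Windows, '/' on Linux

#os.sep picks the right separator automatically; the path itself is still relative to the current working directory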
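The separator logic above can be checked with a short sketch. It assumes the same hypothetical layout as the original line (a `data` folder next to the notebook) and compares three ways of building the path; the existence check is an addition for illustration, not part of the book's code.

```python
import os
from pathlib import Path

# Hypothetical layout: a 'data' folder next to the notebook (same as the original line).
path_with_sep = 'data' + os.sep + 'LogiReg_data.txt'
path_with_join = os.path.join('data', 'LogiReg_data.txt')
path_with_pathlib = Path('data') / 'LogiReg_data.txt'

# All three spell the same relative path for the current platform.
print(path_with_sep == path_with_join == str(path_with_pathlib))

# A relative path is resolved against the current working directory,
# so checking for the file first gives a clearer message than read_csv would.
if not path_with_pathlib.exists():
    print('not found:', path_with_pathlib.resolve())
```

If the file is reported as not found, either move it into that folder or pass the file's full path instead.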

Fix

import pandas as pd

path=r'D:\software\Anaconda\JupyterNotebookCode\LogiReg_data.txt'

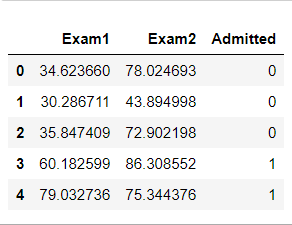

pdData=pd.read_csv(path,header=None,names=['Exam 1','Exam 2','Admitted']) #column names must match the 'Exam 1'/'Exam 2' keys used later when plotting

pdData.head()

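#Alternative 1: import read_csv directly; a bare file name is resolved against the current working directory

from pandas import read_csv

pdData=read_csv('LogiReg_data.txt',header=None,names=['Exam 1','Exam 2','Admitted'])

pdData.head()

#Alternative 2: the same call through the pd namespace

import pandas as pd

pdData=pd.read_csv('LogiReg_data.txt',header=None,names=['Exam 1','Exam 2','Admitted'])

pdData.head()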

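Error 2

pdData.insert(0,'Ones',1)

#running this cell a second time raises ValueError, because a column named 'Ones' already exists

Fix

pdData.insert(0,'Ones',1,allow_duplicates=True)

#allow_duplicates=True suppresses the error, but every re-run then adds another 'Ones' column, so run the cell only once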
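An alternative to `allow_duplicates=True` is to insert the column only when it is missing, so the cell can be re-run safely. The sketch below uses a tiny made-up frame in place of `pdData`, and `add_ones_column` is a hypothetical helper, not something from the book.

```python
import pandas as pd

# Small stand-in frame with the same column names as pdData (values are made up).
df = pd.DataFrame({'Exam 1': [34.6, 60.2], 'Exam 2': [78.0, 86.3], 'Admitted': [0, 1]})

def add_ones_column(frame):
    """Insert the intercept column only if it is not there yet (hypothetical helper)."""
    if 'Ones' not in frame.columns:
        frame.insert(0, 'Ones', 1)
    return frame

add_ones_column(df)
add_ones_column(df)  # second call is a no-op instead of an error or a duplicate column

print(list(df.columns))
```

This keeps the frame at four columns however many times the cell runs, which is what the later `X`/`y` slicing relies on.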

#Logistic Regression

#The data

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

%matplotlib inline

#inline renders static plots; notebook renders interactive ones

import os

print(os.getcwd()) #show the current working directory

path=r'D:\software\Anaconda\JupyterNotebookCode\LogiReg_data.txt'

#if the file is in the working directory printed above, the bare name 'LogiReg_data.txt' works as the path too

pdData=pd.read_csv(path,header=None,names=['Exam 1','Exam 2','Admitted'])

pdData.head()

pdData.shape #check the dimensions: 100 rows, 3 columns

positive=pdData[pdData['Admitted']==1] #positive examples (admitted)

negative=pdData[pdData['Admitted']==0] #negative examples (not admitted)

fig,ax=plt.subplots(figsize=(10,5))

ax.scatter(positive['Exam 1'],positive['Exam 2'],s=30,c='b',marker='o',label='Admitted')

ax.scatter(negative['Exam 1'],negative['Exam 2'],s=30,c='r',marker='x',label='Not Admitted')

ax.legend()

ax.set_xlabel('Exam 1 Score')

ax.set_ylabel('Exam 2 Score')

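#build the classifier: solve for the three parameters theta0, theta1, theta2

#set a threshold and use it to decide the admission outcome

#sigmoid: the function that maps a score to a probability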

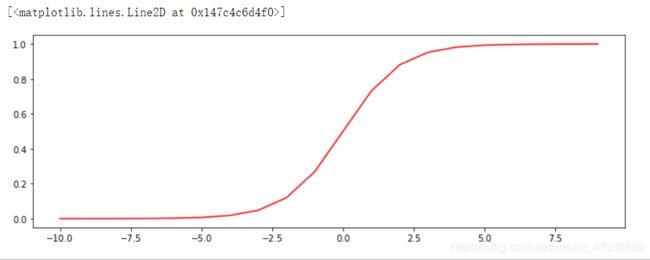

def sigmoid(z):
    return 1/(1+np.exp(-z)) #the sigmoid formula

nums=np.arange(-10,10,step=1)

#creates a vector of 20 equally spaced values from -10 to 9 (the stop value 10 is excluded)

fig,ax=plt.subplots(figsize=(12,4))

ax.plot(nums,sigmoid(nums),'r')
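Besides plotting, a quick numeric check can confirm the curve behaves as expected. This is a small sketch that redefines `sigmoid` so it runs on its own; the three test points are arbitrary.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

z = np.array([-10.0, 0.0, 10.0])
p = sigmoid(z)

print(p[1])                               # value at z = 0
print(np.all((p > 0) & (p < 1)))          # outputs stay strictly between 0 and 1
print(np.allclose(p + sigmoid(-z), 1.0))  # symmetry: sigmoid(z) + sigmoid(-z) = 1
```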

#model returns the predicted probabilities

def model(X,theta): #prediction
    return sigmoid(np.dot(X,theta.T)) #matrix product: (n,3) x (3,1) -> (n,1)
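The shapes involved are easy to get wrong, so here is a minimal sketch with two made-up rows. It assumes the same layout the notebook uses later (a ones column followed by the two exam scores) and a `theta` of shape (1, 3).

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def model(X, theta):
    return sigmoid(np.dot(X, theta.T))

X_demo = np.array([[1.0, 34.6, 78.0],
                   [1.0, 60.2, 86.3]])   # made-up rows: Ones, Exam 1, Exam 2
theta_demo = np.zeros([1, 3])

probs = model(X_demo, theta_demo)
print(probs.shape)  # one probability per row, as a column vector
print(probs)        # with theta all zeros, every score is 0
```

Because the result is a column vector, it lines up with a `y` of shape (n, 1) in element-wise operations.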

#pdData.insert(0,'Ones',1) #add a column whose values are all 1; raises ValueError if the cell is run again

pdData.insert(0,'Ones',1,allow_duplicates=True)

# the original notebook put the insert in a try / except structure so as not to return an error if the block is executed several times

# allow_duplicates=True avoids the error too, but every re-run then adds another 'Ones' column, so run this cell once

# set X (training data) and y (target variable)

#orig_data=pdData.as_matrix() #as_matrix() was removed in pandas 1.0

orig_data=pdData.values

# convert the pandas representation of the data to an array useful for further computations

cols=orig_data.shape[1]

X=orig_data[:,0:cols-1]

y=orig_data[:,cols-1:cols]

# convert to numpy arrays and initialize the parameter array theta

#X = np.matrix(X.values)

#y = np.matrix(data.iloc[:,3:4].values) #np.array(y.values)

theta=np.zeros([1,3]) #the parameters: 1 row, 3 columns

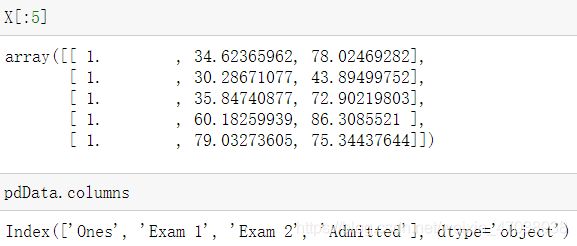

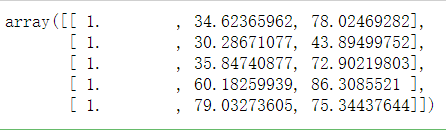

X[:5]

pdData.columns

y[:5]

theta

X.shape,y.shape,theta.shape

#cost computes the loss for the given parameters

def cost(X,y,theta):
    left=np.multiply(-y,np.log(model(X,theta)))
    right=np.multiply(1-y,np.log(1-model(X,theta)))
    return np.sum(left-right)/(len(X))

cost(X,y,theta)

#0.6931471805599453
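The value 0.693... is ln 2, and it does not depend on the data: with `theta` all zeros every prediction is 0.5, so each sample contributes -log(0.5). The sketch below checks this on made-up data; the random features and labels are only for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cost(X, y, theta):
    h = sigmoid(np.dot(X, theta.T))
    return np.sum(-y * np.log(h) - (1 - y) * np.log(1 - h)) / len(X)

rng = np.random.default_rng(0)
X_demo = np.hstack([np.ones((8, 1)), rng.normal(size=(8, 2))])  # made-up features
y_demo = rng.integers(0, 2, size=(8, 1))                        # made-up 0/1 labels

initial_cost = cost(X_demo, y_demo, np.zeros([1, 3]))
print(initial_cost, np.log(2))
```

Getting ln 2 at the start is therefore a handy sanity check that `cost` and `model` are wired together correctly.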

#gradient computes the gradient with respect to each parameter

def gradient(X,y,theta):
    grad=np.zeros(theta.shape) #one gradient component per theta
    error=(model(X,theta)-y).ravel()
    for j in range(len(theta.ravel())): #for each parameter
        term=np.multiply(error,X[:,j])
        grad[0,j]=np.sum(term)/len(X)
    return grad
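The per-parameter loop can also be written as one matrix product. The following sketch compares the two on made-up data; `gradient_loop` mirrors the function above and `gradient_vectorized` is a suggested equivalent, not the book's code.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def model(X, theta):
    return sigmoid(np.dot(X, theta.T))

def gradient_loop(X, y, theta):
    grad = np.zeros(theta.shape)
    error = (model(X, theta) - y).ravel()
    for j in range(len(theta.ravel())):
        grad[0, j] = np.sum(error * X[:, j]) / len(X)
    return grad

def gradient_vectorized(X, y, theta):
    error = model(X, theta) - y          # shape (n, 1)
    return np.dot(error.T, X) / len(X)   # (1, n) x (n, 3) -> (1, 3)

rng = np.random.default_rng(1)
X_demo = np.hstack([np.ones((10, 1)), rng.normal(size=(10, 2))])  # made-up features
y_demo = rng.integers(0, 2, size=(10, 1)).astype(float)           # made-up labels
theta_demo = rng.normal(size=(1, 3))

g_loop = gradient_loop(X_demo, y_demo, theta_demo)
g_vec = gradient_vectorized(X_demo, y_demo, theta_demo)
print(np.allclose(g_loop, g_vec))
```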

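#descent performs the parameter updates

STOP_ITER=0 #stop on the number of iterations

STOP_COST=1 #stop on the change in the loss

STOP_GRAD=2 #stop on the gradient norm

def stopCriterion(type,value,threshold):
    #three different stopping strategies; threshold is the cutoff for the chosen one
    if type==STOP_ITER:return value>threshold
    elif type==STOP_COST:return abs(value[-1]-value[-2])<threshold
    elif type==STOP_GRAD:return np.linalg.norm(value)<threshold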
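To make the three strategies concrete, the sketch below feeds each one a made-up value. The function is restated under the hypothetical name `stop_criterion` (with `kind` instead of `type`, to avoid shadowing the built-in) so the example runs on its own.

```python
import numpy as np

STOP_ITER, STOP_COST, STOP_GRAD = 0, 1, 2

def stop_criterion(kind, value, threshold):
    if kind == STOP_ITER:
        return value > threshold                       # value: iteration counter
    elif kind == STOP_COST:
        return abs(value[-1] - value[-2]) < threshold  # value: list of losses so far
    elif kind == STOP_GRAD:
        return np.linalg.norm(value) < threshold       # value: current gradient

by_iter = stop_criterion(STOP_ITER, 5001, 5000)
by_cost = stop_criterion(STOP_COST, [0.70, 0.65], 1e-6)
by_grad = stop_criterion(STOP_GRAD, np.array([[0.003, 0.0, 0.004]]), 0.05)
print(by_iter, by_cost, by_grad)
```

Here the iteration and gradient criteria fire, while the loss is still changing by 0.05 per step and so does not trigger a stop.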

import numpy.random

#shuffle: randomize the order of all the samples to help the model generalize (np.random.shuffle reorders data in place)

def shuffleData(data):
    np.random.shuffle(data)
    cols=data.shape[1]
    X=data[:,0:cols-1]
    y=data[:,cols-1:]
    return X,y
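Since `np.random.shuffle` reorders its argument in place, the array passed to `shuffleData` is itself changed (rows stay intact, only their order moves). If that side effect is unwanted, a copy-based variant is possible; `shuffle_copy` below is a hypothetical alternative on a made-up array, not the book's code.

```python
import numpy as np

demo = np.arange(12, dtype=float).reshape(4, 3)  # 4 made-up rows: two features and a label
original = demo.copy()

def shuffle_copy(data, rng):
    """Return shuffled X, y without modifying data (hypothetical alternative)."""
    shuffled = rng.permutation(data)   # permutes rows and returns a new array
    return shuffled[:, :-1], shuffled[:, -1:]

X_s, y_s = shuffle_copy(demo, np.random.default_rng(0))

print(np.array_equal(demo, original))  # the input keeps its original order
print(X_s.shape, y_s.shape)
```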

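#track the running time to see how it relates to the result

import time

def descent(data,theta,batchSize,stopType,thresh,alpha):
    #data: the samples, theta: parameters, batchSize: samples per update, stopType: stopping strategy, thresh: threshold, alpha: learning rate
    #solve by gradient descent; n (the number of samples) is read from a global that is set before the first call
    init_time=time.time()
    i=0 #iteration count
    k=0 #start index of the current batch
    X,y=shuffleData(data)
    grad=np.zeros(theta.shape) #the computed gradient
    costs=[cost(X,y,theta)] #loss values
    while True:
        grad=gradient(X[k:k+batchSize],y[k:k+batchSize],theta) #gradient on the current batch
        k+=batchSize #advance by one batch
        if k>=n:
            k=0
            X,y=shuffleData(data) #reshuffle
        theta=theta-alpha*grad #parameter update
        costs.append(cost(X,y,theta)) #compute the new loss
        i+=1
        #decide when to stop
        if stopType==STOP_ITER:value=i
        elif stopType==STOP_COST:value=costs
        elif stopType==STOP_GRAD:value=grad
        if stopCriterion(stopType,value,thresh):break
    return theta,i-1,costs,grad,time.time()-init_time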
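For experimenting without the data file, the loop can be reduced to a self-contained sketch. It makes several assumptions that differ from the code above: synthetic data, a fixed number of iterations as the only stopping rule, the sample count taken from `data.shape[0]` rather than a global `n`, and a copy-based shuffle. `minibatch_descent` is a hypothetical name.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cost(X, y, theta):
    h = sigmoid(X @ theta.T)
    return np.sum(-y * np.log(h) - (1 - y) * np.log(1 - h)) / len(X)

def gradient(X, y, theta):
    return (sigmoid(X @ theta.T) - y).T @ X / len(X)

def minibatch_descent(data, theta, batch_size, n_iter, alpha, rng):
    """Fixed-iteration mini-batch descent; the sample count comes from data.shape[0]."""
    n = data.shape[0]
    data = rng.permutation(data)
    X, y = data[:, :-1], data[:, -1:]
    costs = [cost(X, y, theta)]
    k = 0
    for _ in range(n_iter):
        theta = theta - alpha * gradient(X[k:k + batch_size], y[k:k + batch_size], theta)
        k += batch_size
        if k >= n:                      # one pass finished: reshuffle and start over
            k = 0
            data = rng.permutation(data)
            X, y = data[:, :-1], data[:, -1:]
        costs.append(cost(X, y, theta))
    return theta, costs

# Synthetic stand-in for the exam data: ones column, two features, 0/1 label.
rng = np.random.default_rng(0)
features = rng.normal(size=(100, 2))
labels = (features.sum(axis=1, keepdims=True) > 0).astype(float)
demo = np.hstack([np.ones((100, 1)), features, labels])

theta_fit, costs = minibatch_descent(demo, np.zeros([1, 3]), 16, 500, 0.1, rng)
print(costs[0], costs[-1])
```

Setting `batch_size` to 100 gives batch gradient descent and setting it to 1 gives stochastic gradient descent, matching the three modes explored in the experiments.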

#run gradient descent with the batch size and stopping strategy passed in, then plot the result

def runExpe(data,theta,batchSize,stopType,thresh,alpha):
    #import pdb; pdb.set_trace();
    theta,iter,costs,grad,dur=descent(data,theta,batchSize,stopType,thresh,alpha) #the core call
    name="Original" if (data[:,1]>2).sum()>1 else "Scaled"
    name+=" data - learning rate: {} - ".format(alpha)
    if batchSize==n:strDescType="Gradient"
    elif batchSize==1:strDescType="Stochastic"
    else:strDescType="Mini-batch ({})".format(batchSize)
    name+=strDescType+" descent - Stop: "
    if stopType==STOP_ITER:strStop="{} iterations".format(thresh)
    elif stopType==STOP_COST:strStop="costs change < {}".format(thresh)
    else:strStop="gradient norm < {}".format(thresh)
    name+=strStop
    print("***{}\nTheta: {} - Iter: {} - Last cost: {:03.2f} - Duration: {:03.2f}s".format(
        name, theta, iter, costs[-1], dur))
    fig,ax=plt.subplots(figsize=(12,4))
    ax.plot(np.arange(len(costs)),costs,'r')
    ax.set_xlabel('Iterations')
    ax.set_ylabel('Cost')
    ax.set_title(name.upper()+' - Error vs. Iteration')
    return theta

#the gradient descent variant chosen here uses all the samples for each update (batch gradient descent)

n=100

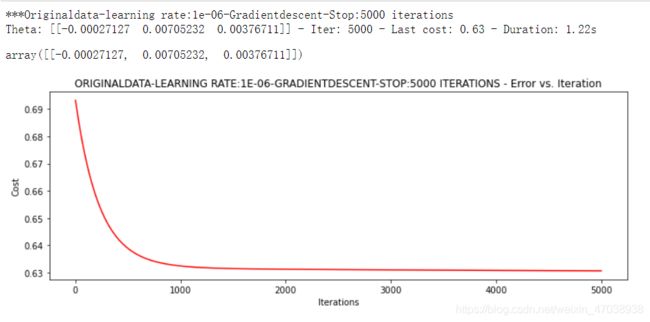

runExpe(orig_data,theta,n,STOP_ITER,thresh=5000,alpha=0.000001)

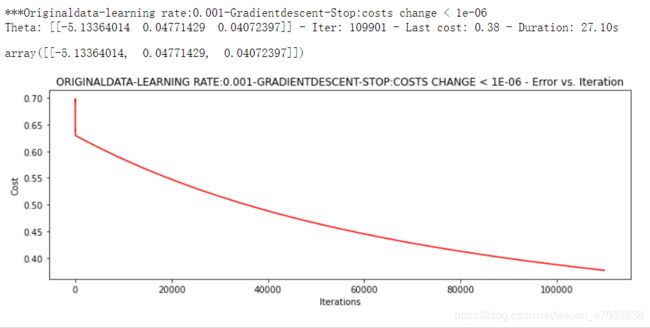

runExpe(orig_data,theta,n,STOP_COST,thresh=0.000001,alpha=0.001)

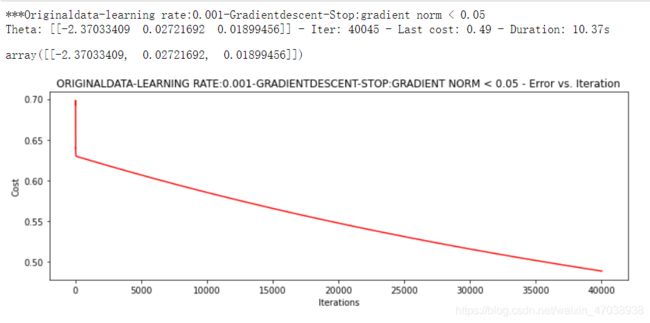

runExpe(orig_data,theta,n,STOP_GRAD,thresh=0.05,alpha=0.001)

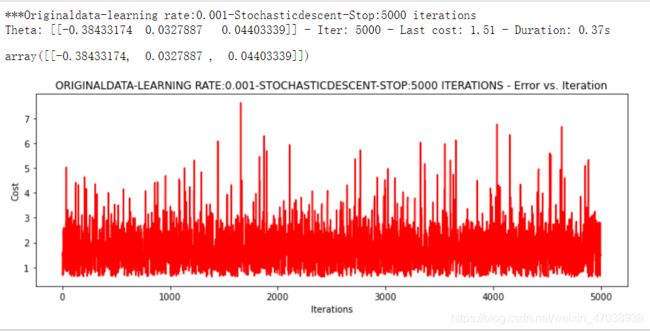

runExpe(orig_data,theta,1,STOP_ITER,thresh=5000,alpha=0.001)

runExpe(orig_data,theta,1,STOP_ITER,thresh=15000,alpha=0.000002)

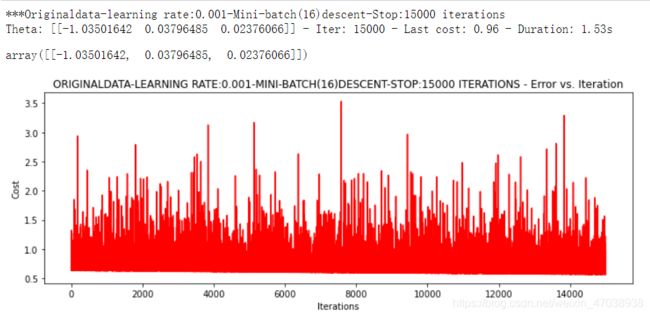

runExpe(orig_data,theta,16,STOP_ITER,thresh=15000,alpha=0.001)

from sklearn import preprocessing as pp

scaled_data=orig_data.copy()

scaled_data[:,1:3]=pp.scale(orig_data[:,1:3])
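With its default arguments, `preprocessing.scale` standardizes each column to zero mean and unit variance. The same transformation can be sketched in plain NumPy; the three rows below are made up and only illustrate the column layout (Ones, Exam 1, Exam 2, Admitted).

```python
import numpy as np

raw = np.array([[1.0, 34.6, 78.0, 0.0],
                [1.0, 60.2, 86.3, 1.0],
                [1.0, 79.0, 75.3, 1.0]])  # made-up rows: Ones, Exam 1, Exam 2, Admitted

scaled = raw.copy()
cols = raw[:, 1:3]
# Subtract the column mean and divide by the population standard deviation (ddof=0).
scaled[:, 1:3] = (cols - cols.mean(axis=0)) / cols.std(axis=0)

print(scaled[:, 1:3].mean(axis=0))
print(scaled[:, 1:3].std(axis=0))
```

Only the two score columns are rescaled; the ones column and the label column are left as they are, which is why the slice is `1:3`.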

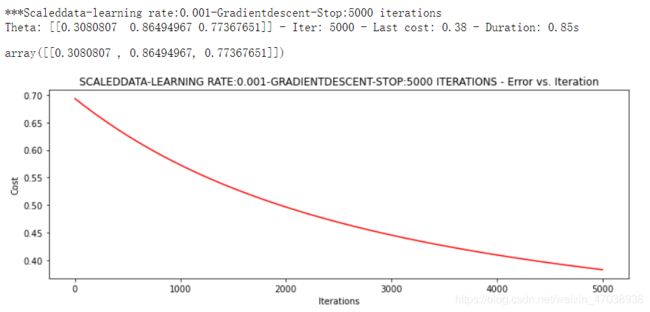

runExpe(scaled_data,theta,n,STOP_ITER,thresh=5000,alpha=0.001)

runExpe(scaled_data,theta,n,STOP_GRAD,thresh=0.02,alpha=0.001)

theta=runExpe(scaled_data,theta,1,STOP_GRAD,thresh=0.002/5,alpha=0.001)

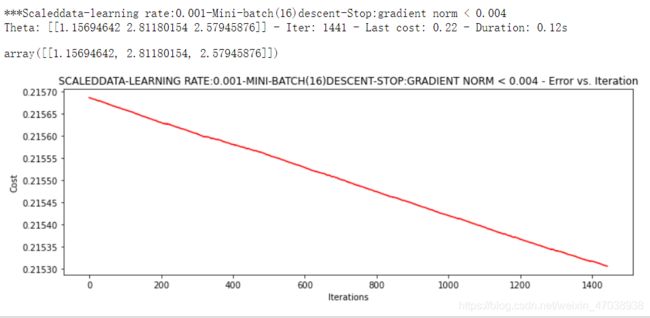

runExpe(scaled_data,theta,16,STOP_GRAD,thresh=0.002*2,alpha=0.001)

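#accuracy: compute the classification accuracy

#apply the 0.5 threshold

def predict(X,theta):
    return [1 if x >= 0.5 else 0 for x in model(X,theta)]

scaled_X=scaled_data[:,:3]

y=scaled_data[:,3]

predictions=predict(scaled_X,theta)

correct=[1 if ((a==1 and b==1) or (a==0 and b==0)) else 0 for (a,b) in zip(predictions,y)]

accuracy=100*sum(correct)//len(correct) #integer percentage of correct predictions; the modulo operator % used originally only gives this value because there are exactly 100 samples

print('accuracy={0}%'.format(accuracy))

#accuracy=89%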
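The thresholding and the accuracy count can also be done with array operations instead of list comprehensions. This is a sketch on four made-up probabilities and labels, not the notebook's data.

```python
import numpy as np

probs = np.array([[0.9], [0.4], [0.5], [0.2]])  # made-up model outputs, shape (n, 1)
y_true = np.array([1, 0, 0, 0])                 # made-up labels

predictions = (probs.ravel() >= 0.5).astype(int)  # apply the 0.5 threshold
accuracy = 100 * np.mean(predictions == y_true)   # share of matches, as a percentage

print('accuracy={0}%'.format(accuracy))
```

Using the mean of the matches works for any number of samples, so it does not depend on the data set having exactly 100 rows.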
