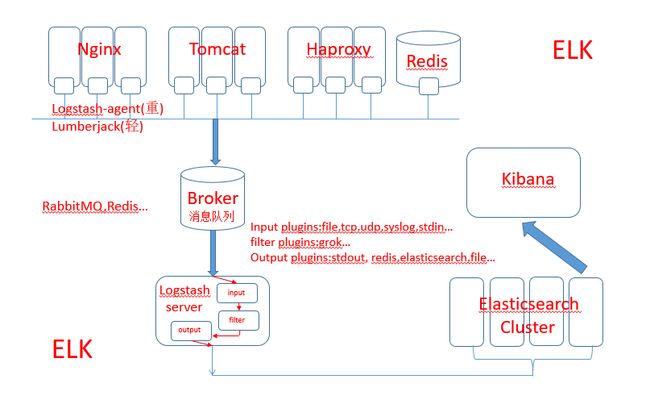

ELK architecture diagram:

logstash

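Official website: https://www.elastic.co/

Logstash deployment model: Agent---Server

Logstash pipeline: input---(filter, codec)---output

The Agent and the Server are the same Logstash program; they differ only in configuration.

Commonly used plugins:

input plugins: stdin, file, redis

filter plugins: grok

output plugins: stdout, redis, elasticsearch

Logstash is a heavyweight data collection tool and requires a JDK environment.

Deploying the JDK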

# yum install -y java-1.8.0-openjdk-headless java-1.8.0-openjdk-devel java-1.8.0-openjdk

# echo "export JAVA_HOME=/usr" > /etc/profile.d/java.sh

# source /etc/profile.d/java.sh

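Installing Logstash

Logstash release lines: 1.x, 2.x, 5.x

(For experiments in a VM, 2 CPU cores with 4 threads and 2 GB of memory are a reasonable setup; Logstash is heavyweight.)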

# yum install -y logstash-1.5.4-1.noarch.rpm

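# echo 'export PATH=/opt/logstash/bin:$PATH' > /etc/profile.d/logstash.sh //put the logstash command on PATH; single quotes keep $PATH from being expanded when echo runs

# /etc/sysconfig/logstash //startup parameters for the service

# /etc/logstash/conf.d/ //every file in this directory is loaded as configuration by the service

# logstash --help //the help text takes a while to appear; startup is slow

Create a test configuration file: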

# vim /etc/logstash/conf.d/simple.conf

# input { //how data comes in

# stdin {} //standard input (keyboard)

# }

# output { //how data goes out

# stdout { //standard output (screen)

# codec => rubydebug //output format

# }

# }

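Run:

# logstash -f /etc/logstash/conf.d/simple.conf --configtest //check that the configuration file is valid

# Configuration OK

# logstash -f /etc/logstash/conf.d/simple.conf //run

# Logstash startup completed //message indicating startup has finished

# hello,logstash //Logstash now waits for standard input (keyboard); each line typed is printed to standard output (screen) as an event like the following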

# {

# "message" => "hello,logstash",

# "@version" => "1",

# "@timestamp" => "2017-03-02T09:35:12.773Z",

# "host" => "elk"

# }
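
To make the input---filter---output flow concrete, here is a minimal Python sketch that wraps each input line in the same four fields shown in the rubydebug output. It is only an illustration of the idea, not how Logstash is implemented; `make_event` and `run_pipeline` are hypothetical names.

```python
import socket
from datetime import datetime, timezone


def make_event(line, host=None):
    """Wrap one input line in the fields seen in the rubydebug output."""
    now = datetime.now(timezone.utc)
    millis = f"{now.microsecond // 1000:03d}"
    return {
        "message": line.rstrip("\n"),
        "@version": "1",
        # ISO 8601 in UTC with millisecond precision, e.g. 2017-03-02T09:35:12.773Z
        "@timestamp": now.strftime("%Y-%m-%dT%H:%M:%S.") + millis + "Z",
        "host": host or socket.gethostname(),
    }


def run_pipeline(lines, filters=(), output=print):
    """input -> filters -> output, one event at a time."""
    for line in lines:
        event = make_event(line)
        for apply_filter in filters:
            event = apply_filter(event)
        output(event)
```

Calling `run_pipeline(sys.stdin)` (after `import sys`) would play the role of the stdin/stdout configuration above: one dictionary is printed per line typed.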

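That completes the basic Logstash workflow. Next, look at the individual plugins.

input plugins: file, udp

Using a file as the data input; see the official documentation: https://www.elastic.co/guide/en/logstash/1.5/plugins-inputs-file.html#_file_rotation

# vim /etc/logstash/conf.d/file-simple.conf

# input {

# file {

# path => ["/var/log/httpd/access_log"] //an array, so several log files can be listed

# type => "system" //a label for the events; it can be referenced in filter plugins

# start_position => "beginning" //read a file from the beginning the first time it is seen (after log rotation, the new file is read from its first line)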

# }

# }

# output {

# stdout {

# codec => rubydebug

# }

# }

# logstash -f /etc/logstash/conf.d/file-simple.conf --configtest

# logstash -f /etc/logstash/conf.d/file-simple.conf
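
The idea behind following a log file can be sketched in a few lines of Python: remember a byte offset, and on each poll return only the complete lines added since then. This is a simplified illustration under my own assumptions (the function name `read_new_lines` is hypothetical); the real file input also persists its position and handles rotation, which this sketch does not.

```python
def read_new_lines(path, offset=0):
    """Return (complete lines appended since offset, new offset)."""
    with open(path, "rb") as fh:
        fh.seek(offset)
        data = fh.read()
    # Consume only complete lines; a partial trailing line waits for the next poll.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode("utf-8", errors="replace").splitlines()
    return lines, offset + end
```

Starting with `offset=0` corresponds to `start_position => "beginning"`; starting at the current file size would only pick up lines written afterwards.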

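Feeding data into Logstash over UDP; official documentation: https://www.elastic.co/guide/en/logstash/1.5/plugins-inputs-udp.html

The data producer sends its data over the network, using UDP, to the UDP port that Logstash listens on.

The data producer here is the collectd performance monitoring tool, installed from the EPEL repository.

# On another host

# yum install collectd -y

# [root@elknode1 ~]# grep -Ev "^(#|$)" /etc/collectd.conf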

# Hostname "elk-node1"

# LoadPlugin syslog

# LoadPlugin cpu

# LoadPlugin df

# LoadPlugin disk

# LoadPlugin interface

# LoadPlugin load

# LoadPlugin memory

# LoadPlugin network

#

#

#

#

# Include "/etc/collectd.d"

# systemctl start collectd.service

Logstash configuration file:

# vim /etc/logstash/conf.d/udp-simple.conf

# input {

# udp {

# port => "25826"

# codec => collectd {}

# type => "collectd"

# }

# }

# output {

# stdout {

# codec => rubydebug

# }

# }

# logstash -f /etc/logstash/conf.d/udp-simple.conf --configtest

# logstash -f /etc/logstash/conf.d/udp-simple.conf

Once startup completes, collectd data starts to arrive:

# {

# "host" => "elk-node1",

# "@timestamp" => "2017-02-28T23:46:14.354Z",

# "plugin" => "disk",

# "plugin_instance" => "dm-1",

# "collectd_type" => "disk_ops",

# "read" => 322,

# "write" => 358,

# "@version" => "1",

# "type" => "collectd"

# }
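
The transport underneath this setup is plain UDP: a producer sends datagrams to a port, and a listener bound to that port receives them. The Python sketch below shows only that transport on the loopback interface; it does not decode collectd's binary protocol (that is the job of the collectd codec), and the helper names are hypothetical.

```python
import socket


def open_udp_listener(host="127.0.0.1", port=0):
    """Bind a UDP socket; port 0 lets the OS pick a free port."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock


def send_datagram(payload, host, port):
    """Send one datagram; UDP gives no delivery guarantee or acknowledgement."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))
```

In the configuration above, Logstash plays the listener role on port 25826 and collectd plays the sender role.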

filter plugins: grok (the core plugin for web logs)

grok parses text and turns it into structured data.

Pattern files:

/opt/logstash/vendor/bundle/jruby/1.9/gems/logstash-patterns-core-0.3.0/patterns contains many default pattern files:

aws bro firewalls grok-patterns haproxy java junos linux-syslog mcollective mongodb nagios postgresql rails redis ruby

# COMMONAPACHELOG %{IPORHOST:clientip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] "(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:httpversion})?|%{DATA:rawrequest})" %{NUMBER:response} (?:%{NUMBER:bytes}|-)

# The above is the pattern for Apache's common log format.

# Take IPORHOST as an example: the pattern file defines how to match an IP or a host

# IPORHOST (?:%{HOSTNAME}|%{IP})

# HOSTNAME \b(?:[0-9A-Za-z][0-9A-Za-z-]{0,62})(?:\.(?:[0-9A-Za-z][0-9A-Za-z-]{0,62}))*(\.?|\b)

# HOST %{HOSTNAME}

# IPV4 (?<

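# IP (?:%{IPV6}|%{IPV4}) // the IPV6 pattern is too long to reproduce here

# The pattern file already defines how to match IP, HOST, HOSTNAME, and many other kinds of data

# You can also define your own patterns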

# %{SYNTAX:SEMANTIC}

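SYNTAX: the name of a predefined pattern (use a built-in one where it exists; otherwise define your own); it determines how the data is recognized

SEMANTIC: a custom identifier for the matched text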
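
One way to picture `%{SYNTAX:SEMANTIC}` is as a named capture group: SYNTAX selects a regular expression from a dictionary and SEMANTIC becomes the group name. The Python sketch below illustrates that expansion under simplified assumptions; the entries in `PATTERNS` are rough stand-ins rather than the shipped definitions, and `expand` and `grok` are hypothetical helper names.

```python
import re

# A few illustrative patterns; simplified stand-ins, not the shipped definitions.
PATTERNS = {
    "WORD": r"\b\w+\b",
    "NUMBER": r"[+-]?\d+(?:\.\d+)?",
    "IPV4": r"(?:\d{1,3}\.){3}\d{1,3}",
    "NOTSPACE": r"\S+",
}

TOKEN = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def expand(expr):
    """Turn %{SYNTAX:SEMANTIC} into a named group and %{SYNTAX} into a plain group."""
    def repl(match):
        syntax, semantic = match.group(1), match.group(2)
        body = expand(PATTERNS[syntax])  # patterns may reference other patterns
        return f"(?P<{semantic}>{body})" if semantic else f"(?:{body})"
    return TOKEN.sub(repl, expr)


def grok(expr, text):
    """Return the named fields of the first match, or None if nothing matches."""
    match = re.search(expand(expr), text)
    return match.groupdict() if match else None
```

For instance, `grok("%{IPV4:clientip} %{WORD:verb}", "192.168.0.215 GET")` yields a dictionary with the keys `clientip` and `verb`.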

For example:

# 192.168.0.215 - - [02/Mar/2017:18:03:40 +0800] "GET /images/apache_pb.gif HTTP/1.1" 304 - "http://192.168.9.77/" "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:48.0) Gecko/20100101 Firefox/48.0"

# Using this log line as an example, the matching pattern is:

# COMMONAPACHELOG %{IPORHOST:clientip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] "(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:httpversion})?|%{DATA:rawrequest})" %{NUMBER:response} (?:%{NUMBER:bytes}|-)

# The structured result is:

# clientip : 192.168.0.215

# ident : -

# auth : -

# timestamp : 02/Mar/2017:18:03:40 +0800

# verb : GET

# request : /images/apache_pb.gif

# httpversion : 1.1

# ......
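
The same extraction can be reproduced with an ordinary regular expression, which may help when debugging a pattern. The following Python sketch approximates COMMONAPACHELOG with looser sub-patterns (for example `\S+` instead of IPORHOST), so it is an illustration rather than an exact translation; `COMMON_LOG` and `parse_common_log` are hypothetical names.

```python
import re

# Loose approximation of COMMONAPACHELOG using named groups.
COMMON_LOG = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?:(?P<verb>\w+) (?P<request>\S+)(?: HTTP/(?P<httpversion>[\d.]+))?'
    r'|(?P<rawrequest>.*?))" '
    r'(?P<response>\d+) (?:(?P<bytes>\d+)|-)'
)

LINE = (
    '192.168.0.215 - - [02/Mar/2017:18:03:40 +0800] '
    '"GET /images/apache_pb.gif HTTP/1.1" 304 - "http://192.168.9.77/" '
    '"Mozilla/5.0 (Windows NT 10.0; WOW64; rv:48.0) Gecko/20100101 Firefox/48.0"'
)


def parse_common_log(line):
    """Return the matched fields, or None if the line does not match."""
    match = COMMON_LOG.search(line)
    if match is None:
        return None
    # Drop groups that did not take part in the match (e.g. rawrequest, bytes for "-").
    return {k: v for k, v in match.groupdict().items() if v is not None}
```

Because the expression is not anchored at the end of the line, it matches the leading part of a combined-format line as well, which is why the sample line above (with referrer and user agent) still parses.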

Test matching an Apache log:

# vim /etc/logstash/conf.d/grok-apache.conf

# input {

# file {

# path => ["/var/log/httpd/access_log"]

# type => "apachelog"

# }

# }

# filter {

# grok {

# match => { "message" => "%{COMBINEDAPACHELOG}" }

# }

# }

# output {

# stdout {

# codec => rubydebug

# }

# }

# logstash -f /etc/logstash/conf.d/grok-apache.conf --configtest

# logstash -f /etc/logstash/conf.d/grok-apache.conf

Output:

# {

# "message" => "192.168.0.215 - - [02/Mar/2017:19:45:17 +0800] \"GET /noindex/css/fonts/Bold/OpenSans-Bold.ttf HTTP/1.1\" 404 238 \"http://192.168.9.77/noindex/css/open-sans.css\" \"Mozilla/5.0 (Windows NT 10.0; WOW64; rv:48.0) Gecko/20100101 Firefox/48.0\"",

# "@version" => "1",

# "@timestamp" => "2017-03-02T11:45:17.834Z",

# "host" => "elk",

# "path" => "/var/log/httpd/access_log",

# "type" => "apachelog",

# "clientip" => "192.168.0.215",

# "ident" => "-",

# "auth" => "-",

# "timestamp" => "02/Mar/2017:19:45:17 +0800",

# "verb" => "GET",

# "request" => "/noindex/css/fonts/Bold/OpenSans-Bold.ttf",

# "httpversion" => "1.1",

# "response" => "404",

# "bytes" => "238",

# "referrer" => "\"http://192.168.9.77/noindex/css/open-sans.css\"",

# "agent" => "\"Mozilla/5.0 (Windows NT 10.0; WOW64; rv:48.0) Gecko/20100101 Firefox/48.0\""

# }

Test matching an nginx log:

There is no default pattern for the nginx log, so one has to be defined.

Create a file named nginx in /opt/logstash/vendor/bundle/jruby/1.9/gems/logstash-patterns-core-0.3.0/patterns with the following content:

# NGUSERNAME [a-zA-Z\.\@\-\+_%]+

# NGUSER %{NGUSERNAME}

# NGINXACCESS %{IPORHOST:clientip} - %{NOTSPACE:remote_user} \[%{HTTPDATE:timestamp}\] "(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:httpversion})?|%{DATA:rawrequest})" %{NUMBER:response} (?:%{NUMBER:bytes}|-) %{QS:referrer} %{QS:agent} %{NOTSPACE:http_x_forwarded_for}

Logstash configuration file for the nginx log:

# vim /etc/logstash/conf.d/grok-nginx.conf

# input {

# file {

# path => ["/var/log/nginx/access.log"]

# type => "nginxlog"

# }

# }

# filter {

# grok {

# match => { "message" => "%{NGINXACCESS}" }

# }

# }

# output {

# stdout {

# codec => rubydebug

# }

# }

# logstash -f /etc/logstash/conf.d/grok-nginx.conf --configtest

# logstash -f /etc/logstash/conf.d/grok-nginx.conf

Check the structured output:

# {

# "message" => "192.168.0.215 - - [02/Mar/2017:20:06:02 +0800] \"GET /poweredby.png HTTP/1.1\" 200 2811 \"http://192.168.9.77/\" \"Mozilla/5.0 (Windows NT 10.0; WOW64; rv:48.0) Gecko/20100101 Firefox/48.0\" \"-\"",

# "@version" => "1",

# "@timestamp" => "2017-03-02T12:06:02.984Z",

# "host" => "elk",

# "path" => "/var/log/nginx/access.log",

# "type" => "nginxlog",

# "clientip" => "192.168.0.215",

# "remote_user" => "-",

# "timestamp" => "02/Mar/2017:20:06:02 +0800",

# "verb" => "GET",

# "request" => "/poweredby.png",

# "httpversion" => "1.1",

# "response" => "200",

# "bytes" => "2811",

# "referrer" => "\"http://192.168.9.77/\"",

# "agent" => "\"Mozilla/5.0 (Windows NT 10.0; WOW64; rv:48.0) Gecko/20100101 Firefox/48.0\"",

# "http_x_forwarded_for" => "\"-\""

# }
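
The referrer, agent, and http_x_forwarded_for values above still carry their surrounding double quotes because a quoted-string pattern such as QS matches the quotes as part of the text. The Python sketch below shows this with a simplified quoted-string expression (my own approximation, not the shipped QS definition) applied to the tail of the nginx line; `unquote` is a hypothetical helper for stripping the quotes afterwards.

```python
import re

# Simplified stand-in for a quoted-string pattern: the quotes are part of the match.
QS = r'"(?:[^"\\]|\\.)*"'

TAIL = re.compile(
    rf'(?P<referrer>{QS}) (?P<agent>{QS}) (?P<http_x_forwarded_for>\S+)$'
)

LINE_TAIL = (
    '"http://192.168.9.77/" '
    '"Mozilla/5.0 (Windows NT 10.0; WOW64; rv:48.0) Gecko/20100101 Firefox/48.0" '
    '"-"'
)


def unquote(value):
    """Strip one pair of surrounding double quotes, if present."""
    if len(value) >= 2 and value[0] == value[-1] == '"':
        return value[1:-1]
    return value
```

Here `TAIL.search(LINE_TAIL).group("referrer")` still includes the quotes, and `unquote` removes them.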

output plugins: redis, elasticsearch

(In the ELK diagram, the Logstash server most likely reads its data from Redis, that is, it uses the redis plugin as its input.)

Redis as the output target; see https://www.elastic.co/guide/en/logstash/1.5/plugins-outputs-redis.html

Deploy Redis:

# yum install -y redis

# vim /etc/redis.conf

# bind 0.0.0.0

# systemctl start redis.service

Verify that Redis works:

# [root@elk patterns]# redis-cli

# 127.0.0.1:6379> SET name neo

# OK

# 127.0.0.1:6379> get name

# "neo"

# 127.0.0.1:6379>

Structure the nginx log data and write it to Redis:

# vim /etc/logstash/conf.d/grok-nginx-redis.conf

# input {

# file {

# path => ["/var/log/nginx/access.log"]

# type => "nginxlog"

# }

# }

# filter {

# grok {

# match => { "message" => "%{NGINXACCESS}" }

# }

# }

# output {

# redis {

# port => 6379

# host => "192.168.9.77"

# data_type => "list" //two modes: list and channel

# key => "logstash-nginxlog" //The name of a Redis list or channel.

# }

# }

# logstash -f /etc/logstash/conf.d/grok-nginx-redis.conf --configtest

# logstash -f /etc/logstash/conf.d/grok-nginx-redis.conf

Check the data in Redis:

# [root@elk patterns]# redis-cli -h 192.168.9.77

# 192.168.9.77:6379> llen logstash-nginxlog

# (integer) 66

# 192.168.9.77:6379> lindex logstash-nginxlog 65

# "{"message":"192.168.0.215 - - [02/Mar/2017:21:38:07 +0800] \"GET /poweredby.png HTTP/1.1\" 200 2811 \"http://192.168.9.77/\" \"Mozilla/5.0 (Windows NT 10.0; WOW64; rv:48.0) Gecko/20100101 Firefox/48.0\" \"-\"","@version":"1","@timestamp":"2017-03-02T13:38:07.870Z","host":"elk","path":"/var/log/nginx/access.log","type":"nginxlog","clientip":"192.168.0.215","remote_user":"-","timestamp":"02/Mar/2017:21:38:07 +0800","verb":"GET","request":"/poweredby.png","httpversion":"1.1","response":"200","bytes":"2811","referrer":"\"http://192.168.9.77/\"","agent":"\"Mozilla/5.0 (Windows NT 10.0; WOW64; rv:48.0) Gecko/20100101 Firefox/48.0\"","http_x_forwarded_for":"\"-\""}"

# 192.168.9.77:6379>

# Redis has received the data sent by Logstash
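
With `data_type => "list"`, the Redis key behaves as a queue: to my understanding the producer appends JSON-encoded events at one end and a consumer removes them from the other. The Python sketch below models that behaviour with an in-memory stand-in; `ListQueue` is a hypothetical class that mimics a handful of list commands and is not a Redis client.

```python
import json
from collections import deque


class ListQueue:
    """In-memory stand-in for one Redis list (not a Redis client)."""

    def __init__(self):
        self._items = deque()

    def rpush(self, value):
        """Append at the tail and return the new length."""
        self._items.append(value)
        return len(self._items)

    def llen(self):
        return len(self._items)

    def lindex(self, index):
        """Read an element without removing it."""
        return self._items[index]

    def lpop(self):
        """Remove and return the head, or None when the list is empty."""
        return self._items.popleft() if self._items else None


def publish(queue, event):
    """Producer side: serialize the event and append it."""
    return queue.rpush(json.dumps(event))


def consume(queue):
    """Consumer side: take the oldest entry and decode it."""
    raw = queue.lpop()
    return json.loads(raw) if raw is not None else None
```

Events leave the queue in the order they arrived, which is what lets a downstream Logstash instance read them back in sequence.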

Elasticsearch as the Logstash output target:

# vim /etc/elasticsearch/elasticsearch.yml

# cluster.name: myes

# node.name: "elk"

# network.bind_host: 192.168.9.77

# transport.tcp.port: 9300

# http.port: 9200

Logstash configuration file for Elasticsearch output: the access log is the input, grok structures the data, and the result is written to Elasticsearch

# vim /etc/logstash/conf.d/grok-nginx-es.conf

# input {

# file {

# path => ["/var/log/nginx/access.log"]

# type => "nginxlog"

# }

# }

# filter {

# grok {

# match => { "message" => "%{NGINXACCESS}" }

# }

# }

# output {

# elasticsearch {

# cluster => "myes"

# index => "logstash-%{+YYYY.MM.dd}"

# }

# }

# logstash -f /etc/logstash/conf.d/grok-nginx-es.conf --configtest

# logstash -f /etc/logstash/conf.d/grok-nginx-es.conf

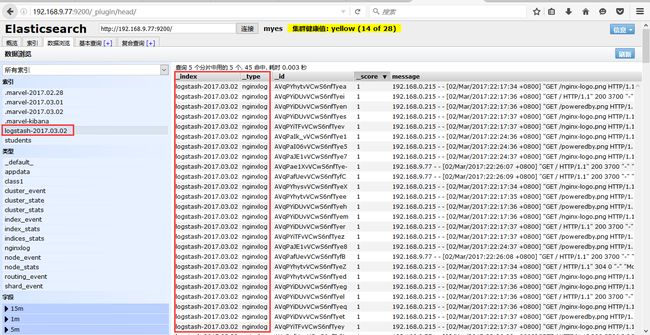

Check the data stored in Elasticsearch:

# [root@elk patterns]# curl -XGET 'http://192.168.9.77:9200/_cat/indices'

# yellow open .marvel-2017.03.02 1 1 15208 0 19.9mb 19.9mb

# yellow open .marvel-2017.03.01 1 1 2054 0 4.4mb 4.4mb

# yellow open logstash-2017.03.02 5 1 45 0 141.2kb 141.2kb

# The logstash-2017.03.02 index holds the log data

# curl -XGET 'http://192.168.9.77:9200/logstash-2017.03.02/_search?pretty' //search the index (returns the first 10 hits by default)
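
The index name logstash-2017.03.02 comes from the `index => "logstash-%{+YYYY.MM.dd}"` setting, which formats each event's @timestamp. Since @timestamp is in UTC, the date in the index name is the UTC date, which can differ from the local date in the log line. A minimal Python sketch of that mapping, with `index_for` as a hypothetical helper name:

```python
from datetime import datetime, timezone


def index_for(timestamp, prefix="logstash-"):
    """Daily index name for an ISO 8601 UTC @timestamp such as 2017-03-02T12:06:02.984Z."""
    parsed = datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%S.%fZ")
    parsed = parsed.replace(tzinfo=timezone.utc)
    return prefix + parsed.strftime("%Y.%m.%d")
```

For example, a request logged at 07:59 on 3 March in a +0800 time zone has the @timestamp 2017-03-02T23:59:00.000Z and therefore lands in the index for 2 March.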

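Install the head plugin on Elasticsearch to browse and search the data in a web UI:

Using Redis as a Logstash input source works much like using it as an output target.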