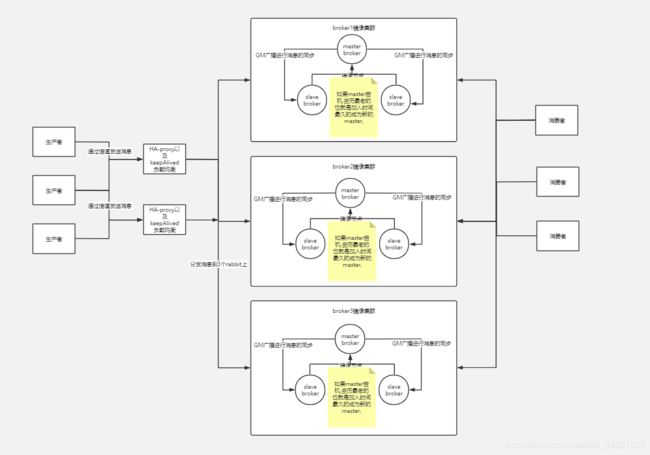

RabbitMQ + HAProxy + Keepalived (building a highly available RabbitMQ mirrored-queue cluster)

1. Environment preparation

Three CentOS 7 machines with the following IP addresses:

192.168.122.124

192.168.122.66

192.168.122.122

2. Check that SELinux and firewalld are disabled, and edit the hosts file (all 3 machines)

[root@rabbitmq01 ~]# getenforce

Disabled

[root@rabbitmq01 ~]# systemctl status firewalld.service

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

[root@rabbitmq01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.122.124 rabbitmq01

192.168.122.66 rabbitmq02

192.168.122.122 rabbitmq03
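The three entries can also be appended idempotently, which helps when the same step is repeated on each node. This is a minimal sketch as a shell function. The name `add_cluster_hosts` and its optional file argument are hypothetical. The argument lets you dry-run the function against a scratch copy before editing /etc/hosts.

```shell
# Hypothetical helper: append the cluster's hostname entries to a hosts file
# (default: /etc/hosts), skipping any hostname that is already listed.
add_cluster_hosts() {
    local hosts_file="${1:-/etc/hosts}" entry ip name
    for entry in "192.168.122.124 rabbitmq01" \
                 "192.168.122.66 rabbitmq02" \
                 "192.168.122.122 rabbitmq03"; do
        ip="${entry%% *}"       # text before the first space
        name="${entry##* }"     # text after the last space
        # -w matches whole words only, so rabbitmq01 does not match rabbitmq011.
        if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done
}
```

Run `add_cluster_hosts` as root on each of the three machines. Running it a second time leaves the file unchanged.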

3. Make sure the three machines can ping each other by hostname (all 3 machines)

[root@rabbitmq01 ~]# ping rabbitmq01

PING rabbitmq01 (192.168.122.124) 56(84) bytes of data.

64 bytes from rabbitmq01 (192.168.122.124): icmp_seq=1 ttl=64 time=0.078 ms

64 bytes from rabbitmq01 (192.168.122.124): icmp_seq=2 ttl=64 time=0.052 ms

64 bytes from rabbitmq01 (192.168.122.124): icmp_seq=3 ttl=64 time=0.052 ms

[root@rabbitmq01 ~]# ping rabbitmq02

PING rabbitmq02 (192.168.122.66) 56(84) bytes of data.

64 bytes from rabbitmq02 (192.168.122.66): icmp_seq=1 ttl=64 time=0.595 ms

64 bytes from rabbitmq02 (192.168.122.66): icmp_seq=2 ttl=64 time=0.536 ms

64 bytes from rabbitmq02 (192.168.122.66): icmp_seq=3 ttl=64 time=0.453 ms

64 bytes from rabbitmq02 (192.168.122.66): icmp_seq=4 ttl=64 time=0.478 ms

[root@rabbitmq01 ~]# ping rabbitmq03

PING rabbitmq03 (192.168.122.122) 56(84) bytes of data.

64 bytes from rabbitmq03 (192.168.122.122): icmp_seq=1 ttl=64 time=0.800 ms

64 bytes from rabbitmq03 (192.168.122.122): icmp_seq=2 ttl=64 time=0.470 ms

64 bytes from rabbitmq03 (192.168.122.122): icmp_seq=3 ttl=64 time=0.712 ms

64 bytes from rabbitmq03 (192.168.122.122): icmp_seq=4 ttl=64 time=0.533 ms
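The same check can be scripted so it is easy to repeat on every machine. This is a minimal sketch; `resolve_peer` and `check_peers` are hypothetical names. `getent hosts` resolves a name the way the system resolver does, so it honours /etc/hosts. `ping -c 1 -W 1` sends one echo request and waits at most one second for the reply (iputils ping, as on CentOS 7).

```shell
# Hypothetical helper: print the first address HOST resolves to through the
# system resolver (so /etc/hosts entries are honoured); fail if it does not resolve.
resolve_peer() {
    local line
    line=$(getent hosts "$1") || return 1
    printf '%s\n' "${line%%[[:space:]]*}"   # keep only the first address
}

# Hypothetical helper: for each HOST, check name resolution and send a single
# ping with a 1-second timeout; returns non-zero if any host fails.
check_peers() {
    local host addr failed=0
    for host in "$@"; do
        if ! addr=$(resolve_peer "$host"); then
            echo "FAIL $host: name does not resolve"
            failed=1
            continue
        fi
        if ping -c 1 -W 1 "$host" >/dev/null 2>&1; then
            echo "OK   $host ($addr)"
        else
            echo "FAIL $host ($addr): no reply to ping"
            failed=1
        fi
    done
    return "$failed"
}
```

Usage on each machine: `check_peers rabbitmq01 rabbitmq02 rabbitmq03`.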

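4. Install Erlang, which RabbitMQ depends on (all 3 machines; on CentOS 7 these packages typically come from the EPEL repository)

[root@rabbitmq01 ~]# yum install -y erlang

5. Install socat (all 3 machines)

[root@rabbitmq01 ~]# yum install -y socat

6. Install RabbitMQ (all 3 machines)

[root@rabbitmq01 ~]# yum -y install rabbitmq-server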

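7. Build a standard (normal-mode) RabbitMQ cluster

After RabbitMQ is installed on the three machines, each of them has a hidden file named .erlang.cookie, located either in $HOME or in /var/lib/rabbitmq. What does this file store, and what is it for?

A RabbitMQ cluster is built on top of an Erlang cluster. Erlang nodes authenticate each other with this cookie, and two nodes can only communicate if their cookies match. The first step in building the cluster is therefore to make the cookie identical on every node.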

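1. Make the cookie value in .erlang.cookie identical on all nodes

- On the other two cluster members, rabbitmq02 and rabbitmq03, .erlang.cookie must contain the same cookie value as on rabbitmq01. The file must also be accessible only by its owner (mode 600 or 400).

# Copy the content of /var/lib/rabbitmq/.erlang.cookie on rabbitmq01 into the same file on rabbitmq02 and rabbitmq03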

[root@rabbitmq02 ~]# chmod 600 /var/lib/rabbitmq/.erlang.cookie

[root@rabbitmq03 ~]# chmod 600 /var/lib/rabbitmq/.erlang.cookie
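Before starting the service, you can check the cookie on each node. This is a minimal sketch assuming GNU coreutils (`stat -c`, `md5sum`); the helper names are hypothetical. Printing a fingerprint instead of the cookie itself lets you compare the three values without echoing the secret.

```shell
# Hypothetical helper: succeed only if the cookie file is accessible by its
# owner alone (mode 400 or 600), as Erlang requires.
cookie_perms_ok() {
    local mode
    mode=$(stat -c '%a' "$1") || return 1
    [ "$mode" = "400" ] || [ "$mode" = "600" ]
}

# Hypothetical helper: print an MD5 fingerprint of the cookie so the value
# can be compared across nodes without displaying the cookie itself.
cookie_fingerprint() {
    md5sum < "$1" | awk '{ print $1 }'
}
```

For example, run `cookie_perms_ok /var/lib/rabbitmq/.erlang.cookie && cookie_fingerprint /var/lib/rabbitmq/.erlang.cookie` on each node; every node should print the same hash. The file should also remain owned by the rabbitmq user (`chown rabbitmq:rabbitmq /var/lib/rabbitmq/.erlang.cookie`), because the service runs as that user.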

2. Start the service (all 3 machines)

[root@rabbitmq01 ~]# systemctl enable rabbitmq-server --now

Created symlink from /etc/systemd/system/multi-user.target.wants/rabbitmq-server.service to /usr/lib/systemd/system/rabbitmq-server.service

3. Check the cluster status

- Check the node status

[root@rabbitmq01 ~]# rabbitmqctl status

Status of node rabbit@rabbitmq01 ...

[{pid,1390},

{running_applications,

[{rabbitmq_management,"RabbitMQ Management Console","3.3.5"},

{rabbitmq_web_dispatch,"RabbitMQ Web Dispatcher","3.3.5"},

{webmachine,"webmachine","1.10.3-rmq3.3.5-gite9359c7"},

{mochiweb,"MochiMedia Web Server","2.7.0-rmq3.3.5-git680dba8"},

{rabbitmq_management_agent,"RabbitMQ Management Agent","3.3.5"},

{rabbit,"RabbitMQ","3.3.5"},

{os_mon,"CPO CXC 138 46","2.2.14"},

{inets,"INETS CXC 138 49","5.9.8"},

{amqp_client,"RabbitMQ AMQP Client","3.3.5"},

{xmerl,"XML parser","1.3.6"},

{mnesia,"MNESIA CXC 138 12","4.11"},

{sasl,"SASL CXC 138 11","2.3.4"},

{stdlib,"ERTS CXC 138 10","1.19.4"},

{kernel,"ERTS CXC 138 10","2.16.4"}]},

{os,{unix,linux}},

{erlang_version,"Erlang R16B03-1 (erts-5.10.4) [source] [64-bit] [async-threads:30] [hipe] [kernel-poll:true]\n"},

{memory,

[{total,40042936},

{connection_procs,5440},

{queue_procs,36744},

{plugins,275040},

{other_proc,14015480},

{mnesia,87352},

{mgmt_db,58576},

{msg_index,23896},

{other_ets,1098048},

{binary,35496},

{code,19941789},

{atom,703377},

{other_system,3761698}]},

{alarms,[]},

{listeners,[{clustering,25672,"::"},{amqp,5672,"::"}]},

{vm_memory_high_watermark,0.4},

{vm_memory_limit,416260096},

{disk_free_limit,50000000},

{disk_free,2157924352},

{file_descriptors,[{total_limit,924},{total_used,3},{sockets_limit,829},{sockets_used,1}]},

{processes,[{limit,1048576},{used,180}]},

{run_queue,0},

{uptime,61}]

...done.

- Check the cluster status

[root@rabbitmq01 ~]# rabbitmqctl cluster_status

Cluster status of node rabbit@rabbitmq01 ...

[{nodes,[{disc,[rabbit@rabbitmq01]},{ram,[]}]},

{running_nodes,[rabbit@rabbitmq01]},

{cluster_name,<<"rabbit@rabbitmq01">>},

{partitions,[]}]

...done.

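4. Add nodes to the RabbitMQ cluster

- Run the following on rabbitmq01 and on rabbitmq03 to join each of them, as a RAM node, to the cluster of rabbitmq02. Then restart the RabbitMQ service on rabbitmq02.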

[root@rabbitmq01 ~]# rabbitmqctl stop_app

[root@rabbitmq01 ~]# rabbitmqctl join_cluster --ram rabbit@rabbitmq02

[root@rabbitmq01 ~]# rabbitmqctl start_app

[root@rabbitmq01 ~]# rabbitmq-plugins enable rabbitmq_management

[root@rabbitmq01 ~]# systemctl restart rabbitmq-server.service

[root@rabbitmq03 ~]# rabbitmqctl stop_app

[root@rabbitmq03 ~]# rabbitmqctl join_cluster --ram rabbit@rabbitmq02

[root@rabbitmq03 ~]# rabbitmqctl start_app

[root@rabbitmq03 ~]# rabbitmq-plugins enable rabbitmq_management

[root@rabbitmq03 ~]# systemctl restart rabbitmq-server.service
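The three rabbitmqctl steps above can be wrapped in a function, so a failed join does not leave the node's application stopped. This is a minimal sketch; `join_as_ram_node` is a hypothetical name, and the seed node is passed as an argument. `stop_app` stops only the RabbitMQ application while the Erlang node keeps running, which is the state `join_cluster` expects.

```shell
# Hypothetical wrapper around the stop_app / join_cluster --ram / start_app
# sequence shown above. SEED is a node already in the cluster, e.g. rabbit@rabbitmq02.
join_as_ram_node() {
    local seed="$1"
    rabbitmqctl stop_app || return 1
    if ! rabbitmqctl join_cluster --ram "$seed"; then
        # Bring the application back up even though the join failed.
        rabbitmqctl start_app
        return 1
    fi
    rabbitmqctl start_app
}
```

Usage, on rabbitmq01 and on rabbitmq03: `join_as_ram_node rabbit@rabbitmq02`.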

- Restart RabbitMQ on rabbitmq02

[root@rabbitmq02 ~]# systemctl restart rabbitmq-server.service
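Once rabbitmq02 is back, `rabbitmqctl cluster_status` on any node should list all three nodes under running_nodes. This minimal sketch counts them. It assumes the Erlang-term output format shown earlier (RabbitMQ 3.3.x). Newer releases print a different layout, so the function would need changes there. The function name is hypothetical.

```shell
# Hypothetical helper: count the nodes listed under running_nodes in the
# Erlang-term output of `rabbitmqctl cluster_status` (3.3.x format).
count_running_nodes() {
    rabbitmqctl cluster_status 2>/dev/null \
        | tr -d ' \n' \
        | grep -o '{running_nodes,\[[^]]*]}' \
        | grep -o 'rabbit@[A-Za-z0-9_.-]*' \
        | wc -l
}
```

For example: `[ "$(count_running_nodes)" -eq 3 ] && echo "all three nodes are running"`.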

5. Open the web management page (the management plugin listens on port 15672) and check the nodes
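Since RabbitMQ 3.3.0, the built-in guest account may only log in from localhost. Opening the management page from another machine therefore generally needs a separate administrator account. This is a minimal sketch using standard rabbitmqctl commands. The helper name, user name and password are placeholders. Users are stored in the cluster's shared database, so this only has to be run on one node.

```shell
# Hypothetical helper: create a management-UI administrator with full
# permissions on the default virtual host "/".
create_admin() {
    local user="$1" pass="$2"
    rabbitmqctl add_user "$user" "$pass" || return 1
    # The "administrator" tag grants access to every page of the management UI.
    rabbitmqctl set_user_tags "$user" administrator || return 1
    # Configure, write and read permissions on all resources in vhost "/".
    rabbitmqctl set_permissions -p / "$user" '.*' '.*' '.*'
}
```

For example, run `create_admin admin 'choose-a-strong-password'`, then log in at http://192.168.122.124:15672.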

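8. Build the highly available mirrored-queue cluster

- Reference documentation for this section: http://www.rabbitmq.com/ha.html

- Mirrored mode relies on the policy mechanism. What is a policy for?

- A policy is a rule that tells RabbitMQ which queues (matched by name) should be replicated to other nodes, and how they are mirrored and synchronized.

[root@rabbitmq01 ~]# rabbitmqctl set_policy ha-all "^" '{"ha-mode":"all"}'

- ha-all: the policy name.

- "^": the pattern, a regular expression matched against queue (and exchange) names. "^" on its own matches every name, while "^qfedu" matches names that start with qfedu. The ha-* keys only take effect on queues.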

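- ha-mode: the mirroring mode. There are three modes:

- all: the queue is mirrored to every node in the cluster.

- exactly: the queue is mirrored to a fixed number of nodes. This mode requires ha-params, an integer such as 3, meaning three nodes of the cluster chosen by RabbitMQ (the count includes the queue's master).

- nodes: the queue is mirrored to the listed nodes. This mode requires ha-params, a list of node names such as ["rabbit@F","rabbit@G"], which places the queue on nodes F and G.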
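Besides ha-all, a policy can limit mirroring to a subset of nodes. This is a minimal sketch for the exactly mode. The helper name, the policy name ha-two and the pattern are illustrative. The extra key `"ha-sync-mode":"automatic"` is documented alongside ha-mode in the guide linked above. It makes a new mirror copy the queue's existing messages automatically; by default a new mirror only receives new messages.

```shell
# Hypothetical helper: mirror queues whose names match PATTERN onto exactly
# COUNT nodes (the count includes the queue master).
set_exactly_policy() {
    local name="$1" pattern="$2" count="$3"
    # ha-params must be an integer in exactly mode.
    case "$count" in
        ''|*[!0-9]*) echo "count must be an integer" >&2; return 2 ;;
    esac
    rabbitmqctl set_policy "$name" "$pattern" \
        "{\"ha-mode\":\"exactly\",\"ha-params\":$count,\"ha-sync-mode\":\"automatic\"}"
}

# Example: keep queues whose names start with "qfedu" on 2 of the 3 nodes.
#   set_exactly_policy ha-two "^qfedu" 2
# The nodes mode takes a list of node names instead:
#   rabbitmqctl set_policy ha-nodes "^qfedu" \
#     '{"ha-mode":"nodes","ha-params":["rabbit@rabbitmq01","rabbit@rabbitmq02"]}'
```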

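9. Deploy HAProxy so that connections to port 5672 are distributed round-robin across the three nodes

1. Environment (SELinux and firewalld disabled, as on the RabbitMQ nodes)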

- haproxy1: 192.168.122.65

- haproxy2: 192.168.122.180

2. Install HAProxy (both machines)

[root@haproxy1 ~]# yum -y install haproxy

[root@haproxy2 ~]# yum -y install haproxy

3. Create the configuration file /etc/haproxy/haproxy.cfg

- haproxy1

[root@haproxy1 ~]# vim /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local1

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

stats socket /var/lib/haproxy/stats

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

listen stats

bind 0.0.0.0:8888

mode http

stats enable

stats hide-version

stats uri /haproxystats

stats realm Haproxy\ stats

stats auth admin:admin

stats admin if TRUE

frontend http-in

bind 0.0.0.0:5672

mode tcp

log global

option tcplog

default_backend rabbitmq-server

backend rabbitmq-server

mode tcp

balance roundrobin

server rabbitmq1 192.168.122.124:5672 maxconn 2000 weight 1 check inter 5s rise 2 fall 2

server rabbitmq2 192.168.122.66:5672 maxconn 2000 weight 1 check inter 5s rise 2 fall 2

server rabbitmq3 192.168.122.122:5672 maxconn 2000 weight 1 check inter 5s rise 2 fall 2

- haproxy2

[root@haproxy2 ~]# vim /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local1

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

stats socket /var/lib/haproxy/stats

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

listen stats

bind 0.0.0.0:8888

mode http

stats enable

stats hide-version

stats uri /haproxystats

stats realm Haproxy\ stats

stats auth admin:admin

stats admin if TRUE

frontend http-in

bind 0.0.0.0:5672

mode tcp

log global

option tcplog

default_backend rabbitmq-server

backend rabbitmq-server

mode tcp

balance roundrobin

server rabbitmq1 192.168.122.124:5672 maxconn 2000 weight 1 check inter 5s rise 2 fall 2

server rabbitmq2 192.168.122.66:5672 maxconn 2000 weight 1 check inter 5s rise 2 fall 2

server rabbitmq3 192.168.122.122:5672 maxconn 2000 weight 1 check inter 5s rise 2 fall 2
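Before (re)starting HAProxy, you can validate the file with `haproxy -c -f`, which parses the configuration and exits without starting the proxy. This is a minimal sketch; the function name is hypothetical. It restarts the service only when validation passes, which helps avoid restarting into a broken file.

```shell
# Hypothetical helper: validate an HAProxy configuration file and restart the
# service only if validation passes.
apply_haproxy_cfg() {
    local cfg="${1:-/etc/haproxy/haproxy.cfg}"
    # -c: check the configuration and exit; -f: configuration file to read.
    if ! haproxy -c -f "$cfg"; then
        echo "$cfg failed validation; haproxy.service left unchanged" >&2
        return 1
    fi
    systemctl restart haproxy.service
}
```

Run `apply_haproxy_cfg` on both haproxy1 and haproxy2 after editing the file.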

4. Configure rsyslog for HAProxy logging

- haproxy1

[root@haproxy1 ~]# vim /etc/rsyslog.conf

# Provides UDP syslog reception

$ModLoad imudp

$UDPServerRun 514

# Provides TCP syslog reception

$ModLoad imtcp

$InputTCPServerRun 514

local1.* /var/log/haproxy/rabbitmq.log

[root@haproxy1 ~]# mkdir /var/log/haproxy

[root@haproxy1 ~]# systemctl restart rsyslog.service

[root@haproxy1 ~]# systemctl start haproxy

[root@haproxy1 ~]# cat /var/log/haproxy/rabbitmq.log

Sep 19 20:03:18 localhost haproxy[1654]: Proxy stats started.

Sep 19 20:03:18 localhost haproxy[1654]: Proxy http-in started.

Sep 19 20:03:18 localhost haproxy[1654]: Proxy http-in started.

Sep 19 20:03:18 localhost haproxy[1654]: Proxy html-server started.

- haproxy2

[root@haproxy2 ~]# vim /etc/rsyslog.conf

# Provides UDP syslog reception

$ModLoad imudp

$UDPServerRun 514

# Provides TCP syslog reception

$ModLoad imtcp

$InputTCPServerRun 514

local1.* /var/log/haproxy/rabbitmq.log

[root@haproxy2 ~]# mkdir /var/log/haproxy

[root@haproxy2 ~]# systemctl restart rsyslog.service

[root@haproxy2 ~]# systemctl start haproxy

[root@haproxy2 ~]# cat /var/log/haproxy/rabbitmq.log

Sep 19 20:03:18 localhost haproxy[1654]: Proxy stats started.

Sep 19 20:03:18 localhost haproxy[1654]: Proxy http-in started.

Sep 19 20:03:18 localhost haproxy[1654]: Proxy http-in started.

Sep 19 20:03:18 localhost haproxy[1654]: Proxy html-server started.
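With HAProxy running on both machines, opening a plain TCP connection to port 5672 on each of them shows whether the frontend is listening. This is a minimal sketch; `port_open` is a hypothetical name. It relies on bash's /dev/tcp redirection and the coreutils `timeout` command.

```shell
# Hypothetical helper: succeed if a TCP connection to HOST:PORT can be opened
# within 3 seconds (uses bash's /dev/tcp pseudo-device, so bash must be installed).
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}
```

For example: `port_open 192.168.122.65 5672 && echo "haproxy1 is listening on 5672"`. Once the Keepalived VIP below is up, you can repeat the same check against 192.168.122.160.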

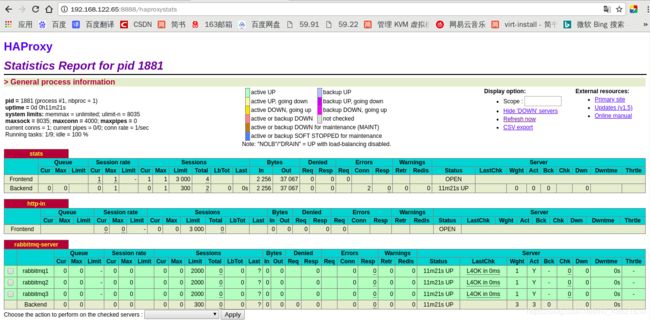

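5. Verify the HAProxy stats page

- Port 8888, username and password admin:admin

- http://192.168.122.65:8888/haproxystats

- http://192.168.122.180:8888/haproxystats

- The page should list the rabbitmq-server backend with its three servers, rabbitmq1 to rabbitmq3.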

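10. Deploy Keepalived for master/backup hot standby with failover within seconds

1. Environment

- Keepalived can run on two dedicated VMs or on any two existing nodes. Here it runs on the two HAProxy machines:

- keepalived1: 192.168.122.65

- keepalived2: 192.168.122.180

- VIP address: 192.168.122.160

2. Install Keepalived (both machines)

[root@haproxy1 ~]# yum -y install keepalived

[root@haproxy2 ~]# yum -y install keepalived

3. Edit the configuration files

1. keepalived1 configuration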

[root@haproxy1 ~]# cp /etc/keepalived/keepalived.conf{,.bak}

[root@haproxy1 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id director1

}

vrrp_script check_haproxy {

script "/etc/keepalived/haproxy_chk.sh"

interval 5

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 80

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.122.160/24

}

track_script {

check_haproxy

}

}

2. keepalived2 configuration

[root@haproxy2 ~]# cp /etc/keepalived/keepalived.conf{,.bak}

[root@haproxy2 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id director2

}

vrrp_script check_haproxy {

script "/etc/keepalived/haproxy_chk.sh"

interval 5

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 80

priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.122.160/24

}

track_script {

check_haproxy

}

}

3. Health-check script haproxy_chk.sh (both machines)

[root@haproxy1 ~]# vim /etc/keepalived/haproxy_chk.sh

#!/usr/bin/env bash

# test haproxy server running

systemctl status haproxy.service &>/dev/null

if [ $? -ne 0 ];then

systemctl start haproxy.service &>/dev/null

sleep 5

systemctl status haproxy.service &>/dev/null

if [ $? -ne 0 ];then

systemctl stop keepalived

fi

fi

[root@haproxy1 ~]# chmod +x /etc/keepalived/haproxy_chk.sh

[root@haproxy2 ~]# vim /etc/keepalived/haproxy_chk.sh

#!/usr/bin/env bash

# test haproxy server running

systemctl status haproxy.service &>/dev/null

if [ $? -ne 0 ];then

systemctl start haproxy.service &>/dev/null

sleep 5

systemctl status haproxy.service &>/dev/null

if [ $? -ne 0 ];then

systemctl stop keepalived

fi

fi

[root@haproxy2 ~]# chmod +x /etc/keepalived/haproxy_chk.sh
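You can also write the same logic with `systemctl is-active --quiet`, which prints nothing and exits 0 only when the unit is active, so no output redirection is needed. This is a minimal sketch of an alternative haproxy_chk.sh body. It is wrapped in a hypothetical function so it can be exercised without touching the real services. Used as the script, the file would end by calling `haproxy_chk`.

```shell
# Alternative sketch of haproxy_chk.sh: if HAProxy is down, try to start it;
# if it is still down after 5 seconds, stop Keepalived so the VIP fails over
# to the BACKUP node.
haproxy_chk() {
    if ! systemctl is-active --quiet haproxy.service; then
        systemctl start haproxy.service
        sleep 5
        if ! systemctl is-active --quiet haproxy.service; then
            systemctl stop keepalived.service
        fi
    fi
}
```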

4. Start Keepalived and verify that the VIP is on haproxy1 (the MASTER)

[root@haproxy2 ~]# systemctl start keepalived

[root@haproxy1 ~]# systemctl start keepalived

[root@haproxy1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:ff:3b:5d brd ff:ff:ff:ff:ff:ff

inet 192.168.122.65/24 brd 192.168.122.255 scope global dynamic eth0

valid_lft 2426sec preferred_lft 2426sec

inet 192.168.122.160/24 scope global secondary eth0

valid_lft forever preferred_lft forever

inet6 fe80::227e:ba3f:f915:f16c/64 scope link

valid_lft forever preferred_lft forever

inet6 fe80::ec3b:957f:e7e1:f7d7/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever
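To test failover, stop Keepalived on haproxy1 (`systemctl stop keepalived`) and check that 192.168.122.160 moves to eth0 on haproxy2. Starting it again should move the VIP back, since haproxy1 has the higher priority (100 vs 50). This is a minimal sketch of the check; `has_vip` is a hypothetical helper built on `ip -4 addr show dev IFACE`.

```shell
# Hypothetical helper: succeed if VIP is currently assigned to interface IFACE.
has_vip() {
    local iface="$1" vip="$2"
    # -F: fixed-string match, so the dots in the address are literal; the
    # trailing "/" stops 192.168.122.16 from matching 192.168.122.160.
    ip -4 addr show dev "$iface" | grep -qF "inet $vip/"
}

# Example, on haproxy2 after stopping keepalived on haproxy1:
#   has_vip eth0 192.168.122.160 && echo "VIP is on this node"
```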