Financial Risk Control Beginner Competition - Task 2 - Data Analysis


Competition: Data Mining for Beginners - Getting Started with Financial Risk Control: Loan Default Prediction


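Purpose:

1. The main value of EDA is getting familiar with the basic state of the whole dataset (missing values, outliers) and verifying that the dataset is ready for the machine learning or deep learning modeling that follows.

2. Understand the relationships among variables, and between the variables and the target.

3. Prepare for feature engineering.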

Project repository: https://github.com/datawhalechina/team-learning-data-mining/tree/master/FinancialRiskControl

Competition page: https://tianchi.aliyun.com/competition/entrance/531830/introduction

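2.1 Learning Objectives

- Learn how to analyze the overall profile of a dataset, including its basic state (missing values, outliers)

- Understand the relationships among variables, and between the variables and the target

- Complete the corresponding study check-in task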

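2.2 Contents

- Overall understanding of the data:
  - Read the datasets and check their size and original feature dimensions;
  - Use info() to get familiar with the data types;
  - Take a rough look at the basic statistics of each feature;
- Missing values and unique values:
  - Check missing values
  - Check features with a single unique value
- Digging into the data: checking data types
  - Categorical data
  - Numerical data
    - Discrete numerical data
    - Continuous numerical data
- Correlations in the data
  - Relationships between features
  - Relationships between features and the target variable
- Generate a data report with pandas_profiling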

2.3 Code Examples

2.3.1 Import the libraries needed for data analysis and visualization

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns
import datetime
import warnings
warnings.filterwarnings('ignore')

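If Anaconda is installed on your computer, these libraries come bundled with it and do not need to be installed again.

Otherwise, install them with pip install.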

2.3.2 Reading the files

Read the training and test files in Python:

data_train = pd.read_csv('./train.csv')

data_test_a = pd.read_csv('./testA.csv')

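2.3.2.1 Further notes on reading files

- If pandas raises an error when loading a file by relative path, use os.getcwd() to check the current working directory.

- Difference between TSV and CSV:

  - As the names suggest, TSV uses the tab character ('\t') as the field separator, while CSV uses the comma (',').

  - Python's support for TSV: Python's csv module would more accurately be called a "dsv" module, because it actually supports the general family of delimiter-separated values (DSV) files. Its delimiter parameter defaults to a comma, so files are treated as CSV by default; with delimiter='\t', they are processed as TSV. In pandas, the equivalent is passing sep='\t' to read_csv.

- Reading only part of a file (useful when the file is very large):

  - Use the nrows parameter to read only the first N rows; nrows must be a non-negative integer.

  - Read the file in chunks.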
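A minimal sketch of the TSV point above. It uses an in-memory string instead of a real file, and the sample rows are made up for illustration: pandas `read_csv` takes the separator through `sep`, and the standard-library `csv` module takes it through `delimiter`.

```python
import csv
import io

import pandas as pd

# Hypothetical tab-separated content standing in for a .tsv file
tsv_text = "id\tloanAmnt\tgrade\n1\t35000\tE\n2\t18000\tD\n"

# pandas: pass the tab character as the separator
df = pd.read_csv(io.StringIO(tsv_text), sep='\t')
print(df)

# Standard-library csv module: the same content read with delimiter='\t'
rows = list(csv.reader(io.StringIO(tsv_text), delimiter='\t'))
print(rows)
```

Note that the csv module returns every field as a string, while pandas infers column types.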

Read the first five rows of the training file:

data_train_sample = pd.read_csv('./train.csv', nrows=5)

# Use the chunksize parameter to control how many rows each iteration yields
chunker = pd.read_csv('./train.csv', chunksize=5)
for item in chunker:  # print the type and length of each chunk
    print(type(item))
    print(len(item))
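Chunked reading pays off when each chunk is reduced to a small summary before the next one is read. A minimal sketch, assuming a CSV with an `isDefault` column (a tiny in-memory file stands in for `train.csv` here), that computes the overall default rate without holding the whole file in memory:

```python
import io

import pandas as pd

# Toy stand-in for train.csv: rows 0, 4 and 8 are defaults
csv_text = "id,isDefault\n" + "\n".join(f"{i},{int(i % 4 == 0)}" for i in range(10))

total_rows = 0
total_defaults = 0
# Each iteration yields a DataFrame with at most 3 rows
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=3):
    total_rows += len(chunk)
    total_defaults += int(chunk['isDefault'].sum())

default_rate = total_defaults / total_rows
print(total_rows, total_defaults, default_rate)
```

Only the running totals survive between iterations, so memory use depends on the chunk size rather than on the file size.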

2.3.3 Overall understanding

Check the number of samples and the original feature dimensions of each dataset:

print(data_test_a.shape)
# Output: (20000, 48)
print(data_train.shape)
# Output: (80000, 47)

print(data_train.columns)

Use info() to get familiar with the data types:

print(data_train.info())

Take a rough look at the basic statistics of each feature:

print(data_train.describe())

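# Show the first 3 and last 3 rows of the test set
# (DataFrame.append was removed in pandas 2.0, so pd.concat is used instead)
print(pd.concat([data_test_a.head(3), data_test_a.tail(3)]))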

2.3.4 Checking missing values, unique values, etc.

Check missing values:

print(f'There are {data_train.isnull().any().sum()} columns in train dataset with missing value')

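The output shows that 22 feature columns in the training set have missing values. Next, look for the features whose missing rate exceeds 50%: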

# Find the features whose missing rate exceeds 50%
# data_train.isnull().sum() counts the missing values in each column
# len(data_train) is the total number of records (80000)
have_null_fea_dict = (data_train.isnull().sum() / len(data_train)).to_dict()
fea_null_moreThanHalf = {}
for key, value in have_null_fea_dict.items():
    if value > 0.5:
        fea_null_moreThanHalf[key] = value
print(fea_null_moreThanHalf)

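Inspect the missing features and their missing rates in detail:

# Visualize the missing (NaN) rate of each feature
missing = data_train.isnull().sum() / len(data_train)
missing = missing[missing > 0]
missing.sort_values(inplace=True)
missing.plot.bar()
plt.show()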

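- Column-wise (by feature): find which columns contain NaN and print the number of NaN values in each. The main purpose is to check whether a column's NaN count is really large. If a column has too many NaN values, it carries little information about the label and can be considered for removal; if only a small share is missing, the values can usually be filled in.

- Row-wise (by sample): if some samples are missing most of their columns and there are enough samples overall, consider deleting those rows.

Tip: LightGBM (lgb), the go-to model in competitions, handles missing values automatically!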
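The column and row rules above can be expressed directly in pandas. A sketch on a toy frame: the 50% column threshold follows the text, while the row rule (keep rows with at least half of their columns present) is an assumed cut-off chosen for illustration.

```python
import numpy as np
import pandas as pd

toy = pd.DataFrame({
    'a': [1.0, 2.0, np.nan, 4.0],        # 25% missing
    'b': [np.nan, np.nan, np.nan, 1.0],  # 75% missing
    'c': [1.0, np.nan, np.nan, 3.0],     # 50% missing
    'd': [5.0, 6.0, np.nan, 8.0],        # 25% missing
})

# Column-wise: drop features whose missing rate exceeds 50%
missing_rate = toy.isnull().mean()
cols_kept = toy.loc[:, missing_rate <= 0.5]

# Row-wise: keep rows with at least half of the remaining columns non-missing
min_non_null = int(np.ceil(cols_kept.shape[1] / 2))
rows_kept = cols_kept.dropna(thresh=min_non_null)
print(cols_kept.columns.tolist(), rows_kept.index.tolist())
```

`dropna(thresh=k)` keeps a row only if it has at least `k` non-missing values, which is the row-wise rule stated as a count.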

Find features in the training and test sets that take only one value:

one_value_fea_train = [col for col in data_train.columns if data_train[col].nunique() <= 1]

one_value_fea_test = [col for col in data_test_a.columns if data_test_a[col].nunique() <= 1]

print(one_value_fea_train)

print(one_value_fea_test)

print(f'There are {len(one_value_fea_train)} columns in train dataset with one unique value.')

print(f'There are {len(one_value_fea_test)} columns in test dataset with one unique value.')

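Summary:

22 of the 47 columns have missing data, which is normal for real-world data. 'policyCode' has only one unique value (or is entirely missing). There are many continuous variables and a few categorical variables.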
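Why "one unique value (or entirely missing)": `nunique()` skips NaN by default, so an all-missing column reports 0 and a constant column with gaps reports 1, and both are caught by the `<= 1` test used above. A toy check (the column names are illustrative only):

```python
import numpy as np
import pandas as pd

toy = pd.DataFrame({
    'constant': [1, 1, 1, 1],
    'constant_with_nan': [1.0, np.nan, 1.0, np.nan],
    'all_nan': [np.nan] * 4,
    'varied': [1, 2, 3, 4],
})

counts = toy.nunique()                        # NaN ignored (default)
counts_with_nan = toy.nunique(dropna=False)   # NaN counted as one extra value
one_value = [col for col in toy.columns if toy[col].nunique() <= 1]
print(counts.to_dict(), counts_with_nan.to_dict(), one_value)
```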

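2.3.5 Which features are numerical and which are object type

- Features generally consist of categorical features and numerical features, and numerical features are further divided into continuous and discrete ones.

- Categorical features sometimes have no numerical relationship and sometimes do. For example, for the grades A, B, C in 'grade', whether they are merely labels or whether A is better than the others has to be judged from business knowledge.

- Numerical features can be fed into a model directly, but risk-control practitioners often bin them and convert them to WOE (weight of evidence) encoding in order to build standard scorecards. In terms of model performance, binning mainly reduces the complexity of a variable, reduces the influence of noise on the model, and strengthens the association between the independent variables and the target, which makes the model more stable.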
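To make the binning-and-WOE idea concrete, here is a minimal sketch. Assumptions: equal-frequency bins via `pd.qcut`, WOE defined as ln(share of all bads in the bin / share of all goods in the bin) (some texts use the inverse ratio, which only flips the sign), and a 0.5 smoothing count so that bins with no bads or no goods do not produce log(0). The helper name `woe_table` is hypothetical, not part of the original tutorial.

```python
import numpy as np
import pandas as pd


def woe_table(x, y, bins=5, eps=0.5):
    """Equal-frequency binning of x, then WOE and IV per bin against a binary y (1 = bad)."""
    binned = pd.qcut(x, q=bins, duplicates='drop')
    df = pd.DataFrame({'bin': binned, 'y': y})
    table = df.groupby('bin', observed=True)['y'].agg(['count', 'sum'])
    table.columns = ['total', 'bad']
    table['good'] = table['total'] - table['bad']
    # Share of all bads / all goods that fall into each bin (eps smooths zero counts)
    bad_share = (table['bad'] + eps) / table['bad'].sum()
    good_share = (table['good'] + eps) / table['good'].sum()
    table['woe'] = np.log(bad_share / good_share)
    # Information value contribution of each bin; always >= 0
    table['iv'] = (bad_share - good_share) * table['woe']
    return table


# Toy example: the top 20% of x values are all defaults
x = pd.Series(np.arange(100))
y = (x >= 80).astype(int)
table = woe_table(x, y)
print(table[['total', 'bad', 'woe', 'iv']])
print('IV =', table['iv'].sum())
```

In a scorecard workflow, each raw value would then be replaced by the WOE of its bin; the sum of the `iv` column is a common screen for how predictive a binned feature is.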

numerical_fea = list(data_train.select_dtypes(exclude=['object']).columns)

print(numerical_fea)

category_fea = list(filter(lambda x: x not in numerical_fea, list(data_train.columns)))

print(category_fea)

print(data_train.grade)

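Numerical variable analysis: numerical variables include both continuous and discrete variables.

- Split the numerical variables into continuous and discrete ones

Treat numerical features with more than 10 distinct values as continuous and those with 10 or fewer as discrete: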

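# Split numerical features into continuous (serial) and discrete (noserial) ones
def get_numerical_serial_fea(data, feas):
    numerical_serial_fea = []    # continuous: more than 10 distinct values
    numerical_noserial_fea = []  # discrete: 10 or fewer distinct values
    for fea in feas:
        temp = data[fea].nunique()
        if temp <= 10:
            numerical_noserial_fea.append(fea)
            continue
        numerical_serial_fea.append(fea)
    return numerical_serial_fea, numerical_noserial_fea

# Call the function
numerical_serial_fea, numerical_noserial_fea = get_numerical_serial_fea(data_train, numerical_fea)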

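- Analysis of discrete numerical variables

print(data_train['term'].value_counts())                # discrete
print(data_train['homeOwnership'].value_counts())       # discrete
print(data_train['verificationStatus'].value_counts())  # discrete
print(data_train['initialListStatus'].value_counts())   # discrete
print(data_train['applicationType'].value_counts())     # discrete
print(data_train['policyCode'].value_counts())          # discrete; useless, only one value
print(data_train['n11'].value_counts())                 # discrete; highly imbalanced, whether to keep it needs further analysis

# Visualize the distribution of each continuous numerical feature
f = pd.melt(data_train, value_vars=numerical_serial_fea)
g = sns.FacetGrid(f, col="variable", col_wrap=2, sharex=False, sharey=False)
# sns.distplot is deprecated in recent seaborn; histplot with kde=True replaces it
g = g.map(sns.histplot, "value", kde=True)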

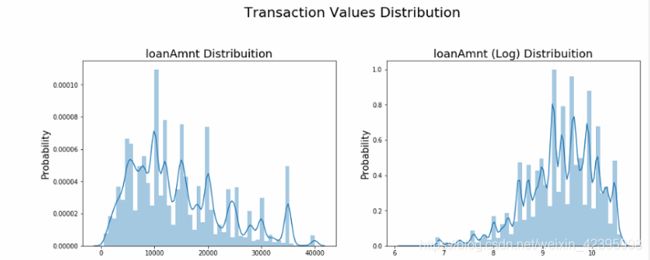

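- Analysis of continuous numerical variables

  - Check the distribution of a numerical variable to see whether it is close to normal. For a variable that is not, apply a log transform and check again.

  - If you want to standardize a batch of variables together, first set aside the ones that have already been normalized.

  - Why normalize: in some cases, bringing data closer to a normal distribution helps the model converge faster, and some models assume or work better with roughly normal inputs (e.g. GMM, KNN). It is usually enough to keep the data from being overly skewed, since heavy skew can hurt the model's predictions.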
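A quick numerical check of the log-transform advice, on synthetic right-skewed data standing in for `loanAmnt` (the lognormal parameters are arbitrary assumptions): pandas `Series.skew()` measures skewness before and after `np.log`.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
# Synthetic, right-skewed "loan amounts" (lognormal), all strictly positive
amounts = pd.Series(rng.lognormal(mean=9.0, sigma=0.8, size=10_000))

raw_skew = amounts.skew()
log_skew = np.log(amounts).skew()
print(f'skew before log: {raw_skew:.2f}, after log: {log_skew:.2f}')
```

For columns that can contain 0, `np.log1p` is the usual safe variant, since `np.log(0)` is `-inf`.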

plt.figure(figsize=(16, 12))
plt.suptitle('Transaction Values Distribution', fontsize=22)

# Top panel: raw loan amounts (histplot replaces the deprecated distplot)
plt.subplot(211)
sub_plot_1 = sns.histplot(data_train['loanAmnt'], kde=True, stat='density')
sub_plot_1.set_title('loanAmnt Distribution', fontsize=18)
sub_plot_1.set_xlabel('')
sub_plot_1.set_ylabel('Density', fontsize=15)

# Bottom panel: log-transformed loan amounts
plt.subplot(212)
sub_plot_2 = sns.histplot(np.log(data_train['loanAmnt']), kde=True, stat='density')
sub_plot_2.set_title('loanAmnt (Log) Distribution', fontsize=18)
sub_plot_2.set_xlabel('')
sub_plot_2.set_ylabel('Density', fontsize=15)
plt.show()

- Analysis of non-numerical categorical variables

print(category_fea)

print(data_train['grade'].value_counts())

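Summary:

- Above we used value_counts() and similar functions to look at the distribution of feature values, but charts are the most convenient way to summarize raw information.

- "Numbers without a picture give little intuition."

- The same dataset can reveal different patterns when plotted at different scales. Python turns data into charts, but it is up to you to make sure the conclusions are correct.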

2.3.6 Visualizing variable distributions

Distribution of a single variable:

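plt.figure(figsize=(8, 8))
# The 20 most frequent employmentLength values, NaN included
counts = data_train["employmentLength"].value_counts(dropna=False).head(20)
# Recent seaborn versions require x and y to be passed as keyword arguments
sns.barplot(x=counts.values, y=counts.index.astype(str))
plt.show()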

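Visualize the distribution of a feature x separately for each value of y (the label):

- First, look at how categorical variables are distributed under each y value (y -> label: 0/1)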

# Split the training set by label: isDefault == 1 (default) vs isDefault == 0 (no default)
train_loan_fr = data_train.loc[data_train['isDefault'] == 1]
train_loan_nofr = data_train.loc[data_train['isDefault'] == 0]
fig, ((ax1, ax2), (ax3, ax4)) = plt.subplots(2, 2, figsize=(15, 18))
train_loan_fr.groupby('grade')['grade'].count().plot(kind='barh', ax=ax1, title='Count of grade fraud')
train_loan_nofr.groupby('grade')['grade'].count().plot(kind='barh', ax=ax2, title='Count of grade non-fraud')
train_loan_fr.groupby('employmentLength')['employmentLength'].count().plot(kind='barh', ax=ax3, title='Count of employmentLength fraud')
train_loan_nofr.groupby('employmentLength')['employmentLength'].count().plot(kind='barh', ax=ax4, title='Count of employmentLength non-fraud')
plt.show()
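Because defaulters are a minority, raw counts make the two panels hard to compare side by side. A complementary view (an addition, not part of the original walkthrough) is the default rate within each category via `pd.crosstab(..., normalize='index')`; a toy frame with made-up values stands in for `data_train` here.

```python
import pandas as pd

toy = pd.DataFrame({
    'grade':     ['A', 'A', 'A', 'A', 'B', 'B', 'C', 'C'],
    'isDefault': [0,   0,   0,   1,   0,   1,   1,   1],
})

# Each row sums to 1: share of non-default (0) and default (1) within each grade
rate = pd.crosstab(toy['grade'], toy['isDefault'], normalize='index')
print(rate)
```

On the real data, the same call with `data_train['grade']` shows directly whether lower grades default more often.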

- Next, look at how continuous variables are distributed under each y value

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(15, 6))
# Histogram of log(loanAmnt) for defaulted loans
data_train.loc[data_train['isDefault'] == 1]['loanAmnt'].apply(np.log).plot(
    kind='hist', bins=100, title='Log Loan Amt - Fraud', color='r', xlim=(-3, 10), ax=ax1)
# Histogram of log(loanAmnt) for non-defaulted loans
data_train.loc[data_train['isDefault'] == 0]['loanAmnt'].apply(np.log).plot(
    kind='hist', bins=100, title='Log Loan Amt - Not Fraud', color='b', xlim=(-3, 10), ax=ax2)
plt.show()

total = len(data_train)
total_amt = data_train.groupby(['isDefault'])['loanAmnt'].sum().sum()
plt.figure(figsize=(12, 5))

# Left panel: share of samples per label (plt.subplot, not plt.subplots)
plt.subplot(121)
plot_tr = sns.countplot(x='isDefault', data=data_train)
plot_tr.set_title("Fraud Loan Distribution \n 0: good user | 1: bad user", fontsize=14)
plot_tr.set_xlabel("Is fraud by count", fontsize=16)
plot_tr.set_ylabel('Count', fontsize=16)
for p in plot_tr.patches:
    height = p.get_height()
    plot_tr.text(p.get_x() + p.get_width() / 2., height + 3,
                 '{:1.2f}%'.format(height / total * 100),
                 ha="center", fontsize=15)

# Right panel: share of the total loan amount per label
percent_amt = data_train.groupby(['isDefault'])['loanAmnt'].sum()
percent_amt = percent_amt.reset_index()
plt.subplot(122)
plot_tr_2 = sns.barplot(x='isDefault', y='loanAmnt', dodge=True, data=percent_amt)
plot_tr_2.set_title("Total Amount in loanAmnt \n 0: good user | 1: bad user", fontsize=14)
plot_tr_2.set_xlabel("Is fraud by percent", fontsize=16)
plot_tr_2.set_ylabel('Total Loan Amount Scalar', fontsize=16)
for p in plot_tr_2.patches:
    height = p.get_height()
    plot_tr_2.text(p.get_x() + p.get_width() / 2., height + 3,
                   '{:1.2f}%'.format(height / total_amt * 100),
                   ha="center", fontsize=15)
plt.show()
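The percentages annotated on the two bar charts can also be read off numerically. A sketch on toy data (the column names follow the original, the values are made up): the count share comes from `value_counts(normalize=True)` and the amount share from a grouped sum divided by the overall sum.

```python
import pandas as pd

toy = pd.DataFrame({
    'isDefault': [0, 0, 0, 1],
    'loanAmnt':  [1000.0, 1000.0, 1000.0, 3000.0],
})

# Percentage of samples per label
count_share = toy['isDefault'].value_counts(normalize=True) * 100
# Percentage of the total loan amount per label
amount_share = toy.groupby('isDefault')['loanAmnt'].sum() / toy['loanAmnt'].sum() * 100
print(count_share.to_dict(), amount_share.to_dict())
```

Here defaults are 25% of the loans but 50% of the money lent, which is exactly the kind of gap the side-by-side charts are meant to reveal.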

2.3.6 Processing and inspecting date data

# Convert to datetime; the new issueDateDT column holds the number of days between
# each issue date and the earliest date in the dataset (2007-06-01)
data_train['issueDate'] = pd.to_datetime(data_train['issueDate'], format='%Y-%m-%d')
startdate = datetime.datetime.strptime('2007-06-01', '%Y-%m-%d')
data_train['issueDateDT'] = data_train['issueDate'].apply(lambda x: x - startdate).dt.days

# Same conversion for the test set
data_test_a['issueDate'] = pd.to_datetime(data_test_a['issueDate'], format='%Y-%m-%d')
data_test_a['issueDateDT'] = data_test_a['issueDate'].apply(lambda x: x - startdate).dt.days

plt.hist(data_train['issueDateDT'], label='train')
plt.hist(data_test_a['issueDateDT'], label='test')
plt.legend()
plt.title('Distribution of issueDateDT dates')
plt.show()
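A small check of the day-offset conversion on hand-picked dates (the values are illustrative). It uses vectorized subtraction of the start date, which gives the same result as applying a lambda row by row and is usually faster on large columns.

```python
import datetime

import pandas as pd

dates = pd.Series(['2007-06-01', '2007-07-01', '2008-06-01'])
startdate = datetime.datetime.strptime('2007-06-01', '%Y-%m-%d')

# Subtracting a datetime from a datetime64 Series yields timedeltas; .dt.days extracts whole days
days = (pd.to_datetime(dates, format='%Y-%m-%d') - startdate).dt.days
print(days.tolist())
```

June 2007 has 30 days, and the span to 2008-06-01 includes 29 February 2008, so the offsets are 0, 30 and 366.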

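2.3.7 Pivot tables help us understand the data better

# Pivot table: the index can have several keys, "columns" is optional, and the
# aggregation function aggfunc is applied to the items listed in "values"
pivot = pd.pivot_table(data_train, index=['grade'], columns=['issueDateDT'], values=['loanAmnt'], aggfunc='sum')
print(pivot)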
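To see what each `pivot_table` argument does, here is a toy frame with the same column names (the values are made up). Each cell holds the summed `loanAmnt` for one `grade` and one `issueDateDT` value, and combinations with no rows come out as NaN.

```python
import pandas as pd

toy = pd.DataFrame({
    'grade': ['A', 'A', 'B', 'B', 'B'],
    'issueDateDT': [0, 0, 0, 31, 31],
    'loanAmnt': [1000.0, 2000.0, 1500.0, 500.0, 3000.0],
})

# index -> rows, columns -> column labels, values -> what gets aggregated, aggfunc -> how
pivot = pd.pivot_table(toy, index=['grade'], columns=['issueDateDT'],
                       values=['loanAmnt'], aggfunc='sum')
print(pivot)
```

Because `values` is given as a list, the result has two-level columns such as `('loanAmnt', 0)`; pass `fill_value=0` if empty combinations should show 0 instead of NaN.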

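2.3.8 Generating a data report with pandas_profiling

# pandas_profiling must be installed separately; newer releases are published as
# ydata-profiling and imported with: from ydata_profiling import ProfileReport
import pandas_profiling
pfr = pandas_profiling.ProfileReport(data_train)
pfr.to_file("./example.html")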

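2.4 Summary

Exploratory data analysis is the stage where we get a first understanding of the data and become familiar with it in preparation for feature engineering; often, features extracted during EDA can even be used directly as rules. This shows how important EDA is. The main work at this stage is to understand the data as a whole through simple statistics, analyze the relationships among variables of different types, and visualize them with suitable charts for direct inspection.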