Docker (14): Docker Swarm cluster

Docker Swarm

Basic concepts

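Swarm is the cluster management and orchestration tool built into Docker Engine. Docker Swarm is one of Docker's official "three musketeers" projects (together with Docker Compose and Docker Machine). It provides Docker container clustering and is Docker's core official solution for the container cloud ecosystem.

With it, users can pool multiple Docker hosts into a single large virtual Docker host and quickly build a container cloud platform. Swarm mode has a built-in key-value store and brings many features, such as a decentralized, fault-tolerant design, built-in service discovery, load balancing, a routing mesh, dynamic scaling, rolling updates, and secure transport. These give Docker's native Swarm cluster the strength to compete with Mesos and Kubernetes. Before using a Swarm cluster, you need to understand the following concepts.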

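Nodes

A host running Docker can initialize a new Swarm cluster or join an existing one; either way, that host becomes a node of the Swarm cluster. Nodes are divided into manager nodes and worker nodes.

Manager nodes manage the Swarm cluster. Most docker swarm commands can only be run on a manager node (docker swarm leave, which takes a node out of the cluster, can also be run on a worker node). A Swarm cluster can have multiple manager nodes, but only one of them is the leader at any time; the leader is elected through the Raft protocol.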
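Because the leader is elected through Raft, a swarm keeps accepting management operations only while a majority (quorum) of its managers is reachable. The arithmetic is small enough to sketch in shell; the function names below are illustrative and are not part of the Docker CLI.

```shell
# Quorum arithmetic for a Raft-based manager set (illustrative helper names).

# Managers that must be reachable for the swarm to accept changes.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Manager failures that can be tolerated while keeping quorum.
fault_tolerance() {
  echo $(( ($1 - 1) / 2 ))
}

for n in 1 2 3 5; do
  echo "managers=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

With two managers, losing either one loses quorum, which is why an odd number of managers is usually recommended.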

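Worker nodes execute tasks: manager nodes dispatch services to worker nodes for execution. By default a manager node also acts as a worker node. A service can also be configured to run only on manager nodes.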

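Docker Swarm cluster: one of the three musketeers

Basic environment requirements:

Disable the firewall, disable SELinux, give the 3 Docker hosts distinct hostnames, and synchronize their clocks: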

| node01 | node02 | node03 |
|---|---|---|
| 192.168.1.128 | 192.168.1.129 | 192.168.1.150 |

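- The Docker version must be v1.12 or later

- Swarm: a cluster made up of multiple hosts running Docker Engine

- node: every Docker Engine is a node; a node is either a manager or a worker

- manager node: handles container orchestration and cluster management, and keeps the swarm in its desired state. A swarm can have multiple manager nodes, which coordinate automatically and elect one leader to carry out orchestration. A swarm cannot exist without at least one manager node

- worker node: accepts and runs the tasks dispatched by manager nodes. By default a manager node is also a worker node, but it can be made a manager-only node that handles only orchestration and management

- service: defines what is to be run on the worker nodes (the command to execute)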

Swarm cluster

Synchronize the system time with network time on every host

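yum -y install ntp ntpdate # install the ntpdate tool

ntpdate cn.pool.ntp.org # synchronize the system time with network time

hwclock --systohc # write the system time to the hardware clock (CMOS) so it survives a reboot

hwclock -w # short form of --systohc, equivalent to the line above

or, where the legacy clock command is available:

clock -w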

1. Initialize the cluster

[root@docker01 ~]# docker swarm init --advertise-addr 192.168.1.128

Swarm initialized: current node (mk9bqh3spr38di2t812pubofo) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-3xqej1mdhm4skimz8q7x5e04pq9rx0wzta62estbk6zz8s9ohx-d8to13jid57tl8g916isyztdn 192.168.1.128:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

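- --advertise-addr: the address this node advertises to the other nodes for cluster communication

- The output above shows that initialization succeeded. To add a worker node, run the command it printed:

docker swarm join --token SWMTKN-1-3xqej1mdhm4skimz8q7x5e04pq9rx0wzta62estbk6zz8s9ohx-d8to13jid57tl8g916isyztdn 192.168.1.128:2377

- To add a manager node, run the following command on a manager to print the join command: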

docker swarm join-token manager

docker02 joins the swarm as a worker:

[root@docker02 ~]# docker swarm join --token SWMTKN-1-3xqej1mdhm4skimz8q7x5e04pq9rx0wzta62estbk6zz8s9ohx-d8to13jid57tl8g916isyztdn 192.168.1.128:2377

This node joined a swarm as a worker.

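Note: a swarm join token does not expire on its own; it stays valid until it is rotated with docker swarm join-token --rotate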
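Join tokens can be printed again or replaced from any manager node. The sketch below wraps the two docker swarm join-token forms involved; the function names are illustrative, and the manager address is the one used in this walkthrough.

```shell
# Helpers around `docker swarm join-token`; run them on a manager node.
# The function names are illustrative, not part of the Docker CLI.

# Print only the token for the given role (worker or manager).
print_join_token() {
  docker swarm join-token -q "$1"
}

# Invalidate the current token for the role and print the new one.
rotate_join_token() {
  docker swarm join-token --rotate -q "$1"
}

# Build the full join command for a role and a manager address.
join_command() {
  local role=$1 manager_addr=$2
  echo "docker swarm join --token $(print_join_token "$role") $manager_addr"
}

# Example (on docker01):
#   join_command worker 192.168.1.128:2377
```

Rotating a token does not affect nodes that have already joined; it only changes what a new node must present.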

docker03 joins the swarm as a manager:

[root@docker01 ~]# docker swarm join-token manager

To add a manager to this swarm, run the following command:

docker swarm join --token SWMTKN-1-3xqej1mdhm4skimz8q7x5e04pq9rx0wzta62estbk6zz8s9ohx-2erfn3weuapktkqi7oo5f6k4k 192.168.1.128:2377

[root@docker03 ~]# docker swarm join --token SWMTKN-1-3xqej1mdhm4skimz8q7x5e04pq9rx0wzta62estbk6zz8s9ohx-2erfn3weuapktkqi7oo5f6k4k 192.168.1.128:2377

This node joined a swarm as a manager.

Once the other two nodes have joined, run docker node ls to view the node details

[root@docker01 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

mk9bqh3spr38di2t812pubofo * docker01 Ready Active Leader 18.09.0

5xu8xnpebepo5i75aucm1rxbn docker02 Ready Active 18.09.0

v1xefvx0qn21pau9xp7j5vfi2 docker03 Ready Active Reachable 18.09.0

Basic commands:

- docker swarm leave: leave the cluster. The node's status then shows as Down, and it can be removed from a manager node

- docker node rm xxx: remove a node

- docker swarm join-token [manager | worker]: print the join token for the manager or worker role

- docker node demote: demote a manager node to a worker

- docker node promote: promote a worker node to a manager

- docker node update --availability ("active"|"pause"|"drain"): set a node's availability

Note: setting a node's availability lets you keep a manager node from running container workloads
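As a minimal sketch of that idea, the helper below drains every manager so that only workers run service tasks. The function names are illustrative; the docker node ls filter and the --availability values are the standard CLI ones.

```shell
# Drain every manager so that only workers run service tasks.
# Illustrative helpers; run on a manager node.
drain_managers() {
  local id
  for id in $(docker node ls --filter role=manager -q); do
    docker node update --availability drain "$id"
  done
}

# Return a node to normal scheduling.
activate_node() {
  docker node update --availability active "$1"
}

# Example:
#   drain_managers
#   activate_node docker03
```

Draining also stops service tasks already running on the node, and Swarm reschedules them on the remaining active nodes.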

2. Create the Swarm cluster network

overlay: an overlay network

[root@docker01 ~]# docker network create -d overlay --attachable docker

hqjvtr71gaofbrd2m73kaugn2

[root@docker01 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

……

hqjvtr71gaof docker overlay swarm

……

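- --attachable: this option is needed here; without it, standalone containers started with docker run cannot attach to the network (swarm services can use an overlay network without it). No separate key-value store such as Consul was deployed before creating the network, because Docker Swarm has its own built-in store. The network was created on docker01; other nodes, worker nodes in particular, may not list it in docker network ls until something on that node attaches to it, yet they can use it directly.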
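Creating a network that already exists fails, so a setup script may want to check first. A minimal sketch, assuming the network name docker from this walkthrough; the function name is illustrative.

```shell
# Create the attachable overlay network only if it does not exist yet.
# Illustrative helper; run on a manager node.
ensure_overlay_network() {
  local name=$1
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network $name already exists"
  else
    docker network create -d overlay --attachable "$name"
  fi
}

# Example:
#   ensure_overlay_network docker
```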

Verification: check that the other nodes can use this network

[root@docker01 ~]# docker run -itd --name t1 --network docker busybox

[root@docker02 ~]# docker run -itd --name t2 --network docker busybox

[root@docker03 ~]# docker run -itd --name t3 --network docker busybox

[root@docker03 ~]# docker exec -it t3 sh

/ # ip a

1: lo: mtu 65536 qdisc noqueue qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

16: eth0@if17: mtu 1450 qdisc noqueue

link/ether 02:42:0a:00:00:06 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.6/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

18: eth1@if19: mtu 1500 qdisc noqueue

link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.3/16 brd 172.18.255.255 scope global eth1

valid_lft forever preferred_lft forever

[root@docker02 ~]# docker exec -it t2 sh

/ # ping 10.0.0.6

PING 10.0.0.6 (10.0.0.6): 56 data bytes

64 bytes from 10.0.0.6: seq=0 ttl=64 time=0.707 ms

64 bytes from 10.0.0.6: seq=1 ttl=64 time=0.439 ms

……

[root@docker01 ~]# docker exec -it t1 sh

/ # ping 10.0.0.6

PING 10.0.0.6 (10.0.0.6): 56 data bytes

64 bytes from 10.0.0.6: seq=0 ttl=64 time=0.520 ms

64 bytes from 10.0.0.6: seq=1 ttl=64 time=0.362 ms

……

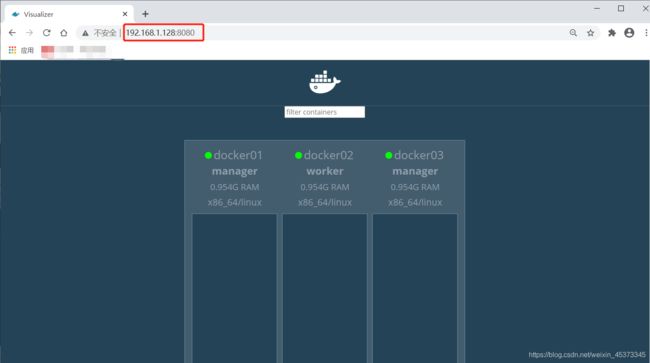

3. Deploy a graphical web UI

[root@docker01 ~]# docker run -d -p 8080:8080 -e HOST=192.168.1.128 -e PORT=8080 -v /var/run/docker.sock:/var/run/docker.sock --name visualizer dockersamples/visualizer

You can then verify it in a browser on port 8080 of docker01 (192.168.1.128). If the page cannot be reached, enable IP forwarding:

echo net.ipv4.ip_forward = 1 >> /etc/sysctl.conf

sysctl -p
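The echo above appends a new line every time it is run. A minimal idempotent sketch follows; the function name is illustrative and the default path is the one used above.

```shell
# Append net.ipv4.ip_forward = 1 to a sysctl file only if that setting
# is not present yet, so repeated runs do not add duplicate lines.
# Illustrative helper; the default path matches the one used above.
enable_ip_forward() {
  local conf=${1:-/etc/sysctl.conf}
  if ! grep -Eq '^[[:space:]]*net\.ipv4\.ip_forward[[:space:]]*=[[:space:]]*1' "$conf" 2>/dev/null; then
    echo 'net.ipv4.ip_forward = 1' >> "$conf"
  fi
}

# Example (as root):
#   enable_ip_forward && sysctl -p
```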

4. Create a service

[root@docker01 ~]# docker service create --replicas 1 --network docker --name web1 -p 80 nginx

6cfou438qqicfnc3i7pbxraew

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

[root@docker01 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

7jtez0beoy6e web1 replicated 1/1 nginx:latest *:30000->80/tcp

- --replicas: the number of replicas (roughly, one replica equals one container)

- List services: docker service ls

- Show a service's tasks: docker service ps xxx

- Keep a manager node from running workloads:

[root@docker01 ~]# docker node update docker01 --availability drain

docker01

Exercise:

[root@docker01 ~]# docker service create --replicas 5 --network docker --name web2 -p 80 nginx

zpvvvxke9ih4scoogxsyv9oll

overall progress: 5 out of 5 tasks

1/5: running [==================================================>]

2/5: running [==================================================>]

3/5: running [==================================================>]

4/5: running [==================================================>]

5/5: running [==================================================>]

verify: Service converged

[root@docker01 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

7jtez0beoy6e web1 replicated 1/1 nginx:latest *:30000->80/tcp

zpvvvxke9ih4 web2 replicated 5/5 nginx:latest *:30001->80/tcp

[root@docker01 ~]# docker service ps web2

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

sbkftqxp67xn web2.1 nginx:latest docker02 Running Running about a minute ago

nxcmzv6b1ehs web2.2 nginx:latest docker03 Running Running about a minute ago

n459098xtlt3 web2.3 nginx:latest docker02 Running Running about a minute ago

wzihac3ugkdi web2.4 nginx:latest docker03 Running Running about a minute ago

f38defhxi4s9 web2.5 nginx:latest docker01 Running Running 4 minutes ago

[root@docker01 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

27f028b65484 nginx:latest "/docker-entrypoint.…" 8 minutes ago Up 8 minutes 80/tcp web2.5.f38defhxi4s92lqhkmjn25mek

77fb6afd1cb7 nginx:latest "/docker-entrypoint.…" 19 minutes ago Up 19 minutes 80/tcp web1.1.3sfklbotp9jl7djb6oil2kngq

d7dc13b079cc dockersamples/visualizer "npm start" 34 minutes ago Up 34 minutes (healthy) 0.0.0.0:8080->8080/tcp visualizer

0207e8736fc9 busybox "sh" 42 minutes ago Up 42 minutes t1

Test deleting a container

[root@docker02 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

520cfc06f06c nginx:latest "/docker-entrypoint.…" About a minute ago Up About a minute 80/tcp web2.3.ouhtqblm5yjyevnenf1ogmmlp

fc5ebd5d07b1 nginx:latest "/docker-entrypoint.…" 10 minutes ago Up 10 minutes 80/tcp web2.1.sbkftqxp67xn9c8hoea2r207y

b4e90248a7a3 busybox "sh" 45 minutes ago Up 45 minutes t2

[root@docker02 ~]# docker rm -f web2.1.sbkftqxp67xn9c8hoea2r207y

web2.1.sbkftqxp67xn9c8hoea2r207y

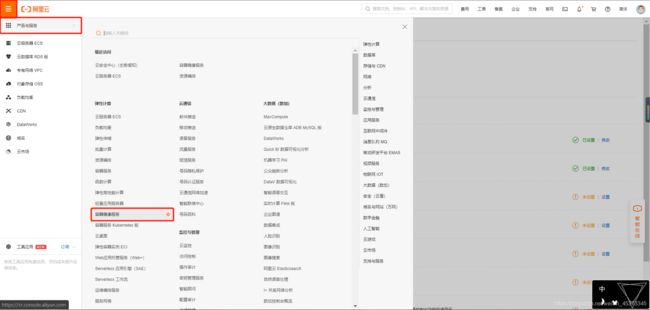

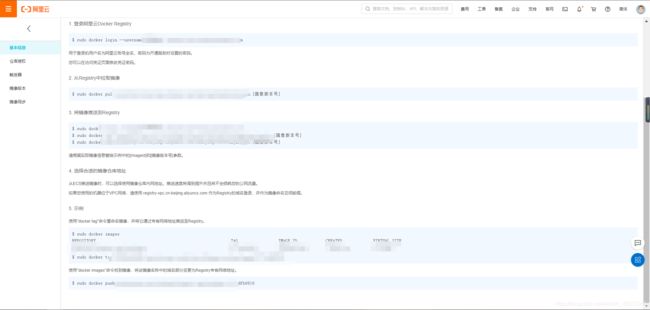
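Removing a task's container by hand does not shrink the service for long: the manager notices that fewer replicas are running than desired and schedules a replacement. One way to watch this from a manager is to poll the REPLICAS column; the helper names below are illustrative.

```shell
# Print the REPLICAS column (for example 5/5) for one service.
# Illustrative helpers; run on a manager node.
service_replicas() {
  docker service ls --format '{{.Name}} {{.Replicas}}' |
    awk -v s="$1" '$1 == s { print $2 }'
}

# Poll until running == desired, or give up after N tries (default 30).
wait_until_converged() {
  local service=$1 tries=${2:-30} replicas
  while [ "$tries" -gt 0 ]; do
    replicas=$(service_replicas "$service")
    [ -n "$replicas" ] && [ "${replicas%/*}" = "${replicas#*/}" ] && return 0
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}

# Example:
#   wait_until_converged web2 && docker service ps web2
```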

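5. Set up a private registry

An Alibaba Cloud private image registry is used here

6. Build custom images

Requirement: based on the nginx image, change the content of the default page. Tag the images v1, v2, and v3, with page content 111, 222, and 333 respectively.

Change the default page content, commit the container as an image, and add a tag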

[root@docker01 ~]# docker pull nginx # pull the image

[root@docker01 ~]# docker run -itd --name nginx -p 80:80 nginx # run the container

[root@docker01 ~]# docker exec -it nginx bash

root@20c23163f07d:/# echo 111 > /usr/share/nginx/html/index.html

root@20c23163f07d:/# exit

[root@docker01 ~]# docker commit nginx nginx:v1

[root@docker01 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx v1 0b678e231a38 12 seconds ago 133MB

nginx latest 7e4d58f0e5f3 2 weeks ago 133MB

[root@docker01 ~]# docker tag nginx:v1 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v1

[root@docker01 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx v1 0b678e231a38 45 seconds ago 133MB

registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx v1 0b678e231a38 45 seconds ago 133MB

nginx latest 7e4d58f0e5f3 2 weeks ago 133MB

[root@docker01 ~]# docker exec -it nginx bash

root@20c23163f07d:/# echo 222 > /usr/share/nginx/html/index.html

root@20c23163f07d:/# exit

[root@docker01 ~]# docker commit nginx nginx:v2

[root@docker01 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx v2 2344f45df85a 8 seconds ago 133MB

nginx v1 0b678e231a38 12 minutes ago 133MB

registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx v1 0b678e231a38 12 minutes ago 133MB

nginx latest 7e4d58f0e5f3 2 weeks ago 133MB

[root@docker01 ~]# docker tag nginx:v2 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v2

[root@docker01 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx v2 2344f45df85a 35 seconds ago 133MB

registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx v2 2344f45df85a 35 seconds ago 133MB

nginx v1 0b678e231a38 12 minutes ago 133MB

registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx v1 0b678e231a38 12 minutes ago 133MB

nginx latest 7e4d58f0e5f3 2 weeks ago 133MB

[root@docker01 ~]# docker exec -it nginx bash

root@20c23163f07d:/# echo 333 > /usr/share/nginx/html/index.html

root@20c23163f07d:/# exit

[root@docker01 ~]# docker commit nginx nginx:v3

[root@docker01 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx v3 a9e592ebf7f5 6 seconds ago 133MB

nginx v2 2344f45df85a 3 minutes ago 133MB

registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx v2 2344f45df85a 3 minutes ago 133MB

nginx v1 0b678e231a38 15 minutes ago 133MB

registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx v1 0b678e231a38 15 minutes ago 133MB

nginx latest 7e4d58f0e5f3 2 weeks ago 133MB

[root@docker01 ~]# docker tag nginx:v3 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v3

[root@docker01 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx v3 a9e592ebf7f5 21 seconds ago 133MB

registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx v3 a9e592ebf7f5 21 seconds ago 133MB

nginx v2 2344f45df85a 3 minutes ago 133MB

registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx v2 2344f45df85a 3 minutes ago 133MB

nginx v1 0b678e231a38 15 minutes ago 133MB

registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx v1 0b678e231a38 15 minutes ago 133MB

nginx latest 7e4d58f0e5f3 2 weeks ago 133MB
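The docker commit steps above work, but the same three images can also be produced repeatably with docker build. The sketch below swaps in that technique; it is not the method used in this walkthrough, and the function names are illustrative. The repository path is the one used above.

```shell
# Build nginx images v1..v3 whose default page reads 111, 222, 333,
# tagged for the registry in one step.
# Illustrative helpers: this uses docker build instead of the
# docker commit approach shown above.
REPO=registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx

build_versions() {
  local i content
  for i in 1 2 3; do
    content="$i$i$i"
    # The Dockerfile is passed on stdin, so no build context is needed.
    printf 'FROM nginx\nRUN echo %s > /usr/share/nginx/html/index.html\n' "$content" |
      docker build -t "$REPO:v$i" -
  done
}

# Push all three tags (requires a prior docker login to the registry).
push_versions() {
  local i
  for i in 1 2 3; do
    docker push "$REPO:v$i"
  done
}
```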

Log in to the Alibaba Cloud Docker Registry and push the nginx v1, v2, and v3 images to the private registry

# Log in to the Alibaba Cloud Docker Registry

[root@docker01 ~]# docker login --username=冥界的花 registry.cn-beijing.aliyuncs.com

Password:

……

Login Succeeded

# Confirm the local images to upload

[root@docker01 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx v3 a9e592ebf7f5 21 seconds ago 133MB

registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx v3 a9e592ebf7f5 21 seconds ago 133MB

nginx v2 2344f45df85a 3 minutes ago 133MB

registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx v2 2344f45df85a 3 minutes ago 133MB

nginx v1 0b678e231a38 15 minutes ago 133MB

registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx v1 0b678e231a38 15 minutes ago 133MB

nginx latest 7e4d58f0e5f3 2 weeks ago 133MB

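# Removing the original tag at this point does not affect the newly tagged image: a tag is only a name for the image (if your friend Xiao Ming no longer answers to the nickname "Chubby", he is still Xiao Ming)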

# [root@docker01 ~]# docker rmi busybox:latest

# Untagged: busybox:latest

# Untagged: busybox@sha256:ff73ae57286b2766922e4dd0e20031e7852c35d48fac28b48971b52ac609cdb5

# Push the images to the registry

[root@docker01 ~]# docker push registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v1

[root@docker01 ~]# docker push registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v2

[root@docker01 ~]# docker push registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v3

7. Publish a service based on the images above

Requirement: 3 replicas; service name: hao

[root@docker02 ~]# docker login --username=冥界的花 registry.cn-beijing.aliyuncs.com

Password:

……

Login Succeeded

[root@node02 ~]# docker pull registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v1

[root@node02 ~]# docker pull registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v2

[root@node02 ~]# docker pull registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v3

[root@docker03 ~]# docker login --username=冥界的花 registry.cn-beijing.aliyuncs.com

Password:

……

Login Succeeded

[root@node03 ~]# docker pull registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v1

[root@node03 ~]# docker pull registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v2

[root@node03 ~]# docker pull registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v3

[root@docker01 ~]# docker service create --replicas 3 --name hao -p 80:80 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v1

image registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v1 could not be accessed on a registry to record

its digest. Each node will access registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v1 independently,

possibly leading to different nodes running different

versions of the image.

oj95up8sorunqnmdvf13pfd7c

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

[root@docker01 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

187232c6b6d8 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v1 "/docker-entrypoint.…" 49 seconds ago Up 48 seconds 80/tcp hao.1.m29iqekifd7ug6gk0t929r958

……

[root@docker01 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

oj95up8sorun hao replicated 3/3 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v1 *:80->80/tcp

……
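Logging in and pulling on every node by hand, as above, is one way to make a private image available. docker service create also has a --with-registry-auth flag that sends the registry credentials of the current login to the swarm nodes. A minimal sketch using it follows; the function name is illustrative, and whether it also removes the digest warning depends on why the registry lookup failed.

```shell
# Create a service from a private-registry image and forward the
# registry credentials of the current docker login to the nodes.
# Illustrative helper; run on a manager that has already logged in.
create_private_service() {
  local name=$1 published_port=$2 image=$3
  docker service create \
    --with-registry-auth \
    --replicas 3 \
    --name "$name" \
    -p "$published_port:80" \
    "$image"
}

# Example:
#   create_private_service hao 80 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v1
```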

Publish another service based on the images above. Requirement: 3 replicas; service name: gao

[root@docker01 ~]# docker service create --replicas 3 --name gao -p 81:80 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v2

image registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v2 could not be accessed on a registry to record

its digest. Each node will access registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v2 independently,

possibly leading to different nodes running different

versions of the image.

zxhhxbxj9hoph0on1uo4ze267

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

[root@docker01 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f20557a44560 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v2 "/docker-entrypoint.…" 31 seconds ago Up 24 seconds 80/tcp gao.1.ulducglbcspliepc1t2b5epgf

b0988e2504e0 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v2 "/docker-entrypoint.…" 31 seconds ago Up 24 seconds 80/tcp gao.3.qgyjatow93i15avkabbrxsigz

0e66fb953fef registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v2 "/docker-entrypoint.…" 51 seconds ago Up 50 seconds 80/tcp gao.2.e4br2whkegk98vlw745edp24d

……

[root@docker01 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

zxhhxbxj9hop gao replicated 3/3 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v2 *:81->80/tcp

……

Publish another service based on the images above. Requirement: 3 replicas; service name: bao

[root@docker01 ~]# docker service create --replicas 3 --name bao -p 82:80 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v3

image registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v3 could not be accessed on a registry to record

its digest. Each node will access registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v3 independently,

possibly leading to different nodes running different

versions of the image.

ol2qmfxsid2uf9hkeslnewepf

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

[root@docker01 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3386dd9cd3d9 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v3 "/docker-entrypoint.…" 36 seconds ago Up 29 seconds 80/tcp bao.2.85nc609fa8o5w4eu8gtl83yni

f6613c948830 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v3 "/docker-entrypoint.…" 36 seconds ago Up 29 seconds 80/tcp bao.1.b36prvgaj4qcxljkvgt12m545

f05098a558ea registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v3 "/docker-entrypoint.…" 57 seconds ago Up 55 seconds 80/tcp bao.3.rdgc97tp8w5cmev1doflv7aub

……

[root@docker01 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ol2qmfxsid2u bao replicated 3/3 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v3 *:82->80/tcp

……

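The default ingress network, together with any custom overlay network you create, gives the backend containers that actually serve users a single unified entry point: a request to the published port on any node is routed to one of the service's tasks.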
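One way to see this unified entry point is to request the same published port on every node. A minimal sketch, assuming the three host addresses from the environment table and that curl is installed; the function name is illustrative.

```shell
# Request the published port on every node; with the routing mesh each
# node should answer, whether or not it hosts a task of the service.
# Illustrative helper; the addresses are the three hosts from the
# environment table.
NODES="192.168.1.128 192.168.1.129 192.168.1.150"

check_port_on_all_nodes() {
  local port=$1 ip
  for ip in $NODES; do
    echo "$ip: $(curl -s --max-time 3 "http://$ip:$port/")"
  done
}

# Example (service hao publishes port 80 and serves the v1 page):
#   check_port_on_all_nodes 80
```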

8. Scale a service up and down

[root@node01 ~]# docker service scale hao=6

[root@node01 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

9naf2wxvtz9q bao replicated 3/3 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v3 *:82->80/tcp

k8xnmsoj9nzh gao replicated 3/3 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v2 *:81->80/tcp

ymwib9q5nrgl hao replicated 6/6 registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v1 *:80->80/tcp

olcdj0ewt1oc web1 replicated 1/1 nginx:latest *:30000->80/tcp

xh7hgwxrwab5 web2 replicated 5/5 nginx:latest *:30001->80/tcp

Scaling up and down is done directly with scale, which sets the number of replicas

9. Update and roll back a service

[root@node01 ~]# docker service update --image registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v2 hao

Smooth (rolling) update

[root@node01 ~]# docker service update --image registry.cn-beijing.aliyuncs.com/shuijinglan/shuijinglan/nginx:v3 --update-parallelism 2 --update-delay 1m hao

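Note: by default, Swarm updates one replica at a time with no waiting time between replicas. This can be changed with:

- --update-parallelism: the number of replicas updated in parallel.

- --update-delay: the interval between rolling-update batches.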
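The effect of the two options can be estimated with simple arithmetic. The sketch below assumes the delay is applied only between batches, not after the last one; the function names are illustrative.

```shell
# Number of update batches and the minimum time spent in --update-delay
# pauses for a rolling update (illustrative helper names).
update_batches() {
  local replicas=$1 parallelism=$2
  echo $(( (replicas + parallelism - 1) / parallelism ))
}

min_delay_seconds() {
  local replicas=$1 parallelism=$2 delay_seconds=$3
  echo $(( ($(update_batches "$replicas" "$parallelism") - 1) * delay_seconds ))
}

# 6 replicas, --update-parallelism 2, --update-delay 1m
echo "batches: $(update_batches 6 2)"
echo "minimum delay: $(min_delay_seconds 6 2 60)s"
```

For the hao service scaled to 6 replicas, that is 3 batches and at least 120 seconds of waiting, on top of the time each batch needs to start.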

Rollback

[root@node01 ~]# docker service rollback hao

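Note: a Docker Swarm rollback can only return the service to the state before its most recent update; it cannot be repeated to step back through earlier versions.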
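Before rolling back, it can help to check which image a rollback would restore. The sketch below reads it from docker service inspect; it relies on the PreviousSpec field, which is only populated once the service has been updated. The function names are illustrative.

```shell
# Show the image a service runs now and the one a rollback would restore.
# Illustrative helpers; run on a manager node.
current_image() {
  docker service inspect \
    --format '{{.Spec.TaskTemplate.ContainerSpec.Image}}' "$1"
}

# Prints nothing if the service has never been updated.
previous_image() {
  docker service inspect \
    --format '{{if .PreviousSpec}}{{.PreviousSpec.TaskTemplate.ContainerSpec.Image}}{{end}}' "$1"
}

# Example:
#   current_image hao
#   previous_image hao
```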

10. Pin containers to specific nodes

[root@node01 ~]# docker node update --help

--label-add list Add or update a node label (key=value) # add a label to a node

Add a label to node02 (largest disk capacity)

[root@node01 ~]# docker node update --label-add disk=max node02

node02

View the label information

[root@node01 ~]# docker node inspect node02

……

"UpdatedAt": "2020-10-08T11:16:56.979631548Z",

"Spec": {

"Labels": {

"disk": "max"

……

Remove the label

[root@node01 ~]# docker node update --label-rm disk node02

Run a service on a specific node

[root@node01 ~]# docker node update --label-add disk=max node02 # add a label to node02 (largest disk capacity)

[root@node01 ~]# docker service create --name test --replicas 3 --constraint 'node.labels.disk == max' nginx

[root@node01 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

……

s9jq0nryem9a test replicated 3/3 nginx:latest

……

[root@node01 ~]# docker service ps test

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

1yzgfjnh53h4 test.1 nginx:latest node02 Running Running 46 seconds ago

n9ixc8g070w8 test.2 nginx:latest node02 Running Running 46 seconds ago

fxz0d6cx7n92 test.3 nginx:latest node02 Running Running 46 seconds ago
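All three replicas land on node02 because it is the only node that satisfies the constraint; if no node carried the label, the tasks would stay pending. Before creating a constrained service, it can be useful to list which nodes match. A minimal sketch, with illustrative function names:

```shell
# Read one label from a node (empty output if the label is not set).
# Illustrative helpers; run on a manager node.
node_label() {
  local node=$1 key=$2
  docker node inspect --format "{{ index .Spec.Labels \"$key\" }}" "$node"
}

# List the hostnames of nodes whose label key equals the given value.
nodes_with_label() {
  local key=$1 value=$2 id
  for id in $(docker node ls -q); do
    if [ "$(node_label "$id" "$key")" = "$value" ]; then
      docker node inspect --format '{{ .Description.Hostname }}' "$id"
    fi
  done
}

# Example:
#   nodes_with_label disk max
```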