Python Web Scraping: Crawling Dynamic Pages (Edit Times of the 万科 Entry)

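I. Introduction

Pages to be scraped are either static or dynamic. Scraping static pages is the common case, for example the Douban Top 250 list, where everything displayed is present in the HTML source. On a dynamic page, much of the content does not appear in the HTML source at all; this typically happens when the page loads its data with JavaScript.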

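Static page example:

Movie titles on the Douban Top 250 page: https://movie.douban.com/top250?start=25&filter=

Press F12 -> Inspect and select the title 触不可及; the inspector jumps to that title in the element tree.

Then right-click the page and open the page source.

The title is present in the source, so the Douban Top 250 page is static and can be scraped directly from this URL (in other words, this URL can be treated as the real address of the data).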

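Dynamic page example:

Edit times of the 万科 (Vanke) entry: https://baike.baidu.com/historylist/%E4%B8%87%E7%A7%91/6141470#page1

On this page the inspector can still locate the element we want. This is where a common mistake is made: concluding from the inspector alone that the page is static. The real test is to right-click and view the page source.

The source ends with a block of script rather than the data itself, and when you switch to other pages of the history list and view the source again, that final block is still identical.

We therefore conclude that this is a dynamic page: its URL is not the address where the data actually lives, so we need to find the real address. Dynamic pages can be scraped with the following two techniques.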
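The check used in both examples above (does the text seen in the browser also appear in the raw page source?) can be sketched in a few lines. This is only an illustration: the function name `appears_in_source` and the two HTML snippets are made up for the example and merely stand in for what "view page source" would show.

```python
def appears_in_source(html: str, visible_text: str) -> bool:
    """Return True if text seen in the browser is present in the raw HTML source."""
    return visible_text in html


# Made-up sources standing in for what "view page source" would show
static_html = "<ol><li><span class='title'>触不可及</span></li></ol>"
dynamic_html = "<div id='history'></div><script src='app.js'></script>"

print(appears_in_source(static_html, "触不可及"))     # True: data is in the source
print(appears_in_source(dynamic_html, "2021-04-19"))  # False: data is loaded later
```

With a real page, the `html` argument would be the text of the response returned by `requests.get(url)`.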

II. Techniques for Scraping Dynamic Pages

1. Scraping by resolving the real address

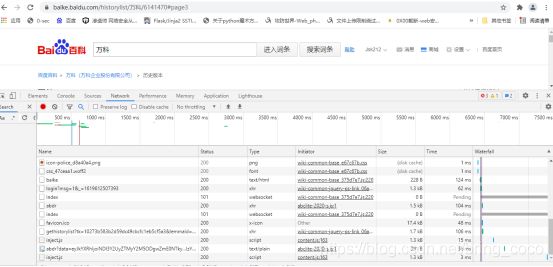

1. Press F12 -> Network

2. Look for XHR or JSON requests

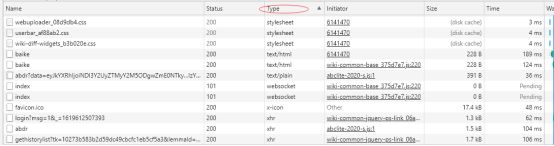

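Click the Type column header to make them easier to find (it groups the requests by type).

The Name of the xhr request shown in the figure below looks similar to the address we used when scraping Douban.

Click that request to inspect it.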

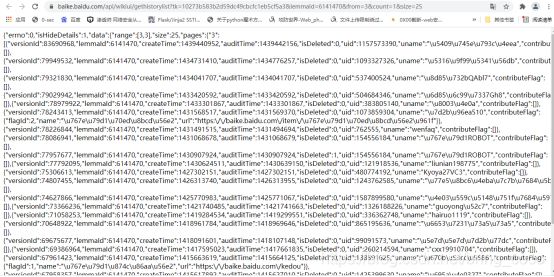

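The response contains a batch of records with fields such as time, id, and name, so we conclude that the URL of this request is the real address.

We can therefore scrape that address directly.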
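To see what the real address is made of, it can help to split it into the endpoint and its query parameters. The sketch below uses only the standard library; the helper name `split_api_url` is hypothetical, and the meaning of each parameter is not documented in this article, so the code only lists them without interpreting them.

```python
from urllib.parse import parse_qs, urlencode, urlsplit, urlunsplit

# The request URL copied from the Network panel
REAL_URL = (
    "https://baike.baidu.com/api/wikiui/gethistorylist"
    "?tk=10273b583b2d59dc49cbcfc1eb5cf5a3&lemmaId=6141470&from=3&count=1&size=25"
)


def split_api_url(url):
    """Split a URL into (endpoint without query, dict of query parameters)."""
    parts = urlsplit(url)
    endpoint = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    # parse_qs returns a list per key; each key occurs once here, so keep the first value
    params = {key: values[0] for key, values in parse_qs(parts.query).items()}
    return endpoint, params


endpoint, params = split_api_url(REAL_URL)
print(endpoint)
print(params)

# Rebuilding the URL from its parts gives back the original address
rebuilt = endpoint + "?" + urlencode(params)
print(rebuilt == REAL_URL)
```

Keeping the parameters in a dictionary also fits `requests`, which accepts them through the `params` argument of `requests.get`.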

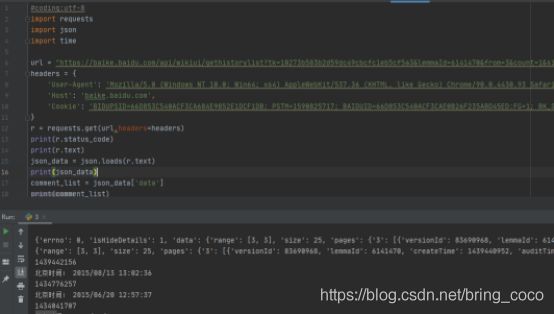

The code is as follows:

# coding: utf-8
import requests

# Real data address copied from the Network panel
url = "https://baike.baidu.com/api/wikiui/gethistorylist?tk=10273b583b2d59dc49cbcfc1eb5cf5a3&lemmaId=6141470&from=3&count=1&size=25"

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.93 Safari/537.36',
    'Host': 'baike.baidu.com',
    # Copy the Cookie value from your own browser's request headers (one line, never publish a real one)
    'Cookie': '<your own Cookie string>',
}

r = requests.get(url, headers=headers)
print(r.status_code)  # HTTP status code of the response
print(r.text)         # raw response body (JSON text)

The request succeeds, but the raw response text is not yet what we want; we need to parse it as JSON.

The code is as follows:

# coding: utf-8
import requests
import json

# Real data address copied from the Network panel
url = "https://baike.baidu.com/api/wikiui/gethistorylist?tk=10273b583b2d59dc49cbcfc1eb5cf5a3&lemmaId=6141470&from=3&count=1&size=25"

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.93 Safari/537.36',
    'Host': 'baike.baidu.com',
    # Copy the Cookie value from your own browser's request headers (one line, never publish a real one)
    'Cookie': '<your own Cookie string>',
}

r = requests.get(url, headers=headers)
print(r.status_code)  # HTTP status code of the response
print(r.text)         # raw response body (JSON text)

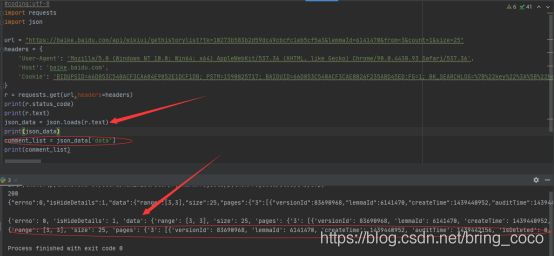

json_data = json.loads(r.text)    # parse the JSON text into a Python dict
print(json_data)
comment_list = json_data['data']  # the records sit under the 'data' key
print(comment_list)

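The output shows the parsed JSON and the contents under its data key, and a pattern emerges. The edit time we are after sits inside the dictionary parsed from r.text: under data, then pages, then the key '3', whose value is a list; each element of that list is a dictionary with an auditTime field.

The code is as follows: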
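The path through the nested structure can be tried offline first. The sketch below runs on a hand-made payload that only mimics the shape described above (data -> pages -> '3' -> list of records with auditTime); the timestamp values and the helper name `extract_audit_times` are invented for illustration, and the real response contains more fields.

```python
import json

# Hand-made sample in the shape described above; the values are invented
sample_text = (
    '{"data": {"pages": {"3": ['
    '{"auditTime": 1618794964}, {"auditTime": 1617721790}'
    ']}}}'
)


def extract_audit_times(payload, page_key="3"):
    """Collect auditTime values from payload['data']['pages'][page_key]."""
    pages = payload.get("data", {}).get("pages", {})
    # Missing keys yield an empty list instead of raising KeyError
    return [item["auditTime"] for item in pages.get(page_key, []) if "auditTime" in item]


payload = json.loads(sample_text)
print(extract_audit_times(payload))       # [1618794964, 1617721790]
print(extract_audit_times(payload, "9"))  # []
```

Using `.get` with defaults is a design choice for robustness; direct indexing, as in the article's code, is shorter but raises `KeyError` if the response shape differs.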

# coding: utf-8
import requests
import json

# Real data address copied from the Network panel
url = "https://baike.baidu.com/api/wikiui/gethistorylist?tk=10273b583b2d59dc49cbcfc1eb5cf5a3&lemmaId=6141470&from=3&count=1&size=25"

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.93 Safari/537.36',
    'Host': 'baike.baidu.com',
    # Copy the Cookie value from your own browser's request headers (one line, never publish a real one)
    'Cookie': '<your own Cookie string>',
}

r = requests.get(url, headers=headers)
print(r.status_code)  # HTTP status code of the response
print(r.text)         # raw response body (JSON text)

json_data = json.loads(r.text)    # parse the JSON text into a Python dict
print(json_data)
comment_list = json_data['data']  # the records sit under the 'data' key
print(comment_list)

# The list of edit records is stored under data -> pages -> '3'
comment_list = json_data['data']['pages']['3']
for eachone in comment_list:
    time1 = eachone['auditTime']  # Unix timestamp of the edit
    print(time1)

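The timestamps are retrieved successfully, but they are still not in the form we want, so we convert them to Beijing time.

The code is as follows: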

# coding: utf-8
import requests
import json
import time

# Real data address copied from the Network panel
url = "https://baike.baidu.com/api/wikiui/gethistorylist?tk=10273b583b2d59dc49cbcfc1eb5cf5a3&lemmaId=6141470&from=3&count=1&size=25"

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.93 Safari/537.36',
    'Host': 'baike.baidu.com',
    # Copy the Cookie value from your own browser's request headers (one line, never publish a real one)
    'Cookie': '<your own Cookie string>',
}

r = requests.get(url, headers=headers)
print(r.status_code)  # HTTP status code of the response
print(r.text)         # raw response body (JSON text)

json_data = json.loads(r.text)    # parse the JSON text into a Python dict
print(json_data)
comment_list = json_data['data']  # the records sit under the 'data' key
print(comment_list)

# The list of edit records is stored under data -> pages -> '3'
comment_list = json_data['data']['pages']['3']
for eachone in comment_list:
    time1 = eachone['auditTime']  # Unix timestamp of the edit
    print(time1)
    # time.localtime() follows the machine's timezone, so shift by 8 hours
    # and format as UTC to get Beijing time (UTC+8) on any machine
    time_tuple = time.gmtime(time1 + 8 * 3600)
    bj_time = time.strftime("%Y/%m/%d %H:%M:%S", time_tuple)
    print("Beijing time:", bj_time)
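The conversion to Beijing time can also be written with an explicit UTC+8 offset, which gives the same result whatever timezone the machine is set to. This is a minimal sketch using `datetime`; the function name `to_beijing_time` and the sample timestamps are assumptions for illustration, not part of the scraped data.

```python
from datetime import datetime, timedelta, timezone

# Beijing time is a fixed UTC+8 offset with no daylight saving time
BEIJING = timezone(timedelta(hours=8))


def to_beijing_time(timestamp):
    """Format a Unix timestamp (seconds) as a Beijing-time string."""
    return datetime.fromtimestamp(timestamp, tz=BEIJING).strftime("%Y/%m/%d %H:%M:%S")


print(to_beijing_time(0))           # 1970/01/01 08:00:00
print(to_beijing_time(1618794964))  # 2021/04/19 09:16:04
```

Inside the loop above, `to_beijing_time(time1)` would replace the `time.strftime` step.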

2. Scraping by simulating a browser with Selenium

To be continued.