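Building a Kubernetes Cluster

Introduction to Kubernetes

- K8s was created by Google, a well-known industry giant.

- K8s is a container cluster management system. It is an open-source platform that automates the deployment, scaling, and maintenance of container clusters.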

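When to use K8s

- A large number of containers spread across hosts need to be managed

- Deploying applications quickly

- Scaling applications quickly

- Rolling out new application features seamlessly

- Saving resources by making better use of the hardware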

K8s architecture

- Core roles: master (management node), node (compute node), image (image registry)

[root@ecs-proxy ~]# mkdir /var/ftp/k8s # make sure the FTP service is running

[root@ecs-proxy ~]# mv *.rpm /var/ftp/k8s

[root@ecs-proxy ~]# cd /var/ftp/k8s

[root@ecs-proxy k8s]# ls

[root@ecs-proxy k8s]# createrepo .

[root@ecs-proxy k8s]# vim /etc/yum.repos.d/local.repo

[kubernetes]

name = k8s

baseurl = ftp://192.168.1.252/k8s

enabled=1

gpgcheck=0
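The repository definition above can also be generated from a script, which helps when the same file has to be created on several hosts. The following is a minimal sketch: the function name `make_repo`, the repo id `k8s`, and the scratch file are illustrative assumptions, not part of the original setup.

```shell
# make_repo SERVER: print a yum repo definition for the FTP-hosted k8s packages.
# (Hypothetical helper; the repo id "k8s" is chosen for illustration.)
make_repo() {
    local server="$1"   # FTP server address, e.g. 192.168.1.252
    cat <<EOF
[k8s]
name=k8s
baseurl=ftp://${server}/k8s
enabled=1
gpgcheck=0
EOF
}

# Write to a scratch file; on a real host the target would be a file
# under /etc/yum.repos.d/.
repo_file="$(mktemp)"
make_repo 192.168.1.252 > "$repo_file"
cat "$repo_file"
```

After copying the file into place, `yum repolist` should list the new repository if the FTP server is reachable.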

Log in to the kube-master host (with the yum repository synced) and install etcd

[root@kube-master ~]# yum -y install etcd

[root@kube-master ~]# vim /etc/etcd/etcd.conf

Edit line 6: ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379" # change the listen address to 0.0.0.0
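Editing by line number is fragile if the packaged file differs between versions. As a hedged alternative, the same change can be made by matching the key name with `sed`. The sketch below works on a scratch copy; the sample file content is an assumption standing in for /etc/etcd/etcd.conf.

```shell
# Scratch file standing in for /etc/etcd/etcd.conf (sample content, assumed).
conf="$(mktemp)"
cat > "$conf" <<'EOF'
#[Member]
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_CLIENT_URLS="http://localhost:2379"
ETCD_NAME="default"
EOF

# Replace the value of the key wherever it appears, regardless of line number.
sed -i 's|^ETCD_LISTEN_CLIENT_URLS=.*|ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"|' "$conf"

# Show the result.
grep '^ETCD_LISTEN_CLIENT_URLS=' "$conf"
```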

[root@kube-master ~]# systemctl start etcd

[root@kube-master ~]# etcdctl mk /atomic.io/network/config '{"Network": "10.254.0.0/16","Backend": {"Type": "vxlan"}}'

[root@kube-master ~]# etcdctl ls /

/atomic.io

[root@kube-master ~]# etcdctl ls /atomic.io

/atomic.io/network

[root@kube-master ~]# etcdctl get /atomic.io/network/config

{"Network": "10.254.0.0/16","Backend": {"Type": "vxlan"}}

Prepare the private registry and install docker on every node

(All of the following steps must be performed on every node)

[root@kube-master ~]# vim /etc/sysconfig/docker

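INSECURE_REGISTRY='--insecure-registry 192.168.1.100:5000' # allow unencrypted (HTTP) access to the registry

ADD_REGISTRY='--add-registry 192.168.1.100:5000' # address of the docker registry

When docker starts, it sets the policy of the iptables FORWARD chain to DROP by default. Edit the unit file so that the policy is set back to ACCEPT after docker starts: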

[root@kube-master ~]# vim /usr/lib/systemd/system/docker.service

ExecStartPost=/sbin/iptables -P FORWARD ACCEPT
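Changes made directly to /usr/lib/systemd/system/docker.service can be overwritten when the docker package is updated. One possible alternative, not used in the original notes, is a systemd drop-in file that adds the same `ExecStartPost` line. The sketch below writes the drop-in into a scratch directory; the file name `forward-accept.conf` is an arbitrary choice.

```shell
# UNIT_DIR is a scratch directory here; on a real host it would be
# /etc/systemd/system (assumption: drop-ins are acceptable in your setup).
UNIT_DIR="$(mktemp -d)"
dropin_dir="$UNIT_DIR/docker.service.d"
mkdir -p "$dropin_dir"

# The drop-in only needs the section header and the extra directive.
cat > "$dropin_dir/forward-accept.conf" <<'EOF'
[Service]
ExecStartPost=/sbin/iptables -P FORWARD ACCEPT
EOF

cat "$dropin_dir/forward-accept.conf"
# On a real host, follow up with:
#   systemctl daemon-reload && systemctl restart docker
```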

Write the playbook

[root@kube-master ~]# cat k8s.yml

---
- name: install docker
  hosts: k8s
  tasks:
    - name: Install docker
      yum: name=docker state=installed
    - name: Install the private registry
      yum: name=docker-distribution.x86_64 state=installed
    - name: Start docker
      service: name=docker state=started enabled=yes
    - name: Start the private registry
      service: name=docker-distribution.service state=started enabled=yes
    - name: Copy the docker configuration to the other nodes
      copy: src=/etc/sysconfig/docker dest=/etc/sysconfig/docker
    - name: Copy the docker unit file to the other nodes
      copy:
        src: /usr/lib/systemd/system/docker.service
        dest: /usr/lib/systemd/system/docker.service
        owner: root
        group: root
        mode: '0644'
    - name: Restart docker
      service: name=docker state=restarted
    - name: Set the FORWARD chain policy to ACCEPT
      shell: /sbin/iptables -P FORWARD ACCEPT

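Build the image on the registry host and push it

[root@registry ~]# docker run -it docker.io/centos:latest

Once inside the container, install some commonly used packages. Because these hosts are Huawei Cloud instances, the yum repository configuration inside the container must match the host's.

[root@2s467af2ghta ~]# yum -y install vim bash-completion

[root@registry ~]# docker commit 44a myos:latest # commit the container as a new image

[root@registry ~]# docker push myos:latest # push to the private registry

[root@kube-master ~]# ansible k8s -m shell -a 'curl http://192.168.1.100:5000/v2/_catalog' # verify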
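The catalog endpoint returns a JSON object with a `repositories` array, so the verification can be scripted rather than read by eye. The sketch below checks a response string for a repository name. The helper `has_repo` is hypothetical, and the sample response is an assumption about what the registry would return after the push above.

```shell
# has_repo JSON NAME: succeed when NAME appears as a quoted entry in the JSON.
has_repo() {
    printf '%s' "$1" | grep -qF "\"$2\""
}

# Sample response (assumed). On a real host it could be fetched with:
#   catalog="$(curl -s http://192.168.1.100:5000/v2/_catalog)"
catalog='{"repositories":["myos"]}'

if has_repo "$catalog" myos; then
    echo "found: myos"
else
    echo "missing: myos"
fi
```

This is a plain substring match on the quoted name, which is enough for a quick check; a JSON-aware tool would be more robust.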

Set up the network

(At this point, containers on different hosts cannot reach each other)

# Install on the kube-master host first

[root@kube-master ~]# yum -y install flannel.x86_64

[root@kube-master ~]# vim /etc/sysconfig/flanneld

Edit line 3: FLANNEL_ETCD_ENDPOINTS="http://192.168.1.20:2379" # address of the etcd host

:wq

[root@kube-master ~]# systemctl start flanneld.service

[root@kube-master ~]# systemctl enable flanneld.service

[root@kube-master ~]# ifconfig

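# A flannel interface in the output means it worked

Install flannel on the other 3 nodes and remove the firewall

Note: **docker must be stopped first, then flannel started, and only then docker started again**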

[root@kube-master ~]# vim flannel.yml

---
- name: install flannel
  hosts: k8s
  tasks:
    - name: Remove the firewall
      yum: name=firewalld-* state=removed
    - name: Install flannel
      yum:
        name: flannel.x86_64
        state: installed
    - name: Sync the configuration file
      copy:
        src: /etc/sysconfig/flanneld
        dest: /etc/sysconfig/flanneld
    - name: Enable the flanneld service
      shell: systemctl enable flanneld.service
    - name: Stop docker (docker must be stopped first)
      shell: systemctl stop docker
    - name: Start flanneld
      shell: systemctl start flanneld
    - name: Start docker again
      shell: systemctl start docker

Check on every node that the docker0 subnet matches the subnet assigned by flannel
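This comparison can be scripted. flanneld records the subnet it leased in /run/flannel/subnet.env, and docker0 should carry the same address when docker was started after flanneld. The sketch below compares the two values. It reads a scratch file with sample values (an assumption) instead of the real one, and the helper `bridge_matches` is hypothetical.

```shell
# Scratch file standing in for /run/flannel/subnet.env (sample values, assumed).
env_file="$(mktemp)"
cat > "$env_file" <<'EOF'
FLANNEL_NETWORK=10.254.0.0/16
FLANNEL_SUBNET=10.254.27.1/24
FLANNEL_MTU=1450
EOF

# bridge_matches ENV_FILE BRIDGE_ADDR: succeed when BRIDGE_ADDR (a.b.c.d/len)
# equals the FLANNEL_SUBNET value recorded in ENV_FILE.
bridge_matches() {
    local flannel_subnet
    flannel_subnet="$(sed -n 's/^FLANNEL_SUBNET=//p' "$1")"
    [ "$flannel_subnet" = "$2" ]
}

# On a real node the second argument could come from:
#   ip -4 -o addr show docker0 | awk '{print $4}'
if bridge_matches "$env_file" "10.254.27.1/24"; then
    echo "docker0 matches the flannel subnet"
else
    echo "docker0 does not match; restart docker after flanneld"
fi
```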

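Verification: start a container on each of two different hosts and check that they can reach each other

# Start a container on node1

[root@kube-node1 ~]# docker run -it myos:latest # start the container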

[root@a3ecb66d5849 /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.254.27.2 netmask 255.255.255.0 broadcast 0.0.0.0

# Start a container on node2

[root@kube-node2 ~]# docker run -it myos:latest

[root@248bf48304fa /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.254.55.2 netmask 255.255.255.0 broadcast 0.0.0.0

[root@248bf48304fa /]# ping 10.254.27.2 # different subnets, but the ping succeeds

PING 10.254.27.2 (10.254.27.2) 56(84) bytes of data.

64 bytes from 10.254.27.2: icmp_seq=1 ttl=62 time=1.60 ms

64 bytes from 10.254.27.2: icmp_seq=2 ttl=62 time=0.378 ms

64 bytes from 10.254.27.2: icmp_seq=3 ttl=62 time=0.388 ms
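The two containers sit in different /24 subnets (netmask 255.255.255.0) that both fall inside the 10.254.0.0/16 network stored in etcd, which is why flannel can route between them. The sketch below derives both networks from the container addresses with plain string handling; the helper names `subnet24` and `net16` are illustrative.

```shell
# subnet24 IP: print the /24 network an IPv4 address belongs to.
subnet24() { echo "${1%.*}.0/24"; }

# net16 IP: print the /16 network an IPv4 address belongs to.
net16() { local p="${1%.*}"; echo "${p%.*}.0.0/16"; }

a=10.254.27.2   # container on kube-node1
b=10.254.55.2   # container on kube-node2

echo "$a -> $(subnet24 "$a") in $(net16 "$a")"
echo "$b -> $(subnet24 "$b") in $(net16 "$b")"
```

The per-host /24 values differ while the /16 is shared, matching the ifconfig output on the two nodes.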

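Deploying the kube-master node

What is kube-master

- The master components provide control over the cluster

- They make global decisions for the cluster

- They detect and respond to cluster events

- The master mainly consists of the api-server, kube-scheduler, controller-manager, and etcd services

Configuring kube-master

[root@kube-master ~]# yum -y install kubernetes-master # install the master services

[root@kube-master ~]# yum -y install kubernetes-client # install the client tools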

[root@kube-master ~]# cd /etc/kubernetes/

[root@kube-master kubernetes]# vim config # edit the global configuration file

Edit line 22: KUBE_MASTER="--master=http://192.168.1.20:8080" # address of the master

[root@kube-master kubernetes]# vim apiserver

Edit line 8: KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0" # listen address of the api-server

Edit line 17: KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.1.20:2379" # address of the etcd host

Edit line 22: remove the ServiceAccount keyword
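Removing the keyword can also be done with `sed` instead of by hand. The sketch below assumes the keyword sits in a comma-separated list on a `KUBE_ADMISSION_CONTROL` line; the sample line is an assumption, and the actual list in the packaged apiserver file may differ.

```shell
# Scratch file standing in for /etc/kubernetes/apiserver (sample line, assumed).
conf="$(mktemp)"
cat > "$conf" <<'EOF'
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota"
EOF

# Drop the keyword together with one adjacent comma, whether it appears
# in the middle of the list or at the end.
sed -i -e '/^KUBE_ADMISSION_CONTROL=/ s/ServiceAccount,//' \
       -e '/^KUBE_ADMISSION_CONTROL=/ s/,ServiceAccount//' "$conf"

cat "$conf"
```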

Hostname resolution

So that the master can identify every kube-node, configure the /etc/hosts file

[root@kube-master kubernetes]# vim /etc/hosts

192.168.1.20 kube-master

192.168.1.21 kube-node1

192.168.1.100 registry

192.168.1.22 kube-node2

192.168.1.23 kube-node3

Start the services and verify

[root@kube-master ~]# systemctl start kube-apiserver.service kube-controller-manager.service kube-scheduler.service

[root@kube-master ~]# systemctl enable kube-apiserver.service kube-scheduler.service kube-controller-manager.service

[root@kube-master ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

etcd-0 Healthy {"health":"true"}

scheduler Healthy ok

controller-manager Healthy ok

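Deploying the kube-node nodes

- What is kube-node

- kube-node is a compute node, the node where containers actually run

- As a compute component, it scales horizontally and runs on multiple nodes

- It maintains the running Pods and provides the Kubernetes runtime environment

- kube-node consists of kubelet, kube-proxy, and docker

Installing kubernetes-node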

[root@kube-node1 ~]# yum -y install kubernetes-node

[root@kube-node1 ~]# vim /etc/kubernetes/config # edit the global configuration file

Edit line 22: KUBE_MASTER="--master=http://192.168.1.20:8080" # location of the master node

[root@kube-node1 ~]# vim /etc/kubernetes/kubelet

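KUBELET_ADDRESS="--address=0.0.0.0" # address kubelet listens on

KUBELET_HOSTNAME="--hostname-override=kube-node1" # hostname of the current node

KUBELET_ARGS="--cgroup-driver=systemd --fail-swap-on=false --kubeconfig=/etc/kubernetes/kubelet.kubeconfig --pod-infra-container-image=pod-infrastructure:latest"

# KUBELET_ARGS must be written on a single line in the configuration file

# --kubeconfig: the file at this path has to be created

# --pod-infra-container-image: this image must be pullable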

Generate the configuration file

[root@kube-node1 ~]# kubectl config set-cluster local --server="http://192.168.1.20:8080"

[root@kube-node1 ~]# kubectl config set-context --cluster="local" local

[root@kube-node1 ~]# kubectl config set current-context local

[root@kube-node1 ~]# touch /etc/kubernetes/kubelet.kubeconfig # tells the client how to reach the cluster

[root@kube-node1 ~]# cat /root/.kube/config # write this content into /etc/kubernetes/kubelet.kubeconfig

apiVersion: v1
clusters:
- cluster:
    server: http://192.168.1.20:8080
  name: local
contexts:
- context:
    cluster: local
    user: ""
  name: local
current-context: local
kind: Config
preferences: {}
users: []
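Since the same kubeconfig is needed on every node, it can be convenient to write it from a small script instead of copying it by hand. The following is a sketch only: the function name `write_kubeconfig` is hypothetical, and the output goes to a scratch file rather than /etc/kubernetes/kubelet.kubeconfig.

```shell
# write_kubeconfig URL FILE: write a minimal kubeconfig that points at URL.
write_kubeconfig() {
    local server="$1" out="$2"
    cat > "$out" <<EOF
apiVersion: v1
kind: Config
clusters:
- cluster:
    server: ${server}
  name: local
contexts:
- context:
    cluster: local
    user: ""
  name: local
current-context: local
preferences: {}
users: []
EOF
}

# Scratch output; on a node the target would be
# /etc/kubernetes/kubelet.kubeconfig.
out="$(mktemp)"
write_kubeconfig "http://192.168.1.20:8080" "$out"
cat "$out"
```

On a node where `kubectl config` has already produced /root/.kube/config, copying that file to the kubelet path achieves the same result.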

Push the pod-infrastructure image to the private registry (on 192.168.1.100)

[root@registry ~]# docker load -i pod-infrastructure.tar

[root@registry ~]# docker push pod-infrastructure # push

Pull it on node1

[root@kube-node1 ~]# docker pull pod-infrastructure:latest

Configure the other two nodes

The steps are the same; the only difference is the hostname in the configuration file.
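Because only the hostname changes, the per-node kubelet files can be derived from the kube-node1 version. The sketch below does this with `sed` on a scratch copy; the sample file content and the output file names are assumptions made for illustration.

```shell
# Scratch file standing in for /etc/kubernetes/kubelet on kube-node1 (assumed).
template="$(mktemp)"
cat > "$template" <<'EOF'
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_HOSTNAME="--hostname-override=kube-node1"
EOF

# Produce one variant per remaining node, changing only the hostname line.
outdir="$(mktemp -d)"
for node in kube-node2 kube-node3; do
    sed "s|^KUBELET_HOSTNAME=.*|KUBELET_HOSTNAME=\"--hostname-override=${node}\"|" \
        "$template" > "$outdir/kubelet.${node}"
    grep '^KUBELET_HOSTNAME=' "$outdir/kubelet.${node}"
done
```

Each generated file would then be copied to /etc/kubernetes/kubelet on the matching node.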

[root@kube-master ~]# kubectl get node # verify the three nodes

NAME STATUS ROLES AGE VERSION

kube-node1 Ready <none> 20m v1.10.3

kube-node2 Ready <none> 7m v1.10.3

kube-node3 Ready <none> 46s v1.10.3

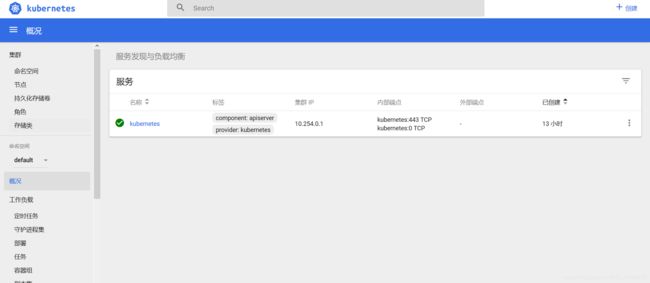

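Installing the dashboard

The dashboard is a web-based user interface for Kubernetes.

You can use the Dashboard to deploy containerized applications to a Kubernetes cluster, troubleshoot them, and manage cluster resources.

Push the dashboard image to the private registry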

[root@registry ~]# lftp 192.168.1.252

lftp 192.168.1.252:~> cd pub

lftp 192.168.1.252:/pub> get kubernetes-dashboard.tar # download the dashboard image

102800384 bytes transferred

lftp 192.168.1.252:/pub> exit

[root@registry ~]# docker load -i kubernetes-dashboard.tar

[root@registry ~]# docker push kubernetes-dashboard-amd64 # push the image to the registry

[root@registry ~]# curl http://192.168.1.100:5000/v2/_catalog

{"repositories":["kubernetes-dashboard-amd64","myos","pod-infrastructure"]}

[root@registry ~]# curl http://192.168.1.100:5000/v2/kubernetes-dashboard-amd64/tags/list

{"name":"kubernetes-dashboard-amd64","tags":["v1.8.3"]}

Create the dashboard (on the master)

[root@kube-master ~]# lftp 192.168.1.252

lftp 192.168.1.252:~> cd pub

cd ok, cwd=/pub

lftp 192.168.1.252:/pub> get kube

kube-dashboard.yaml kubernetes-dashboard.tar

lftp 192.168.1.252:/pub> get kube-dashboard.yaml # download the manifest

1438 bytes transferred

lftp 192.168.1.252:/pub> exit

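[root@kube-master ~]# kubectl create -f kube-dashboard.yaml # start the dashboard

[root@kube-master ~]# curl http://192.168.1.21:30090 # any node works; once HTML comes back, test in a browser

From a browser on the physical machine, open port 30090 on any node to reach the dashboard (seeing the page means it worked)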

http://192.168.1.21:30090

Introduction to kubectl

- kubectl is the command-line tool for controlling a Kubernetes cluster

[root@kube-master ~]# kubectl run test1 -i -t --image=192.168.1.100:5000/myos:latest # create a container

[root@test1-67b44cc6bf-ntnt8 /]# exit # leave the container

[root@kube-master ~]# kubectl get pod # list pods

NAME READY STATUS RESTARTS AGE

test1-67b44cc6bf-ntnt8 1/1 Running 1 16m

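[root@kube-master ~]# kubectl exec -it test1-67b44cc6bf-ntnt8 /bin/bash # enter the container

[root@kube-master ~]# kubectl attach test1-67b44cc6bf-ntnt8 -c test1 -i -t # attach to the container's main process; -c selects the container by name

[root@kube-master ~]# kubectl logs test1-67b44cc6bf-ntnt8 # show the container's log output (for this interactive shell, the commands that were typed)

kubectl delete

- Deletes resources

- Syntax: kubectl delete RESOURCE_TYPE RESOURCE_NAME

[root@kube-master ~]# kubectl delete pod test1-67b44cc6bf-ntnt8 # delete the pod (it does not stay deleted)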

pod "test1-67b44cc6bf-ntnt8" deleted

[root@kube-master ~]# kubectl get pod # an identical replacement pod is started

NAME READY STATUS RESTARTS AGE

test1-67b44cc6bf-ntnt8 1/1 Terminating 2 28m

test1-67b44cc6bf-sdn8r 1/1 Running 0 5s

To remove it for good, delete the resource (the deployment) that owns the pod

[root@kube-master ~]# kubectl get deployment # list the deployments

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

test1 1 1 1 1 29m

[root@kube-master ~]# kubectl delete deployment test1 # delete the deployment test1

deployment.extensions "test1" deleted

[root@kube-master ~]# kubectl get deployment # check again

No resources found.

Summary of kubectl management commands (managing Kubernetes)

Building a container image

[root@registry ~]# mkdir apache && cd apache

[root@registry apache]# vim dockerfile

FROM myos:latest

RUN yum -y install httpd php

ENV LANG=C

ADD webhome.tar.gz /var/www/html

WORKDIR /var/www/html

EXPOSE 80

CMD ["httpd","-DFOREGROUND"]

[root@registry apache]# docker build -t myos:httpd . # build the image

[root@registry apache]# docker images

[root@registry apache]# docker push myos:httpd # push to the private registry

Now switch to kube-node1

[root@kube-master ~]# kubectl run -r 2 apache --image=192.168.1.100:5000/myos:httpd # -r 2 sets the replica count to 2 (two containers are started); apache is the resource name; --image= specifies the image

[root@kube-node1 ~]# kubectl get deployment # verify

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

apache 2 2 2 2 3s

[root@kube-node1 ~]# kubectl get pod -o wide # show detailed information

NAME READY STATUS RESTARTS AGE IP NODE

apache-df885559b-57nxv 1/1 Running 0 9s 10.254.81.3 kube-node3

apache-df885559b-ccd65 1/1 Running 0 9s 10.254.27.2 kube-node1

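Services

- A service creates a cluster IP that fronts the resource. However the pods change, the service can always find the matching pods, and the cluster IP stays the same. If the resource is backed by multiple pods, the service automatically load-balances across them.

[root@kube-master ~]# kubectl expose deployment apache --port=80 --target-port=80 --name=my-httpd

[root@kube-master ~]# kubectl get service # this IP is reachable only from inside the cluster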

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.254.0.1 <none> 443/TCP 1d

my-httpd ClusterIP 10.254.42.155 <none> 80/TCP 7s

[root@kube-master ~]# kubectl run test -i -t --image=192.168.1.100:5000/myos:latest # start a test container

[root@test-69bf8fdc5-tk4p8 /]# curl 10.254.42.155 # access the service from inside the container

[root@kube-master ~]# kubectl exec -i -t apache-df885559b-98hfx /bin/bash

[root@apache-df885559b-98hfx html]# echo hello world > /var/www/html/index.html

[root@kube-master ~]# kubectl exec -i -t apache-df885559b-dwjfm /bin/bash

[root@apache-df885559b-dwjfm html]# echo hello kubernetes > /var/www/html/index.html

[root@kube-master ~]# kubectl exec -i -t test-69bf8fdc5-tk4p8 /bin/bash

[root@test-69bf8fdc5-tk4p8 /]# curl 10.254.42.155

hello world

[root@test-69bf8fdc5-tk4p8 /]# curl 10.254.42.155

hello kubernetes