A Qt-Based Video Surveillance System on Linux

目录

一、原始需求

二、环境安装

2.1 qt安装

2.2 opencv安装

三、系统设计

3.1、 整体流程设计

3.2 、数据传输交互流程

3.3 、数据库设计

四、关键代码

4.1、如何实现通信(TCP)

4.1.1 服务端

4.1.2 客户端

4.2、如何实现视频读取(V4L2)

4.3、如何实现图像处理(opencv)

4.4 登录验证

五、实现效果

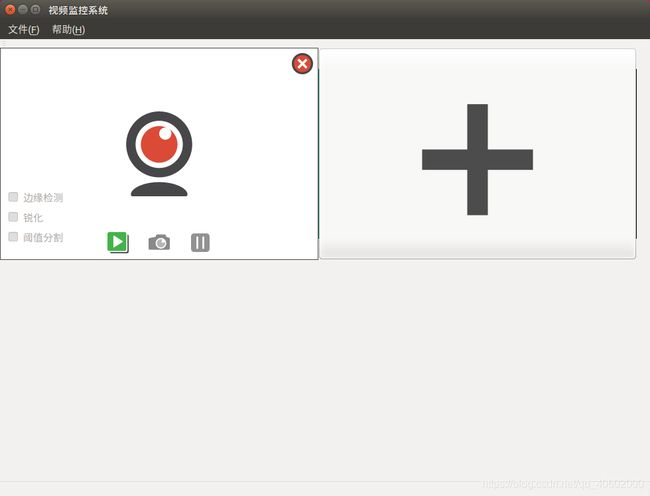

5.1、服务端GUI

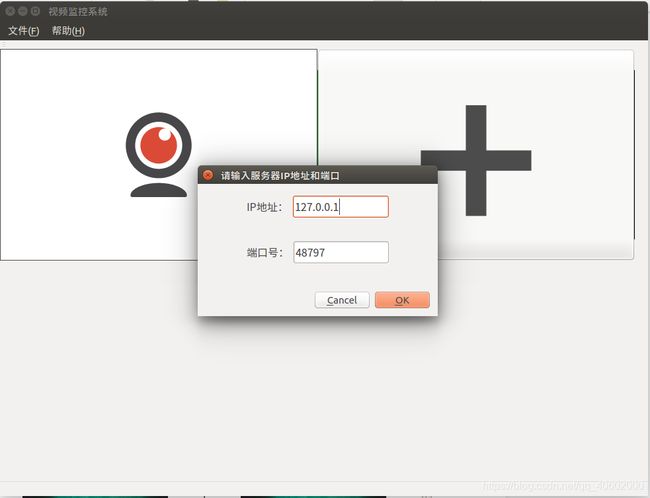

5.2、客户端GUI

六、参考文献

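1. Original Requirements

A Qt-based video surveillance system on Linux.

- Server: captures video with V4L2 and transmits it; the server and the client communicate over TCP

- Client: implemented with Qt

Features:

1. Registration and login

2. Pause

3. Screenshot

4. Save video

5. Timestamp overlay on the video

6. Edge detection, sharpening, and threshold segmentation on the video

7. Simultaneous monitoring of two or more cameras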

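2. Environment Setup

My development environment: Ubuntu 16.04, Qt 5.6.3, OpenCV 3.4.

2.1 Installing Qt

https://download.qt.io/new_archive/qt/5.6/5.6.3/

Download the Linux version.

Make the installer executable and run it from a terminal.

Then click through the installer, accepting the defaults.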

2.2 Installing OpenCV

Install cmake:

sudo apt-get install cmake

Install the dependencies:

sudo apt-get install build-essential libgtk2.0-dev libavcodec-dev libavformat-dev libjpeg-dev libswscale-dev libtiff5-dev

sudo apt-get install libgtk2.0-dev

sudo apt-get install pkg-config

Download OpenCV 3.4.

Download page: https://opencv.org/releases/. Click Sources to download the version you need.

After downloading, extract the archive, enter the extracted directory, and open a terminal there:

mkdir build

cd build

sudo cmake -D CMAKE_BUILD_TYPE=Release -D CMAKE_INSTALL_PREFIX=/usr/local ..

sudo make -j4

sudo make install

All dependencies are now installed.

3. System Design

3.1 Overall Flow Design

3.2 Data Transfer Interaction Flow
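Judging from the code in Section 4.1, the exchange is a simple stop-and-wait scheme: the server sends a text header `size=N`, waits for the client to answer `ok`, sends the N bytes of the JPEG image, and waits for another `ok` before sending the next frame. The receiving side of that exchange can be sketched in plain C++ as below. `FrameAssembler` and its members are hypothetical names introduced only for illustration; the project itself does this inline with `QTcpSocket` and `QByteArray`, and this sketch is slightly stricter than the original (it matches the header as a prefix and tolerates a payload split over several chunks).

```cpp
#include <cstddef>
#include <cstdlib>
#include <string>
#include <vector>

// Hypothetical helper (not part of the original project): collects the
// chunks of one frame and reports when the receiver should answer "ok".
class FrameAssembler {
public:
    // Feed one chunk as it arrives from the socket.
    // Returns true when the receiver should reply "ok" to the sender.
    bool feed(const std::string &chunk) {
        const std::string prefix = "size=";
        if (chunk.compare(0, prefix.size(), prefix) == 0) {
            // Header message: remember the announced payload size.
            expected_ = static_cast<std::size_t>(
                std::strtoul(chunk.c_str() + prefix.size(), nullptr, 10));
            payload_.clear();
            haveHeader_ = true;
            return true; // acknowledge the header
        }
        if (!haveHeader_) {
            return false; // payload without a preceding header: ignore it
        }
        payload_ += chunk; // TCP may deliver the payload in several pieces
        if (payload_.size() >= expected_) {
            frames_.push_back(payload_.substr(0, expected_));
            payload_.clear();
            haveHeader_ = false;
            return true; // acknowledge the complete frame
        }
        return false; // still waiting for more bytes
    }

    // All frames completed so far, oldest first.
    const std::vector<std::string> &frames() const { return frames_; }

private:
    std::size_t expected_ = 0;
    bool haveHeader_ = false;
    std::string payload_;
    std::vector<std::string> frames_;
};
```

Each `true` returned by `feed` corresponds to one `ok` written back to the server, which is what lets the server's `waitForReadyRead()` calls return.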

3.3 Database Design

A single user table that stores the registration information is sufficient.

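4. Key Code

4.1 Communication (TCP)

4.1.1 Server

Declare a server pointer and a socket pointer in the header file:

QTcpServer *tcpserver;
QTcpSocket *tcpsocket;

Instantiate the server and listen on QHostAddress::Any with a fixed port number. The client must use the same port as the server for communication to succeed.

tcpserver = new QTcpServer(this);
tcpserver->listen(QHostAddress::Any, 48797);

When a new connection arrives, the newConnection() signal is routed to a slot that handles the connecting client:

connect(tcpserver, SIGNAL(newConnection()), this,
        SLOT(slotTcpNewConnection()));

void MainWindow::slotTcpNewConnection() {
    qDebug("NEW CONNECTION");
    tcpsocket = tcpserver->nextPendingConnection(); // get the socket of the pending connection
    /* get the peer's IP address and port */
    QString ip = tcpsocket->peerAddress().toString();
    quint16 port = tcpsocket->peerPort();
    QString str = QString("[%1:%2] connected").arg(ip).arg(port);
    ui->textBrowser->append(str); /* show it in the log area */
    // once connected, route incoming data to the receive slot
    connect(tcpsocket, SIGNAL(readyRead()), this, SLOT(slotTcpReadyRead()));
    // route the connected socket's disconnected signal to slotDisconnect
    connect(tcpsocket, SIGNAL(disconnected()), this, SLOT(slotDisconnect()));
}

When a message arrives from the client, the camera capture thread is started: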

void MainWindow::slotTcpReadyRead() {
    QByteArray recvbuf = tcpsocket->readAll();
    qDebug() << "recv:" << recvbuf;
    vapi.start();
}

V4l2Api is a thread. Once started, it emits the sendImage signal, which is connected to slotSendImage:

V4l2Api vapi;

connect(&vapi, &V4l2Api::sendImage, this, &MainWindow::slotSendImage);

void MainWindow::slotSendImage(QImage image) {
    qDebug("send image:");
    QImage tempimage = image;
    tempimage =
        tempimage.scaled(800, 480, Qt::KeepAspectRatio, Qt::FastTransformation)
            .scaled(400, 240, Qt::KeepAspectRatio, Qt::SmoothTransformation);
    QPixmap pixmap =
        QPixmap::fromImage(tempimage); // convert the image to a pixmap so it can be saved as JPEG
    QByteArray ba;
    QBuffer buf(&ba); // bind ba to buf; writing to buf writes into ba
    pixmap.save(&buf, "jpg", 50); // encode the pixmap as JPEG (quality 50) into buf
    // send the size first so the peer knows how many bytes to expect
    tcpsocket->write(QString("size=%1").arg(ba.size()).toLocal8Bit().data());
    tcpsocket->waitForReadyRead(); // wait for the peer to reply "ok"
    tcpsocket->write(ba); // send the image data to the peer
    tcpsocket->waitForReadyRead();
}

4.1.2 Client

Receiving is wrapped in a thread by overriding run():

void RecvThread::run() {
    isRunning = true;
    QTcpSocket tcpsocket;
    connect(&tcpsocket, SIGNAL(disconnected()), this, SIGNAL(disconnectSlot()));
    tcpsocket.connectToHost(ip, port);
    if (!tcpsocket.waitForConnected(3000)) {
        qDebug() << "Connection failed:" << tcpsocket.errorString();
        emit disconnectSlot();
        return;
    } else {
        qDebug() << "Connected!";
    }
    // send an initial request so that the server starts streaming
    tcpsocket.write("\r\n\r\n");
    if (tcpsocket.waitForBytesWritten(3000)) {
        qDebug() << "Request sent!";
    } else {
        qDebug() << "Failed to send request!";
        return;
    }
    cv::VideoWriter videowriter; // video recording
    int recvtimes = 0; // number of receives
    QByteArray jpgArr; // buffer for the image being received
    int totalsize = -1; // announced image size; -1 until a size header arrives
    while (isRunning) {
        if (tcpsocket.waitForReadyRead()) {
            QByteArray buf = tcpsocket.readAll();
            qDebug() << "recv buf: " << buf << "\n";
            if (buf.contains("size=")) {
                buf = buf.replace("size=", "");
                totalsize = buf.toInt();
                jpgArr.clear();
                tcpsocket.write("ok"); // acknowledge the size header to the server
                tcpsocket.waitForBytesWritten(); // wait until the data is written
            } else {
                // not a size header, so it is image data: append it to the buffer
                jpgArr.append(buf);
            }
            if (jpgArr.length() == totalsize) {
                QImage img_qt;
                img_qt.loadFromData(jpgArr, "JPG");
                cv::Mat src_img_cv; // source image
                src_img_cv = Util::QImage2cvMat(img_qt); // QImage to Mat
                ... other image processing
                img_qt = Util::cvMat2QImage(src_img_cv); // Mat to QImage
                emit transmitData(img_qt);
                jpgArr.clear();
                tcpsocket.write("ok");
            }
        }
    }
}

4.2 Video Capture (V4L2)

V4L2 is wrapped in a thread, with conversion routines from YUYV and JPEG to RGB.
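To make the YUYV case concrete, here is a minimal standalone sketch of the conversion, separate from the class listed below. It assumes the full-range BT.601 (JFIF) equations R = Y + 1.402(V-128), G = Y - 0.344136(U-128) - 0.714136(V-128), B = Y + 1.772(U-128), an even image width, and rows with no padding. The names `yuyvToRgb` and `clampToByte` are hypothetical and do not appear in the project; unlike the project's routine, the output here is top-down and in R, G, B order.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Clamp a floating-point channel value into the 0..255 range.
static std::uint8_t clampToByte(double v) {
    return static_cast<std::uint8_t>(std::min(255.0, std::max(0.0, v)));
}

// Hypothetical standalone helper: converts packed YUYV (bytes Y0 U Y1 V for
// every two pixels) to tightly packed RGB, top row first.
std::vector<std::uint8_t> yuyvToRgb(const std::vector<std::uint8_t> &yuyv,
                                    int width, int height) {
    std::vector<std::uint8_t> rgb(static_cast<std::size_t>(width) * height * 3);
    const std::size_t pairs = static_cast<std::size_t>(width) * height / 2;
    for (std::size_t p = 0; p < pairs; ++p) {
        const double y0 = yuyv[p * 4 + 0];
        const double u  = yuyv[p * 4 + 1] - 128.0; // shared by both pixels
        const double y1 = yuyv[p * 4 + 2];
        const double v  = yuyv[p * 4 + 3] - 128.0; // shared by both pixels
        const double ys[2] = {y0, y1};
        for (int k = 0; k < 2; ++k) {
            std::uint8_t *out = &rgb[(p * 2 + k) * 3];
            out[0] = clampToByte(ys[k] + 1.402 * v);                   // R
            out[1] = clampToByte(ys[k] - 0.344136 * u - 0.714136 * v); // G
            out[2] = clampToByte(ys[k] + 1.772 * u);                   // B
        }
    }
    return rgb;
}
```

With neutral chroma (U = V = 128) every pixel comes out gray with R = G = B = Y, which is a convenient sanity check when wiring up a new camera.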

v4l2api.h

#ifndef V4L2API_H
#define V4L2API_H

// headers required by the declarations here and by v4l2api.cpp
#include <fcntl.h>
#include <linux/videodev2.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <unistd.h>
#include <jpeglib.h>
#include <QImage>
#include <QThread>
#include <exception>
#include <string>
#include <vector>

using namespace std;

const int WIDTH = 640;
const int HEIGHT = 480;

// exception class
class VideoException : public exception {
public:
    VideoException(string err) : errStr(err) {}
    ~VideoException() {}
    const char *what() const noexcept { return errStr.c_str(); }

private:
    string errStr;
};

struct VideoFrame {
    char *start; // start address of the kernel buffer mapped into user space
    int length;  // length of the mapping
};

// V4L2 wrapped in a thread
class V4l2Api : public QThread {
    Q_OBJECT
public:
    V4l2Api(const char *dname = "/dev/video0", int count = 4);
    ~V4l2Api();
    /**
     * @brief open opens the camera
     */
    void open();
    /**
     * @brief close closes the camera
     */
    void close();
    /**
     * @brief grapImage grabs one frame
     * @param imageBuffer destination buffer for the frame data
     * @param length receives the number of bytes
     */
    void grapImage(char *imageBuffer, int *length);
    /**
     * @brief yuyv_to_rgb888 YUYV to RGB conversion
     * @param yuyvdata source YUYV data
     * @param rgbdata destination RGB buffer
     * @param picw image width
     * @param pich image height
     * @return false if either pointer is null, true otherwise
     */
    bool yuyv_to_rgb888(unsigned char *yuyvdata, unsigned char *rgbdata,
                        int picw = WIDTH, int pich = HEIGHT);
    /**
     * @brief jpeg_to_rgb888 JPEG to RGB conversion
     * @param jpegData source JPEG data
     * @param size size of the JPEG data in bytes
     * @param rgbdata destination RGB buffer
     */
    void jpeg_to_rgb888(unsigned char *jpegData, int size,
                        unsigned char *rgbdata);
    inline void setRunning(bool running);
    /**
     * @brief run thread entry point
     */
    void run();

private:
    /**
     * @brief video_init opens the device and configures the capture format
     */
    void video_init();
    /**
     * @brief video_mmap requests the buffer queue and maps it into user space
     */
    void video_mmap();

private:
    string deviceName; // camera device name
    int vfd;           // file descriptor of the device
    int count;         // number of buffers
    vector<VideoFrame> framebuffers;
    volatile bool isRunning; // whether the capture loop is running

signals:
    /**
     * @brief sendImage emits a captured image
     */
    void sendImage(QImage);
};

void V4l2Api::setRunning(bool running) { this->isRunning = running; }

#endif // V4L2API_H

v4l2api.cpp

#include "v4l2api.h"

#include <QDebug>
#include <QImage>
#include <cstring>

V4l2Api::V4l2Api(const char *dname, int count)
    : deviceName(dname), count(count) {
    this->open();
}

V4l2Api::~V4l2Api() { this->close(); }

void V4l2Api::open() {
    video_init();
    video_mmap();
#if 1
    // start streaming
    enum v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    int ret = ioctl(this->vfd, VIDIOC_STREAMON, &type);
    if (ret < 0) {
        perror("start fail");
    }
#endif
}

void V4l2Api::close() {
    enum v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    int ret = ioctl(this->vfd, VIDIOC_STREAMOFF, &type);
    if (ret < 0) {
        perror("stop fail");
    }
    // release the mappings
    for (size_t i = 0; i < this->framebuffers.size(); i++) {
        munmap(framebuffers.at(i).start, framebuffers.at(i).length);
    }
    framebuffers.clear();
    // close the device so the file descriptor is not leaked
    ::close(this->vfd);
}

void V4l2Api::grapImage(char *imageBuffer, int *length) {
    // select (rfds, wfds, efds, time)
    struct v4l2_buffer readbuf;
    memset(&readbuf, 0, sizeof(readbuf)); // unused fields must be zero
    readbuf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    readbuf.memory = V4L2_MEMORY_MMAP;
    // perror("read");
    if (ioctl(this->vfd, VIDIOC_DQBUF, &readbuf) < 0) // dequeue one frame
    {
        perror("read image fail");
    }
    printf("%u\n", readbuf.bytesused);
    // bytesused is the size of the captured frame; length is the size of the whole buffer
    *length = readbuf.bytesused;
    memcpy(imageBuffer, framebuffers[readbuf.index].start, readbuf.bytesused);
    // put the buffer back into the queue so it can be reused
    if (ioctl(vfd, VIDIOC_QBUF, &readbuf) < 0) {
        perror("destroy fail");
        exit(1);
    }
}

void V4l2Api::video_init() {
    // 1. open the device
    this->vfd = ::open(deviceName.c_str(), O_RDWR);
    if (this->vfd < 0) {
        perror("open fail");
        VideoException vexp("open fail"); // create the exception object
        // throw it
        throw vexp;
    }
    // 2. configure the capture format
    struct v4l2_format vfmt;
    memset(&vfmt, 0, sizeof(vfmt));
    vfmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    vfmt.fmt.pix.width = WIDTH;
    vfmt.fmt.pix.height = HEIGHT;
    vfmt.fmt.pix.pixelformat =
        V4L2_PIX_FMT_JPEG; // requested output format; the camera must support it
    // write the settings to the device via ioctl
    int ret = ioctl(this->vfd, VIDIOC_S_FMT, &vfmt);
    if (ret < 0) {
        perror("set fail");
        // VideoException vexp("set fail"); // create the exception object
        // throw vexp;
    }
    // read the settings back from the device via ioctl
    memset(&vfmt, 0, sizeof(vfmt));
    vfmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    ret = ioctl(this->vfd, VIDIOC_G_FMT, &vfmt);
    if (ret < 0) {
        perror("get fail");
        // VideoException vexp("get fail"); // create the exception object
        // throw vexp;
    }
    if (vfmt.fmt.pix.width == WIDTH && vfmt.fmt.pix.height == HEIGHT &&
        vfmt.fmt.pix.pixelformat == V4L2_PIX_FMT_JPEG) {
    } else {
        // VideoException vexp("set error 2"); // create the exception object
        // throw vexp;
    }
}

void V4l2Api::video_mmap() {
    // 1. request the buffer queue
    struct v4l2_requestbuffers reqbuffer;
    memset(&reqbuffer, 0, sizeof(reqbuffer));
    reqbuffer.count = this->count; // number of buffers to request
    reqbuffer.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    reqbuffer.memory = V4L2_MEMORY_MMAP;
    int ret = ioctl(this->vfd, VIDIOC_REQBUFS, &reqbuffer);
    if (ret < 0) {
        perror("req buffer fail");
        // VideoException vexp("req buffer fail"); // create the exception object
        // throw vexp;
    }
    // 2. map the buffers
    for (int i = 0; i < this->count; i++) {
        struct VideoFrame frame;
        struct v4l2_buffer mapbuffer;
        memset(&mapbuffer, 0, sizeof(mapbuffer));
        mapbuffer.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
        mapbuffer.index = i;
        mapbuffer.memory = V4L2_MEMORY_MMAP;
        // query the kernel buffer at this index
        ret = ioctl(this->vfd, VIDIOC_QUERYBUF, &mapbuffer);
        if (ret < 0) {
            perror("query fail");
        }
        // map it into user space
        frame.length = mapbuffer.length;
        frame.start = (char *)mmap(NULL, mapbuffer.length, PROT_READ | PROT_WRITE,
                                   MAP_SHARED, this->vfd, mapbuffer.m.offset);
        // hand the buffer back to the kernel queue
        ret = ioctl(this->vfd, VIDIOC_QBUF, &mapbuffer);
        // add the frame to the framebuffers container
        framebuffers.push_back(frame);
    }
}

bool V4l2Api::yuyv_to_rgb888(unsigned char *yuyvdata, unsigned char *rgbdata,
                             int picw, int pich) {
    int i, j;
    unsigned char y1, y2, u, v;
    int r1, g1, b1, r2, g2, b2;
    // make sure the source data and the destination address are valid
    if (yuyvdata == NULL || rgbdata == NULL) {
        return false;
    }
    // clamp a channel value to the range 0..255
    auto clamp255 = [](int c) { return c > 255 ? 255 : (c < 0 ? 0 : c); };
    int tmpw = picw / 2;
    for (i = 0; i < pich; i++) {
        for (j = 0; j < tmpw; j++) // 640/2 == 320
        {
            // yuv422, full-range conversion:
            // R = Y + 1.402*(V-128);
            // G = Y - 0.34414*(U-128) - 0.71414*(V-128);
            // B = Y + 1.772*(U-128);
            // four pixels:          [Y0 U0 V0] [Y1 U1 V1] ... [Y2 U2 V2] [Y3 U3 V3]
            // stored byte stream:   Y0 U0 Y1 V1 ... Y2 U2 Y3 V3
            // reconstructed pixels: [Y0 U0 V1] [Y1 U0 V1] ... [Y2 U2 V3] [Y3 U2 V3]
            // fetch the YUYV data of one pixel pair
            y1 = *(yuyvdata + (i * tmpw + j) * 4);     // Y of the first pixel
            u = *(yuyvdata + (i * tmpw + j) * 4 + 1);  // U shared by both pixels
            y2 = *(yuyvdata + (i * tmpw + j) * 4 + 2); // Y of the second pixel
            v = *(yuyvdata + (i * tmpw + j) * 4 + 3);  // V shared by both pixels
            // convert the YUYV data to RGB
            r1 = clamp255(y1 + 1.402 * (v - 128));
            g1 = clamp255(y1 - 0.34414 * (u - 128) - 0.71414 * (v - 128));
            b1 = clamp255(y1 + 1.772 * (u - 128));
            r2 = clamp255(y2 + 1.402 * (v - 128));
            g2 = clamp255(y2 - 0.34414 * (u - 128) - 0.71414 * (v - 128));
            b2 = clamp255(y2 + 1.772 * (u - 128));
            // store the values in the output buffer; rows are written bottom-up
            // and the channels in B, G, R order
            rgbdata[((pich - 1 - i) * tmpw + j) * 6] = (unsigned char)b1;
            rgbdata[((pich - 1 - i) * tmpw + j) * 6 + 1] = (unsigned char)g1;
            rgbdata[((pich - 1 - i) * tmpw + j) * 6 + 2] = (unsigned char)r1;
            rgbdata[((pich - 1 - i) * tmpw + j) * 6 + 3] = (unsigned char)b2;
            rgbdata[((pich - 1 - i) * tmpw + j) * 6 + 4] = (unsigned char)g2;
            rgbdata[((pich - 1 - i) * tmpw + j) * 6 + 5] = (unsigned char)r2;
        }
    }
    return true;
}

void V4l2Api::jpeg_to_rgb888(unsigned char *jpegData, int size,
                             unsigned char *rgbdata) {
    // decode a JPEG image
    // 1. declare the decoder object (struct jpeg_decompress_struct) and the
    //    error handler object (struct jpeg_error_mgr)
    struct jpeg_decompress_struct cinfo;
    struct jpeg_error_mgr err;
    // 2. initialise error handling with jpeg_std_error() and create the
    //    decoder with jpeg_create_decompress()
    cinfo.err = jpeg_std_error(&err);
    jpeg_create_decompress(&cinfo);
    // 3. set the source data with jpeg_mem_src()
    jpeg_mem_src(&cinfo, jpegData, size);
    // 4. read the JPEG header
    jpeg_read_header(&cinfo, true);
    // 5. start decoding
    jpeg_start_decompress(&cinfo);
    // 6. allocate space for one row of RGB pixels
    // 640 is cinfo.output_width, 480 is cinfo.output_height
    char *rowFrame = (char *)malloc(cinfo.output_width * 3);
    int pos = 0;
    // 7. read row by row (one row per call, until all rows are read)
    while (cinfo.output_scanline < cinfo.output_height) {
        // decode one row
        jpeg_read_scanlines(&cinfo, (JSAMPARRAY)&rowFrame, 1);
        // copy the decoded row into the output buffer
        memcpy(rgbdata + pos, rowFrame, cinfo.output_width * 3);
        pos += cinfo.output_width * 3;
    }
    free(rowFrame);
    // 8. finish decoding
    jpeg_finish_decompress(&cinfo);
    // 9. destroy the decoder object
    jpeg_destroy_decompress(&cinfo);
}

void V4l2Api::run() {
    isRunning = true;
    char buffer[WIDTH*HEIGHT*3];
    char rgbbuffer[WIDTH*HEIGHT*3];
    int times = 0;
    int len;
    while(isRunning)
    {
        grapImage(buffer, &len);
        //yuyv_to_rgb888((unsigned char *)buffer, (unsigned char *)rgbbuffer);
        jpeg_to_rgb888((unsigned char *)buffer, len, (unsigned char *)rgbbuffer);
        // wrap the RGB data in a QImage
        QImage image((uchar*)rgbbuffer, WIDTH, HEIGHT, QImage::Format_RGB888);
        // emit a deep copy: the QImage above only references rgbbuffer,
        // which the next iteration overwrites
        emit sendImage(image.copy());

qDebug()<<"(("<

4.3 Image Processing (OpenCV)

Add the following to the .pro file:

INCLUDEPATH += /usr/local/include \
               /usr/local/include/opencv \
               /usr/local/include/opencv2

LIBS += /usr/local/lib/libopencv_*.so

Include the headers:

#include <opencv2/core.hpp>
#include <opencv2/imgproc.hpp>

using namespace cv;

Edge detection

if (isEdgeDetect) { // edge detection
    cv::Mat dstPic, edge, grayImage;
    // create a matrix of the same type and size as the source
    dstPic.create(src_img_cv.size(), src_img_cv.type());
    // convert the source image to grayscale
    cvtColor(src_img_cv, grayImage, COLOR_BGR2GRAY);
    // denoise first with a 3x3 kernel
    blur(grayImage, edge, Size(3, 3));
    // run the Canny operator
    Canny(edge, edge, 3, 9, 3);
    src_img_cv = edge;
}

Sharpening

if (isSharpen) { // sharpening (unsharp mask)
    cv::Mat blur_img, usm;
    GaussianBlur(src_img_cv, blur_img, Size(0, 0), 25); // Gaussian blur
    addWeighted(src_img_cv, 1.5, blur_img, -0.5, 0, usm);
    src_img_cv = usm;
}

Threshold segmentation

if (isThreshold) { // threshold segmentation
    double the = 150; // threshold value
    cv::Mat threshold_dst;
    threshold(src_img_cv, threshold_dst, the, 255, THRESH_BINARY); // manually chosen threshold
    src_img_cv = threshold_dst;
}

4.4 Login Verification

A SQLite database is used, wrapped in a singleton.

sqlitesingleton.h

#ifndef SQLITESINGLETON_H
#define SQLITESINGLETON_H

// headers required by the declarations here and by sqlitesingleton.cpp
#include <QDebug>
#include <QMessageBox>
#include <QSqlDatabase>
#include <QSqlError>
#include <QSqlQuery>
#include <QString>

class SQLiteSingleton {
public:
    static SQLiteSingleton &getInstance();
    /**
     * @brief initDB initialises the database
     * @param db_type database driver type
     */
    void initDB(QString db_type = "QSQLITE");
    /**
     * @brief createTable creates the database table
     */
    void createTable();
    /**
     * @brief insertUserTable adds a user
     * @param username user name
     * @param pwd password
     */
    bool insertUserTable(QString username, QString pwd);
    /**
     * @brief queryUserExist checks whether a user exists
     * @param username user name
     * @param pwd password
     * @return true if a matching user exists
     */
    bool queryUserExist(QString username, QString pwd);

private:
    SQLiteSingleton();
    ~SQLiteSingleton();
    QSqlDatabase db;
};

#endif // SQLITESINGLETON_H

sqlitesingleton.cpp

#include "sqlitesingleton.h"

#include <QDebug>
#include <QMessageBox>
#include <QSqlError>

SQLiteSingleton &SQLiteSingleton::getInstance() {
    static SQLiteSingleton tSQLiteSingleton;
    return tSQLiteSingleton;
}

void SQLiteSingleton::initDB(QString db_type) {
    qDebug() << "Initialising database";
    if (db_type == "QSQLITE") {
        db = QSqlDatabase::addDatabase("QSQLITE");
        db.setDatabaseName("UserInfo.dat");
        if (!db.open()) {
            QSqlError lastError = db.lastError();
            QMessageBox::warning(0, QObject::tr("Database Error"),
                                 "Failed to open database: " + lastError.driverText());
            return;
        }
    }
}

void SQLiteSingleton::createTable() {
    QSqlQuery query(db);
    // "if not exists" keeps this from failing on every start after the first
    bool ret = query.exec("create table if not exists user_info (username "
                          "varchar(40) primary key, password varchar(40))");
    qDebug() << "create user_info " << ret;
}

bool SQLiteSingleton::insertUserTable(QString username, QString pwd) {
    QSqlQuery query(db);
    // bind the values instead of pasting them into the SQL text
    query.prepare("insert into user_info values(?, ?)");
    query.addBindValue(username);
    query.addBindValue(pwd);
    bool ret = query.exec();
    qDebug() << "insertUserTable" << ret;
    return ret;
}

bool SQLiteSingleton::queryUserExist(QString username, QString pwd) {
    QSqlQuery query(db);
    // bind the values instead of pasting them into the SQL text
    query.prepare("select * from user_info where username=? and password=?");
    query.addBindValue(username);
    query.addBindValue(pwd);
    bool ret = query.exec();
    qDebug() << "queryUserExist" << ret;
    // the user exists if the query returns at least one row
    bool isExist = query.next();
    return isExist;
}

SQLiteSingleton::SQLiteSingleton() {}

SQLiteSingleton::~SQLiteSingleton() {}

Usage

Initialization:

SQLiteSingleton::getInstance().initDB();
SQLiteSingleton::getInstance().createTable();

Registration:

SQLiteSingleton::getInstance().insertUserTable(username, pwd1);

Query:

isLoginSuccess = SQLiteSingleton::getInstance().queryUserExist(username, pwd);
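When user input reaches a query such as the one in queryUserExist, pasting it directly into the SQL text is risky. The following minimal sketch, in plain C++ with no database involved, shows what a crafted password does to such a string and how doubling single quotes keeps the value inside its literal. `buildLoginQuery` and `escapeSqlLiteral` are hypothetical helpers written for this illustration only; in Qt, binding values with `QSqlQuery::prepare` and `addBindValue` (or `bindValue`) is usually the simpler way to get the same protection.

```cpp
#include <string>

// Hypothetical helper: builds the login query by pasting the values
// directly into the SQL text.
std::string buildLoginQuery(const std::string &user, const std::string &pwd) {
    return "select * from user_info where username='" + user +
           "' and password='" + pwd + "'";
}

// Hypothetical helper: doubles every single quote so that the value stays
// inside its SQL string literal.
std::string escapeSqlLiteral(const std::string &value) {
    std::string out;
    for (char c : value) {
        out += c;
        if (c == '\'') {
            out += '\''; // '' is the SQL escape for a literal quote
        }
    }
    return out;
}
```

Passing the password `x' or '1'='1` to `buildLoginQuery` yields a WHERE clause ending in `or '1'='1'`, which is true for every row, so the login check would succeed without a valid password. Running the same value through `escapeSqlLiteral` first turns it into an ordinary, non-matching string.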

5. Results

5.1 Server GUI

5.2 Client GUI

6. References

- OpenCV installation tutorial https://blog.csdn.net/public669/article/details/99050101

- Qt-based network video surveillance system https://github.com/muxiaozi/LiveCamera

- V4L2 example https://github.com/justdoit-mqr/V4L2VideoProcess

- Transferring images or data over TCP with Qt https://blog.csdn.net/ALong_Lj/article/details/107376794

- OpenCV: sharpening enhancement algorithm (USM) https://blog.csdn.net/weixin_41709536/article/details/100889849

- OpenCV: threshold segmentation (histogram technique, entropy algorithm, Otsu, adaptive thresholding) https://blog.csdn.net/m0_38007695/article/details/112395145