Transformer: Summary of Tasks (Part 3)

Table of Contents

- Summary of the tasks (the main tasks Transformers are used for)

- 1.Sequence Classification

- 2.Extractive Question Answering

- 3.Language Modeling

- Masked Language Modeling

- 4.Causal Language Modeling

- 5.Text Generation

- 6. Named Entity Recognition

- 7. Summarization

- 8.Translation

Summary of the tasks (the main tasks Transformers are used for)

https://huggingface.co/transformers/task_summary.html

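This page shows the most common use cases for the library. The available models allow many different configurations and are highly versatile across use cases. The simplest approaches are presented here, showing usage for tasks such as question answering, sequence classification, and named entity recognition.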

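All of the following examples use Auto-models, which automatically select the correct model architecture based on the checkpoint being loaded.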
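The dispatch an Auto class performs can be pictured with a toy registry. This is a minimal sketch, not the library's code: the class names and MODEL_REGISTRY are hypothetical, and the only idea borrowed from the real library is, roughly, that a checkpoint's config carries a model_type field that decides which architecture gets built.

```python
# Toy stand-ins for real architecture classes (hypothetical, illustration only).
class BertLikeModel:
    pass


class GPT2LikeModel:
    pass


# Hypothetical registry: maps a checkpoint's "model_type" to an architecture class.
MODEL_REGISTRY = {
    "bert": BertLikeModel,
    "gpt2": GPT2LikeModel,
}


def auto_model_from_config(config):
    """Instantiate the class named by config["model_type"], mimicking Auto-class dispatch."""
    model_type = config["model_type"]
    if model_type not in MODEL_REGISTRY:
        raise ValueError(f"Unrecognized model_type: {model_type!r}")
    return MODEL_REGISTRY[model_type]()


# The checkpoint's config, not the caller, decides which class is instantiated.
model = auto_model_from_config({"model_type": "gpt2"})
print(type(model).__name__)  # GPT2LikeModel
```

The caller writes the same line for every checkpoint; only the registry lookup changes, which is why the examples below can switch checkpoints without switching classes.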

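For a model to perform well on a task, it must be loaded from a checkpoint that corresponds to that task. These checkpoints are usually pre-trained on a large corpus of data and then fine-tuned on a specific task. This means the following: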

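- Not all models were fine-tuned on all tasks. If you want to fine-tune a model on a specific task, you can use one of the run_$TASK.py scripts in the examples directory.

- Fine-tuned models were fine-tuned on a specific dataset, which may or may not overlap with your use case and domain. As mentioned above, you can use the example scripts to fine-tune your model, or write your own training script.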

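To run inference on a task, the library offers several mechanisms:

- Pipelines: very easy-to-use abstractions that require as little as two lines of code.

- Direct model use: fewer abstractions, but more flexibility and power through direct access to a tokenizer (PyTorch/TensorFlow) and full inference capacity.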

1.Sequence Classification

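Sequence classification is the task of classifying sequences into one of a given number of classes. One example is the GLUE benchmark, which is built entirely around this task. If you want to fine-tune a model on a GLUE sequence classification task, you can use scripts such as run_glue.py.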

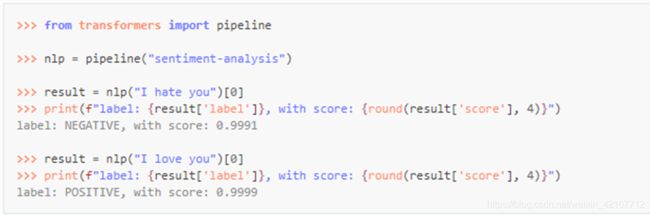

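This is an example of sentiment analysis with a pipeline: deciding whether a sequence is positive or negative. It uses a model fine-tuned on sst2, which is one of the GLUE tasks.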
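Roughly speaking, the sentiment-analysis pipeline turns the classification head's logits into a label and a confidence score. The sketch below shows that post-processing in plain Python, assuming a two-class head whose id2label mapping is {0: "NEGATIVE", 1: "POSITIVE"}; the logits are made up, not produced by a real model.

```python
import math

# Assumed label mapping for a two-class SST-2-style head (hypothetical here).
ID2LABEL = {0: "NEGATIVE", 1: "POSITIVE"}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, id2label=ID2LABEL):
    """Convert one sequence's logits into a pipeline-style {"label", "score"} dict."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"label": id2label[best], "score": probs[best]}


# Invented logits for a single sentence.
print(classify([-2.0, 3.0]))
```

The score is simply the softmax probability of the winning class, so it always lies between 0 and 1.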

2.Extractive Question Answering

(Omitted.)

3.Language Modeling

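Language modeling is the task of fitting a model to a corpus, which can be domain-specific. All popular Transformer-based models are trained with some variant of language modeling: for example, BERT uses masked language modeling and GPT-2 uses causal language modeling.

Language modeling is also useful outside of pre-training, for example to shift the model distribution toward a specific domain: take a language model trained on a very large corpus, then fine-tune it on a news dataset or a dataset of scientific papers (see the example).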

Masked Language Modeling

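Masked language modeling is the task of masking tokens in a sequence with a mask token and prompting the model to fill each mask with an appropriate token. This lets the model attend to both the left and the right context of the mask. This kind of training provides a strong foundation for downstream tasks.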
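The training-time masking can be sketched as below. This is a simplified version under stated assumptions: each position is masked independently with probability mask_prob (0.15 by default, the rate BERT used), special tokens are not protected, and the token IDs and mask ID in the example are hypothetical. BERT's actual recipe also replaces some selected tokens with random tokens or leaves them unchanged instead of always inserting the mask. Unmasked positions get the label -100, the index PyTorch's cross-entropy loss ignores by default, so only masked positions contribute to the loss.

```python
import random

IGNORE_INDEX = -100  # label value ignored by PyTorch's CrossEntropyLoss by default


def mask_tokens(token_ids, mask_token_id, mask_prob=0.15, seed=0):
    """Simplified MLM masking: returns (inputs, labels).

    inputs: token_ids with some positions replaced by mask_token_id.
    labels: the original id at masked positions, IGNORE_INDEX everywhere else.
    """
    rng = random.Random(seed)  # seeded so the example is reproducible
    inputs, labels = [], []
    for tok in token_ids:
        if rng.random() < mask_prob:
            inputs.append(mask_token_id)  # the model must recover `tok` here
            labels.append(tok)
        else:
            inputs.append(tok)
            labels.append(IGNORE_INDEX)  # no loss on visible tokens
    return inputs, labels


# Hypothetical token IDs and mask ID.
ids = [12, 845, 77, 3021, 9, 410, 56, 18]
inputs, labels = mask_tokens(ids, mask_token_id=103, mask_prob=0.3, seed=1)
print(inputs)
print(labels)
```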

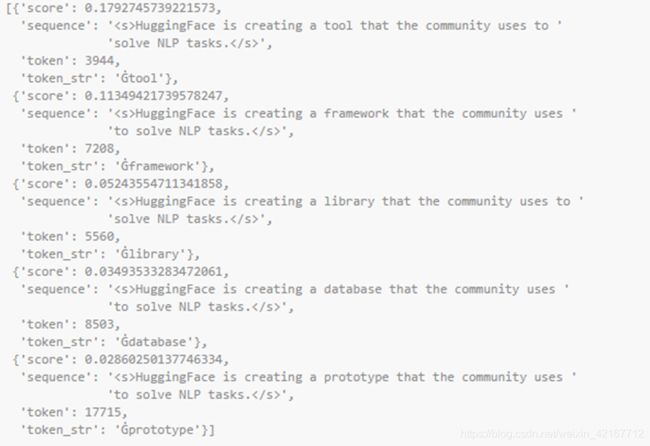

Here is an example of using a pipeline to fill the mask in a sentence:

from transformers import pipeline

nlp = pipeline("fill-mask")

This outputs the sequences with the mask filled in, their confidence scores, and the token IDs in the tokenizer vocabulary:

from pprint import pprint

pprint(nlp(f"HuggingFace is creating a {nlp.tokenizer.mask_token} that the community uses to solve NLP tasks."))

以下是做masked language model的步骤(使用model 和 tokenizer)

1.从检查点实例化tokenizer和model。 例子用的是DistilBERT模型,加载时附带着存储在检查点中的权重。

2.定义一个带有masked token的句子,并将原单词替换成tokenizer.mask_token。

3.将句子编码为ID列表,然后在该列表中找到被屏蔽token的位置。

4.在mask token所在的索引处进行预测:给出的预测范围与词汇表的大小相同,并且每个token都会有得分。 该模型会在这种情况下为其认为可能的token提供更高的分数。

5.使用PyTorch topk或TensorFlow top_k方法检索排名最靠前的5个token。

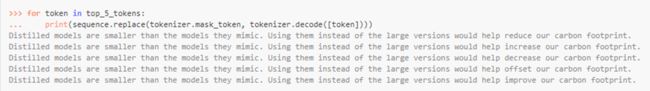

6.用token替换mask token并打印结果

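from transformers import AutoModelForMaskedLM, AutoTokenizer

import torch

# Step 1: load the tokenizer and a masked-LM model from the same checkpoint
tokenizer = AutoTokenizer.from_pretrained("distilbert-base-cased")

model = AutoModelForMaskedLM.from_pretrained("distilbert-base-cased")

# Step 2: a sentence with tokenizer.mask_token in place of one word
sequence = f"Distilled models are smaller than the models they mimic. Using them instead of the large versions would help {tokenizer.mask_token} our carbon footprint."

# Step 3: encode to IDs and find the position of the mask token
input_ids = tokenizer.encode(sequence, return_tensors="pt")

mask_token_index = torch.where(input_ids == tokenizer.mask_token_id)[1]

# Step 4: one score per vocabulary entry at the mask position
token_logits = model(input_ids).logits

mask_token_logits = token_logits[0, mask_token_index, :]

# Step 5: IDs of the 5 highest-scoring tokens
top_5_tokens = torch.topk(mask_token_logits, 5, dim=1).indices[0].tolist()

# Step 6: substitute each candidate for the mask and print the sentence
for token in top_5_tokens:
    print(sequence.replace(tokenizer.mask_token, tokenizer.decode([token])))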
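Steps 3 to 6 can be traced without a model on a toy vocabulary. In this sketch the vocabulary, tokens, and scores are all made up, heapq.nlargest stands in for torch.topk, and joining tokens with spaces is a simplification of real detokenization.

```python
import heapq


def fill_mask(tokens, mask_token, mask_logits, vocab, k=5):
    """Return k sentences, each with the mask replaced by one of the k best-scoring tokens."""
    # Step 3: locate the (single) mask position.
    mask_index = tokens.index(mask_token)
    # Step 5: IDs of the k highest scores; mask_logits has one score per vocab entry (step 4).
    top_ids = heapq.nlargest(k, range(len(mask_logits)), key=mask_logits.__getitem__)
    # Step 6: substitute each candidate and rebuild the sentence.
    return [
        " ".join(tokens[:mask_index] + [vocab[i]] + tokens[mask_index + 1:])
        for i in top_ids
    ]


# Toy example (hypothetical vocabulary and scores).
vocab = ["reduce", "increase", "the", "cat", "offset"]
scores = [4.0, 1.0, -1.0, -3.0, 2.5]
tokens = ["would", "help", "[MASK]", "our", "carbon", "footprint"]
for sentence in fill_mask(tokens, "[MASK]", scores, vocab, k=2):
    print(sentence)
```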

4.Causal Language Modeling

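Causal language modeling is the task of predicting the token that follows a sequence of tokens. The model can only use the text to the left of the token being predicted. It is mainly used for text generation.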
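The left-context-only restriction is usually enforced with a lower-triangular attention mask. A minimal pure-Python sketch, not tied to any library: row i of the mask marks which positions token i may look at, and next_token_examples (a hypothetical helper) lists the (context, target) pairs a causal model learns from.

```python
def causal_mask(n):
    """n x n boolean mask: mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def next_token_examples(tokens):
    """(left context, next token) pairs that a causal LM is trained to predict."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


tokens = ["Hugging", "Face", "is", "based", "in"]
# "x" = may attend, "." = masked out (a future position).
for row in causal_mask(len(tokens)):
    print("".join("x" if allowed else "." for allowed in row))
for context, target in next_token_examples(tokens):
    print(context, "->", target)
```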

(The rest of this section is omitted.)

5.Text Generation

(Omitted.)

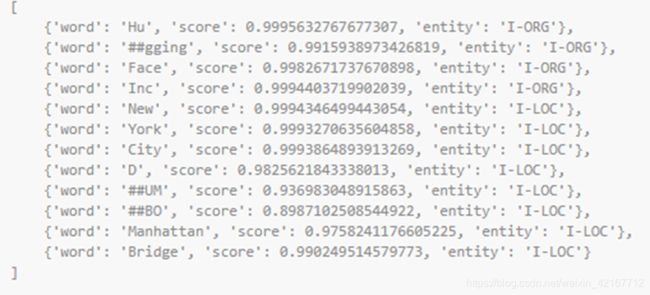

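6. Named Entity Recognition

Named entity recognition classifies each token into a class such as person, organization, or location.

An example of an NER dataset is CoNLL-2003. If you want to fine-tune a model on an NER task, you can use the run_ner.py script.

The example below uses a pipeline for named entity recognition, classifying tokens into 9 classes: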

- O, Outside of a named entity

- B-MISC, Beginning of a miscellaneous entity right after another miscellaneous entity

- I-MISC, Miscellaneous entity

- B-PER, Beginning of a person's name right after another person's name

- I-PER, Person's name

- B-ORG, Beginning of an organisation right after another organisation

- I-ORG, Organisation

- B-LOC, Beginning of a location right after another location

- I-LOC, Location
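With these tags, the labels of consecutive tokens have to be merged to recover whole entities. The sketch below does this for the scheme listed above, in which an entity normally starts with an I- tag and B- appears only when a new entity immediately follows another of the same type (often called IOB1). group_entities is a hypothetical helper that works on word-level tags; sub-word pieces would need to be merged first.

```python
def group_entities(tagged):
    """Merge (token, tag) pairs into (entity_text, entity_type) spans under IOB1-style tags."""
    entities = []
    current_tokens, current_type = [], None
    for token, tag in tagged:
        if tag == "O":
            prefix, etype = None, None
        else:
            prefix, etype = tag.split("-", 1)  # e.g. "B-PER" -> ("B", "PER")
        # Close the open entity on O, on a type change, or on an explicit B- tag.
        if current_type is not None and (etype != current_type or prefix == "B"):
            entities.append((" ".join(current_tokens), current_type))
            current_tokens, current_type = [], None
        if etype is not None:
            current_tokens.append(token)
            current_type = etype
    if current_type is not None:  # flush an entity that runs to the end
        entities.append((" ".join(current_tokens), current_type))
    return entities


tagged = [("Hugging", "I-ORG"), ("Face", "I-ORG"), ("Inc", "I-ORG"), ("is", "O"),
          ("based", "O"), ("in", "O"), ("New", "I-LOC"), ("York", "I-LOC"),
          ("City", "I-LOC"), (".", "O")]
print(group_entities(tagged))
```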

Using the pipeline:

from transformers import pipeline

nlp = pipeline("ner")

sequence = ("Hugging Face Inc. is a company based in New York City. Its headquarters are in DUMBO, therefore very "
            "close to the Manhattan Bridge which is visible from the window.")

print(nlp(sequence))

Doing NER with a model and a tokenizer:

from transformers import AutoModelForTokenClassification, AutoTokenizer

import torch

model = AutoModelForTokenClassification.from_pretrained("dbmdz/bert-large-cased-finetuned-conll03-english")

tokenizer = AutoTokenizer.from_pretrained("bert-base-cased")

label_list = [

"O", # Outside of a named entity

"B-MISC", # Beginning of a miscellaneous entity right after another miscellaneous entity

"I-MISC", # Miscellaneous entity

"B-PER", # Beginning of a person's name right after another person's name

"I-PER", # Person's name

"B-ORG", # Beginning of an organisation right after another organisation

"I-ORG", # Organisation

"B-LOC", # Beginning of a location right after another location

"I-LOC" # Location

]

sequence = "Hugging Face Inc. is a company based in New York City. Its headquarters are in DUMBO, therefore very" \

"close to the Manhattan Bridge."

# Bit of a hack to get the tokens with the special tokens

tokens = tokenizer.tokenize(tokenizer.decode(tokenizer.encode(sequence)))

inputs = tokenizer.encode(sequence, return_tensors="pt")

outputs = model(inputs).logits

predictions = torch.argmax(outputs, dim=2)

The following prints a list of tuples, each holding a (token, prediction) pair:

# Print the results

print([(token, label_list[prediction]) for token, prediction in zip(tokens, predictions[0].numpy())])

[('[CLS]', 'O'), ('Hu', 'I-ORG'), ('##gging', 'I-ORG'), ('Face', 'I-ORG'), ('Inc', 'I-ORG'), ('.', 'O'), ('is', 'O'), ('a', 'O'), ('company', 'O'), ('based', 'O'), ('in', 'O'), ('New', 'I-LOC'), ('York', 'I-LOC'), ('City', 'I-LOC'), ('.', 'O'), ('Its', 'O'), ('headquarters', 'O'), ('are', 'O'), ('in', 'O'), ('D', 'I-LOC'), ('##UM', 'I-LOC'), ('##BO', 'I-LOC'), (',', 'O'), ('therefore', 'O'), ('very', 'O'), ('##c', 'O'), ('##lose', 'O'), ('to', 'O'), ('the', 'O'), ('Manhattan', 'I-LOC'), ('Bridge', 'I-LOC'), ('.', 'O'), ('[SEP]', 'O')]

Unlike with the pipeline, every token here gets a prediction, and the "O" (outside) class is not removed; O marks tokens that do not belong to any entity.
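To get closer to what the pipeline shows, the raw pairs can be cleaned up: drop the special tokens, glue WordPiece continuations (tokens starting with "##") back onto the previous token, and discard "O" predictions. This is a sketch in the spirit of the pipeline's sub-word handling, not its actual code; merge_wordpieces is a hypothetical helper, and giving each word the label of its first sub-token is a simplifying assumption. The input is a subset of the pairs printed above.

```python
def merge_wordpieces(pairs, special_tokens=("[CLS]", "[SEP]")):
    """Merge "##" sub-tokens into words, then drop special tokens and "O" labels."""
    words = []
    for token, label in pairs:
        if token in special_tokens:
            continue
        if token.startswith("##") and words:
            prev_word, prev_label = words[-1]
            words[-1] = (prev_word + token[2:], prev_label)  # keep the first sub-token's label
        else:
            words.append((token, label))
    # Filter O only after merging, so pieces like "##c" attach to the right word first.
    return [(word, label) for word, label in words if label != "O"]


pairs = [("[CLS]", "O"), ("Hu", "I-ORG"), ("##gging", "I-ORG"), ("Face", "I-ORG"),
         ("is", "O"), ("D", "I-LOC"), ("##UM", "I-LOC"), ("##BO", "I-LOC"),
         (",", "O"), ("very", "O"), ("##c", "O"), ("##lose", "O"), ("[SEP]", "O")]
print(merge_wordpieces(pairs))
```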

7. Summarization

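Summarization is the task of condensing a document into a shorter text.

An example of a summarization dataset is the CNN/Daily Mail dataset, which consists of long news articles paired with summaries.

For the detailed workflow, see: document

An example of summarization with a pipeline: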

from transformers import pipeline

summarizer = pipeline("summarization")

ARTICLE = """ New York (CNN)When Liana Barrientos was 23 years old, she got married in Westchester County, New York.

A year later, she got married again in Westchester County, but to a different man and without divorcing her first husband.

Only 18 days after that marriage, she got hitched yet again. Then, Barrientos declared "I do" five more times, sometimes only within two weeks of each other.

In 2010, she married once more, this time in the Bronx. In an application for a marriage license, she stated it was her "first and only" marriage.

Barrientos, now 39, is facing two criminal counts of "offering a false instrument for filing in the first degree," referring to her false statements on the

2010 marriage license application, according to court documents.

Prosecutors said the marriages were part of an immigration scam.

On Friday, she pleaded not guilty at State Supreme Court in the Bronx, according to her attorney, Christopher Wright, who declined to comment further.

After leaving court, Barrientos was arrested and charged with theft of service and criminal trespass for allegedly sneaking into the New York subway through an emergency exit, said Detective

Annette Markowski, a police spokeswoman. In total, Barrientos has been married 10 times, with nine of her marriages occurring between 1999 and 2002.

All occurred either in Westchester County, Long Island, New Jersey or the Bronx. She is believed to still be married to four men, and at one time, she was married to eight men at once, prosecutors say.

Prosecutors said the immigration scam involved some of her husbands, who filed for permanent residence status shortly after the marriages.

Any divorces happened only after such filings were approved. It was unclear whether any of the men will be prosecuted.

The case was referred to the Bronx District Attorney\'s Office by Immigration and Customs Enforcement and the Department of Homeland Security\'s

Investigation Division. Seven of the men are from so-called "red-flagged" countries, including Egypt, Turkey, Georgia, Pakistan and Mali.

Her eighth husband, Rashid Rajput, was deported in 2006 to his native Pakistan after an investigation by the Joint Terrorism Task Force.

If convicted, Barrientos faces up to four years in prison. Her next court appearance is scheduled for May 18.

"""

print(summarizer(ARTICLE, max_length=130, min_length=30, do_sample=False))

[{

'summary_text': 'Liana Barrientos, 39, is charged with two counts of "offering a false instrument for filing in the first degree" In total, she has been married 10 times, with nine of her marriages occurring between 1999 and 2002. She is believed to still be married to four men.'}]

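Next are the steps for summarization with a model and a tokenizer:

1. Instantiate a tokenizer and a model from the checkpoint name. Summarization is usually done with an encoder-decoder model, such as Bart or T5.

2. Define the article to be summarized.

3. Add the T5-specific prefix "summarize: ".

4. Use the PreTrainedModel.generate() method to generate the summary.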

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

# T5 is an encoder-decoder model, so load it with the seq2seq auto class
model = AutoModelForSeq2SeqLM.from_pretrained("t5-base")

tokenizer = AutoTokenizer.from_pretrained("t5-base")

# T5 uses a max_length of 512, so we truncate the article to 512 tokens.

inputs = tokenizer.encode("summarize: " + ARTICLE, return_tensors="pt", max_length=512, truncation=True)

outputs = model.generate(inputs, max_length=150, min_length=40, length_penalty=2.0, num_beams=4, early_stopping=True)

# Decode the generated token IDs back into text
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
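The length_penalty argument passed to generate() matters only with beam search (num_beams > 1). Below is a sketch of how finished beams are, to my understanding, scored in transformers: a hypothesis's summed log-probability is divided by its length raised to length_penalty. Treat this formula as an assumption about the library's internals; the two hypotheses and their log-probabilities are invented. Because log-probabilities are negative, a larger length_penalty divides long hypotheses by a bigger number, which favors longer summaries.

```python
def beam_score(sum_logprobs, length, length_penalty=1.0):
    """Length-normalized score of a finished beam hypothesis (assumed formula)."""
    return sum_logprobs / (length ** length_penalty)


# Two invented hypotheses: (summed log-probability, length in tokens).
short_hyp = (-6.0, 10)
long_hyp = (-10.0, 20)

for lp in (0.0, 1.0, 2.0):
    winner = "long" if beam_score(*long_hyp, lp) > beam_score(*short_hyp, lp) else "short"
    print(f"length_penalty={lp}: {winner} hypothesis wins")
```

With no normalization (0.0) the shorter, higher-probability hypothesis wins; at 1.0 and 2.0 the longer one does, which is the effect length_penalty=2.0 is meant to have above.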

8.Translation

(Omitted.)