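Setting up ceph-csi on Kubernetes

Setting up a ceph-csi environment on Kubernetes

This article explains how to use ceph-csi (v2.0) as the storage backend for a Kubernetes cluster. The official instructions are in the ceph-csi setup guide.

Note (this is also stated in the official documentation): ceph-csi uses the default RBD kernel module, which may not support every Ceph CRUSH tunable or RBD image feature.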

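Requirements

- Kubernetes (1.15 was used in this experiment)

- A Ceph cluster (Nautilus was used in this experiment)

- A Ceph Luminous cluster also works, but some commands need to be changed

Setup procedure

Create a Ceph pool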

[root@node1 ~]# ceph osd pool create kubernetes

[root@node1 ~]# rbd pool init kubernetes

Configure ceph-csi

Create a new user for Kubernetes and ceph-csi

[root@node1 ~]# ceph auth get-or-create client.kubernetes mon 'profile rbd' osd 'profile rbd pool=kubernetes' mgr 'profile rbd pool=kubernetes'

[client.kubernetes]

key = AQD9o0Fd6hQRChAAt7fMaSZXduT3NWEqylNpmg==

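- Note: the key shown here is only an example; in a real setup, use the value printed when you run the command

- The key here is the user's key; it is needed in later configuration steps

- On a Ceph Luminous cluster, the command should be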

ceph auth get-or-create client.kubernetes mon 'allow r' osd 'allow rwx pool=kubernetes' -o ceph.client.kubernetes.keyring

Generate the ceph-csi ConfigMap for Kubernetes

[root@node1 ~]# ceph mon dump

dumped monmap epoch 1

epoch 1

fsid 82e65362-72f7-4206-b94d-04e2c5e2d70d

last_changed 2020-05-29 05:26:42.313889

created 2020-05-29 05:26:42.313889

min_mon_release 14 (nautilus)

0: [v2:10.80.0.41:3300/0,v1:10.80.0.41:6789/0] mon.node1

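- Note: two pieces of information from this output are needed. The first is the fsid (effectively the cluster ID). The second is the monitor entry, 0: [v2:10.80.0.41:3300/0,v1:10.80.0.41:6789/0] mon.node1 (there may be several monitors; only one was configured in this experiment)

- Also, ceph-csi currently supports only the v1 protocol, so for each monitor only the v1 IP address and port can be used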
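The two values can also be pulled out of the `ceph mon dump` output with standard text tools. The following is a minimal sketch, not part of Ceph or ceph-csi: the function names `mon_dump_fsid` and `mon_dump_v1_addrs` are made up for illustration, and the parsing assumes IPv4 monitor addresses in the line format shown above.

```shell
# Hypothetical helpers: read `ceph mon dump` output on stdin and print
# the values that the ceph-csi ConfigMap needs.

# Print the cluster fsid (used later as clusterID).
mon_dump_fsid() {
  awk '$1 == "fsid" { print $2 }'
}

# Print one "IP:port" per monitor, taken from the v1 entry of each line.
# Assumes IPv4 addresses such as v1:10.80.0.41:6789/0.
mon_dump_v1_addrs() {
  grep -o 'v1:[^]/,]*' | sed 's/^v1://'
}

# Usage on a Ceph node (each call reads its own copy of the output):
#   ceph mon dump 2>/dev/null | mon_dump_fsid
#   ceph mon dump 2>/dev/null | mon_dump_v1_addrs
```

With several monitors, the second function prints one address per line, which is the list that goes into the monitors array.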

Generate the ConfigMap from the information above:

[root@node1 ~]# cat <<EOF > csi-config-map.yaml
---
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    [
      {
        "clusterID": "82e65362-72f7-4206-b94d-04e2c5e2d70d",
        "monitors": [
          "10.80.0.41:6789"
        ]
      }
    ]
metadata:
  name: ceph-csi-config
EOF

On the Kubernetes cluster, store this ConfigMap in the cluster:

kubectl apply -f csi-config-map.yaml
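When the cluster has more than one monitor, writing the JSON by hand gets error-prone. As a rough sketch, the same ConfigMap can be rendered from the fsid and a list of v1 monitor addresses. The function name `render_csi_config_map` is hypothetical; only the ConfigMap name and the config.json layout come from the file above.

```shell
# Hypothetical helper: print the ceph-csi ConfigMap for one cluster.
# $1 = cluster fsid, remaining arguments = monitor "IP:port" (v1) addresses.
render_csi_config_map() {
  cluster_id=$1
  shift
  monitors=""
  for addr in "$@"; do
    # Build a comma-separated list of quoted addresses.
    monitors="${monitors:+$monitors, }\"$addr\""
  done
  cat <<EOF
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: ceph-csi-config
data:
  config.json: |-
    [{"clusterID": "$cluster_id", "monitors": [$monitors]}]
EOF
}

# Usage:
#   render_csi_config_map 82e65362-72f7-4206-b94d-04e2c5e2d70d \
#     10.80.0.41:6789 > csi-config-map.yaml
```

The output is equivalent to the hand-written file, with config.json collapsed onto one line.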

Generate the ceph-csi cephx Secret

[root@node1 ~]# cat <<EOF > csi-rbd-secret.yaml
---
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
  namespace: default
stringData:
  userID: kubernetes
  userKey: AQD9o0Fd6hQRChAAt7fMaSZXduT3NWEqylNpmg==
EOF

- This is where the user ID (kubernetes) and key of the user created earlier are used

Store this configuration in Kubernetes:

kubectl apply -f csi-rbd-secret.yaml
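To avoid copying the key by hand, it can be read from the keyring-style output that Ceph prints. This is only a sketch: `keyring_key` is a hypothetical helper name, and the commented usage assumes that `ceph` and `kubectl` are both usable from the same shell, which may not match your setup.

```shell
# Hypothetical helper: read keyring-style output (as printed by
# `ceph auth get-or-create` or `ceph auth get`) on stdin and print
# only the key value.
keyring_key() {
  awk '$1 == "key" && $2 == "=" { print $3 }'
}

# Usage (assumes ceph and kubectl are both available here):
#   key=$(ceph auth get client.kubernetes 2>/dev/null | keyring_key)
#   kubectl create secret generic csi-rbd-secret --namespace default \
#     --from-literal=userID=kubernetes --from-literal=userKey="$key"
```

Creating the Secret this way is an alternative to applying csi-rbd-secret.yaml, not an additional step.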

Configure the ceph-csi plugins (the RBAC objects on Kubernetes and the containers that provide the storage functionality)

- RBAC

kubectl apply -f https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-provisioner-rbac.yaml
kubectl apply -f https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-nodeplugin-rbac.yaml

- Provisioner and node plugin

$ wget https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-rbdplugin-provisioner.yaml
$ kubectl apply -f csi-rbdplugin-provisioner.yaml
$ wget https://raw.githubusercontent.com/ceph/ceph-csi/master/deploy/rbd/kubernetes/csi-rbdplugin.yaml
$ kubectl apply -f csi-rbdplugin.yaml

- By default, both YAML files pull the image quay.io/cephcsi/cephcsi:canary. To use a different version, edit the YAML files yourself.
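Editing the image tag can be scripted instead of done by hand. The sketch below rewrites the tag with sed and prints the result to stdout. `pin_cephcsi_tag` is a hypothetical helper, and the tag v2.0.0 in the usage comment is an assumption; check which tags are actually published for the release you want.

```shell
# Hypothetical helper: rewrite the cephcsi image tag in a manifest.
# $1 = manifest path, $2 = desired tag; the result goes to stdout and
# the input file is left unchanged.
pin_cephcsi_tag() {
  sed "s#\(quay\.io/cephcsi/cephcsi\):[^[:space:]\"]*#\1:$2#" "$1"
}

# Usage (the tag is an assumption, verify it exists first):
#   pin_cephcsi_tag csi-rbdplugin-provisioner.yaml v2.0.0 | kubectl apply -f -
#   pin_cephcsi_tag csi-rbdplugin.yaml v2.0.0 | kubectl apply -f -
```

Writing to stdout keeps the downloaded manifests untouched, so they can still be compared against upstream.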

Using Ceph block devices

Create a StorageClass

$ cat <<EOF > csi-rbd-sc.yaml
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: 82e65362-72f7-4206-b94d-04e2c5e2d70d
  imageFeatures: layering
  pool: kubernetes
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
reclaimPolicy: Delete
mountOptions:
  - discard
EOF

$ kubectl apply -f csi-rbd-sc.yaml

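- First, the clusterID here is the fsid from the earlier step

- Second, a new item is added here: imageFeatures, which determines the features of the images that get created. If it is not set, images are created with the default RBD feature list, and the Linux kernel in use does not necessarily support all of those features

- A more complete StorageClass YAML file, csi-ceph-sc.yaml: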

---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc
provisioner: rbd.csi.ceph.com
# If topology based provisioning is desired, delayed provisioning of
# PV is required and is enabled using the following attribute
# For further information read TODO
# volumeBindingMode: WaitForFirstConsumer
parameters:
  # String representing a Ceph cluster to provision storage from.
  # Should be unique across all Ceph clusters in use for provisioning,
  # cannot be greater than 36 bytes in length, and should remain immutable for
  # the lifetime of the StorageClass in use.
  # Ensure to create an entry in the config map named ceph-csi-config, based on
  # csi-config-map-sample.yaml, to accompany the string chosen to
  # represent the Ceph cluster in clusterID below
  clusterID:

  # If you want to use erasure coded pool with RBD, you need to create
  # two pools. one erasure coded and one replicated.
  # You need to specify the replicated pool here in the `pool` parameter, it is
  # used for the metadata of the images.
  # The erasure coded pool must be set as the `dataPool` parameter below.
  # dataPool: ec-data-pool
  pool: rbd

  # RBD image features, CSI creates image with image-format 2
  # CSI RBD currently supports only `layering` feature.
  imageFeatures: layering

  # The secrets have to contain Ceph credentials with required access
  # to the 'pool'.
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
  # Specify the filesystem type of the volume. If not specified,
  # csi-provisioner will set default as `ext4`.
  csi.storage.k8s.io/fstype: ext4
  # uncomment the following to use rbd-nbd as mounter on supported nodes
  # mounter: rbd-nbd

  # Prefix to use for naming RBD images.
  # If omitted, defaults to "csi-vol-".
  # volumeNamePrefix: "foo-bar-"

  # Instruct the plugin it has to encrypt the volume
  # By default it is disabled. Valid values are "true" or "false".
  # A string is expected here, i.e. "true", not true.
  # encrypted: "true"

  # Use external key management system for encryption passphrases by specifying
  # a unique ID matching KMS ConfigMap. The ID is only used for correlation to
  # config map entry.
  # encryptionKMSID:

  # Add topology constrained pools configuration, if topology based pools
  # are setup, and topology constrained provisioning is required.
  # For further information read TODO
  # topologyConstrainedPools: |
  #   [{"poolName":"pool0",
  #     "dataPool":"ec-pool0" # optional, erasure-coded pool for data
  #     "domainSegments":[
  #       {"domainLabel":"region","value":"east"},
  #       {"domainLabel":"zone","value":"zone1"}]},
  #    {"poolName":"pool1",
  #     "dataPool":"ec-pool1" # optional, erasure-coded pool for data
  #     "domainSegments":[
  #       {"domainLabel":"region","value":"east"},
  #       {"domainLabel":"zone","value":"zone2"}]},
  #    {"poolName":"pool2",
  #     "dataPool":"ec-pool2" # optional, erasure-coded pool for data
  #     "domainSegments":[
  #       {"domainLabel":"region","value":"west"},
  #       {"domainLabel":"zone","value":"zone1"}]}
  #   ]
reclaimPolicy: Delete
allowVolumeExpansion: true
mountOptions:
  - discard

- Ceph image features deserve a short explanation here

- The Ceph cluster used in this experiment runs on CentOS 7.5, whose Linux kernel version is 3.10 and which by default supports only the Ceph layering feature

If imageFeatures is not specified, requesting a PVC produces the following error:

Warning FailedMapVolume 0s (x4 over 5s) kubelet, k8s-1 MapVolume.SetUp failed for volume "pvc-5340f32e-fa2e-4f53-af1d-7154430af7a2" : rpc error: code = Internal desc = rbd: map failed exit status 6, rbd output: rbd: sysfs write failed
RBD image feature set mismatch. Try disabling features unsupported by the kernel with "rbd feature disable".
In some cases useful info is found in syslog - try "dmesg | tail".
rbd: map failed: (6) No such device or address

By default, images on Ceph Nautilus require the features layering, exclusive-lock, object-map, fast-diff, deep-flatten. Linux kernel 3.10 supports only layering, so the feature set has to be restricted here. Upgrading the Linux kernel is also an option, but a considerably more expensive one.

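For an image that was already created with the default feature set, the error message itself points at `rbd feature disable`. The sketch below works out which features to pass to it, based on the `features:` line that `rbd info` prints. `non_layering_features` is a hypothetical helper, IMAGE is a placeholder for a real image name, and whether all features can be disabled in a single call on your Ceph version is an assumption worth checking first.

```shell
# Hypothetical helper: read `rbd info` output on stdin and print every
# enabled feature except layering, separated by spaces. Per the text
# above, these are the features a 3.10 kernel cannot map.
non_layering_features() {
  sed -n 's/^[[:space:]]*features:[[:space:]]*//p' |
    tr -d ' ' | tr ',' '\n' | grep -vx 'layering' | paste -sd' ' -
}

# Usage (IMAGE is a placeholder; $feats is left unquoted on purpose so
# that each feature becomes its own argument):
#   feats=$(rbd info kubernetes/IMAGE | non_layering_features)
#   rbd feature disable kubernetes/IMAGE $feats
```

For newly provisioned volumes this is unnecessary once imageFeatures: layering is set in the StorageClass.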

Create a PVC

$ cat <<EOF > raw-block-pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: raw-block-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Block
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-rbd-sc
EOF

$ kubectl apply -f raw-block-pvc.yaml
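Before creating a Pod it helps to confirm that the claim was actually bound. The following sketch polls a command until it prints an expected value. `wait_for_output` is a hypothetical helper, and the 60-attempt limit in the usage comment is an arbitrary choice.

```shell
# Hypothetical helper: run a command repeatedly until its output equals
# the expected value or the attempts run out.
# $1 = expected output, $2 = maximum attempts, rest = command to run.
wait_for_output() {
  expected=$1
  attempts=$2
  shift 2
  while [ "$attempts" -gt 0 ]; do
    if [ "$("$@" 2>/dev/null)" = "$expected" ]; then
      return 0
    fi
    attempts=$((attempts - 1))
    sleep 1
  done
  return 1
}

# Usage: wait up to about 60 seconds for the PVC to be bound.
#   wait_for_output Bound 60 \
#     kubectl get pvc raw-block-pvc -o jsonpath='{.status.phase}'
```

If the claim stays Pending, `kubectl describe pvc raw-block-pvc` usually shows the provisioner's error in its events.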

Create a Pod that uses the PVC

$ cat <<EOF > raw-block-pod.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-with-raw-block-volume
spec:
  containers:
    - name: fc-container
      image: fedora:26
      command: ["/bin/sh", "-c"]
      args: ["tail -f /dev/null"]
      volumeDevices:
        - name: data
          devicePath: /dev/xvda
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: raw-block-pvc
EOF

$ kubectl apply -f raw-block-pod.yaml

PVC and Pod for a filesystem-mode volume (volumeMode: Filesystem)

- PVC

$ cat <<EOF > pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-rbd-sc
EOF
$ kubectl apply -f pvc.yaml

- Pod

$ cat <<EOF > pod.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: csi-rbd-demo-pod
spec:
  containers:
    - name: web-server
      image: nginx
      volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www/html
  volumes:
    - name: mypvc
      persistentVolumeClaim:
        claimName: rbd-pvc
        readOnly: false
EOF
$ kubectl apply -f pod.yaml

Problems encountered later

A new problem when deploying ceph-csi 2.1 and 3.0

- Newer versions of ceph-csi require a ConfigMap named ceph-csi-encryption-kms-config

- Solutions (either of the following):

  - Find the YAML files that use it and comment out every reference to it

    - YAML files that reference this ConfigMap:

      deploy/rbd/kubernetes/csi-rbdplugin-provisioner.yaml

      deploy/rbd/kubernetes/csi-rbdplugin.yaml

  - Deploy one

    - Sample path:

      ceph-csi/examples/kms/vault/kms-config.yaml

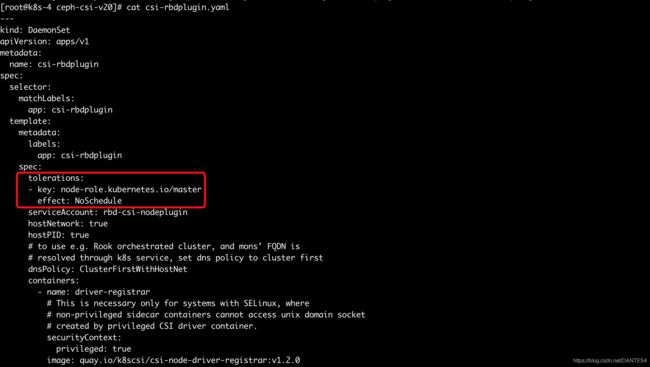

The error "driver name rbd.csi.ceph.com not found in the list of registered CSI drivers" while using ceph-csi

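- Problem description

After ceph-csi was deployed and the bundled examples ran successfully, I went on to deploy a pod with 100% affinity to the master node. That pod could not mount the PV requested through ceph-csi.

- Cause

The DaemonSet in the official ceph-csi deploy directory does not, by default, allow its pods to be scheduled on master nodes. As a result, no csi-rbdplugin pod runs on the master node, so a pod there that requests a volume through ceph-csi gets its PV provisioned but cannot mount it.

- Solution

In ceph-csi/deploy/rbd/kubernetes/csi-rbdplugin.yaml, add the following to the pod template spec (spec.template.spec) of the DaemonSet.

Example:

tolerations:
  - key: node-role.kubernetes.io/master
    effect: NoSchedule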
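To confirm the diagnosis, or to check the fix afterwards, one can compare the list of all nodes with the list of nodes that run a csi-rbdplugin pod. This is a sketch under assumptions: `nodes_missing_plugin` is a hypothetical helper, and the label app=csi-rbdplugin in the usage comment is assumed to match the labels in your copy of csi-rbdplugin.yaml.

```shell
# Hypothetical helper: print the node names listed in file $1 that do
# not appear in file $2 (one name per line in both files).
nodes_missing_plugin() {
  grep -vxFf "$2" "$1"
}

# Usage (the label selector is an assumption, check your manifest):
#   kubectl get nodes -o name | sed 's#^node/##' > nodes.txt
#   kubectl get pods -l app=csi-rbdplugin \
#     -o jsonpath='{range .items[*]}{.spec.nodeName}{"\n"}{end}' > plugin-nodes.txt
#   nodes_missing_plugin nodes.txt plugin-nodes.txt
```

Any node printed here has no node plugin pod. After the toleration is added and the DaemonSet is re-applied, the master node should no longer be listed.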