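Kubernetes Cluster Deployment: k8s Deployment, Pod Management, Resource Manifests, Controllers, and Service

Table of Contents

- I. Kubernetes deployment
  - 1. Master node deployment
  - 2. Worker node deployment
- II. Pod management
  - Creating pods
  - Resource manifests
  - Writing a standalone manifest to create multiple containers in one pod
  - Labels
- III. Pod lifecycle
  - 1. Configuring pods and containers
  - 2. Init containers
- IV. Managing pods with controllers
  - 1. ReplicaSet (rs) controller deployment
  - 2. DaemonSet controller
  - 3. Job
  - 4. CronJob
- V. Service
  - 1. k8s network communication (a common interview topic: how the communication works)
  - 2. Service types
    - 1) Headless service: no IP needed, resolved directly by the domain name myservice.default.svc.cluster.local
    - 2) Second approach: a Service of type LoadBalancer
    - 3) Third approach: assigning the address yourself
    - 4) Fourth approach: ExternalName, for reaching external services from inside the cluster
    - 5) For Kubernetes services on public clouds
- VI. flannel network
  - flannel supports several backends: VXLAN, host-gw (does not work across network segments), UDP (poor performance)
- VII. Ingress service
  - 1. Deploying the ingress service
  - 2. Using the ingress service
  - 3. nginx load-balancing control
    - Generating the key and certificate
    - Authentication: generating the auth file
- VIII. calico network plugin
  - NetworkPolicy
    - 1) Restricting access to specified pods (matched by label)
    - 2) Allowing access from specified pods
    - 3) Denying all pod-to-pod access within a namespace
    - 4) Denying access to services from other namespaces
    - 5) Allowing access from a specified namespace
    - 6) Allowing access from outside the cluster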

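I. Kubernetes deployment

- Kubernetes provides a higher-level abstraction over compute resources: it composes containers in fine-grained ways and delivers the resulting application service to users.

- Official documentation: https://kubernetes.io/docs/setup/production-environment/container-runtimes/#docker

1. Master node deployment

Prepare two new virtual machines and install Docker on each.

% server2 acts as the master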

[root@server2 yum.repos.d]# ls

docker-ce.repo dvd.repo redhat.repo

[root@server2 yum.repos.d]# vim dvd.repo

[root@server2 ~]# vim /etc/hosts  # update the hostname resolution entries

[root@server2 ~]# cd docker-ce/

[root@server2 docker-ce]# ls

containerd.io-1.4.3-3.1.el7.x86_64.rpm

container-selinux-2.119.2-1.911c772.el7_8.noarch.rpm

docker-ce-20.10.2-3.el7.x86_64.rpm

docker-ce-cli-20.10.2-3.el7.x86_64.rpm

docker-ce-rootless-extras-20.10.2-3.el7.x86_64.rpm

fuse3-libs-3.6.1-4.el7.x86_64.rpm

fuse-overlayfs-0.7.2-6.el7_8.x86_64.rpm

repodata

slirp4netns-0.4.3-4.el7_8.x86_64.rpm

[root@server2 docker-ce]# yum install docker-ce docker-ce-cli

[root@server2 docker-ce]# systemctl start docker

[root@server2 docker]# systemctl enable docker

[root@server2 docker]# vim /etc/docker/daemon.json

{
  "registry-mirrors": ["https://reg.westos.org"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}

% Use the Aliyun mirror to speed up deployment

[root@server2 docker]# vim /etc/yum.repos.d/k8s.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

# yum install -y kubelet kubeadm kubectl

# systemctl enable --now kubelet

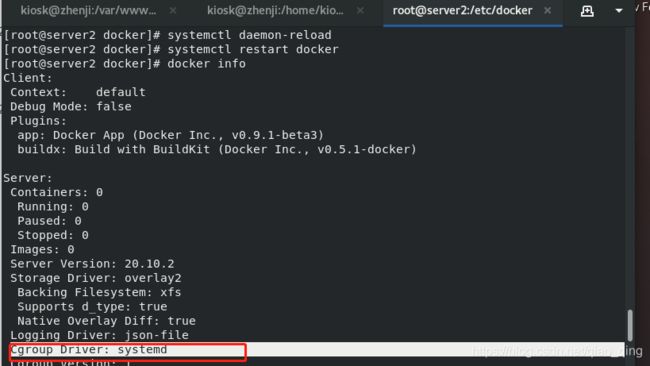

[root@server13 ~]# systemctl daemon-reload

[root@server13 ~]# systemctl restart docker

[root@server13 ~]# docker info  # the Cgroup Driver should now be systemd

Cgroup Driver: systemd

[root@server2 ~]# cd /etc/sysctl.d/

[root@server2 sysctl.d]# cat docker.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-arptables = 1

[root@server2 ~]# systemctl restart docker

# Log out of server2 and log back in

[root@server2 ~]# kubeadm config print init-defaults  # show the default configuration

[root@server2 ~]# kubeadm config images list --image-repository registry.aliyuncs.com/google_containers  # list the required images

[root@server2 ~]# kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers  # pull the images

[root@server2 ~]# kubeadm init --pod-network-cidr=10.244.0.0/16 --image-repository registry.aliyuncs.com/google_containers  # initialize the control plane

[root@server2 sysctl.d]# mkdir -p $HOME/.kube

[root@server2 sysctl.d]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@server2 sysctl.d]# chown $(id -u):$(id -g) $HOME/.kube/config

[root@server2 sysctl.d]# echo "source <(kubectl completion bash)" >> ~/.bashrc  # command completion; takes effect after logging in again

[root@server2 sysctl.d]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# Alternatively, copy kube-flannel.yml to the host and apply the local file

This installs the network add-on.

Check the pods and the nodes:

[root@server2 sysctl.d]# kubectl get pod --namespace kube-system

[root@server2 sysctl.d]# kubectl get node

[root@server2 sysctl.d]# docker save quay.io/coreos/flannel:v0.12.0-amd64 registry.aliyuncs.com/google_containers/pause:3.2 registry.aliyuncs.com/google_containers/coredns:1.7.0 registry.aliyuncs.com/google_containers/kube-proxy:v1.20.2 > node.tar

# Export the images the worker nodes need, then load them on each node

2. Worker node deployment

% server3 acts as a worker node

[root@server3 yum.repos.d]# ls

docker-ce.repo dvd.repo redhat.repo

[root@server3 yum.repos.d]# vim dvd.repo

[root@server3 ~]# vim /etc/hosts  # update the hostname resolution entries

[root@server3 ~]# cd docker-ce/

[root@server3 docker-ce]# ls

containerd.io-1.4.3-3.1.el7.x86_64.rpm

container-selinux-2.119.2-1.911c772.el7_8.noarch.rpm

docker-ce-20.10.2-3.el7.x86_64.rpm

docker-ce-cli-20.10.2-3.el7.x86_64.rpm

docker-ce-rootless-extras-20.10.2-3.el7.x86_64.rpm

fuse3-libs-3.6.1-4.el7.x86_64.rpm

fuse-overlayfs-0.7.2-6.el7_8.x86_64.rpm

repodata

slirp4netns-0.4.3-4.el7_8.x86_64.rpm

[root@server3 docker-ce]# yum install docker-ce docker-ce-cli

[root@server3 docker-ce]# systemctl start docker

[root@server3 docker]# systemctl enable docker

[root@server3 docker]# vim /etc/docker/daemon.json

{
  "registry-mirrors": ["https://reg.westos.org"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}

% Use the Aliyun mirror to speed up deployment

[root@server3 docker]# vim /etc/yum.repos.d/k8s.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

# yum install -y kubelet kubeadm kubectl

# systemctl enable --now kubelet

[root@server3 ~]# systemctl daemon-reload

[root@server3 ~]# systemctl restart docker

[root@server3 ~]# docker info  # the Cgroup Driver should now be systemd

Cgroup Driver: systemd

[root@server3 ~]# cd /etc/sysctl.d/

[root@server3 sysctl.d]# cat docker.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-arptables = 1

[root@server3 ~]# systemctl restart docker

# Log out of server3 and log back in

[root@server3 ~]# docker load -i node.tar

[root@server3 ~]# kubeadm join 172.25.0.2:6443 --token a55bse.7wbzxx70srbrr7ko --discovery-token-ca-cert-hash sha256:03b69cf689dc4cedfd52cd63167d06fdef69aa76d271b19428dd39fa254a0319

[root@server2 ~]# kubectl get nodes  # server2 and server3 are both listed

[root@server2 sysctl.d]# kubectl get pod --namespace kube-system

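II. Pod management

- A pod is the smallest deployable unit of computing that can be created and managed in k8s. A pod represents a running process in the cluster, and every pod has its own unique IP.

- A pod is like a pea pod (shared resources): it holds one or more containers (usually Docker), and the containers in a pod share the IPC, network, and UTS namespaces.

- Official reference: https://kubernetes.io/docs/reference/generated/kubectl/kubectl-commands

[root@server2 ~]# kubectl get pod -n kube-system  # every pod should be Running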

kubectl get node

[root@server2 ~]# kubectl get pod

No resources found in default namespace.

[root@server2 ~]# kubectl get ns

NAME STATUS AGE

default Active 21d

kube-node-lease Active 21d

kube-public Active 21d

kube-system Active 21d

[root@server2 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7f89b7bc75-pdw7l 0/1 Pending 0 21d

coredns-7f89b7bc75-r28lt 0/1 Pending 0 21d

etcd-server2 1/1 Running 1 21d

kube-apiserver-server2 1/1 Running 1 21d

kube-controller-manager-server2 1/1 Running 2 21d

kube-proxy-4vnhg 1/1 Running 1 21d

kube-proxy-tgbxj 1/1 Running 1 21d

kube-scheduler-server2 1/1 Running 1 21d

Creating pods

[root@server2 ~]# kubectl run nginx --image=myapp:v1

[root@server2 ~]# kubectl run --help  # show usage

[root@server2 ~]# kubectl get pod

[root@server2 ~]# kubectl get pod -n default

[root@server2 ~]# kubectl get pod

[root@server2 ~]# kubectl get pod -o wide  # -o wide adds detail such as the pod IP and node

[root@server2 ~]# curl 10.244.2.9  # the IP of the nginx pod

[root@server2 ~]# kubectl run demo --image=busyboxplus -it

[root@server2 ~]# kubectl get pod

[root@server2 ~]# kubectl get pod -o wide

[root@server2 ~]# kubectl attach demo -it  # demo is the pod name; -c selects a container inside the pod

/ # curl 10.244.2.9

[root@server2 ~]# kubectl delete pod nginx

[root@server2 ~]# kubectl logs demo  # view the logs

[root@server2 ~]# kubectl create deployment -h

[root@server2 ~]# kubectl create deployment nginx --image=myapp:v1  # create pods through a controller, which can manage several replicas

[root@server2 ~]# kubectl get all

[root@server2 ~]# kubectl delete pod nginx-67f9d9c97f-84tl4

[root@server2 ~]# kubectl get pod  # the Deployment and its rs are unchanged; a replacement pod with a new ID was created

[root@server2 ~]# kubectl scale deployment --replicas=2 nginx  # scale out; image pulls are faster with an internal registry

[root@server2 ~]# kubectl get pod

[root@server2 ~]# kubectl get pod -o wide

[root@server2 ~]# kubectl scale deployment --replicas=6 nginx  # scale to 6 replicas

[root@server2 ~]# kubectl scale deployment --replicas=3 nginx  # scale down to 3 replicas; the most recently started pods are deleted first

[root@server2 ~]# kubectl get pod -o wide

[root@server2 ~]# kubectl delete pod nginx-67f9d9c97f-gtb5m

[root@server2 ~]# kubectl get pod  # after one pod is deleted, another is created automatically to keep 3 replicas

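%%%% Expose the Deployment so that clients can reach it, with load balancing

[root@server2 ~]# kubectl expose deployment nginx --port=80  # expose the nginx Deployment

[root@server2 ~]# kubectl get svc

[root@server2 ~]# curl 10.100.83.124  # the ClusterIP of the nginx Service

[root@server2 ~]# curl 10.100.83.124/hostname.html  # requests are load balanced

[root@server2 ~]# kubectl describe svc nginx  # Endpoints lists three entries: the IP addresses of the pods

[root@server2 ~]# kubectl get pod -o wide  # compare with the pod IP addresses

[root@server2 ~]# kubectl describe svc nginx

[root@server2 ~]# curl 10.100.83.124  # the IP shown by the previous command

[root@server2 ~]# curl 10.100.83.124/hostname.html  # load balanced, with health checking: failed pods are removed and new pods are added

% A Service is reachable only inside the cluster by default; now make it reachable from outside as well

[root@server2 ~]# kubectl edit svc nginx

Change to: type: NodePort

[root@server2 ~]# kubectl get svc  # the type is now NodePort

[root@zhenji]# curl 172.25.0.2:32208/hostname.html  # 32208 is a dynamically assigned port; the access pattern is NodeIP:NodePort

[root@zhenji]# curl 172.25.0.3:32208/hostname.html

[root@zhenji]# curl 172.25.0.4:32208/hostname.html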
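The port 32208 in the curl commands above was chosen by Kubernetes. By default NodePort values are allocated from the range 30000-32767 (the kube-apiserver flag `--service-node-port-range` can change it). The sketch below only illustrates that range check; `nodeport_range_check` is a hypothetical helper name, not a kubectl feature.

```shell
#!/bin/sh
# Hypothetical helper (not part of kubectl): report whether a port falls
# inside the default NodePort range, 30000-32767.
nodeport_range_check() {
  if [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]; then
    echo "inside"
  else
    echo "outside"
  fi
}

echo "32208 is $(nodeport_range_check 32208) the default NodePort range"
echo "80 is $(nodeport_range_check 80) the default NodePort range"
```

A port such as 80 cannot be requested as a nodePort unless the cluster administrator has widened the range.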

% Updating

[root@server2 ~]# kubectl set -h

[root@server2 ~]# kubectl set image -h

[root@server2 ~]# kubectl get all

[root@server2 ~]# kubectl get pod

[root@server2 ~]# kubectl describe pod nginx-67f9d9c97f-4qcbc  # inspect the pod

[root@server2 ~]# kubectl set image deployment nginx myapp=myapp:v2  # change the image to myapp:v2

[root@server2 ~]# kubectl rollout history deployment nginx  # show the update history

[root@server2 ~]# kubectl get pod  # after the update the rs name has changed

[root@server2 ~]# kubectl rollout undo deployment nginx --to-revision=1  # roll back to revision 1

[root@server2 ~]# kubectl rollout history deployment nginx  # view the revision history

Resource manifests

[root@server2 ~]# kubectl delete svc nginx

[root@server2 ~]# kubectl delete deployments.apps nginx

[root@server2 ~]# kubectl get all  # confirm the resources were released

[root@server2 ~]# kubectl api-versions  # list the available API versions

[root@server2 ~]# kubectl explain pod.apiVersion

[root@server2 ~]# kubectl explain pod.metadata

[root@server2 ~]# vim pod.yml

apiVersion: v1
kind: Pod  # kind: the resource type
metadata:  # metadata
  name: nginx
  namespace: default
spec:
  containers:
  - name: nginx
    image: myapp:v1
    imagePullPolicy: IfNotPresent

[root@server2 ~]# kubectl apply -f pod.yml  # apply the manifest

[root@server2 ~]# kubectl get pod

[root@server2 ~]# kubectl describe pod nginx

#创建一个控制器

[root@server2 ~]# kubectl explain deployment

[root@server2 ~]# vim pod.yml

[root@server2 ~]# cat pod.yml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx

namespace: default

spec:

replicas: 3

selector:

matchLabels:

run: nginx

template:

metadata:

labels:

run: nginx

spec:

containers:

- name: nginx

image: myapp:v1

imagePullPolicy: IfNotPresent

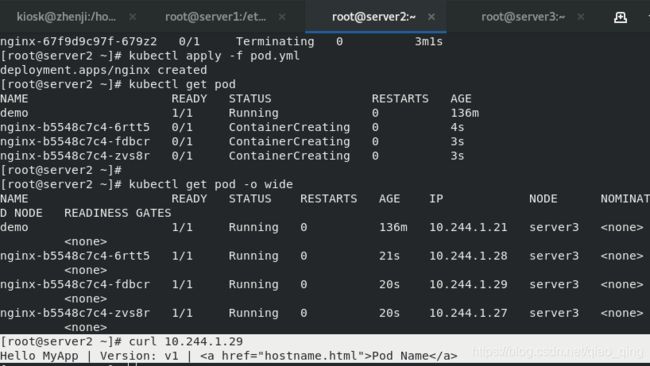

[root@server2 ~]# kubectl apply -f pod.yml #执行

[root@server2 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demo 1/1 Running 0 128m 10.244.1.21 server3

nginx 1/1 Running 0 63m 10.244.1.22 server3

nginx-b5548c7c4-pg4ch 1/1 Running 0 45m 10.244.1.25 server3

nginx-b5548c7c4-rjtb6 1/1 Running 0 45m 10.244.1.24 server3

nginx-b5548c7c4-zp5l4 1/1 Running 0 45m 10.244.1.23 server3

[root@server2 ~]# curl 10.244.1.24

Hello MyApp | Version: v1 | Pod Name

[root@server2 ~]# kubectl delete -f pod.yml

deployment.apps "nginx" deleted

[root@server2 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

demo 1/1 Running 0 129m

nginx 1/1 Running 0 64m

[root@server2 ~]# kubectl get pod demo -o yaml  # shows the full pod definition

[root@server2 ~]# kubectl create deployment nginx --image=myapp:v1

[root@server2 ~]# kubectl get all

[root@server2 ~]# kubectl get deployments.apps nginx -o yaml  # output as YAML; usable as a template

[root@server2 ~]# kubectl delete deployments.apps nginx

[root@server2 ~]# kubectl apply -f pod.yml

[root@server2 ~]# kubectl get pod

[root@server2 ~]# kubectl get pod -o wide

[root@server2 ~]# curl 10.244.1.29

Hello MyApp | Version: v1 | Pod Name

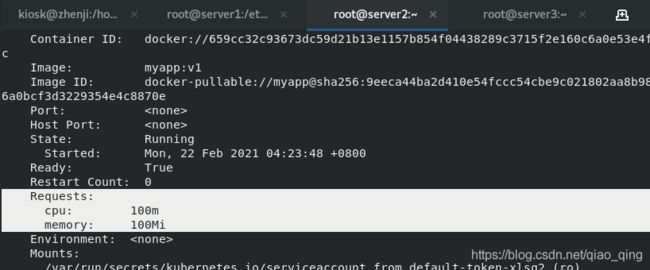

%% Resource constraints: limits set the upper bound, requests set the guaranteed minimum

[root@server2 ~]# kubectl -n kube-system describe pod coredns-7f89b7bc75-8pg2s  # look at Requests: they govern CPU and memory

[root@server2 ~]# vim pod.yml

[root@server2 ~]# cat pod.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      run: nginx
  template:
    metadata:
      labels:
        run: nginx
    spec:
      containers:
      - name: nginx
        image: myapp:v1
        imagePullPolicy: IfNotPresent
        resources:
          requests:
            cpu: 100m
            memory: 100Mi  # at least 70Mi

[root@server2 ~]# kubectl apply -f pod.yml

deployment.apps/nginx configured

[root@server2 ~]# kubectl get pod

[root@server2 ~]# kubectl describe pod nginx-66f766df7c-gkd89  # check: the values match the manifest

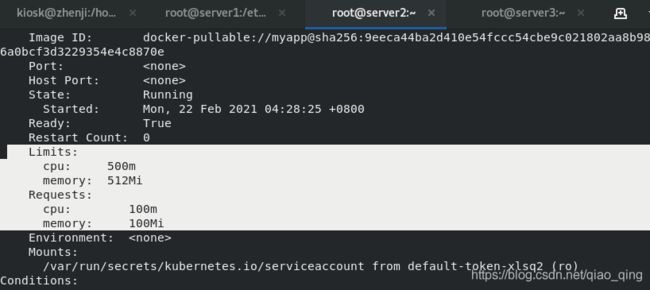

[root@server2 ~]# kubectl explain pod.spec.containers.resources  # see limits, which cap CPU and memory

[root@server2 ~]# vim pod.yml

[root@server2 ~]# cat pod.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      run: nginx
  template:
    metadata:
      labels:
        run: nginx
    spec:
      containers:
      - name: nginx
        image: myapp:v1
        imagePullPolicy: IfNotPresent
        resources:
          requests:
            cpu: 100m
            memory: 100Mi  # at least 70Mi
          limits:
            cpu: 0.5
            memory: 512Mi

[root@server2 ~]# kubectl apply -f pod.yml

[root@server2 ~]# kubectl get pod

[root@server2 ~]# kubectl describe pod nginx-79cc587f-qt7bt

Limits:

cpu: 500m

memory: 512Mi

Requests:

cpu: 100m

memory: 100Mi
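The manifest sets `cpu: 0.5`, yet `kubectl describe` reports `500m`: one CPU equals 1000 millicores, so the two notations are the same quantity. Memory with the `Mi` suffix is counted in mebibytes (2^20 bytes). A minimal sketch of these conversions follows; the helper names `cpu_to_millicores` and `mi_to_bytes` are made up for illustration and are not part of kubectl.

```shell
#!/bin/sh
# Hypothetical helpers for illustration; they are not part of kubectl.

# Convert a CPU quantity ("0.5", "2", "100m") to millicores.
cpu_to_millicores() {
  case "$1" in
    *m) echo "${1%m}" ;;                                          # already in millicores
    *)  awk -v c="$1" 'BEGIN { printf "%d\n", c * 1000 + 0.5 }' ;; # whole or fractional CPUs
  esac
}

# Convert a memory quantity written with the Mi suffix to bytes.
mi_to_bytes() {
  echo $(( ${1%Mi} * 1048576 ))
}

echo "cpu 0.5      = $(cpu_to_millicores 0.5)m"
echo "cpu 100m     = $(cpu_to_millicores 100m)m"
echo "memory 512Mi = $(mi_to_bytes 512Mi) bytes"
```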

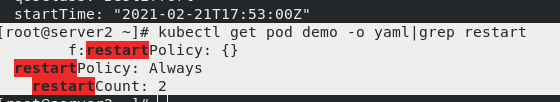

[root@server2 ~]# kubectl get pod demo -o yaml|grep restart  # by default the container is always restarted automatically

[root@server2 ~]# kubectl get nodes --show-labels  # list the nodes with their labels

NAME STATUS ROLES AGE VERSION LABELS

server2 Ready control-plane,master 22d v1.20.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=server2,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=

server3 Ready 22d v1.20.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=server3,kubernetes.io/os=linux

[root@server2 ~]# vim pod.yml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx

namespace: default

spec:

replicas: 3#副本

selector:

matchLabels:

run: nginx#标签,自己定义的

template:

metadata:

labels:

run: nginx#和上面标签保持一致,所管理的标签

spec:

nodeSelector:#锁定节点

kubernetes.io/hostname: server3

#nodeName: server3#方法2,简单

hostNetwork: true

containers:

- name: nginx

image: myapp:v1

imagePullPolicy: IfNotPresent

resources:

requests:

cpu: 100m

memory: 100Mi

limits:

cpu: 0.5

memory: 512Mi

[root@server2 ~]# kubectl apply -f pod.yml #执行

nodeSelector:#锁定节点

kubernetes.io/hostname: server3

#nodeName: server3#方法2,简单

[root@server2 ~]# kubectl get pod -o wide#查看节点在server3上

[root@server2 ~]# docker pull game2048#是共有仓库中的,可以直接拉取。而私有仓库需要认证spec.nodeSelector

[root@server2 ~]# kubectl apply -f pod.yml #执行

hostNetwork: true

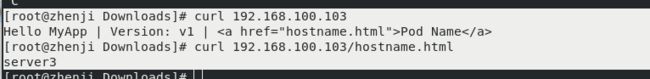

[root@zhenji]# curl 192.168.100.103

Hello MyApp | Version: v1 | Pod Name

[root@zhenji]# curl 192.168.100.103/hostname.html

server3

自主式清单编写,用于在同一个pod内创建多个容器

[root@server2 ~]# vim pod.yml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx

namespace: default

spec:

replicas: 3#副本

selector:

matchLabels:

run: nginx#标签,自己定义的

template:

metadata:

labels:

run: nginx#和上面标签保持一致,所管理的标签

spec:

#nodeSelector:#锁定节点

# kubernetes.io/hostname: server3

#nodeName: server3#方法2,简单

#hostNetwork: true

containers:

- name: nginx

image: myapp:v1

imagePullPolicy: IfNotPresent

resources:

requests:

cpu: 100m

memory: 100Mi

limits:

cpu: 0.5

memory: 512Mi

- name: busybox

image: busybox

imagePullPolicy: IfNotPresent

stdin: true

tty: true

添加 - name: busybox

image: busybox

imagePullPolicy: IfNotPresent

stdin: true

tty: true

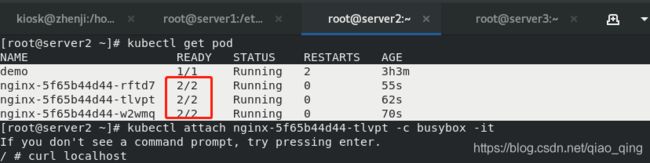

[root@server2 ~]# kubectl get pod#2/2说明有两个容器

[root@server2 ~]# kubectl attach nginx-5f65b44d44-tlvpt -c busybox -it#-c指定容器busybox

[root@server2 ~]# kubectl logs nginx-5f65b44d44-tlvpt nginx #可以排错

[root@server2 ~]# kubectl describe pod nginx-5f65b44d44-tlvpt

[root@server2 ~]# vim pod.yml

注释 #- name: busybox

# image: busybox

# imagePullPolicy: IfNotPresent

# stdin: true

# tty: true

Labels

[root@server2 ~]# kubectl label nodes server3 app=nginx  # add a label

[root@server2 ~]# kubectl get nodes server3 --show-labels

NAME STATUS ROLES AGE VERSION LABELS

server3 Ready 22d v1.20.2 app=nginx,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=server3,kubernetes.io/os=linux

[root@server2 ~]# kubectl label nodes server3 app=myapp  # changing an existing label fails without --overwrite

error: 'app' already has a value (nginx), and --overwrite is false

[root@server2 ~]# kubectl label nodes server3 app=myapp --overwrite  # --overwrite replaces the existing value

node/server3 labeled

[root@server2 ~]# kubectl get nodes server3 --show-labels

NAME STATUS ROLES AGE VERSION LABELS

server3 Ready 22d v1.20.2 app=myapp,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=server3,kubernetes.io/os=linux

[root@server2 ~]# kubectl label nodes server3 app-  # remove the label

[root@server2 ~]# kubectl label pod demo run=demo2

error: 'run' already has a value (demo), and --overwrite is false

[root@server2 ~]# kubectl label pod demo run=demo2 --overwrite

pod/demo labeled

[root@server2 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

demo 1/1 Running 2 3h24m run=demo2

[root@server2 ~]# kubectl get pod -l run  # list the pods that carry the run label

[root@server2 ~]# kubectl get pod -L run

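III. Pod lifecycle

% See the official Kubernetes site, https://kubernetes.io/docs/setup/, for the pod lifecycle

# Init containers do not support readiness probes; they run one at a time, in order

# The container environment is initialized first; each init container must finish before the next one starts, and only then do the application containers start

# What can init containers do?

1. Configuring pods and containers

[root@server2 ~]# docker images

registry.aliyuncs.com/google_containers/pause  # the base environment of every pod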

[root@server2 ~]# vim live.yml

[root@server2 ~]# cat live.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness
  name: liveness-exec
spec:
  containers:
  - name: liveness
    image: k8s.gcr.io/busybox
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5
      periodSeconds: 5

[root@server2 ~]# kubectl create -f live.yml

pod/liveness-exec created

[root@server2 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

demo 1/1 Running 2 3h51m

liveness-exec 1/1 Running 0 30s

[root@server2 ~]# kubectl describe pod liveness-exec  # follow the probe events

[root@server2 ~]# kubectl get pod  # the RESTARTS count keeps increasing

NAME READY STATUS RESTARTS AGE

demo 1/1 Running 2 3h53m

liveness-exec 1/1 Running 1 2m5s
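The restart seen above follows from the probe settings: the container deletes /tmp/healthy after 30 seconds, the probe runs every 5 seconds after a 5-second initial delay, and the kubelet restarts the container once the probe has failed `failureThreshold` times in a row (3 when the manifest does not set it). The sketch below is a simplified timing model under those assumptions, not the kubelet's actual algorithm; it ignores jitter and the termination grace period, and `liveness_restart_time` is a hypothetical helper.

```shell
#!/bin/sh
# Simplified model (assumption): probes fire at initialDelay, initialDelay+period, ...
# and a probe fails once the elapsed time has reached the moment the file is removed.
# Prints the time, in seconds, of the probe that reaches the failure threshold.
liveness_restart_time() {
  lr_t=$1          # initialDelaySeconds
  lr_period=$2     # periodSeconds
  lr_until=$3      # second at which /tmp/healthy disappears
  lr_threshold=$4  # failureThreshold
  lr_fails=0
  while :; do
    if [ "$lr_t" -ge "$lr_until" ]; then
      lr_fails=$((lr_fails + 1))   # the file is gone: the probe fails
    else
      lr_fails=0                   # the file exists: the probe succeeds
    fi
    if [ "$lr_fails" -ge "$lr_threshold" ]; then
      echo "$lr_t"
      return 0
    fi
    lr_t=$((lr_t + lr_period))
  done
}

echo "restart triggered at about t=$(liveness_restart_time 5 5 30 3)s"
```

In this model the third consecutive failure lands at 40 seconds, which is consistent with RESTARTS still being 0 at 30s and 1 at 2m5s in the output above.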

[root@server2 ~]# vim live.yml

[root@server2 ~]# cat live.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness
  name: liveness-exec
spec:
  containers:
  - name: liveness
    image: myapp:v1
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 2
      periodSeconds: 3
    readinessProbe:
      httpGet:
        path: /hostname.html
        port: 80
      initialDelaySeconds: 3
      periodSeconds: 3

[root@server2 ~]# kubectl delete -f live.yml

[root@server2 ~]# kubectl create -f live.yml

[root@server2 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

demo 1/1 Running 2 4h1m

liveness-exec 1/1 Running 0 2m4s

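# liveness-exec reports READY 1/1 only while the readiness path /hostname.html exists

# The probe must target the port the application actually listens on (port: 80 here). Probing a port nothing listens on, such as 8080, makes the liveness check fail and the container restart over and over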

2. Init containers

% Official init container documentation: https://kubernetes.io/zh/docs/concepts/workloads/pods/init-containers/

[root@server2 ~]# vim service.yml

[root@server2 ~]# cat service.yml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---

[root@server2 ~]# mv init.yml service.yml

[root@server2 ~]# kubectl create -f service.yml

[root@server2 ~]# kubectl describe svc myservice

Name: myservice

Namespace: default

Labels:

Annotations:

Selector:

Type: ClusterIP

IP Families:

IP: 10.106.68.120

IPs: 10.106.68.120

Port: 80/TCP

TargetPort: 80/TCP

Endpoints:

Session Affinity: None

Events:

[root@server2 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

demo 1/1 Running 4 14h

liveness-exec 1/1 Running 0 10h

[root@server2 ~]# kubectl attach demo -it

Defaulting container name to demo.

Use 'kubectl describe pod/demo -n default' to see all of the containers in this pod.

If you don't see a command prompt, try pressing enter.

/ # nslookup myservice  # the name resolves
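The short name `myservice` resolves from inside a pod because the cluster DNS expands it to the fully qualified form `<service>.<namespace>.svc.<cluster domain>`. A small sketch of how that name is assembled follows; `service_fqdn` is a hypothetical helper, and `cluster.local` is assumed as the cluster domain because it is the one used throughout this article.

```shell
#!/bin/sh
# Hypothetical helper: build the DNS name of a Service.
# usage: service_fqdn NAME [NAMESPACE] [CLUSTER_DOMAIN]
service_fqdn() {
  echo "$1.${2:-default}.svc.${3:-cluster.local}"
}

service_fqdn myservice             # Service in the default namespace
service_fqdn kube-dns kube-system  # the cluster DNS Service itself
```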

[root@server2 ~]# vim init.yml

[root@server2 ~]# cat init.yml

apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
  - name: myapp-container
    image: myapp:v1
  initContainers:
  - name: init-myservice
    image: busybox
    command: ['sh', '-c', "until nslookup myservice.default.svc.cluster.local; do echo waiting for myservice; sleep 2; done"]

[root@server2 ~]# kubectl delete -f service.yml

[root@server2 ~]# kubectl create -f init.yml  # create the pod with its init container

[root@server2 ~]# kubectl get pod

[root@server2 ~]# kubectl logs myapp-pod init-myservice  # the log shows errors: the name does not resolve yet, so the init container keeps retrying

[root@server2 ~]# kubectl create -f service.yml

[root@server2 ~]# kubectl get pod  # myservice can now be resolved

[root@server2 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

demo 1/1 Running 7 15h

liveness-exec 1/1 Running 0 11h

myapp-pod 0/1 Init:0/1 0 7m55s

[root@server2 ~]# kubectl delete -f service.yml

service "myservice" deleted

[root@server2 ~]# kubectl delete -f init.yml

IV. Managing pods with controllers

1. ReplicaSet (rs) controller deployment

% Official documentation: https://kubernetes.io/zh/docs/concepts/workloads/controllers/replicaset/

[root@server2 ~]# cat rs.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: myapp:v1

[root@server2 ~]# kubectl apply -f rs.yml

deployment.apps/deployment created

[root@server2 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

demo 1/1 Running 8 16h

deployment-6456d7c676-4zq72 1/1 Running 0 26s

deployment-6456d7c676-8cn4q 1/1 Running 0 26s

deployment-6456d7c676-g77z8 1/1 Running 0 26s

liveness-exec 1/1 Running 1 12h

[root@server2 ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

deployment-6456d7c676 3 3 3 32s

[root@server2 ~]# vim rs.yml  # set replicas: 6

[root@server2 ~]# kubectl apply -f rs.yml

[root@server2 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

demo 1/1 Running 8 16h

deployment-6456d7c676-2zlmx 1/1 Running 0 26s

deployment-6456d7c676-67tzx 1/1 Running 0 26s

deployment-6456d7c676-8cn4q 0/1 Terminating 0 4m14s

deployment-6456d7c676-dj45h 1/1 Running 0 26s

deployment-6456d7c676-g77z8 0/1 Terminating 0 4m14s

deployment-6456d7c676-m8sr7 1/1 Running 0 26s

deployment-6456d7c676-m8vmm 1/1 Running 0 26s

deployment-6456d7c676-rwdjr 0/1 Terminating 0 54s

deployment-6456d7c676-ss62r 1/1 Running 0 26s

liveness-exec 1/1 Running 1 12h

[root@server2 ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

deployment-6456d7c676 6 6 6 99s

[root@server2 ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

demo 1/1 Running 8 16h run=demo2

deployment-6456d7c676-2zlmx 1/1 Running 0 4m10s app=nginx,pod-template-hash=6456d7c676

deployment-6456d7c676-m8vmm 1/1 Running 0 4m10s app=nginx,pod-template-hash=6456d7c676

deployment-6456d7c676-ss62r 1/1 Running 0 4m10s app=nginx,pod-template-hash=6456d7c676

liveness-exec 1/1 Running 1 12h test=liveness

[root@server2 ~]# kubectl label pod deployment-6456d7c676-2zlmx app=myapp

error: 'app' already has a value (nginx), and --overwrite is false

[root@server2 ~]# kubectl label pod deployment-6456d7c676-2zlmx app=myapp --overwrite

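[root@server2 ~]# kubectl get pod -L app  # four pods now: one is labeled myapp and one nginx pod is new. The controller matches pods by label, so when fewer than 3 nginx pods remain it creates one to get back to 3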

NAME READY STATUS RESTARTS AGE APP

demo 1/1 Running 8 16h

deployment-6456d7c676-2zlmx 1/1 Running 0 7m33s myapp

deployment-6456d7c676-hjpvm 1/1 Running 0 43s nginx

deployment-6456d7c676-m8vmm 1/1 Running 0 7m33s nginx

deployment-6456d7c676-ss62r 1/1 Running 0 7m33s nginx

liveness-exec 1/1 Running 1 12h
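The extra pod appears because the controller counts only the pods whose labels match its selector and creates whatever is missing. The sketch below imitates that count for the situation above (3 desired replicas, one pod relabeled to `app=myapp`). It is an illustration of the idea, not the controller's real code, and `pods_to_create` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: given the desired replica count, the selector, and the
# label of each existing pod, print how many pods the controller would create.
# usage: pods_to_create DESIRED SELECTOR LABEL...
pods_to_create() {
  ptc_desired=$1
  ptc_selector=$2
  shift 2
  ptc_matching=0
  for ptc_label in "$@"; do
    if [ "$ptc_label" = "$ptc_selector" ]; then
      ptc_matching=$((ptc_matching + 1))
    fi
  done
  ptc_missing=$((ptc_desired - ptc_matching))
  if [ "$ptc_missing" -lt 0 ]; then
    ptc_missing=0   # too many matching pods: nothing to create
  fi
  echo "$ptc_missing"
}

# Three pods exist, but one was relabeled from app=nginx to app=myapp.
echo "pods to create: $(pods_to_create 3 app=nginx app=myapp app=nginx app=nginx)"
```

The relabeled pod keeps running but is no longer counted, which is why it has to be deleted by hand later.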

[root@server2 ~]# vim rs.yml

image: myapp:v2

[root@server2 ~]# kubectl apply -f rs.yml

deployment.apps/deployment configured

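[root@server2 ~]# kubectl get pod -L app  # the 3 nginx pods are rolled over to the new image; pods are matched by label, so the pod relabeled myapp is no longer managed

[root@server2 ~]# kubectl delete pod deployment-6456d7c676-2zlmx  # the myapp pod can be deleted by hand

[root@server2 ~]# kubectl get rs  # the original v1 rs is scaled down to 0 and a new rs is created for v2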

NAME DESIRED CURRENT READY AGE

deployment-6456d7c676 0 0 0 12m

deployment-6d4f5bf58f 3 3 3 2m32s

[root@server2 ~]# vim rs.yml

image: myapp:v1

[root@server2 ~]# kubectl apply -f rs.yml

[root@server2 ~]# kubectl get rs  # the v1 rs is active again

NAME DESIRED CURRENT READY AGE

deployment-6456d7c676 3 3 3 13m

deployment-6d4f5bf58f 0 0 0 3m24s

[root@server2 ~]# kubectl expose deployment deployment --port=80  # expose the port

service/deployment exposed

[root@server2 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

deployment ClusterIP 10.98.179.252 80/TCP 4s

kubernetes ClusterIP 10.96.0.1 443/TCP 22d

[root@server2 ~]# kubectl describe svc deployment

Selector: app=nginx  # the label selector

[root@server2 ~]# kubectl get svc deployment -o yaml

selector:

app: nginx

[root@server2 ~]# kubectl label pod deployment-6456d7c676-cpxzp app=myapp

error: 'app' already has a value (nginx), and --overwrite is false

[root@server2 ~]# kubectl label pod deployment-6456d7c676-cpxzp app=myapp --overwrite

pod/deployment-6456d7c676-cpxzp labeled

[root@server2 ~]# kubectl get pod -L app

NAME READY STATUS RESTARTS AGE APP

demo 1/1 Running 8 17h

deployment-6456d7c676-cpxzp 1/1 Running 0 58m myapp

deployment-6456d7c676-mdf5g 1/1 Running 0 12s nginx

deployment-6456d7c676-q9gd4 1/1 Running 0 59m nginx

deployment-6456d7c676-zx7gq 1/1 Running 0 58m nginx

liveness-exec 1/1 Running 1 13h

[root@server2 ~]# kubectl describe svc deployment

Endpoints: 10.244.1.67:80,10.244.1.69:80,10.244.1.70:80  # still 3 endpoints; the myapp pod is not among them

[root@server2 ~]# kubectl get pod -o wide

[root@server2 ~]# kubectl edit svc deployment

app: myapp

service/deployment edited

[root@server2 ~]# kubectl describe svc deployment  # endpoints are selected by label

Endpoints: 10.244.1.68:80

[root@server2 ~]# kubectl delete -f rs.yml

deployment.apps "deployment" deleted

[root@server2 ~]# kubectl delete svc deployment

[root@server2 ~]# kubectl get all

[root@server2 ~]# kubectl delete pod/deployment-6456d7c676-cpxzp  # the myapp pod has to be deleted by hand

2. DaemonSet controller

[root@server2 ~]# cat daemonset.yml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: daemonset-example
  labels:
    k8s-app: zabbix-agent
spec:
  selector:
    matchLabels:
      name: zabbix-agent
  template:
    metadata:
      labels:
        name: zabbix-agent
    spec:
      containers:
      - name: zabbix-agent
        image: zabbix-agent

[root@server1 ~]# docker pull zabbix/zabbix-agent

[root@server1 ~]# docker tag zabbix/zabbix-agent:latest reg.westos.org/library/zabbix-agent:latest

[root@server1 ~]# docker push reg.westos.org/library/zabbix-agent:latest

[root@server2 ~]# kubectl apply -f daemonset.yml

[root@server2 ~]# kubectl get pod  # one pod per node, placed automatically

[root@server2 ~]# kubectl delete -f daemonset.yml

3. Job

[root@server2 ~]# cat job.yml

apiVersion: batch/v1
kind: Job
metadata:
  name: pi
spec:
  template:
    spec:
      containers:
      - name: pi
        image: perl
        command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]
      restartPolicy: Never
  backoffLimit: 4

[root@server1 ~]# docker pull perl

[root@server1 ~]# docker tag perl:latest reg.westos.org/library/perl:latest

[root@server1 ~]# docker push reg.westos.org/library/perl:latest

[root@server2 ~]# kubectl delete -f daemonset.yml

[root@server2 ~]# kubectl create -f job.yml

[root@server2 ~]# kubectl get pod  # a Job is for batch computation and runs once

[root@server2 ~]# kubectl delete -f job.yml

4. CronJob

[root@server2 ~]# cat cronjob.yml

apiVersion: batch/v1beta1
kind: CronJob
metadata:
  name: hello
spec:
  schedule: "*/1 * * * *"
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busyboxplus
            imagePullPolicy: IfNotPresent
            args:
            - /bin/sh
            - -c
            - date; echo Hello from the Kubernetes cluster
          restartPolicy: OnFailure
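The schedule `*/1 * * * *` uses standard cron syntax: in the minute field, `*/N` matches every minute that is a multiple of N, so `*/1` fires every minute. The sketch below shows only that step rule for the minute field; `cron_step_matches` is a hypothetical helper and does not parse full cron expressions.

```shell
#!/bin/sh
# Hypothetical helper: does minute MINUTE match the cron minute field "*/STEP"?
# usage: cron_step_matches STEP MINUTE   (pass plain integers, e.g. 7 rather than 07)
cron_step_matches() {
  if [ $(( $2 % $1 )) -eq 0 ]; then
    echo "yes"
  else
    echo "no"
  fi
}

echo "*/1 at minute 37: $(cron_step_matches 1 37)"
echo "*/5 at minute 37: $(cron_step_matches 5 37)"
echo "*/5 at minute 35: $(cron_step_matches 5 35)"
```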

[root@server2 ~]# kubectl create -f cronjob.yml

[root@server2 ~]# kubectl get all

[root@server2 ~]# kubectl get pod  # a CronJob is for periodic batch work; here it runs once a minute

[root@server2 ~]# kubectl logs hello

[root@server2 ~]# kubectl delete -f cronjob.yml

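V. Service

1. k8s network communication (a common interview topic: how the communication works)

k8s plugs in third-party network plugins through the CNI interface to implement networking. Popular plugins currently include flannel and calico.

CNI configuration file location: # cat /etc/cni/net.d/10-flannel.conflist

The plugins rely on the following approaches:

Virtual bridge: virtual network interfaces, with multiple containers communicating through a shared virtual interface.

Multiplexing: MacVLAN, with multiple containers sharing one physical NIC.

Hardware switching: SR-IOV, where one physical NIC presents multiple virtual interfaces; this gives the best performance.

Container-to-container: containers in the same pod communicate over lo.

Pod-to-pod:

On the same node, packets are forwarded through the cni bridge.

Across nodes, communication relies on the network plugin.

Pod-to-Service: implemented with iptables or ipvs. ipvs does not fully replace iptables: ipvs handles only the load balancing, so iptables is still needed for tasks such as NAT.

Pod to external network: iptables MASQUERADE.

Service to clients outside the cluster: ingress, NodePort, LoadBalancer.

% Enable ipvs mode for kube-proxy

[root@server3 ~]# yum install -y ipvsadm  # shown on server3; install it on every node

[root@server2 ~]# yum install -y ipvsadm

[root@server2 ~]# kubectl edit cm kube-proxy -n kube-system  # switch the mode to ipvs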

mode: "ipvs"

[root@server2 ~]# kubectl get pod -n kube-system |grep kube-proxy

kube-proxy-4vnhg 1/1 Running 5 22d

kube-proxy-tgbxj 1/1 Running 4 22d

[root@server2 ~]# kubectl get pod -n kube-system |grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'  # delete the kube-proxy pods so they are recreated with the new mode

[root@server2 ~]# kubectl get pod -n kube-system |grep kube-proxy  # the pods have been recreated

kube-proxy-h5qvh 1/1 Running 0 27s

kube-proxy-ltvsm 1/1 Running 0 93s

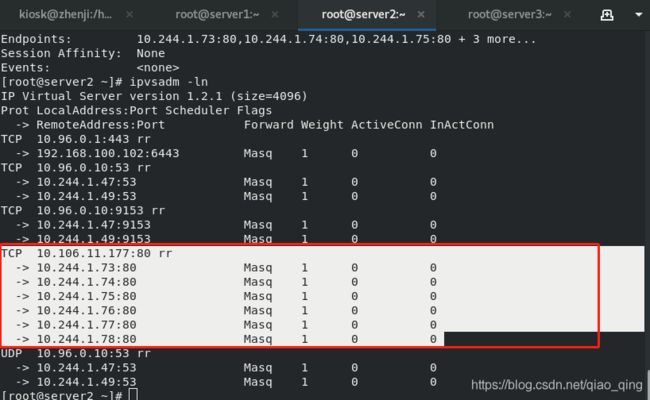

[root@server2 ~]# kubectl expose deployment deployment --port=80

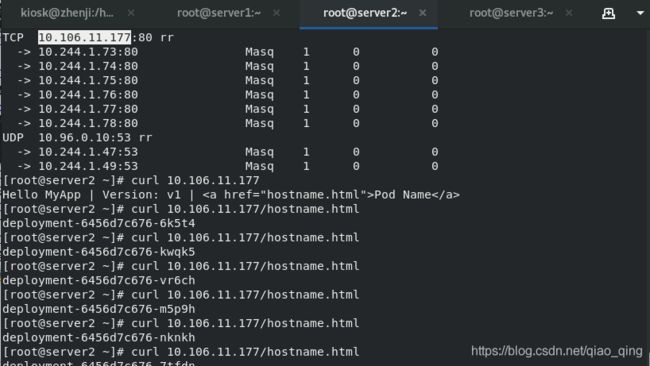

[root@server2 ~]# ipvsadm -ln

[root@server2 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

deployment ClusterIP 10.106.11.177 80/TCP 4s

kubernetes ClusterIP 10.96.0.1 443/TCP 22d

[root@server2 ~]# vim rs.yml  # set replicas to 6; ipvsadm -ln then shows 6 real servers

[root@server2 ~]# ipvsadm -ln

# Load balancing

[root@server2 ~]# curl 10.106.11.177

Hello MyApp | Version: v1 | Pod Name

[root@server2 ~]# curl 10.106.11.177/hostname.html

[root@server2 ~]# ip addr  # ipvs mode creates a virtual interface, kube-ipvs0, and assigns the Service IPs to it

[root@server2 ~]# vim demo.yml

[root@server2 ~]# cat demo.yml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: myapp
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo2
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: myapp:v2

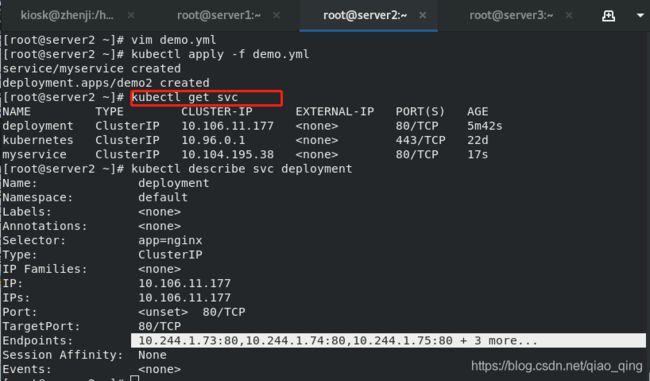

[root@server2 ~]# kubectl apply -f demo.yml

service/myservice created

deployment.apps/demo2 created

[root@server2 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

deployment ClusterIP 10.106.11.177 80/TCP 5m42s

kubernetes ClusterIP 10.96.0.1 443/TCP 22d

myservice ClusterIP 10.104.195.38 80/TCP 17s

[root@server2 ~]# kubectl describe svc deployment

IP: 10.106.11.177

Endpoints: 10.244.1.73:80,10.244.1.74:80,10.244.1.75:80 + 3 more...

[root@server2 ~]# ipvsadm -ln  # the output includes the Service IP 10.106.11.177

[root@server2 ~]# cd /etc/cni/net.d/  # location of the CNI configuration

[root@server2 net.d]# ls

10-flannel.conflist

[root@server2 net.d]# ps ax|grep flanneld  # the flanneld process

3448 pts/0 S+ 0:00 grep --color=auto flanneld

6023 ? Ssl 0:08 /opt/bin/flanneld --ip-masq --kube-subnet-mgr

[root@server2 net.d]# cd /run/flannel/

[root@server2 flannel]# ls

subnet.env

[root@server2 flannel]# cat subnet.env

FLANNEL_NETWORK=10.244.0.0/16

FLANNEL_SUBNET=10.244.0.1/24

FLANNEL_MTU=1450

FLANNEL_IPMASQ=true
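`FLANNEL_MTU=1450` reflects the cost of VXLAN encapsulation. Assuming the physical interface uses the common Ethernet MTU of 1500 (the article does not state eth0's MTU), each packet gives up 50 bytes to the outer headers: 14 (Ethernet) + 20 (IPv4) + 8 (UDP) + 8 (VXLAN). The sketch below just does that arithmetic; the helper names are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helpers for illustration only.

# Bytes added by VXLAN encapsulation over IPv4:
# outer Ethernet (14) + outer IPv4 (20) + UDP (8) + VXLAN header (8).
vxlan_overhead() {
  echo $((14 + 20 + 8 + 8))
}

# MTU left for pod traffic on a link with the given MTU.
vxlan_inner_mtu() {
  echo $(( $1 - $(vxlan_overhead) ))
}

LINK_MTU=1500   # assumption: standard Ethernet MTU on eth0
echo "VXLAN overhead: $(vxlan_overhead) bytes"
echo "inner MTU on a $LINK_MTU-byte link: $(vxlan_inner_mtu "$LINK_MTU")"
```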

[root@server2 flannel]# ip addr  # the flannel address is assigned to the flannel.1 interface

[root@server2 flannel]# ip n  # the neighbor entries are cached

192.168.100.103 dev eth0 lladdr 52:54:00:c7:72:f8 REACHABLE

192.168.100.1 dev eth0 lladdr f8:6e:ee:cb:df:75 STALE

169.254.169.254 dev eth0 FAILED

10.244.1.0 dev flannel.1 lladdr ea:85:e2:25:4e:bc PERMANENT

192.168.100.141 dev eth0 lladdr d0:bf:9c:82:8b:f4 REACHABLE

fe80::1 dev eth0 lladdr f8:6e:ee:cb:df:75 router STALE

2.Service的几种服务方式

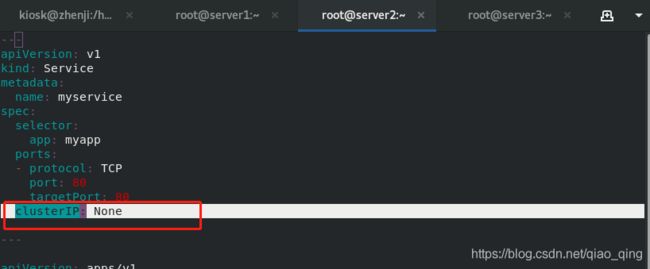

1)无头服务,不需要ip,直接用域名解析myservice.default.svc.cluster.local

[root@server2 ~]# kubectl delete -f demo.yml

service "myservice" deleted

deployment.apps "demo2" deleted

[root@server2 ~]# vim demo.yml

clusterIP: None

[root@server2 ~]# kubectl apply -f demo.yml

[root@server2 ~]# kubectl get svc  # myservice has no cluster IP

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

deployment ClusterIP 10.106.11.177 80/TCP 25m

kubernetes ClusterIP 10.96.0.1 443/TCP 22d

myservice ClusterIP None 80/TCP 35s

[root@server2 ~]# kubectl delete -f rs.yml  # delete resources that are no longer needed

deployment.apps "deployment" deleted

[root@server2 ~]# kubectl delete svc deployment

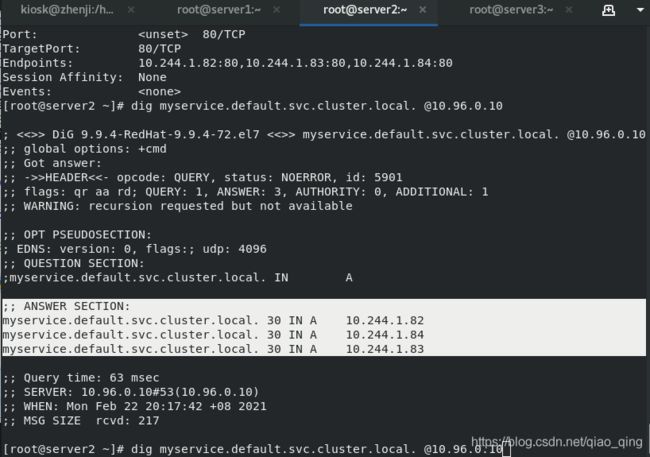

[root@server2 ~]# kubectl describe svc myservice  # the endpoints are listed

Endpoints: 10.244.1.82:80,10.244.1.83:80,10.244.1.84:80

[root@server2 ~]# dig myservice.default.svc.cluster.local. @10.96.0.10

[root@server2 ~]# kubectl apply -f demo.yml

[root@server2 ~]# kubectl attach demo -it

/ # nslookup myservice  # the name resolves

[root@server2 ~]# vim demo.yml  # scale to 6 replicas

[root@server2 ~]# kubectl apply -f demo.yml

[root@server2 ~]# kubectl attach demo -it

/ # nslookup myservice  # the name now resolves to 6 addresses

[root@server2 ~]# rpm -qf /usr/bin/dig

bind-utils-9.9.4-72.el7.x86_64

[root@server2 ~]# dig -t A myservice.default.svc.cluster.local. @10.96.0.10

[root@server2 ~]# dig -t A kube-dns.kube-system.svc.cluster.local. @10.96.0.10
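The names queried with dig above follow the pattern service.namespace.svc.cluster-domain. A minimal sketch of that naming rule, assuming the default cluster domain cluster.local; the helper name svc_fqdn is made up for illustration:

```shell
# Build the fully qualified DNS name of a Service.
# Arguments: service name, namespace (default "default"),
# cluster domain (default "cluster.local", an assumption about the cluster).
svc_fqdn() {
    local name="$1" ns="${2:-default}" domain="${3:-cluster.local}"
    printf '%s.%s.svc.%s\n' "$name" "$ns" "$domain"
}

svc_fqdn myservice
svc_fqdn kube-dns kube-system
```

The result can be passed straight to dig, for example `dig -t A "$(svc_fqdn myservice)." @10.96.0.10`.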

[root@server2 ~]# kubectl delete -f demo.yml

[root@server2 ~]# vim demo.yml

#clusterIP: None

type: NodePort

[root@server2 ~]# kubectl apply -f demo.yml

[root@server2 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 443/TCP 22d

myservice NodePort 10.108.105.69 80:30183/TCP 88s

[root@server2 ~]# ipvsadm -ln

[root@server2 ~]# kubectl describe svc myservice

# requests are load balanced across the pods

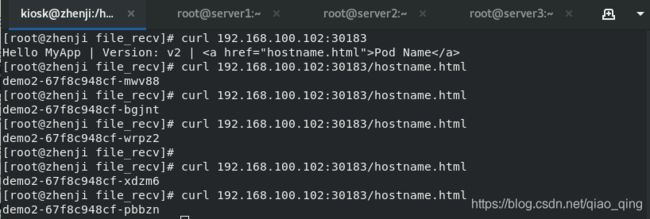

[root@zhenji file_recv]# curl 192.168.100.102:30183

Hello MyApp | Version: v2 | Pod Name

[root@zhenji file_recv]# curl 192.168.100.102:30183/hostname.html

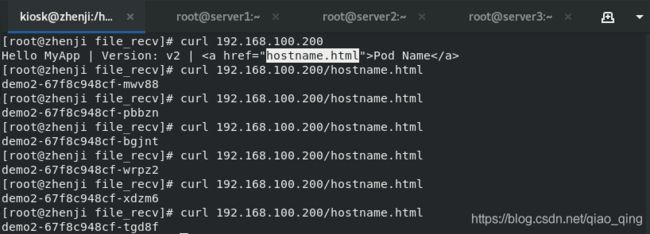
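The port 30183 in the NodePort output was picked automatically. A small sketch that checks whether a port falls in the default NodePort range of 30000-32767; it assumes the API server's range has not been changed, and the helper name is hypothetical:

```shell
# Return success when the given port lies in the default NodePort range.
# Assumption: the cluster uses the default range 30000-32767.
in_default_nodeport_range() {
    [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

if in_default_nodeport_range 30183; then
    echo "30183 is a valid default NodePort"
fi
```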

2) Second way: specify a Service of type LoadBalancer

[root@server2 ~]# vim demo.yml

type: LoadBalancer

[root@server2 ~]# kubectl delete svc myservice

[root@server2 ~]# kubectl apply -f demo.yml

[root@server2 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 443/TCP 22d

myservice LoadBalancer 10.101.253.48 80:32697/TCP 7s

[root@server2 ~]# ipvsadm -ln

[root@server2 ~]# kubectl describe svc myservice

3) Third way: assign the external address yourself (externalIPs)

[root@server2 ~]# vim demo.yml

#type: LoadBalancer

externalIPs:

- 192.168.100.200

[root@server2 ~]# kubectl apply -f demo.yml

service/myservice configured

deployment.apps/demo2 unchanged

[root@server2 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 443/TCP 22d

myservice ClusterIP 10.101.253.48 192.168.100.200 80/TCP 2m36s

[root@zhenji file_recv]# curl 192.168.100.200

Hello MyApp | Version: v2 | Pod Name

[root@zhenji file_recv]# curl 192.168.100.200/hostname.html

demo2-67f8c948cf-mwv88

[root@zhenji file_recv]# curl 192.168.100.200/hostname.html

4) Fourth way: ExternalName, for reaching an external service from inside the cluster

[root@server2 ~]# vim exsvc.yml

apiVersion: v1

kind: Service

metadata:

name: exsvc

spec:

type: ExternalName

externalName: www.westos.org

[root@server2 ~]# kubectl apply -f demo.yml

service/myservice configured

deployment.apps/demo2 unchanged

[root@server2 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 443/TCP 22d

myservice ClusterIP 10.101.253.48 192.168.100.200 80/TCP 2m36s

[root@server2 ~]# vim exsvc.yml

[root@server2 ~]# kubectl apply -f exsvc.yml

service/exsvc created

[root@server2 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

exsvc ExternalName www.westos.org 5s

kubernetes ClusterIP 10.96.0.1 443/TCP 22d

myservice ClusterIP 10.101.253.48 192.168.100.200 80/TCP 5m18s

[root@server2 ~]# kubectl attach demo -it

/ # nslookup exsvc

[root@server2 ~]# dig -t A exsvc.default.svc.cluster.local. @10.96.0.10

[root@server2 ~]# vim exsvc.yml

externalName: www.baidu.org

[root@server2 ~]# kubectl apply -f exsvc.yml

service/exsvc configured

[root@server2 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

exsvc ExternalName www.baidu.org 3m7s

kubernetes ClusterIP 10.96.0.1 443/TCP 22d

myservice ClusterIP 10.101.253.48 192.168.100.200 80/TCP 8m20s

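[root@server2 ~]# dig -t A exsvc.default.svc.cluster.local. @10.96.0.10  # the in-cluster name stays the same; only the external name it resolves to changes

5) LoadBalancer as used by Kubernetes services on public clouds (provided here by MetalLB)

%Installing MetalLB

%%%Official site: https://metallb.universe.tf/installation/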

[root@server2 ~]# kubectl edit configmap -n kube-system kube-proxy

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: "ipvs"

ipvs:

strictARP: true  # change to true

[root@server2 ~]# kubectl get pod -n kube-system |grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'  # recreate the kube-proxy pods so the change takes effect

Download the two manifests with wget:

[root@server2 ~]# mkdir metallb

[root@server2 ~]# cd metallb

[root@server2 metallb]# wget https://raw.githubusercontent.com/metallb/metallb/v0.9.5/manifests/namespace.yaml

[root@server2 metallb]# wget https://raw.githubusercontent.com/metallb/metallb/v0.9.5/manifests/metallb.yaml

[root@server2 metallb]# cat metallb.yaml  # check which images it needs

%Pull the two images

[root@server1 ~]# docker pull metallb/controller:v0.9.5

[root@server1 ~]# docker pull metallb/speaker:v0.9.5

[root@server2 metallb]# kubectl apply -f namespace.yaml

[root@server2 metallb]# kubectl apply -f metallb.yaml

[root@server2 metallb]# kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)"  # create the memberlist secret; needed only on the first install

[root@server2 metallb]# kubectl -n metallb-system get all

[root@server2 metallb]# kubectl -n metallb-system get service

[root@server2 metallb]# kubectl -n metallb-system get secrets

[root@server2 metallb]# kubectl -n metallb-system get pod  # all Running

[root@server2 metallb]# vim config.yaml

[root@server2 metallb]# cat config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 172.25.0.100-172.25.0.200  # a range in your own network segment

[root@server2 metallb]# kubectl apply -f config.yaml
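MetalLB hands out EXTERNAL-IP addresses from the range configured in config.yaml. A minimal sketch for checking whether an address belongs to such a start-end range; the helper names ip_to_int and ip_in_range are made up for illustration, and only IPv4 is handled:

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=.
    set -- $1            # split the address into its four octets
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# ip_in_range ADDRESS START-END: succeed when ADDRESS lies inside the range.
ip_in_range() {
    local ip start end
    ip=$(ip_to_int "$1")
    start=$(ip_to_int "${2%-*}")   # text before the dash
    end=$(ip_to_int "${2#*-}")     # text after the dash
    [ "$ip" -ge "$start" ] && [ "$ip" -le "$end" ]
}

if ip_in_range 172.25.0.100 172.25.0.100-172.25.0.200; then
    echo "172.25.0.100 can be assigned from the pool"
fi
```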

[root@server2 metallb]# cp /root/ingress/nginx-svc.yml .

[root@server2 metallb]# vim nginx-svc.yml

[root@server2 metallb]# cat nginx-svc.yml

---

apiVersion: v1

kind: Service

metadata:

name: nginx-svc

spec:

selector:

app: nginx

ports:

- protocol: TCP

port: 80

targetPort: 80

type: LoadBalancer

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: deployment

spec:

replicas: 2

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: myapp:v1

[root@server2 metallb]# kubectl apply -f nginx-svc.yml

[root@server2 metallb]# kubectl get svc  # the EXTERNAL-IP of nginx-svc is 172.25.0.100, taken from the configured range 172.25.0.100-172.25.0.200

[root@server2 metallb]# curl 10.111.151.57  # the CLUSTER-IP of nginx-svc; reachable

[root@server2 metallb]# kubectl get pod  # all Running

[root@zhenji Desktop]# curl 172.25.0.100

[root@zhenji Desktop]# curl 172.25.0.100/hostname.html  # requests are load balanced

[root@server2 metallb]# cp nginx-svc.yml demo-svc.yml

[root@server2 metallb]# vim demo-svc.yml

---

apiVersion: v1

kind: Service

metadata:

name: demo-svc

spec:

selector:

app: demo

ports:

- protocol: TCP

port: 80

targetPort: 80

type: LoadBalancer

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: demo

spec:

replicas: 2

selector:

matchLabels:

app: demo

template:

metadata:

labels:

app: demo

spec:

containers:

- name: demo

image: myapp:v2

:%s/nginx/demo/g

[root@server2 metallb]# kubectl apply -f demo-svc.yml

[root@server2 metallb]# kubectl get pod  # all Running

[root@server2 metallb]# kubectl get svc  # demo-svc gets 172.25.0.101

[root@zhenji Desktop]# curl 172.25.0.101  # answers with v2

[root@zhenji Desktop]# curl 172.25.0.100  # answers with v1

[root@server2 metallb]# kubectl -n metallb-system describe cm config  # "Name: config" shows the setup succeeded

[root@server2 metallb]# kubectl -n ingress-nginx get all

[root@server2 metallb]# kubectl -n ingress-nginx delete daemonsets.apps ingress-nginx-controller  # use tab completion for the names

[root@server2 ingress]# cp nginx.yml demo.yml

[root@server2 ingress]# cat demo.yml

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: ingress-demo

spec:

#tls:

# - hosts:

# - www1.westos.org

# secretName: tls-secret

rules:

- host: www1.westos.org

http:

paths:

- path: /

backend:

serviceName: nginx-svc

servicePort: 80

[root@server2 ingress]# kubectl apply -f demo.yml

[root@server2 ingress]# kubectl describe ingress ingress-demo

#user->vip->ingress->service->pod

[root@server2 ingress]# kubectl apply -f nginx-svc.yml

[root@zhenji Desktop]# curl www1.westos.org  # reachable

[root@zhenji Desktop]# curl www1.westos.org/hostname.html

[root@server2 ingress]# vim demo.yml  # enable the TLS section to use the certificate

[root@server2 ingress]# cat demo.yml

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: ingress-demo

spec:

tls:

- hosts:

- www1.westos.org

secretName: tls-secret

rules:

- host: www1.westos.org

http:

paths:

- path: /

backend:

serviceName: nginx-svc

servicePort: 80

[root@server2 ingress]# kubectl apply -f demo.yml

# The certificate served is still the default one; to change it, delete the old files and generate a new certificate

[root@server2 ingress]# rm -fr tls.*

[root@server2 ingress]# openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=www1.westos.org/O=www1.westos.org"

[root@server2 ingress]# kubectl delete secrets tls-secret

[root@server2 ingress]# kubectl create secret tls tls-secret --key tls.key --cert tls.crt

[root@server2 ingress]# kubectl delete -f demo.yml

[root@server2 ingress]# kubectl apply -f demo.yml

[root@server2 ingress]# kubectl -n namespace logs pod  # view the logs (substitute the real namespace and pod name)

[root@zhenji Desktop]# curl www1.westos.org -I

Open www1.westos.org in a browser

# Request path: client->vip(metallb)->ingress-nginx->nginx-svc->pod

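VI. Flannel network

- How flannel VXLAN communicates across hosts (a common interview question)

VXLAN (Virtual Extensible LAN) is a network virtualization technology that Linux supports natively. It performs encapsulation and decapsulation entirely in kernel space and uses this "tunnel" mechanism to build an overlay network.

VTEP: VXLAN Tunnel End Point. In flannel the default VNI is 1, which is why the VTEP device on every host is named flannel.1.

Cni0: a bridge device. A veth pair is created for every pod; one end is eth0 inside the pod and the other end is a port on the cni0 bridge.

Flannel.1: the VXLAN device (a virtual NIC acting as the VTEP), which encapsulates and decapsulates VXLAN packets. Pod traffic between different nodes leaves through this overlay device and reaches the peer through the tunnel.

Flanneld: flannel runs flanneld as an agent on every host. It obtains a small subnet for its host from the cluster's network address space, and every container on that host gets its IP address from this subnet. Flanneld also watches the Kubernetes cluster database and supplies flannel.1 with the MAC, IP, and other network information it needs for encapsulation.

When a container sends an IP packet, the packet travels over the veth pair to the cni0 bridge and is then routed to the local flannel.1 device for processing.

VTEP devices talk to each other with layer-2 frames. After the source VTEP receives the original IP packet, it adds the destination VTEP's MAC address, wraps the packet into an inner frame, and sends it to the destination VTEP.

The inner frame cannot travel on the hosts' layer-2 network by itself. The Linux kernel has to wrap it further into an ordinary frame of the host, which carries the inner frame out through the host's eth0.

Linux puts a VXLAN header in front of the inner frame. The header holds an important field, the VNI, which a VTEP uses to decide whether a frame is meant for it.

The flannel.1 device only knows the MAC address of the flannel.1 device at the other end, not the address of the host it lives on. In the Linux kernel, a network device forwards according to the FDB (forwarding database); the FDB entries for the flannel.1 device are maintained by the flanneld process.

The Linux kernel then puts a layer-2 frame header in front of the outer IP packet and fills in the MAC address of the destination node, which it takes from the host's ARP table.

Now flannel.1 can send the frame out through eth0, and it crosses the host network to the eth0 device of the destination node. The network stack of the destination host sees that the frame carries a VXLAN header with VNI 1, so the kernel unpacks it, extracts the inner frame and, based on the VNI, hands it to the local flannel.1 device. flannel.1 decapsulates it and forwards it to the cni0 bridge according to the routing table, and it finally reaches the destination container.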
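The MTU of 1450 shown in subnet.env and on the pod's eth0 follows from the encapsulation described above. A minimal sketch of the arithmetic, assuming a 1500-byte host MTU and an IPv4 outer header (both are assumptions, not stated in the text); the function name vxlan_mtu is made up:

```shell
# Bytes added in front of the original pod packet by VXLAN encapsulation.
outer_ip=20      # outer IPv4 header
udp=8            # outer UDP header
vxlan=8          # VXLAN header carrying the VNI
inner_eth=14     # inner Ethernet header built by the VTEP
overhead=$(( outer_ip + udp + vxlan + inner_eth ))

# vxlan_mtu HOST_MTU: largest pod packet that still fits in one host packet.
vxlan_mtu() {
    echo $(( $1 - overhead ))
}

echo "overhead: $overhead bytes"
echo "pod MTU for a 1500-byte host MTU: $(vxlan_mtu 1500)"
```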

[root@server2 ~]# kubectl get pod -o wide  # the pods should be spread over server3 and server4, each node with its own subnet

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demo 1/1 Running 11 19h 10.244.1.50 server3

demo2-67f8c948cf-bgjnt 1/1 Running 0 75m 10.244.1.87 server3

demo2-67f8c948cf-wrpz2 1/1 Running 0 75m 10.244.1.86 server3

liveness-exec 1/1 Running 1 15h 10.244.1.48 server3

[root@server2 ~]# kubectl attach demo -it

Defaulting container name to demo.

Use 'kubectl describe pod/demo -n default' to see all of the containers in this pod.

If you don't see a command prompt, try pressing enter.

/ # ping 10.244.1.86

PING 10.244.1.86 (10.244.1.86): 56 data bytes

64 bytes from 10.244.1.86: seq=0 ttl=64 time=12.373 ms

64 bytes from 10.244.1.86: seq=1 ttl=64 time=0.085 ms

Source address -> destination address, resolved through the MAC address

[root@server3 ~]# arp -n

[root@server2 ~]# kubectl attach demo -it

Defaulting container name to demo.

Use 'kubectl describe pod/demo -n default' to see all of the containers in this pod.

If you don't see a command prompt, try pressing enter.

/ # ip addr show eth0

3: eth0@if9: mtu 1450 qdisc noqueue

link/ether ee:32:aa:6d:60:18 brd ff:ff:ff:ff:ff:ff

inet 10.244.1.50/24 brd 10.244.1.255 scope global eth0

valid_lft forever preferred_lft forever

/ # ping 10.244.1.50

PING 10.244.1.50 (10.244.1.50): 56 data bytes

64 bytes from 10.244.1.50: seq=0 ttl=64 time=0.170 ms

64 bytes from 10.244.1.50: seq=1 ttl=64 time=0.057 ms

flannel supports several backends: VXLAN, host-gw (does not work across subnets), and UDP (poor performance)

[root@server2 ~]# kubectl -n kube-system edit cm kube-flannel-cfg

Change "Type" to "host-gw"

[root@server2 ~]# kubectl -n kube-system get pod

[root@server2 ~]# kubectl get pod -n kube-system |grep flannel | awk '{system("kubectl delete pod "$1" -n kube-system")}'  # recreate the pods so the change takes effect

[root@server2 ~]# kubectl -n kube-system get pod

[root@server2 ~]# kubectl attach demo -it  # ping still works

/ # ip addr show eth0

3: eth0@if9: mtu 1450 qdisc noqueue

link/ether ee:32:aa:6d:60:18 brd ff:ff:ff:ff:ff:ff

inet 10.244.1.50/24 brd 10.244.1.255 scope global eth0

valid_lft forever preferred_lft forever

/ # ping 10.244.1.50

PING 10.244.1.50 (10.244.1.50): 56 data bytes

64 bytes from 10.244.1.50: seq=0 ttl=64 time=0.204 ms

64 bytes from 10.244.1.50: seq=1 ttl=64 time=0.063 ms

[root@server4 ~]# route -n  # host-gateway mode: every node has routes to the other nodes' pod subnets

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.100.1 0.0.0.0 UG 0 0 0 eth0

10.244.0.0 192.168.100.102 255.255.255.0 UG 0 0 0 eth0

[root@server3 ~]# tcpdump -i eth0 -nn host 172.25.0.4

[root@server3 ~]# kubectl -n kube-system edit cm kube-flannel-cfg

Change "Type" to "vxlan" and add:

"DirectRouting": true  # nodes in the same subnet use direct routing; nodes in different subnets use VXLAN

[root@server2 ~]# kubectl get pod -n kube-system |grep flannel | awk '{system("kubectl delete pod "$1" -n kube-system")}'  # recreate the pods so the change takes effect

[root@server2 ~]# kubectl get pod -n kube-system

route -n

Capture packets on server3:

tcpdump -i eth0 -nn host 172.25.0.4

tcpdump -i flannel.1 -nn  # nothing is captured, so the traffic does not pass through flannel.1
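With DirectRouting enabled, whether traffic is routed directly or tunnelled depends on whether the two nodes share a subnet. A rough sketch of that decision, assuming IPv4 and a known prefix length; the helper names are hypothetical, and this only mimics the rule stated above, not flannel's actual code:

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# same_subnet IP1 IP2 PREFIXLEN: succeed when both addresses share the network part.
same_subnet() {
    local mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
}

# path_between NODE1 NODE2 PREFIXLEN: print which path pod traffic would take.
path_between() {
    if same_subnet "$1" "$2" "$3"; then
        echo "direct"    # plain route via the peer node, as in host-gw
    else
        echo "vxlan"     # encapsulated through flannel.1
    fi
}

path_between 192.168.100.102 192.168.100.103 24
path_between 192.168.100.102 172.25.0.4 24
```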

# flannel only provides network connectivity; it does not implement network policy

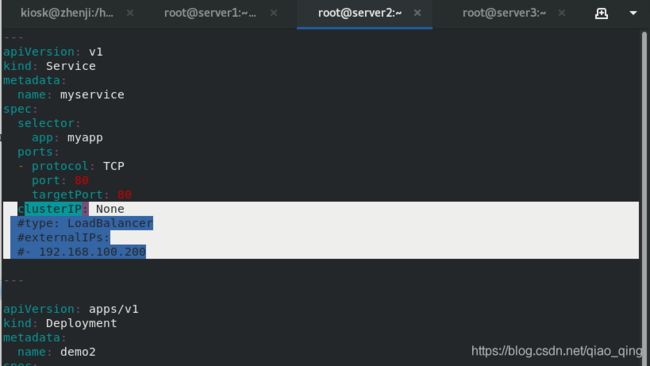

VII. Ingress service

%%Official Kubernetes site: https://kubernetes.io/docs/setup/production-environment/container-runtimes/#docker

1. Deploying the ingress service

[root@server2 ~]# vim demo.yml

#clusterIP: None

#type: LoadBalancer

#externalIPs:

#- 192.168.100.200

[root@server2 ~]# kubectl apply -f demo.yml

https://kubernetes.github.io/ingress-nginx/deploy/#bare-metal

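# server1 hosts the private registry; push the images there because it is not known in advance which node the controller will be scheduled on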

[root@server1 ~]# docker load -i ingress-nginx.tar

[root@server1 ~]# docker images | grep ingress

[root@server1 ~]# docker tag quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.33.0 reg.westos.org/library/nginx-ingress-controller:0.33.0

[root@server1 ~]# docker images | grep webhook

[root@server1 ~]# docker tag jettech/kube-webhook-certgen:v1.2.0 reg.westos.org/library/kube-webhook-certgen:v1.2.0

[root@server1 ~]# docker login reg.westos.org

[root@server1 ~]# docker push reg.westos.org/library/nginx-ingress-controller:0.33.0

[root@server1 harbor]# docker push reg.westos.org/library/kube-webhook-certgen:v1.2.0

# download deploy.yaml from the official documentation with wget

[root@server2 ~]# mkdir ingress

[root@server2 ~]# cd ingress/

[root@server2 ingress]# ls

deploy.yaml

[root@server2 ingress]# kubectl apply -f deploy.yaml

[root@server2 ingress]# kubectl get ns

ingress-nginx Active 12s

[root@server2 ingress]# kubectl get all -n ingress-nginx

[root@server2 ingress]# kubectl get pod -o wide -n ingress-nginx

[root@server2 ingress]# kubectl logs -n ingress-nginx ingress-nginx-controller-mbpql  # for troubleshooting

[root@server2 ingress]# kubectl -n ingress-nginx get pod

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-f6g7k 0/1 Completed 0 26h

ingress-nginx-admission-patch-g2sbs 0/1 Completed 0 26h

ingress-nginx-controller-47b2l 0/1 Running 0 6s

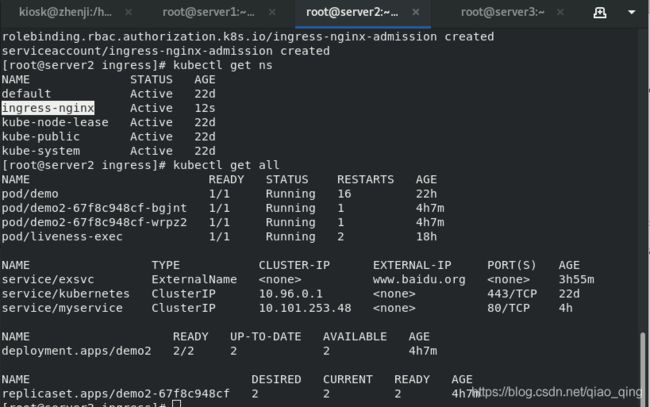

2. Using the ingress service

[root@server2 ingress]# kubectl delete svc exsvc

[root@server2 ingress]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 443/TCP 24d

myservice ClusterIP 10.101.253.48 80/TCP 30h

[root@server2 ingress]# vim nginx.yml

[root@server2 ingress]# cat nginx.yml

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: ingress-demo

spec:

rules:

- host: www1.westos.org

http:

paths:

- path: /

backend:

serviceName: myservice

servicePort: 80

[root@server2 ingress]# kubectl apply -f nginx.yml

[root@zhenji Desktop]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.4.17.241 www.westos.org linux.westos.org lee.westos.org login.westos.org wsgi.westos.org

192.168.100.103 www1.westos.org www2.westos.org linux.westos.org lee.westos.org login.westos.org wsgi.westos.org

[root@zhenji Desktop]# curl www1.westos.org

[root@zhenji Desktop]# curl www1.westos.org/hostname.html  # requests are load balanced

[root@zhenji Desktop]# curl www1.westos.org/hostname.html

[root@zhenji Desktop]# curl www1.westos.org/hostname.html

3. Load-balancing control with nginx

[root@server2 ingress]# vim nginx-svc.yml

[root@server2 ingress]# cat nginx-svc.yml

---

apiVersion: v1

kind: Service

metadata:

name: nginx-svc

spec:

selector:

app: nginx

ports:

- protocol: TCP

port: 80

targetPort: 80

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: deployment

spec:

replicas: 2

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: myapp:v1

[root@server2 ingress]# kubectl apply -f nginx-svc.yml

[root@server2 ingress]# kubectl get pod -L app

[root@server2 ingress]# kubectl describe svc nginx-svc

Endpoints: 10.244.1.105:80,10.244.1.106:80

[root@server2 ingress]# vim nginx.yml

[root@server2 ingress]# cat nginx.yml

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: ingress-demo

spec:

rules:

- host: www1.westos.org

http:

paths:

- path: /

backend:

serviceName: myservice

servicePort: 80

- host: www2.westos.org

http:

paths:

- path: /

backend:

serviceName: nginx-svc

servicePort: 80

[root@server2 ingress]# kubectl apply -f nginx.yml

[root@server2 ingress]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-demo www1.westos.org,www2.westos.org 192.168.100.103 80 10m

[root@server2 ingress]# kubectl describe ingress ingress-demo  # each host is mapped to a different backend

[root@server2 ingress]# kubectl -n ingress-nginx exec -it ingress-nginx-controller-47b2l -- bash

bash-5.0$ cd /etc/nginx/

bash-5.0$ ls  # these are ordinary nginx configuration files
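The two rules in nginx.yml amount to name-based virtual hosting: the Host header selects the backend Service. A simplified sketch of that lookup, using the host and Service names from the manifest; the function name and the "default-backend" fallback label are illustrative assumptions:

```shell
# Map a requested host name to the backend Service defined in the Ingress rules.
backend_for_host() {
    case "$1" in
        www1.westos.org) echo "myservice:80" ;;
        www2.westos.org) echo "nginx-svc:80" ;;
        *)               echo "default-backend" ;;   # no rule matches this host
    esac
}

backend_for_host www1.westos.org
backend_for_host www2.westos.org
```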

Generating the key and certificate

[root@server2 ingress]# openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=nginxsvc/O=nginxsvc"

Generating a 2048 bit RSA private key

............................................................................+++

........................+++

writing new private key to 'tls.key'

-----

[root@server2 ingress]# ll

total 36

-rw------- 1 root root 17728 Feb 24 02:05 deploy.yaml

-rw-r--r-- 1 root root 421 Feb 24 03:40 nginx-svc.yml

-rw-r--r-- 1 root root 380 Feb 24 03:43 nginx.yml

-rw-r--r-- 1 root root 1143 Feb 24 03:57 tls.crt

-rw-r--r-- 1 root root 1704 Feb 24 03:57 tls.key

[root@server2 ingress]# kubectl create secret tls tls-secret --key tls.key --cert tls.crt

secret/tls-secret created

[root@server2 ingress]# kubectl get secrets

NAME TYPE DATA AGE

default-token-xlsq2 kubernetes.io/service-account-token 3 24d

tls-secret kubernetes.io/tls 2 10s

[root@server2 ingress]# kubectl describe secrets tls-secret

# enable TLS

[root@server2 ingress]# cat nginx.yml

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: ingress-demo

spec:

tls:

- hosts:

- www1.westos.org

secretName: tls-secret

rules:

- host: www1.westos.org

http:

paths:

- path: /

backend:

serviceName: myservice

servicePort: 80

---

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: ingress-demo2

spec:

rules:

- host: www2.westos.org

http:

paths:

- path: /

backend:

serviceName: nginx-svc

servicePort: 80

[root@server2 ingress]# kubectl apply -f nginx.yml

[root@server2 ingress]# kubectl describe ingress ingress-demo  # TLS is now enabled

[root@zhenji Desktop]# curl www1.westos.org -I  # redirected to HTTPS

HTTP/1.1 308 Permanent Redirect

Server: nginx/1.19.0

Date: Tue, 23 Feb 2021 20:02:50 GMT

Content-Type: text/html

Content-Length: 171

Connection: keep-alive

Location: https://www1.westos.org/

[root@zhenji Desktop]# curl www2.westos.org -I  # no redirect

HTTP/1.1 200 OK

Server: nginx/1.19.0

Date: Tue, 23 Feb 2021 20:06:45 GMT

Content-Type: text/html

Content-Length: 65

Connection: keep-alive

Last-Modified: Fri, 02 Mar 2018 03:39:12 GMT

ETag: "5a98c760-41"

Accept-Ranges: bytes

Authentication: generating the auth file

[root@server2 ingress]# yum provides */htpasswd

[root@server2 ingress]# yum install httpd-tools

# create two users

[root@server2 ingress]# htpasswd -c auth wxh

New password:

Re-type new password:

Adding password for user wxh

[root@server2 ingress]# htpasswd auth admin

New password:

Re-type new password:

Adding password for user admin

[root@server2 ingress]# kubectl create secret generic basic-auth --from-file=auth  # store the auth file in a secret

secret/basic-auth created

[root@server2 ingress]# kubectl get secrets

NAME TYPE DATA AGE

basic-auth Opaque 1 5s

default-token-xlsq2 kubernetes.io/service-account-token 3 24d

tls-secret kubernetes.io/tls 2 10m

[root@server2 ingress]# kubectl get secret basic-auth -o yaml

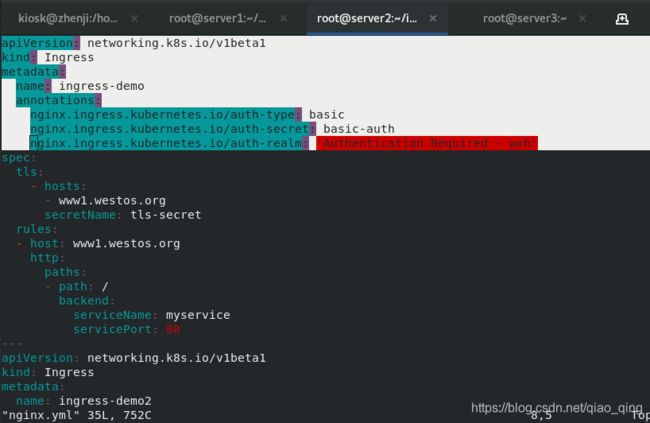

[root@server2 ingress]# vim nginx.yml

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: ingress-demo

annotations:  # add these annotations to require the user credentials

nginx.ingress.kubernetes.io/auth-type: basic

nginx.ingress.kubernetes.io/auth-secret: basic-auth

nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - wxh'

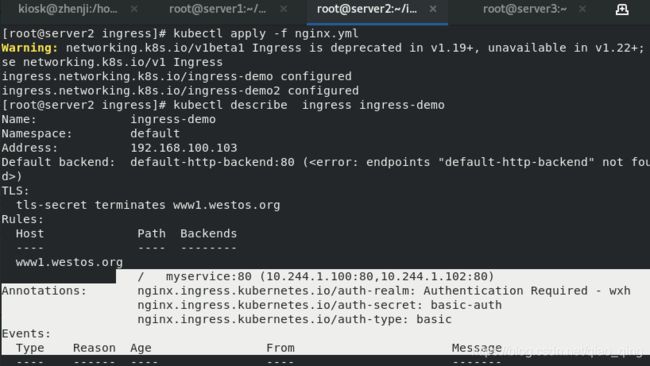

[root@server2 ingress]# kubectl apply -f nginx.yml

[root@server2 ingress]# kubectl describe ingress ingress-demo

# Open www1.westos.org/hostname.html in a browser and enter the user name and password
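With the auth-type basic annotation in place, the browser sends the credentials in an Authorization header. A small sketch of how that header value is formed; the user wxh comes from the steps above, while the password "secret" and the function name are placeholders:

```shell
# Build the Authorization header value used by HTTP basic authentication:
# "Basic " followed by base64("user:password").
# The password used below is a made-up placeholder, not the real one.
basic_auth_header() {
    printf 'Basic %s\n' "$(printf '%s' "$1:$2" | base64)"
}

basic_auth_header wxh secret
```

From the command line, `curl -k -u wxh:<password> https://www1.westos.org/hostname.html` lets curl build the same header itself.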

%%Redirect requests straight to the hostname.html page

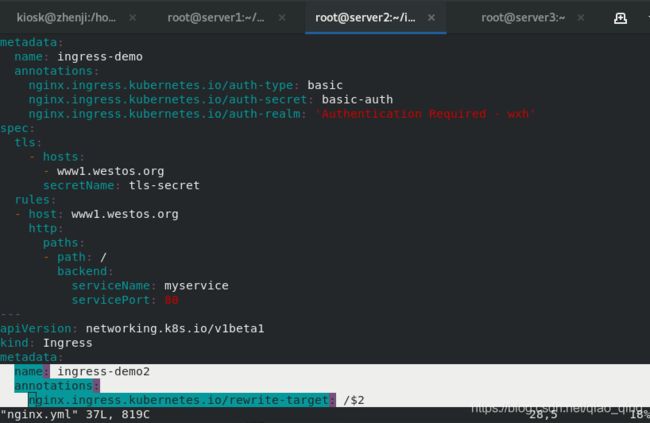

[root@server2 ingress]# vim nginx.yml

Add annotations:

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: ingress-demo2

annotations:

nginx.ingress.kubernetes.io/rewrite-target: /hostname.html

[root@server2 ingress]# kubectl apply -f nginx.yml

Opening www2.westos.org in a browser now shows the hostname.html page

%%%%Ingress path rewriting

[root@server2 ingress]# vim nginx.yml

---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-demo2
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  rules:
  - host: www2.westos.org
    http:
      paths:
      - backend:
          serviceName: nginx-svc
          servicePort: 80
        path: /westos(/|$)(.*)

[root@server2 ingress]# kubectl apply -f nginx.yml

[root@server2 ingress]# kubectl describe ingress ingress-demo2

[root@zhenji Desktop]# curl www2.westos.org/westos

Hello MyApp | Version: v1 | Pod Name

[root@zhenji Desktop]# curl www2.westos.org/westos/hostname.html

deployment-6456d7c676-wjq5w

[root@zhenji Desktop]# curl www2.westos.org/westos/hostname.html

deployment-6456d7c676-p9gsr

Open www2.westos.org/westos/hostname.html in a browser  # the /westos prefix is stripped before the request reaches the backend
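The rewrite rule combines the path regex /westos(/|$)(.*) with the target /$2, so only the second capture group survives. A sketch that reproduces the same substitution locally with sed; it imitates the effect of the annotation and is not the controller's own code, and the function name is made up:

```shell
# Apply the same regex and replacement as the Ingress rewrite-target rule.
rewrite_path() {
    printf '%s\n' "$1" | sed -E 's#^/westos(/|$)(.*)#/\2#'
}

rewrite_path /westos/hostname.html
rewrite_path /westos
```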

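VIII. Calico network plugin

- Official site: https://docs.projectcalico.org/getting-started/kubernetes/self-managed-onprem/onpremises

- Calico overview:

flannel provides network connectivity; Calico's distinguishing feature is isolation between pods.

It distributes routes with BGP, although topology computation and convergence for a large number of endpoints take a certain amount of time and compute resources.

It forwards purely at layer 3, with no NAT or overlay in between, so forwarding efficiency is at its best.

Calico depends only on layer-3 reachability. Its small set of dependencies lets it fit VM, container, white-box, and hybrid environments.

NetworkPolicy

1) Restrict access to specific pods (matched by label)

[root@server2 ~]# mkdir calico  # directory for the plugin files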

[root@server2 ~]# cd calico

[root@server2 calico]# vim deny-nginx.yaml

[root@server2 calico]# cat deny-nginx.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-nginx
spec:
  podSelector:
    matchLabels:
      app: nginx

[root@server2 calico]# kubectl apply -f deny-nginx.yaml

[root@zhenji Desktop]# curl -k www1.westos.org -I

[root@server2 calico]# wget https://docs.projectcalico.org/manifests/calico.yaml  # the official calico.yaml

[root@server2 calico]# cat calico.yaml  # it needs these 4 images

image: calico/cni:v3.16.1

image: calico/kube-controllers:v3.16.1

image: calico/pod2daemon-flexvol:v3.16.1

image: calico/node:v3.16.1

[root@server2 ~]# docker search calico

[root@server2 ~]# docker pull calico/kube-controllers:v3.18.0  # too slow to download, and newer than the version needed

[root@server2 ~]# docker pull calico/kube-controllers:v3.16.1

[root@server2 ~]# docker pull calico/pod2daemon-flexvol:v3.16.1

[root@server2 ~]# docker pull calico/node:v3.16.1

[root@server2 ~]# docker pull calico/cni:v3.16.1

[root@server2 ~]# docker tag calico/kube-controllers:v3.16.1  # tag all 4 images and push each one to the private registry

[root@server2 calico]# rm -fr calico.yaml  # the version differs; replace it with a calico.yaml that matches v3.16.1

[root@zhenji file_recv]# scp calico.yaml [email protected]:/root/calico

[email protected]'s password:

calico.yaml 100% 182KB 70.5MB/s 00:00

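[root@server2 calico]# vim calico.yaml  # mind the image paths: the images are under library in the private registry

#1. Search for IPIP (used for tunnelling) and turn it off

- name: CALICO_IPV4POOL_IPIP

value: "off"

#2. VXLAN

- name: CALICO_IPV4POOL_VXLAN

value: "Never"

#3. The pod CIDR must match the one used before

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16"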

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16"

[root@server2 calico]# kubectl delete -f kube-flannel.yml  # remove the previous flannel deployment

[root@server2 calico]# kubectl delete deployments.apps deployment

[root@server2 calico]# kubectl delete svc nginx-svc

[root@server2 calico]# kubectl -n kube-system get pod  # all Running

[root@server2 ~]# cd /etc/cni/net.d/

[root@server2 net.d]# ls

10-flannel.conflist

[root@server2 net.d]# mv 10-flannel.conflist /mnt/  # move it away on every node, master and workers alike

[root@server2 calico]# kubectl apply -f calico.yaml

[root@server2 calico]# kubectl apply -f nginx-svc.yml

[root@server2 calico]# kubectl get pod -L app  # the two deployment pods are running

[root@server2 calico]# kubectl get pod -o wide

[root@server2 calico]# kubectl get svc  # CLUSTER-IP of nginx-svc: 10.101.39.153

[root@server2 calico]# curl 10.101.39.153  # reachable

[root@server2 calico]# curl 10.101.39.153/hostname.html  # requests are load balanced

[root@server2 calico]# cat deny-nginx.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-nginx
spec:
  podSelector:
    matchLabels:
      app: nginx

[root@server2 calico]# kubectl apply -f deny-nginx.yaml

[root@server2 calico]# kubectl get networkpolicies.networking.k8s.io

[root@server2 calico]# kubectl get svc  # CLUSTER-IP of nginx-svc: 10.101.39.153

[root@server2 calico]# curl 10.101.39.153  # refused: every pod labelled app: nginx is now denied

[root@server2 calico]# kubectl get pod -L app

[root@server2 calico]# kubectl run nginx --image=myapp:v2  # standalone pod without the app label

[root@server2 calico]# kubectl get pod -L app  # its APP column is empty

[root@server2 calico]# kubectl get pod -o wide  # its IP is 10.244.22.2

[root@server2 calico]# kubectl attach demo -it

/ # ping 10.244.22.2  # the standalone pod without the app label is reachable; the pods labelled nginx are not
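Whether a NetworkPolicy applies to a pod depends only on whether the pod's labels satisfy the policy's matchLabels. A minimal sketch of that check, with labels written as comma-separated key=value pairs; the function name and the sample label sets are made up for illustration:

```shell
# labels_match POD_LABELS SELECTOR
# Both arguments are comma-separated key=value lists.
# Succeeds when every pair in SELECTOR is present in POD_LABELS.
# An empty selector matches every pod, like "podSelector: {}".
labels_match() {
    local pair
    local IFS=,
    for pair in $2; do
        case ",$1," in
            *",$pair,"*) ;;          # this selector pair is present
            *) return 1 ;;           # a required label is missing
        esac
    done
    return 0
}

# A Deployment pod carrying app=nginx is selected by deny-nginx:
labels_match "app=nginx,pod-template-hash=6456d7c676" "app=nginx" && echo "selected"
# A pod created with kubectl run only has run=nginx, so the policy skips it:
labels_match "run=nginx" "app=nginx" || echo "not selected"
```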

2) Allow access from specific pods

[root@server2 calico]# vim access-demo.yaml

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: access-nginx
spec:
  podSelector:
    matchLabels:
      app: nginx
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: demo

[root@server2 calico]# kubectl apply -f access-demo.yaml

[root@server2 calico]# kubectl get pod -o wide  # the IP is 10.244.22.2

[root@server2 calico]# kubectl get pod -L app  # the demo pod has no app label yet

[root@server2 calico]# kubectl label pod demo app=demo  # add the label app=demo

[root@server2 calico]# kubectl get pod -L app  # the demo pod now shows app=demo

[root@server2 calico]# kubectl attach demo -it

/ # ping 10.244.22.2  # reachable from the demo pod

3) Deny all traffic between pods in a namespace

[root@server2 calico]# vim deny-pod.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny
  namespace: demo
spec:
  podSelector: {}  # selects every pod; with no rules given, all ingress is denied by default

[root@server2 calico]# kubectl create namespace demo  # create the namespace

[root@server2 calico]# kubectl get ns  # demo is listed

[root@server2 calico]# kubectl run demo1 --image=busyboxplus -it -n demo  # -n places demo1 in the demo namespace

[root@server2 calico]# kubectl run demo2 --image=busyboxplus -it -n demo  # -n places demo2 in the demo namespace

[root@server2 calico]# kubectl get pod -n demo  # demo1 and demo2 are listed

[root@server2 calico]# kubectl -n demo get pod --show-labels  # kubectl run adds the label run=demo1

[root@server2 calico]# kubectl -n demo get pod -L run

[root@server2 calico]# kubectl get pod -o wide  # check the IPs: nginx is 10.244.22.2

[root@server2 calico]# kubectl attach demo1 -it -n demo

/ # ping 10.244.22.3  # reachable before the policy is applied

[root@server2 calico]# kubectl apply -f deny-pod.yaml

[root@server2 calico]# kubectl get networkpolicies -n demo  # the default-deny policy is listed

[root@server2 calico]# kubectl attach demo1 -it -n demo

/ # ping 10.244.22.3  # no longer reachable

[root@server2 calico]# kubectl attach -it demo  # a pod in another namespace cannot reach 10.244.22.3 either

/ # ping 10.244.22.3  # not reachable

4) Deny access from other namespaces

[root@server2 calico]# vim deny-ns.yaml

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: deny-namespace
spec:
  podSelector:
    matchLabels:
  ingress:
  - from:
    - podSelector: {}

[root@server2 calico]# kubectl apply -f deny-ns.yaml

[root@server2 calico]# kubectl attach demo2 -it -n demo  # pods in other namespaces can no longer access it

/ # curl 10.244.22.2

5) Allow access from a specific namespace

[root@server2 calico]# vim access-ns.yaml

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: access-namespace
spec:
  podSelector:
    matchLabels:
      run: nginx
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          role: prod

[root@server2 calico]# kubectl create namespace test

[root@server2 calico]# kubectl label ns test role=prod  # label the namespace to match access-ns.yaml

[root@server2 calico]# kubectl get ns --show-labels

[root@server2 calico]# kubectl get pod --show-labels  # check the labels

[root@server2 calico]# kubectl get pod -o wide  # check the IPs: nginx is 10.244.22.2

[root@server2 calico]# kubectl apply -f access-ns.yaml

[root@server2 calico]# kubectl attach demo3 -it -n test  # in the allowed namespace, so access works

/ # curl 10.244.22.2

[root@server2 calico]# kubectl attach demo2 -it -n demo  # access is refused, because the namespace label does not match

/ # curl 10.244.22.2

6) Allow access from outside the cluster

[root@server2 ingress]# cat demo.yml

[root@server2 ingress]# kubectl apply -f demo.yml

[root@server2 ingress]# kubectl get ingress  # ingress-demo is listed

[root@server2 ingress]# kubectl describe ingress ingress-demo  # the rules point at nginx-svc

[root@server2 ingress]# kubectl -n ingress-nginx get svc  # the external IP is 172.25.0.100

[root@zhenji ~]# curl www1.westos.org  # fails: deny-nginx.yaml blocks the pods labelled nginx

[root@server2 ingress]# cd

[root@server2 ~]# cd calico

[root@server2 calico]# vim access-ex.yaml

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: web-allow-external
spec:
  podSelector:
    matchLabels:
      app: web
  ingress:
  - ports:
    - port: 80
    from: []

[root@server2 calico]# kubectl apply -f access-ex.yaml

[root@zhenji ~]# curl www1.westos.org  # works: port 80 is reachable from outside