Hadoop大数据平台

文章目录

-

- 1.HDFS单机版

- 2.伪分布式

- 3.全分布式

-

- 热添加节点(在线添加server4节点)

- 4.分布式存储

- 5.Zookeeper 高可用集群(hadoop自带的nn高可用)

-

- 1.集群搭建

- 2.hadoop配置

- 3.启动 hdfs 集群(按顺序启动)

- 4.上传文件

- 5.测试故障自动切换

- 6.yarn 管理器的高可用

- 7.测试 yarn 故障切换

- 8.Hbase 分布式部署

1.HDFS单机版

##新建五个快照server1,2,3,4,5

官网:https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/SingleCluster.html

##创建hadoop用户,在普通用户下执行

[root@server1 ~]# useradd hadoop

[root@server1 ~]# su - hadoop

[hadoop@server1 ~]$ pwd

/home/hadoop

[hadoop@server1 ~]$ ls##下载hadoop和jdk

hadoop-3.2.1.tar.gz jdk-8u181-linux-x64.tar.gz

[hadoop@server1 ~]$ tar zxf jdk-8u181-linux-x64.tar.gz

[hadoop@server1 ~]$ ls

hadoop-3.2.1.tar.gz jdk1.8.0_181 jdk-8u181-linux-x64.tar.gz

[hadoop@server1 ~]$ ln -s jdk1.8.0_181/ java##软连接

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ ls

bin etc include lib libexec LICENSE.txt NOTICE.txt README.txt sbin share

[hadoop@server1 hadoop]$ cd etc/hadoop/

[hadoop@server1 hadoop]$ vim hadoop-env.sh

##修改路径

export JAVA_HOME=/home/hadoop/java

export HADOOP_HOME=/home/hadoop/hadoop

[hadoop@server1 hadoop]$ cd ..

[hadoop@server1 etc]$ cd ..

[hadoop@server1 hadoop]$ pwd

/home/hadoop/hadoop

[hadoop@server1 hadoop]$ mkdir input

[hadoop@server1 hadoop]$ cp etc/hadoop/*.xml input

[hadoop@server1 hadoop]$ ls input/

[hadoop@server1 hadoop]$ bin/hadoop jar

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar grep input output 'dfs[a-z.]+'##output目录是不存在的,如存在会报错

[hadoop@server1 hadoop]$ ls

bin include lib LICENSE.txt output sbin

etc input libexec NOTICE.txt README.txt share

[hadoop@server1 hadoop]$ cd output/

[hadoop@server1 output]$ ls

part-r-00000 _SUCCESS

[hadoop@server1 output]$ cat *

1 dfsadmin

2.伪分布式

[hadoop@server1 hadoop]$ ls

bin include lib LICENSE.txt output sbin

etc input libexec NOTICE.txt README.txt share

[hadoop@server1 hadoop]$ vim etc/hadoop/core-site.xml

#最后添加

fs.defaultFS

hdfs://localhost:9000

[hadoop@server1 hadoop]$ vim etc/hadoop/hdfs-site.xml

#最后添加

dfs.replication

1

##免密

[hadoop@server1 hadoop]$ ssh-keygen

[hadoop@server1 hadoop]$ logout

[root@server1 ~]# passwd hadoop

[root@server1 ~]# su - hadoop

[hadoop@server1 ~]$ ssh-copy-id localhost

[hadoop@server1 ~]$ ssh localhost

[hadoop@server1 ~]$ logout

[hadoop@server1 ~]$ cd hadoop/etc/hadoop/

[hadoop@server1 hadoop]$ ll workers

-rw-r--r-- 1 hadoop hadoop 10 Sep 10 2019 workers

[hadoop@server1 hadoop]$ pwd

/home/hadoop/hadoop

[hadoop@server1 hadoop]$ bin/hdfs namenode -format##初始化

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

网页访问172.25.3.1:9870

[hadoop@server1 ~]$ vim .bash_profile

PATH=$PATH:$HOME/.local/bin:$HOME/bin:$HOME/java/bin

[hadoop@server1 ~]$ source .bash_profile

[hadoop@server1 ~]$ jps##运行的进程

24850 Jps

24679 SecondaryNameNode

24377 NameNode

24490 DataNode

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -ls##会报错,需创建家目录

ls: `.': No such file or directory

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user/hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -ls

[hadoop@server1 hadoop]$ ls

bin include lib LICENSE.txt NOTICE.txt README.txt share

etc input libexec logs output sbin

[hadoop@server1 hadoop]$ rm -fr output/

[hadoop@server1 hadoop]$ bin/hdfs dfs -put input##上传到分布式文件系统里

刷新网页访问172.25.3.1:9870,有上传的文件

[hadoop@server1 hadoop]$ rm -fr input

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar wordcount input output

[hadoop@server1 hadoop]$ bin/hdfs dfs -ls##命令查看上传内容

Found 2 items

drwxr-xr-x - hadoop supergroup 0 2021-04-24 10:52 input

drwxr-xr-x - hadoop supergroup 0 2021-04-24 10:57 output

[hadoop@server1 hadoop]$ bin/hdfs dfs -cat output/*

[hadoop@server1 hadoop]$ bin/hdfs dfs -get output##可以下载下来

[hadoop@server1 hadoop]$ rm -fr output/##删除本地的不会影响网页

[hadoop@server1 hadoop]$ bin/hdfs dfs -rm -r output##删除网页上的

[hadoop@server1 hadoop]$ sbin/stop-dfs.sh

3.全分布式

##新建快照server2,3

[hadoop@server1 hadoop]$ sbin/stop-dfs.sh

[root@server1 ~]# yum install nfs-utils -y

[root@server2 ~]# useradd hadoop

[root@server2 ~]# echo westos | passwd --stdin hadoop

[root@server2 ~]# yum install nfs-utils -y

[root@server3 ~]# useradd hadoop

[root@server3 ~]# echo westos | passwd --stdin hadoop

[root@server3 ~]# yum install nfs-utils -y

##nfs挂接到一个节点上,server1,2,3都是免密、同步的

[root@server1 ~]# id hadoop

uid=1001(hadoop) gid=1001(hadoop) groups=1001(hadoop)

[root@server1 ~]# vim /etc/exports

[root@server1 ~]# cat /etc/exports

/home/hadoop *(rw,anonuid=1001,anongid=1001)

[root@server1 ~]# systemctl start nfs

[root@server1 ~]# showmount -e

Export list for server1:

/home/hadoop *

[root@server2 ~]# showmount -e 172.25.3.1

Export list for 172.25.3.1:

/home/hadoop *

[root@server2 ~]# mount 172.25.3.1:/home/hadoop/ /home/hadoop/

172.25.3.1:/home/hadoop 17811456 3007744 14803712 17% /home/hadoop

[root@server2 ~]# su - hadoop

[hadoop@server2 ~]$ ls

hadoop hadoop-3.2.1 hadoop-3.2.1.tar.gz java jdk1.8.0_181 jdk-8u181-linux-x64.tar.gz

[hadoop@server2 ~]$ cd hadoop

[hadoop@server2 hadoop]$ ls

bin etc include lib libexec LICENSE.txt logs NOTICE.txt README.txt sbin share

[root@server3 ~]# showmount -e 172.25.3.1

Export list for 172.25.3.1:

/home/hadoop *

[root@server3 ~]# mount 172.25.3.1:/home/hadoop/ /home/hadoop/

172.25.3.1:/home/hadoop 17811456 3007744 14803712 17% /home/hadoop

[root@server3 ~]# su - hadoop

[hadoop@server3 ~]$ ls

hadoop hadoop-3.2.1 hadoop-3.2.1.tar.gz java jdk1.8.0_181 jdk-8u181-linux-x64.tar.gz

[hadoop@server3 ~]$ cd hadoop

[hadoop@server3 hadoop]$ ls

bin etc include lib libexec LICENSE.txt logs NOTICE.txt README.txt sbin share

[hadoop@server2 hadoop]$ ssh server2

##挂接到一个节点上,server1,2,3都是免密、同步的

[root@server1 ~]# su - hadoop

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ vim etc/hadoop/core-site.xml

fs.defaultFS

hdfs://server1:9000

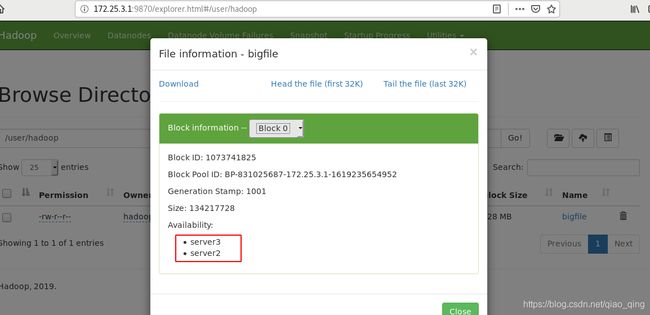

[hadoop@server1 hadoop]$ vim etc/hadoop/hdfs-site.xml##两个节点。可以保存两份

dfs.replication

2

[hadoop@server1 hadoop]$ vim etc/hadoop/workers

[hadoop@server1 hadoop]$ cat etc/hadoop/workers

server2

server3

[hadoop@server1 hadoop]$ rm -fr /tmp/*

[hadoop@server1 hadoop]$ bin/hdfs namenode -format##重新格式化,启动

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

Starting namenodes on [server1]

Starting datanodes

Starting secondary namenodes [server1]

##work节点上运行的是datanode,用于存储数据

[hadoop@server1 hadoop]$ jps

29253 Jps

28908 NameNode

29133 SecondaryNameNode

[hadoop@server2 hadoop]$ jps

24130 DataNode

24191 Jps

[hadoop@server3 hadoop]$ jps

24124 Jps

24061 DataNode

[hadoop@server1 hadoop]$ dd if=/dev/zero of=bigfile bs=1M count=200

[hadoop@server1 hadoop]$ bin/hdfs dfsadmin -report

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user/hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -put bigfile

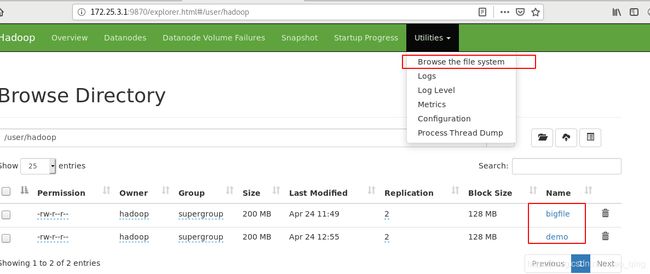

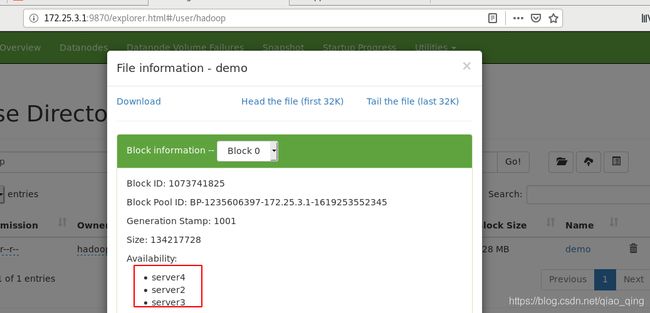

刷新网页访问172.25.3.1:9870,有上传的文件bigfile

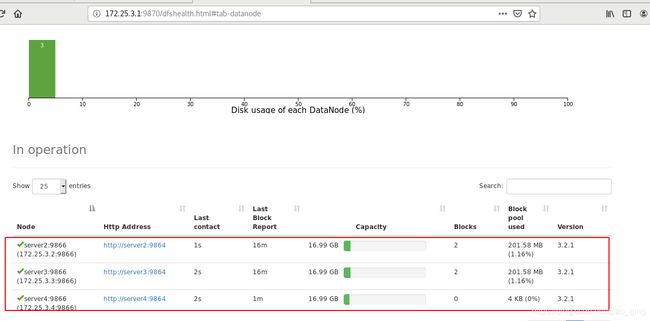

热添加节点(在线添加server4节点)

##新建快照server4

[root@server4 ~]# useradd hadoop

[root@server4 ~]# yum install nfs-utils -y

[root@server4 ~]# mount 172.25.3.1:/home/hadoop/ /home/hadoop/

172.25.3.1:/home/hadoop 17811456 3007744 14803712 17% /home/hadoop

[root@server4 ~]# su - hadoop

[hadoop@server4 ~]$ ls

hadoop hadoop-3.2.1 hadoop-3.2.1.tar.gz java jdk1.8.0_181 jdk-8u181-linux-x64.tar.gz

[hadoop@server4 ~]$ cat hadoop/etc/hadoop/workers

server2

server3

server4

[hadoop@server4 ~]$ cd hadoop

[hadoop@server4 hadoop]$ bin/hdfs --daemon start datanode

[hadoop@server4 hadoop]$ jps

13909 Jps

13846 DataNode

[hadoop@server4 hadoop]$ mv bigfile demo

[hadoop@server4 hadoop]$ bin/hdfs dfs -put demo

刷新网页访问172.25.3.1:9870,有上传的文件demo,在server4上上传,会就近分配给server4,再给其他,保证负载均衡

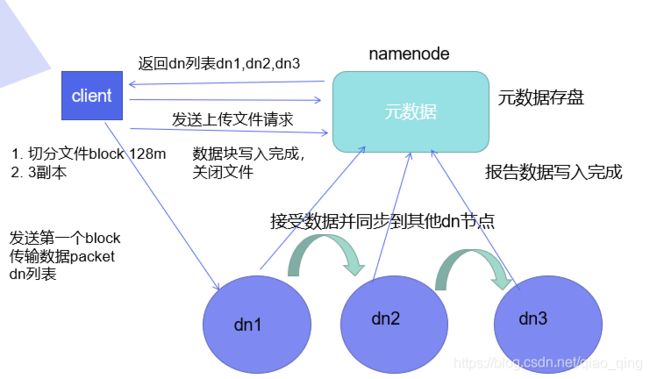

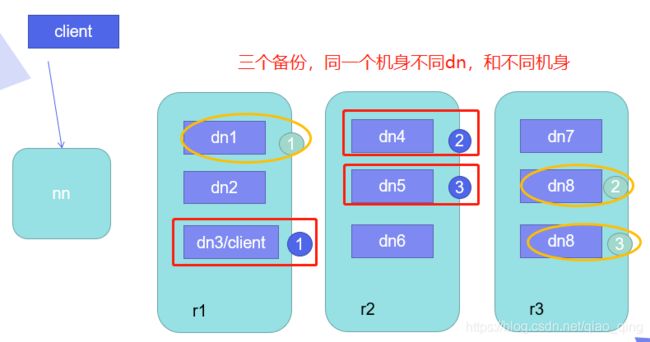

4.分布式存储

hdfs读写和容错机制原理漫画讲解:https://www.dazhuanlan.com/2019/10/23/5db06032e0463/

官方文档:hadoop+zookeeper

[hadoop@server1 hadoop]$ vim etc/hadoop/mapred-site.xml

mapreduce.framework.name

yarn

mapreduce.application.classpath

$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*

[hadoop@server1 hadoop]$ vim etc/hadoop/hadoop-env.sh

#添加

export HADOOP_MAPRED_HOME=/home/hadoop/hadoop

[hadoop@server1 hadoop]$ vim etc/hadoop/yarn-site.xml

yarn.nodemanager.aux-services

mapreduce_shuffle

yarn.nodemanager.env-whitelist #白名单

JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME

[hadoop@server1 hadoop]$ sbin/start-yarn.sh

Starting resourcemanager

Starting nodemanagers

server4: Warning: Permanently added 'server4,172.25.3.4' (ECDSA) to the list of known hosts.

[hadoop@server1 hadoop]$ jps##ResourceManager、NodeManager资源、作业管理器

30312 ResourceManager

28908 NameNode

30620 Jps

29133 SecondaryNameNode

[hadoop@server2 hadoop]$ jps

24130 DataNode

24727 Jps

24633 NodeManager

网页访问http://172.25.3.1:8088/

刷新网页访问172.25.3.1:9870,有上传的文件demo,在server4上上传,会就近分配给server4,再给其他,保证负载均衡

5.Zookeeper 高可用集群(hadoop自带的nn高可用)

%5个快照,server1和5两个master是2G,三个node1G。Zookeeper 集群至少三台,总节点数为奇数个

%新开server5,2G

1.集群搭建

[root@server5 ~]# useradd hadoop

[root@server5 ~]# yum install nfs-utils -y

[root@server5 ~]# su - hadoop

[root@server5 ~]# mount 172.25.3.1:/home/hadoop/ /home/hadoop/

172.25.3.1:/home/hadoop 17811456 3007744 14803712 17% /home/hadoop

[hadoop@server1 hadoop]$ sbin/stop-yarn.sh

[hadoop@server1 hadoop]$ sbin/stop-dfs.sh

[hadoop@server1 hadoop]$ jps

31263 Jps

[hadoop@server1 hadoop]$ rm -fr /tmp/*

[hadoop@server2 hadoop]$ rm -fr /tmp/*

[hadoop@server3 hadoop]$ rm -fr /tmp/*

[hadoop@server4 hadoop]$ rm -fr /tmp/*

[hadoop@server1 ~]$ ls##下载zookeeper-3.4.9.tar.gz

zookeeper-3.4.9.tar.gz

[hadoop@server1 ~]$ tar zxf zookeeper-3.4.9.tar.gz

[hadoop@server1 ~]$ cd zookeeper-3.4.9/conf/

[hadoop@server1 conf]$ ls

configuration.xsl log4j.properties zoo_sample.cfg

[hadoop@server1 conf]$ cp zoo_sample.cfg zoo.cfg

[hadoop@server1 conf]$ vim zoo.cfg

##最后添加

server.1=172.25.3.2:2888:3888

server.2=172.25.3.3:2888:3888

server.3=172.25.3.4:2888:3888

参数

server.x=[hostname]:nnnnn[:nnnnn]

这里的 x 是一个数字,与 myid 文件中的 id 是一致的。右边可以配置两个端口,第一个端口

用于 F 和 L 之间的数据同步和其它通信,第二个端口用于 Leader 选举过程中投票通信。

[hadoop@server2 ~]$ mkdir /tmp/zookeeper

[hadoop@server2 ~]$ echo 1 > /tmp/zookeeper/myid

[hadoop@server3 ~]$ mkdir /tmp/zookeeper

[hadoop@server3 ~]$ echo 2 > /tmp/zookeeper/myid

[hadoop@server4 ~]$ mkdir /tmp/zookeeper

[hadoop@server4 ~]$ echo 3 > /tmp/zookeeper/myid

###在各节点启动服务

[hadoop@server2 ~]$ cd zookeeper-3.4.9/

[hadoop@server2 zookeeper-3.4.9]$ bin/zkServer.sh start

[hadoop@server3 zookeeper-3.4.9]$ bin/zkServer.sh start

[hadoop@server4 zookeeper-3.4.9]$ bin/zkServer.sh start

[hadoop@server3 zookeeper-3.4.9]$ bin/zkServer.sh status

Mode: leader

[hadoop@server4 zookeeper-3.4.9]$ bin/zkServer.sh status

Mode: follower

2.hadoop配置

[hadoop@server1 ~]$ vim hadoop/etc/hadoop/core-site.xml

fs.defaultFS

hdfs://masters

ha.zookeeper.quorum

172.25.3.2:2181,172.25.3.3:2181,172.25.3.4:2181

[hadoop@server1 ~]$ vim hadoop/etc/hadoop/hdfs-site.xml

dfs.replication

3

dfs.nameservices

masters

dfs.ha.namenodes.masters

h1,h2

dfs.namenode.rpc-address.masters.h1

172.25.3.1:9000

dfs.namenode.http-address.masters.h1

172.25.3.1:9870

dfs.namenode.rpc-address.masters.h2

172.25.3.5:9000

dfs.namenode.http-address.masters.h2

172.25.3.5:9870

dfs.namenode.shared.edits.dir

qjournal://172.25.3.2:8485;172.25.3.3:8485;172.25.3.4:8485/masters

dfs.journalnode.edits.dir

/tmp/journaldata

dfs.ha.automatic-failover.enabled

true

dfs.client.failover.proxy.provider.masters

org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider

dfs.ha.fencing.methods

sshfence

shell(/bin/true)

dfs.ha.fencing.ssh.private-key-files

/home/hadoop/.ssh/id_rsa

dfs.ha.fencing.ssh.connect-timeout

30000

3.启动 hdfs 集群(按顺序启动)

###第一次启动,2,3,4上启动日志节点,然后server1初始化

[hadoop@server2 ~]$ cd hadoop

[hadoop@server2 hadoop]$ bin/hdfs --daemon start journalnode

[hadoop@server2 hadoop]$ jps

25078 Jps

24907 QuorumPeerMain

25038 JournalNode

[hadoop@server3 hadoop]$ bin/hdfs --daemon start journalnode

[hadoop@server4 hadoop]$ bin/hdfs --daemon start journalnode

[hadoop@server1 hadoop]$ bin/hdfs namenode -format##格式化HDFS 集群

[hadoop@server1 hadoop]$ ls /tmp/

hadoop-hadoop hadoop-hadoop-namenode.pid hsperfdata_hadoop

[hadoop@server1 hadoop]$ scp -r /tmp/hadoop-hadoop server5:/tmp

[hadoop@server1 hadoop]$ bin/hdfs zkfc -formatZK##格式化 zookeeper

##启动 hdfs 集群(只需在 h1 上执行即可)

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

[hadoop@server1 hadoop]$ jps

2885 DFSZKFailoverController##故障控制器

2938 Jps

2524 NameNode

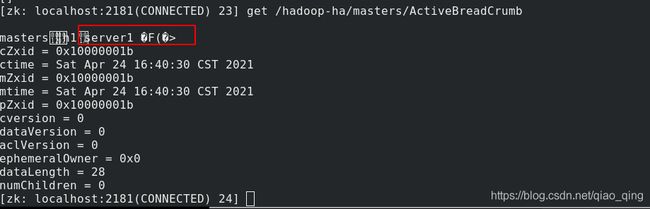

查看各节点状态

[hadoop@server3 zookeeper-3.4.9]$ bin/zkCli.sh

[zk: localhost:2181(CONNECTED) 21] ls /hadoop-ha/masters

[zk: localhost:2181(CONNECTED) 23] get /hadoop-ha/masters/ActiveBreadCrumb

mastersh1server1 �F(�>

##网页访问172.25.3.1:9870,172.25.3.1:9870。server1正常运行,server5是standy

4.上传文件

[hadoop@server1 hadoop]$ bin/hdfs dfs -ls

ls: `.': No such file or directory

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user/hadoop

[hadoop@server1 hadoop]$ ls

bin demo etc include lib libexec LICENSE.txt logs NOTICE.txt README.txt sbin share

[hadoop@server1 hadoop]$ bin/hdfs dfs -put demo

5.测试故障自动切换

##server1挂掉后server5接管,文件可以正常查看

[hadoop@server1 hadoop]$ jps

2885 DFSZKFailoverController

2524 NameNode

3215 Jps

[hadoop@server1 hadoop]$ kill 2524

[hadoop@server1 hadoop]$ bin/hdfs --daemon start namenode

##网页访问172.25.3.1:9870,172.25.3.1:9870.server5正常运行,server1是standy

6.yarn 管理器的高可用

[hadoop@server1 hadoop]$ vim etc/hadoop/yarn-site.xml

yarn.nodemanager.aux-services

mapreduce_shuffle

yarn.nodemanager.env-whitelist

JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME

yarn.resourcemanager.ha.enabled

true

yarn.resourcemanager.cluster-id

RM_CLUSTER

yarn.resourcemanager.ha.rm-ids

rm1,rm2

yarn.resourcemanager.hostname.rm1

172.25.3.1

yarn.resourcemanager.hostname.rm2

172.25.3.5

yarn.resourcemanager.recovery.enabled

true

yarn.resourcemanager.store.class

org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore

yarn.resourcemanager.zk-address

172.25.3.2:2181,172.25.3.3:2181,172.25.3.4:2181

[hadoop@server1 hadoop]$ sbin/start-yarn.sh

Starting resourcemanagers on [ 172.25.3.1 172.25.3.5]

Starting nodemanagers

[hadoop@server1 hadoop]$ jps

4272 Jps

3313 NameNode

2885 DFSZKFailoverController

3961 ResourceManager

[hadoop@server3 zookeeper-3.4.9]$ bin/zkCli.sh

[zk: localhost:2181(CONNECTED) 26] get /yarn-leader-election/RM_CLUSTER/ActiveBreadCrumb

RM_CLUSTERrm1###rm1在运行

网页访问http://172.25.3.1:8088

7.测试 yarn 故障切换

[hadoop@server1 hadoop]$ jps

4352 Jps

3313 NameNode

2885 DFSZKFailoverController

3961 ResourceManager

[hadoop@server1 hadoop]$ kill 3961

##网页访问http://172.25.3.5:8088,此时server5是正常的

[hadoop@server3 zookeeper-3.4.9]$ bin/zkCli.sh

[zk: localhost:2181(CONNECTED) 26] get /yarn-leader-election/RM_CLUSTER/ActiveBreadCrumb

RM_CLUSTERrm2###rm2在运行

[hadoop@server1 hadoop]$ bin/yarn --daemon start resourcemanager##再把server1起开,server1是standby

##网页访问http://172.25.3.5:8088

8.Hbase 分布式部署

[hadoop@server1 ~]$ ls

hbase-1.2.4-bin.tar.gz

[hadoop@server1 hbase-1.2.4]$ vim conf/hbase-env.sh

# The java implementation to use. Java 1.7+ required.

export JAVA_HOME=/home/hadoop/java

export HADOOP_HOME=/home/hadoop/hadoop

export HBASE_MANAGES_ZK=false

[hadoop@server1 hbase-1.2.4]$ vim conf/hbase-site.xml

hbase.rootdir

hdfs://masters/hbase

hbase.cluster.distributed

true

hbase.zookeeper.quorum

172.25.3.2,172.25.3.3,172.25.3.4

hbase.master

h1

[hadoop@server1 hbase-1.2.4]$ vim conf/regionservers

[hadoop@server1 hbase-1.2.4]$ cat conf/regionservers

172.25.3.3

172.25.3.4

172.25.3.2

[hadoop@server1 hbase-1.2.4]$ bin/hbase-daemon.sh start master

[hadoop@server1 hbase-1.2.4]$ jps

3313 NameNode

4434 ResourceManager

2885 DFSZKFailoverController

4950 Jps

4775 HMaster

[hadoop@server5 ~]$ cd hbase-1.2.4/

[hadoop@server5 hbase-1.2.4]$ bin/hbase-daemon.sh start master

[hadoop@server5 hbase-1.2.4]$ jps

26032 Jps

15094 DFSZKFailoverController

25927 HMaster

14971 NameNode

25565 ResourceManager

##网页访问http://172.25.3.1:16010