Integrating Spring Boot with Elasticsearch, the IK Analyzer, and Kibana

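Elasticsearch is a search server built on Lucene. It provides a distributed, multi-tenant full-text search engine behind a RESTful web interface.

Elasticsearch is written in Java and released as open source under the Apache License, and it is a popular enterprise search engine. Designed for cloud environments, it offers near-real-time search and is stable, reliable, fast, and easy to install and use.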

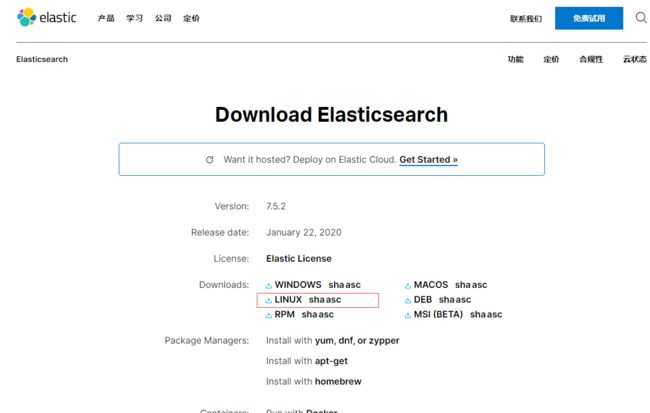

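Installing the required software

| Software | Version | Download |
|---|---|---|
| Elasticsearch | 6.2.4 | Official Elasticsearch download page |
| IK Chinese analyzer | 6.2.4 | Official IK analyzer download page |
| Kibana | 6.2.4 | Official Kibana download page |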

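1. Install Elasticsearch

Installation tutorials are easy to find online, so I won't go into detail here. Just note the following points:

- Adjust the memory (heap) settings in jvm.options, otherwise startup can easily fail for lack of memory
- Edit elasticsearch.yml to set the address, port, node name, and similar settings

Note: Elasticsearch 7.0 and later ship with a bundled JDK, so watch out for conflicts between the bundled JDK and a JDK already installed on the Linux host. Older releases such as 6.2.4 use the system JDK.

After installation, start ES. The default HTTP port is 9200.

./bin/elasticsearch

./bin/elasticsearch -d # run in the background

Open http://ip:9200/ in a browser. It returns version information such as: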

{
  "name": "Bb-td48",
  "cluster_name": "elasticsearch",
  "cluster_uuid": "_IM0iQAeToWALU0tq7rsZQ",
  "version": {
    "number": "6.2.4",
    "build_hash": "ccec39f",
    "build_date": "2018-04-12T20:37:28.497551Z",
    "build_snapshot": false,
    "lucene_version": "7.2.1",
    "minimum_wire_compatibility_version": "5.6.0",
    "minimum_index_compatibility_version": "5.0.0"
  },
  "tagline": "You Know, for Search"
}

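2. Install the IK analyzer for ES

Why use a Chinese analyzer such as IK with Elasticsearch? The analyzers that ship with ES are designed for English-like text and handle Chinese poorly (the standard analyzer splits Chinese text into single characters), so we need a Chinese analyzer for indexing and searching. Let's install IK.

1. Download the plugin from GitHub. Pick the release whose version matches your Elasticsearch version.

https://github.com/medcl/elasticsearch-analysis-ik/releases

2. Create a folder under the ES plugins directory

cd your-es-root/plugins/ && mkdir ik

3. Unzip the IK plugin into the ik folder (use the zip whose version matches your Elasticsearch install; the file name below is only an example)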

unzip elasticsearch-analysis-ik-6.4.3.zip
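IK releases are built for a specific Elasticsearch version, and a mismatched plugin generally prevents the node from starting. The following is a minimal sketch of a pre-flight check, not part of the original setup: `PluginVersionCheck` and its methods are hypothetical names, and the check only compares the `x.y.z` string found in the file name with the `version.number` value that `http://ip:9200/` reports.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: compares the version in a plugin file name with an Elasticsearch version. */
final class PluginVersionCheck {
    private static final Pattern VERSION = Pattern.compile("(\\d+\\.\\d+\\.\\d+)");

    /** Returns the first x.y.z version found in the text, or null if there is none. */
    static String extractVersion(String text) {
        Matcher m = VERSION.matcher(text);
        return m.find() ? m.group(1) : null;
    }

    /** True only when both strings carry the same x.y.z version. */
    static boolean sameVersion(String esVersion, String pluginFileName) {
        String es = extractVersion(esVersion);
        String plugin = extractVersion(pluginFileName);
        return es != null && es.equals(plugin);
    }

    public static void main(String[] args) {
        // "6.2.4" is the version.number value from the sample response above
        System.out.println(sameVersion("6.2.4", "elasticsearch-analysis-ik-6.2.4.zip")); // true
        System.out.println(sameVersion("6.2.4", "elasticsearch-analysis-ik-6.4.3.zip")); // false
    }
}
```

With the versions used in this walkthrough, the 6.4.3 zip would not match a 6.2.4 node, so it is worth checking before restarting Elasticsearch.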

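3. Install Kibana (visualization tool)

Kibana can be downloaded from the official site, but the download is often painfully slow. Here is a Huawei Cloud mirror that is much faster:

https://mirrors.huaweicloud.com/kibana/

It hosts every Kibana release.

Unpack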

tar -zxvf kibana-6.3.2-linux-x86_64.tar.gz

Edit the configuration file

vim config/kibana.yml

# Uncomment these settings and change the defaults as follows:

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.url: "http://192.168.202.128:9200"

kibana.index: ".kibana"

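Start

bin/kibana

Startup failed with an error.

It was clearly a permissions problem, so switch to root and give the es user ownership of the Kibana directory:

chown -R username:username /usr/local/elasticsearch/kibana/kibana-7.8.0/

The warning that followed was the real problem, though. It was not a missing-file error; the server simply did not have enough memory, so I gave up on Kibana.

You can work with Elasticsearch perfectly well without Kibana!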

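4. Integrate Elasticsearch into a Spring Boot project

(1) Add the Maven dependencies

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-elasticsearch</artifactId>
</dependency>

Lombok is also needed; add it yourself.

That is the core dependency. For testing and the later examples, the full set is:

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-elasticsearch</artifactId>
</dependency>
<dependency>
    <groupId>org.projectlombok</groupId>
    <artifactId>lombok</artifactId>
</dependency>
<dependency>
    <groupId>junit</groupId>
    <artifactId>junit</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-test</artifactId>
</dependency>
<dependency>
    <groupId>commons-beanutils</groupId>
    <artifactId>commons-beanutils</artifactId>
    <version>1.9.3</version>
</dependency>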

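(2) application.yml

spring:
  data:
    elasticsearch:
      # must match cluster.name in elasticsearch.yml
      cluster-name: my-application
      # 9300 is the transport port, not the 9200 HTTP port
      cluster-nodes: 101.201.101.206:9300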

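(3) Entity class

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;
import org.springframework.data.annotation.Id;
import org.springframework.data.elasticsearch.annotations.Document;
import org.springframework.data.elasticsearch.annotations.Field;
import org.springframework.data.elasticsearch.annotations.FieldType;

@Data
@AllArgsConstructor
@NoArgsConstructor
@Document(indexName = "item", type = "docs", shards = 1, replicas = 0)
public class Item {

    @Id
    private Long id;

    // Analyzed with IK so the title is searchable by Chinese words
    @Field(type = FieldType.Text, analyzer = "ik_max_word")
    private String title; // title

    @Field(type = FieldType.Keyword)
    private String category; // category

    @Field(type = FieldType.Keyword)
    private String brand; // brand

    @Field(type = FieldType.Double)
    private Double price; // price

    // Stored in the document but not indexed, so it cannot be searched
    @Field(index = false, type = FieldType.Keyword)
    private String images; // image URL
}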

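Spring Data declares field mappings through annotations. Three are used here:

- @Document: applied to the class; marks the entity as a document. Commonly used attributes:
  - indexName: name of the index
  - type: type within the index
  - shards: number of shards, default 5
  - replicas: number of replicas, default 1
- @Id: applied to a field; marks it as the document id
- @Field: applied to a field; marks it as a document field and sets its mapping attributes:
  - type: field type, a value of the FieldType enum
  - index: whether the field is indexed, boolean, default true
  - store: whether the field is stored, boolean, default false
  - analyzer: name of the analyzer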

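(4) Repository

A repository interface is required:

import org.springframework.data.elasticsearch.repository.ElasticsearchRepository;

import java.util.List;

public interface ItemRepository extends ElasticsearchRepository<Item, Long> {

    /**
     * Find items whose price lies within a range.
     *
     * @param price1 lower bound of the price range
     * @param price2 upper bound of the price range
     * @return the matching items
     */
    List<Item> findByPriceBetween(double price1, double price2);
}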
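Spring Data derives the query from the method name, so `findByPriceBetween` needs no implementation. To make the intended result concrete, here is a minimal in-memory sketch of the same filter. It is not how Spring Data executes the query; `PriceBetweenSketch` is a hypothetical name, and treating both bounds as inclusive is an assumption about the derived range query.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical in-memory stand-in for the derived "Between" query. */
final class PriceBetweenSketch {

    /** Keeps the prices p with low <= p <= high (both bounds assumed inclusive). */
    static List<Double> between(List<Double> prices, double low, double high) {
        List<Double> matches = new ArrayList<>();
        for (double p : prices) {
            if (p >= low && p <= high) {
                matches.add(p);
            }
        }
        return matches;
    }

    public static void main(String[] args) {
        // The five prices used by the sample items later in this post
        List<Double> prices = Arrays.asList(2999.00, 3999.00, 4999.00, 5100.00, 5999.00);
        System.out.println(between(prices, 5000.00, 6000.00)); // [5100.0, 5999.0]
    }
}
```

With the sample data inserted in the tests, a call with 5000.00 and 6000.00 should therefore return the two iPhone items.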

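(5) Tests

The tests below create, read, update, and delete data in Elasticsearch.

import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.search.aggregations.AggregationBuilders;
import org.elasticsearch.search.aggregations.bucket.terms.StringTerms;
import org.elasticsearch.search.aggregations.metrics.avg.InternalAvg;
import org.elasticsearch.search.sort.SortBuilders;
import org.elasticsearch.search.sort.SortOrder;
import org.junit.Test;
import org.junit.runner.RunWith;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.data.domain.Page;
import org.springframework.data.domain.PageRequest;
import org.springframework.data.domain.Sort;
import org.springframework.data.elasticsearch.core.ElasticsearchTemplate;
import org.springframework.data.elasticsearch.core.aggregation.AggregatedPage;
import org.springframework.data.elasticsearch.core.query.FetchSourceFilter;
import org.springframework.data.elasticsearch.core.query.NativeSearchQueryBuilder;
import org.springframework.test.context.junit4.SpringRunner;

import java.util.ArrayList;
import java.util.List;

@RunWith(SpringRunner.class)
@SpringBootTest
public class SpringbootElasticsearchApplicationTests {

    @Autowired
    private ElasticsearchTemplate elasticsearchTemplate;

    @Autowired
    private ItemRepository itemRepository;

    /**
     * Create the index
     */
    @Test
    public void createIndex() {
        // Create the index from the @Document annotation on Item
        elasticsearchTemplate.createIndex(Item.class);
        // Put the mapping, derived from the @Id and @Field annotations on Item
        elasticsearchTemplate.putMapping(Item.class);
    }

    /**
     * Delete the index
     */
    @Test
    public void deleteIndex() {
        elasticsearchTemplate.deleteIndex("item");
    }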

    /**
     * Insert one document
     */
    @Test
    public void insert() {
        Item item = new Item(1L, "小米手机7", "手机", "小米", 2999.00, "https://img12.360buyimg.com/n1/s450x450_jfs/t1/14081/40/4987/124705/5c371b20E53786645/c1f49cd69e6c7e6a.jpg");
        itemRepository.save(item);
    }

    /**
     * Insert several documents at once
     */
    @Test
    public void insertList() {
        List<Item> list = new ArrayList<>();
        list.add(new Item(2L, "坚果手机R1", "手机", "锤子", 3999.00, "https://img12.360buyimg.com/n1/s450x450_jfs/t1/14081/40/4987/124705/5c371b20E53786645/c1f49cd69e6c7e6a.jpg"));
        list.add(new Item(3L, "华为META20", "手机", "华为", 4999.00, "https://img12.360buyimg.com/n1/s450x450_jfs/t1/14081/40/4987/124705/5c371b20E53786645/c1f49cd69e6c7e6a.jpg"));
        list.add(new Item(4L, "iPhone X", "手机", "iPhone", 5100.00, "https://img12.360buyimg.com/n1/s450x450_jfs/t1/14081/40/4987/124705/5c371b20E53786645/c1f49cd69e6c7e6a.jpg"));
        list.add(new Item(5L, "iPhone XS", "手机", "iPhone", 5999.00, "https://img12.360buyimg.com/n1/s450x450_jfs/t1/14081/40/4987/124705/5c371b20E53786645/c1f49cd69e6c7e6a.jpg"));
        // saveAll takes a collection and inserts it in bulk
        itemRepository.saveAll(list);
    }

    /**
     * Update
     *
     * Update and insert go through the same save() call; the id decides which one happens,
     * much like sending a PUT request for an existing document.
     */

    /**
     * Delete all documents
     */
    @Test
    public void delete() {
        itemRepository.deleteAll();
    }

    /**
     * Basic query
     */
    @Test
    public void query() {
        // Fetch everything, sorted by price in descending order
        Iterable<Item> items = itemRepository.findAll(Sort.by("price").descending());
        items.forEach(item -> System.out.println("item = " + item));
    }

    /**
     * Derived query method
     */
    @Test
    public void queryByPriceBetween() {
        // Find items within a price range
        List<Item> list = itemRepository.findByPriceBetween(5000.00, 6000.00);
        list.forEach(item -> System.out.println("item = " + item));
    }

    /**
     * Custom query
     */
    @Test
    public void search() {
        // Build the query
        NativeSearchQueryBuilder queryBuilder = new NativeSearchQueryBuilder();
        // Match query: the search text is analyzed before matching against title
        queryBuilder.withQuery(QueryBuilders.matchQuery("title", "小米手机"));
        // Run the search
        Page<Item> items = itemRepository.search(queryBuilder.build());
        // Total number of hits
        long total = items.getTotalElements();
        System.out.println("total = " + total);
        items.forEach(item -> System.out.println("item = " + item));
    }

    /**
     * Paged query
     */
    @Test
    public void searchByPage() {
        // Build the query
        NativeSearchQueryBuilder queryBuilder = new NativeSearchQueryBuilder();
        // Term query on the keyword field category (the search text is not analyzed)
        queryBuilder.withQuery(QueryBuilders.termQuery("category", "手机"));
        // Paging: page numbers are zero-based
        int page = 0;
        int size = 2;
        queryBuilder.withPageable(PageRequest.of(page, size));
        // Run the search
        Page<Item> items = itemRepository.search(queryBuilder.build());
        long total = items.getTotalElements();
        System.out.println("total hits = " + total);
        System.out.println("total pages = " + items.getTotalPages());
        System.out.println("current page: " + items.getNumber());
        System.out.println("page size: " + items.getSize());
        items.forEach(item -> System.out.println("item = " + item));
    }

    /**
     * Sorting
     */
    @Test
    public void searchAndSort() {
        // Build the query
        NativeSearchQueryBuilder queryBuilder = new NativeSearchQueryBuilder();
        // Term query on the keyword field category (the search text is not analyzed)
        queryBuilder.withQuery(QueryBuilders.termQuery("category", "手机"));
        // Sort by price, ascending
        queryBuilder.withSort(SortBuilders.fieldSort("price").order(SortOrder.ASC));
        // Run the search
        Page<Item> items = this.itemRepository.search(queryBuilder.build());
        // Total number of hits
        long total = items.getTotalElements();
        System.out.println("total hits = " + total);
        items.forEach(item -> System.out.println("item = " + item));
    }

    /**
     * Bucket aggregation
     */
    @Test
    public void testAgg() {
        NativeSearchQueryBuilder queryBuilder = new NativeSearchQueryBuilder();
        // Exclude the document source from the hits; only the aggregation is needed
        queryBuilder.withSourceFilter(new FetchSourceFilter(new String[]{""}, null));
        // 1. Add a terms aggregation named "brands" on the brand field
        queryBuilder.addAggregation(AggregationBuilders.terms("brands").field("brand"));
        // 2. Run the query; the result has to be cast to AggregatedPage
        AggregatedPage<Item> aggPage = (AggregatedPage<Item>) itemRepository.search(queryBuilder.build());
        // 3. Read the result
        // 3.1 Get the aggregation named "brands". It is a terms aggregation
        // on a String field, so the result is cast to StringTerms
        StringTerms agg = (StringTerms) aggPage.getAggregation("brands");
        // 3.2 Get the buckets
        List<StringTerms.Bucket> buckets = agg.getBuckets();
        // 3.3 Iterate over them
        for (StringTerms.Bucket bucket : buckets) {
            // 3.4 The bucket key is the brand name
            System.out.println(bucket.getKeyAsString());
            // 3.5 Number of documents in the bucket
            System.out.println(bucket.getDocCount());
        }
    }

    /**
     * Nested aggregation: average price per brand
     */
    @Test
    public void testSubAgg() {
        NativeSearchQueryBuilder queryBuilder = new NativeSearchQueryBuilder();
        // Exclude the document source from the hits; only the aggregation is needed
        queryBuilder.withSourceFilter(new FetchSourceFilter(new String[]{""}, null));
        // 1. Add a terms aggregation named "brands" on the brand field
        queryBuilder.addAggregation(
                AggregationBuilders.terms("brands").field("brand")
                        // nested inside each brand bucket: average of price
                        .subAggregation(AggregationBuilders.avg("priceAvg").field("price"))
        );
        // 2. Run the query; the result has to be cast to AggregatedPage
        AggregatedPage<Item> aggPage = (AggregatedPage<Item>) this.itemRepository.search(queryBuilder.build());
        // 3. Read the result
        // 3.1 Get the aggregation named "brands". It is a terms aggregation
        // on a String field, so the result is cast to StringTerms
        StringTerms agg = (StringTerms) aggPage.getAggregation("brands");
        // 3.2 Get the buckets
        List<StringTerms.Bucket> buckets = agg.getBuckets();
        // 3.3 Iterate over them
        for (StringTerms.Bucket bucket : buckets) {
            // 3.4 The bucket key is the brand name; 3.5 the doc count is the number of items
            System.out.println(bucket.getKeyAsString() + ", count: " + bucket.getDocCount());
            // 3.6 Read the sub-aggregation result
            InternalAvg avg = (InternalAvg) bucket.getAggregations().asMap().get("priceAvg");
            System.out.println("average price: " + avg.getValue());
        }
    }
}
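The paging test prints the total pages, the current page, and the page size. The arithmetic behind those numbers can be sketched in a few lines. This is only an illustration with hypothetical names (`PageMathSketch`, `totalPages`, `offset`), assuming zero-based page numbers as in `PageRequest.of(page, size)`.

```java
/** Hypothetical helper showing the arithmetic behind the paging output. */
final class PageMathSketch {

    /** Number of pages needed to hold totalElements items at the given page size. */
    static int totalPages(long totalElements, int size) {
        if (size <= 0) {
            throw new IllegalArgumentException("size must be positive");
        }
        // Integer ceiling division
        return (int) ((totalElements + size - 1) / size);
    }

    /** Zero-based index of the first item on the given zero-based page. */
    static long offset(int page, int size) {
        return (long) page * size;
    }

    public static void main(String[] args) {
        // Five items in the category, two per page
        System.out.println(totalPages(5, 2)); // 3
        System.out.println(offset(0, 2));     // 0
        System.out.println(offset(1, 2));     // 2
    }
}
```

So if all five sample items are indexed, the paged query with `size = 2` should report 5 hits across 3 pages, with page 0 holding the first two.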

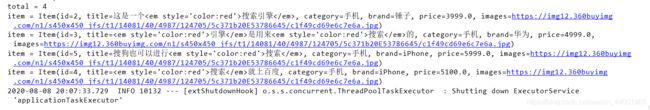
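For readers new to aggregations, the terms aggregation with an avg sub-aggregation behaves much like a group-by with a count and an average per group. Below is a minimal in-memory sketch of that idea using Java streams. It does not talk to Elasticsearch; `BrandAggSketch` and `Phone` are hypothetical names, and the brands are written in ASCII as stand-ins for the sample data.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

/** Hypothetical in-memory analogue of the brands/priceAvg aggregation. */
final class BrandAggSketch {

    /** Hypothetical stand-in for Item with only the two aggregated fields. */
    static final class Phone {
        private final String brand;
        private final double price;

        Phone(String brand, double price) {
            this.brand = brand;
            this.price = price;
        }

        String getBrand() { return brand; }
        double getPrice() { return price; }
    }

    /** Brands and prices mirroring the five sample items. */
    static List<Phone> sample() {
        return Arrays.asList(
                new Phone("Xiaomi", 2999.00),
                new Phone("Smartisan", 3999.00),
                new Phone("Huawei", 4999.00),
                new Phone("iPhone", 5100.00),
                new Phone("iPhone", 5999.00));
    }

    /** One bucket per brand with its document count, like the terms aggregation. */
    static Map<String, Long> countByBrand(List<Phone> phones) {
        return phones.stream()
                .collect(Collectors.groupingBy(Phone::getBrand, Collectors.counting()));
    }

    /** Average price inside each brand bucket, like the avg sub-aggregation. */
    static Map<String, Double> avgPriceByBrand(List<Phone> phones) {
        return phones.stream()
                .collect(Collectors.groupingBy(Phone::getBrand, Collectors.averagingDouble(Phone::getPrice)));
    }

    public static void main(String[] args) {
        Map<String, Long> counts = countByBrand(sample());
        Map<String, Double> averages = avgPriceByBrand(sample());
        counts.forEach((brand, count) ->
                System.out.println(brand + ", count: " + count + ", average price: " + averages.get(brand)));
    }
}
```

The real aggregation runs inside Elasticsearch, so only the bucket keys, counts, and averages travel back to the application.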

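(6) Highlighting matched terms in search results

Below is a query method from the test class that asks for highlighting:

@org.junit.Test
public void search() {
    // Build the query
    NativeSearchQueryBuilder queryBuilder = new NativeSearchQueryBuilder();
    // Match query: the search text is analyzed before matching against title
    queryBuilder.withQuery(QueryBuilders.matchQuery("title", "搜索引擎"));
    // HighlightBuilder comes from org.elasticsearch.search.fetch.subphase.highlight.
    // The tag strings are empty here; set them to the markup that should wrap each match.
    HighlightBuilder.Field hfield = new HighlightBuilder.Field("title")
            .preTags("")
            .postTags("")
            .fragmentSize(100);
    queryBuilder.withHighlightFields(hfield);
    // Run the search
    Page<Item> items = itemRepository.search(queryBuilder.build());
    // Total number of hits
    long total = items.getTotalElements();
    System.out.println("total = " + total);
    items.forEach(item -> System.out.println("item = " + item));
}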

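This had no effect, though. A search turned up the cause: in this version the default result mapper never copies the highlighted fragments onto the entity. The fix is below.

Create a MyResultMapper class that extends AbstractResultMapper and overrides its methods, as follows. Registering it as a @Component may not be enough for the repository to pick it up; as far as I know, one way to apply it is to pass it explicitly, for example elasticsearchTemplate.queryForPage(query, Item.class, myResultMapper).

It needs the commons-beanutils dependency listed above!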

package com.example.elasticsearch.springbootelasticsearch.repository;

import com.fasterxml.jackson.core.JsonEncoding;

import com.fasterxml.jackson.core.JsonFactory;

import com.fasterxml.jackson.core.JsonGenerator;

import org.apache.commons.beanutils.PropertyUtils;

import org.elasticsearch.action.get.GetResponse;

import org.elasticsearch.action.get.MultiGetItemResponse;

import org.elasticsearch.action.get.MultiGetResponse;

import org.elasticsearch.action.search.SearchResponse;

import org.elasticsearch.common.document.DocumentField;

import org.elasticsearch.common.text.Text;

import org.elasticsearch.search.SearchHit;

import org.elasticsearch.search.fetch.subphase.highlight.HighlightField;

import org.springframework.data.domain.Pageable;

import org.springframework.data.elasticsearch.ElasticsearchException;

import org.springframework.data.elasticsearch.annotations.Document;

import org.springframework.data.elasticsearch.annotations.ScriptedField;

import org.springframework.data.elasticsearch.core.AbstractResultMapper;

import org.springframework.data.elasticsearch.core.DefaultEntityMapper;

import org.springframework.data.elasticsearch.core.EntityMapper;

import org.springframework.data.elasticsearch.core.aggregation.AggregatedPage;

import org.springframework.data.elasticsearch.core.aggregation.impl.AggregatedPageImpl;

import org.springframework.data.elasticsearch.core.mapping.ElasticsearchPersistentEntity;

import org.springframework.data.elasticsearch.core.mapping.ElasticsearchPersistentProperty;

import org.springframework.data.elasticsearch.core.mapping.SimpleElasticsearchMappingContext;

import org.springframework.data.mapping.context.MappingContext;

import org.springframework.stereotype.Component;

import org.springframework.util.Assert;

import org.springframework.util.StringUtils;

import java.io.ByteArrayOutputStream;

import java.io.IOException;

import java.lang.reflect.InvocationTargetException;

import java.nio.charset.Charset;

import java.util.*;

@Component

public class MyResultMapper extends AbstractResultMapper {

private final MappingContext<? extends ElasticsearchPersistentEntity<?>, ElasticsearchPersistentProperty> mappingContext;

public MyResultMapper() {

this(new SimpleElasticsearchMappingContext());

}

public MyResultMapper(MappingContext<? extends ElasticsearchPersistentEntity<?>, ElasticsearchPersistentProperty> mappingContext) {

super(new DefaultEntityMapper(mappingContext));

Assert.notNull(mappingContext, "MappingContext must not be null!");

this.mappingContext = mappingContext;

}

public MyResultMapper(EntityMapper entityMapper) {

this(new SimpleElasticsearchMappingContext(), entityMapper);

}

public MyResultMapper(

MappingContext<? extends ElasticsearchPersistentEntity<?>, ElasticsearchPersistentProperty> mappingContext,

EntityMapper entityMapper) {

super(entityMapper);

Assert.notNull(mappingContext, "MappingContext must not be null!");

this.mappingContext = mappingContext;

}

@Override

public <T> AggregatedPage<T> mapResults(SearchResponse response, Class<T> clazz, Pageable pageable) {

long totalHits = response.getHits().getTotalHits();

float maxScore = response.getHits().getMaxScore();

List<T> results = new ArrayList<>();

for (SearchHit hit : response.getHits()) {

if (hit != null) {

T result = null;

if (!StringUtils.isEmpty(hit.getSourceAsString())) {

result = mapEntity(hit.getSourceAsString(), clazz);

} else {

result = mapEntity(hit.getFields().values(), clazz);

}

setPersistentEntityId(result, hit.getId(), clazz);

setPersistentEntityVersion(result, hit.getVersion(), clazz);

setPersistentEntityScore(result, hit.getScore(), clazz);

populateScriptFields(result, hit);

results.add(result);

}

}

return new AggregatedPageImpl<T>(results, pageable, totalHits, response.getAggregations(), response.getScrollId(),

maxScore);

}

private String concat(Text[] texts) {

StringBuilder sb = new StringBuilder();

for (Text text : texts) {

sb.append(text.toString());

}

return sb.toString();

}

private <T> void populateScriptFields(T result, SearchHit hit) {

if (hit.getFields() != null && !hit.getFields().isEmpty() && result != null) {

for (java.lang.reflect.Field field : result.getClass().getDeclaredFields()) {

ScriptedField scriptedField = field.getAnnotation(ScriptedField.class);

if (scriptedField != null) {

String name = scriptedField.name().isEmpty() ? field.getName() : scriptedField.name();

DocumentField searchHitField = hit.getFields().get(name);

if (searchHitField != null) {

field.setAccessible(true);

try {

field.set(result, searchHitField.getValue());

} catch (IllegalArgumentException e) {

throw new ElasticsearchException(

"failed to set scripted field: " + name + " with value: " + searchHitField.getValue(), e);

} catch (IllegalAccessException e) {

throw new ElasticsearchException("failed to access scripted field: " + name, e);

}

}

}

}

}

for (HighlightField field : hit.getHighlightFields().values()) {

try {

PropertyUtils.setProperty(result, field.getName(), concat(field.fragments()));

} catch (InvocationTargetException | IllegalAccessException | NoSuchMethodException e) {

throw new ElasticsearchException("failed to set highlighted value for field: " + field.getName()

+ " with value: " + Arrays.toString(field.getFragments()), e);

}

}

}

private <T> T mapEntity(Collection<DocumentField> values, Class<T> clazz) {

return mapEntity(buildJSONFromFields(values), clazz);

}

private String buildJSONFromFields(Collection<DocumentField> values) {

JsonFactory nodeFactory = new JsonFactory();

try {

ByteArrayOutputStream stream = new ByteArrayOutputStream();

JsonGenerator generator = nodeFactory.createGenerator(stream, JsonEncoding.UTF8);

generator.writeStartObject();

for (DocumentField value : values) {

if (value.getValues().size() > 1) {

generator.writeArrayFieldStart(value.getName());

for (Object val : value.getValues()) {

generator.writeObject(val);

}

generator.writeEndArray();

} else {

generator.writeObjectField(value.getName(), value.getValue());

}

}

generator.writeEndObject();

generator.flush();

return new String(stream.toByteArray(), Charset.forName("UTF-8"));

} catch (IOException e) {

return null;

}

}

@Override

public <T> T mapResult(GetResponse response, Class<T> clazz) {

T result = mapEntity(response.getSourceAsString(), clazz);

if (result != null) {

setPersistentEntityId(result, response.getId(), clazz);

setPersistentEntityVersion(result, response.getVersion(), clazz);

}

return result;

}

@Override

public <T> LinkedList<T> mapResults(MultiGetResponse responses, Class<T> clazz) {

LinkedList<T> list = new LinkedList<>();

for (MultiGetItemResponse response : responses.getResponses()) {

if (!response.isFailed() && response.getResponse().isExists()) {

T result = mapEntity(response.getResponse().getSourceAsString(), clazz);

setPersistentEntityId(result, response.getResponse().getId(), clazz);

setPersistentEntityVersion(result, response.getResponse().getVersion(), clazz);

list.add(result);

}

}

return list;

}

private <T> void setPersistentEntityId(T result, String id, Class<T> clazz) {

if (clazz.isAnnotationPresent(Document.class)) {

ElasticsearchPersistentEntity<?> persistentEntity = mappingContext.getRequiredPersistentEntity(clazz);

ElasticsearchPersistentProperty idProperty = persistentEntity.getIdProperty();

// Only deal with String because ES generated Ids are strings !

if (idProperty != null && idProperty.getType().isAssignableFrom(String.class)) {

persistentEntity.getPropertyAccessor(result).setProperty(idProperty, id);

}

}

}

private <T> void setPersistentEntityVersion(T result, long version, Class<T> clazz) {

if (clazz.isAnnotationPresent(Document.class)) {

ElasticsearchPersistentEntity<?> persistentEntity = mappingContext.getPersistentEntity(clazz);

ElasticsearchPersistentProperty versionProperty = persistentEntity.getVersionProperty();

// Only deal with Long because ES versions are longs !

if (versionProperty != null && versionProperty.getType().isAssignableFrom(Long.class)) {

// check that a version was actually returned in the response, -1 would indicate that

// a search didn't request the version ids in the response, which would be an issue

Assert.isTrue(version != -1, "Version in response is -1");

persistentEntity.getPropertyAccessor(result).setProperty(versionProperty, version);

}

}

}

private <T> void setPersistentEntityScore(T result, float score, Class<T> clazz) {

if (clazz.isAnnotationPresent(Document.class)) {

ElasticsearchPersistentEntity<?> entity = mappingContext.getRequiredPersistentEntity(clazz);

if (!entity.hasScoreProperty()) {

return;

}

entity.getPropertyAccessor(result) //

.setProperty(entity.getScoreProperty(), score);

}

}

}
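The part of MyResultMapper that matters for highlighting is the loop that joins each field's fragments and writes the result back onto the property of the same name. Here is a minimal sketch of that step with plain reflection in place of commons-beanutils. `HighlightCopySketch`, `Doc`, and `setStringProperty` are hypothetical names, and the `<em>` tags are only example markup.

```java
import java.lang.reflect.Method;

/** Hypothetical illustration of copying highlight fragments onto an entity property. */
final class HighlightCopySketch {

    /** Hypothetical stand-in for the Item entity; only the title property matters here. */
    static final class Doc {
        private String title;

        public String getTitle() { return title; }
        public void setTitle(String title) { this.title = title; }
    }

    /** Joins the fragments in order, like the mapper's concat(Text[]) helper. */
    static String concat(String[] fragments) {
        StringBuilder sb = new StringBuilder();
        for (String fragment : fragments) {
            sb.append(fragment);
        }
        return sb.toString();
    }

    /** Overwrites a String property through its public setter, located by name. */
    static void setStringProperty(Object target, String property, String value)
            throws ReflectiveOperationException {
        String setter = "set" + Character.toUpperCase(property.charAt(0)) + property.substring(1);
        Method method = target.getClass().getMethod(setter, String.class);
        method.invoke(target, value);
    }

    public static void main(String[] args) throws ReflectiveOperationException {
        Doc doc = new Doc();
        doc.setTitle("search engine basics");
        // Pretend these fragments came back from the highlighter
        String[] fragments = {"<em>search</em> ", "<em>engine</em> basics"};
        setStringProperty(doc, "title", concat(fragments));
        System.out.println(doc.getTitle()); // <em>search</em> <em>engine</em> basics
    }
}
```

One consequence of this design is that the entity's title no longer holds the stored value after mapping; it holds the highlighted text, which is fine for display but not for saving back.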

5. Spring Boot + Elasticsearch in practice

Just kidding, there is no more! Bye!

What's above is already enough, folks!