准备工作

- keras

- tensorflow

- numpy

- PIL

下载MNIST数据集

from keras.dataset import mnist

mnist.load_data(path)

path是保存的路径

模型结构

这个模型用了两个Convolution2D层,两个MaxPooling2D层,一个Flatten层,两个全连接Dense层,使用的激活函数是relu,优化器是adam

训练代码

from keras.model import Sequential

from keras.layers import Convolution2D, Dense, Flatten, Activation, MaxPooling2D

from keras.utils import to_catagorical

from keras.optimizers import Adam

import numpy as np

(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_train = x_train.reshape(60000, 28, 28, 1)

x_test = x_test.reshape(10000, 28, 28, 1)

y_test = to_categorical(y_test, 10)

y_train = to_categorical(y_train, 10)

# design model

model = Sequential()

model.add(Convolution2D(25, (5, 5), input_shape=(28, 28, 1)))

model.add(MaxPooling2D(2, 2))

model.add(Activation('relu'))

model.add(Convolution2D(50, (5, 5)))

model.add(MaxPooling2D(2, 2))

model.add(Activation('relu'))

model.add(Flatten())

model.add(Dense(50))

model.add(Activation('relu'))

model.add(Dense(10))

model.add(Activation('softmax'))

adam = Adam(lr=0.001)

# compile model

model.compile(optimizer=adam,loss='categorical_crossentropy',metrics=['accuracy'])

# training model

model.fit(x_train, y_train, batch_size=100, epochs=5)

# test model

print model.evaluate(x_test, y_test, batch_size=100)

# save model

model.save('/Users/zhang/Desktop/my_model2.h5')

训练效果

Using TensorFlow backend.

Epoch 1/5

2017-09-12 14:49:32.779373: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.1 instructions, but these are available on your machine and could speed up CPU computations.

2017-09-12 14:49:32.779389: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.2 instructions, but these are available on your machine and could speed up CPU computations.

2017-09-12 14:49:32.779393: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX instructions, but these are available on your machine and could speed up CPU computations.

2017-09-12 14:49:32.779398: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.

2017-09-12 14:49:32.779401: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.

60000/60000 [==============================] - 33s - loss: 2.5862 - acc: 0.8057

Epoch 2/5

60000/60000 [==============================] - 32s - loss: 0.0603 - acc: 0.9820

Epoch 3/5

60000/60000 [==============================] - 32s - loss: 0.0409 - acc: 0.9873

Epoch 4/5

60000/60000 [==============================] - 32s - loss: 0.0338 - acc: 0.9895

Epoch 5/5

60000/60000 [==============================] - 33s - loss: 0.0259 - acc: 0.9922

9900/10000 [============================>.] - ETA: 0s[0.054905540546023986, 0.98440000832080843]

- 可以看到在测试集上识别准确率达到了98.44%。

- 其实在训练过程中存在运气问题,对于batch_size=100来说,如果从一开始没有跑出最优值,可能就进入了死胡同,导致训练的准确率一直只有9%。

- 经过一轮训练就能达到80%的准确率,这样的训练效果很有可能导致过拟合,虽然在测试集上有98%的准确率。

测试

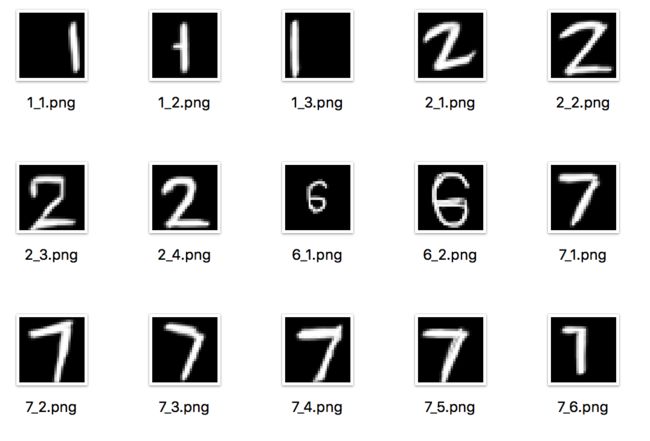

我用ps画出了几个手写数字的图片进行测试

这些都是28*28的图片

测试代码如下

from keras.models import load_model

import numpy as np

from PIL import Image

def ImageToMatrix(filename):

im = Image.open(filename)

#change to greyimage

im=im.convert("L")

data = im.getdata()

data = np.matrix(data,dtype='int')

return data

model = load_model('/Users/zhang/Desktop/my_model.h5')

while 1:

i = input('number:')

j = input('type:')

data = ImageToMatrix('/Users/zhang/Desktop/picture/'+str(i)+'_'+str(j)+'.png')

data = np.array(data)

data = data.reshape(1, 28, 28, 1)

print 'test['+str(i)+'_'+str(j)+'], num='+str(i)+':'

print model.predict_classes(

data, batch_size=1, verbose=0

)

选取了几个结果

number:7

type:1

test[7_1], num=7:

[7]

number:7

type:2

test[7_2], num=7:

[7]

number:7

type:3

test[7_3], num=7:

[7]

number:2

type:1

test[2_1], num=2:

[2]

number:1

type:1

test[1_1], num=1:

[4]

number:1

type:2

test[1_2], num=1:

[1]

number:1

type:3

test[1_3], num=1:

[1]

number:6

type:1

test[6_1], num=6:

[5]

总结:

- 该模型对于小字体没法正常识别(和训练集字体大小有关)

- 对于类似 '1' 等数字,如果放在图片边缘,如:1_1,没法准确识别

- 当然,对于颠倒方向,横放竖放的数字也没法准确识别

- 在mnist的测试集中,可以说是10000张图片只有大约200张识别错误

改进方案

- 对读取进来的图片进行先行处理(颠倒,居中,缩放等等),使得识别更加容易

- 对训练集进行处理,使用不同方向的数字训练集(训练量加大)

- 对神经层进行改进(由于没有深入了解,其实这次的神经网络也是瞎编的,只不过使用了卷积层和全连接层的结合)