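- Essentials for Java Developers: A Hands-On Guide to jstat, jmap, and jstack (Mastering the Three JVM Monitoring Tools from Scratch)

admin_Single

java, jvm, programming languages

Essentials for Java Developers: A Hands-On Guide to jstat, jmap, and jstack (Mastering the Three JVM Monitoring Tools from Scratch). Contents: Abstract; Core tools and scenarios; Key practices; Diagnostic workflow; Tool selection decision table; Tuning principles; Future trends; Chapter 1: GC basics: how garbage collection relates to monitoring; 1.1 "Garbage sorting" in the world of memory: GC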

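- A First Look at Spring MVC and Setting Up Spring MVC with Maven

NPU_Li Meng

Spring, SpringMVC, Maven, Web

Spring MVC is built on the MVC pattern (Model-View-Controller) and helps you build web applications that are as flexible and loosely coupled as the Spring Framework itself. Core classes and interfaces: DispatcherServlet (front controller), HandlerMapping (handler mapping), Controller (controller), ViewResolver (view resolver), View (view rendering). Request flow in Spring MVC: when a user clicks a link in the browser or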

- Reading Notes on Operating System Concepts: pp. 272-285

codists

reading notes, operating systems

Operating System Concepts, study day 27: pp. 272-285, 14 pages in total. I. Technical summary. 1. semaphore: A semaphore S is an integer variable that, apart from initialization, is accessed only through two standard atomic operations: wait() and signal(). 2. monit
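The semaphore definition quoted in this teaser can be illustrated with a small sketch. It is not taken from the notes themselves: it assumes Python's standard `threading.Semaphore`, where `acquire()` plays the role of wait() and `release()` the role of signal(), and the helper name `run_workers` is hypothetical.

```python
import threading


def run_workers(num_workers: int, permits: int) -> int:
    """Run workers guarded by a counting semaphore.

    Returns the largest number of workers observed inside the guarded
    section at the same time; it can never exceed `permits`.
    """
    sem = threading.Semaphore(permits)   # integer counter initialized to `permits`
    lock = threading.Lock()              # protects the bookkeeping below
    state = {"inside": 0, "peak": 0}

    def worker() -> None:
        sem.acquire()                    # wait(): blocks while the counter is 0
        try:
            with lock:
                state["inside"] += 1
                state["peak"] = max(state["peak"], state["inside"])
            with lock:
                state["inside"] -= 1
        finally:
            sem.release()                # signal(): increments the counter

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["peak"]
```

Calling `run_workers(8, 2)` starts eight threads but lets at most two of them into the guarded section at once.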

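- Chunked File Upload with Asynchronous Merging

零三零等哈来

java, spring, front end

For large files, we use chunked asynchronous upload to avoid saturating network bandwidth and to avoid running out of memory. Controller layer: the front end splits the file into chunks. It first computes the file's MD5 hash, which later enables instant upload of files the server already has, then computes how many chunks the file can be split into, yielding the total chunk count and the current chunk index. @RequestMapping("/uploadFile") @SentinelResource(value="uploadFile", blockHandler="uploa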
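The chunk-planning step described in this teaser (hash the file, then derive the total chunk count and each chunk's index) might look roughly like the sketch below. The article's own code is Java and is truncated here, so this is only an illustration; the function name `plan_chunks` and the in-memory `bytes` input are assumptions.

```python
import hashlib
import math


def plan_chunks(data: bytes, chunk_size: int):
    """Return (md5_hex, total_chunks, [(index, chunk_bytes), ...])."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    # The whole-file MD5 lets a server recognize a file it already stores.
    md5_hex = hashlib.md5(data).hexdigest()
    # Round up so a trailing partial chunk is counted.
    total = math.ceil(len(data) / chunk_size)
    chunks = [
        (i, data[i * chunk_size:(i + 1) * chunk_size])
        for i in range(total)
    ]
    return md5_hex, total, chunks
```

For a real large file the data would be read and hashed in a streaming fashion instead of being held in memory; the arithmetic for the chunk count stays the same.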

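- Integrating TDengine with Spring Cloud (Native and Mapper Approaches), Part 1

张小娟

springcloud, tdengine, mybatis

This part covers the first approach, using a mapper; for the native approach, see Part 2 on my home page. I. Add the dependencies to the pom file: com.zaxxer HikariCP; com.taosdata.jdbc taos-jdbcdriver 3.5.3. II. Configure the database connection in Nacos: spring: datasource: url: jdbc:TAOS://localhost:6030/test username: root password: yourPassWord driver-cl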

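- How Does MyBatis StatementHandler Create Statement Objects and Execute SQL?

冰糖心书房

MyBatis source code series, 2025 Java interview series, mybatis, sql, database

In MyBatis, StatementHandler is responsible for creating Statement objects and executing SQL statements. The flow is as follows: 1. The StatementHandler.prepare() method: creating the JDBC Statement object. StatementHandler.prepare(Connection connection, Integer transactionTimeout) is the core method; based on the MappedSta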

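- Integrating TDengine with Spring Cloud (Native and Mapper Approaches), Part 2: The Native Approach

张小娟

springcloud, tdengine, spring

For the mapper approach, see the other article. I. Add the dependency to the pom file: com.taosdata.jdbc taos-jdbcdriver 3.5.3. II. Fill in the database values in Nacos: tdengine: datasource: location: yourLocation username: root password: yourPassword. III. Write the TDengineUtil file. The util file below includes the database creation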

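- Daily Interview Question: Suppose a HashMap Is 1 GB in Size and a User Request Happens to Trigger a Resize. What Happens? How Would You Redesign HashMap to Optimize This?

晚夜微雨问海棠呀

java, programming languages

I. How it works: the core problem with HashMap's resize mechanism. A resize is triggered when the HashMap's size > capacity * loadFactor (the default load factor is 0.75). The resize proceeds as follows: create a new array with double the capacity (newCap=oldCap{ private Node[] oldTable; private Node[] newTable; private volatile int migrationIndex = 0; // migration progress pointer public v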
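The trigger condition stated in this teaser (size > capacity * loadFactor, followed by doubling the capacity) can be traced with a few lines of arithmetic. This is a sketch of the rule only, not of `java.util.HashMap` itself; the function name and the initial capacity of 16 are assumptions made for illustration.

```python
def capacity_after_inserts(num_entries: int,
                           capacity: int = 16,
                           load_factor: float = 0.75) -> int:
    """Simulate the resize rule and return the final table capacity."""
    size = 0
    for _ in range(num_entries):
        size += 1
        # Resize when the entry count exceeds capacity * load_factor.
        if size > capacity * load_factor:
            capacity *= 2   # the new array is twice as large
    return capacity
```

With the defaults, the 13th insert crosses the threshold of 12 and doubles the table to 32; the 25th insert crosses 24 and doubles it again to 64. Each doubling rehashes every existing entry, which is why a single resize on a very large map is costly.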

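- Daily Algorithm Problem: Nim Game, Staircase

晚夜微雨问海棠呀

algorithms, games

Given n steps, each player may jump 1 to m steps per turn, and whoever reaches the last step wins. Assuming both players play optimally, determine whether the first player wins. Input format: one line containing two integers n and m (1 ≤ n, m ≤ 10^9). Output format: output "Yes" if the first player can win; otherwise output "No". n,m=map(int,input().split())ifnm: if n % (m+1) ≠ 0, the first player can play so that the number of remaining steps becomes a multiple of (m+1), passing the losing position to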
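The rule quoted in this teaser, that the first player wins exactly when n % (m+1) ≠ 0, fits in one function. The original snippet is garbled, so this is a reconstruction of the stated rule and not the author's code; the function name is hypothetical.

```python
def first_player_wins(n: int, m: int) -> bool:
    """Return True if the first player wins with n steps and jumps of 1..m.

    If n is not a multiple of (m + 1), the first player jumps n % (m + 1)
    steps, leaving a multiple of (m + 1). After that, whatever jump k the
    opponent makes, the first player answers with (m + 1 - k), so the
    remaining count stays a multiple of (m + 1) until it reaches zero.
    """
    return n % (m + 1) != 0


if __name__ == "__main__":
    n, m = map(int, input().split())
    print("Yes" if first_player_wins(n, m) else "No")
```

The check is O(1), so the 10^9 input bounds are not a concern.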

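- PV Operations (Java Code): A Practical Guide to Process Synchronization

Cloud_.

java, programming languages, operating systems, concurrency

Introduction: in Java concurrent programming, resource synchronization is like the meshing of gears in a precision instrument, where any deviation can bring the system down. This article explains the classic PV operations from a Java perspective and uses runnable code examples to show the underlying logic of thread synchronization. I. Java's semaphore mechanism. 1.1 The Semaphore class: import java.util.concurrent.Semaphore; // create a semaphore with 5 permits (a counting semaphore) Semaphore semaphore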

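- Kubernetes Namespaces

忍界英雄

docker, k8s

Kubernetes namespaces. What is a namespace? A namespace is a mechanism for organizing and isolating Kubernetes resources. In a Kubernetes cluster, namespaces divide the physical cluster into multiple logical parts, each with its own set of resources (such as Pods, Services, and ConfigMaps) that do not interfere with one another, which keeps resource management isolated. Namespaces are not unique to Kubernetes: container technologies such as Docker also use namespaces (Na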

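- Writing Tomcat by Hand: Implementing the Basics

2301_81535770

tomcat, java

First, Tomcat is a piece of software on which any project can be loaded and run. At its core is a collection of Servlets, which is itself a HashMap. Tomcat must support Servlets, so it provides the underlying servlet resources: the HttpServlet abstract class, HttpRequest, and HttpResponse; without them we could not create a Servlet. With these in place we can write projects under webapps. A project has two main kinds of resources: servlet resources and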

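- Solving Data Inconsistency with Redis Distributed Locks and Transaction Compensation

yiridancan

concurrent programming, Redis, distributed systems, redis, database, cache

Design and implementation of a Redis-based distributed device inventory service. Overview: this article presents a distributed device inventory service built on Redis. It uses distributed locks, a retry mechanism, and transaction compensation to keep inventory operations atomic and consistent under concurrency. The approach suits scenarios such as IoT device management and distributed resource scheduling. Implementation: import java.util.HashMap; import java.util.Map; import org.slf4j.Logger; import org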

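- 41. What Do You Need to Do If the Key Type of `std::map` Is a Custom Type? (With Functors)

桃酥403

桃酥's study notes (C++), c++, stl

When a custom type is used as the key of a std::map in C++, you must define how keys are compared. There are two ways to do this. Method 1: overload the less-than operator in the custom type myMap; Method 2: define a custom comparison function object. If you cannot modify the custom type (for example, it comes from a third-party library) and therefore cannot overload the less-than operator in it, you can define a **functor** that operates on the type. When the map is initialized, this functor is passed as the third parameter of std::map: st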

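- Cesium in Practice (1): Hello World

迦南giser

WebGIS, #Cesium, webgis, cesium

Contents: Preface; What is Cesium; Cesium core classes (Viewer, Scene, Entity, DataSourceCollection); Creating your first Cesium application (project setup, a Cesium hello world); Summary. Preface: for most of my first year at work my main stack has been mapbox-gl and threejs, but as a GIS graduate I have always been very interested in Cesium. Cesium not only retains the 3D rendering capabilities of threejs, but also has built in a large number of geographic data rendering AP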

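- node-imap-sync-client: An IMAP Client Library for Synchronization

eli960

MAIL, front end, javascript, node.js

node-imap-sync-client. Description. URL: https://gitee.com/linuxmail/node-imap-sync-client. An IMAP client for synchronization; see the examples directory. Features of this IMAP client: all commands are promise-style; it is mainly used to synchronize mailbox and message data with an IMAPD server; it supports creating, deleting, and moving (renaming) folders; it supports copying, moving, deleting, flagging, and uploading messages; it supports fetching the message UIDs in a folder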

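- Coding Practice Day 7: Hash Tables 2

徵686

hash table, data structures

1. 4Sum II (LeetCode 454): use a hash table to check for existence. class Solution{ // 4Sum II: count occurrences public int fourSumCount(int[] nums1, int[] nums2, int[] nums3, int[] nums4){ HashMap map = new HashMap magazine.length()) return false; // Java string length: s.length() for(cha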
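The hash-table idea behind 4Sum II can be sketched as follows. The teaser's Java code is truncated and garbled, so this is an independent illustration in Python, and the function name `four_sum_count` is an assumption.

```python
from collections import Counter
from typing import List


def four_sum_count(nums1: List[int], nums2: List[int],
                   nums3: List[int], nums4: List[int]) -> int:
    """Count tuples (i, j, k, l) with nums1[i]+nums2[j]+nums3[k]+nums4[l] == 0."""
    # Tally every pairwise sum from the first two arrays.
    pair_sums = Counter(a + b for a in nums1 for b in nums2)
    # For each pair from the last two arrays, look up the sum that cancels it.
    return sum(pair_sums[-(c + d)] for c in nums3 for d in nums4)
```

Splitting the four arrays into two halves brings the cost down from O(n^4) for brute force to O(n^2) time and O(n^2) extra space.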

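- sqlmap Notes

君如尘

network security: penetration testing notes, notes

1. Runtime environment: sqlmap is written in Python, so first make sure Python is installed on your system. sqlmap supports Python 2.6, 2.7, and Python 3.4 and above. 2. Common commands. General format: python sqlmap.py -r <injection point address> --<options>. -r: POST request; -u: GET request; --level=: test level; --risk=: test risk; -v: verbosity level; -p: target a specific injection point; --threads: change the number of threads to speed things up; --ba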

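- Sending WeChat Messages Automatically with Python

热心市民小汪

python, WeChat, programming languages

Requirement: send WeChat messages on a schedule with Python. import pyautogui as pg import pyperclip as pc from apscheduler.schedulers.blocking import BlockingScheduler """Send messages automatically on a schedule""" # pause 1 second between operations pg.PAUSE=1 name='Hello~' msg='是时候点餐啦!!' def main(): # open WeChat pg.hotkey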

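- Drawing Word Clouds in Python, Drawing Circular Word Clouds in Python, with a Detailed API Guide

请一直在路上

python, programming languages

In Python, the usual library for word clouds is wordcloud. Below is a detailed walkthrough of the core API parameters, along with a complete usage example. I. Parameters (name, type, default, description): width, int, 400, width of the word cloud in pixels; height, int, 200, height of the word cloud in pixels; background_color, str, "black", background color, given as a color name (such as "white") or a hex value (such as "#FFFFFF"); colormap, str/matp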

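- Employee Management (3): Deleting Employees, Updating Employees, Global Exception Handler, Employee Statistics

汐栊

java, database, programming languages

Contents: Employee management: Deleting employees (Controller layer, Service layer, Mapper interface, two ways to receive parameters); Updating employees: query for display (Controller layer, Service layer, Mapper interface), updating data (Controller layer, Service layer, Mapper interface); Program optimization; Employee statistics: position statistics (Controller layer, Service layer, Mapper interface), gender statistics. Employee management: Deleting employees: clarify the responsibilities of the three-tier architecture: C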

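- L2-050 Understanding Parseltongue in C++ (PTA Ladder Tournament, Test Case 1)

zzy678

c++

This problem looks fairly simple, but my first attempt timed out and scored only 19 points. My STL skills are not great; I started with pair and vector, found a few small problems, and after fixing them still only got 19. Two of the failures were timeouts. Maybe the lookups were too slow? I then looked at other people's solutions and followed the approach of combining map and vector, which passed easily, except that test case 1 still failed. In the end I found that the handling of the first character needed to be improved. It is a tiny pitfall; watch out for it. #includ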

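- Tiered Pricing in OpenStack in Practice: The Hashmap Module from Basics to Precise Billing

冯·诺依曼的

openstack, cloud computing, linux

Contents: Hashmap module overview; Core concepts; Configuration steps in detail; Use cases; Caveats and extensions. 1. Hashmap module overview: OpenStack's Rating module handles billing statistics for resource usage, and Hashmap is its core component for defining flexible pricing rules. With Hashmap, administrators can: set different unit prices by resource type (such as CPU, storage, and network); implement tiered pricing (for example, a discounted unit price once usage exceeds a threshold); bind resources to services and service groups to support complex billing policies. 2. Core concepts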

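- Showing the South China Sea Islands When Drawing a Map of Hainan with ECharts (Ready-Made GeoJSON Data)

火火PM打怪中

problems at work

Scenario: the product manager requires the South China Sea Islands to be shown whenever the map of Hainan is displayed. Problem: on the map of China, ECharts adds the South China Sea Islands by default when mapName='china', but it does not do so for Hainan Province. Solution: obtain the GeoJSON data for the South China Sea Islands and put it directly into the Hainan Province map data (removing Sansha City). Result: {"type":"FeatureCollection","features":[{"id":"460100"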

- Description of a Poisson Imagery Super Resolution Algorithm: Paper Reading

青铜锁00

paper reading, Radar, paper reading

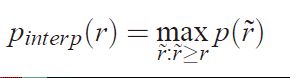

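Description of a Poisson Imagery Super Resolution Algorithm. 1. Research goals and significance: 1.1 Research goals; 1.2 Practical significance. 2. Novel methods and model: 2.1 Core idea; 2.2 Key formulas and derivations (2.2.1 Bayesian framework and probabilistic model; 2.2.2 Optimization objective of MAP estimation; 2.2.3 Super-resolution parameter α); 2.3 Advantages over traditional methods. 3. Experimental validation and results: 3.1 Experimental design; 3.2 Key results. 4. Future research directions (real-beam radar): 4.1 Challenges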

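- Lanqiao Cup Preparation Plan

laitywgx

Lanqiao Cup, career and development

A sprint schedule for the Lanqiao Cup Python Group B at 1-2 hours per day (lasting one month and focused on frequently tested topics). Week 1: core algorithm breakthroughs. Day 1 (Monday). Focus: dynamic programming (the 0/1 knapsack problem). Resource: AcWing "Lanqiao Cup Tutoring Course", Lecture 8 (knapsack templates). Code template to memorize: # 1D 0/1 knapsack template n,V=map(int,input().split()) dp=[0]*(V+1) for _ in range(n): w,v=map(int,input().split()) (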
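The one-dimensional 0/1 knapsack template that this teaser begins to quote is cut off before its inner loop. A self-contained sketch of the standard technique follows; it is not the article's exact template, and the function name and the `(weight, value)` tuple input are assumptions in place of reading from stdin.

```python
from typing import List, Tuple


def knapsack_01(capacity: int, items: List[Tuple[int, int]]) -> int:
    """Return the best total value using each (weight, value) item at most once."""
    dp = [0] * (capacity + 1)   # dp[c] = best value with total weight <= c
    for weight, value in items:
        # Iterate capacities downward so each item is counted at most once:
        # dp[c - weight] still refers to the state before this item was seen.
        for c in range(capacity, weight - 1, -1):
            dp[c] = max(dp[c], dp[c - weight] + value)
    return dp[capacity]
```

Iterating upward instead would turn this into the unbounded knapsack, where an item may be reused; the loop direction is the only difference between the two templates.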

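- AutoImageProcessor Code Analysis

fydw_715

Transformers, artificial intelligence

The following is an overview of the AutoImageProcessor class, organized into class attributes, class methods, static methods, instance attributes, and instance methods, with a description of what each method does. Class attributes: no explicitly defined class attributes. Global methods: IMAGE_PROCESSOR_MAPPING_NAMES. 1. Iterating over the IMAGE_PROCESSOR_MAPPING_NAMES dictionary: for model_type, image_processors in IMAGE_PROCESSOR_MA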

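- Development Guide: Integrating Tianditu Services with OpenLayers

喆星时瑜

WebGIS, #Tianditu, OpenLayers, GIS, Tianditu, WebGIS, HTML, maps, map API

The following is a detailed OpenLayers development tutorial for GIS beginners, with an in-depth walkthrough of the code. I. Setting up the development environment. 1.1 Including the OpenLayers library: ol.css contains the visual styles for map controls, layers, and so on; ol.js is the core OpenLayers library; pinning a fixed version (such as v7.3.0) is recommended for stability. 1.2 Map container setup: .map { /* set the display size of the map control */ height: 95vh; width: 95vw; } Use viewport units (vh/vw) for a responsive layout; reserve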

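- C++ map + pair Combo! Master the Golden Duo of map and pair in 3 Minutes

Reese_Cool

STL, data structures and algorithms, c++, algorithms, programming languages, stl

Contents: pair: I. Basic concepts; II. Declaring and initializing a pair; III. Accessing and modifying members; IV. Common operations (1. comparison, 2. swapping values, 3. the tie function for unpacking a pair); V. Use cases for pair; VI. pair versus struct/class; VII. pair versus tuple; VIII. Code examples (1. returning multiple values, 2. storing key-value pairs); IX. Summary. map: I. Basic concepts; II. Declaring and initializing a map; III. Common operations; IV. Use cases for map; V. Caveats. In C++ programming, map and pair are two very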

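- Python's Anonymous Function, Lambda: More Than Just Omitting the Function Name

橙色小博

Python learning journey, python, programming languages

Contents: 1. Preface; 2. Basic usage of lambda functions; 3. Applications of lambda functions: 3.1 Combining with map; 3.2 lambda with if-else; 3.3 Multi-argument lambdas; 3.4 Nested lambdas; 3.5 Dictionaries and lambda (my personal favorite); 3.6 Other uses of lambda; 4. Summary: the programming philosophy of lambda. 1. Preface: in the vast world of Python, the lambda function is like a brilliant pearl whose concise, elegant form gives code a distinctive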

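- Parameters Lost When Submitting via POST in Tomcat

357029540

java, tomcat, jsp

Excerpted from http://my.oschina.net/luckyi/blog/213209

Yesterday, while fixing a bug, I ran into something strange: an AJAX data submission that had always worked suddenly started failing and disrupted other processing flows.

On inspection I found that the page was handling a lot of data. At first I thought the submission order was wrong, but after changing it I found that this was not the cause. I then removed part of the data and submitted again; the smaller payload went through.

After restoring the larger data set and tracing the submitted FORM DATA, I found that the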

- Adding a JSP Template in MyEclipse (Deleted, 2008-08-18)

ljy325

jsp, xml, MyEclipse

In the directory D:\Program Files\MyEclipse 6.0\myeclipse\eclipse\plugins\com.genuitec.eclipse.wizards_6.0.1.zmyeclipse601200710\templates\jsp, find Jsp.vtl, make a copy, rename it to jsp2.vtl, then edit its contents into the format you want and save.

Then, in D:\Progr

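- A Summary of Common JavaScript Validation Scripts

eksliang

JavaScript, javaScript, form validation

When reposting, please credit the source: http://eksliang.iteye.com/blog/2098985

The validation scripts below are ones I have accumulated over several years of development. I am sharing them today, and I still use them in my current projects. They cover date validation and comparison, non-empty checks, ID card number validation, numeric validation, email validation, phone number validation, and more.
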

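- Microsoft BI (4)

18289753290

Microsoft BI, SSIS

1)

Q: How do I view the output of a component in SSIS?

A: MessageBox.Show(Dts.Variables["v_lastTimestamp"].Value.ToString());

This is a variable we defined in the package.

2) When joining to the destination table in a one-to-many relationship, be sure to use the unique key as the join field.

3)

Q: In SSIS, if data from multiple data sources is inserted into one destination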

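- Periodically Splitting a Large Table to Back Up Its Data

酷的飞上天空

large data volume

At work I ran into a database table holding a large amount of data; it is a log table. It is normally never queried, but if it is not split, the data piles up and even a simple SQL statement takes several minutes to run.

Tools: a Linux shell script plus MySQL's own administration commands

How it works: replace the original table with a table that has the same structure.

The Linux shell script is as follows:

=======================Start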

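- Describing the Essence and Teaching to the Learner

永夜-极光

reflections, essays

Whatever I run into, I instinctively want to explore its essence and find the most vivid way to describe it.

I firmly believe that for any given thing there must be a description and explanation that is the most vivid, the closest to its essence, and the easiest for people to understand
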

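- Feeling Lost...

随便小屋

essays

I am a first-year graduate student this year, in what is currently the most popular field (computer science). I did my undergraduate degree at a third-tier school, and to get a better springboard I joined the crowd taking the graduate entrance exam and was lucky enough to get into graduate school (I won't name the school, for fear of damaging its reputation).

First, my background: my undergraduate major was software engineering. It focused on software development and was almost no different from computer science. Because the school itself was third-tier, the teachers simply led students through some material and let them graduate. My understanding of the specialized material is very shallow. Take the languages I studied, for example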

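- Intent and Applicability of the 23 Design Patterns

aijuans

design patterns

Factory Method. Intent: define an interface for creating an object, but let subclasses decide which class to instantiate. Factory Method lets a class defer instantiation to its subclasses. Applicability: when a class cannot anticipate the class of objects it must create; when a class wants its subclasses to specify the objects it creates; when classes delegate responsibility for creating objects to one of several helper subclasses, and you want to localize the knowledge of which helper subclass is the delegate.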

Abstr

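- synchronized and volatile in Java

aoyouzi

java, volatile, synchronized

Any discussion of thread synchronization in Java has to cover two keywords: synchronized and volatile. And any discussion of those two keywords has to cover the JVM memory model. The JVM divides memory into main memory and working memory. Main memory is shared by all threads, whereas working memory is private to each thread; it holds copies of some main-memory variables, and updates to those variables happen directly in working memo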

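- JavaScript Array Operations and the this Keyword

百合不是茶

js, array operations, this keyword

JavaScript array operations:

I. Creating arrays:

1. Creating an array

var array = new Array(); // create an array

var array = new Array([size]); // create an array with a given length; note that this is the length, not an upper bound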

var arrayObj = new Array([element0[, element1[, ...[, elementN]]]

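- Someone Else's Reflections on an Alibaba Interview

bijian1013

interviews, sharing, work reflections, Alibaba interview

Original post: http://greemranqq.iteye.com/blog/2007170

I have always worked on enterprise systems. Although I have kept studying technology on my own, I still felt something was missing, so I planned to spend a few months digging deeper into internet technologies and some fundamentals. This interview came somewhat unexpectedly and suddenly; here are my impressions.
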

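- Itest, Taobao's Testing Framework

Bill_chen

spring, maven, frameworks, unit testing, JUnit

Itest is a unit testing framework developed by TaoBao's testing department. It has JUnit 4 at its core

and integrates mainstream testing frameworks such as DbUnit and Unitils; it is fairly easy to use.

I used it in a recent project; the details of using itest are as follows:

1. Add the itest framework in Maven: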

<dependency>

<groupId>com.taobao.test</groupId>

- [Java Multithreading, Part 2] Solving the Producer-Consumer Problem with Multiple Conditions

bit1129

java, multithreading

package com.tom;

import java.util.LinkedList;

import java.util.Queue;

import java.util.concurrent.ThreadLocalRandom;

import java.util.concurrent.locks.Condition;

import java.util.concurrent.loc

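- Converting Chinese Characters to Pinyin with pinyin4j

白糖_

pinyin4j

In a past project I needed to convert Chinese characters to pinyin, and I found the pinyin4j library online. It is very useful. Without further ado, here is the code: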

import java.util.HashSet;

import java.util.Set;

import net.sourceforge.pinyin4j.PinyinHelper;

import net.sourceforge.pinyin

- Fixing org.hibernate.TransactionException: JDBC begin failed

bozch

ssh, database, exceptions, DBCP

org.hibernate.TransactionException: JDBC begin failed: at org.hibernate.transaction.JDBCTransaction.begin(JDBCTransaction.java:68) at org.hibernate.impl.SessionImp

- Java: Union-Find (Disjoint-Set): Merging Multiple Sets into Sets with No Intersection

bylijinnan

java

import java.util.ArrayList;

import java.util.Arrays;

import java.util.HashMap;

import java.util.HashSet;

import java.util.Iterator;

import java.util.List;

import java.util.Map;

import java.ut

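- Garbled Output from Java PrintWriter

chenbowen00

java

In a small program that reads and writes files, I found that the file written by PrintWriter contained garbled characters. The fix is mainly to use the same encoding for the input and output streams.

Reading the file:

BufferedReader

Reads text from a character-input stream, buffering characters so as to provide for the efficient reading of characters, arrays, and lines.

The buffer size may be specified, or the default size may be used. The default is large enough for most purposes.

In general, each read request made of a Reader causes a corresponding read request to be made of the underlying character or byte stream. Therefore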

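- [Weather and Climate] Extreme Climate Environments

comsci

environment

Suppose the space environment changes abnormally... An alien civilization does not show itself, but uses some kind of weather weapon to attack Earth's climate system and incites wars among Earth's nations. After a period of preparation... it weakens the overall strength of Earth's civilization as much as possible and only then invades......

So what kind of precautions should the nations of Earth take?
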

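- Using ORDER BY Together with UNION in Oracle

daizj

UNION, oracle, order by

When using UNION, the ORDER BY clause must come last to be valid, as follows:

ORDER BY can only be used in the last subquery of the UNION, and it applies to the entire result set of the UNION. So:

If the subqueries of the UNION have different column names, for example

SQL code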

select supplier_id, supplier_name

from suppliers

UNI

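- Unit Tests for Read/Write Splitting in the zeus Persistence Layer

deng520159

unit testing

This article covers the unit tests for zeus read/write splitting; database and table sharding is only one step away. Here is the code: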

1.ZeusMasterSlaveTest.java

package com.dengliang.zeus.webdemo.test;

import java.util.ArrayList;

import java.util.List;

import org.junit.Assert;

import org.j

- Yii: Truncating Strings (UTF-8) with a Component

dcj3sjt126com

yii

1. Put Helper.php in the protected\components folder.

2. Usage:

Helper::truncate_utf8_string($content,20,false); // without an ellipsis Helper::truncate_utf8_string($content,20); // with an ellipsis


- Installing memcache and the PHP Extension

dcj3sjt126com

PHP

Install memcache: tar zxvf memcache-2.2.5.tgz cd memcache-2.2.5/ /usr/local/php/bin/phpize (?) ./configure --with-php-confi

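- Handling Dates with JsonObject

feifeilinlin521

java, json, JsonOjbect, JsonArray, JSONException

I wrote this article because I hit an exception when converting dates with json: net.sf.json.JSONException: java.lang.reflect.InvocationTargetException. The cause is that when a Map receives java.sql.Date values returned from the database, converting them to JSON fails. Without further ado, here is the code.
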

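- Ehcache (06): Listeners

234390216

listeners, listener, ehcache

Listeners

Ehcache has two kinds of listeners: CacheManagerEventListener, which listens to a CacheManager, and CacheEventListener, which listens to a Cache. In Ehcache, listeners are created and take effect through their corresponding listener factories. Below we introduce both kinds of listeners.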

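- Fixing the Bug Where Activiti's Built-In Modeler Fails to Open in Chrome 34

jackyrong

Activiti

In Activiti Modeler, the designer fails to open in Chrome 34, although other browsers work.

This has been confirmed as a bug; see

http://forums.activiti.org/content/activiti-modeler-doesnt-work-chrome-v34

To fix it, find

oryx.debug.js

and add the following at the very top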

if (!Document.

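- WeChat Shared Shipping Address API: The Definitive Fix

laotu5i0

WeChat development

I recently had to integrate WeChat's shared shipping address API and kept failing. After struggling for several days with no way out, I found that the posts online were all complaints too. I am sharing the problems I hit and how I solved them, in the hope that this can supplement WeChat's API documentation and let later developers integrate quickly instead of wasting several days, or even a week, as we did. I will skip the insults and curses; I am a fairly civil person.

If you found this post by searching, you have already run into all kinds of ed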

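- On Talent

netkiller.github.com

work, interviews, recruiting, netkiller, talent

On Talent

Every month I get many calls from headhunters. Some are fairly professional, but in my view the vast majority fall well short of the title. Having dealt with many of them, I have come to understand their work, including their methods. Overall, the headhunting industry in China is still at an early stage.

In short: "recommend blindly, win by volume."

The current situation

Many people working in human resources simply do not know how to find talent. We are stuck in an awkward position where talent cannot find companies and companies cannot find talent.

When a company recruits, the department that needs people usually sets out the hiring requirements, and then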

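- Setting Up a CentOS 6 Server: Contents

rensanning

centos

(1) Installing CentOS

ISO (desktop/minimal), Cloud (AWS/Alibaba Cloud), Virtualization (VMWare, VirtualBox)

Details

(2) Common Linux commands

cd, ls, rm, chmod, ...

Details

(3) Initial environment setup

User management, network settings, security settings, ...

Details

(4) Resident services (daemons)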

- [Help] Cannot Update the Primary Key in mongoDB

toknowme

mongodb

Query query = new Query(); query.addCriteria(new Criteria("_id").is(o.getId()));

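- A Collection of jQuery Plugins for Auto-Loading When the Page Scrolls to the Bottom

xp9802

jquery

Many social networking sites use infinite-scroll pagination to improve the user experience: when you scroll to the bottom of the list, more content loads automatically without a click. Here are 10 jQuery infinite-scroll plugins:

1. jQuery ScrollPagination

jQuery ScrollPagination is a jQuery plugin that supports loading data with infinite scrolling.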

2. jQuery Screw

S