(十三)集成学习(下)——Stacking

参考:DataWhale教程链接

集成学习(上)所有Task:

(一)集成学习上——机器学习三大任务

(二)集成学习上——回归模型

(三)集成学习上——偏差与方差

(四)集成学习上——回归模型评估与超参数调优

(五)集成学习上——分类模型

(六)集成学习上——分类模型评估与超参数调优

(七)集成学习中——投票法

(八)集成学习中——bagging

(九)集成学习中——Boosting简介&AdaBoost

(十)集成学习中——GBDT

(十一)集成学习中——XgBoost、LightGBM

(十二)集成学习(下)——Blending

(十三)集成学习(下)——Stacking

Stacking集成学习算法

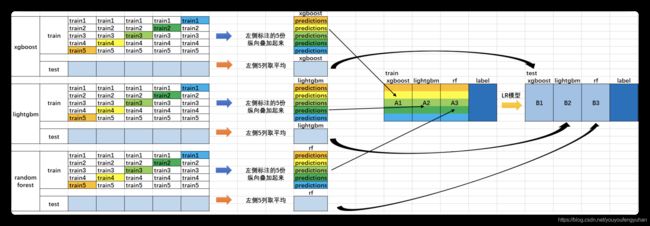

Stacking是一种比赛中常用的trick,严格它来说并不是一种算法,而是精美而又复杂的,对模型集成的一种策略。Stacking集成算法可以理解为一个两层的集成,第一层含有多个基础分类器,把输出的预测结果作为第二层的输入特征, 第二层的分类器通常是逻辑回归。

Blending存在的问题:Blending在第二层集成的时候中只会用了验证集的数据产生的特征,对数据的使用浪费比较大。

Stacking:采用交叉验证的思路,产生多组验证集,且可以充分利用训练集。

Blending与Stacking对比:

| 集成方法 | Blending | Stacking |

|---|---|---|

| 集成的特征 | 一次划分,特征简单,数据少 | cv交叉验证,特征略复杂,数据多 |

| 泛化能力 | 可能会过拟合 | 健壮性好 |

看一下Stacking是如何集成算法的:(参考案例:https://www.cnblogs.com/Christina-Notebook/p/10063146.html)

由于sklearn并没有直接对Stacking的方法,因此我们需要下载mlxtend工具包(pip install mlxtend)

# 1. 简单堆叠3折CV分类

from sklearn import datasets

iris = datasets.load_iris()

X, y = iris.data[:, 1:3], iris.target

from sklearn.model_selection import cross_val_score

from sklearn.linear_model import LogisticRegression

from sklearn.neighbors import KNeighborsClassifier

from sklearn.naive_bayes import GaussianNB

from sklearn.ensemble import RandomForestClassifier

from mlxtend.classifier import StackingCVClassifier

RANDOM_SEED = 42

clf1 = KNeighborsClassifier(n_neighbors=1)

clf2 = RandomForestClassifier(random_state=RANDOM_SEED)

clf3 = GaussianNB()

lr = LogisticRegression()

# Starting from v0.16.0, StackingCVRegressor supports

# `random_state` to get deterministic result.

sclf = StackingCVClassifier(classifiers=[clf1, clf2, clf3], # 第一层分类器

meta_classifier=lr, # 第二层分类器

random_state=RANDOM_SEED)

print('3-fold cross validation:\n')

for clf, label in zip([clf1, clf2, clf3, sclf], ['KNN', 'Random Forest', 'Naive Bayes','StackingClassifier']):

scores = cross_val_score(clf, X, y, cv=3, scoring='accuracy')

print("Accuracy: %0.2f (+/- %0.2f) [%s]" % (scores.mean(), scores.std(), label))

3-fold cross validation:

Accuracy: 0.91 (+/- 0.01) [KNN]

Accuracy: 0.95 (+/- 0.01) [Random Forest]

Accuracy: 0.91 (+/- 0.02) [Naive Bayes]

Accuracy: 0.93 (+/- 0.02) [StackingClassifier]

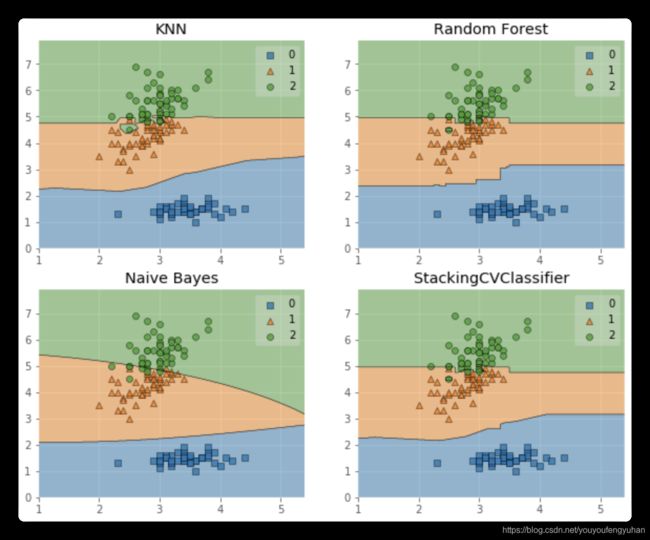

# 我们画出决策边界

from mlxtend.plotting import plot_decision_regions

import matplotlib.gridspec as gridspec

import itertools

gs = gridspec.GridSpec(2, 2)

fig = plt.figure(figsize=(10,8))

for clf, lab, grd in zip([clf1, clf2, clf3, sclf],

['KNN',

'Random Forest',

'Naive Bayes',

'StackingCVClassifier'],

itertools.product([0, 1], repeat=2)):

clf.fit(X, y)

ax = plt.subplot(gs[grd[0], grd[1]])

fig = plot_decision_regions(X=X, y=y, clf=clf)

plt.title(lab)

plt.show()

使用第一层所有基分类器所产生的类别概率值作为meta-classfier的输入。需要在StackingClassifier 中增加一个参数设置:use_probas = True。

另外,还有一个参数设置average_probas = True,那么这些基分类器所产出的概率值将按照列被平均,否则会拼接。

例如:

基分类器1:predictions=[0.2,0.2,0.7]

基分类器2:predictions=[0.4,0.3,0.8]

基分类器3:predictions=[0.1,0.4,0.6]

1)若use_probas = True,average_probas = True,

则产生的meta-feature 为:[0.233, 0.3, 0.7]

2)若use_probas = True,average_probas = False,

则产生的meta-feature 为:[0.2,0.2,0.7,0.4,0.3,0.8,0.1,0.4,0.6]

# 2.使用概率作为元特征

clf1 = KNeighborsClassifier(n_neighbors=1)

clf2 = RandomForestClassifier(random_state=1)

clf3 = GaussianNB()

lr = LogisticRegression()

sclf = StackingCVClassifier(classifiers=[clf1, clf2, clf3],

use_probas=True, #

meta_classifier=lr,

random_state=42)

print('3-fold cross validation:\n')

for clf, label in zip([clf1, clf2, clf3, sclf],

['KNN',

'Random Forest',

'Naive Bayes',

'StackingClassifier']):

scores = cross_val_score(clf, X, y,

cv=3, scoring='accuracy')

print("Accuracy: %0.2f (+/- %0.2f) [%s]"

% (scores.mean(), scores.std(), label))

3-fold cross validation:

Accuracy: 0.91 (+/- 0.01) [KNN]

Accuracy: 0.95 (+/- 0.01) [Random Forest]

Accuracy: 0.91 (+/- 0.02) [Naive Bayes]

Accuracy: 0.95 (+/- 0.02) [StackingClassifier]

# 3. 堆叠5折CV分类与网格搜索(结合网格搜索调参优化)

from sklearn.linear_model import LogisticRegression

from sklearn.neighbors import KNeighborsClassifier

from sklearn.naive_bayes import GaussianNB

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import GridSearchCV

from mlxtend.classifier import StackingCVClassifier

# Initializing models

clf1 = KNeighborsClassifier(n_neighbors=1)

clf2 = RandomForestClassifier(random_state=RANDOM_SEED)

clf3 = GaussianNB()

lr = LogisticRegression()

sclf = StackingCVClassifier(classifiers=[clf1, clf2, clf3],

meta_classifier=lr,

random_state=42)

params = {

'kneighborsclassifier__n_neighbors': [1, 5],

'randomforestclassifier__n_estimators': [10, 50],

'meta_classifier__C': [0.1, 10.0]}

grid = GridSearchCV(estimator=sclf,

param_grid=params,

cv=5,

refit=True)

grid.fit(X, y)

cv_keys = ('mean_test_score', 'std_test_score', 'params')

for r, _ in enumerate(grid.cv_results_['mean_test_score']):

print("%0.3f +/- %0.2f %r"

% (grid.cv_results_[cv_keys[0]][r],

grid.cv_results_[cv_keys[1]][r] / 2.0,

grid.cv_results_[cv_keys[2]][r]))

print('Best parameters: %s' % grid.best_params_)

print('Accuracy: %.2f' % grid.best_score_)

0.947 +/- 0.03 {'kneighborsclassifier__n_neighbors': 1, 'meta_classifier__C': 0.1, 'randomforestclassifier__n_estimators': 10}

0.933 +/- 0.02 {'kneighborsclassifier__n_neighbors': 1, 'meta_classifier__C': 0.1, 'randomforestclassifier__n_estimators': 50}

0.940 +/- 0.02 {'kneighborsclassifier__n_neighbors': 1, 'meta_classifier__C': 10.0, 'randomforestclassifier__n_estimators': 10}

0.940 +/- 0.02 {'kneighborsclassifier__n_neighbors': 1, 'meta_classifier__C': 10.0, 'randomforestclassifier__n_estimators': 50}

0.953 +/- 0.02 {'kneighborsclassifier__n_neighbors': 5, 'meta_classifier__C': 0.1, 'randomforestclassifier__n_estimators': 10}

0.953 +/- 0.02 {'kneighborsclassifier__n_neighbors': 5, 'meta_classifier__C': 0.1, 'randomforestclassifier__n_estimators': 50}

0.953 +/- 0.02 {'kneighborsclassifier__n_neighbors': 5, 'meta_classifier__C': 10.0, 'randomforestclassifier__n_estimators': 10}

0.953 +/- 0.02 {'kneighborsclassifier__n_neighbors': 5, 'meta_classifier__C': 10.0, 'randomforestclassifier__n_estimators': 50}

Best parameters: {'kneighborsclassifier__n_neighbors': 5, 'meta_classifier__C': 0.1, 'randomforestclassifier__n_estimators': 10}

Accuracy: 0.95

# 如果我们打算多次使用回归算法,我们要做的就是在参数网格中添加一个附加的数字后缀,如下所示:

from sklearn.model_selection import GridSearchCV

# Initializing models

clf1 = KNeighborsClassifier(n_neighbors=1)

clf2 = RandomForestClassifier(random_state=RANDOM_SEED)

clf3 = GaussianNB()

lr = LogisticRegression()

sclf = StackingCVClassifier(classifiers=[clf1, clf1, clf2, clf3],

meta_classifier=lr,

random_state=RANDOM_SEED)

params = {

'kneighborsclassifier-1__n_neighbors': [1, 5],

'kneighborsclassifier-2__n_neighbors': [1, 5],

'randomforestclassifier__n_estimators': [10, 50],

'meta_classifier__C': [0.1, 10.0]}

grid = GridSearchCV(estimator=sclf,

param_grid=params,

cv=5,

refit=True)

grid.fit(X, y)

cv_keys = ('mean_test_score', 'std_test_score', 'params')

for r, _ in enumerate(grid.cv_results_['mean_test_score']):

print("%0.3f +/- %0.2f %r"

% (grid.cv_results_[cv_keys[0]][r],

grid.cv_results_[cv_keys[1]][r] / 2.0,

grid.cv_results_[cv_keys[2]][r]))

print('Best parameters: %s' % grid.best_params_)

print('Accuracy: %.2f' % grid.best_score_)

0.940 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 1, 'kneighborsclassifier-2__n_neighbors': 1, 'meta_classifier__C': 0.1, 'randomforestclassifier__n_estimators': 10}

0.940 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 1, 'kneighborsclassifier-2__n_neighbors': 1, 'meta_classifier__C': 0.1, 'randomforestclassifier__n_estimators': 50}

0.940 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 1, 'kneighborsclassifier-2__n_neighbors': 1, 'meta_classifier__C': 10.0, 'randomforestclassifier__n_estimators': 10}

0.940 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 1, 'kneighborsclassifier-2__n_neighbors': 1, 'meta_classifier__C': 10.0, 'randomforestclassifier__n_estimators': 50}

0.960 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 1, 'kneighborsclassifier-2__n_neighbors': 5, 'meta_classifier__C': 0.1, 'randomforestclassifier__n_estimators': 10}

0.953 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 1, 'kneighborsclassifier-2__n_neighbors': 5, 'meta_classifier__C': 0.1, 'randomforestclassifier__n_estimators': 50}

0.953 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 1, 'kneighborsclassifier-2__n_neighbors': 5, 'meta_classifier__C': 10.0, 'randomforestclassifier__n_estimators': 10}

0.953 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 1, 'kneighborsclassifier-2__n_neighbors': 5, 'meta_classifier__C': 10.0, 'randomforestclassifier__n_estimators': 50}

0.960 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 5, 'kneighborsclassifier-2__n_neighbors': 1, 'meta_classifier__C': 0.1, 'randomforestclassifier__n_estimators': 10}

0.953 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 5, 'kneighborsclassifier-2__n_neighbors': 1, 'meta_classifier__C': 0.1, 'randomforestclassifier__n_estimators': 50}

0.953 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 5, 'kneighborsclassifier-2__n_neighbors': 1, 'meta_classifier__C': 10.0, 'randomforestclassifier__n_estimators': 10}

0.953 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 5, 'kneighborsclassifier-2__n_neighbors': 1, 'meta_classifier__C': 10.0, 'randomforestclassifier__n_estimators': 50}

0.953 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 5, 'kneighborsclassifier-2__n_neighbors': 5, 'meta_classifier__C': 0.1, 'randomforestclassifier__n_estimators': 10}

0.953 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 5, 'kneighborsclassifier-2__n_neighbors': 5, 'meta_classifier__C': 0.1, 'randomforestclassifier__n_estimators': 50}

0.953 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 5, 'kneighborsclassifier-2__n_neighbors': 5, 'meta_classifier__C': 10.0, 'randomforestclassifier__n_estimators': 10}

0.953 +/- 0.02 {'kneighborsclassifier-1__n_neighbors': 5, 'kneighborsclassifier-2__n_neighbors': 5, 'meta_classifier__C': 10.0, 'randomforestclassifier__n_estimators': 50}

Best parameters: {'kneighborsclassifier-1__n_neighbors': 1, 'kneighborsclassifier-2__n_neighbors': 5, 'meta_classifier__C': 0.1, 'randomforestclassifier__n_estimators': 10}

Accuracy: 0.96

# 4.在不同特征子集上运行的分类器的堆叠

##不同的1级分类器可以适合训练数据集中的不同特征子集。以下示例说明了如何使用scikit-learn管道和ColumnSelector:

from sklearn.datasets import load_iris

from mlxtend.classifier import StackingCVClassifier

from mlxtend.feature_selection import ColumnSelector

from sklearn.pipeline import make_pipeline

from sklearn.linear_model import LogisticRegression

iris = load_iris()

X = iris.data

y = iris.target

pipe1 = make_pipeline(ColumnSelector(cols=(0, 2)), # 选择第0,2列

LogisticRegression())

pipe2 = make_pipeline(ColumnSelector(cols=(1, 2, 3)), # 选择第1,2,3列

LogisticRegression())

sclf = StackingCVClassifier(classifiers=[pipe1, pipe2],

meta_classifier=LogisticRegression(),

random_state=42)

sclf.fit(X, y)

StackingCVClassifier(classifiers=[Pipeline(steps=[('columnselector',

ColumnSelector(cols=(0, 2))),

('logisticregression',

LogisticRegression())]),

Pipeline(steps=[('columnselector',

ColumnSelector(cols=(1, 2,

3))),

('logisticregression',

LogisticRegression())])],

meta_classifier=LogisticRegression(), random_state=42)

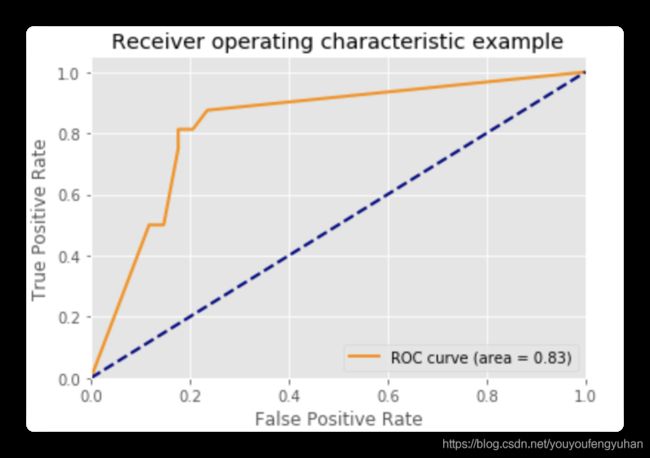

# 5.ROC曲线 decision_function

### 像其他scikit-learn分类器一样,它StackingCVClassifier具有decision_function可用于绘制ROC曲线的方法。

### 请注意,decision_function期望并要求元分类器实现decision_function。

from sklearn import model_selection

from sklearn.linear_model import LogisticRegression

from sklearn.neighbors import KNeighborsClassifier

from sklearn.svm import SVC

from sklearn.ensemble import RandomForestClassifier

from mlxtend.classifier import StackingCVClassifier

from sklearn.metrics import roc_curve, auc

from sklearn.model_selection import train_test_split

from sklearn import datasets

from sklearn.preprocessing import label_binarize

from sklearn.multiclass import OneVsRestClassifier

iris = datasets.load_iris()

X, y = iris.data[:, [0, 1]], iris.target

# Binarize the output

y = label_binarize(y, classes=[0, 1, 2])

n_classes = y.shape[1]

RANDOM_SEED = 42

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.33, random_state=RANDOM_SEED)

clf1 = LogisticRegression()

clf2 = RandomForestClassifier(random_state=RANDOM_SEED)

clf3 = SVC(random_state=RANDOM_SEED)

lr = LogisticRegression()

sclf = StackingCVClassifier(classifiers=[clf1, clf2, clf3],

meta_classifier=lr)

# Learn to predict each class against the other

classifier = OneVsRestClassifier(sclf)

y_score = classifier.fit(X_train, y_train).decision_function(X_test)

# Compute ROC curve and ROC area for each class

fpr = dict()

tpr = dict()

roc_auc = dict()

for i in range(n_classes):

fpr[i], tpr[i], _ = roc_curve(y_test[:, i], y_score[:, i])

roc_auc[i] = auc(fpr[i], tpr[i])

# Compute micro-average ROC curve and ROC area

fpr["micro"], tpr["micro"], _ = roc_curve(y_test.ravel(), y_score.ravel())

roc_auc["micro"] = auc(fpr["micro"], tpr["micro"])

plt.figure()

lw = 2

plt.plot(fpr[2], tpr[2], color='darkorange',

lw=lw, label='ROC curve (area = %0.2f)' % roc_auc[2])

plt.plot([0, 1], [0, 1], color='navy', lw=lw, linestyle='--')

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('Receiver operating characteristic example')

plt.legend(loc="lower right")

plt.show()