Deploying HBase + Phoenix and connecting to Phoenix with DBeaver

1. HBase installation steps

https://blog.csdn.net/muyingmiao/article/details/103002598

2 Installing Phoenix

2.1 Phoenix official website

http://phoenix.apache.org/

2.2 Phoenix download locations

http://www.apache.org/dyn/closer.lua/phoenix/

https://mirrors.tuna.tsinghua.edu.cn/apache/phoenix/

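If you do not want to build a matching version yourself, you can use a prebuilt release from the official site. For example, for CDH 5.16.1 you can choose the following version: http://www.apache.org/dyn/closer.lua/phoenix/apache-phoenix-4.14.0-cdh5.14.2/bin/apache-phoenix-4.14.0-cdh5.14.2-bin.tar.gz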

2.3 Phoenix installation steps

- Download and expand the latest phoenix-[version]-bin.tar.

- Add the phoenix-[version]-server.jar to the classpath of all HBase region servers and the master, and remove any previous version. An easy way to do this is to copy it into the HBase lib directory (use phoenix-core-[version].jar for Phoenix 3.x).

- Restart HBase.

- Add the phoenix-[version]-client.jar to the classpath of any Phoenix client (in this article, that means adding the client jar to DBeaver).
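The "remove any previous version" step is easy to forget when copying a new server jar into HBase's lib directory. Below is a minimal sketch, assuming the jars follow the phoenix-*-server.jar / phoenix-core-*.jar naming used above. It lists every other Phoenix jar in that directory. The function name `stale_phoenix_jars` is hypothetical.

```python
import glob
import os


def stale_phoenix_jars(hbase_lib_dir, keep_jar):
    """Return Phoenix server/core jars in hbase_lib_dir other than keep_jar.

    keep_jar is the file name of the jar being installed, e.g.
    "phoenix-4.14.0-cdh5.14.2-server.jar". Anything returned is a candidate
    for removal before restarting HBase.
    """
    patterns = ("phoenix-*-server.jar", "phoenix-core-*.jar")
    found = set()
    for pattern in patterns:
        found.update(glob.glob(os.path.join(hbase_lib_dir, pattern)))
    # Keep the jar we are installing; everything else is an older copy.
    return sorted(p for p in found if os.path.basename(p) != keep_jar)
```

Run it on each region server and on the master, for example with `stale_phoenix_jars("/home/hadoop/app/hbase-1.2.0-cdh5.15.1/lib", "phoenix-4.14.0-cdh5.14.2-server.jar")`, and delete whatever it returns.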

The concrete steps are as follows:

2.3.1. Download (CDH environment: Hadoop and HBase, plus a Phoenix build such as 4.14.0-cdh5.11.2)

2.3.2. Copy the Phoenix server jar into HBase's lib directory

[hadoop@hadoop002 phoenix]$ cp phoenix-4.14.0-cdh5.14.2-server.jar ../hbase-1.2.0-cdh5.15.1/lib/

2.3.3. Edit HBase's hbase-site.xml and add the following 4 properties:

- hbase.table.sanity.checks = false

- hbase.regionserver.wal.codec = org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec

- phoenix.schema.isNamespaceMappingEnabled = true

- phoenix.schema.mapSystemTablesToNamespace = true
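In hbase-site.xml each of these settings becomes a `<property>` element with `<name>` and `<value>` children, placed inside `<configuration>`. The sketch below renders the four properties in that form using the standard library's xml.etree.ElementTree. The helper name `render_properties` is hypothetical, and its output is meant to be pasted into the existing `<configuration>` element.

```python
import xml.etree.ElementTree as ET

# The four properties from step 2.3.3 (values copied from the list above).
PHOENIX_PROPS = {
    "hbase.table.sanity.checks": "false",
    "hbase.regionserver.wal.codec":
        "org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec",
    "phoenix.schema.isNamespaceMappingEnabled": "true",
    "phoenix.schema.mapSystemTablesToNamespace": "true",
}


def render_properties(props):
    """Render a dict as <property><name/><value/></property> elements, one per line."""
    chunks = []
    for name, value in props.items():
        prop = ET.Element("property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
        chunks.append(ET.tostring(prop, encoding="unicode"))
    return "\n".join(chunks)


if __name__ == "__main__":
    print(render_properties(PHOENIX_PROPS))
```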

2.3.4. Restart HBase

[hadoop@hadoop002 bin]$ ./stop-hbase.sh

stopping hbase....................

[hadoop@hadoop002 bin]$ ./start-hbase.sh

starting master, logging to /home/hadoop/app/hbase-1.2.0-cdh5.15.1//logs/hbase-hadoop-master-hadoop002.out

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

hadoop002: starting regionserver, logging to /home/hadoop/app/hbase-1.2.0-cdh5.15.1/bin/../logs/hbase-hadoop-regionserver-hadoop002.out

hadoop002: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

hadoop002: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

[hadoop@hadoop002 bin]$ jps

9874 SecondaryNameNode

21667 QuorumPeerMain

9398 NameNode

17094 HMaster

10151 NodeManager

9513 DataNode

17561 Jps

22780 nacos-server.jar

17404 HRegionServer

10030 ResourceManager
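After the restart, the jps listing should include both HMaster and HRegionServer. The sketch below checks this from captured jps text. On the server you could pass it `subprocess.run(["jps"], capture_output=True, text=True).stdout`. The function names are hypothetical.

```python
REQUIRED_DAEMONS = ("HMaster", "HRegionServer")


def running_daemons(jps_output):
    """Parse jps output ("<pid> <name>" per line) into a set of process names."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split(None, 1)
        if len(parts) == 2 and parts[0].isdigit():
            names.add(parts[1].strip())
    return names


def missing_daemons(jps_output, required=REQUIRED_DAEMONS):
    """Return the required HBase daemons that do not appear in the jps output."""
    running = running_daemons(jps_output)
    return [d for d in required if d not in running]
```

An empty list means both HBase daemons are up. If HRegionServer is missing, its log under the HBase logs directory is the first place to look.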

2.3.5. Copy HBase's hbase-site.xml into Phoenix's bin directory, replacing the hbase-site.xml that ships with Phoenix

A symbolic link is recommended, so that Phoenix always picks up the current HBase configuration:

[hadoop@hadoop002 bin]$ ln -s /home/hadoop/app/hbase-1.2.0-cdh5.15.1/conf/hbase-site.xml hbase-site.xml

[hadoop@hadoop002 bin]$ ll

total 144

drwxrwxr-x 2 hadoop hadoop 24 Jun 5 2018 argparse-1.4.0

drwxrwxr-x 4 hadoop hadoop 96 Jun 5 2018 config

-rw-rw-r-- 1 hadoop hadoop 32864 Jun 5 2018 daemon.py

-rwxrwxr-x 1 hadoop hadoop 1881 Jun 5 2018 end2endTest.py

-rw-rw-r-- 1 hadoop hadoop 1621 Jun 5 2018 hadoop-metrics2-hbase.properties

-rw-rw-r-- 1 hadoop hadoop 3056 Jun 5 2018 hadoop-metrics2-phoenix.properties

lrwxrwxrwx 1 hadoop hadoop 58 Mar 21 09:30 hbase-site.xml -> /home/hadoop/app/hbase-1.2.0-cdh5.15.1/conf/hbase-site.xml

If HDFS in your CDH environment runs in HA mode, also copy /etc/hdfs/conf/core-site.xml and /etc/hdfs/conf/hdfs-site.xml into the bin directory.
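Both parts of this step can be scripted. The sketch below does two things. First, it replaces the hbase-site.xml shipped with Phoenix by a symlink to HBase's copy (the `ln -s` above). Second, for HDFS HA, it copies core-site.xml and hdfs-site.xml next to sqlline.py. The directory arguments are assumptions: for example, /home/hadoop/app/hbase-1.2.0-cdh5.15.1/conf, /etc/hdfs/conf and /home/hadoop/app/apache-phoenix-4.14.0-cdh5.14.2-bin/bin in this setup. The function names are hypothetical.

```python
import os
import shutil


def link_hbase_site(hbase_conf_dir, phoenix_bin_dir):
    """Make phoenix_bin_dir/hbase-site.xml a symlink to HBase's hbase-site.xml."""
    target = os.path.join(hbase_conf_dir, "hbase-site.xml")
    link = os.path.join(phoenix_bin_dir, "hbase-site.xml")
    if os.path.lexists(link):  # the file shipped with Phoenix, or an old link
        os.remove(link)
    os.symlink(target, link)
    return link


def copy_hdfs_ha_conf(hdfs_conf_dir, phoenix_bin_dir):
    """For HDFS HA only: copy core-site.xml and hdfs-site.xml into the bin directory."""
    copied = []
    for name in ("core-site.xml", "hdfs-site.xml"):
        copied.append(shutil.copy(os.path.join(hdfs_conf_dir, name), phoenix_bin_dir))
    return copied
```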

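2.3.6. Initialize Phoenix (the first sqlline connection creates the SYSTEM tables). Note: run sqlline.py with the system's default Python, 2.7.5.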

[hadoop@hadoop002 bin]$ ./sqlline.py hadoop002:2181

Setting property: [incremental, false]

Setting property: [isolation, TRANSACTION_READ_COMMITTED]

issuing: !connect jdbc:phoenix:hadoop002:2181 none none org.apache.phoenix.jdbc.PhoenixDriver

Connecting to jdbc:phoenix:hadoop002:2181

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/app/apache-phoenix-4.14.0-cdh5.14.2-bin/phoenix-4.14.0-cdh5.14.2-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/app/hadoop-2.6.0-cdh5.15.1/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

20/03/21 09:45:15 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Connected to: Phoenix (version 4.14)

Driver: PhoenixEmbeddedDriver (version 4.14)

Autocommit status: true

Transaction isolation: TRANSACTION_READ_COMMITTED

Building list of tables and columns for tab-completion (set fastconnect to true to skip)...

133/133 (100%) Done

Done

sqlline version 1.2.0

0: jdbc:phoenix:hadoop002:2181>

2.3.7 Phoenix schema mapping and configuration notes

http://phoenix.apache.org/namspace_mapping.html

Before Phoenix 4.8.0, Phoenix schemas could not be mapped to HBase namespaces. The mapping properties below were introduced in 4.8.0.

| Property | Description | Default |
|---|---|---|
| phoenix.schema.isNamespaceMappingEnabled | If enabled, tables created with a schema are mapped to an HBase namespace. This needs to be set on both the client and the server. Once set, it should not be rolled back. Old clients will not work after this property is enabled. | false |
| phoenix.schema.mapSystemTablesToNamespace | Takes effect only when phoenix.schema.isNamespaceMappingEnabled is also set to true. If enabled, existing SYSTEM tables are automatically migrated to the SYSTEM namespace. If set to false, system tables are created in the default namespace only. This needs to be set on both the client and the server. | true |

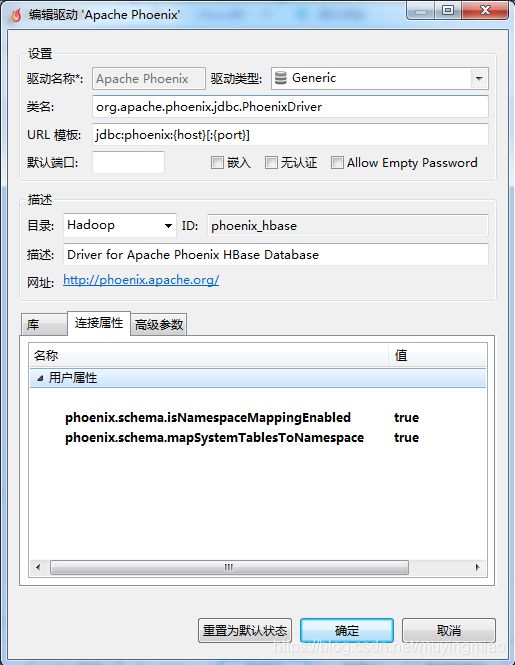

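Both properties must be set on the client and on the server (they were already added to the configuration file above). Ideally, the client uses a symbolic link to the server's configuration file.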

- phoenix.schema.isNamespaceMappingEnabled = true

- phoenix.schema.mapSystemTablesToNamespace = true
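Because these two properties must agree between client and server, a mismatch is easy to miss when the client does not use a symlink. The sketch below reads two hbase-site.xml texts with xml.etree.ElementTree and reports any mapping property whose values differ. It compares literal values only and does not fill in the defaults from the table above. The function names are hypothetical.

```python
import xml.etree.ElementTree as ET

MAPPING_PROPS = (
    "phoenix.schema.isNamespaceMappingEnabled",
    "phoenix.schema.mapSystemTablesToNamespace",
)


def read_props(xml_text):
    """Return {name: value} for every <property> in an hbase-site.xml document."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def mapping_mismatches(client_xml, server_xml):
    """List (property, client_value, server_value) for mapping properties that differ.

    A property missing on one side shows up as None for that side.
    """
    client, server = read_props(client_xml), read_props(server_xml)
    return [(p, client.get(p), server.get(p))
            for p in MAPPING_PROPS if client.get(p) != server.get(p)]
```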

Once this is configured and a schema has been created in Phoenix, `!tables` shows:

0: jdbc:phoenix:hadoop002:2181> !tables

+------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+----+

| TABLE_CAT | TABLE_SCHEM | TABLE_NAME | TABLE_TYPE | REMARKS | TYPE_NAME | SELF_REFERENCING_COL_NAME | REF_GENERATION | INDEX_STATE | IMMUTABLE_ROWS | SA |

+------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+----+

| | TEST | TEST_IDX | INDEX | | | | | ACTIVE | false | nu |

| | SYSTEM | CATALOG | SYSTEM TABLE | | | | | | false | nu |

| | SYSTEM | FUNCTION | SYSTEM TABLE | | | | | | false | nu |

| | SYSTEM | LOG | SYSTEM TABLE | | | | | | true | 32 |

| | SYSTEM | SEQUENCE | SYSTEM TABLE | | | | | | false | nu |

| | SYSTEM | STATS | SYSTEM TABLE | | | | | | false | nu |

| | TEST | TEST | TABLE | | | | | | false | nu |

+------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+----+

0: jdbc:phoenix:hadoop002:2181>

The corresponding namespace, with its name in upper case, also appears in HBase.
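The naming rule can be sketched as follows. Phoenix upper-cases unquoted identifiers, while double-quoted identifiers keep their case. With phoenix.schema.isNamespaceMappingEnabled=true, the schema becomes an HBase namespace, which is why the query plans in section 5 refer to TEST:TEST_IDX. Without mapping, the table lives in the default namespace under the name SCHEMA.TABLE. This is a simplified model, and the function names are hypothetical.

```python
def normalize_identifier(ident):
    """Unquoted identifiers are upper-cased; double-quoted ones keep their case."""
    if len(ident) >= 2 and ident.startswith('"') and ident.endswith('"'):
        return ident[1:-1]
    return ident.upper()


def hbase_table_name(schema, table, namespace_mapping=True):
    """HBase table name for the Phoenix table schema.table.

    namespace_mapping=True  -> "SCHEMA:TABLE" (schema is an HBase namespace)
    namespace_mapping=False -> "SCHEMA.TABLE" in the default namespace
    """
    s, t = normalize_identifier(schema), normalize_identifier(table)
    return f"{s}:{t}" if namespace_mapping else f"{s}.{t}"
```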

Create a schema in Phoenix:

0: jdbc:phoenix:hadoop002:2181> create schema muyingmiao;

Check it in the HBase shell:

hbase(main):002:0> list_namespace

NAMESPACE

MUYINGMIAO

SYSTEM

TEST

default

hbase

ruozedata

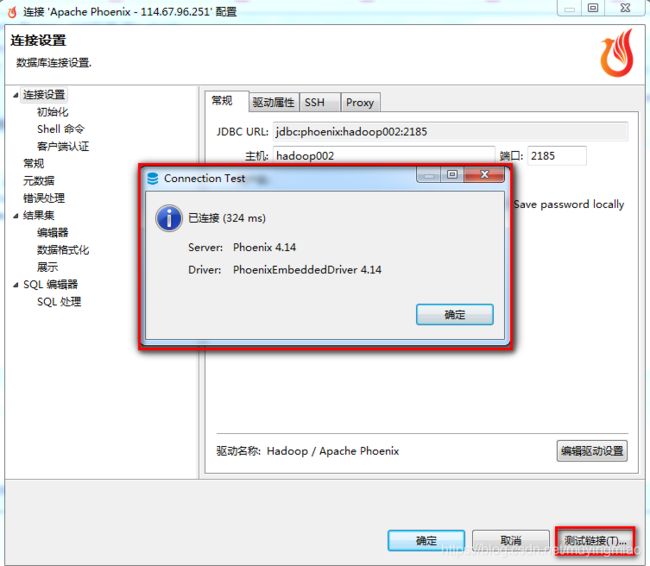

6 row(s) in 0.0140 seconds

3. Connecting to Phoenix with DBeaver

3.1 Download and install DBeaver

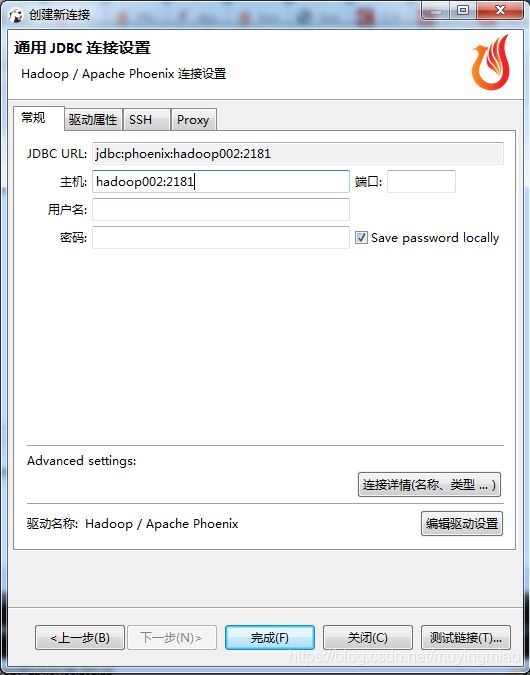

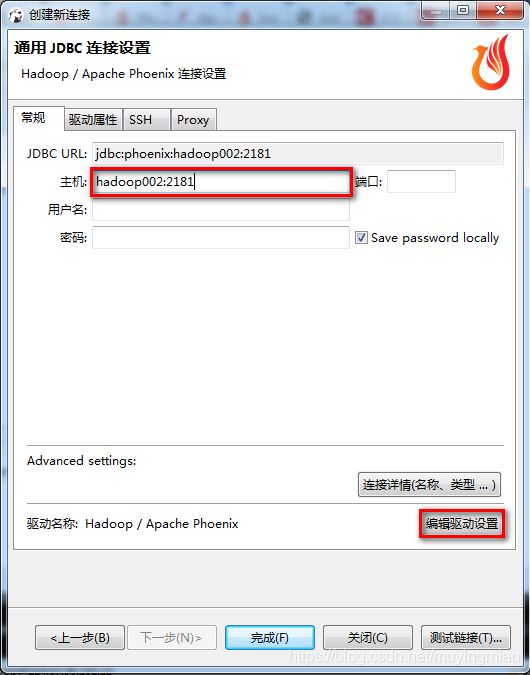

3.2 Configure the JDBC connection in DBeaver

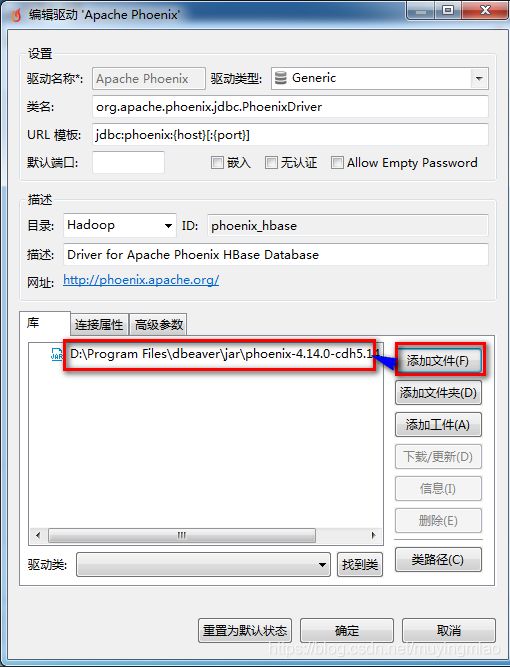

3.3 Download phoenix-4.14.0-cdh5.14.2-client.jar to your local machine and configure the driver settings in DBeaver
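Two values go into DBeaver's driver settings. The driver class is org.apache.phoenix.jdbc.PhoenixDriver, the same class sqlline uses in the log above. The connection URL has the form jdbc:phoenix:<ZooKeeper quorum>:<port>, optionally followed by the HBase root znode. The sketch below builds that URL. The helper name is hypothetical, and the znode argument is only needed if your cluster does not use the default znode.

```python
PHOENIX_DRIVER_CLASS = "org.apache.phoenix.jdbc.PhoenixDriver"  # as in the sqlline log


def phoenix_jdbc_url(zk_hosts, port=2181, znode=None):
    """Build jdbc:phoenix:<host1,host2,...>:<port>[:<znode>]."""
    if isinstance(zk_hosts, str):
        zk_hosts = [zk_hosts]
    url = f"jdbc:phoenix:{','.join(zk_hosts)}:{port}"
    if znode:
        url += f":{znode}"
    return url
```

For the single-node setup in this article, `phoenix_jdbc_url("hadoop002")` gives the same URL that sqlline connected to.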

3.5 Add the following entry to C:\Windows\System32\drivers\etc\hosts:

ip hostname
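This entry is needed because the client learns the HBase servers' hostnames (here hadoop002) from ZooKeeper, not their IP addresses. The Windows machine running DBeaver must therefore be able to resolve those names. The sketch below formats a hosts line and checks whether a hosts file already maps a given hostname. The IP 192.0.2.10 in the test is a documentation placeholder, and the function names are hypothetical.

```python
def hosts_entry(ip, hostname):
    """One hosts-file line: "<ip> <hostname>"."""
    return f"{ip} {hostname}"


def has_host(hosts_text, hostname):
    """True if an uncommented line in hosts_text maps some IP to hostname."""
    for line in hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        fields = line.split()
        if len(fields) >= 2 and hostname in fields[1:]:
            return True
    return False
```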

3.6 Test the connection

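3.7 If the server is a cloud host, open ports 60000, 60010, 2181 and 60020 (HBase Master RPC, HBase Master web UI, ZooKeeper client port and RegionServer RPC)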
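To check from the client machine whether these ports are reachable (for example, after changing a cloud security group), here is a minimal sketch using Python's socket module. A port reported as closed may be blocked by a firewall, or the service behind it may simply not be running. The function name is hypothetical.

```python
import socket

# Ports from step 3.7: Master RPC, Master web UI, ZooKeeper, RegionServer RPC.
HBASE_PORTS = (60000, 60010, 2181, 60020)


def closed_ports(host, ports=HBASE_PORTS, timeout=2.0):
    """Return the ports on host that do not accept a TCP connection."""
    closed = []
    for port in ports:
        try:
            with socket.create_connection((host, port), timeout=timeout):
                pass  # connection succeeded; close it right away
        except OSError:  # refused, timed out, or unreachable
            closed.append(port)
    return closed
```

For example, `closed_ports("hadoop002")` run on the DBeaver machine should return an empty list once the ports are open.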

If you get the error

Unexpected version format: 11.0.5

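you need to point DBeaver at a local JDK in dbeaver.ini. The error shows DBeaver running on Java 11.0.5, and the settings below switch it to a local JDK 8. For example, mine are: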

-vm

C:/app/Java/jdk1.8.0_221/bin

-startup

plugins/org.eclipse.equinox.launcher_1.5.600.v20191014-2022.jar

--launcher.library

plugins/org.eclipse.equinox.launcher.win32.win32.x86_64_1.1.1100.v20190907-0426

-vmargs

-XX:+IgnoreUnrecognizedVMOptions

--add-modules=ALL-SYSTEM

-Xms64m

-Xmx1024m

3.8 Some Phoenix shell commands

!tables: list all system tables and user tables

4 Phoenix data types

http://phoenix.apache.org/language/datatypes.html

MySQL's INT corresponds to Phoenix's INTEGER.

Double the length of MySQL CHAR/VARCHAR columns in Phoenix, so that values synced from MySQL into Phoenix do not exceed the column length.
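The two rules above can be applied mechanically when translating MySQL column definitions. The sketch below covers only these two rules. It also accepts INT with a display width such as INT(11). Every other type is passed through unchanged, which is an assumption: check those types against the Phoenix data type page linked above. The function name is hypothetical.

```python
import re

_INT_TYPE = re.compile(r"^INT(\(\d+\))?$")              # INT or INT(11)
_CHAR_TYPE = re.compile(r"^(CHAR|VARCHAR)\((\d+)\)$")   # CHAR(n) / VARCHAR(n)


def mysql_to_phoenix_type(mysql_type):
    """Translate one MySQL column type using the two rules in section 4."""
    t = mysql_type.strip().upper()
    if _INT_TYPE.match(t):
        return "INTEGER"
    m = _CHAR_TYPE.match(t)
    if m:
        # Double the length so synced values do not exceed the column size.
        return f"{m.group(1)}({int(m.group(2)) * 2})"
    return t  # not covered by these rules: passed through unchanged
```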

5. Creating a schema, table and index in Phoenix

5.1 Create the schema, table and index

0: jdbc:phoenix:hadoop002:2181> create schema test;

No rows affected (0.056 seconds)

0: jdbc:phoenix:hadoop002:2181> CREATE TABLE test.test ( id BIGINT not null primary key, name varchar(200), age integer);

No rows affected (2.341 seconds)

0: jdbc:phoenix:hadoop002:2181> upsert into test.test values(1,'user01',18);

1 row affected (0.04 seconds)

0: jdbc:phoenix:hadoop002:2181> select * from test.test;

+-----+---------+------+

| ID | NAME | AGE |

+-----+---------+------+

| 1 | user01 | 18 |

+-----+---------+------+

1 row selected (0.033 seconds)

0: jdbc:phoenix:hadoop002:2181> CREATE INDEX test_idx ON test.test(name, age);

2 rows affected (6.315 seconds)

0: jdbc:phoenix:hadoop002:2181> !tables

+------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+----+

| TABLE_CAT | TABLE_SCHEM | TABLE_NAME | TABLE_TYPE | REMARKS | TYPE_NAME | SELF_REFERENCING_COL_NAME | REF_GENERATION | INDEX_STATE | IMMUTABLE_ROWS | SA |

+------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+----+

| | TEST | TEST_IDX | INDEX | | | | | ACTIVE | false | nu |

| | SYSTEM | CATALOG | SYSTEM TABLE | | | | | | false | nu |

| | SYSTEM | FUNCTION | SYSTEM TABLE | | | | | | false | nu |

| | SYSTEM | LOG | SYSTEM TABLE | | | | | | true | 32 |

| | SYSTEM | SEQUENCE | SYSTEM TABLE | | | | | | false | nu |

| | SYSTEM | STATS | SYSTEM TABLE | | | | | | false | nu |

| | TEST | TEST | TABLE | | | | | | false | nu |

+------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+----+

0: jdbc:phoenix:hadoop002:2181>

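5.2 HBase lookups match row keys lexicographically from left to right

The index TEST_IDX stores its rows under the key (NAME, AGE) followed by the data table's primary key ID, sorted lexicographically. A filter on the leading column NAME can jump straight to a key range (RANGE SCAN). A filter on AGE alone cannot use that ordering, so it has to read the whole index (FULL SCAN):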

0: jdbc:phoenix:hadoop002:2181> explain select age from test.test where name = 'user01';

+-------------------------------------------------------------------------------------+-----------------+----------------+--------------+

| PLAN | EST_BYTES_READ | EST_ROWS_READ | EST_INFO_TS |

+-------------------------------------------------------------------------------------+-----------------+----------------+--------------+

| CLIENT 1-CHUNK PARALLEL 1-WAY ROUND ROBIN RANGE SCAN OVER TEST:TEST_IDX ['user01'] | null | null | null |

| SERVER FILTER BY FIRST KEY ONLY | null | null | null |

+-------------------------------------------------------------------------------------+-----------------+----------------+--------------+

2 rows selected (0.045 seconds)

0: jdbc:phoenix:hadoop002:2181> explain select name from test.test where age = 18;

+-------------------------------------------------------------------------+-----------------+----------------+--------------+

| PLAN | EST_BYTES_READ | EST_ROWS_READ | EST_INFO_TS |

+-------------------------------------------------------------------------+-----------------+----------------+--------------+

| CLIENT 1-CHUNK PARALLEL 1-WAY ROUND ROBIN FULL SCAN OVER TEST:TEST_IDX | null | null | null |

| SERVER FILTER BY FIRST KEY ONLY AND TO_INTEGER("AGE") = 18 | null | null | null |

+-------------------------------------------------------------------------+-----------------+----------------+--------------+

2 rows selected (0.03 seconds)

0: jdbc:phoenix:hadoop002:2181>
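The difference between the two plans can be modeled with sorted tuples standing in for the index row keys (NAME, AGE, ID). This is a simplified sketch: real HBase keys are byte arrays encoded by Phoenix, but the leftmost-prefix principle is the same. A lookup on the leading column can binary-search to its first key and stop once it passes the last one. A lookup on the second column has to check every row. Apart from user01, the sample rows are hypothetical.

```python
from bisect import bisect_left

# Index rows sorted the way the index stores them: by (NAME, AGE, ID).
INDEX_ROWS = sorted([
    ("user01", 18, 1),
    ("user02", 20, 2),
    ("user03", 18, 3),
])


def range_scan_by_name(rows, name):
    """Leading column: seek to the first key with this NAME, stop after the last one."""
    start = bisect_left(rows, (name,))  # (name,) sorts before (name, age, id)
    hits = []
    for row in rows[start:]:
        if row[0] != name:
            break
        hits.append(row)
    return hits


def full_scan_by_age(rows, age):
    """Non-leading column: the sort order does not help, so every row is checked."""
    return [row for row in rows if row[1] == age]
```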

Check in HBase:

hbase(main):004:0> list_namespace

NAMESPACE

SYSTEM

TEST

default

hbase

ruozedata

5 row(s) in 0.0230 seconds

hbase(main):005:0> list_namespace_tables 'TEST'

TABLE

TEST

1 row(s) in 0.0280 seconds

hbase(main):006:0>

Further reading:

Apache HBase

1. https://hbase.apache.org/book.html#quickstart

2. hbase-site.xml for an HBase cluster

- hbase.rootdir = hdfs://mycluster/hbase

- hbase.cluster.distributed = true

- hbase.tmp.dir = /hadoop/hbase/tmp

- hbase.master = 60000

- hbase.zookeeper.property.dataDir = /hadoop/zookeeper/data

- hbase.zookeeper.quorum = sht-sgmhadoopdn-01,sht-sgmhadoopdn-02,sht-sgmhadoopdn-03

- hbase.zookeeper.property.clientPort = 2181

- zookeeper.session.timeout = 120000 (ZooKeeper session timeout in milliseconds. HBase passes this value to the ZooKeeper ensemble as its recommended maximum session timeout.)

- hbase.regionserver.restart.on.zk.expire = true

3. Configuration file for a single-node deployment

- hbase.rootdir = hdfs://hostname:9000/hbase

- hbase.cluster.distributed = true

- hbase.tmp.dir = /home/hadoop/tmp/hbase

- hbase.master = 60000

- hbase.zookeeper.property.dataDir = /home/hadoop/data/zookeeper

- hbase.zookeeper.quorum = hostname

- hbase.zookeeper.property.clientPort = 2181

- zookeeper.session.timeout = 120000

- hbase.regionserver.restart.on.zk.expire = true
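The cluster and single-node files share most of their settings. The sketch below compares the two property sets, with values copied from the lists above, to show which properties need per-deployment values. The `diff_props` helper is hypothetical.

```python
# Values copied from the two hbase-site.xml listings above.
CLUSTER = {
    "hbase.rootdir": "hdfs://mycluster/hbase",
    "hbase.cluster.distributed": "true",
    "hbase.tmp.dir": "/hadoop/hbase/tmp",
    "hbase.master": "60000",
    "hbase.zookeeper.property.dataDir": "/hadoop/zookeeper/data",
    "hbase.zookeeper.quorum": "sht-sgmhadoopdn-01,sht-sgmhadoopdn-02,sht-sgmhadoopdn-03",
    "hbase.zookeeper.property.clientPort": "2181",
    "zookeeper.session.timeout": "120000",
    "hbase.regionserver.restart.on.zk.expire": "true",
}
SINGLE_NODE = {
    "hbase.rootdir": "hdfs://hostname:9000/hbase",
    "hbase.cluster.distributed": "true",
    "hbase.tmp.dir": "/home/hadoop/tmp/hbase",
    "hbase.master": "60000",
    "hbase.zookeeper.property.dataDir": "/home/hadoop/data/zookeeper",
    "hbase.zookeeper.quorum": "hostname",
    "hbase.zookeeper.property.clientPort": "2181",
    "zookeeper.session.timeout": "120000",
    "hbase.regionserver.restart.on.zk.expire": "true",
}


def diff_props(a, b):
    """Return {name: (a_value, b_value)} for properties that differ or exist on one side only."""
    return {k: (a.get(k), b.get(k))
            for k in sorted(a.keys() | b.keys()) if a.get(k) != b.get(k)}
```

With these values, only hbase.rootdir, hbase.tmp.dir, hbase.zookeeper.property.dataDir and hbase.zookeeper.quorum differ between the two deployments.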