PyTorch Loss Functions and Optimizers

Contents

- Loss functions

- Optimizers

- Building a model

- Linear regression

- Summary

Loss functions

torch.nn ships with many ready-made loss functions. One example is the mean squared error (MSE) loss, available as torch.nn.MSELoss().

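import torch
import torch.nn as nn

# Initialise the dataset
X = torch.tensor([1, 2, 3, 4], dtype=torch.float32)
Y = torch.tensor([2, 4, 6, 8], dtype=torch.float32)

# The single weight to learn; requires_grad=True makes autograd track it
w = torch.tensor(0.0, dtype=torch.float32, requires_grad=True)

def forward(x):
    # Forward pass
    return w * x

# Mean squared error measures the distance between predictions and targets
loss = nn.MSELoss()

# Check the loss at the initial weight
loss(forward(X), Y)

# Output (the exact grad_fn name depends on the PyTorch version)
# tensor(30., grad_fn=<MseLossBackward0>)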
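To make the value 30 above less opaque, here is a minimal sketch that compares nn.MSELoss with a hand-written mean of squared differences on the same toy data. The variable names builtin, manual and summed are illustrative only, and reduction='sum' is shown just for contrast with the default 'mean'.

```python
import torch
import torch.nn as nn

# Same toy data as above: y = 2x, with the weight initialised to 0
X = torch.tensor([1, 2, 3, 4], dtype=torch.float32)
Y = torch.tensor([2, 4, 6, 8], dtype=torch.float32)
w = torch.tensor(0.0, dtype=torch.float32, requires_grad=True)

y_pred = w * X

# Built-in loss; the default reduction is 'mean'
builtin = nn.MSELoss()(y_pred, Y)

# Hand-written equivalent: mean of the squared differences
manual = ((y_pred - Y) ** 2).mean()

# reduction='sum' adds the squared differences instead of averaging them
summed = nn.MSELoss(reduction='sum')(y_pred, Y)

print(builtin.item(), manual.item(), summed.item())  # 30.0 30.0 120.0
```

The squared errors are 4, 16, 36 and 64, so their sum is 120 and their mean is 30.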

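Optimizers

An optimizer can be thought of as a toolkit that uses gradient descent to solve for the required parameters automatically. PyTorch provides the torch.optim package for optimizing a model. It contains several improved variants of gradient descent, such as SGD (optionally with momentum), RMSprop, and Adam.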

For example, an SGD optimizer is defined as follows:

optimizer = torch.optim.SGD([w], lr=learning_rate)

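The first argument is the collection of weights that appear in the loss function, i.e. the values we want to solve for; it is passed as an iterable of tensors. lr is the learning rate, the step size of gradient descent.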
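As a rough illustration of the optimizers named above, the sketch below builds several of them over the same weight. The momentum value 0.9 and the learning rate are arbitrary choices for the example, not values from this article.

```python
import torch

w = torch.tensor(0.0, requires_grad=True)
learning_rate = 0.01  # arbitrary value for illustration

# Each optimizer takes an iterable of tensors to update as its first argument
sgd = torch.optim.SGD([w], lr=learning_rate)
# In torch.optim, momentum is an option of SGD rather than a separate class
sgd_momentum = torch.optim.SGD([w], lr=learning_rate, momentum=0.9)
rmsprop = torch.optim.RMSprop([w], lr=learning_rate)
adam = torch.optim.Adam([w], lr=learning_rate)

for opt in (sgd, sgd_momentum, rmsprop, adam):
    # param_groups stores the hyperparameters the optimizer was built with
    print(type(opt).__name__, opt.param_groups[0]['lr'])
```

All four expose the same step() and zero_grad() interface, so swapping one for another usually only changes the constructor line.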

With an optimizer, all variables can be updated in one call. The two relevant functions are:

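- optimizer.step(): updates the parameters of the model (neural network), moving every parameter one step in the direction opposite to its gradient.

- optimizer.zero_grad(): clears the gradients stored on the parameters that the optimizer manages.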
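The following sketch shows why zero_grad() is needed and what step() does for plain SGD. It uses a made-up loss w ** 2 and a learning rate of 0.1, both chosen only to keep the arithmetic easy to follow.

```python
import torch

w = torch.tensor(1.0, requires_grad=True)
optimizer = torch.optim.SGD([w], lr=0.1)

# loss = w^2, so d(loss)/dw = 2w = 2 at w = 1
(w ** 2).backward()
first_grad = w.grad.item()         # 2.0

# Without zero_grad(), a second backward() adds to the stored gradient
(w ** 2).backward()
accumulated_grad = w.grad.item()   # 4.0

# Start again from a clean gradient
optimizer.zero_grad()
(w ** 2).backward()
optimizer.step()                   # w <- w - lr * grad = 1 - 0.1 * 2
w_after_step = w.item()

optimizer.zero_grad()              # ready for the next iteration
print(first_grad, accumulated_grad, round(w_after_step, 4))  # 2.0 4.0 0.8
```

Because backward() accumulates into .grad, forgetting zero_grad() would make every update use the sum of all previous gradients.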

Linear regression can then be solved as follows:

# Define the loss and the optimizer
learning_rate = 0.01
n_iters = 100
loss = nn.MSELoss()
optimizer = torch.optim.SGD([w], lr=learning_rate)

# Training loop
for epoch in range(n_iters):
    y_predicted = forward(X)
    # Compute the loss. nn.MSELoss documents the order (input, target);
    # the MSE value is symmetric, so the order used here gives the same number
    l = loss(Y, y_predicted)
    # Compute the gradients
    l.backward()
    # Update the weight: one step in the direction opposite to the gradient
    optimizer.step()
    # Clear the gradients
    optimizer.zero_grad()
    if epoch % 10 == 0:
        print('epoch ', epoch+1, ': w = ', w, ' loss = ', l)

print(f'Prediction from the trained model: for x = 5, y = {forward(5):.3f}')

Output:

epoch 1 : w = tensor(0.3000, requires_grad=True) loss = tensor(30., grad_fn=<MeanBackward0>)
epoch 11 : w = tensor(1.6653, requires_grad=True) loss = tensor(1.1628, grad_fn=<MeanBackward0>)
epoch 21 : w = tensor(1.9341, requires_grad=True) loss = tensor(0.0451, grad_fn=<MeanBackward0>)
epoch 31 : w = tensor(1.9870, requires_grad=True) loss = tensor(0.0017, grad_fn=<MeanBackward0>)
epoch 41 : w = tensor(1.9974, requires_grad=True) loss = tensor(6.7705e-05, grad_fn=<MeanBackward0>)
epoch 51 : w = tensor(1.9995, requires_grad=True) loss = tensor(2.6244e-06, grad_fn=<MeanBackward0>)
epoch 61 : w = tensor(1.9999, requires_grad=True) loss = tensor(1.0176e-07, grad_fn=<MeanBackward0>)
epoch 71 : w = tensor(2.0000, requires_grad=True) loss = tensor(3.9742e-09, grad_fn=<MeanBackward0>)
epoch 81 : w = tensor(2.0000, requires_grad=True) loss = tensor(1.4670e-10, grad_fn=<MeanBackward0>)
epoch 91 : w = tensor(2.0000, requires_grad=True) loss = tensor(5.0768e-12, grad_fn=<MeanBackward0>)
Prediction from the trained model: for x = 5, y = 10.000
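The first update in the log can be checked by hand. The derivation below is a sketch that assumes plain SGD on the MSE loss with the data X = (1, 2, 3, 4), Y = (2, 4, 6, 8), w = 0 and lr = 0.01 used above; N denotes the number of samples.

```latex
\begin{aligned}
L(w) &= \frac{1}{N}\sum_{i=1}^{N}\left(w x_i - y_i\right)^2, \qquad
\frac{\partial L}{\partial w} = \frac{2}{N}\sum_{i=1}^{N} x_i\left(w x_i - y_i\right) \\
\left.\frac{\partial L}{\partial w}\right|_{w=0}
  &= \frac{2}{4}\bigl(1\cdot(-2) + 2\cdot(-4) + 3\cdot(-6) + 4\cdot(-8)\bigr) = -30 \\
w &\leftarrow 0 - 0.01\cdot(-30) = 0.3
\end{aligned}
```

This matches w = 0.3000 at epoch 1. Since y_i = 2 x_i here, the gradient simplifies to 15(w - 2), so every step multiplies the distance to 2 by 1 - 0.01 * 15 = 0.85. After 11 steps this gives 2 - 2 * 0.85^11 ≈ 1.6653, which agrees with the value printed at epoch 11.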

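Building a model

PyTorch provides predefined models, so the forward function does not have to be written by hand. For example, torch.nn.Linear(input_size, output_size) is a linear model that computes y = xW^T + b.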

- input_size: the dimension (number of features) of each input sample

- output_size: the dimension of each output sample
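To see what the two sizes control, here is a small sketch with hypothetical sizes (3 input features, 2 outputs, a batch of 4 samples). These numbers are made up for illustration and are not the ones used later in this article.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # arbitrary seed, only to make the run repeatable

# Hypothetical sizes for illustration: 3 input features, 2 outputs
input_size, output_size = 3, 2
model = nn.Linear(input_size, output_size)

print(model.weight.shape)  # torch.Size([2, 3])
print(model.bias.shape)    # torch.Size([2])

# A batch of 4 samples with 3 features each
x = torch.randn(4, input_size)
y = model(x)
print(y.shape)             # torch.Size([4, 2])

# The same computation written out by hand: y = x @ W^T + b
manual = x @ model.weight.T + model.bias
print(torch.allclose(y, manual, atol=1e-6))
```

The weight is stored with shape (output_size, input_size), which is why the hand-written version transposes it.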

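Solving a linear problem takes three steps:

- Define the model (i.e. the forward function).

- Define the loss and the optimizer.

- Train the model (forward pass, loss computation, backward pass, weight update, gradient reset, repeated in a loop).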

# With PyTorch, every variable is a tensor
X = torch.tensor([[1], [2], [3], [4]], dtype=torch.float32)
Y = torch.tensor([[2], [4], [6], [8]], dtype=torch.float32)
X_test = torch.tensor([5], dtype=torch.float32)

# 1. Define the model
n_samples, n_features = X.shape
# Input and output both have dimension 1 here
model = nn.Linear(n_features, n_features)

# 2. Define the loss function and the optimizer
learning_rate = 0.1
n_iters = 100
loss = nn.MSELoss()
# model.parameters() hands every trainable weight of the model to the optimizer
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

# 3. Train the model. The steps are always the same:
#    forward pass, loss, backward pass, weight update, gradient reset
for epoch in range(n_iters):
    # Forward pass
    y_predicted = model(X)
    # Loss (nn.MSELoss documents the order (input, target); the value is the same either way)
    l = loss(Y, y_predicted)
    # Backward pass
    l.backward()
    # Update the weights
    optimizer.step()
    # Clear the gradients
    optimizer.zero_grad()
    if epoch % 10 == 0:
        [w, b] = model.parameters()  # unpack parameters
        print('epoch ', epoch+1, ': w = ', w[0][0].item(), ' loss = ', l)

# forward() is the function defined earlier (w * x). After the unpacking above, w is the
# model's weight, so this prediction leaves out the bias b; model(X_test) would include it.
print('Prediction from the trained model: for x = 5, y =', forward(X_test))

Output:

epoch 1 : w = 3.220730781555176 loss = tensor(53.6913, grad_fn=<MeanBackward0>)
epoch 11 : w = 1.7456011772155762 loss = tensor(0.1413, grad_fn=<MeanBackward0>)
epoch 21 : w = 1.7912062406539917 loss = tensor(0.0672, grad_fn=<MeanBackward0>)
epoch 31 : w = 1.8455491065979004 loss = tensor(0.0366, grad_fn=<MeanBackward0>)
epoch 41 : w = 1.8860255479812622 loss = tensor(0.0199, grad_fn=<MeanBackward0>)
epoch 51 : w = 1.9158995151519775 loss = tensor(0.0109, grad_fn=<MeanBackward0>)
epoch 61 : w = 1.9379432201385498 loss = tensor(0.0059, grad_fn=<MeanBackward0>)
epoch 71 : w = 1.9542090892791748 loss = tensor(0.0032, grad_fn=<MeanBackward0>)
epoch 81 : w = 1.9662114381790161 loss = tensor(0.0018, grad_fn=<MeanBackward0>)
epoch 91 : w = 1.9750678539276123 loss = tensor(0.0010, grad_fn=<MeanBackward0>)
Prediction from the trained model: for x = 5, y = tensor([[9.9052]], grad_fn=<MulBackward0>)
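The final prediction above is computed with forward(X_test), that is w * x, so the learned bias is not part of it (the grad_fn in the output is a multiplication). A minimal sketch of predicting through the model itself could look like the following. The seed, the 1000 iterations and the (1, 1) shape of X_test are assumptions made for this example, and the variable names with_bias and weight_only are illustrative.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # arbitrary seed, only to make the run repeatable

X = torch.tensor([[1], [2], [3], [4]], dtype=torch.float32)
Y = torch.tensor([[2], [4], [6], [8]], dtype=torch.float32)
# Shape (1, 1): one sample with one feature, matching the layout of X
X_test = torch.tensor([[5]], dtype=torch.float32)

model = nn.Linear(1, 1)
loss = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

# More iterations than in the text, so that both w and b settle
for _ in range(1000):
    l = loss(model(X), Y)
    l.backward()
    optimizer.step()
    optimizer.zero_grad()

w, b = model.parameters()

# no_grad() skips building the autograd graph, which inference does not need
with torch.no_grad():
    with_bias = model(X_test).item()     # w * x + b
    weight_only = (w * X_test).item()    # w * x, bias left out

print(f'w = {w.item():.4f}, b = {b.item():.4f}')
print(f'model(X_test) = {with_bias:.4f}, w * X_test = {weight_only:.4f}')
```

The two predictions differ by exactly b, which is small once training has converged but not negligible after only 100 iterations.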

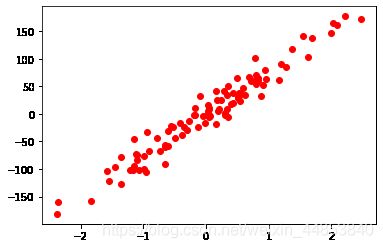

Linear regression

Set up the dataset:

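import numpy as np
from sklearn import datasets
import matplotlib.pyplot as plt
# Jupyter magic; omit this line when running as a plain script
%matplotlib inline

# 100 noisy samples with a single feature
X_numpy, y_numpy = datasets.make_regression(
    n_samples=100, n_features=1, noise=20, random_state=4)
plt.plot(X_numpy, y_numpy, 'ro')

import torch
import torch.nn as nn

# Convert the NumPy arrays to float32 tensors
X = torch.from_numpy(X_numpy.astype(np.float32))
y = torch.from_numpy(y_numpy.astype(np.float32))
# Reshape y from (100,) to (100, 1) so that it matches the model output
y = y.view(y.shape[0], 1)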
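The y.view(y.shape[0], 1) call matters because of broadcasting. The sketch below uses random stand-in data with the same shapes as the dataset above (so it does not need scikit-learn) to show what happens to the difference between predictions and targets when y is left one-dimensional. The names y_flat and y_col are illustrative.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # arbitrary seed, only to make the run repeatable

# Stand-in data with the same shapes as above: 100 samples, 1 feature
X = torch.randn(100, 1)
y_flat = torch.randn(100)                  # shape (100,), like y before view()
y_col = y_flat.view(y_flat.shape[0], 1)    # shape (100, 1)

model = nn.Linear(1, 1)
y_predicted = model(X)                     # shape (100, 1)

# (100, 1) minus (100,) broadcasts to (100, 100):
# every prediction gets compared with every target
print((y_predicted - y_flat).shape)        # torch.Size([100, 100])
print((y_predicted - y_col).shape)         # torch.Size([100, 1])

# With matching shapes the loss compares each prediction with its own target
criterion = nn.MSELoss()
loss = criterion(y_predicted, y_col)
print(loss.item())
```

With mismatched shapes the loss would still be a single number, just not the one intended, which is why the reshape is done up front.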

Next, initialise the linear model with PyTorch:

n_samples, n_features = X.shape

input_size = n_features

output_size = 1

model = nn.Linear(input_size, output_size)

Then define the learning rate, the loss function, and the optimizer:

learning_rate = 0.01

criterion = nn.MSELoss()

optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

Feed the data into the model and iterate with gradient descent to find the best-fitting model:

num_epochs = 100
for epoch in range(num_epochs):
    # Forward pass and loss
    y_predicted = model(X)
    loss = criterion(y_predicted, y)

    # Backward pass and update
    loss.backward()
    optimizer.step()

    # Zero the gradients before the next step
    optimizer.zero_grad()

    if (epoch+1) % 10 == 0:
        print(f'epoch: {epoch+1}, loss = {loss.item():.4f}')

# Test: plot the model's predictions on top of the data
# Predict, then convert the result to a NumPy array
predicted = model(X).detach().numpy()
plt.plot(X_numpy, y_numpy, 'ro')
plt.plot(X_numpy, predicted, 'b')
plt.show()

epoch: 10, loss = 3968.5198

epoch: 20, loss = 2797.9084

epoch: 30, loss = 2000.1969

epoch: 40, loss = 1456.4781

epoch: 50, loss = 1085.7996

epoch: 60, loss = 833.0376

epoch: 70, loss = 660.6458

epoch: 80, loss = 543.0453

epoch: 90, loss = 462.8058

epoch: 100, loss = 408.0471

Summary

Steps to define a model:

- Define the model with nn.Linear.

- Define the loss with nn.MSELoss.

- Define the optimizer with torch.optim.

- Train the model with gradient descent.

Steps to train a model:

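- Run the forward pass with model(X).

- Compute the loss with loss(Y, y_predicted).

- Compute the gradients with loss.backward().

- Update the weights with optimizer.step().

- Clear the gradients with optimizer.zero_grad().

- Repeat the five steps above.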
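The steps above can be collected into one small function. This is only a sketch: train is a hypothetical helper, not a PyTorch API, and the seed, learning rate and iteration count are arbitrary. It passes the arguments to the loss in the documented (input, target) order, which gives the same MSE value.

```python
import torch
import torch.nn as nn

def train(model, X, Y, lr=0.01, n_iters=100):
    """Hypothetical helper: run the five training steps n_iters times."""
    loss_fn = nn.MSELoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=lr)
    history = []
    for _ in range(n_iters):
        y_predicted = model(X)           # 1. forward pass
        l = loss_fn(y_predicted, Y)      # 2. loss
        l.backward()                     # 3. gradients
        optimizer.step()                 # 4. weight update
        optimizer.zero_grad()            # 5. clear gradients
        history.append(l.item())
    return history

torch.manual_seed(0)  # arbitrary seed, only to make the run repeatable
X = torch.tensor([[1], [2], [3], [4]], dtype=torch.float32)
Y = torch.tensor([[2], [4], [6], [8]], dtype=torch.float32)
model = nn.Linear(1, 1)

history = train(model, X, Y, lr=0.05, n_iters=200)
print(f'first loss = {history[0]:.4f}, last loss = {history[-1]:.6f}')
```

Returning the loss history keeps the loop free of print statements and makes it easy to plot or inspect convergence afterwards.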