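ShaderLab: Screen Post-Processing

Notes based on the book Unity Shader入门精要.

1. Adjusting Screen Brightness, Saturation, and Contrast

Screen post-processing in Unity works by treating the rendered scene as a texture on a screen-sized quad that is drawn over the screen. You supply a custom shader for that quad; the shader is attached to the quad's Material, and that Material produces the post-processing effect.

The method that creates this Material: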

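// Returns a material that uses the given shader, reusing `material` when possible.
protected Material CreateMaterial(Shader shader, Material material)
{
    if (shader == null)
        return null;

    // Reuse the existing material if it already wraps this shader
    if (shader.isSupported && material && material.shader == shader)
        return material;

    // Do not build a material for a shader the current platform cannot run
    if (!shader.isSupported)
        return null;

    material = new Material(shader);
    material.hideFlags = HideFlags.DontSave;
    return material;
}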

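Because this Material is created in code, a script has to drive it. The same script also hooks into the part of Unity's render flow that handles post-processing.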

public class BrightnessSaturationAndContrast : PostEffectBase

{

[Range(0.0f, 3.0f)]

public float brightness = 1.0f;

[Range(0.0f, 3.0f)]

public float saturation = 1.0f;

[Range(0.0f, 3.0f)]

public float contrast = 1.0f;

public Shader briSatConShader;

private Material briSatConMaterial;

public Material material

{

get

{

briSatConMaterial = CreateMaterial(briSatConShader, briSatConMaterial);

return briSatConMaterial;

}

}

void OnRenderImage(RenderTexture src, RenderTexture dest) // callback invoked once the scene has been rendered
{
    if (material != null) // the material created above
    {
        // Set the shader variables by name
        material.SetFloat("_Brightness", brightness); // brightness
        material.SetFloat("_Saturation", saturation); // saturation
        material.SetFloat("_Contrast", contrast); // contrast
        // src is the rendered scene and dest is what ends up on screen:
        // draw src through the material and write the result to dest
        Graphics.Blit(src, dest, material);
    }
    else
        Graphics.Blit(src, dest); // the material could not be created, so copy the scene straight to the screen
}

}

Next comes the shader:

// Upgrade NOTE: replaced 'mul(UNITY_MATRIX_MVP,*)' with 'UnityObjectToClipPos(*)'

Shader "Custom/BriSatConShader"

{

Properties

{

_MainTex ("Albedo (RGB)", 2D) = "white" {}

_Brightness ("Brightness", Float) = 1

_Saturation ("Saturation", Float) = 1

_Contrast ("Contrast", Float) = 1

}

SubShader

{

Tags { "RenderType"="Transparent" }

Pass

{

Blend SrcAlpha OneMinusSrcAlpha

ZTest Always

Cull Off

ZWrite Off

CGPROGRAM

#pragma vertex VS

#pragma fragment PS

#include "UnityCG.cginc"

sampler2D _MainTex;

half _Brightness;

half _Saturation;

half _Contrast;

struct Input

{

float4 vertex : POSITION;

float2 texcoord : TEXCOORD0;

};

struct Output

{

float4 posH : SV_POSITION;

float2 uv : TEXCOORD0;

};

Output VS(Input v)

{

Output o;

o.posH = UnityObjectToClipPos(v.vertex);

o.uv = v.texcoord;

return o;

}

fixed4 PS(Output o) : SV_Target

{

fixed4 renderTex = tex2D(_MainTex, o.uv);

// Apply brightness: scale the colour
fixed3 finalColor = renderTex.rgb * _Brightness;
// Apply saturation: blend between the grey of equal luminance and the colour
fixed luminance = 0.2125 * renderTex.r + 0.7154 * renderTex.g + 0.0721 * renderTex.b;
fixed3 luminanceColor = fixed3(luminance, luminance, luminance);
finalColor = lerp(luminanceColor, finalColor, _Saturation);
// Apply contrast: blend between mid-grey and the colour
fixed3 avgColor = fixed3(0.5, 0.5, 0.5);
finalColor = lerp(avgColor, finalColor, _Contrast);

return fixed4(finalColor, renderTex.a);

}

ENDCG

}

}

FallBack Off

}

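The vertex shader needs no extra work, since it only processes the quad's four vertices; everything interesting happens in the fragment shader.

The book gives the saturation and contrast formulas without a derivation, but both are plain linear interpolations. Saturation blends between a grey of the same luminance and the color; contrast blends between mid-grey (0.5) and the color. A factor of 0 collapses to the grey, 1 leaves the color unchanged, and a factor above 1 extrapolates past the original color.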
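To see what the three steps do to a single pixel, here is a minimal CPU-side sketch in Python that mirrors the fragment shader's arithmetic. The function names `lerp` and `adjust` are made up for this illustration, and the sketch does not clamp the result the way writing to a render target would.

```python
def lerp(a, b, t):
    """Linear interpolation like HLSL lerp: a + (b - a) * t (t may exceed 1)."""
    return a + (b - a) * t

def adjust(rgb, brightness=1.0, saturation=1.0, contrast=1.0):
    """Apply the shader's three steps to one RGB pixel (floats in [0, 1])."""
    r, g, b = rgb
    # Brightness: plain scaling of the colour.
    color = [r * brightness, g * brightness, b * brightness]
    # Saturation: blend between the grey of equal luminance and the colour.
    # As in the shader, luminance is taken from the unscaled input colour.
    lum = 0.2125 * r + 0.7154 * g + 0.0721 * b
    color = [lerp(lum, c, saturation) for c in color]
    # Contrast: blend between mid-grey (0.5) and the colour.
    color = [lerp(0.5, c, contrast) for c in color]
    return color

pixel = (0.8, 0.4, 0.2)
print(adjust(pixel))                  # all factors 1: the pixel is unchanged
print(adjust(pixel, saturation=0.0))  # every channel equals the luminance
print(adjust(pixel, contrast=0.0))    # flat mid-grey
```

Setting a factor to 0 and to 1 is a quick way to check that a slider is wired to the right step.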

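2. Edge Detection

This needs convolution, the usual routine: center the kernel on the pixel, multiply each neighbor by its weight, and add up the products.

The operator used here is Sobel.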

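The convolution kernels are

$$G_x = \begin{bmatrix} +1 & 0 & -1 \\ +2 & 0 & -2 \\ +1 & 0 & -1 \end{bmatrix}, \quad G_y = \begin{bmatrix} +1 & +2 & +1 \\ 0 & 0 & 0 \\ -1 & -2 & -1 \end{bmatrix}$$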

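The book swaps Gx and Gy, but that makes no difference to the result.

The gradient magnitude is

$$G = \sqrt{G_x^2 + G_y^2}$$

To keep the computation cheap, it is approximated as

$$G \approx |G_x| + |G_y|$$

This value is the gradient at the current pixel. The gradient reflects how strongly the pixel's color differs from its surroundings: the larger it is, the more likely the pixel lies on the boundary between two colors, that is, on an edge.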
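As a worked example of the approximation, the following Python sketch applies the same two kernels that the shader later in this section uses to a 3x3 patch of luminance values. The function name `sobel_edge` and the sample patches are invented for the illustration; like the shader, it returns 1 minus the gradient.

```python
# Kernels as written in the shader, in the order of uv[0] .. uv[8].
GX = [-1, -2, -1,
       0,  0,  0,
       1,  2,  1]
GY = [-1,  0,  1,
      -2,  0,  2,
      -1,  0,  1]

def sobel_edge(patch):
    """patch: 9 luminance values of a 3x3 neighbourhood, ordered like uv[0..8].
    Returns 1 - (|Gx| + |Gy|), so a smaller value means a stronger edge."""
    edge_x = sum(l * k for l, k in zip(patch, GX))
    edge_y = sum(l * k for l, k in zip(patch, GY))
    return 1 - abs(edge_x) - abs(edge_y)

# uv[0..2] is the row below the pixel, uv[6..8] the row above it.
flat = [0.5] * 9
soft_step = [0.4, 0.4, 0.4,
             0.5, 0.5, 0.5,
             0.6, 0.6, 0.6]
hard_step = [0.0, 0.0, 0.0,
             0.0, 0.0, 0.0,
             1.0, 1.0, 1.0]

print(sobel_edge(flat))       # no gradient, so the result is 1
print(sobel_edge(soft_step))  # gentle vertical ramp, about 0.2
print(sobel_edge(hard_step))  # sharp step: the value drops below 0
```

The last case shows that the returned value is not confined to [0, 1], which matters when it is later used as a lerp factor.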

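The controlling script has three parameters: the edge color, the background color, and how far to blend away from the original image (edgesOnly).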

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

public class EdgeDetection : PostEffectBase

{

[Range(0.0f, 1.0f)]

public float edgesOnly = 0.0f;

public Color edgeColor = Color.black;

public Color backgroundColor = Color.white;

public Shader edShader;

private Material edMaterial = null;

public Material Mat

{

get

{

edMaterial = CreateMaterial(edShader, edMaterial);

return edMaterial;

}

}

void OnRenderImage(RenderTexture src, RenderTexture dest)

{

if (Mat != null)

{

Mat.SetFloat("_EdgeOnly", edgesOnly);

Mat.SetColor("_EdgeColor", edgeColor);

Mat.SetColor("_BackgroundColor", backgroundColor);

Graphics.Blit(src, dest, Mat);

}

else

{

Graphics.Blit(src, dest);

}

}

}

Shader code:

// Upgrade NOTE: replaced 'mul(UNITY_MATRIX_MVP,*)' with 'UnityObjectToClipPos(*)'

Shader "Custom/EdgeDetectionShader"

{

Properties

{

_MainTex ("Base (RGB)", 2D) = "white" {}

_EdgeOnly ("Edge only", Float) = 1.0

_EdgeColor ("Edge Color", Color) = (0, 0, 0, 1)

_BackgroundColor ("Background Color", Color) = (1, 1, 1, 1)

}

SubShader

{

Pass

{

ZTest Always

Cull Off

ZWrite Off

CGPROGRAM

#include "UnityCG.cginc"

#pragma vertex VS

#pragma fragment PS

sampler2D _MainTex;

half4 _MainTex_TexelSize;

fixed _EdgeOnly;

fixed4 _EdgeColor;

fixed4 _BackgroundColor;

struct Input

{

float4 vertex : POSITION;

float2 uv : TEXCOORD0;

};

struct Output

{

float4 posH : SV_POSITION;

half2 uv[9] : TEXCOORD0;

};

Output VS(Input v)

{

Output o;

o.posH = UnityObjectToClipPos(v.vertex);

o.uv[0] = v.uv + _MainTex_TexelSize.xy * half2(-1, -1);

o.uv[1] = v.uv + _MainTex_TexelSize.xy * half2(0, -1);

o.uv[2] = v.uv + _MainTex_TexelSize.xy * half2(1, -1);

o.uv[3] = v.uv + _MainTex_TexelSize.xy * half2(-1, 0);

o.uv[4] = v.uv + _MainTex_TexelSize.xy * half2(0, 0);

o.uv[5] = v.uv + _MainTex_TexelSize.xy * half2(1, 0);

o.uv[6] = v.uv + _MainTex_TexelSize.xy * half2(-1, 1);

o.uv[7] = v.uv + _MainTex_TexelSize.xy * half2(0, 1);

o.uv[8] = v.uv + _MainTex_TexelSize.xy * half2(1, 1);

return o;

}

fixed luminance(fixed4 color) // convert to grayscale

{

return 0.2125 * color.r + 0.7154 * color.g + 0.0721 * color.b;

}

half Sobel(Output o)

{

const half Gx[9] = { -1, -2, -1,

0, 0, 0,

1, 2, 1 };

const half Gy[9] = { -1, 0, 1,

-2, 0, 2,

-1, 0, 1 };

half texColor;

half edgeX = 0;

half edgeY = 0;

for (int i = 0; i < 9; i++)

{

texColor = luminance(tex2D(_MainTex, o.uv[i]));

edgeX += texColor * Gx[i];

edgeY += texColor * Gy[i];

}

half edge = 1 - abs(edgeX) - abs(edgeY);

return edge;

}

fixed4 PS(Output o) : SV_Target

{

half edge = Sobel(o);

fixed4 withEdgeColor = lerp(_EdgeColor, tex2D(_MainTex, o.uv[4]), edge);

fixed4 onlyEdgeColor = lerp(_EdgeColor, _BackgroundColor, edge);

return lerp(withEdgeColor, onlyEdgeColor, _EdgeOnly);

}

ENDCG

}

}

FallBack Off

}

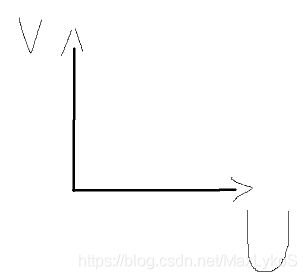

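Unity's UV coordinate system has its origin at the bottom-left corner, with u increasing to the right and v increasing upward.

So v.uv + _MainTex_TexelSize.xy * half2(-1, -1) is the texel to the lower left of the current pixel. The nine UV coordinates are computed in the vertex shader and remain valid in the fragment shader after interpolation. This saves work, because only four vertices need the computation.

Also note that the kernels in the shader are rotated 180° relative to the definition given earlier, because that is how convolution is defined; it is easy to forget.

Next, the grayscale value at each position is multiplied by the matching Sobel weight and the products are summed, which gives the gradient at that pixel. The shader actually returns edge = 1 - |Gx| - |Gy|, so a smaller value means a stronger edge: flat regions give 1, and at sharp edges the value can fall below 0.

Once the gradient is known:

fixed4 withEdgeColor = lerp(_EdgeColor, tex2D(_MainTex, o.uv[4]), edge); interpolates between the edge color and the actual pixel according to the gradient, giving edges drawn over the original image.

fixed4 onlyEdgeColor = lerp(_EdgeColor, _BackgroundColor, edge); interpolates between the edge color and the background color according to the gradient, giving edges drawn over a plain background.

lerp(withEdgeColor, onlyEdgeColor, _EdgeOnly) blends those two results according to the edge-only parameter.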

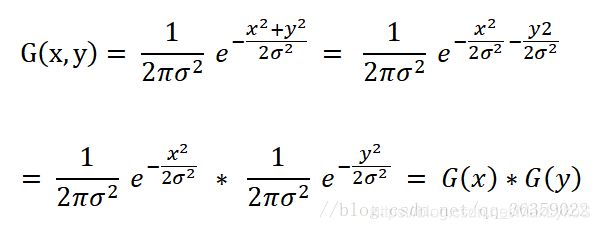

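3. Gaussian Blur

The principle is much the same as above. It is still a convolution: the center pixel's color is replaced by a weighted average of itself and its neighbors, which produces the blur. Running the blur several times and downsampling the image both make the blur stronger.

Gaussian blur kernel:

$$G(x, y) = \frac{1}{2\pi\sigma^2} e^{-\frac{x^2 + y^2}{2\sigma^2}}$$

The standard deviation σ is usually taken as 1, and the coefficient in front of e can be ignored (treated as 1) because the weights are normalized afterwards. The formula yields a 5×5 kernel, but convolving with it is expensive. The 2D kernel can instead be split into two 1D kernels, one horizontal and one vertical, which speeds up the computation.

Remember that the weights must sum to 1, so each one has to be divided by the total.

The two resulting 1D kernels, one applied vertically and one horizontally, share the same weights: (0.0545, 0.2442, 0.4026, 0.2442, 0.0545).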
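The weights hard-coded in the shader can be reproduced from the formula. The sketch below is a small Python check, assuming σ = 1 and a radius of 2 as described above; `gaussian_weights_1d` is a name chosen for this example.

```python
import math

def gaussian_weights_1d(radius=2, sigma=1.0):
    """Normalised 1D Gaussian weights for offsets -radius..radius.
    The constant factor in front of e is dropped: normalisation cancels it."""
    raw = [math.exp(-(x * x) / (2.0 * sigma * sigma))
           for x in range(-radius, radius + 1)]
    total = sum(raw)
    return [w / total for w in raw]

weights = gaussian_weights_1d()
print([round(w, 4) for w in weights])
# -> [0.0545, 0.2442, 0.4026, 0.2442, 0.0545]

# The 5x5 kernel is the outer product of the 1D kernel with itself,
# which is why one vertical and one horizontal pass are enough.
kernel_2d = [[wy * wx for wx in weights] for wy in weights]
print(round(sum(sum(row) for row in kernel_2d), 6))  # the 2D weights also sum to 1
```

A 5×5 kernel costs 25 texture samples per pixel, while the two 1D passes cost 5 + 5.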

OK, time to write the code.

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

public class GaussianBlur : PostEffectBase

{

public Shader blurShader;

private Material blurMaterial;

public Material Mat

{

get

{

blurMaterial = CreateMaterial(blurShader, blurMaterial);

return blurMaterial;

}

}

[Range(0, 4)]

public int iterations = 3;

[Range(0.2f, 3.0f)]

public float blurSpread = 0.6f;

[Range(1, 8)]

public int downSample = 2;

private void OnRenderImage(RenderTexture source, RenderTexture destination)

{

if (Mat != null)

{

int rtW = source.width / downSample; // a smaller image makes the result blurrier
int rtH = source.height / downSample;
RenderTexture buffer0 = RenderTexture.GetTemporary(rtW, rtH, 0); // allocate a temporary RenderTexture

buffer0.filterMode = FilterMode.Bilinear; // https://blog.csdn.net/qq_39300235/article/details/106095781

Graphics.Blit(source, buffer0);

for (int i = 0; i < iterations; i++)

{

Mat.SetFloat("_BlurSize", 1.0f + blurSpread);

RenderTexture buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0);

// Render the vertical pass

Graphics.Blit(buffer0, buffer1, Mat, 0);

RenderTexture.ReleaseTemporary(buffer0);

buffer0 = buffer1;

buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0);

// Render the horizontal pass

Graphics.Blit(buffer0, buffer1, Mat, 1);

RenderTexture.ReleaseTemporary(buffer0);

buffer0 = buffer1;

}

Graphics.Blit(buffer0, destination);

RenderTexture.ReleaseTemporary(buffer0);

}

else

{

Graphics.Blit(source, destination);

}

}

}

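Two RenderTextures serve as ping-pong buffers so that the blur can be applied repeatedly for a stronger effect. So why not drop the pass index and let Blit render both passes in one call? Because then only the second pass's result survives: both passes sample the same source texture and write to the same destination, so the later pass overwrites the earlier one. This differs from the ForwardRendering path used for lighting earlier, where every pass renders into one frame buffer and the passes are blended together. Post-processing does not go through that path, so the intermediate buffer has to be managed by hand.

Shader code: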

Shader "Custom/GaussianBlur"

{

Properties

{

_MainTex ("Texture", 2D) = "white" {}

}

SubShader

{

Pass

{

ZTest Always

Cull Off

ZWrite Off

CGPROGRAM

#include "UnityCG.cginc"

#pragma vertex VS

#pragma fragment PS

sampler2D _MainTex;

half4 _MainTex_TexelSize;

float _BlurSize;

struct Input

{

float4 vertex : POSITION;

float2 uv : TEXCOORD0;

};

struct Output

{

float4 posH : SV_POSITION;

float2 uv[5] : TEXCOORD0;

};

Output VS (Input v)

{

Output o;

o.posH = UnityObjectToClipPos(v.vertex);

o.uv[0] = v.uv;

o.uv[1] = v.uv + float2(0.0, _MainTex_TexelSize.y * 1.0) * _BlurSize;

o.uv[2] = v.uv - float2(0.0, _MainTex_TexelSize.y * 1.0) * _BlurSize;

o.uv[3] = v.uv + float2(0.0, _MainTex_TexelSize.y * 2.0) * _BlurSize;

o.uv[4] = v.uv - float2(0.0, _MainTex_TexelSize.y * 2.0) * _BlurSize;

return o;

}

fixed4 PS (Output o) : SV_Target

{

const fixed weight[3] = { 0.4026, 0.2442, 0.0545 };

fixed3 sum = tex2D(_MainTex, o.uv[0]) * weight[0];

sum += tex2D(_MainTex, o.uv[1]) * weight[1];

sum += tex2D(_MainTex, o.uv[2]) * weight[1];

sum += tex2D(_MainTex, o.uv[3]) * weight[2];

sum += tex2D(_MainTex, o.uv[4]) * weight[2];

return fixed4(sum, 1);

}

ENDCG

}

Pass

{

ZTest Always

Cull Off

ZWrite Off

CGPROGRAM

#include "UnityCG.cginc"

#pragma vertex VS

#pragma fragment PS

sampler2D _MainTex;

half4 _MainTex_TexelSize;

float _BlurSize;

struct Input

{

float4 vertex : POSITION;

float2 uv : TEXCOORD0;

};

struct Output

{

float4 vertex : SV_POSITION;

float2 uv[5] : TEXCOORD0;

};

Output VS(Input v)

{

Output o;

o.vertex = UnityObjectToClipPos(v.vertex);

o.uv[0] = v.uv;

o.uv[1] = v.uv + float2(_MainTex_TexelSize.x * 1.0, 0.0) * _BlurSize;

o.uv[2] = v.uv - float2(_MainTex_TexelSize.x * 1.0, 0.0) * _BlurSize;

o.uv[3] = v.uv + float2(_MainTex_TexelSize.x * 2.0, 0.0) * _BlurSize;

o.uv[4] = v.uv - float2(_MainTex_TexelSize.x * 2.0, 0.0) * _BlurSize;

return o;

}

fixed4 PS(Output o) : SV_Target

{

const fixed weight[3] = { 0.4026, 0.2442, 0.0545 };

fixed3 sum = tex2D(_MainTex, o.uv[0]) * weight[0];

sum += tex2D(_MainTex, o.uv[1]) * weight[1];

sum += tex2D(_MainTex, o.uv[2]) * weight[1];

sum += tex2D(_MainTex, o.uv[3]) * weight[2];

sum += tex2D(_MainTex, o.uv[4]) * weight[2];

return fixed4(sum, 1);

}

ENDCG

}

}

FallBack Off

}

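It is still convolution at heart, just with a different kernel and split into two passes; _BlurSize controls the distance between the sampled pixels.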
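The effect of chaining the two passes through an intermediate buffer can be checked on the CPU. This Python sketch uses the shader's weights on a tiny image holding a single white pixel; `blur_1d` is a hypothetical helper that clamps samples at the border, and the spacing is fixed at one texel (as if _BlurSize were 1).

```python
WEIGHTS = [0.4026, 0.2442, 0.0545]  # centre, offset 1, offset 2 (from the shader)

def blur_1d(image, dx, dy):
    """One blur pass along direction (dx, dy); out-of-range samples clamp to the border."""
    h, w = len(image), len(image[0])

    def sample(x, y):
        return image[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = sample(x, y) * WEIGHTS[0]
            for k in (1, 2):
                total += sample(x + k * dx, y + k * dy) * WEIGHTS[k]
                total += sample(x - k * dx, y - k * dy) * WEIGHTS[k]
            out[y][x] = total
    return out

# A single white pixel in the middle of a 5x5 black image.
image = [[0.0] * 5 for _ in range(5)]
image[2][2] = 1.0

buffer1 = blur_1d(image, 0, 1)   # vertical pass
result = blur_1d(buffer1, 1, 0)  # horizontal pass reads the vertical pass's output

# What remains if both passes read the original image and the second overwrites the first:
overwritten = blur_1d(image, 1, 0)

for row in result:
    print([round(v, 4) for v in row])
```

Each entry of `result` is the product of a vertical and a horizontal weight, which is the full 2D Gaussian, while `overwritten` is blurred in one direction only.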

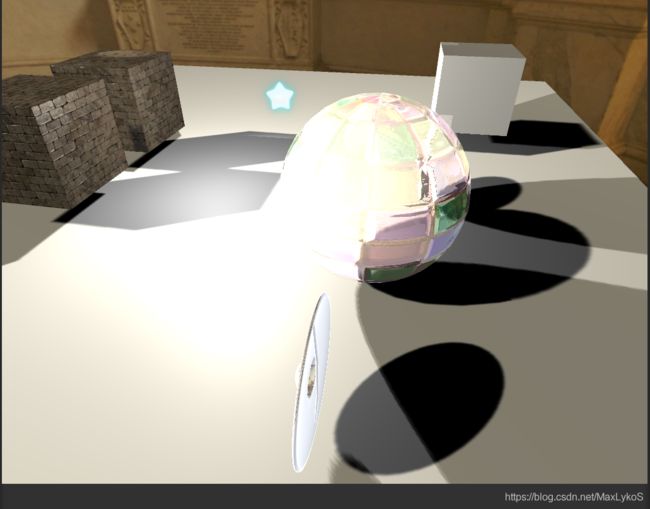

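4. Bloom

Bloom builds on the Gaussian blur. It first extracts the bright parts of the screen (based on their luminance, controlled by a threshold), then applies a Gaussian blur to them, and finally adds the blurred bright image back onto the original. Bright areas become brighter and softer, which is the glow effect. There are four passes in total.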
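The first and last of the four passes are simple per-pixel operations. A minimal Python sketch of them, assuming RGB tuples in [0, 1] and leaving out the blur in between (the function names are made up for this example):

```python
def luminance(rgb):
    r, g, b = rgb
    return 0.2125 * r + 0.7154 * g + 0.0721 * b

def extract_bright(rgb, threshold):
    """First pass: scale the colour by how far its luminance exceeds the threshold."""
    val = min(max(luminance(rgb) - threshold, 0.0), 1.0)
    return tuple(c * val for c in rgb)

def combine(scene_rgb, blurred_bright_rgb):
    """Last pass: add the blurred bright image back onto the scene."""
    return tuple(s + b for s, b in zip(scene_rgb, blurred_bright_rgb))

dark = extract_bright((0.2, 0.2, 0.2), threshold=0.6)    # below the threshold: black
bright = extract_bright((1.0, 1.0, 1.0), threshold=0.6)  # above it: kept, but dimmed
print(dark, bright)
print(combine((0.5, 0.5, 0.5), bright))
```

Pixels below the threshold contribute nothing, so only highlights end up in the blurred image that is added back.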

Script:

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

public class Bloom : PostEffectBase

{

public Shader bloomShader;

private Material bloomMaterial;

public Material Mat

{

get

{

bloomMaterial = CreateMaterial(bloomShader, bloomMaterial);

return bloomMaterial;

}

}

[Range(0, 4)]

public int iterations = 3;

[Range(0.2f, 3.0f)]

public float blurSpread = 0.6f;

[Range(1, 8)]

public int downSample = 2;

[Range(0.0f, 4.0f)]

public float luminanceThreshold = 0.6f;

private void OnRenderImage(RenderTexture source, RenderTexture destination)

{

if (Mat != null)

{

Mat.SetFloat("_LuminanceThreshold", luminanceThreshold);

int rtW = source.width / downSample;

int rtH = source.height / downSample;

RenderTexture buffer0 = RenderTexture.GetTemporary(rtW, rtH, 0);

buffer0.filterMode = FilterMode.Bilinear;

Graphics.Blit(source, buffer0, Mat, 0);

for (int i = 0; i < iterations; i++)

{

Mat.SetFloat("_BlurSize", 1 + i * blurSpread);

RenderTexture buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0);

// Render vertical blur

Graphics.Blit(buffer0, buffer1, Mat, 1);

RenderTexture.ReleaseTemporary(buffer0);

buffer0 = buffer1;

buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0);

// Render horizontal blur

Graphics.Blit(buffer0, buffer1, Mat, 2);

RenderTexture.ReleaseTemporary(buffer0);

buffer0 = buffer1;

}

Mat.SetTexture("_Bloom", buffer0);

Graphics.Blit(source, destination, Mat, 3);

RenderTexture.ReleaseTemporary(buffer0);

}

else

{

Graphics.Blit(source, destination);

}

}

}

shader

// Upgrade NOTE: replaced 'mul(UNITY_MATRIX_MVP,*)' with 'UnityObjectToClipPos(*)'

Shader "Hidden/Bloom"

{

Properties

{

_MainTex ("Texture", 2D) = "white" {}

_Bloom("Bloom (RGB)", 2D) = "black" {}

_LuminanceThreshold("Luminance Threshold", Float) = 0.5

_BlurSize("Blur Size", Float) = 1.0

}

SubShader

{

Cull Off

ZWrite Off

ZTest Always

Pass

{

CGPROGRAM

#pragma vertex VS

#pragma fragment PSExtractBright

sampler2D _MainTex;

float _LuminanceThreshold;

#include "UnityCG.cginc"

struct Input

{

float4 vertex : POSITION;

float2 uv : TEXCOORD0;

};

struct Output

{

float2 uv : TEXCOORD0;

float4 vertex : SV_POSITION;

};

Output VS(Input v)

{

Output o;

o.vertex = UnityObjectToClipPos(v.vertex);

o.uv = v.uv;

return o;

}

fixed luminance(fixed4 color) // compute the luminance
{
    return 0.2125 * color.r + 0.7154 * color.g + 0.0721 * color.b;
}

fixed4 PSExtractBright(Output o) : SV_Target
{
    fixed4 c = tex2D(_MainTex, o.uv);
    // Keep only the part of the luminance that exceeds the threshold
    fixed val = clamp(luminance(c) - _LuminanceThreshold, 0.0, 1.0);
    return c * val;
}

ENDCG

}

Pass

{

CGPROGRAM

#pragma vertex VS

#pragma fragment PS

sampler2D _MainTex;

half4 _MainTex_TexelSize;

float _BlurSize;

struct Input

{

float4 vertex : POSITION;

float2 uv : TEXCOORD0;

};

struct Output

{

float4 posH : SV_POSITION;

float2 uv[5] : TEXCOORD0;

};

Output VS(Input v)

{

Output o;

o.posH = UnityObjectToClipPos(v.vertex);

o.uv[0] = v.uv;

o.uv[1] = v.uv + float2(0, _MainTex_TexelSize.y * 1.0) * _BlurSize;

o.uv[2] = v.uv - float2(0, _MainTex_TexelSize.y * 1.0) * _BlurSize;

o.uv[3] = v.uv + float2(0, _MainTex_TexelSize.y * 2.0) * _BlurSize;

o.uv[4] = v.uv - float2(0, _MainTex_TexelSize.y * 2.0) * _BlurSize;

return o;

}

fixed4 PS(Output o) : SV_Target

{

const float weight[3] = {0.4026, 0.2442, 0.0545};

fixed3 sum = tex2D(_MainTex, o.uv[0]) * weight[0];

sum += tex2D(_MainTex, o.uv[1]) * weight[1];

sum += tex2D(_MainTex, o.uv[2]) * weight[1];

sum += tex2D(_MainTex, o.uv[3]) * weight[2];

sum += tex2D(_MainTex, o.uv[4]) * weight[2];

return fixed4(sum, 1.0);

}

ENDCG

}

Pass

{

CGPROGRAM

#pragma vertex VS

#pragma fragment PS

sampler2D _MainTex;

half4 _MainTex_TexelSize;

float _BlurSize;

struct Input

{

float4 vertex : POSITION;

float2 uv : TEXCOORD;

};

struct Output

{

float4 posH : SV_POSITION;

float2 uv[5] : TEXCOORD0;

};

Output VS(Input v)

{

Output o;

o.posH = UnityObjectToClipPos(v.vertex);

o.uv[0] = v.uv;

o.uv[1] = v.uv + float2(_MainTex_TexelSize.x * 1.0, 0.0) * _BlurSize;

o.uv[2] = v.uv - float2(_MainTex_TexelSize.x * 1.0, 0.0) * _BlurSize;

o.uv[3] = v.uv + float2(_MainTex_TexelSize.x * 2.0, 0.0) * _BlurSize;

o.uv[4] = v.uv - float2(_MainTex_TexelSize.x * 2.0, 0.0) * _BlurSize;

return o;

}

fixed4 PS(Output o) : SV_Target

{

const float weight[3] = {0.4026, 0.2442, 0.0545};

fixed3 color = tex2D(_MainTex, o.uv[0]) * weight[0];

color += tex2D(_MainTex, o.uv[1]) * weight[1];

color += tex2D(_MainTex, o.uv[2]) * weight[1];

color += tex2D(_MainTex, o.uv[3]) * weight[2];

color += tex2D(_MainTex, o.uv[4]) * weight[2];

return fixed4(color, 1.0f);

}

ENDCG

}

Pass

{

CGPROGRAM

#pragma vertex VS

#pragma fragment PSBloom

sampler2D _MainTex;

sampler2D _Bloom;

struct Input

{

float4 vertex : POSITION;

float2 uv : TEXCOORD;

};

struct Output

{

float4 posH : SV_POSITION;

float2 uv : TEXCOORD0;

};

Output VS(Input v)

{

Output o;

o.posH = UnityObjectToClipPos(v.vertex);

o.uv = v.uv;

return o;

}

fixed4 PSBloom(Output o) : SV_Target

{

return tex2D(_MainTex, o.uv) + tex2D(_Bloom, o.uv);

}

ENDCG

}

}

}

5. Motion Blur

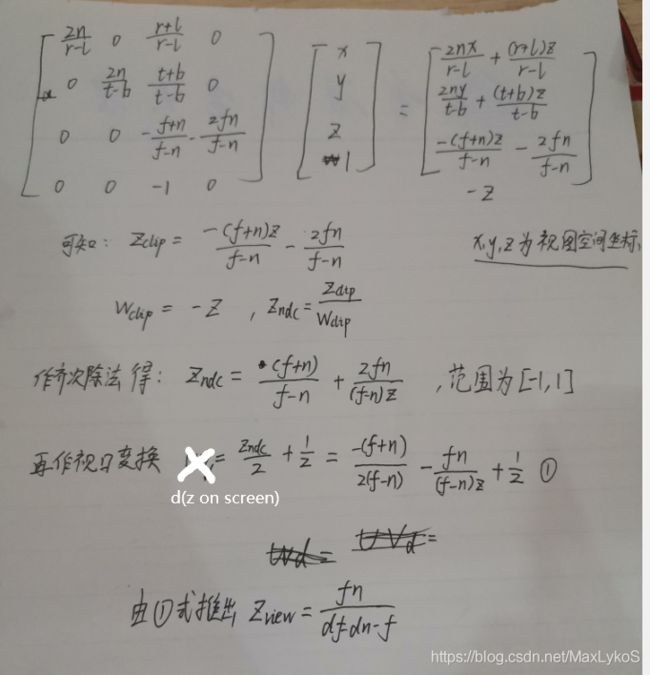

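5.1 Recovering a pixel's depth from the depth texture in the fragment shader

Depth texture: Unity renders the objects in a separate pass and stores the result in a texture. Each value in the depth texture is the interpolated z component of the fragment after projection, stored in the range [0, 1]. Note: in NDC space a vertex's z ranges over [0, 1] in DirectX and over [-1, 1] in OpenGL (the convention followed for Unity here).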

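The perspective projection matrix used by OpenGL and Unity:

$$M_{proj} = \begin{bmatrix} \frac{\cot(FOV/2)}{Aspect} & 0 & 0 & 0 \\ 0 & \cot(FOV/2) & 0 & 0 \\ 0 & 0 & -\frac{f+n}{f-n} & -\frac{2fn}{f-n} \\ 0 & 0 & -1 & 0 \end{bmatrix}$$

Here n and f are the Near and Far plane distances. With d the value stored in the depth texture, $d = 0.5 \cdot z_{ndc} + 0.5$, solving for the view-space z gives

$$Z_{view} = \frac{fn}{d(f-n) - f}$$

Zview is the depth of the vertex or pixel in view space. The camera sits at the origin of view space and looks down the negative z axis, so the z values in front of it are negative, ranging over [-Near, -Far]. Dividing Zview by -Far maps this range to [Near/Far, 1]:

$$Z_{01} = \frac{n}{f + dn - df}$$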

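In ShaderLab these computations are provided by the functions LinearEyeDepth and Linear01Depth.

| Function | Purpose |
|---|---|
| LinearEyeDepth | Returns the view-space depth as a positive distance (-Zview) |
| Linear01Depth | Returns Z01 |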
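The two formulas can be sanity-checked with a round trip: project a view-space z to a depth value, then linearize it again. This Python sketch assumes the OpenGL-style matrix and the mapping d = 0.5 * z_ndc + 0.5 used in these notes; it ignores platform differences such as reversed depth buffers, and the function names only imitate the Unity ones.

```python
def view_z_to_depth(z_view, near, far):
    """Project a view-space z (negative in front of the camera) with an
    OpenGL-style perspective matrix, then remap NDC z from [-1, 1] to [0, 1]."""
    z_clip = -(far + near) / (far - near) * z_view - 2.0 * far * near / (far - near)
    w_clip = -z_view
    z_ndc = z_clip / w_clip
    return 0.5 * z_ndc + 0.5

def linear_eye_depth(d, near, far):
    """Distance from the camera along the view axis, i.e. the positive value -Zview."""
    return far * near / (far - d * (far - near))

def linear01_depth(d, near, far):
    """Eye depth divided by far: Z01 = n / (f + d*n - d*f)."""
    return near / (far + d * near - d * far)

near, far = 0.3, 100.0
d = view_z_to_depth(-10.0, near, far)  # a point 10 units in front of the camera
print(d)                                # close to 1: depth is strongly non-linear
print(linear_eye_depth(d, near, far))   # approximately 10
print(linear01_depth(d, near, far))     # approximately 10 / far = 0.1
```

The stored depth for a point only 10% of the way to the far plane is already above 0.97, which is why the raw value has to be linearized before it is used as a distance.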

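5.2 Recovering a vertex's world position in the shader

5.3 How it works

- In the fragment shader, compute the pixel's current world position and NDC position.

- Multiply the current world position by the previous frame's VP matrix and apply the perspective divide to get the pixel's NDC position in the previous frame.

- Subtract the previous frame's NDC position from the current one to get the velocity.

- Offset the UV along the velocity and take a 1D mean blur over the samples.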
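The steps above can be sketched on the CPU as follows. This Python example is a simplification under stated assumptions: the camera only translates and looks down -z, the world position is given directly instead of being rebuilt from the depth texture, and all helper names are invented for the illustration.

```python
import math

def perspective(fov_deg, aspect, near, far):
    """OpenGL-style perspective projection matrix (row-major nested lists)."""
    t = 1.0 / math.tan(math.radians(fov_deg) / 2.0)
    return [[t / aspect, 0.0, 0.0, 0.0],
            [0.0, t, 0.0, 0.0],
            [0.0, 0.0, -(far + near) / (far - near), -2.0 * far * near / (far - near)],
            [0.0, 0.0, -1.0, 0.0]]

def translate_view(cam_pos):
    """View matrix of an unrotated camera at cam_pos looking down -z."""
    x, y, z = cam_pos
    return [[1.0, 0.0, 0.0, -x], [0.0, 1.0, 0.0, -y], [0.0, 0.0, 1.0, -z], [0.0, 0.0, 0.0, 1.0]]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def to_ndc(vp, world_pos):
    v = list(world_pos) + [1.0]
    clip = [sum(vp[i][k] * v[k] for k in range(4)) for i in range(4)]
    return [c / clip[3] for c in clip[:3]]  # perspective divide

proj = perspective(60.0, 16.0 / 9.0, 0.3, 100.0)
previous_vp = matmul(proj, translate_view((0.0, 0.0, 0.0)))  # last frame
current_vp = matmul(proj, translate_view((1.0, 0.0, 0.0)))   # camera moved 1 unit right

world_pos = (0.0, 0.0, -10.0)  # a static point in front of the camera
current_ndc = to_ndc(current_vp, world_pos)
previous_ndc = to_ndc(previous_vp, world_pos)

# Same formula as the shader: half the NDC difference.
velocity = [(c - p) / 2.0 for c, p in zip(current_ndc[:2], previous_ndc[:2])]

# Three samples along the velocity, averaged by the shader.
blur_size = 0.5
uv = (0.5, 0.5)
sample_uvs = [(uv[0] + k * velocity[0] * blur_size, uv[1] + k * velocity[1] * blur_size)
              for k in range(3)]
print(velocity, sample_uvs)
```

With the camera moving to the right, the static point drifts to the left on screen, so the x component of the velocity is negative and the samples trail off in that direction.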

Code:

using UnityEngine;

using System.Collections;

public class MotionBlurWithDepthTexture : PostEffectBase

{

public Shader motionBlurShader;

private Material motionBlurMaterial = null;

private Camera cam;

public Camera Cam

{

get

{

if (cam == null)

cam = GetComponent<Camera>();

return cam;

}

}

private Matrix4x4 previousVPMatrix;

private void OnEnable()

{

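Camera.main.depthTextureMode |= DepthTextureMode.Depth;
// Start from the current matrix so the first frame does not reproject with an all-zero matrix
previousVPMatrix = Cam.projectionMatrix * Cam.worldToCameraMatrix;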

//Camera.main.depthTextureMode |= DepthTextureMode.DepthNormals;

}

public Material Mat

{

get

{

motionBlurMaterial = CreateMaterial(motionBlurShader, motionBlurMaterial);

return motionBlurMaterial;

}

}

[Range(0.0f, 0.9f)]

public float blurSize = 0.5f;

void OnDisable()

{

Camera.main.depthTextureMode &= ~DepthTextureMode.Depth;

Camera.main.depthTextureMode &= ~DepthTextureMode.DepthNormals;

}

void OnRenderImage(RenderTexture src, RenderTexture dest)

{

if (Mat != null)

{

Mat.SetFloat("_BlurSize", blurSize);

Mat.SetMatrix("_PreviousVPMatrix", previousVPMatrix);

Matrix4x4 currentVPMatrix = Cam.projectionMatrix * Cam.worldToCameraMatrix;

Matrix4x4 currentVPInverseMatrix = currentVPMatrix.inverse;

Mat.SetMatrix("_CurrentVPInverseMatrix", currentVPInverseMatrix);

previousVPMatrix = currentVPMatrix;

Graphics.Blit(src,dest, Mat);

}

else

{

Graphics.Blit(src, dest);

}

}

}

Shader "Custom/Motion Blur"

{

Properties

{

_MainTex("Base (RGB)", 2D) = "white" {}

}

SubShader

{

Tags {"RenderType" = "Transparent"}

Pass

{

ZTest Always

Cull Off

ZWrite Off

CGPROGRAM

#pragma vertex VS

#pragma fragment PS

#include "UnityCG.cginc"

sampler2D _MainTex;

sampler2D _CameraDepthTexture;

float4x4 _PreviousVPMatrix;

float4x4 _CurrentVPInverseMatrix;

float4x4 _PreviousVPInverseMatrix;

float _BlurSize;

struct Input

{

float4 vertex : POSITION;

float2 uv : TEXCOORD0;

};

struct Output

{

float4 posH : SV_POSITION;

float2 uv : TEXCOORD0;

float2 uvd : TEXCOORD1;

};

Output VS(Input v)

{

Output o;

o.posH = UnityObjectToClipPos(v.vertex);

o.uv = v.uv;

o.uvd = v.uv;

return o;

}

fixed4 PS(Output o) : SV_Target

{

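// Sample this pixel's depth (kept in float: fixed is too imprecise for depth)
float d = SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, o.uvd);
// posNDC is the pixel's position in NDC space, range [-1, 1]
float4 posNDC = float4(o.uv.x * 2 - 1, o.uv.y * 2 - 1, d * 2 - 1, 1);
// Depth of the pixel in view space; LinearEyeDepth returns it as a positive distance
float dView = LinearEyeDepth(d);
// Undo the perspective divide: that distance is the clip-space w
float4 posH = posNDC * dView;
// Transform the clip-space position back to world space
float4 posW = mul(_CurrentVPInverseMatrix, posH);
// The NDC position in the current frame
float4 currentPos = posNDC;
// Clip-space position of the same point in the previous frame
float4 previousPos = mul(_PreviousVPMatrix, posW);
// The perspective divide gives its NDC position in the previous frame
previousPos /= previousPos.w;
// Velocity between the two positions: both lie in [-1, 1], so halving the difference keeps it within [-1, 1]
float2 velocity = (currentPos.xy - previousPos.xy) / 2.0f;
float2 uv = o.uv;
float4 c = tex2D(_MainTex, uv);
// Advance to the first offset sample
uv += velocity * _BlurSize;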

for (int it = 1; it < 3; it++, uv += velocity * _BlurSize)

{

float4 currentColor = tex2D(_MainTex, uv);

c += currentColor;

}

c /= 3;

return fixed4(c.rgb, 1.0f);

}

ENDCG

}

}

}


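This code still has a problem: the skybox gets blurred as well. When the camera moves, the skybox does not move relative to the screen, so blurring it looks wrong. How can a post effect leave out the skybox?

The fix is simple. Since dView is the pixel's z distance in view space, measured from the camera, it can serve as a threshold: add a check in the shader and skip the blur for pixels farther away than a given distance.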

float4 c = tex2D(_MainTex, o.uv);

if (dView > _BlurDistance)

return c;

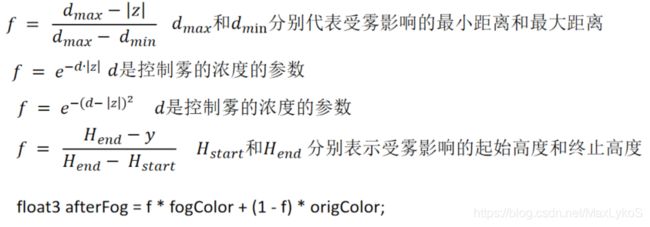

6. Global Fog

6.1 A faster way to compute a pixel's world position in the fragment shader

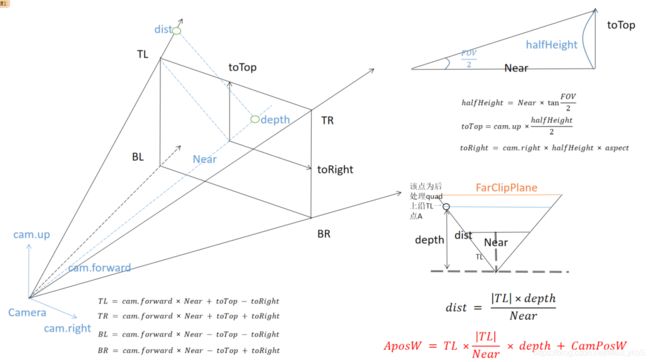

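The idea is to record the rays from the camera to the four corner vertices, interpolate them to get the ray from the camera to each pixel, and then offset the camera's world position along that ray to obtain the pixel's world position. How far to offset depends on the pixel's depth (by similar triangles).

Point A in the figure is the top-left corner of the screen (OpenGL convention). The rays for the four screen corners are TL, TR, BL and BR, and each vertex is assigned its ray in the shader. Because all four rays are expressed in world space, they can be interpolated linearly.

The shader code is float3 worldPos = linearDepth * o.interpolatedRay.xyz + _WorldSpaceCameraPos;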
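The scaling of the corner rays is what makes this work: each ray is sized so that its component along the camera's forward axis is exactly 1, so multiplying by the eye depth lands on the right point. A small Python sketch of that construction, using an axis-aligned camera and hypothetical helper names:

```python
import math

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def scale(v, s):
    return tuple(x * s for x in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def corner_ray(forward, right, up, near, fov_deg, aspect, sx, sy):
    """Ray towards one near-plane corner; sx, sy in {-1, +1} select left/right
    and bottom/top. Normalised, then scaled by |corner| / near as in the script."""
    half_height = near * math.tan(math.radians(fov_deg) * 0.5)
    to_top = scale(up, half_height * sy)
    to_right = scale(right, half_height * aspect * sx)
    corner = add(scale(forward, near), add(to_top, to_right))
    length = math.sqrt(dot(corner, corner))
    return scale(scale(corner, 1.0 / length), length / near)

cam_pos = (1.0, 2.0, 3.0)
forward, right, up = (0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)
args = (forward, right, up, 0.3, 60.0, 16.0 / 9.0)
bl = corner_ray(*args, -1, -1)
br = corner_ray(*args, +1, -1)
tr = corner_ray(*args, +1, +1)
tl = corner_ray(*args, -1, +1)

# The rasteriser interpolates the four rays; at the screen centre that is their average.
center_ray = scale(add(add(bl, br), add(tr, tl)), 0.25)

depth = 10.0  # linear eye depth read from the depth texture
world_pos = add(cam_pos, scale(center_ray, depth))
print(world_pos)  # 10 units in front of the camera along its forward axis
```

Compared with multiplying by an inverse view-projection matrix per pixel, this needs only one multiply-add per pixel.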

6.2 Applying the fog

Code:

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

public class FogWithDepthTexture : PostEffectBase

{

public Shader fogWithDTShader;

private Material fogMaterial;

private Material Mat

{

get

{

fogMaterial = CreateMaterial(fogWithDTShader, fogMaterial);

return fogMaterial;

}

}

[Range(0.0f, 3.0f)]

public float fogDensity = 1.0f;

public Color fogColor = Color.white;

public float fogStart = 0.0f;

public float fogEnd = 2.0f;

private void OnEnable()
{
    // The shader samples _CameraDepthTexture, so ask the camera to generate it
    Camera.main.depthTextureMode |= DepthTextureMode.Depth;
}

private void OnRenderImage(RenderTexture source, RenderTexture destination)

{

if (Mat != null)

{

Matrix4x4 frustumCorners = Matrix4x4.identity;

float fov = Camera.main.fieldOfView;

float near = Camera.main.nearClipPlane;

float far = Camera.main.farClipPlane;

float aspect = Camera.main.aspect;

float halfHeight = near * Mathf.Tan(fov * 0.5f * Mathf.Deg2Rad);

Vector3 toRight = Camera.main.transform.right * halfHeight * aspect;

Vector3 toTop = Camera.main.transform.up * halfHeight;

Vector3 TL = Camera.main.transform.forward * near + toTop - toRight;

float scale = TL.magnitude / near;

TL.Normalize();

TL *= scale;

Vector3 TR = Camera.main.transform.forward * near + toTop + toRight;

TR.Normalize();

TR *= scale;

Vector3 BL = Camera.main.transform.forward * near - toTop - toRight;

BL.Normalize();

BL *= scale;

Vector3 BR = Camera.main.transform.forward * near - toTop + toRight;

BR.Normalize();

BR *= scale;

frustumCorners.SetRow(0, BL);

frustumCorners.SetRow(1, BR);

frustumCorners.SetRow(2, TR);

frustumCorners.SetRow(3, TL);

Mat.SetMatrix("_FrustumCornersRay", frustumCorners);

Mat.SetMatrix("_ViewProjectionInverseMatrix", (Camera.main.projectionMatrix * Camera.main.worldToCameraMatrix).inverse);

Mat.SetFloat("_FogDensity", fogDensity);

Mat.SetColor("_FogColor", fogColor);

Mat.SetFloat("_FogStart", fogStart);

Mat.SetFloat("_FogEnd", fogEnd);

Graphics.Blit(source, destination, Mat);

}

else

Graphics.Blit(source, destination);

}

}

// Upgrade NOTE: replaced 'mul(UNITY_MATRIX_MVP,*)' with 'UnityObjectToClipPos(*)'

Shader "Custom/FogWithDepthTexture"

{

Properties

{

_MainTex ("Albedo (RGB)", 2D) = "white" {}

_FogDensity ("Fog Density", Float) = 1.0

_FogColor ("Fog Color", Color) = (1,1,1,1)

_FogStart ("Fog Start", Float) = 0.0

_FogEnd ("Fog End", Float) = 1.0

}

SubShader

{

Tags{"RenderType" = "Transparent"}

Pass

{

ZTest Always

Cull Off

ZWrite Off

CGPROGRAM

float4x4 _FrustumCornersRay;

sampler2D _MainTex;

half4 _MainTex_TexelSize;

sampler2D _CameraDepthTexture;

half _FogDensity;

fixed3 _FogColor;

float _FogStart;

float _FogEnd;

#pragma vertex VS

#pragma fragment PS

#include "UnityCG.cginc"

struct Input

{

float4 vertex: POSITION;

float2 uv: TEXCOORD0;

};

struct Output

{

float4 posH : SV_POSITION;

float2 uv : TEXCOORD0;

float2 uv_depth : TEXCOORD1;

float4 interpolatedRay : TEXCOORD2;

};

Output VS(Input v)

{

Output o;

o.posH = UnityObjectToClipPos(v.vertex);

o.uv = v.uv;

o.uv_depth = v.uv;

int index = 0;

if (v.uv.x < 0.5f && v.uv.y < 0.5)

index = 0;

else if (v.uv.x > 0.5 && v.uv.y < 0.5)

index = 1;

else if (v.uv.x > 0.5 && v.uv.y > 0.5)

index = 2;

else

index = 3;

o.interpolatedRay = _FrustumCornersRay[index];

return o;

}

fixed4 PS(Output o) : SV_Target

{

float linearDepth = LinearEyeDepth(SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, o.uv_depth));

float3 worldPos = _WorldSpaceCameraPos + linearDepth * o.interpolatedRay.xyz;

float fogDensity = (_FogEnd - worldPos.y) / (_FogEnd - _FogStart);

fogDensity = saturate(fogDensity * _FogDensity);

fixed4 finalColor = tex2D(_MainTex, o.uv);

finalColor.rgb = lerp(finalColor.rgb, _FogColor, fogDensity);

return finalColor;

}

ENDCG

}

}

}

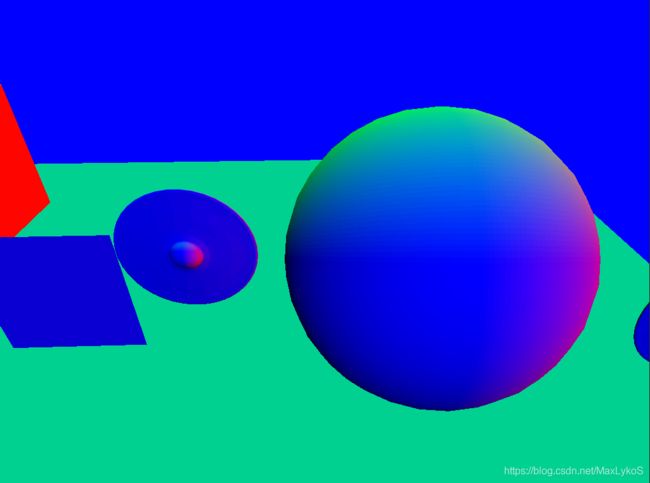

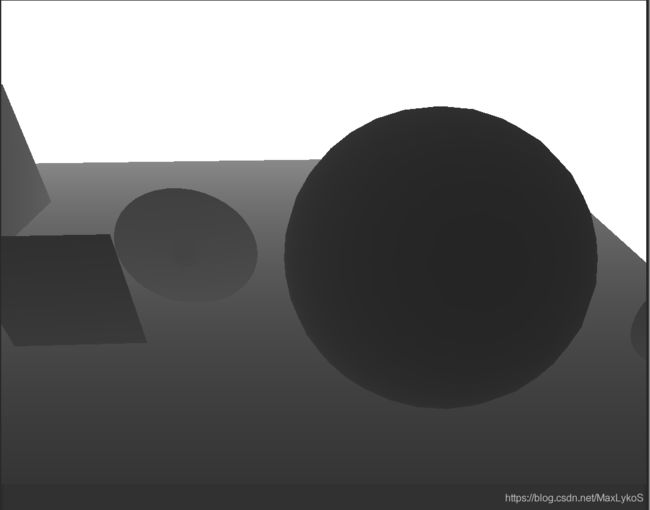

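7. Edge Detection with the Depth Texture

The edge detection above works on the final rendered image, which contains a lot of detail.

Normal texture

Depth texture

Edge detection on the depth and normal textures gives better results, because they carry less image information and their edges are distinct.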

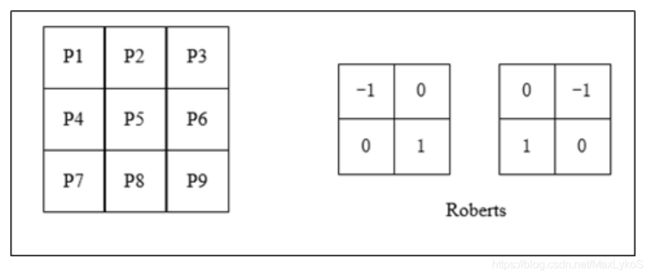

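This uses the Roberts operator.

It compares the normals and depths along the two diagonals (top-right against bottom-left, and top-left against bottom-right) and uses the differences to decide whether an edge is present. The rest of the code is much like the edge detection above, so I won't go through it again.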
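The diagonal comparison can be written out on the CPU like this. It is a simplified Python sketch of the shader's CheckSame logic: each sample is assumed to be an already decoded tuple (nx, ny, depth) rather than the packed texture value, and `roberts_edge` is a name made up for the example.

```python
def check_same(a, b, sens_normal=1.0, sens_depth=1.0):
    """a, b: (nx, ny, depth) samples. Returns 1.0 when both the encoded normals
    and the depths are close enough, otherwise 0.0."""
    diff_normal = (abs(a[0] - b[0]) + abs(a[1] - b[1])) * sens_normal
    diff_depth = abs(a[2] - b[2]) * sens_depth
    same_normal = diff_normal < 0.1
    # The depth threshold grows with distance, as in the shader.
    same_depth = diff_depth < 0.1 * a[2]
    return 1.0 if (same_normal and same_depth) else 0.0

def roberts_edge(top_right, bottom_left, bottom_right, top_left):
    """Roberts cross: compare the two diagonal pairs; 0.0 marks an edge."""
    return check_same(top_right, bottom_left) * check_same(bottom_right, top_left)

flat = (0.5, 0.5, 0.3)
farther = (0.5, 0.5, 0.6)  # same normal, much deeper
tilted = (0.9, 0.5, 0.3)   # same depth, different normal

print(roberts_edge(flat, flat, flat, flat))     # 1.0: no edge
print(roberts_edge(flat, farther, flat, flat))  # 0.0: depth discontinuity
print(roberts_edge(flat, flat, tilted, flat))   # 0.0: normal discontinuity
```

The result is used exactly like the Sobel value earlier: 1 keeps the original pixel, 0 paints the edge color.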

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

public class EdgeDetectionWithDepthTexture : PostEffectBase

{

public Shader edgeDetectionShader;

private Material edgeDetectionMat;

public Material Mat

{

get

{

edgeDetectionMat = CreateMaterial(edgeDetectionShader, edgeDetectionMat);

return edgeDetectionMat;

}

}

private void OnEnable()

{

Camera.main.depthTextureMode |= DepthTextureMode.DepthNormals;

}

[Range(0.0f, 1.0f)]

public float edgesOnly = 0.0f;

public Color edgeColor = Color.black;

public Color backgroundColor = Color.white;

public float sampleDistance = 1.0f;

public float sensitivityDepth = 1.0f;

public float sensitivityNormals = 1.0f;

//[ImageEffectOpaque]

private void OnRenderImage(RenderTexture source, RenderTexture destination)

{

if (Mat != null)

{

Mat.SetFloat("_EdgeOnly", edgesOnly);

Mat.SetColor("_EdgeColor", edgeColor);

Mat.SetColor("_BackgroundColor", backgroundColor);

Mat.SetFloat("_SampleDistance", sampleDistance);

Mat.SetVector("_Sensitivity", new Vector4(sensitivityNormals, sensitivityDepth, 0.0f, 0.0f));

Graphics.Blit(source, destination, Mat);

}

else

Graphics.Blit(source, destination);

}

}

// Upgrade NOTE: replaced 'mul(UNITY_MATRIX_MVP,*)' with 'UnityObjectToClipPos(*)'

Shader "Custom/EdgeDetectionWithDepthTexture"

{

Properties

{

_MainTex ("Albedo (RGB)", 2D) = "white" {}

_EdgeOnly ("Edge Only", Float) = 1.0

_EdgeColor ("Edge Color", Color) = (0,0,0,1)

_BackgroundColor("Background Color", Color) = (1,1,1,1)

_SampleDistance("Sample Distance", Float) = 1.0

_Sensitivity ("Sensitivity", Vector) = (1,1,1,1)

}

SubShader

{

Tags { "RenderType"="Transparent" }

Cull Off

ZWrite Off

ZTest Always

Pass

{

CGINCLUDE

#include "UnityCG.cginc"

sampler2D _MainTex;

float2 _MainTex_TexelSize;

fixed _EdgeOnly;

fixed4 _EdgeColor;

fixed4 _BackgroundColor;

float _SampleDistance;

half4 _Sensitivity;

sampler2D _CameraDepthNormalsTexture;

struct Input

{

float4 vertex : POSITION;

float2 uv : TEXCOORD0;

};

struct Output

{

float4 posH : SV_POSITION;

half2 uv[5] : TEXCOORD0;

};

ENDCG

CGPROGRAM

#pragma vertex VS

#pragma fragment PS

Output VS(Input v)

{

Output o;

o.posH = UnityObjectToClipPos(v.vertex);

o.uv[0] = v.uv;

o.uv[1] = v.uv + _MainTex_TexelSize * half2(1, 1) * _SampleDistance;

o.uv[2] = v.uv + _MainTex_TexelSize * half2(-1, -1) * _SampleDistance;

o.uv[3] = v.uv + _MainTex_TexelSize * half2(1, -1) * _SampleDistance;

o.uv[4] = v.uv + _MainTex_TexelSize * half2(-1, 1) * _SampleDistance;

return o;

}

half CheckSame(half4 center, half4 sample)

{

half2 centerNormal = center.xy;

float centerDepth = DecodeFloatRG(center.zw);

half2 sampleNormal = sample.xy;

float sampleDepth = DecodeFloatRG(sample.zw);

// difference in normals

// do not bother decoding normals - there's no need there

half2 diffNormal = abs(centerNormal - sampleNormal) * _Sensitivity.x;

int isSameNormal = (diffNormal.x + diffNormal.y) < 0.1;

// difference in depth

float diffDepth = abs(centerDepth - sampleDepth) * _Sensitivity.y;

// scale the required threshold by the distance

int isSameDepth = diffDepth < 0.1 * centerDepth;

// return;

// 1 - if normals and depth are similar enough

// 0 - otherwise

return isSameNormal * isSameDepth ? 1.0 : 0.0;

}

fixed4 PS(Output o) : SV_TARGET

{

// Debug views: uncomment to display the decoded depth or normal instead of the edges
//float depthCenter;
//float3 normalCenter;
//DecodeDepthNormal(tex2D(_CameraDepthNormalsTexture, o.uv[0]), depthCenter, normalCenter);
//return fixed4(depthCenter, depthCenter, depthCenter, 1.0f);
//return fixed4(normalCenter.xyz, 1.0f);

half4 sample1 = tex2D(_CameraDepthNormalsTexture, o.uv[1]);

half4 sample2 = tex2D(_CameraDepthNormalsTexture, o.uv[2]);

half4 sample3 = tex2D(_CameraDepthNormalsTexture, o.uv[3]);

half4 sample4 = tex2D(_CameraDepthNormalsTexture, o.uv[4]);

half edge = 1.0f;

edge *= CheckSame(sample1, sample2);

edge *= CheckSame(sample3, sample4);

fixed4 withEdgeColor = lerp(_EdgeColor, tex2D(_MainTex, o.uv[0]), edge);

fixed4 onlyEdgeColor = lerp(_EdgeColor, _BackgroundColor, edge);

return lerp(withEdgeColor, onlyEdgeColor, _EdgeOnly);

}

ENDCG

}

}

}