工程师&程序员的自我修养 Episode.4 基于百度飞桨PaddlePaddle框架的女朋友情绪分析&防被打消息推荐深度学习系统

具体为什么想到这个题目呢。。。大概是我也想不出别的什么有趣的话题或者项目的工作了吧。

有一天,柏拉图问老师苏格拉底什么是爱情?老师就让他到理论麦田里去,摘一棵全麦田里最大最金黄的麦穗来,期间只能摘一次,并且只可向前走,不能回头。柏拉图于是按照老师说的去做了。结果他两手空空走出了田地,老师问他为什么摘不到。他说:“因为期间只能摘一次,又不能走回头路,期间即使见到最大最金黄的,因为不知前面是否有更好的,所以没有摘;走到前面时,又发觉总不及之前见到的好,原来最大最金黄的麦穗早错过了;于是我什么也没有摘。”老师说:这就是“爱情”。

但是现实很骨感。当前在读信息、计科及机械等硬核工科男大多还处在“啊女朋友啊,今天一句话都没来得及说。”的状态中,而且经常代码写不来,实验做不好,debug完不成的卷容易造成说话用语的玄学。因而简单实现一个简单的利用微信qq消息推测女朋友当前隐含情绪以及推荐防被打消息的工具是大有裨益的。

友情提示:本深度学习模型的实战测试可能会有生命危险,请谨慎操作!

文章整体结构分为:

一、百度UNIT架构实现的自然多轮对话机器人功能

二、对话情绪识别理论

三、PaddleHub实现对话情绪识别

四、PaddleNLP实现对话情绪识别升级版

五、PaddlePaddle开发的情绪识别详细代码

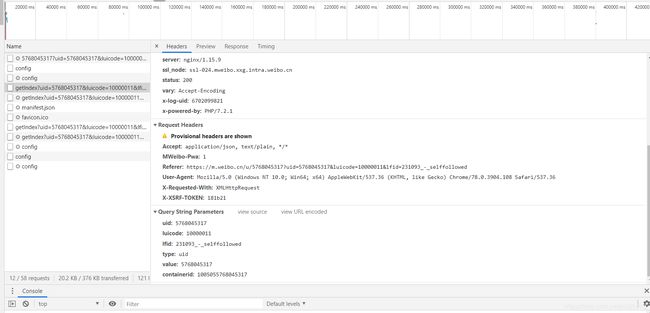

六、PaddlePaddle结合Python爬虫的女票微博情绪监控

一、百度UNIT架构实现的自然多轮对话机器人功能:

以下感谢 @没入门的研究生 相关文章及百度暂开放使用的UNIT对话机器人平台服务,深受启发。

1、准备工作:

百度UNIT,即百度智能对话定制与服务平台(Understanding and Interaction Technology),是百度积累多年的自然语言处理技术的集大成者,通过简单的四个步骤:创建技能、配置意图及词槽、配置训练数据、训练模型即可从无到有得到一个对话系统。

UNIT是一个商业服务平台,对于高级的对话功能提供了付费服务。不过,对于注册的开发者,UNIT提供了五个免费的技能对话的研发环境。想试一试的朋友可以利用这个免费技能实现一些非常有意思的功能。通过UNIT实现多轮对话的前提,是我们要注册成为该平台的开发者,并在百度控制台申请到UNIT功能的ID和KEY。以下详谈。

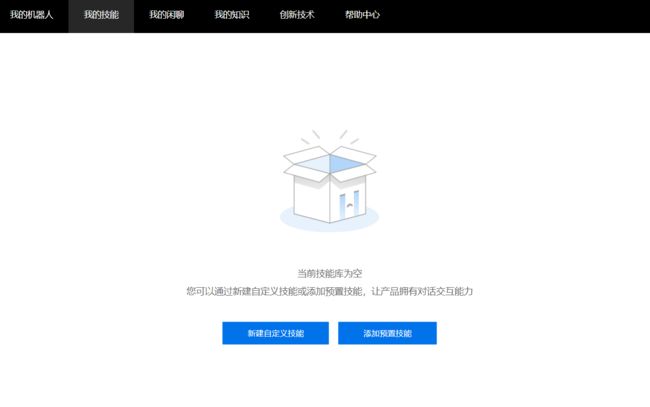

进入UNIT官网后,点击进入 即可看到注册界面,按照要求注册完成后,便可以进入到UNIT的技能库,如下图所示:

即可看到注册界面,按照要求注册完成后,便可以进入到UNIT的技能库,如下图所示:

此时我们的技能库还是空的。左上角有“我的机器人”和“我的闲聊”两个板块,实现多轮对话便会用到这两块。

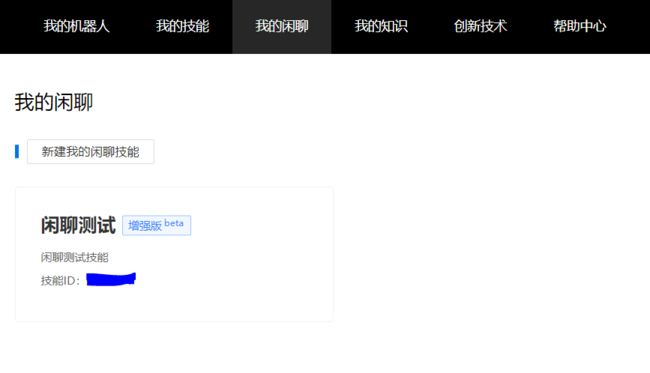

之后点击“我的闲聊”,然后点击新建我的闲聊技能,这里有普通,专业,增强版可选。其中增强版即百度Plato对话模型的中文对话模型。

点击“我的机器人”,然后点击“+”号,创建一个新的机器人:

创建完成后,点击刚刚创建的机器人,进入机器人页面,点击“添加技能”,添加刚刚创建的闲聊技能。

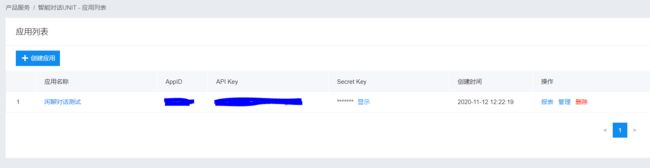

最后登录百度控制台,需要注意的是这个也需要注册,没有注册的朋友请自行注册完成。进入主页后,在产品服务下找到“智能对话定制与服务平台UNIT”,点击进入;点击“创建应用”,输入应用名称,应用描述后后点击“立即创建”即可创建应用。创建后,可以在页面看到创建应用的信息,纪录APPID和APIKEY信息。

至此,多轮对话的准备工作便已经完成了。

2、本机代码调用实现:

准备工作完成后,我们可以通过请求调用API的形式,接收返回结果进行多轮对话。更加详细的调用方法可以在UNIT官网技术文档获取,这里我直接提供出现成的调用对话函数,如下。其中APPID,SECRETKEY为控制台中申请应用得到的APPID和APIKEY,SERVICEID为机器人的ID,SKILLID为闲聊技能ID,而函数的参数解释如下:

text: 当前对话内容(人发出的对话)

user_id: 对话人的编号(对不同人进行不同的编号,来区分不同对象)

session_id, history: 见后边解释

log_id: 非必要,纪录日志的id号

# encoding:utf-8

import requests

APPID = "*************"

SECRETKEY = "****************"

SERVICEID = '****************'

SKILLID = '***************'

# client_id 为官网获取的AK, client_secret 为官网获取的SK

host = 'https://aip.baidubce.com/oauth/2.0/token?grant_type=client_credentials&client_id=%s&client_secret=%s' \

% (APPKEY, SECRETKEY)

response = requests.get(host)

access_token = response.json()["access_token"]

url = 'https://aip.baidubce.com/rpc/2.0/unit/service/chat?access_token=' + access_token

def dialog(text, user_id, session_id='', history='', log_id='LOG_FOR_TEST'):

post_data = "{\"log_id\":\"%s\",\"version\":\"2.0\",\"service_id\":\"%s\",\"session_id\":\"%s\"," \

"\"request\":{\"query\":\"%s\",\"user_id\":\"%s\"}," \

"\"dialog_state\":{\"contexts\":{\"SYS_REMEMBERED_SKILLS\":[\"%s\"]}}}"\

%(log_id, SERVICEID, session_id, text, user_id, SKILLID)

if len(history) > 0:

post_data = "{\"log_id\":\"%s\",\"version\":\"2.0\",\"service_id\":\"%s\",\"session_id\":\"\"," \

"\"request\":{\"query\":\"%s\",\"user_id\":\"%s\"}," \

"\"dialog_state\":{\"contexts\":{\"SYS_REMEMBERED_SKILLS\":[\"%s\"], " \

"\"SYS_CHAT_HIST\":[%s]}}}" \

%(log_id, SERVICEID, text, user_id, SKILLID, history)

post_data = post_data.encode('utf-8')

headers = {'content-type': 'application/x-www-form-urlencoded'}

response = requests.post(url, data=post_data, headers=headers)

resp = response.json()

ans = resp["result"]["response_list"][0]["action_list"][0]['say']

session_id = resp['result']['session_id']

return ans, session_id需要说明的是,多轮对话的历史信息,我们可以通过记录请求响应结果中的session_id实现,也可以手动记录对话纪录history,并写入请求的dialog_state中的SYS_CHAT_HIST。为此,我们定义一个用户类,来存储每个用户的对话历史,或相应的session_id。当输入了history时,session_id无效;当没有history时,务必输入session_id以确保对话过程中可以考虑历史对话信息。如下:

class User:

def __init__(self, user_id):

self.user_id = user_id

self.session_id = ''

self._history = []

self.history = ''

self.MAX_TURN = 7

def get_service_id(self, session_id):

self.session_id = session_id

def update_history(self, text):

self._history.append(text)

self._history = self._history[-self.MAX_TURN*2-1:]

self.history = ','.join(["\""+sent+"\"" for sent in self._history])

def start_new_dialog(self):

self.session_id = ''

self._history = []

self.history = ''

def change_max_turn(self, max_turn):

self.MAX_TURN = max_turn接下来,便可以进行对话了:

from dialog import dialog

from user import User

user_id = 'test_user'

user = User(user_id)

while True:

human_ans = input()

if len(human_ans) > 0:

user.update_history(human_ans)

robot_resp, session_id = dialog(human_ans, user.user_id, user.session_id, user.history)

user.session_id = session_id

user.update_history(robot_resp)

print("Robot: %s" % robot_resp)

else:

break

user.start_new_dialog()实际演示对话的话可以利用如下代码段实现:

from dialog import dialog

from user import User

user_id = 'test_user'

user = User(user_id)

while True:

human_ans = input()

if len(human_ans) > 0:

user.update_history(human_ans)

robot_resp, session_id = dialog(human_ans, user.user_id, user.session_id, user.history)

user.session_id = session_id

user.update_history(robot_resp)

print("You: %s" % human_ans)

print("Robot: %s" % robot_resp)

else:

break

user.start_new_dialog()这里You后紧接我们自己想说的话,Robot后为UNIT自动生成的回复,部分问题也可能是AI问我们,挑选出其中非问题的对话内容可以作为之后情绪识别的验证。

二、对话情绪识别理论:

此处感谢飞桨官方文章 七夕礼物没送对?飞桨PaddlePaddle帮你读懂女朋友的小心思 深有启发。

对话情绪识别适用于聊天、客服等多个场景,能够帮助企业更好地把握对话质量、改善产品的用户交互体验,也能分析客服服务质量、降低人工质检成本。对话情绪识别(Emotion Detection,简称EmoTect),专注于识别智能对话场景中用户的情绪,针对智能对话场景中的用户文本,自动判断该文本的情绪类别并给出相应的置信度,情绪类型分为积极、消极、中性。

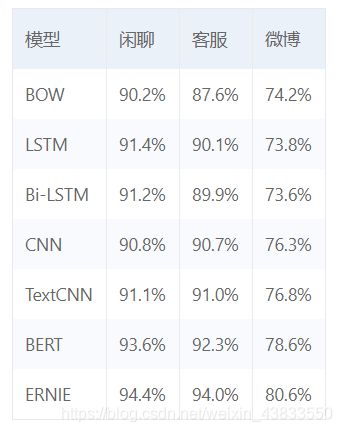

基于百度自建测试集(包含闲聊、客服)和nlpcc2014微博情绪数据集评测效果如下表所示,此外,PaddleNLP还开源了百度基于海量数据训练好的模型,该模型在聊天对话语料上fine-tune之后,可以得到更好的效果。

-

BOW:Bag Of Words,是一个非序列模型,使用基本的全连接结构。

-

CNN:浅层CNN模型,能够处理变长的序列输入,提取一个局部区域之内的特征。

-

TextCNN:多卷积核CNN模型,能够更好地捕捉句子局部相关性。

-

LSTM:单层LSTM模型,能够较好地解决序列文本中长距离依赖的问题。

-

BI-LSTM:双向单层LSTM模型,采用双向LSTM结构,更好地捕获句子中的语义特征。

-

ERNIE:百度自研基于海量数据和先验知识训练的通用文本语义表示模型,并基于此在对话情绪分类数据集上进行fine-tune获得。

在百度PaddlePaddle飞桨下属的各个高层API中,PaddleNLP是专门用于自然语言处理的。PaddleHub则是深度学习现成预训练仓库,也可以调用其中训练好的模型进行使用。这两种方法相对简单顶层,但是直观性比较差。此外以下我们会大致给出一个从PaddlePaddle底层搭建的情绪分析代码。

技术上,基于百度飞桨PaddleNLP的“对话情绪识别”模型则特别针对中文表达中口语化、语气词多、词汇乱序等常见情况与难题,优化除去口语化、同义词转换等预处理方式,保证识别数据的干净有效,从而让机器“更懂”用户所表达的“中心思想”。因此,该模型可以广泛的适用于电商、教育、地图导航等场景,帮助“机器”更好地理解“人心”。

三、PaddleHub实现对话情绪识别:

1、准备工作:

包括查看当前的数据集和工作区文件,并且将PaddlePaddle和PaddleHub库统一升级到2.0版本。

# 查看当前挂载的数据集目录, 该目录下的变更重启环境后会自动还原

# View dataset directory. This directory will be recovered automatically after resetting environment.

!ls /home/aistudio/data

# 查看工作区文件, 该目录下的变更将会持久保存. 请及时清理不必要的文件, 避免加载过慢.

# View personal work directory. All changes under this directory will be kept even after reset. Please clean unnecessary files in time to speed up environment loading.

!ls /home/aistudio/work

#需要将PaddleHub和PaddlePaddle统一升级到2.0版本

!pip install paddlehub==2.0.0 -i https://pypi.tuna.tsinghua.edu.cn/simple

!pip install paddlepaddle==2.0.0 -i https://pypi.tuna.tsinghua.edu.cn/simple 2、引入库文件和hub中关于情感分析的模型:

import paddlehub as hub

senta = hub.Module(name="senta_bilstm")3、调入我们需要检测的文本内容,放置在text变量中,每一句消息作为一个字符串存放在一个一维列表:

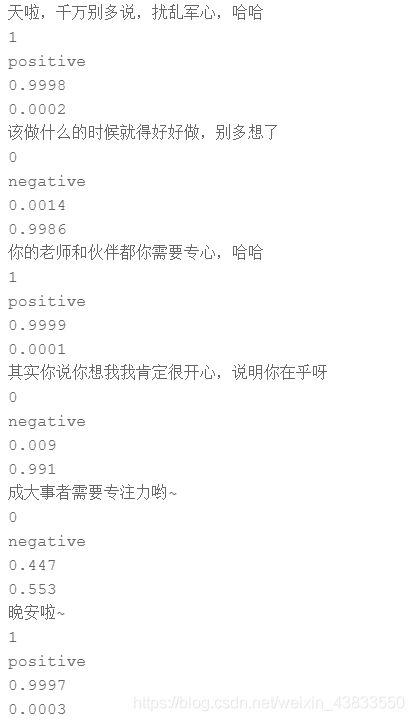

test_text = [

"天啦,千万别多说,扰乱军心,哈哈",

"该做什么的时候就得好好做,别多想了",

"你的老师和伙伴都你需要专心,哈哈" ,

"其实你说你想我我肯定很开心,说明你在乎呀" ,

"成大事者需要专注力哟~",

"晚安啦~"

]4、模型参数读取及调用预测:

input_dict = {"text": test_text}

results = senta.sentiment_classify(data=input_dict)

for result in results:

print(result['text'])

print(result['sentiment_label'])

print(result['sentiment_key'])

print(result['positive_probs'])

print(result['negative_probs'])5、预测结果:

其中数字2表示积极,1表示中性,0表示消极。后两排的概率表示PaddleHub_Senta_bilstm模型认为的积极和消极程度(以可能性作为评判标准)的深浅。

四、基于PaddleNLP的对话情绪分析升级版:

1、准备工作:

包括升级PaddleNLP到2.0版本和导入必要的库文件。

#首先,需要安装paddlenlp2.0。

!pip install paddlenlp#导入相关的模块

import paddle

import paddlenlp as ppnlp

from paddlenlp.data import Stack, Pad, Tuple

import paddle.nn.functional as F

import numpy as np

from functools import partial #partial()函数可以用来固定某些参数值,并返回一个新的callable对象2、数据集准备:

数据集为公开中文情感分析数据集ChnSenticorp。使用PaddleNLP的.datasets.ChnSentiCorp.get_datasets方法即可以加载该数据集。

#采用paddlenlp内置的ChnSentiCorp语料,该语料主要可以用来做情感分类。训练集用来训练模型,验证集用来选择模型,测试集用来评估模型泛化性能。

train_ds, dev_ds, test_ds = ppnlp.datasets.ChnSentiCorp.get_datasets(['train','dev','test'])

#获得标签列表

label_list = train_ds.get_labels()

#看看数据长什么样子,分别打印训练集、验证集、测试集的前3条数据。

print("训练集数据:{}\n".format(train_ds[0:3]))

print("验证集数据:{}\n".format(dev_ds[0:3]))

print("测试集数据:{}\n".format(test_ds[0:3]))

print("训练集样本个数:{}".format(len(train_ds)))

print("验证集样本个数:{}".format(len(dev_ds)))

print("测试集样本个数:{}".format(len(test_ds)))3、数据预处理:

#调用ppnlp.transformers.BertTokenizer进行数据处理,tokenizer可以把原始输入文本转化成模型model可接受的输入数据格式。

tokenizer = ppnlp.transformers.BertTokenizer.from_pretrained("bert-base-chinese")

#数据预处理

def convert_example(example,tokenizer,label_list,max_seq_length=256,is_test=False):

if is_test:

text = example

else:

text, label = example

#tokenizer.encode方法能够完成切分token,映射token ID以及拼接特殊token

encoded_inputs = tokenizer.encode(text=text, max_seq_len=max_seq_length)

input_ids = encoded_inputs["input_ids"]

segment_ids = encoded_inputs["segment_ids"]

if not is_test:

label_map = {}

for (i, l) in enumerate(label_list):

label_map[l] = i

label = label_map[label]

label = np.array([label], dtype="int64")

return input_ids, segment_ids, label

else:

return input_ids, segment_ids

#数据迭代器构造方法

def create_dataloader(dataset, trans_fn=None, mode='train', batch_size=1, use_gpu=False, pad_token_id=0, batchify_fn=None):

if trans_fn:

dataset = dataset.apply(trans_fn, lazy=True)

if mode == 'train' and use_gpu:

sampler = paddle.io.DistributedBatchSampler(dataset=dataset, batch_size=batch_size, shuffle=True)

else:

shuffle = True if mode == 'train' else False #如果不是训练集,则不打乱顺序

sampler = paddle.io.BatchSampler(dataset=dataset, batch_size=batch_size, shuffle=shuffle) #生成一个取样器

dataloader = paddle.io.DataLoader(dataset, batch_sampler=sampler, return_list=True, collate_fn=batchify_fn)

return dataloader

#使用partial()来固定convert_example函数的tokenizer, label_list, max_seq_length, is_test等参数值

trans_fn = partial(convert_example, tokenizer=tokenizer, label_list=label_list, max_seq_length=128, is_test=False)

batchify_fn = lambda samples, fn=Tuple(Pad(axis=0,pad_val=tokenizer.pad_token_id), Pad(axis=0, pad_val=tokenizer.pad_token_id), Stack(dtype="int64")):[data for data in fn(samples)]

#训练集迭代器

train_loader = create_dataloader(train_ds, mode='train', batch_size=64, batchify_fn=batchify_fn, trans_fn=trans_fn)

#验证集迭代器

dev_loader = create_dataloader(dev_ds, mode='dev', batch_size=64, batchify_fn=batchify_fn, trans_fn=trans_fn)

#测试集迭代器

test_loader = create_dataloader(test_ds, mode='test', batch_size=64, batchify_fn=batchify_fn, trans_fn=trans_fn)4、模型训练:

#加载预训练模型Bert用于文本分类任务的Fine-tune网络BertForSequenceClassification, 它在BERT模型后接了一个全连接层进行分类。

#由于本任务中的情感分类是二分类问题,设定num_classes为2

model = ppnlp.transformers.BertForSequenceClassification.from_pretrained("bert-base-chinese", num_classes=2)

#设置训练超参数

#学习率

learning_rate = 1e-5

#训练轮次

epochs = 20

#学习率预热比率

warmup_proption = 0.1

#权重衰减系数

weight_decay = 0.01

num_training_steps = len(train_loader) * epochs

num_warmup_steps = int(warmup_proption * num_training_steps)

def get_lr_factor(current_step):

if current_step < num_warmup_steps:

return float(current_step) / float(max(1, num_warmup_steps))

else:

return max(0.0,

float(num_training_steps - current_step) /

float(max(1, num_training_steps - num_warmup_steps)))

#学习率调度器

lr_scheduler = paddle.optimizer.lr.LambdaDecay(learning_rate, lr_lambda=lambda current_step: get_lr_factor(current_step))

#优化器

optimizer = paddle.optimizer.AdamW(

learning_rate=lr_scheduler,

parameters=model.parameters(),

weight_decay=weight_decay,

apply_decay_param_fun=lambda x: x in [

p.name for n, p in model.named_parameters()

if not any(nd in n for nd in ["bias", "norm"])

])

#损失函数

criterion = paddle.nn.loss.CrossEntropyLoss()

#评估函数

metric = paddle.metric.Accuracy()

#评估函数

def evaluate(model, criterion, metric, data_loader):

model.eval()

metric.reset()

losses = []

for batch in data_loader:

input_ids, segment_ids, labels = batch

logits = model(input_ids, segment_ids)

loss = criterion(logits, labels)

losses.append(loss.numpy())

correct = metric.compute(logits, labels)

metric.update(correct)

accu = metric.accumulate()

print("eval loss: %.5f, accu: %.5f" % (np.mean(losses), accu))

model.train()

metric.reset()

#开始训练

global_step = 0

for epoch in range(1, epochs + 1):

for step, batch in enumerate(train_loader, start=1): #从训练数据迭代器中取数据

input_ids, segment_ids, labels = batch

logits = model(input_ids, segment_ids)

loss = criterion(logits, labels) #计算损失

probs = F.softmax(logits, axis=1)

correct = metric.compute(probs, labels)

metric.update(correct)

acc = metric.accumulate()

global_step += 1

if global_step % 50 == 0 :

print("global step %d, epoch: %d, batch: %d, loss: %.5f, acc: %.5f" % (global_step, epoch, step, loss, acc))

loss.backward()

optimizer.step()

lr_scheduler.step()

optimizer.clear_gradients()

evaluate(model, criterion, metric, dev_loader)5、模型预测:

在倒数第六行的data变量列表中,同之前PaddleHub中提到的方法,可以有效地传入文本内容参数,以一条消息为单个字符串元素排列此一维列表。

def predict(model, data, tokenizer, label_map, batch_size=1):

examples = []

for text in data:

input_ids, segment_ids = convert_example(text, tokenizer, label_list=label_map.values(), max_seq_length=128, is_test=True)

examples.append((input_ids, segment_ids))

batchify_fn = lambda samples, fn=Tuple(Pad(axis=0, pad_val=tokenizer.pad_token_id), Pad(axis=0, pad_val=tokenizer.pad_token_id)): fn(samples)

batches = []

one_batch = []

for example in examples:

one_batch.append(example)

if len(one_batch) == batch_size:

batches.append(one_batch)

one_batch = []

if one_batch:

batches.append(one_batch)

results = []

model.eval()

for batch in batches:

input_ids, segment_ids = batchify_fn(batch)

input_ids = paddle.to_tensor(input_ids)

segment_ids = paddle.to_tensor(segment_ids)

logits = model(input_ids, segment_ids)

probs = F.softmax(logits, axis=1)

idx = paddle.argmax(probs, axis=1).numpy()

idx = idx.tolist()

labels = [label_map[i] for i in idx]

results.extend(labels)

return results

#待预测文本变量

data = ['有点东西啊', '这个老师讲课水平挺高的', '你在干什么']

label_map = {0: '负向情绪', 1: '正向情绪'}

predictions = predict(model, data, tokenizer, label_map, batch_size=32)

for idx, text in enumerate(data):

print('预测文本: {} \n情绪标签: {}'.format(text, predictions[idx]))6、预测结果:

预测结果类同前PaddleHub部分,此略,程序会直接给出这句话是更偏向积极的正向情绪还是消极的负向情绪。

五、PaddlePaddle层从头搭建的自然语言情绪分析(ERNIE模型):

效果上,我们基于百度自建测试集(包含闲聊、客服)和nlpcc2014微博情绪数据集,进行评测,效果如下表所示,此外我们还开源了百度基于海量数据训练好的模型,该模型在聊天对话语料上fine-tune之后,可以得到更好的效果。

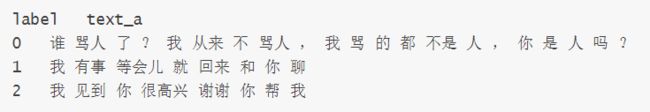

对话情绪识别任务输入是一段用户文本,输出是检测到的情绪类别,包括消极、积极、中性,这是一个经典的短文本三分类任务。数据集链接:https://aistudio.baidu.com/aistudio/datasetdetail/9740

数据集解压后生成data目录,data目录下有训练集数据(train.tsv)、开发集数据(dev.tsv)、测试集数据(test.tsv)、 待预测数据(infer.tsv)以及对应词典(vocab.txt)

训练、预测、评估使用的数据示例如下,数据由两列组成,以制表符('\t')分隔,第一列是情绪分类的类别(0表示消极;1表示中性;2表示积极),第二列是以空格分词的中文文本:

ERNIE:百度自研基于海量数据和先验知识训练的通用文本语义表示模型,并基于此在对话情绪分类数据集上进行 fine-tune 获得。

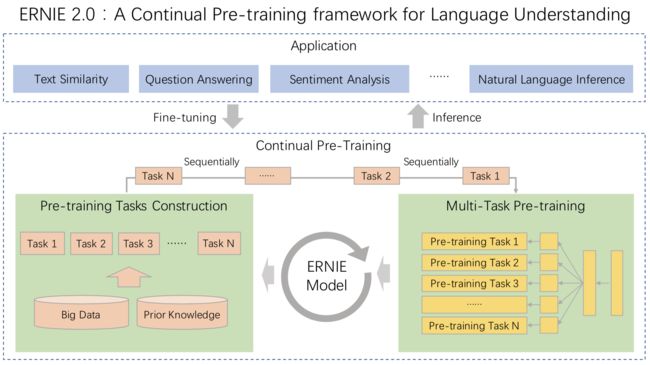

ERNIE 于 2019 年 3 月发布,通过建模海量数据中的词、实体及实体关系,学习真实世界的语义知识。相较于 BERT 学习原始语言信号,ERNIE 直接对先验语义知识单元进行建模,增强了模型语义表示能力。

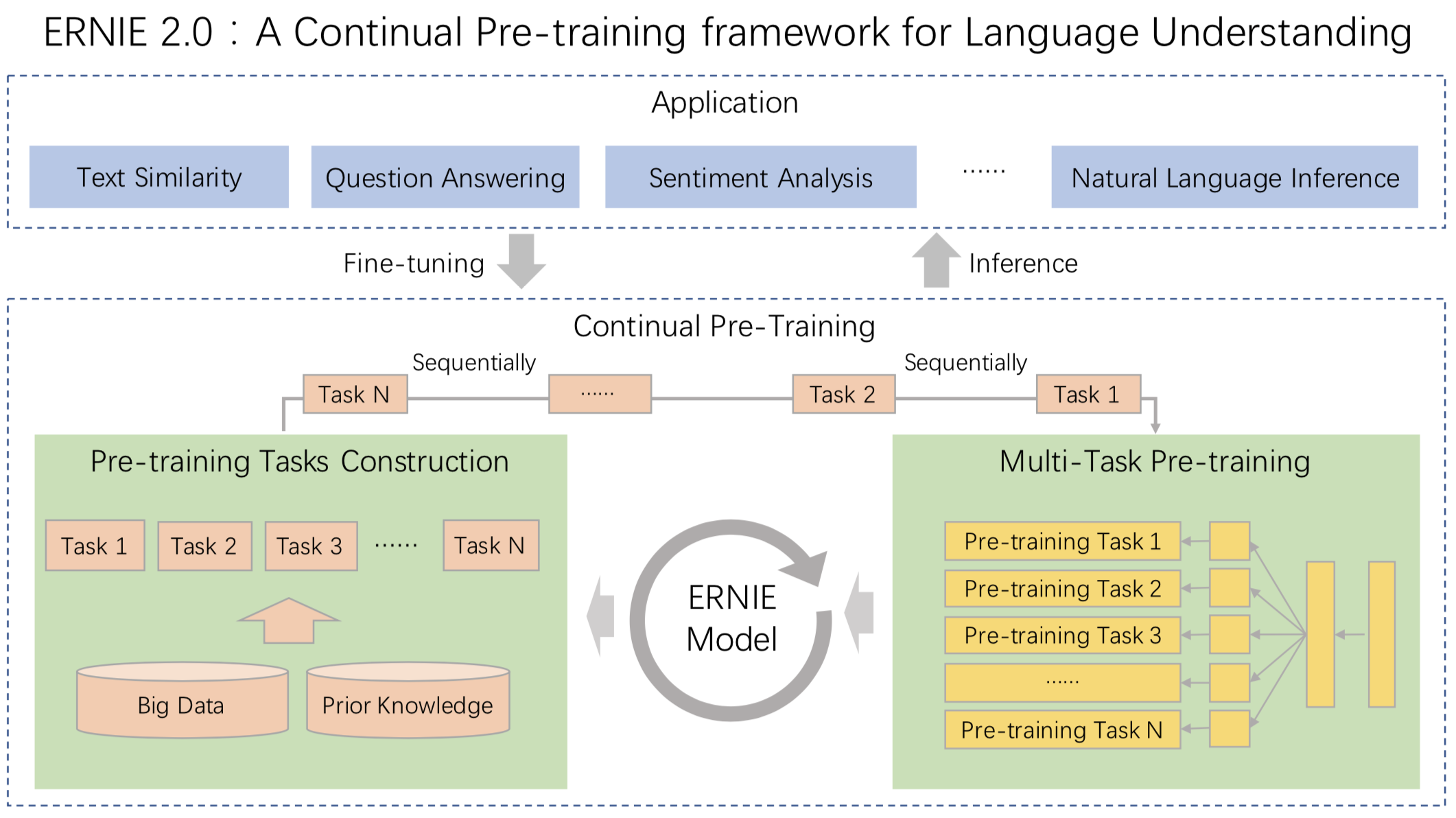

同年 7 月,百度发布了 ERNIE 2.0。ERNIE 2.0 是基于持续学习的语义理解预训练框架,使用多任务学习增量式构建预训练任务。ERNIE 2.0 中,新构建的预训练任务类型可以无缝的加入训练框架,持续的进行语义理解学习。 通过新增的实体预测、句子因果关系判断、文章句子结构重建等语义任务,ERNIE 2.0 语义理解预训练模型从训练数据中获取了词法、句法、语义等多个维度的自然语言信息,极大地增强了通用语义表示能力,示意图如下:

4 个 cell 定义 ErnieModel 中使用的基本网络结构,包括:

- multi_head_attention

- positionwise_feed_forward

- pre_post_process_layer:增加 residual connection, layer normalization 和 droput,在 multi_head_attention 和 positionwise_feed_forward 前后使用

- encoder_layer:调用上述三种结构生成 encoder 层

- encoder:堆叠 encoder_layer 生成完整的 encoder

关于 multi_head_attention 和 positionwise_feed_forward 的介绍可以参考:The Annotated Transformer

3 个 cell 定义分词代码类,包括:

- FullTokenizer:完整的分词,在数据读取代码中使用,调用 BasicTokenizer 和 WordpieceTokenizer 实现

- BasicTokenizer:基本分词,包括标点划分、小写转换等

- WordpieceTokenizer:单词划分

4 个 cell 定义数据读取器和预处理代码,包括:

- BaseReader:数据读取器基类

- ClassifyReader:用于分类模型的数据读取器,重写 _readtsv 和 _pad_batch_records 方法

- pad_batch_data:数据预处理,给数据加 padding,并生成位置数据和 mask

- ernie_pyreader:生成训练、验证和预测使用的 pyreader

数据集和ERNIE网络相关配置:

# 数据集相关配置

data_config = {

'data_dir': 'data/data9740/data', # Directory path to training data.

'vocab_path': 'pretrained_model/ernie_finetune/vocab.txt', # Vocabulary path.

'batch_size': 32, # Total examples' number in batch for training.

'random_seed': 0, # Random seed.

'num_labels': 3, # label number

'max_seq_len': 512, # Number of words of the longest seqence.

'train_set': 'data/data9740/data/test.tsv', # Path to training data.

'test_set': 'data/data9740/data/test.tsv', # Path to test data.

'dev_set': 'data/data9740/data/dev.tsv', # Path to validation data.

'infer_set': 'data/data9740/data/infer.tsv', # Path to infer data.

'label_map_config': None, # label_map_path

'do_lower_case': True, # Whether to lower case the input text. Should be True for uncased models and False for cased models.

}

# Ernie 网络结构相关配置

ernie_net_config = {

"attention_probs_dropout_prob": 0.1,

"hidden_act": "relu",

"hidden_dropout_prob": 0.1,

"hidden_size": 768,

"initializer_range": 0.02,

"max_position_embeddings": 513,

"num_attention_heads": 12,

"num_hidden_layers": 12,

"type_vocab_size": 2,

"vocab_size": 18000,

}训练阶段相关配置:

train_config = {

'init_checkpoint': 'pretrained_model/ernie_finetune/params',

'output_dir': 'train_model',

'epoch': 10,

'save_steps': 100,

'validation_steps': 100,

'lr': 0.00002,

'skip_steps': 10,

'verbose': False,

'use_cuda': True,

}预测阶段相关配置:

infer_config = {

'init_checkpoint': 'train_model',

'use_cuda': True,

}具体实现的详细代码:

#!/usr/bin/env python

# coding: utf-8

# ### 一、项目背景介绍

# 对话情绪识别(Emotion Detection,简称EmoTect),专注于识别智能对话场景中用户的情绪,针对智能对话场景中的用户文本,自动判断该文本的情绪类别并给出相应的置信度,情绪类型分为积极、消极、中性。

#

# 对话情绪识别适用于聊天、客服等多个场景,能够帮助企业更好地把握对话质量、改善产品的用户交互体验,也能分析客服服务质量、降低人工质检成本。可通过 AI开放平台-对话情绪识别 线上体验。

#

# 效果上,我们基于百度自建测试集(包含闲聊、客服)和nlpcc2014微博情绪数据集,进行评测,效果如下表所示,此外我们还开源了百度基于海量数据训练好的模型,该模型在聊天对话语料上fine-tune之后,可以得到更好的效果。

#

#

# | 模型 | 闲聊 | 客服 | 微博 |

# | -------- | -------- | -------- | -------- |

# | BOW | 90.2% | 87.6% | 74.2% |

# | LSTM | 91.4% | 90.1% | 73.8% |

# | Bi-LSTM | 91.2% | 89.9% | 73.6% |

# | CNN | 90.8% | 90.7% | 76.3% |

# | TextCNN | 91.1% | 91.0% | 76.8% |

# | BERT | 93.6% | 92.3% | 78.6% |

# | ERNIE | 94.4% | 94.0% | 80.6% |

# ### 二、数据集介绍

#

# 对话情绪识别任务输入是一段用户文本,输出是检测到的情绪类别,包括消极、积极、中性,这是一个经典的短文本三分类任务。

#

# 数据集解压后生成data目录,data目录下有训练集数据(train.tsv)、开发集数据(dev.tsv)、测试集数据(test.tsv)、 待预测数据(infer.tsv)以及对应词典(vocab.txt)

# 训练、预测、评估使用的数据示例如下,数据由两列组成,以制表符('\t')分隔,第一列是情绪分类的类别(0表示消极;1表示中性;2表示积极),第二列是以空格分词的中文文本:

#

# label text_a

# 0 谁 骂人 了 ? 我 从来 不 骂人 , 我 骂 的 都 不是 人 , 你 是 人 吗 ?

# 1 我 有事 等会儿 就 回来 和 你 聊

# 2 我 见到 你 很高兴 谢谢 你 帮 我

# In[1]:

# 解压数据集

get_ipython().system('cd /home/aistudio/data/data9740 && unzip -qo 对话情绪识别.zip')

# In[2]:

# 各种引用库

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import io

import os

import six

import sys

import time

import random

import string

import logging

import argparse

import collections

import unicodedata

from functools import partial

from collections import namedtuple

import multiprocessing

import paddle

import paddle.fluid as fluid

import paddle.fluid.layers as layers

import numpy as np

# In[3]:

# 统一的 logger 配置

logger = None

def init_log_config():

"""

初始化日志相关配置

:return:

"""

global logger

logger = logging.getLogger()

logger.setLevel(logging.INFO)

log_path = os.path.join(os.getcwd(), 'logs')

if not os.path.exists(log_path):

os.makedirs(log_path)

log_name = os.path.join(log_path, 'train.log')

sh = logging.StreamHandler()

fh = logging.FileHandler(log_name, mode='w')

fh.setLevel(logging.DEBUG)

formatter = logging.Formatter("%(asctime)s - %(filename)s[line:%(lineno)d] - %(levelname)s: %(message)s")

fh.setFormatter(formatter)

sh.setFormatter(formatter)

logger.handlers = []

logger.addHandler(sh)

logger.addHandler(fh)

# In[4]:

# util

def print_arguments(args):

"""

打印参数

"""

logger.info('----------- Configuration Arguments -----------')

for key in args.keys():

logger.info('%s: %s' % (key, args[key]))

logger.info('------------------------------------------------')

def init_checkpoint(exe, init_checkpoint_path, main_program):

"""

加载缓存模型

"""

assert os.path.exists(

init_checkpoint_path), "[%s] cann't be found." % init_checkpoint_path

def existed_persitables(var):

"""

If existed presitabels

"""

if not fluid.io.is_persistable(var):

return False

return os.path.exists(os.path.join(init_checkpoint_path, var.name))

fluid.io.load_vars(

exe,

init_checkpoint_path,

main_program=main_program,

predicate=existed_persitables)

logger.info("Load model from {}".format(init_checkpoint_path))

def csv_reader(fd, delimiter='\t'):

"""

csv 文件读取

"""

def gen():

for i in fd:

slots = i.rstrip('\n').split(delimiter)

if len(slots) == 1:

yield slots,

else:

yield slots

return gen()

# ### 三、网络结构构建

# **ERNIE**:百度自研基于海量数据和先验知识训练的通用文本语义表示模型,并基于此在对话情绪分类数据集上进行 fine-tune 获得。

#

# **ERNIE** 于 2019 年 3 月发布,通过建模海量数据中的词、实体及实体关系,学习真实世界的语义知识。相较于 BERT 学习原始语言信号,**ERNIE** 直接对先验语义知识单元进行建模,增强了模型语义表示能力。

# 同年 7 月,百度发布了 **ERNIE 2.0**。**ERNIE 2.0** 是基于持续学习的语义理解预训练框架,使用多任务学习增量式构建预训练任务。**ERNIE 2.0** 中,新构建的预训练任务类型可以无缝的加入训练框架,持续的进行语义理解学习。 通过新增的实体预测、句子因果关系判断、文章句子结构重建等语义任务,**ERNIE 2.0** 语义理解预训练模型从训练数据中获取了词法、句法、语义等多个维度的自然语言信息,极大地增强了通用语义表示能力,示意图如下:

#  #

# 参考资料:

# 1. [ERNIE: Enhanced Representation through Knowledge Integration](https://arxiv.org/abs/1904.09223)

# 2. [ERNIE 2.0: A Continual Pre-training Framework for Language Understanding](https://arxiv.org/abs/1907.12412)

# 3. [ERNIE预览:百度 知识增强语义表示模型ERNIE](https://www.jianshu.com/p/fb66f444bb8c)

# 4. [ERNIE 2.0 GitHub](https://github.com/PaddlePaddle/ERNIE)

# #### 3.1 ERNIE 模型定义

# class ErnieModel 定义 ERNIE encoder 网络结构

# **输入** src_ids、position_ids、sentence_ids 和 input_mask

# **输出** sequence_output 和 pooled_output

# In[5]:

class ErnieModel(object):

"""Ernie模型定义"""

def __init__(self,

src_ids,

position_ids,

sentence_ids,

input_mask,

config,

weight_sharing=True,

use_fp16=False):

# Ernie 相关参数

self._emb_size = config['hidden_size']

self._n_layer = config['num_hidden_layers']

self._n_head = config['num_attention_heads']

self._voc_size = config['vocab_size']

self._max_position_seq_len = config['max_position_embeddings']

self._sent_types = config['type_vocab_size']

self._hidden_act = config['hidden_act']

self._prepostprocess_dropout = config['hidden_dropout_prob']

self._attention_dropout = config['attention_probs_dropout_prob']

self._weight_sharing = weight_sharing

self._word_emb_name = "word_embedding"

self._pos_emb_name = "pos_embedding"

self._sent_emb_name = "sent_embedding"

self._dtype = "float16" if use_fp16 else "float32"

# Initialize all weigths by truncated normal initializer, and all biases

# will be initialized by constant zero by default.

self._param_initializer = fluid.initializer.TruncatedNormal(

scale=config['initializer_range'])

self._build_model(src_ids, position_ids, sentence_ids, input_mask)

def _build_model(self, src_ids, position_ids, sentence_ids, input_mask):

# padding id in vocabulary must be set to 0

emb_out = fluid.layers.embedding(

input=src_ids,

size=[self._voc_size, self._emb_size],

dtype=self._dtype,

param_attr=fluid.ParamAttr(

name=self._word_emb_name, initializer=self._param_initializer),

is_sparse=False)

position_emb_out = fluid.layers.embedding(

input=position_ids,

size=[self._max_position_seq_len, self._emb_size],

dtype=self._dtype,

param_attr=fluid.ParamAttr(

name=self._pos_emb_name, initializer=self._param_initializer))

sent_emb_out = fluid.layers.embedding(

sentence_ids,

size=[self._sent_types, self._emb_size],

dtype=self._dtype,

param_attr=fluid.ParamAttr(

name=self._sent_emb_name, initializer=self._param_initializer))

emb_out = emb_out + position_emb_out

emb_out = emb_out + sent_emb_out

emb_out = pre_process_layer(

emb_out, 'nd', self._prepostprocess_dropout, name='pre_encoder')

if self._dtype == "float16":

input_mask = fluid.layers.cast(x=input_mask, dtype=self._dtype)

self_attn_mask = fluid.layers.matmul(

x=input_mask, y=input_mask, transpose_y=True)

self_attn_mask = fluid.layers.scale(

x=self_attn_mask, scale=10000.0, bias=-1.0, bias_after_scale=False)

n_head_self_attn_mask = fluid.layers.stack(

x=[self_attn_mask] * self._n_head, axis=1)

n_head_self_attn_mask.stop_gradient = True

self._enc_out = encoder(

enc_input=emb_out,

attn_bias=n_head_self_attn_mask,

n_layer=self._n_layer,

n_head=self._n_head,

d_key=self._emb_size // self._n_head,

d_value=self._emb_size // self._n_head,

d_model=self._emb_size,

d_inner_hid=self._emb_size * 4,

prepostprocess_dropout=self._prepostprocess_dropout,

attention_dropout=self._attention_dropout,

relu_dropout=0,

hidden_act=self._hidden_act,

preprocess_cmd="",

postprocess_cmd="dan",

param_initializer=self._param_initializer,

name='encoder')

def get_sequence_output(self):

"""Get embedding of each token for squence labeling"""

return self._enc_out

def get_pooled_output(self):

"""Get the first feature of each sequence for classification"""

next_sent_feat = fluid.layers.slice(

input=self._enc_out, axes=[1], starts=[0], ends=[1])

next_sent_feat = fluid.layers.fc(

input=next_sent_feat,

size=self._emb_size,

act="tanh",

param_attr=fluid.ParamAttr(

name="pooled_fc.w_0", initializer=self._param_initializer),

bias_attr="pooled_fc.b_0")

return next_sent_feat

# #### 3.2 基本网络结构定义

# 以下 4 个 cell 定义 ErnieModel 中使用的基本网络结构,包括:

# 1. multi_head_attention

# 2. positionwise_feed_forward

# 3. pre_post_process_layer:增加 residual connection, layer normalization 和 droput,在 multi_head_attention 和 positionwise_feed_forward 前后使用

# 4. encoder_layer:调用上述三种结构生成 encoder 层

# 5. encoder:堆叠 encoder_layer 生成完整的 encoder

#

# 关于 multi_head_attention 和 positionwise_feed_forward 的介绍可以参考:[The Annotated Transformer](http://nlp.seas.harvard.edu/2018/04/03/attention.html)

# In[6]:

def multi_head_attention(queries, keys, values, attn_bias, d_key, d_value, d_model, n_head=1, dropout_rate=0.,

cache=None, param_initializer=None, name='multi_head_att'):

"""

Multi-Head Attention. Note that attn_bias is added to the logit before

computing softmax activiation to mask certain selected positions so that

they will not considered in attention weights.

"""

keys = queries if keys is None else keys

values = keys if values is None else values

if not (len(queries.shape) == len(keys.shape) == len(values.shape) == 3):

raise ValueError(

"Inputs: quries, keys and values should all be 3-D tensors.")

def __compute_qkv(queries, keys, values, n_head, d_key, d_value):

"""

Add linear projection to queries, keys, and values.

"""

q = layers.fc(input=queries,

size=d_key * n_head,

num_flatten_dims=2,

param_attr=fluid.ParamAttr(

name=name + '_query_fc.w_0',

initializer=param_initializer),

bias_attr=name + '_query_fc.b_0')

k = layers.fc(input=keys,

size=d_key * n_head,

num_flatten_dims=2,

param_attr=fluid.ParamAttr(

name=name + '_key_fc.w_0',

initializer=param_initializer),

bias_attr=name + '_key_fc.b_0')

v = layers.fc(input=values,

size=d_value * n_head,

num_flatten_dims=2,

param_attr=fluid.ParamAttr(

name=name + '_value_fc.w_0',

initializer=param_initializer),

bias_attr=name + '_value_fc.b_0')

return q, k, v

def __split_heads(x, n_head):

"""

Reshape the last dimension of inpunt tensor x so that it becomes two

dimensions and then transpose. Specifically, input a tensor with shape

[bs, max_sequence_length, n_head * hidden_dim] then output a tensor

with shape [bs, n_head, max_sequence_length, hidden_dim].

"""

hidden_size = x.shape[-1]

# The value 0 in shape attr means copying the corresponding dimension

# size of the input as the output dimension size.

reshaped = layers.reshape(

x=x, shape=[0, 0, n_head, hidden_size // n_head], inplace=True)

# permuate the dimensions into:

# [batch_size, n_head, max_sequence_len, hidden_size_per_head]

return layers.transpose(x=reshaped, perm=[0, 2, 1, 3])

def __combine_heads(x):

"""

Transpose and then reshape the last two dimensions of inpunt tensor x

so that it becomes one dimension, which is reverse to __split_heads.

"""

if len(x.shape) == 3:

return x

if len(x.shape) != 4:

raise ValueError("Input(x) should be a 4-D Tensor.")

trans_x = layers.transpose(x, perm=[0, 2, 1, 3])

# The value 0 in shape attr means copying the corresponding dimension

# size of the input as the output dimension size.

return layers.reshape(

x=trans_x,

shape=[0, 0, trans_x.shape[2] * trans_x.shape[3]],

inplace=True)

def scaled_dot_product_attention(q, k, v, attn_bias, d_key, dropout_rate):

"""

Scaled Dot-Product Attention

"""

scaled_q = layers.scale(x=q, scale=d_key**-0.5)

product = layers.matmul(x=scaled_q, y=k, transpose_y=True)

if attn_bias:

product += attn_bias

weights = layers.softmax(product)

if dropout_rate:

weights = layers.dropout(

weights,

dropout_prob=dropout_rate,

dropout_implementation="upscale_in_train",

is_test=False)

out = layers.matmul(weights, v)

return out

q, k, v = __compute_qkv(queries, keys, values, n_head, d_key, d_value)

if cache is not None: # use cache and concat time steps

# Since the inplace reshape in __split_heads changes the shape of k and

# v, which is the cache input for next time step, reshape the cache

# input from the previous time step first.

k = cache["k"] = layers.concat(

[layers.reshape(

cache["k"], shape=[0, 0, d_model]), k], axis=1)

v = cache["v"] = layers.concat(

[layers.reshape(

cache["v"], shape=[0, 0, d_model]), v], axis=1)

q = __split_heads(q, n_head)

k = __split_heads(k, n_head)

v = __split_heads(v, n_head)

ctx_multiheads = scaled_dot_product_attention(q, k, v, attn_bias, d_key,

dropout_rate)

out = __combine_heads(ctx_multiheads)

# Project back to the model size.

proj_out = layers.fc(input=out,

size=d_model,

num_flatten_dims=2,

param_attr=fluid.ParamAttr(

name=name + '_output_fc.w_0',

initializer=param_initializer),

bias_attr=name + '_output_fc.b_0')

return proj_out

# In[7]:

def positionwise_feed_forward(x, d_inner_hid, d_hid, dropout_rate, hidden_act, param_initializer=None, name='ffn'):

"""

Position-wise Feed-Forward Networks.

This module consists of two linear transformations with a ReLU activation

in between, which is applied to each position separately and identically.

"""

hidden = layers.fc(input=x, size=d_inner_hid, num_flatten_dims=2, act=hidden_act,

param_attr=fluid.ParamAttr(

name=name + '_fc_0.w_0',

initializer=param_initializer),

bias_attr=name + '_fc_0.b_0')

if dropout_rate:

hidden = layers.dropout(hidden, dropout_prob=dropout_rate, dropout_implementation="upscale_in_train", is_test=False)

out = layers.fc(input=hidden, size=d_hid, num_flatten_dims=2,

param_attr=fluid.ParamAttr(

name=name + '_fc_1.w_0', initializer=param_initializer),

bias_attr=name + '_fc_1.b_0')

return out

# In[8]:

def pre_post_process_layer(prev_out, out, process_cmd, dropout_rate=0.,

name=''):

"""

Add residual connection, layer normalization and droput to the out tensor

optionally according to the value of process_cmd.

This will be used before or after multi-head attention and position-wise

feed-forward networks.

"""

for cmd in process_cmd:

if cmd == "a": # add residual connection

out = out + prev_out if prev_out else out

elif cmd == "n": # add layer normalization

out_dtype = out.dtype

if out_dtype == fluid.core.VarDesc.VarType.FP16:

out = layers.cast(x=out, dtype="float32")

out = layers.layer_norm(

out,

begin_norm_axis=len(out.shape) - 1,

param_attr=fluid.ParamAttr(

name=name + '_layer_norm_scale',

initializer=fluid.initializer.Constant(1.)),

bias_attr=fluid.ParamAttr(

name=name + '_layer_norm_bias',

initializer=fluid.initializer.Constant(0.)))

if out_dtype == fluid.core.VarDesc.VarType.FP16:

out = layers.cast(x=out, dtype="float16")

elif cmd == "d": # add dropout

if dropout_rate:

out = layers.dropout(

out,

dropout_prob=dropout_rate,

dropout_implementation="upscale_in_train",

is_test=False)

return out

pre_process_layer = partial(pre_post_process_layer, None)

post_process_layer = pre_post_process_layer

# In[9]:

def encoder_layer(enc_input, attn_bias, n_head, d_key, d_value, d_model, d_inner_hid, prepostprocess_dropout,

attention_dropout, relu_dropout, hidden_act, preprocess_cmd="n", postprocess_cmd="da",

param_initializer=None, name=''):

"""The encoder layers that can be stacked to form a deep encoder.

This module consits of a multi-head (self) attention followed by

position-wise feed-forward networks and both the two components companied

with the post_process_layer to add residual connection, layer normalization

and droput.

"""

attn_output = multi_head_attention(

pre_process_layer(enc_input, preprocess_cmd, prepostprocess_dropout, name=name + '_pre_att'),

None, None, attn_bias, d_key, d_value, d_model, n_head, attention_dropout,

param_initializer=param_initializer, name=name + '_multi_head_att')

attn_output = post_process_layer(enc_input, attn_output, postprocess_cmd, prepostprocess_dropout, name=name + '_post_att')

ffd_output = positionwise_feed_forward(

pre_process_layer(attn_output, preprocess_cmd, prepostprocess_dropout, name=name + '_pre_ffn'),

d_inner_hid, d_model, relu_dropout, hidden_act, param_initializer=param_initializer,

name=name + '_ffn')

return post_process_layer(attn_output, ffd_output, postprocess_cmd, prepostprocess_dropout, name=name + '_post_ffn')

def encoder(enc_input, attn_bias, n_layer, n_head, d_key, d_value, d_model, d_inner_hid, prepostprocess_dropout,

attention_dropout, relu_dropout, hidden_act, preprocess_cmd="n", postprocess_cmd="da",

param_initializer=None, name=''):

"""

The encoder is composed of a stack of identical layers returned by calling

encoder_layer.

"""

for i in range(n_layer):

enc_output = encoder_layer(enc_input, attn_bias, n_head, d_key, d_value, d_model, d_inner_hid,

prepostprocess_dropout, attention_dropout, relu_dropout, hidden_act, preprocess_cmd,

postprocess_cmd, param_initializer=param_initializer, name=name + '_layer_' + str(i))

enc_input = enc_output

enc_output = pre_process_layer(enc_output, preprocess_cmd, prepostprocess_dropout, name="post_encoder")

return enc_output

# #### 3.3 编码器 和 分类器 定义

# 以下 cell 定义 encoder 和 classification 的组织结构:

# 1. ernie_encoder:根据 ErnieModel 组织输出 embeddings

# 2. create_ernie_model:定义分类网络,以 embeddings 为输入,使用全连接网络 + softmax 做分类

# In[10]:

def ernie_encoder(ernie_inputs, ernie_config):

"""return sentence embedding and token embeddings"""

ernie = ErnieModel(

src_ids=ernie_inputs["src_ids"],

position_ids=ernie_inputs["pos_ids"],

sentence_ids=ernie_inputs["sent_ids"],

input_mask=ernie_inputs["input_mask"],

config=ernie_config)

enc_out = ernie.get_sequence_output()

unpad_enc_out = fluid.layers.sequence_unpad(

enc_out, length=ernie_inputs["seq_lens"])

cls_feats = ernie.get_pooled_output()

embeddings = {

"sentence_embeddings": cls_feats,

"token_embeddings": unpad_enc_out,

}

for k, v in embeddings.items():

v.persistable = True

return embeddings

def create_ernie_model(args,

embeddings,

labels,

is_prediction=False):

"""

Create Model for sentiment classification based on ERNIE encoder

"""

sentence_embeddings = embeddings["sentence_embeddings"]

token_embeddings = embeddings["token_embeddings"]

cls_feats = fluid.layers.dropout(

x=sentence_embeddings,

dropout_prob=0.1,

dropout_implementation="upscale_in_train")

logits = fluid.layers.fc(

input=cls_feats,

size=args['num_labels'],

param_attr=fluid.ParamAttr(

name="cls_out_w",

initializer=fluid.initializer.TruncatedNormal(scale=0.02)),

bias_attr=fluid.ParamAttr(

name="cls_out_b", initializer=fluid.initializer.Constant(0.)))

ce_loss, probs = fluid.layers.softmax_with_cross_entropy(

logits=logits, label=labels, return_softmax=True)

if is_prediction:

return probs

loss = fluid.layers.mean(x=ce_loss)

num_seqs = fluid.layers.create_tensor(dtype='int64')

accuracy = fluid.layers.accuracy(input=probs, label=labels, total=num_seqs)

return loss, accuracy, num_seqs

# #### 3.4 分词代码

# 以下 3 个 cell 定义分词代码类,包括:

# 1. FullTokenizer:完整的分词,在数据读取代码中使用,调用 BasicTokenizer 和 WordpieceTokenizer 实现

# 2. BasicTokenizer:基本分词,包括标点划分、小写转换等

# 3. WordpieceTokenizer:单词划分

# In[11]:

class FullTokenizer(object):

"""Runs end-to-end tokenziation."""

def __init__(self, vocab_file, do_lower_case=True):

self.vocab = load_vocab(vocab_file)

self.inv_vocab = {v: k for k, v in self.vocab.items()}

self.basic_tokenizer = BasicTokenizer(do_lower_case=do_lower_case)

self.wordpiece_tokenizer = WordpieceTokenizer(vocab=self.vocab)

def tokenize(self, text):

split_tokens = []

for token in self.basic_tokenizer.tokenize(text):

for sub_token in self.wordpiece_tokenizer.tokenize(token):

split_tokens.append(sub_token)

return split_tokens

def convert_tokens_to_ids(self, tokens):

return convert_by_vocab(self.vocab, tokens)

# In[12]:

class BasicTokenizer(object):

"""Runs basic tokenization (punctuation splitting, lower casing, etc.)."""

def __init__(self, do_lower_case=True):

"""Constructs a BasicTokenizer.

Args:

do_lower_case: Whether to lower case the input.

"""

self.do_lower_case = do_lower_case

def tokenize(self, text):

"""Tokenizes a piece of text."""

text = convert_to_unicode(text)

text = self._clean_text(text)

# This was added on November 1st, 2018 for the multilingual and Chinese

# models. This is also applied to the English models now, but it doesn't

# matter since the English models were not trained on any Chinese data

# and generally don't have any Chinese data in them (there are Chinese

# characters in the vocabulary because Wikipedia does have some Chinese

# words in the English Wikipedia.).

text = self._tokenize_chinese_chars(text)

orig_tokens = whitespace_tokenize(text)

split_tokens = []

for token in orig_tokens:

if self.do_lower_case:

token = token.lower()

token = self._run_strip_accents(token)

split_tokens.extend(self._run_split_on_punc(token))

output_tokens = whitespace_tokenize(" ".join(split_tokens))

return output_tokens

def _run_strip_accents(self, text):

"""Strips accents from a piece of text."""

text = unicodedata.normalize("NFD", text)

output = []

for char in text:

cat = unicodedata.category(char)

if cat == "Mn":

continue

output.append(char)

return "".join(output)

def _run_split_on_punc(self, text):

"""Splits punctuation on a piece of text."""

chars = list(text)

i = 0

start_new_word = True

output = []

while i < len(chars):

char = chars[i]

if _is_punctuation(char):

output.append([char])

start_new_word = True

else:

if start_new_word:

output.append([])

start_new_word = False

output[-1].append(char)

i += 1

return ["".join(x) for x in output]

def _tokenize_chinese_chars(self, text):

"""Adds whitespace around any CJK character."""

output = []

for char in text:

cp = ord(char)

if self._is_chinese_char(cp):

output.append(" ")

output.append(char)

output.append(" ")

else:

output.append(char)

return "".join(output)

def _is_chinese_char(self, cp):

"""Checks whether CP is the codepoint of a CJK character."""

# This defines a "chinese character" as anything in the CJK Unicode block:

# https://en.wikipedia.org/wiki/CJK_Unified_Ideographs_(Unicode_block)

#

# Note that the CJK Unicode block is NOT all Japanese and Korean characters,

# despite its name. The modern Korean Hangul alphabet is a different block,

# as is Japanese Hiragana and Katakana. Those alphabets are used to write

# space-separated words, so they are not treated specially and handled

# like the all of the other languages.

if ((cp >= 0x4E00 and cp <= 0x9FFF) or #

(cp >= 0x3400 and cp <= 0x4DBF) or #

(cp >= 0x20000 and cp <= 0x2A6DF) or #

(cp >= 0x2A700 and cp <= 0x2B73F) or #

(cp >= 0x2B740 and cp <= 0x2B81F) or #

(cp >= 0x2B820 and cp <= 0x2CEAF) or

(cp >= 0xF900 and cp <= 0xFAFF) or #

(cp >= 0x2F800 and cp <= 0x2FA1F)): #

return True

return False

def _clean_text(self, text):

"""Performs invalid character removal and whitespace cleanup on text."""

output = []

for char in text:

cp = ord(char)

if cp == 0 or cp == 0xfffd or _is_control(char):

continue

if _is_whitespace(char):

output.append(" ")

else:

output.append(char)

return "".join(output)

# In[13]:

class WordpieceTokenizer(object):

"""Runs WordPiece tokenziation."""

def __init__(self, vocab, unk_token="[UNK]", max_input_chars_per_word=100):

self.vocab = vocab

self.unk_token = unk_token

self.max_input_chars_per_word = max_input_chars_per_word

def tokenize(self, text):

"""Tokenizes a piece of text into its word pieces.

This uses a greedy longest-match-first algorithm to perform tokenization

using the given vocabulary.

For example:

input = "unaffable"

output = ["un", "##aff", "##able"]

Args:

text: A single token or whitespace separated tokens. This should have

already been passed through `BasicTokenizer.

Returns:

A list of wordpiece tokens.

"""

text = convert_to_unicode(text)

output_tokens = []

for token in whitespace_tokenize(text):

chars = list(token)

if len(chars) > self.max_input_chars_per_word:

output_tokens.append(self.unk_token)

continue

is_bad = False

start = 0

sub_tokens = []

while start < len(chars):

end = len(chars)

cur_substr = None

while start < end:

substr = "".join(chars[start:end])

if start > 0:

substr = "##" + substr

if substr in self.vocab:

cur_substr = substr

break

end -= 1

if cur_substr is None:

is_bad = True

break

sub_tokens.append(cur_substr)

start = end

if is_bad:

output_tokens.append(self.unk_token)

else:

output_tokens.extend(sub_tokens)

return output_tokens

# #### 3.5 分词辅助代码

# 以下 cell 定义分词中的辅助性代码,包括 convert_to_unicode、whitespace_tokenize 等。

# In[14]:

def convert_to_unicode(text):

"""Converts `text` to Unicode (if it's not already), assuming utf-8 input."""

if six.PY3:

if isinstance(text, str):

return text

elif isinstance(text, bytes):

return text.decode("utf-8", "ignore")

else:

raise ValueError("Unsupported string type: %s" % (type(text)))

elif six.PY2:

if isinstance(text, str):

return text.decode("utf-8", "ignore")

elif isinstance(text, unicode):

return text

else:

raise ValueError("Unsupported string type: %s" % (type(text)))

else:

raise ValueError("Not running on Python2 or Python 3?")

def load_vocab(vocab_file):

"""Loads a vocabulary file into a dictionary."""

vocab = collections.OrderedDict()

fin = io.open(vocab_file, encoding="utf8")

for num, line in enumerate(fin):

items = convert_to_unicode(line.strip()).split("\t")

if len(items) > 2:

break

token = items[0]

index = items[1] if len(items) == 2 else num

token = token.strip()

vocab[token] = int(index)

return vocab

def convert_by_vocab(vocab, items):

"""Converts a sequence of [tokens|ids] using the vocab."""

output = []

for item in items:

output.append(vocab[item])

return output

def whitespace_tokenize(text):

"""Runs basic whitespace cleaning and splitting on a peice of text."""

text = text.strip()

if not text:

return []

tokens = text.split()

return tokens

def _is_whitespace(char):

"""Checks whether `chars` is a whitespace character."""

# \t, \n, and \r are technically contorl characters but we treat them

# as whitespace since they are generally considered as such.

if char == " " or char == "\t" or char == "\n" or char == "\r":

return True

cat = unicodedata.category(char)

if cat == "Zs":

return True

return False

def _is_control(char):

"""Checks whether `chars` is a control character."""

# These are technically control characters but we count them as whitespace

# characters.

if char == "\t" or char == "\n" or char == "\r":

return False

cat = unicodedata.category(char)

if cat.startswith("C"):

return True

return False

def _is_punctuation(char):

"""Checks whether `chars` is a punctuation character."""

cp = ord(char)

# We treat all non-letter/number ASCII as punctuation.

# Characters such as "^", "$", and "`" are not in the Unicode

# Punctuation class but we treat them as punctuation anyways, for

# consistency.

if ((cp >= 33 and cp <= 47) or (cp >= 58 and cp <= 64) or

(cp >= 91 and cp <= 96) or (cp >= 123 and cp <= 126)):

return True

cat = unicodedata.category(char)

if cat.startswith("P"):

return True

return False

# #### 3.6 数据读取 及 预处理代码

# 以下 4 个 cell 定义数据读取器和预处理代码,包括:

# 1. BaseReader:数据读取器基类

# 2. ClassifyReader:用于分类模型的数据读取器,重写 _readtsv 和 _pad_batch_records 方法

# 3. pad_batch_data:数据预处理,给数据加 padding,并生成位置数据和 mask

# 4. ernie_pyreader:生成训练、验证和预测使用的 pyreader

# In[15]:

class BaseReader(object):

"""BaseReader for classify and sequence labeling task"""

def __init__(self,

vocab_path,

label_map_config=None,

max_seq_len=512,

do_lower_case=True,

in_tokens=False,

random_seed=None):

self.max_seq_len = max_seq_len

self.tokenizer = FullTokenizer(

vocab_file=vocab_path, do_lower_case=do_lower_case)

self.vocab = self.tokenizer.vocab

self.pad_id = self.vocab["[PAD]"]

self.cls_id = self.vocab["[CLS]"]

self.sep_id = self.vocab["[SEP]"]

self.in_tokens = in_tokens

np.random.seed(random_seed)

self.current_example = 0

self.current_epoch = 0

self.num_examples = 0

if label_map_config:

with open(label_map_config) as f:

self.label_map = json.load(f)

else:

self.label_map = None

def _read_tsv(self, input_file, quotechar=None):

"""Reads a tab separated value file."""

with io.open(input_file, "r", encoding="utf8") as f:

reader = csv_reader(f, delimiter="\t")

headers = next(reader)

Example = namedtuple('Example', headers)

examples = []

for line in reader:

example = Example(*line)

examples.append(example)

return examples

def _truncate_seq_pair(self, tokens_a, tokens_b, max_length):

"""Truncates a sequence pair in place to the maximum length."""

# This is a simple heuristic which will always truncate the longer sequence

# one token at a time. This makes more sense than truncating an equal percent

# of tokens from each, since if one sequence is very short then each token

# that's truncated likely contains more information than a longer sequence.

while True:

total_length = len(tokens_a) + len(tokens_b)

if total_length <= max_length:

break

if len(tokens_a) > len(tokens_b):

tokens_a.pop()

else:

tokens_b.pop()

def _convert_example_to_record(self, example, max_seq_length, tokenizer):

"""Converts a single `Example` into a single `Record`."""

text_a = convert_to_unicode(example.text_a)

tokens_a = tokenizer.tokenize(text_a)

tokens_b = None

if "text_b" in example._fields:

text_b = convert_to_unicode(example.text_b)

tokens_b = tokenizer.tokenize(text_b)

if tokens_b:

# Modifies `tokens_a` and `tokens_b` in place so that the total

# length is less than the specified length.

# Account for [CLS], [SEP], [SEP] with "- 3"

self._truncate_seq_pair(tokens_a, tokens_b, max_seq_length - 3)

else:

# Account for [CLS] and [SEP] with "- 2"

if len(tokens_a) > max_seq_length - 2:

tokens_a = tokens_a[0:(max_seq_length - 2)]

# The convention in BERT/ERNIE is:

# (a) For sequence pairs:

# tokens: [CLS] is this jack ##son ##ville ? [SEP] no it is not . [SEP]

# type_ids: 0 0 0 0 0 0 0 0 1 1 1 1 1 1

# (b) For single sequences:

# tokens: [CLS] the dog is hairy . [SEP]

# type_ids: 0 0 0 0 0 0 0

#

# Where "type_ids" are used to indicate whether this is the first

# sequence or the second sequence. The embedding vectors for `type=0` and

# `type=1` were learned during pre-training and are added to the wordpiece

# embedding vector (and position vector). This is not *strictly* necessary

# since the [SEP] token unambiguously separates the sequences, but it makes

# it easier for the model to learn the concept of sequences.

#

# For classification tasks, the first vector (corresponding to [CLS]) is

# used as as the "sentence vector". Note that this only makes sense because

# the entire model is fine-tuned.

tokens = []

text_type_ids = []

tokens.append("[CLS]")

text_type_ids.append(0)

for token in tokens_a:

tokens.append(token)

text_type_ids.append(0)

tokens.append("[SEP]")

text_type_ids.append(0)

if tokens_b:

for token in tokens_b:

tokens.append(token)

text_type_ids.append(1)

tokens.append("[SEP]")

text_type_ids.append(1)

token_ids = tokenizer.convert_tokens_to_ids(tokens)

position_ids = list(range(len(token_ids)))

if self.label_map:

label_id = self.label_map[example.label]

else:

label_id = example.label

Record = namedtuple(

'Record',

['token_ids', 'text_type_ids', 'position_ids', 'label_id', 'qid'])

qid = None

if "qid" in example._fields:

qid = example.qid

record = Record(

token_ids=token_ids,

text_type_ids=text_type_ids,

position_ids=position_ids,

label_id=label_id,

qid=qid)

return record

def _prepare_batch_data(self, examples, batch_size, phase=None):

"""generate batch records"""

batch_records, max_len = [], 0

for index, example in enumerate(examples):

if phase == "train":

self.current_example = index

record = self._convert_example_to_record(example, self.max_seq_len,

self.tokenizer)

max_len = max(max_len, len(record.token_ids))

if self.in_tokens:

to_append = (len(batch_records) + 1) * max_len <= batch_size

else:

to_append = len(batch_records) < batch_size

if to_append:

batch_records.append(record)

else:

yield self._pad_batch_records(batch_records)

batch_records, max_len = [record], len(record.token_ids)

if batch_records:

yield self._pad_batch_records(batch_records)

def get_num_examples(self, input_file):

"""return total number of examples"""

examples = self._read_tsv(input_file)

return len(examples)

def get_examples(self, input_file):

examples = self._read_tsv(input_file)

return examples

def data_generator(self,

input_file,

batch_size,

epoch,

shuffle=True,

phase=None):

"""return generator which yields batch data for pyreader"""

examples = self._read_tsv(input_file)

def _wrapper():

for epoch_index in range(epoch):

if phase == "train":

self.current_example = 0

self.current_epoch = epoch_index

if shuffle:

np.random.shuffle(examples)

for batch_data in self._prepare_batch_data(

examples, batch_size, phase=phase):

yield batch_data

return _wrapper

# In[16]:

class ClassifyReader(BaseReader):

"""ClassifyReader"""

def _read_tsv(self, input_file, quotechar=None):

"""Reads a tab separated value file."""

with io.open(input_file, "r", encoding="utf8") as f:

reader = csv_reader(f, delimiter="\t")

headers = next(reader)

text_indices = [

index for index, h in enumerate(headers) if h != "label"

]

Example = namedtuple('Example', headers)

examples = []

for line in reader:

for index, text in enumerate(line):

if index in text_indices:

line[index] = text.replace(' ', '')

example = Example(*line)

examples.append(example)

return examples

def _pad_batch_records(self, batch_records):

batch_token_ids = [record.token_ids for record in batch_records]

batch_text_type_ids = [record.text_type_ids for record in batch_records]

batch_position_ids = [record.position_ids for record in batch_records]

batch_labels = [record.label_id for record in batch_records]

batch_labels = np.array(batch_labels).astype("int64").reshape([-1, 1])

# padding

padded_token_ids, input_mask, seq_lens = pad_batch_data(

batch_token_ids,

pad_idx=self.pad_id,

return_input_mask=True,

return_seq_lens=True)

padded_text_type_ids = pad_batch_data(

batch_text_type_ids, pad_idx=self.pad_id)

padded_position_ids = pad_batch_data(

batch_position_ids, pad_idx=self.pad_id)

return_list = [

padded_token_ids, padded_text_type_ids, padded_position_ids,

input_mask, batch_labels, seq_lens

]

return return_list

# In[17]:

def pad_batch_data(insts,

pad_idx=0,

return_pos=False,

return_input_mask=False,

return_max_len=False,

return_num_token=False,

return_seq_lens=False):

"""

Pad the instances to the max sequence length in batch, and generate the

corresponding position data and input mask.

"""

return_list = []

max_len = max(len(inst) for inst in insts)

# Any token included in dict can be used to pad, since the paddings' loss

# will be masked out by weights and make no effect on parameter gradients.

inst_data = np.array(

[inst + list([pad_idx] * (max_len - len(inst))) for inst in insts])

return_list += [inst_data.astype("int64").reshape([-1, max_len, 1])]

# position data

if return_pos:

inst_pos = np.array([

list(range(0, len(inst))) + [pad_idx] * (max_len - len(inst))

for inst in insts

])

return_list += [inst_pos.astype("int64").reshape([-1, max_len, 1])]

if return_input_mask:

# This is used to avoid attention on paddings.

input_mask_data = np.array([[1] * len(inst) + [0] *

(max_len - len(inst)) for inst in insts])

input_mask_data = np.expand_dims(input_mask_data, axis=-1)

return_list += [input_mask_data.astype("float32")]

if return_max_len:

return_list += [max_len]

if return_num_token:

num_token = 0

for inst in insts:

num_token += len(inst)

return_list += [num_token]

if return_seq_lens:

seq_lens = np.array([len(inst) for inst in insts])

return_list += [seq_lens.astype("int64").reshape([-1])]

return return_list if len(return_list) > 1 else return_list[0]

# In[18]:

def ernie_pyreader(args, pyreader_name):

"""define standard ernie pyreader"""

pyreader_name += '_' + ''.join(random.sample(string.ascii_letters + string.digits, 6))

pyreader = fluid.layers.py_reader(

capacity=50,

shapes=[[-1, args['max_seq_len'], 1], [-1, args['max_seq_len'], 1],

[-1, args['max_seq_len'], 1], [-1, args['max_seq_len'], 1], [-1, 1],

[-1]],

dtypes=['int64', 'int64', 'int64', 'float32', 'int64', 'int64'],

lod_levels=[0, 0, 0, 0, 0, 0],

name=pyreader_name,

use_double_buffer=True)

(src_ids, sent_ids, pos_ids, input_mask, labels,

seq_lens) = fluid.layers.read_file(pyreader)

ernie_inputs = {

"src_ids": src_ids,

"sent_ids": sent_ids,

"pos_ids": pos_ids,

"input_mask": input_mask,

"seq_lens": seq_lens

}

return pyreader, ernie_inputs, labels

# #### 通用参数介绍

# 1. 数据集相关配置

# ```

# data_config = {

# 'data_dir': 'data/data9740/data',

# 'vocab_path': 'data/data9740/data/vocab.txt',

# 'batch_size': 32,

# 'random_seed': 0,

# 'num_labels': 3,

# 'max_seq_len': 512,

# 'train_set': 'data/data9740/data/test.tsv',

# 'test_set': 'data/data9740/data/test.tsv',

# 'dev_set': 'data/data9740/data/dev.tsv',

# 'infer_set': 'data/data9740/data/infer.tsv',

# 'label_map_config': None,

# 'do_lower_case': True,

# }

# ```

# 参数介绍:

# * **data_dir**:数据集路径,默认 'data/data9740/data'

# * **vocab_path**:vocab.txt所在路径,默认 'data/data9740/data/vocab.txt'

# * **batch_size**:训练和验证的批处理大小,默认:32

# * **random_seed**:随机种子,默认 0

# * **num_labels**:类别数,默认 3

# * **max_seq_len**:句子中最长词数,默认 512

# * **train_set**:训练集路径,默认 'data/data9740/data/test.tsv'

# * **test_set**: 测试集路径,默认 'data/data9740/data/test.tsv'

# * **dev_set**: 验证集路径,默认 'data/data9740/data/dev.tsv'

# * **infer_set**:预测集路径,默认 'data/data9740/data/infer.tsv'

# * **label_map_config**:label_map路径,默认 None

# * **do_lower_case**:是否对输入进行额外的小写处理,默认 True

#

#

# 参考资料:

# 1. [ERNIE: Enhanced Representation through Knowledge Integration](https://arxiv.org/abs/1904.09223)

# 2. [ERNIE 2.0: A Continual Pre-training Framework for Language Understanding](https://arxiv.org/abs/1907.12412)

# 3. [ERNIE预览:百度 知识增强语义表示模型ERNIE](https://www.jianshu.com/p/fb66f444bb8c)

# 4. [ERNIE 2.0 GitHub](https://github.com/PaddlePaddle/ERNIE)

# #### 3.1 ERNIE 模型定义

# class ErnieModel 定义 ERNIE encoder 网络结构

# **输入** src_ids、position_ids、sentence_ids 和 input_mask

# **输出** sequence_output 和 pooled_output

# In[5]:

class ErnieModel(object):

"""Ernie模型定义"""

def __init__(self,

src_ids,

position_ids,

sentence_ids,

input_mask,

config,

weight_sharing=True,

use_fp16=False):

# Ernie 相关参数

self._emb_size = config['hidden_size']

self._n_layer = config['num_hidden_layers']

self._n_head = config['num_attention_heads']

self._voc_size = config['vocab_size']

self._max_position_seq_len = config['max_position_embeddings']

self._sent_types = config['type_vocab_size']

self._hidden_act = config['hidden_act']

self._prepostprocess_dropout = config['hidden_dropout_prob']

self._attention_dropout = config['attention_probs_dropout_prob']

self._weight_sharing = weight_sharing

self._word_emb_name = "word_embedding"

self._pos_emb_name = "pos_embedding"

self._sent_emb_name = "sent_embedding"

self._dtype = "float16" if use_fp16 else "float32"

# Initialize all weigths by truncated normal initializer, and all biases

# will be initialized by constant zero by default.

self._param_initializer = fluid.initializer.TruncatedNormal(

scale=config['initializer_range'])

self._build_model(src_ids, position_ids, sentence_ids, input_mask)

def _build_model(self, src_ids, position_ids, sentence_ids, input_mask):

# padding id in vocabulary must be set to 0

emb_out = fluid.layers.embedding(

input=src_ids,

size=[self._voc_size, self._emb_size],

dtype=self._dtype,

param_attr=fluid.ParamAttr(

name=self._word_emb_name, initializer=self._param_initializer),

is_sparse=False)

position_emb_out = fluid.layers.embedding(

input=position_ids,

size=[self._max_position_seq_len, self._emb_size],

dtype=self._dtype,

param_attr=fluid.ParamAttr(

name=self._pos_emb_name, initializer=self._param_initializer))

sent_emb_out = fluid.layers.embedding(

sentence_ids,

size=[self._sent_types, self._emb_size],

dtype=self._dtype,

param_attr=fluid.ParamAttr(

name=self._sent_emb_name, initializer=self._param_initializer))

emb_out = emb_out + position_emb_out

emb_out = emb_out + sent_emb_out

emb_out = pre_process_layer(

emb_out, 'nd', self._prepostprocess_dropout, name='pre_encoder')

if self._dtype == "float16":

input_mask = fluid.layers.cast(x=input_mask, dtype=self._dtype)

self_attn_mask = fluid.layers.matmul(

x=input_mask, y=input_mask, transpose_y=True)

self_attn_mask = fluid.layers.scale(

x=self_attn_mask, scale=10000.0, bias=-1.0, bias_after_scale=False)

n_head_self_attn_mask = fluid.layers.stack(

x=[self_attn_mask] * self._n_head, axis=1)

n_head_self_attn_mask.stop_gradient = True

self._enc_out = encoder(

enc_input=emb_out,

attn_bias=n_head_self_attn_mask,

n_layer=self._n_layer,

n_head=self._n_head,

d_key=self._emb_size // self._n_head,

d_value=self._emb_size // self._n_head,

d_model=self._emb_size,

d_inner_hid=self._emb_size * 4,

prepostprocess_dropout=self._prepostprocess_dropout,

attention_dropout=self._attention_dropout,

relu_dropout=0,

hidden_act=self._hidden_act,

preprocess_cmd="",

postprocess_cmd="dan",

param_initializer=self._param_initializer,

name='encoder')

def get_sequence_output(self):

"""Get embedding of each token for squence labeling"""

return self._enc_out

def get_pooled_output(self):

"""Get the first feature of each sequence for classification"""

next_sent_feat = fluid.layers.slice(

input=self._enc_out, axes=[1], starts=[0], ends=[1])

next_sent_feat = fluid.layers.fc(

input=next_sent_feat,

size=self._emb_size,

act="tanh",

param_attr=fluid.ParamAttr(

name="pooled_fc.w_0", initializer=self._param_initializer),

bias_attr="pooled_fc.b_0")

return next_sent_feat

# #### 3.2 基本网络结构定义

# 以下 4 个 cell 定义 ErnieModel 中使用的基本网络结构,包括:

# 1. multi_head_attention

# 2. positionwise_feed_forward

# 3. pre_post_process_layer:增加 residual connection, layer normalization 和 droput,在 multi_head_attention 和 positionwise_feed_forward 前后使用

# 4. encoder_layer:调用上述三种结构生成 encoder 层

# 5. encoder:堆叠 encoder_layer 生成完整的 encoder

#

# 关于 multi_head_attention 和 positionwise_feed_forward 的介绍可以参考:[The Annotated Transformer](http://nlp.seas.harvard.edu/2018/04/03/attention.html)

# In[6]:

def multi_head_attention(queries, keys, values, attn_bias, d_key, d_value, d_model, n_head=1, dropout_rate=0.,

cache=None, param_initializer=None, name='multi_head_att'):

"""

Multi-Head Attention. Note that attn_bias is added to the logit before

computing softmax activiation to mask certain selected positions so that

they will not considered in attention weights.

"""

keys = queries if keys is None else keys

values = keys if values is None else values

if not (len(queries.shape) == len(keys.shape) == len(values.shape) == 3):

raise ValueError(

"Inputs: quries, keys and values should all be 3-D tensors.")

def __compute_qkv(queries, keys, values, n_head, d_key, d_value):

"""

Add linear projection to queries, keys, and values.

"""

q = layers.fc(input=queries,

size=d_key * n_head,

num_flatten_dims=2,

param_attr=fluid.ParamAttr(

name=name + '_query_fc.w_0',

initializer=param_initializer),

bias_attr=name + '_query_fc.b_0')

k = layers.fc(input=keys,

size=d_key * n_head,

num_flatten_dims=2,

param_attr=fluid.ParamAttr(

name=name + '_key_fc.w_0',

initializer=param_initializer),

bias_attr=name + '_key_fc.b_0')

v = layers.fc(input=values,

size=d_value * n_head,

num_flatten_dims=2,

param_attr=fluid.ParamAttr(

name=name + '_value_fc.w_0',

initializer=param_initializer),

bias_attr=name + '_value_fc.b_0')

return q, k, v

def __split_heads(x, n_head):

"""

Reshape the last dimension of inpunt tensor x so that it becomes two

dimensions and then transpose. Specifically, input a tensor with shape

[bs, max_sequence_length, n_head * hidden_dim] then output a tensor

with shape [bs, n_head, max_sequence_length, hidden_dim].

"""

hidden_size = x.shape[-1]

# The value 0 in shape attr means copying the corresponding dimension

# size of the input as the output dimension size.

reshaped = layers.reshape(

x=x, shape=[0, 0, n_head, hidden_size // n_head], inplace=True)

# permuate the dimensions into:

# [batch_size, n_head, max_sequence_len, hidden_size_per_head]

return layers.transpose(x=reshaped, perm=[0, 2, 1, 3])

def __combine_heads(x):

"""

Transpose and then reshape the last two dimensions of inpunt tensor x

so that it becomes one dimension, which is reverse to __split_heads.

"""

if len(x.shape) == 3:

return x

if len(x.shape) != 4:

raise ValueError("Input(x) should be a 4-D Tensor.")

trans_x = layers.transpose(x, perm=[0, 2, 1, 3])

# The value 0 in shape attr means copying the corresponding dimension

# size of the input as the output dimension size.

return layers.reshape(

x=trans_x,

shape=[0, 0, trans_x.shape[2] * trans_x.shape[3]],

inplace=True)

def scaled_dot_product_attention(q, k, v, attn_bias, d_key, dropout_rate):

"""

Scaled Dot-Product Attention

"""

scaled_q = layers.scale(x=q, scale=d_key**-0.5)

product = layers.matmul(x=scaled_q, y=k, transpose_y=True)

if attn_bias:

product += attn_bias

weights = layers.softmax(product)

if dropout_rate:

weights = layers.dropout(

weights,

dropout_prob=dropout_rate,

dropout_implementation="upscale_in_train",

is_test=False)

out = layers.matmul(weights, v)

return out

q, k, v = __compute_qkv(queries, keys, values, n_head, d_key, d_value)

if cache is not None: # use cache and concat time steps

# Since the inplace reshape in __split_heads changes the shape of k and

# v, which is the cache input for next time step, reshape the cache

# input from the previous time step first.

k = cache["k"] = layers.concat(

[layers.reshape(

cache["k"], shape=[0, 0, d_model]), k], axis=1)

v = cache["v"] = layers.concat(

[layers.reshape(

cache["v"], shape=[0, 0, d_model]), v], axis=1)

q = __split_heads(q, n_head)

k = __split_heads(k, n_head)

v = __split_heads(v, n_head)

ctx_multiheads = scaled_dot_product_attention(q, k, v, attn_bias, d_key,

dropout_rate)

out = __combine_heads(ctx_multiheads)

# Project back to the model size.

proj_out = layers.fc(input=out,

size=d_model,

num_flatten_dims=2,

param_attr=fluid.ParamAttr(

name=name + '_output_fc.w_0',

initializer=param_initializer),

bias_attr=name + '_output_fc.b_0')

return proj_out

# In[7]:

def positionwise_feed_forward(x, d_inner_hid, d_hid, dropout_rate, hidden_act, param_initializer=None, name='ffn'):

"""

Position-wise Feed-Forward Networks.

This module consists of two linear transformations with a ReLU activation

in between, which is applied to each position separately and identically.

"""

hidden = layers.fc(input=x, size=d_inner_hid, num_flatten_dims=2, act=hidden_act,

param_attr=fluid.ParamAttr(

name=name + '_fc_0.w_0',

initializer=param_initializer),

bias_attr=name + '_fc_0.b_0')

if dropout_rate:

hidden = layers.dropout(hidden, dropout_prob=dropout_rate, dropout_implementation="upscale_in_train", is_test=False)

out = layers.fc(input=hidden, size=d_hid, num_flatten_dims=2,

param_attr=fluid.ParamAttr(

name=name + '_fc_1.w_0', initializer=param_initializer),

bias_attr=name + '_fc_1.b_0')

return out

# In[8]:

def pre_post_process_layer(prev_out, out, process_cmd, dropout_rate=0.,

name=''):

"""

Add residual connection, layer normalization and droput to the out tensor

optionally according to the value of process_cmd.

This will be used before or after multi-head attention and position-wise

feed-forward networks.

"""

for cmd in process_cmd:

if cmd == "a": # add residual connection

out = out + prev_out if prev_out else out

elif cmd == "n": # add layer normalization

out_dtype = out.dtype

if out_dtype == fluid.core.VarDesc.VarType.FP16:

out = layers.cast(x=out, dtype="float32")

out = layers.layer_norm(

out,

begin_norm_axis=len(out.shape) - 1,

param_attr=fluid.ParamAttr(

name=name + '_layer_norm_scale',

initializer=fluid.initializer.Constant(1.)),

bias_attr=fluid.ParamAttr(

name=name + '_layer_norm_bias',

initializer=fluid.initializer.Constant(0.)))

if out_dtype == fluid.core.VarDesc.VarType.FP16:

out = layers.cast(x=out, dtype="float16")

elif cmd == "d": # add dropout

if dropout_rate:

out = layers.dropout(

out,

dropout_prob=dropout_rate,

dropout_implementation="upscale_in_train",

is_test=False)

return out

pre_process_layer = partial(pre_post_process_layer, None)

post_process_layer = pre_post_process_layer

# In[9]:

def encoder_layer(enc_input, attn_bias, n_head, d_key, d_value, d_model, d_inner_hid, prepostprocess_dropout,

attention_dropout, relu_dropout, hidden_act, preprocess_cmd="n", postprocess_cmd="da",

param_initializer=None, name=''):

"""The encoder layers that can be stacked to form a deep encoder.

This module consits of a multi-head (self) attention followed by

position-wise feed-forward networks and both the two components companied

with the post_process_layer to add residual connection, layer normalization

and droput.

"""

attn_output = multi_head_attention(

pre_process_layer(enc_input, preprocess_cmd, prepostprocess_dropout, name=name + '_pre_att'),

None, None, attn_bias, d_key, d_value, d_model, n_head, attention_dropout,

param_initializer=param_initializer, name=name + '_multi_head_att')

attn_output = post_process_layer(enc_input, attn_output, postprocess_cmd, prepostprocess_dropout, name=name + '_post_att')

ffd_output = positionwise_feed_forward(

pre_process_layer(attn_output, preprocess_cmd, prepostprocess_dropout, name=name + '_pre_ffn'),

d_inner_hid, d_model, relu_dropout, hidden_act, param_initializer=param_initializer,

name=name + '_ffn')

return post_process_layer(attn_output, ffd_output, postprocess_cmd, prepostprocess_dropout, name=name + '_post_ffn')

def encoder(enc_input, attn_bias, n_layer, n_head, d_key, d_value, d_model, d_inner_hid, prepostprocess_dropout,

attention_dropout, relu_dropout, hidden_act, preprocess_cmd="n", postprocess_cmd="da",

param_initializer=None, name=''):

"""

The encoder is composed of a stack of identical layers returned by calling

encoder_layer.

"""

for i in range(n_layer):

enc_output = encoder_layer(enc_input, attn_bias, n_head, d_key, d_value, d_model, d_inner_hid,

prepostprocess_dropout, attention_dropout, relu_dropout, hidden_act, preprocess_cmd,

postprocess_cmd, param_initializer=param_initializer, name=name + '_layer_' + str(i))

enc_input = enc_output

enc_output = pre_process_layer(enc_output, preprocess_cmd, prepostprocess_dropout, name="post_encoder")

return enc_output

# #### 3.3 编码器 和 分类器 定义

# 以下 cell 定义 encoder 和 classification 的组织结构:

# 1. ernie_encoder:根据 ErnieModel 组织输出 embeddings

# 2. create_ernie_model:定义分类网络,以 embeddings 为输入,使用全连接网络 + softmax 做分类

# In[10]:

def ernie_encoder(ernie_inputs, ernie_config):

"""return sentence embedding and token embeddings"""

ernie = ErnieModel(

src_ids=ernie_inputs["src_ids"],

position_ids=ernie_inputs["pos_ids"],

sentence_ids=ernie_inputs["sent_ids"],

input_mask=ernie_inputs["input_mask"],

config=ernie_config)

enc_out = ernie.get_sequence_output()

unpad_enc_out = fluid.layers.sequence_unpad(

enc_out, length=ernie_inputs["seq_lens"])

cls_feats = ernie.get_pooled_output()

embeddings = {

"sentence_embeddings": cls_feats,

"token_embeddings": unpad_enc_out,

}

for k, v in embeddings.items():

v.persistable = True

return embeddings

def create_ernie_model(args,

embeddings,

labels,

is_prediction=False):

"""

Create Model for sentiment classification based on ERNIE encoder

"""

sentence_embeddings = embeddings["sentence_embeddings"]

token_embeddings = embeddings["token_embeddings"]

cls_feats = fluid.layers.dropout(

x=sentence_embeddings,

dropout_prob=0.1,

dropout_implementation="upscale_in_train")

logits = fluid.layers.fc(

input=cls_feats,

size=args['num_labels'],

param_attr=fluid.ParamAttr(

name="cls_out_w",

initializer=fluid.initializer.TruncatedNormal(scale=0.02)),

bias_attr=fluid.ParamAttr(

name="cls_out_b", initializer=fluid.initializer.Constant(0.)))

ce_loss, probs = fluid.layers.softmax_with_cross_entropy(

logits=logits, label=labels, return_softmax=True)

if is_prediction:

return probs

loss = fluid.layers.mean(x=ce_loss)

num_seqs = fluid.layers.create_tensor(dtype='int64')

accuracy = fluid.layers.accuracy(input=probs, label=labels, total=num_seqs)

return loss, accuracy, num_seqs

# #### 3.4 分词代码

# 以下 3 个 cell 定义分词代码类,包括:

# 1. FullTokenizer:完整的分词,在数据读取代码中使用,调用 BasicTokenizer 和 WordpieceTokenizer 实现

# 2. BasicTokenizer:基本分词,包括标点划分、小写转换等

# 3. WordpieceTokenizer:单词划分

# In[11]:

class FullTokenizer(object):

"""Runs end-to-end tokenziation."""

def __init__(self, vocab_file, do_lower_case=True):

self.vocab = load_vocab(vocab_file)

self.inv_vocab = {v: k for k, v in self.vocab.items()}

self.basic_tokenizer = BasicTokenizer(do_lower_case=do_lower_case)

self.wordpiece_tokenizer = WordpieceTokenizer(vocab=self.vocab)

def tokenize(self, text):